Kafka Explained

1. Kafka Overview

1.1 Kafka Definition

-

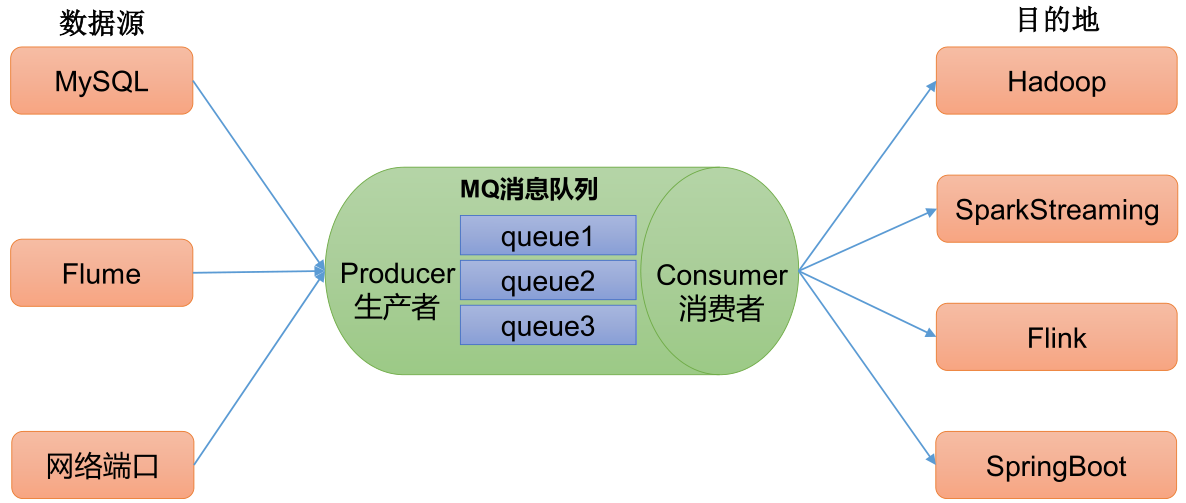

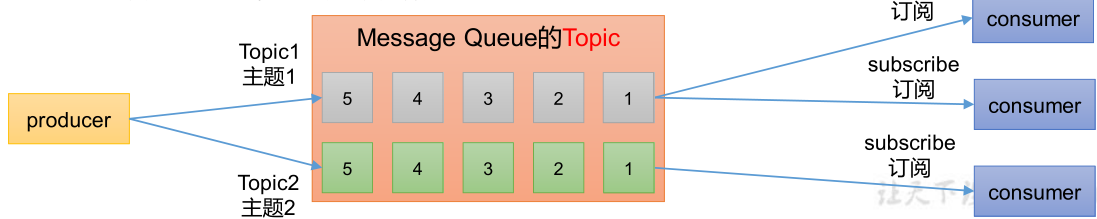

Kafka传统定义:Kafka是一个分布式的基于发布/订阅模式的消息队列(Message Queue),主要应用于大数据实时处理领域。使用Scala语言编写,是Apache的顶级项目。

发布/订阅:消息的发布者不会将消息直接发送给特定的订阅者,而是将发布的消息分为不同的类别,订阅者只接收感兴趣的信息。

-

Kafka最新定义:Kafka是一个开源的分布式事件流平台(Event Streaming Platform),主要应用于高性能数据管道、流分析、数据集成和关键任务应用。

1.2 Message Queues

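The message queue products most commonly used in industry are Kafka, ActiveMQ, RabbitMQ, and RocketMQ. Big-data systems mostly use Kafka, while Java EE applications mostly use ActiveMQ, RabbitMQ, or RocketMQ.

- ActiveMQ: has a long history and implements the JMS (Java Message Service) specification with good support, but its performance is relatively low.
- RabbitMQ: high reliability and security.
- RocketMQ: message middleware open-sourced by Alibaba, implemented purely in Java.
- Kafka: distributed, high-performance, and cross-language.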

1.2.1 Use Cases of Traditional Message Queues

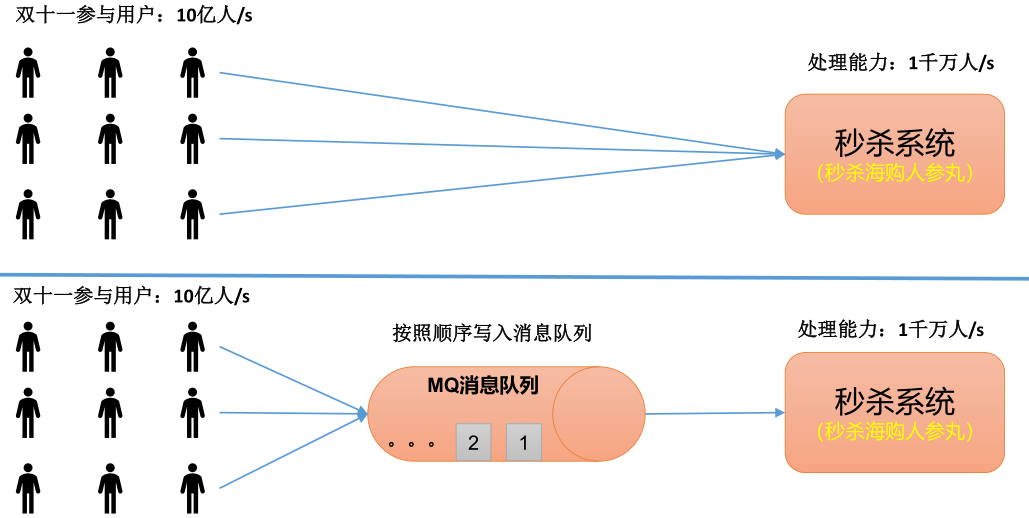

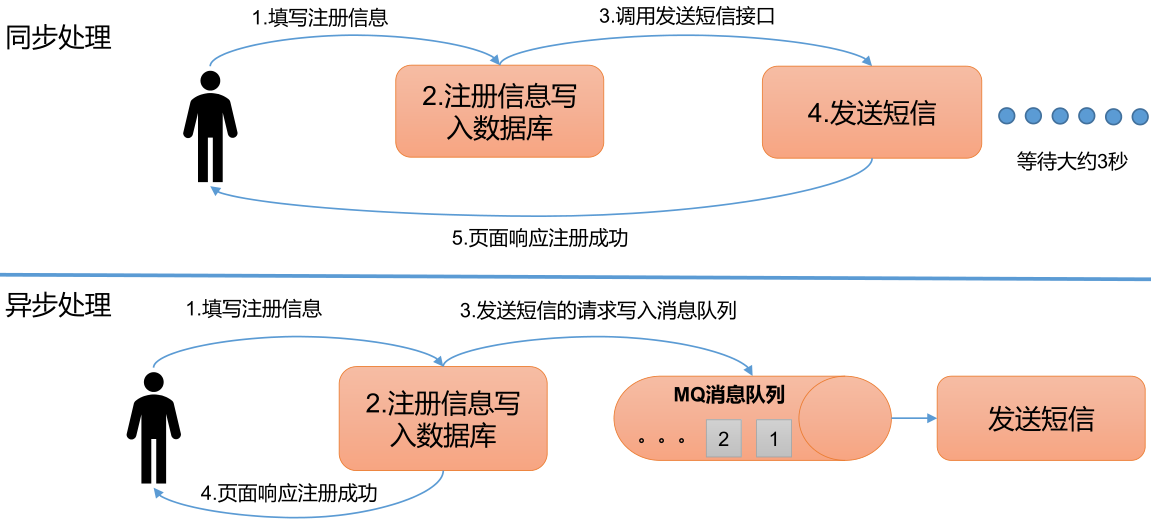

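The main use cases of a traditional message queue are buffering/peak shaving, decoupling, and asynchronous communication.

- Buffering/peak shaving: helps control and smooth the rate at which data flows through the system, absorbing the mismatch when messages are produced faster than they can be consumed.
- Decoupling: lets you extend or modify the processing on either side independently, as long as both sides keep to the same interface contract.
- Asynchronous communication: lets a user put a message into the queue without processing it immediately, and process it later when needed.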
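The buffering/peak-shaving idea can be shown with a minimal plain-Java sketch. This is not Kafka code: a bounded `java.util.concurrent.ArrayBlockingQueue` stands in for the message queue. The producer pushes a burst of messages and moves on, and a slower consumer drains them at its own pace. The class name `BufferingDemo`, the message names, and the 5 ms processing delay are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class BufferingDemo {
    /**
     * A producer enqueues `messages` items in one burst; a consumer thread takes them one
     * by one and processes each slowly. Returns the messages in the order they were handled.
     */
    static List<String> runBurst(int messages, int capacity) {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(capacity); // bounded buffer
        List<String> consumed = new ArrayList<>(); // written only by the consumer thread

        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < messages; i++) {
                    consumed.add(queue.take());     // blocks until a message is available
                    TimeUnit.MILLISECONDS.sleep(5); // simulated slow downstream processing
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        try {
            // The producer depends only on the queue, not on the consumer (decoupling);
            // put() blocks only while the buffer is full.
            for (int i = 0; i < messages; i++) {
                queue.put("order-" + i);
            }
            consumer.join(); // join() makes the consumer's writes to `consumed` visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted", e);
        }
        return consumed;
    }

    public static void main(String[] args) {
        List<String> handled = runBurst(20, 5);
        System.out.println("handled " + handled.size() + " messages, last = " + handled.get(handled.size() - 1));
    }
}
```

A real broker adds persistence and network transport. The sketch only shows why a buffer lets the two sides run at different speeds.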

1.2.2 The Two Messaging Models

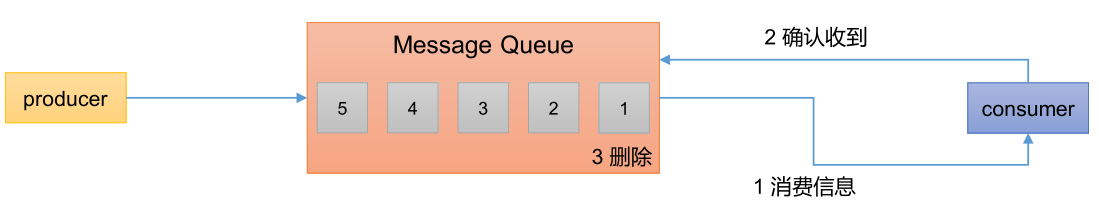

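(1) Point-to-point model: the consumer actively pulls data, and a message is deleted once it has been received.

(2) Publish/subscribe model:

- There can be multiple topics.
- Data is not deleted after it has been consumed.
- Consumers are independent of one another, and each of them can consume the data.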
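The difference between the two models can be sketched in plain Java. This is a simplified in-memory model with hypothetical names (`PointToPointQueue`, `TopicLog`), not Kafka's implementation. The point-to-point queue removes a message when it is received. The publish/subscribe log keeps its messages and lets each subscriber track its own read position, which Kafka calls an offset.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class MessagingModes {
    /** Point-to-point: a shared queue; a message disappears once one consumer receives it. */
    static class PointToPointQueue {
        private final Deque<String> messages = new ArrayDeque<>();

        void send(String msg) { messages.addLast(msg); }

        /** Removes and returns the oldest message, or null if the queue is empty. */
        String receive() { return messages.pollFirst(); }
    }

    /** Publish/subscribe: an append-only log; every subscriber keeps its own offset. */
    static class TopicLog {
        private final List<String> log = new ArrayList<>();
        private final Map<String, Integer> offsets = new HashMap<>();

        void publish(String msg) { log.add(msg); }

        /** Returns this subscriber's next unread message, or null if it is caught up. */
        String poll(String subscriber) {
            int offset = offsets.getOrDefault(subscriber, 0);
            if (offset >= log.size()) return null;
            offsets.put(subscriber, offset + 1); // reading moves only this subscriber's position
            return log.get(offset);              // the message itself stays in the log
        }

        int size() { return log.size(); }
    }

    public static void main(String[] args) {
        PointToPointQueue queue = new PointToPointQueue();
        queue.send("m1");
        System.out.println(queue.receive()); // m1
        System.out.println(queue.receive()); // null: the message is gone

        TopicLog topic = new TopicLog();
        topic.publish("m1");
        System.out.println(topic.poll("A")); // m1
        System.out.println(topic.poll("B")); // m1: B reads independently of A
        System.out.println(topic.size());    // 1: data is kept after consumption
    }
}
```

In Kafka, data is eventually removed by the retention policy (for example log.retention.hours), not by consumption.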

1.3 Kafka Architecture

-

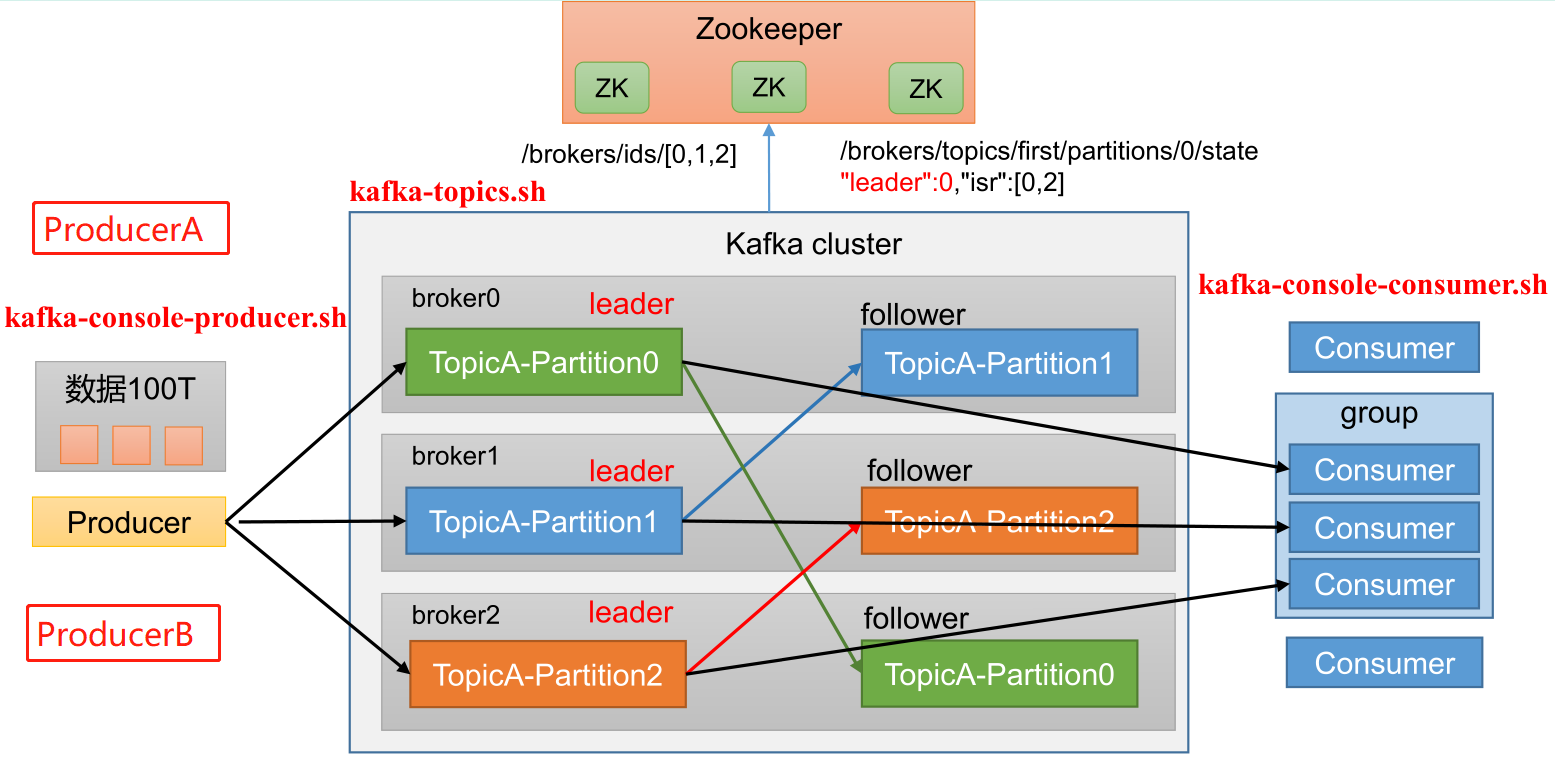

Producer:消息生产者,就是向Kafka broker发消息的客户端。

-

Consumer:消息消费者,向Kafka broker 获取消息的客户端。

-

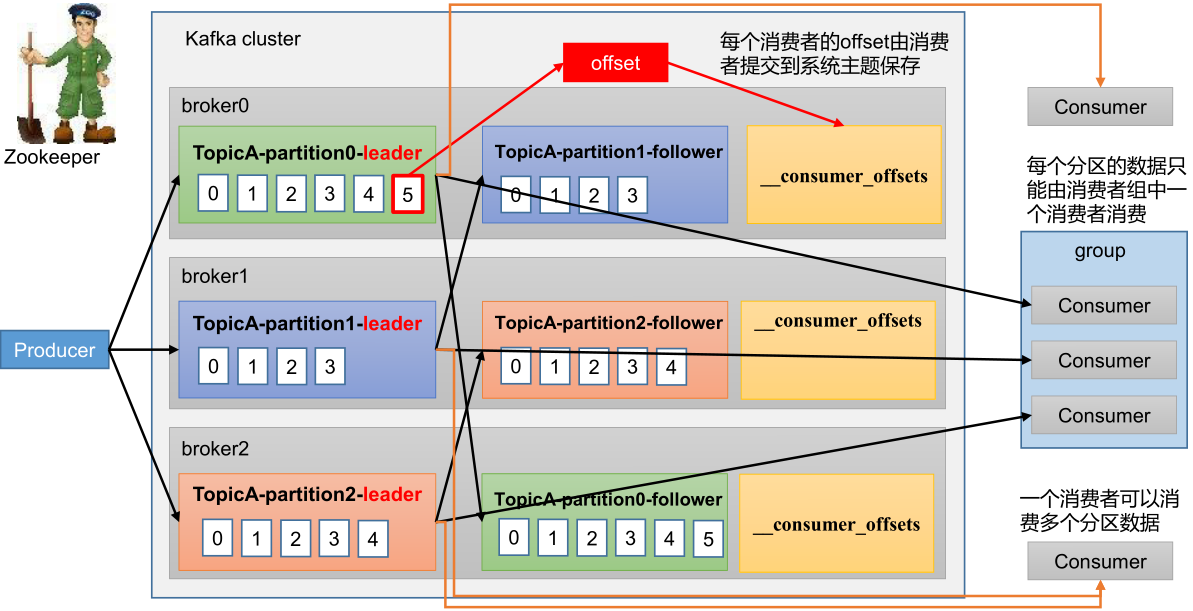

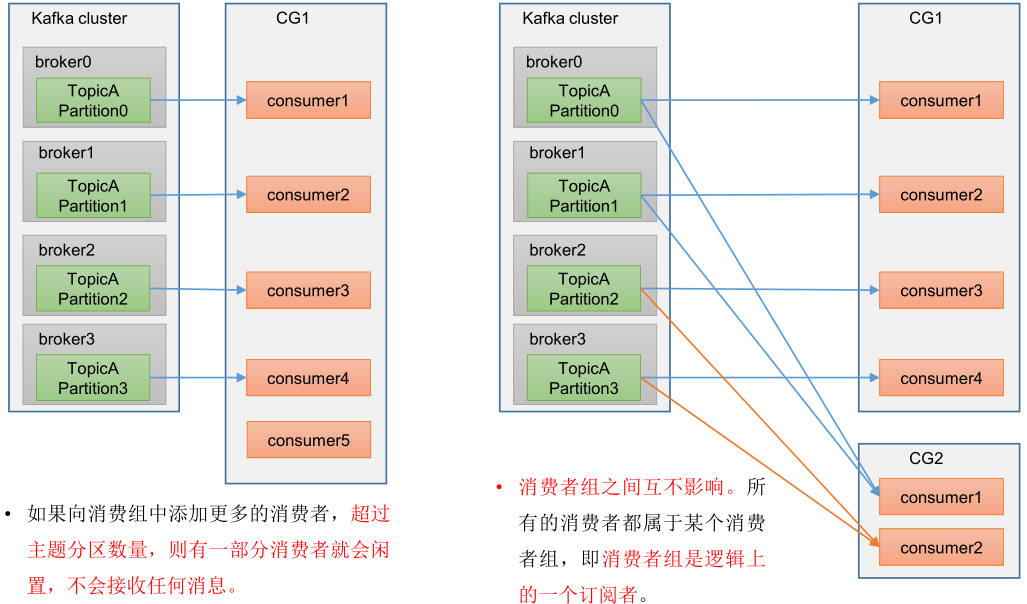

Consumer Group(CG):消费者组,由多个consumer组成。消费者组内每个消费者负责消费不同分区的数据,一个分区只能由一个组内消费者消费;消费者组之间互不影响,所有的消费者都属于某个消费者组,即消费者组是逻辑上的一个订阅者。

-

Broker:一台Kafka服务器就是一个broker。一个集群由多个broker组成。一个broker可以容纳多个topic。

-

Topic:可以理解为一个队列,生产者和消费者面向的都是一个topic。

-

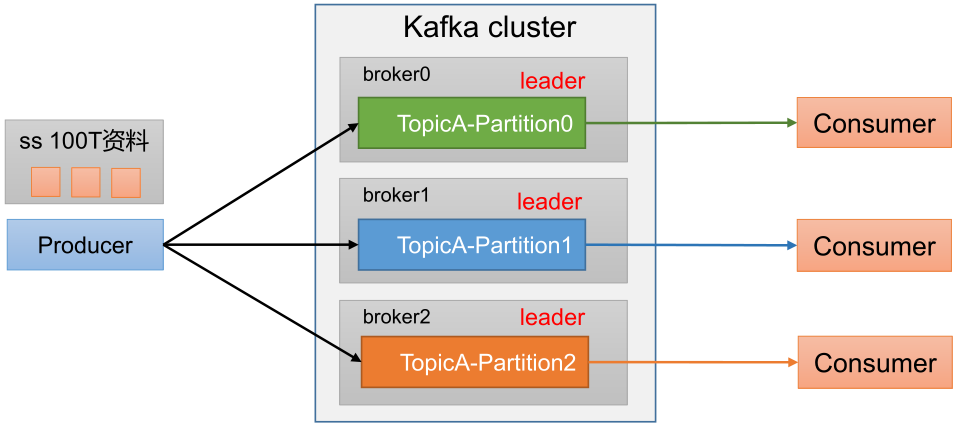

Partition:为了实现扩展性,一个非常大的topic可以分布到多个broker(即服务器)上,一个topic可以分为多个partition,每个partition是一个有序的队列。

-

Replica:副本。一个 topic 的每个分区都有若干个副本,一个 Leader 和若干个 Follower。

-

Leader:每个分区多个副本的“主”,生产者发送数据的对象,以及消费者消费数据的对象都是Leader。

-

Follower:每个分区多个副本中的“从”,实时从Leader中同步数据,保持和Leader数据的同步。Leader发生故障时,某个Follower会成为新的Leader。
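The Follower-to-Leader failover described above can be sketched as a simplified leader election. The rule is to choose the first replica, in the partition's assigned replica order, that is both alive and in the ISR (in-sync replica set). This mirrors the idea behind Kafka's clean leader election but leaves out the controller, ZooKeeper/KRaft, and unclean election. The class and method names are hypothetical.

```java
import java.util.List;
import java.util.Set;

class LeaderElectionSketch {
    /**
     * Simplified clean leader election: the first replica, in assignment order, that is
     * still alive and in the ISR. Returns -1 when no such replica exists (the partition
     * would stay offline unless unclean election were allowed).
     */
    static int electLeader(List<Integer> assignedReplicas, Set<Integer> isr, Set<Integer> liveBrokers) {
        for (int brokerId : assignedReplicas) {
            if (isr.contains(brokerId) && liveBrokers.contains(brokerId)) {
                return brokerId;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<Integer> replicas = List.of(0, 1, 2); // broker 0 is the current leader
        Set<Integer> isr = Set.of(0, 2);           // broker 1 has fallen behind
        Set<Integer> live = Set.of(1, 2);          // broker 0 has just crashed
        System.out.println(electLeader(replicas, isr, live)); // 2
    }
}
```

Restricting the choice to the ISR is what keeps a lagging follower (broker 1 here) from becoming leader and losing acknowledged data.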

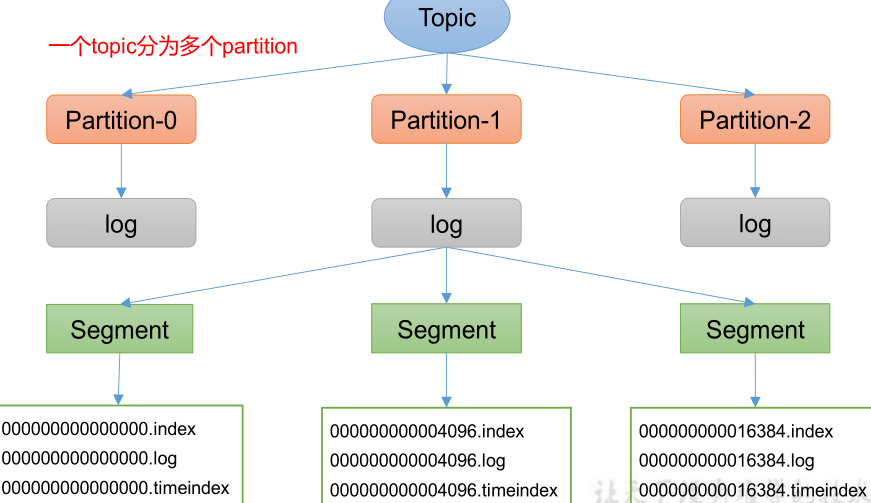

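1. To make scaling easier and raise throughput, a topic is split into multiple partitions.

2. To match the partition design, consumer groups were introduced, so the consumers in a group consume in parallel.

3. To improve availability, each partition gets several replicas, similar to HDFS NameNode HA.

4. ZooKeeper records which replica is the leader. Since Kafka 2.8.0, Kafka can also be configured to run without ZooKeeper (KRaft mode).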
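Points 1 and 2 work together. Each partition goes to exactly one consumer in a group, so the partition count caps a group's parallelism. Below is a minimal sketch of a range-style assignment for a single topic. It is modeled on the idea behind Kafka's RangeAssignor but simplified, and the names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class RangeAssignmentSketch {
    /** Splits partitions 0..numPartitions-1 into contiguous ranges, one range per consumer. */
    static Map<String, List<Integer>> assign(int numPartitions, List<String> consumers) {
        List<String> sorted = new ArrayList<>(consumers);
        Collections.sort(sorted);                         // deterministic order
        int perConsumer = numPartitions / sorted.size();  // everyone gets at least this many
        int withExtra = numPartitions % sorted.size();    // the first `withExtra` consumers get one more

        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int next = 0;
        for (int i = 0; i < sorted.size(); i++) {
            int count = perConsumer + (i < withExtra ? 1 : 0);
            List<Integer> parts = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                parts.add(next++);
            }
            result.put(sorted.get(i), parts);
        }
        return result;
    }

    public static void main(String[] args) {
        // 7 partitions, 3 consumers in one group
        System.out.println(assign(7, List.of("c1", "c2", "c3"))); // {c1=[0, 1, 2], c2=[3, 4], c3=[5, 6]}
        // More consumers than partitions: c4 receives nothing and stays idle
        System.out.println(assign(3, List.of("c1", "c2", "c3", "c4")));
    }
}
```

The second call shows why adding consumers beyond the partition count does not add throughput.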

2. Kafka Installation and Deployment

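(1) Download Kafka from https://kafka.apache.org/downloads.html, choose the version you need, and upload the archive to the server.

```text
-rw-r--r-- 1 root root 41414555 10月 9 07:42 kafka_2.11-0.11.0.0.tgz
```

Note: this listing shows the kafka_2.11-0.11.0.0 archive. The scripts and command output later in this article come from a 2.8 or later release (for example, kafka-storage.sh and the TopicId field printed by --describe). Use the commands that match the version you actually download.

(2) Extract the archive:

```shell
tar -zxvf kafka_2.11-0.11.0.0.tgz
```

(3) Rename the extracted directory:

```shell
mv kafka_2.11-0.11.0.0 kafka
```

(4) Go to the config directory and edit the server.properties file. This article sets up a single-node Kafka.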


```properties
# The id of the broker. This must be set to a unique integer for each broker.
# Globally unique ID of this broker: must be an integer and must not be reused
broker.id=0

############################# Socket Server Settings #############################

# The address the socket server listens on. It will get the value returned from
# java.net.InetAddress.getCanonicalHostName() if not configured.
#   FORMAT:
#     listeners = listener_name://host_name:port
#   EXAMPLE:
#     listeners = PLAINTEXT://your.host.name:9092
listeners=PLAINTEXT://kafka1:9092

# Hostname and port the broker will advertise to producers and consumers. If not set,
# it uses the value for "listeners" if configured. Otherwise, it will use the value
# returned from java.net.InetAddress.getCanonicalHostName().
advertised.listeners=PLAINTEXT://8.110.110.110:9092

# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details
#listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

# The number of threads that the server uses for receiving requests from the network and sending responses to the network
num.network.threads=3

# The number of threads that the server uses for processing requests, which may include disk I/O
num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server
socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server
socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)
socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files
# (a relative path is resolved against the directory the broker is started from, bin/ in this article)
log.dirs=../logs

# The default number of log partitions per topic. More partitions allow greater
# parallelism for consumption, but this will also result in more files across
# the brokers.
num.partitions=1

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.
# This value is recommended to be increased for installations with data dirs located in RAID array.
num.recovery.threads.per.data.dir=1

############################# Internal Topic Settings #############################

# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"
# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1

############################# Log Flush Policy #############################

# Messages are immediately written to the filesystem but by default we only fsync() to sync
# the OS cache lazily. The following configurations control the flush of data to disk.
# There are a few important trade-offs here:
#    1. Durability: Unflushed data may be lost if you are not using replication.
#    2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.
#    3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.
# The settings below allow one to configure the flush policy to flush data after a period of time or
# every N messages (or both). This can be done globally and overridden on a per-topic basis.

# The number of messages to accept before forcing a flush of data to disk
#log.flush.interval.messages=10000

# The maximum amount of time a message can sit in a log before we force a flush
#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# The following configurations control the disposal of log segments. The policy can
# be set to delete segments after a period of time, or after a given size has accumulated.
# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens
# from the end of the log.

# The minimum age of a log file to be eligible for deletion due to age
log.retention.hours=168

# A size-based retention policy for logs. Segments are pruned from the log unless the remaining
# segments drop below log.retention.bytes. Functions independently of log.retention.hours.
#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.
log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according
# to the retention policies
log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).
# This is a comma separated host:port pairs, each corresponding to a zk
# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".
# You can also append an optional chroot string to the urls to specify the
# root directory for all kafka znodes.
zookeeper.connect=zookeeper1:2181

# Timeout in ms for connecting to zookeeper
zookeeper.connection.timeout.ms=18000

############################# Group Coordinator Settings #############################

# The following configuration specifies the time, in milliseconds, that the GroupCoordinator will delay the initial consumer rebalance.
# The rebalance will be further delayed by the value of group.initial.rebalance.delay.ms as new members join the group, up to a maximum of max.poll.interval.ms.
# The default value for this is 3 seconds.
# We override this to 0 here as it makes for a better out-of-the-box experience for development and testing.
# However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.
group.initial.rebalance.delay.ms=0

# exclude.internal.topics (default true) is set to false so that the internal topic data can be inspected.
# Note: exclude.internal.topics is a consumer configuration, not a broker one, so it has no effect in
# server.properties; set it in the consumer's configuration instead (e.g. config/consumer.properties).
# Even with the default true, internal topics are only excluded from pattern (regex) subscriptions
# and can still be subscribed to explicitly by name.
exclude.internal.topics=false
```

(5) Start ZooKeeper

1. Use the ZooKeeper bundled with Kafka

```shell
[root@-9930 bin]# ls
connect-distributed.sh kafka-console-consumer.sh kafka-features.sh kafka-reassign-partitions.sh kafka-topics.sh zookeeper-server-stop.sh
connect-mirror-maker.sh kafka-console-producer.sh kafka-leader-election.sh kafka-replica-verification.sh kafka-verifiable-consumer.sh zookeeper-shell.sh
connect-standalone.sh kafka-consumer-groups.sh kafka-log-dirs.sh kafka-run-class.sh kafka-verifiable-producer.sh
kafka-acls.sh kafka-consumer-perf-test.sh kafka-metadata-shell.sh kafka-server-start.sh trogdor.sh
kafka-broker-api-versions.sh kafka-delegation-tokens.sh kafka-mirror-maker.sh kafka-server-stop.sh windows
kafka-cluster.sh kafka-delete-records.sh kafka-preferred-replica-election.sh kafka-storage.sh zookeeper-security-migration.sh
kafka-configs.sh kafka-dump-log.sh kafka-producer-perf-test.sh kafka-streams-application-reset.sh zookeeper-server-start.sh
[root@-9930 bin]# ./zookeeper-server-start.sh
USAGE: ./zookeeper-server-start.sh [-daemon] zookeeper.properties
[root@-9930 bin]# ./zookeeper-server-start.sh ../config/zookeeper.properties
```

2. Use an external ZooKeeper (recommended)

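- Download ZooKeeper from https://zookeeper.apache.org/
- Extract the archive apache-zookeeper-3.7.1-bin.tar.gz and rename the directory to zookeeper.
- Edit the configuration file zoo.cfg: set dataDir=../data (the data directory), the client port, and so on. The distribution ships conf/zoo_sample.cfg; copy it to conf/zoo.cfg first.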

```shell
[root@-9930 bin]# cat ../conf/zoo.cfg
# The number of milliseconds of each tick
tickTime=2000
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=../data
# the port at which the clients will connect
clientPort=2181
```

- Start ZooKeeper:

```shell
[root@-9930 bin]# ls
README.txt zkCli.cmd zkEnv.cmd zkServer.cmd zkServer.sh zkSnapshotComparer.sh zkSnapShotToolkit.sh zkTxnLogToolkit.sh
zkCleanup.sh zkCli.sh zkEnv.sh zkServer-initialize.sh zkSnapshotComparer.cmd zkSnapShotToolkit.cmd zkTxnLogToolkit.cmd
[root@-9930 bin]# ./zkServer.sh start ../conf/zoo.cfg
ZooKeeper JMX enabled by default
Using config: ../conf/zoo.cfg
Starting zookeeper ... STARTED
```

(6) Start Kafka and use the jps command to check that the processes started successfully.

```shell
[root@-9930 bin]# ls
connect-distributed.sh kafka-console-consumer.sh kafka-features.sh kafka-reassign-partitions.sh kafka-topics.sh zookeeper-server-stop.sh
connect-mirror-maker.sh kafka-console-producer.sh kafka-leader-election.sh kafka-replica-verification.sh kafka-verifiable-consumer.sh zookeeper-shell.sh
connect-standalone.sh kafka-consumer-groups.sh kafka-log-dirs.sh kafka-run-class.sh kafka-verifiable-producer.sh
kafka-acls.sh kafka-consumer-perf-test.sh kafka-metadata-shell.sh kafka-server-start.sh trogdor.sh
kafka-broker-api-versions.sh kafka-delegation-tokens.sh kafka-mirror-maker.sh kafka-server-stop.sh windows
kafka-cluster.sh kafka-delete-records.sh kafka-preferred-replica-election.sh kafka-storage.sh zookeeper-security-migration.sh
kafka-configs.sh kafka-dump-log.sh kafka-producer-perf-test.sh kafka-streams-application-reset.sh zookeeper-server-start.sh
[root@-9930 bin]# ./kafka-server-start.sh -daemon ../config/server.properties
[root@-9930 bin]# jps
7761 QuorumPeerMain
15286 Jps
15150 Kafka
```

(7) Create a topic, describe it, change its partition count (partitions can only be increased, never decreased), and delete it. The commands below use --zookeeper, which works on Kafka 2.x. Kafka 3.0 removed this option from kafka-topics.sh, so on 3.x pass --bootstrap-server kafka1:9092 instead.

```shell
[root@-9930 bin]# ./kafka-topics.sh --create --zookeeper zookeeper1:2181 --replication-factor 1 --partitions 3 --topic kafka-demo
Created topic kafka-demo.
[root@-9930 bin]# ./kafka-topics.sh --describe --zookeeper zookeeper1:2181 --topic kafka-demo
Topic: kafka-demo TopicId: xjoO1e24Tm24JJMWUodD7w PartitionCount: 3 ReplicationFactor: 1 Configs:
    Topic: kafka-demo Partition: 0 Leader: 0 Replicas: 0 Isr: 0
    Topic: kafka-demo Partition: 1 Leader: 0 Replicas: 0 Isr: 0
    Topic: kafka-demo Partition: 2 Leader: 0 Replicas: 0 Isr: 0
# Alter the topic: the partition count can only be increased, not decreased
[root@-9930 bin]# ./kafka-topics.sh --alter --zookeeper zookeeper1:2181 --topic kafka-demo --partitions 5
WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected
Adding partitions succeeded!
[root@-9930 bin]# ./kafka-topics.sh --describe --zookeeper zookeeper1:2181 --topic kafka-demo
Topic: kafka-demo TopicId: xjoO1e24Tm24JJMWUodD7w PartitionCount: 5 ReplicationFactor: 1 Configs:
    Topic: kafka-demo Partition: 0 Leader: 0 Replicas: 0 Isr: 0
    Topic: kafka-demo Partition: 1 Leader: 0 Replicas: 0 Isr: 0
    Topic: kafka-demo Partition: 2 Leader: 0 Replicas: 0 Isr: 0
    Topic: kafka-demo Partition: 3 Leader: 0 Replicas: 0 Isr: 0
    Topic: kafka-demo Partition: 4 Leader: 0 Replicas: 0 Isr: 0
[root@-9930 bin]# ./kafka-topics.sh --delete --zookeeper zookeeper1:2181 --topic kafka-demo
```

| Parameter | Description |
|---|---|
| `--zookeeper` | Hostname and port of the ZooKeeper server to connect to. |
| `--topic <String: topic>` | Name of the topic to operate on. |
| `--create` | Create a topic. |
| `--delete` | Delete a topic. |
| `--alter` | Alter a topic. |
| `--list` | List all topics. |
| `--describe` | Show a detailed description of a topic. |
| `--partitions <Integer: # of partitions>` | Set the number of partitions. |
| `--replication-factor <Integer: replication factor>` | Set the number of replicas per partition. |
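The WARNING printed by --alter comes from key-based partitioning. A key's partition is derived from hash(key) modulo the partition count, so changing the count can change where a key lands, while records already written stay in their old partitions. The sketch below uses String.hashCode() as a stand-in hash purely for illustration; Kafka's default partitioner hashes the serialized key with murmur2 instead. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class RepartitionEffect {
    /** Stand-in for key-based partitioning: non-negative hash(key) mod partition count. */
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    /** Keys whose partition changes when the partition count goes from `before` to `after`. */
    static List<String> movedKeys(List<String> keys, int before, int after) {
        List<String> moved = new ArrayList<>();
        for (String key : keys) {
            if (partitionFor(key, before) != partitionFor(key, after)) {
                moved.add(key);
            }
        }
        return moved;
    }

    public static void main(String[] args) {
        List<String> keys = List.of("user-1", "user-2", "user-3", "user-4", "user-5");
        for (String key : keys) {
            System.out.printf("%s: 3 partitions -> %d, 5 partitions -> %d%n",
                    key, partitionFor(key, 3), partitionFor(key, 5));
        }
        System.out.println("keys that move: " + movedKeys(keys, 3, 5));
    }
}
```

A key that moves now has older records in one partition and newer records in another, so per-key ordering across the change is no longer guaranteed. This is also part of why Kafka does not let you reduce the partition count.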

(8) Start a console producer and send messages

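```shell
[root@-9930 bin]# ./kafka-console-producer.sh --broker-list kafka1:9092 --topic kafka-demo
>hello
>is
>kafka
>
```

| Parameter | Description |
|---|---|
| `--broker-list` | Hostname and port of the Kafka broker(s) to connect to. Newer versions deprecate it in favor of `--bootstrap-server`. |
| `--topic <String: topic>` | Name of the topic to operate on. |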

(9) Start a console consumer and receive messages

```shell
[root@-9930 bin]# ./kafka-console-consumer.sh --bootstrap-server kafka1:9092 --topic kafka-demo --from-beginning
hello
is
kafka
```

| Parameter | Description |
|---|---|
| `--bootstrap-server <String: server to connect to>` | Hostname and port of the Kafka broker(s) to connect to. |
| `--topic <String: topic>` | Name of the topic to operate on. |
| `--from-beginning` | Consume from the beginning of the topic. |
| `--group <String: consumer group id>` | Consumer group name to use. |

3. Kafka Producer

3.1 Producer Message Sending Flow

3.1.1 How Sending Works

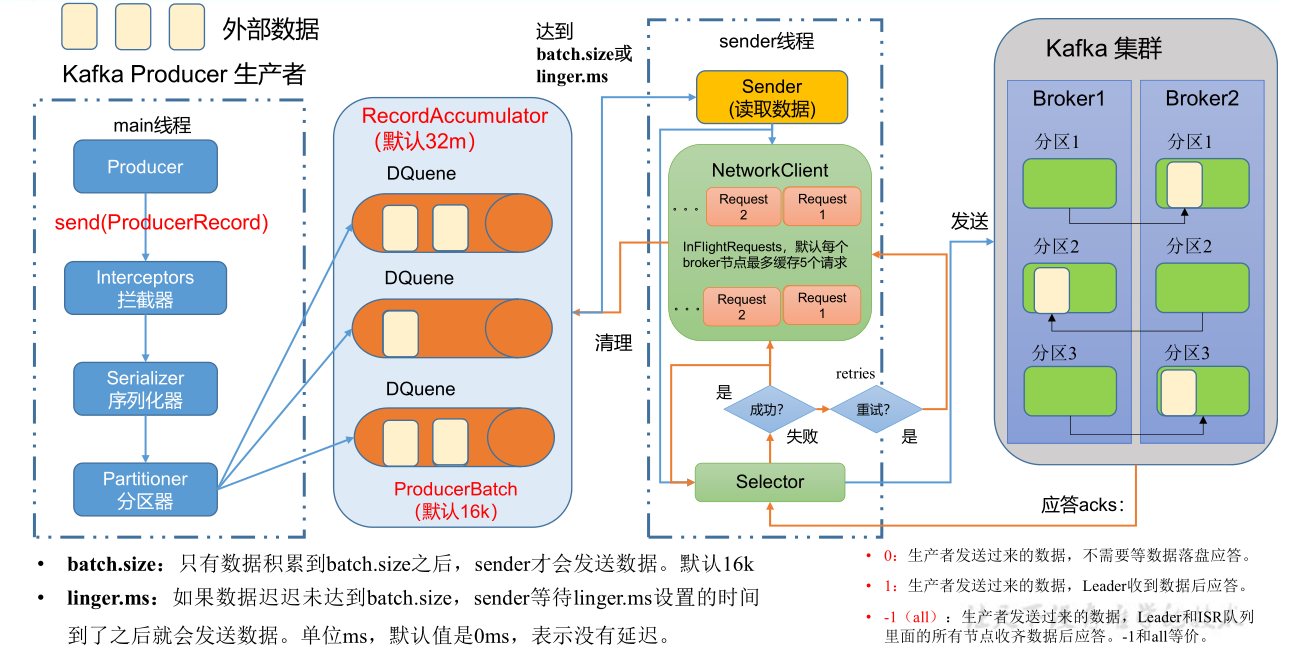

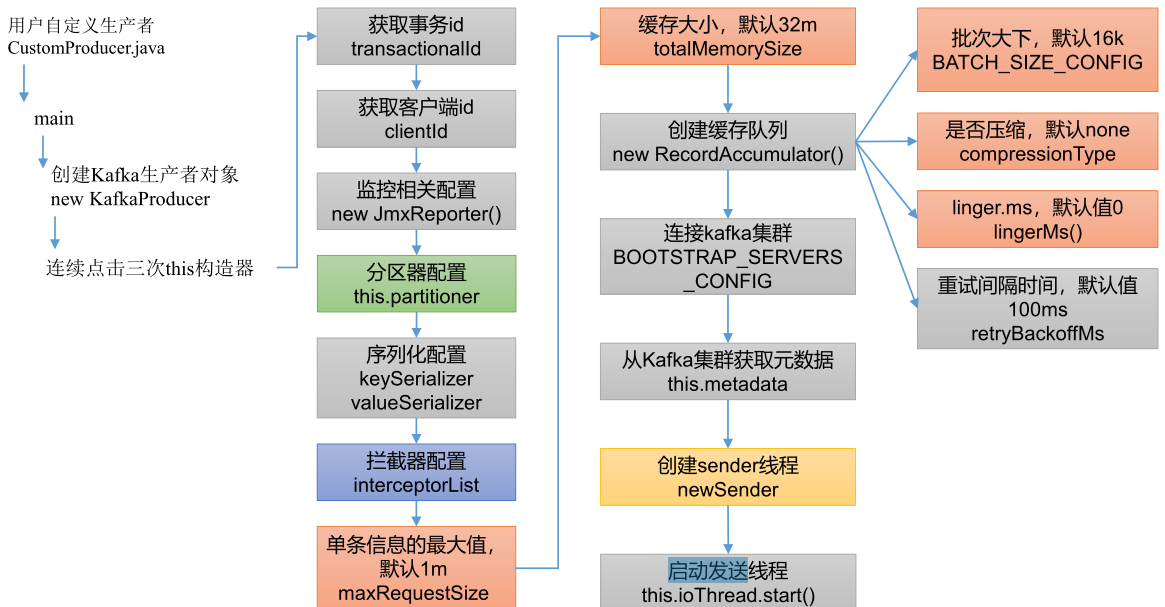

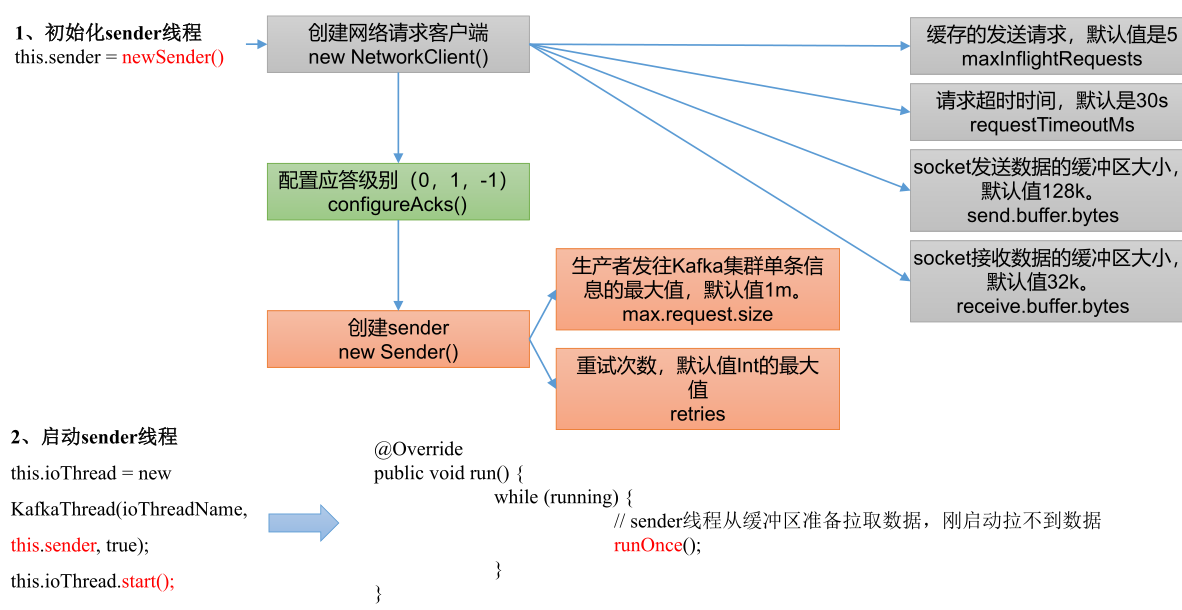

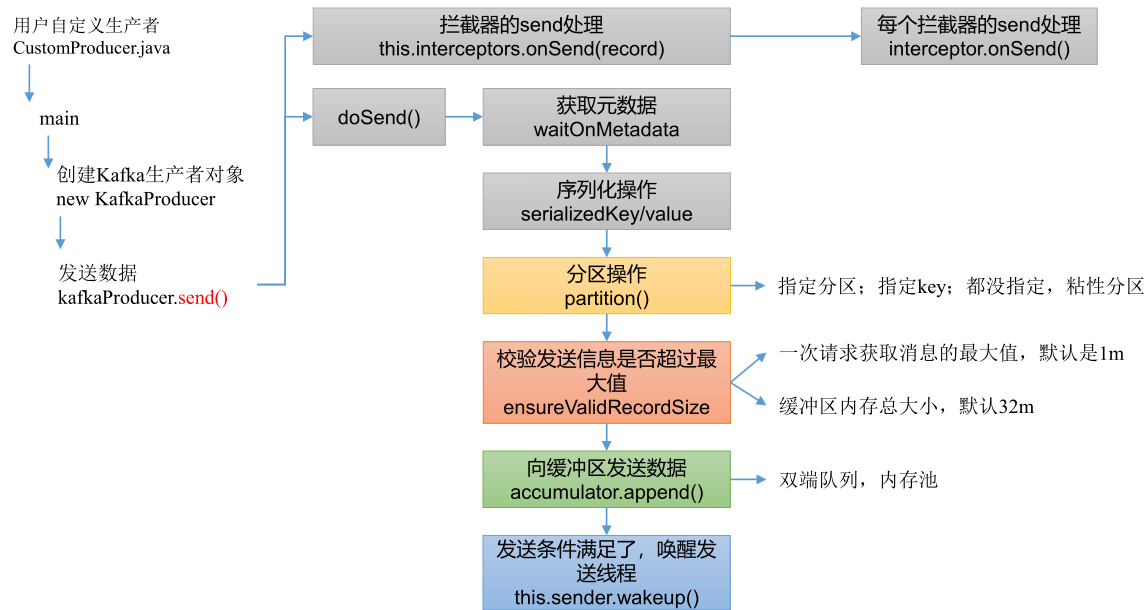

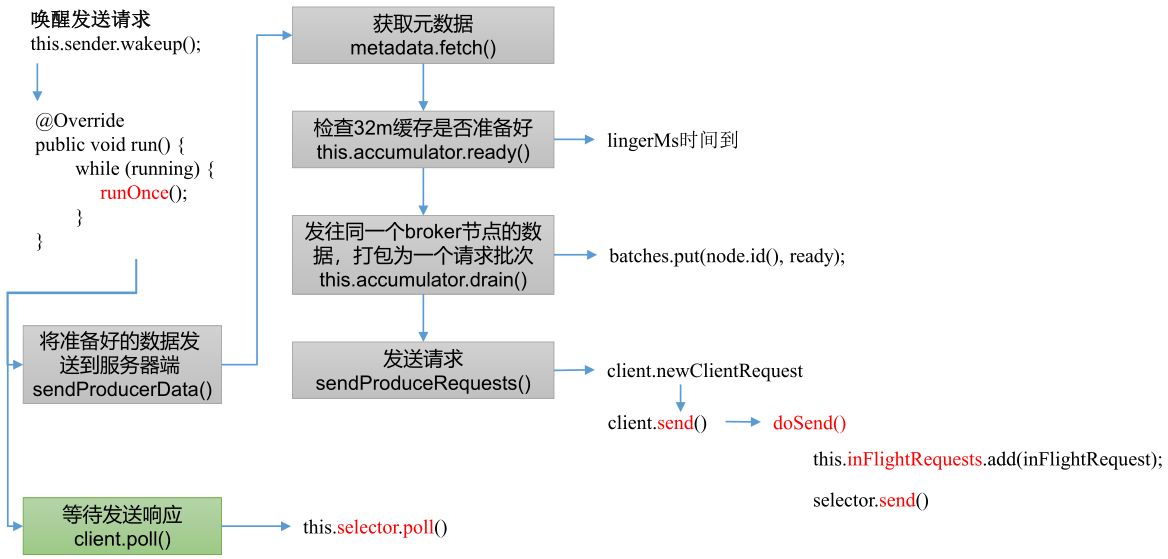

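Sending a message involves two threads: the main thread and the Sender thread. The main thread creates a RecordAccumulator, a buffer (32 MB by default, set by buffer.memory) that keeps a double-ended queue (Deque) of batches for each partition. The main thread writes messages into the RecordAccumulator, and the Sender thread continuously pulls batches from it and sends them to the Kafka brokers.

Main thread initialization:

Sender thread initialization:

Main thread sending data to the buffer:

Sender thread sending data: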
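The division of labor between the two threads can be sketched with a simplified accumulator. The main thread appends a record to the last batch of the target partition's deque, opening a new batch when the record would push the current one past batch.size. The Sender side drains batches that are full, or all batches once linger.ms has expired. This is an illustrative model, not Kafka's RecordAccumulator. Record size is approximated by String length rather than serialized bytes, and the linger check is passed in as a flag. The "full" test (more than one batch queued, or the batch reached its size) is modeled on how the real accumulator decides readiness.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class AccumulatorSketch {
    /** A batch of records bound for one partition. */
    static final class Batch {
        final int partition;
        final List<String> records = new ArrayList<>();
        int bytes = 0;

        Batch(int partition) { this.partition = partition; }
    }

    private final int batchSize; // plays the role of batch.size
    private final Map<Integer, Deque<Batch>> batches = new HashMap<>(); // one deque per partition

    AccumulatorSketch(int batchSize) { this.batchSize = batchSize; }

    /** Main thread: append to the partition's last batch, or open a new batch if it would overflow. */
    synchronized void append(int partition, String record) {
        Deque<Batch> deque = batches.computeIfAbsent(partition, p -> new ArrayDeque<>());
        Batch last = deque.peekLast();
        int size = record.length(); // simplification: String length instead of serialized size
        if (last == null || last.bytes + size > batchSize) {
            last = new Batch(partition);
            deque.addLast(last);
        }
        last.records.add(record);
        last.bytes += size;
    }

    /** Sender thread: take every full batch, or every batch when the linger time has expired. */
    synchronized List<Batch> drain(boolean lingerExpired) {
        List<Batch> ready = new ArrayList<>();
        for (Deque<Batch> deque : batches.values()) {
            while (!deque.isEmpty()) {
                Batch first = deque.peekFirst();
                // A batch is "full" if a newer batch exists behind it or it reached batchSize
                boolean full = deque.size() > 1 || first.bytes >= batchSize;
                if (!full && !lingerExpired) {
                    break;
                }
                ready.add(deque.pollFirst());
            }
        }
        return ready;
    }
}
```

In the real producer, the Sender thread runs this kind of drain in a loop, groups the drained batches by destination broker, and sends them as produce requests.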

3.1.2 Producer Parameters

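| Parameter | Description |
|---|---|
| bootstrap.servers | List of broker addresses the producer uses to connect to the cluster, e.g. kafka1:9092,kafka2:9092,kafka3:9092. One or more addresses can be given, separated by commas. Not every broker has to be listed, because the producer discovers the other brokers from the ones given. |
| key.serializer and value.serializer | Serializer classes for the message key and value. Fully qualified class names are required. |
| buffer.memory | Total size of the RecordAccumulator buffer, 32 MB by default. |
| batch.size | Maximum size of one batch in the buffer, 16 KB by default. Increasing it moderately can raise throughput, but setting it too large increases transmission latency. |
| linger.ms | If a batch has not reached batch.size, the sender sends it anyway after waiting linger.ms. Unit: ms. The default of 0 ms means no delay. For production, a value between 5 and 100 ms is suggested. |
| acks | 0: the producer does not wait for any acknowledgement. 1: the Leader acknowledges once it has received the data. -1 (all): the Leader acknowledges once it and every replica in the ISR have received the data. -1 and all are equivalent. all is the default since Kafka 3.0; earlier versions defaulted to 1. |
| max.in.flight.requests.per.connection | Maximum number of requests on one connection that have been sent but not yet acknowledged. Default 5. With idempotence enabled, the value must be between 1 and 5. |
| retries | Number of times the producer resends a message after a send error. Default is the maximum int value, 2147483647. If retries are enabled and message order must be preserved, either keep idempotence enabled or set max.in.flight.requests.per.connection=1. Otherwise, while a failed message is being retried, later messages may already have been written. |
| retry.backoff.ms | Interval between two retries, 100 ms by default. |
| enable.idempotence | Whether idempotence is enabled. Default true in Kafka 3.x (false in 2.x). |
| compression.type | Compression applied to all data the producer sends. Default none (no compression). Supported types: none, gzip, snappy, lz4, and zstd. |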
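The ordering caveat in the retries row can be reproduced with a tiny simulation. Assume three batches for the same partition, where only the first attempt of batch 1 fails with a retriable error. With several requests in flight, batches 2 and 3 are written before batch 1's retry. With max.in.flight.requests.per.connection=1, the order is kept. This models a producer without idempotence. With enable.idempotence=true, the broker uses per-partition sequence numbers to keep order for up to 5 in-flight requests. The names and failure pattern are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class RetryOrderingSketch {
    /**
     * Sends batches 1..3 of one partition in rounds, with up to maxInFlight batches in
     * flight per round. The first attempt of batch 1 fails and is retried in a later round.
     * Returns the order in which the batches end up in the partition log.
     */
    static List<Integer> simulate(int maxInFlight) {
        Deque<Integer> pending = new ArrayDeque<>(List.of(1, 2, 3));
        Set<Integer> alreadyFailed = new HashSet<>();
        List<Integer> log = new ArrayList<>();

        while (!pending.isEmpty()) {
            List<Integer> inFlight = new ArrayList<>();
            while (inFlight.size() < maxInFlight && !pending.isEmpty()) {
                inFlight.add(pending.pollFirst());
            }
            List<Integer> toRetry = new ArrayList<>();
            for (int batch : inFlight) {
                boolean transientError = batch == 1 && alreadyFailed.add(batch); // fails only once
                if (transientError) {
                    toRetry.add(batch);
                } else {
                    log.add(batch); // the broker appends this batch
                }
            }
            // Failed batches go back to the front of the queue, keeping their relative order
            for (int i = toRetry.size() - 1; i >= 0; i--) {
                pending.addFirst(toRetry.get(i));
            }
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println("maxInFlight=1: " + simulate(1)); // [1, 2, 3]
        System.out.println("maxInFlight=5: " + simulate(5)); // [2, 3, 1]
    }
}
```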

3.2 Kafka Producer API

3.2.1 Plain Asynchronous Send

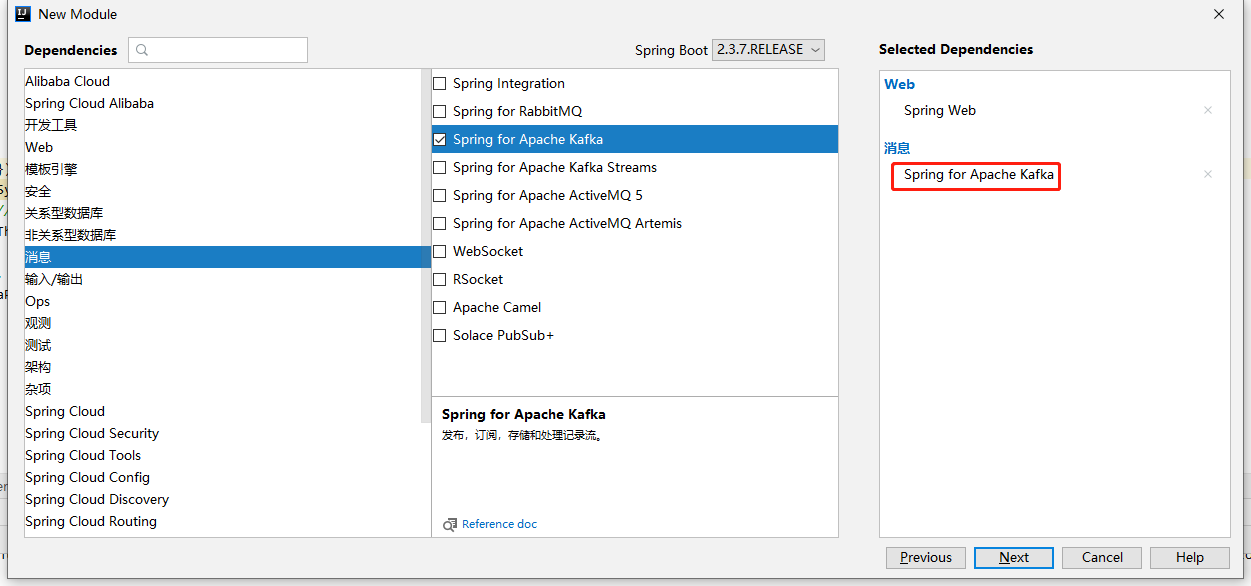

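1. Add the dependency. When creating the project in IDEA, you can tick Spring for Apache Kafka to add it automatically. No version element is given here, which assumes the version is managed by Spring Boot's dependency management.

```xml
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
```

Alternatively, add the org.apache.kafka:kafka-clients dependency manually; either works, and spring-kafka already pulls in kafka-clients transitively.

```xml
<!-- https://mvnrepository.com/artifact/org.apache.kafka/kafka-clients -->
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>3.0.0</version>
</dependency>
```

2. Create a custom producer, CustomProducer: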

```java
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

/**
 * @author huangdh
 * @version 1.0
 * @description:
 * @date 2022-11-05 16:22
 */
public class CustomProducer {

    public static void main(String[] args) {
        // 1. Create the producer configuration
        Properties properties = new Properties();
        // Broker address(es) to connect to
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "8.8.80.8:9092");
        // Key and value serializers (required):
        // org.apache.kafka.common.serialization.StringSerializer
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

        // 2. Create the producer; try-with-resources closes it, which also sends any buffered records
        try (KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties)) {
            // 3. send() is asynchronous: it returns as soon as the record is in the buffer
            for (int i = 0; i < 500; i++) {
                kafkaProducer.send(new ProducerRecord<>("kafka-offset", "learn kafka by yourself, day " + i));
            }
        }
    }
}
```

3.2.2 Asynchronous/Synchronous Send with a Callback

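The callback is invoked asynchronously when the producer receives the ack. It takes two parameters: the metadata (RecordMetadata) and the exception (Exception). If the exception is null, the message was sent successfully; if it is not null, sending failed.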
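The asynchronous/synchronous distinction can be illustrated without a broker by using the JDK's CompletableFuture. send() hands back a future right away, and a callback later receives either a result or an exception, never both. Blocking on the future turns the call into a synchronous one, which is what calling get() on the result of send() does in the code below. This is an analogy under stated assumptions: fakeSend is a hypothetical stand-in, the "offset" it returns is simply the value's length, and join() is used instead of get() to avoid checked exceptions.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.BiConsumer;

class SendSemanticsSketch {
    /**
     * Hypothetical stand-in for producer.send(record, callback): the "broker reply" is
     * produced on another thread, the callback runs once with (offset, null) on success or
     * (null, error) on failure, and the returned future completes after the callback has run.
     */
    static CompletableFuture<Long> fakeSend(String value, boolean fail, ExecutorService ioThread,
                                            BiConsumer<Long, Throwable> callback) {
        return CompletableFuture
                .supplyAsync(() -> {
                    if (fail) {
                        throw new IllegalStateException("simulated broker error");
                    }
                    return (long) value.length(); // pretend this is the assigned offset
                }, ioThread)
                .whenComplete((offset, error) -> callback.accept(offset, unwrap(error)));
    }

    /** supplyAsync wraps thrown exceptions in CompletionException; hand the original to the callback. */
    private static Throwable unwrap(Throwable error) {
        return (error instanceof CompletionException && error.getCause() != null) ? error.getCause() : error;
    }

    public static void main(String[] args) {
        ExecutorService io = Executors.newSingleThreadExecutor();
        try {
            // Asynchronous: returns immediately; the callback reports the outcome later
            fakeSend("hello", false, io, (offset, e) ->
                    System.out.println(e == null ? "ok, offset " + offset : "failed: " + e.getMessage()));

            // Synchronous: block on the returned future until the result is available
            long offset = fakeSend("kafka", false, io, (o, e) -> { }).join();
            System.out.println("sync result: " + offset);
        } finally {
            io.shutdown();
        }
    }
}
```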

1. Create the class CustomProducerCallback:

```java
import org.apache.kafka.clients.producer.Callback;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;
import java.util.concurrent.ExecutionException;

/**
 * @author huangdh
 * @version 1.0
 * @description:
 * @date 2022-11-09 13:49
 */
public class CustomProducerCallback {

    public static void main(String[] args) throws InterruptedException, ExecutionException {
        // 1. Create the producer configuration
        Properties properties = new Properties();
        // 2. Connection and serializer settings
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "8.8.8.8:9092");
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

        // 3. Create the producer
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties);

        // Callback used by both send styles: e == null means the broker acknowledged the record
        Callback callback = (recordMetadata, e) -> {
            if (e == null) {
                System.out.println("topic: " + recordMetadata.topic() + " -> partition: " + recordMetadata.partition());
            } else {
                e.printStackTrace();
            }
        };

        // 4. Call send()
        for (int i = 0; i < 600; i++) {
            // Asynchronous send: returns immediately; the callback runs when the ack arrives
            // kafkaProducer.send(new ProducerRecord<>("kafka", "learn kafka by yourself, day " + i), callback);

            // Synchronous send: the asynchronous send plus get(), which blocks until the ack arrives
            RecordMetadata metadata = kafkaProducer.send(
                    new ProducerRecord<>("kafka-offset", "learn kafka by yourself, day " + i), callback).get();
            System.out.println("sync send result: partition: " + metadata.partition()
                    + " topic: " + metadata.topic() + " offset: " + metadata.offset());

            // Pause briefly; the messages will be seen going to different partitions
            Thread.sleep(100);
        }

        // 5. Release resources
        kafkaProducer.close();
    }
}
```

Output (excerpt):

```text
topic: kafka-offset -> partition: 0
sync send result: partition: 0 topic: kafka-offset offset: 3187
21:10:22.837 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Sending PRODUCE request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=61) and timeout 30000 to node 0: {acks=-1,timeout=30000,partitionSizes=[kafka-offset-2=101]}
21:10:22.849 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Received PRODUCE response from node 0 for request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=61): ProduceResponseData(responses=[TopicProduceResponse(name='kafka-offset', partitionResponses=[PartitionProduceResponse(index=2, errorCode=0, baseOffset=2586, logAppendTimeMs=-1, logStartOffset=0, recordErrors=[], errorMessage=null)])], throttleTimeMs=0)
topic: kafka-offset -> partition: 2
sync send result: partition: 2 topic: kafka-offset offset: 2586
21:10:22.950 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Sending PRODUCE request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=62) and timeout 30000 to node 0: {acks=-1,timeout=30000,partitionSizes=[kafka-offset-1=101]}
21:10:22.970 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Received PRODUCE response from node 0 for request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=62): ProduceResponseData(responses=[TopicProduceResponse(name='kafka-offset', partitionResponses=[PartitionProduceResponse(index=1, errorCode=0, baseOffset=2773, logAppendTimeMs=-1, logStartOffset=0, recordErrors=[], errorMessage=null)])], throttleTimeMs=0)
topic: kafka-offset -> partition: 1
sync send result: partition: 1 topic: kafka-offset offset: 2773
21:10:23.073 [main] INFO org.apache.kafka.clients.producer.KafkaProducer - [Producer clientId=producer-1] Closing the Kafka producer with timeoutMillis = 9223372036854775807 ms.
21:10:23.073 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.producer.internals.Sender - [Producer clientId=producer-1] Beginning shutdown of Kafka producer I/O thread, sending remaining records.
21:10:23.078 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.producer.internals.Sender - [Producer clientId=producer-1] Shutdown of Kafka producer I/O thread has completed.
21:10:23.078 [main] INFO org.apache.kafka.common.metrics.Metrics - Metrics scheduler closed
```

3.3 Producer Partitions

3.3.1 Benefits of Partitioning

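- Makes good use of storage: each partition is stored on one broker, so massive amounts of data can be cut into partition-sized chunks and stored across many brokers. Distributing partitions sensibly balances the load.
- Increases parallelism: producers can send data partition by partition, and consumers can consume data partition by partition.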

3.3.2 Producer Partitioning Strategy

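1) The default partitioner, DefaultPartitioner. The source below is from the kafka-clients generation used in this article. From Kafka 3.3, this class is deprecated and the producer applies its built-in partitioning logic instead (KIP-794).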

```java
/*
 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 *    http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
package org.apache.kafka.clients.producer.internals;

import org.apache.kafka.clients.producer.Partitioner;
import org.apache.kafka.common.Cluster;
import org.apache.kafka.common.utils.Utils;

import java.util.Map;

/**
 * The default partitioning strategy:
 * <ul>
 * <li>If a partition is specified in the record, use it
 * <li>If no partition is specified but a key is present choose a partition based on a hash of the key
 * <li>If no partition or key is present choose the sticky partition that changes when the batch is full.
 *
 * See KIP-480 for details about sticky partitioning.
 */
public class DefaultPartitioner implements Partitioner {

    private final StickyPartitionCache stickyPartitionCache = new StickyPartitionCache();

    public void configure(Map<String, ?> configs) {}

    /**
     * Compute the partition for the given record.
     *
     * @param topic The topic name
     * @param key The key to partition on (or null if no key)
     * @param keyBytes serialized key to partition on (or null if no key)
     * @param value The value to partition on or null
     * @param valueBytes serialized value to partition on or null
     * @param cluster The current cluster metadata
     */
    public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {
        return partition(topic, key, keyBytes, value, valueBytes, cluster, cluster.partitionsForTopic(topic).size());
    }

    /**
     * Compute the partition for the given record.
     *
     * @param topic The topic name
     * @param numPartitions The number of partitions of the given {@code topic}
     * @param key The key to partition on (or null if no key)
     * @param keyBytes serialized key to partition on (or null if no key)
     * @param value The value to partition on or null
     * @param valueBytes serialized value to partition on or null
     * @param cluster The current cluster metadata
     */
    public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster,
                         int numPartitions) {
        if (keyBytes == null) {
            return stickyPartitionCache.partition(topic, cluster);
        }
        // hash the keyBytes to choose a partition
        return Utils.toPositive(Utils.murmur2(keyBytes)) % numPartitions;
    }

    public void close() {}

    /**
     * If a batch completed for the current sticky partition, change the sticky partition.
     * Alternately, if no sticky partition has been determined, set one.
     */
    public void onNewBatch(String topic, Cluster cluster, int prevPartition) {
        stickyPartitionCache.nextPartition(topic, cluster, prevPartition);
    }
}
```

(1) When a partition is specified, that value is used directly as the partition. For example, with partition=0 all data is written to partition 0. This rule is applied by KafkaProducer before the partitioner is called, which is why the code above only handles the key and no-key cases.

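(2) When no partition is given but a key is present, the key's hash is taken modulo the topic's partition count to get the partition. For example, if key1's hash is 5, key2's hash is 6, and the topic has 2 partitions, then value1 (with key1) is written to partition 1 (5 % 2 = 1) and value2 (with key2) to partition 0 (6 % 2 = 0).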

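(3) When there is neither a partition nor a key, Kafka uses the sticky partitioner. It picks a partition at random and keeps using it for as long as possible. Once that partition's batch is full or has been sent, Kafka randomly picks another partition, different from the previous one. For example, Kafka first picks partition 0 at random. When partition 0's current batch is full (16 KB by default) or linger.ms has elapsed, Kafka randomly picks another partition, drawing again if it gets 0.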
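The three rules can be condensed into one self-contained sketch. The murmur2 and toPositive methods below reproduce, from memory, the helpers that DefaultPartitioner calls (Utils.murmur2 and Utils.toPositive); check them against the kafka-clients source before relying on exact partition numbers. The sticky part is simplified to a per-topic random partition that changes only when onNewBatch is called. Class and method names are hypothetical, and keys are assumed to be Strings serialized as UTF-8, which is what StringSerializer does by default.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ThreadLocalRandom;

class DefaultPartitioningSketch {
    /** 32-bit murmur2 hash, as used for hashing Kafka keys (reproduced for illustration). */
    static int murmur2(byte[] data) {
        int length = data.length;
        int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        switch (length % 4) { // mix in the trailing 1-3 bytes (deliberate fall-through)
            case 3: h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2: h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1: h ^= data[length & ~3] & 0xff;
                    h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    /** Clears the sign bit so that the modulo below is never negative. */
    static int toPositive(int n) {
        return n & 0x7fffffff;
    }

    private final Map<String, Integer> stickyPartition = new HashMap<>();

    /** Rule 1: explicit partition. Rule 2: hash(key) mod partitions. Rule 3: sticky partition. */
    int partition(String topic, Integer explicitPartition, String key, int numPartitions) {
        if (explicitPartition != null) {
            return explicitPartition;
        }
        if (key != null) {
            return toPositive(murmur2(key.getBytes(StandardCharsets.UTF_8))) % numPartitions;
        }
        return stickyPartition.computeIfAbsent(topic, t -> ThreadLocalRandom.current().nextInt(numPartitions));
    }

    /** Called when the sticky partition's batch is done: switch to a different random partition. */
    void onNewBatch(String topic, int prevPartition, int numPartitions) {
        if (numPartitions < 2) {
            return;
        }
        int next;
        do {
            next = ThreadLocalRandom.current().nextInt(numPartitions);
        } while (next == prevPartition);
        stickyPartition.put(topic, next);
    }
}
```

By hand calculation with this port, key "a" on a 7-partition topic maps to partition 5, which agrees with the Case 2 run later in this section.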

#######################################################################

Because all the following examples need the same basic Kafka configuration, the common settings are extracted into a factory class:

```java
package com.atguigu.springcloud.kafka.producer;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

/**
 * @author huangdh
 * @version 1.0
 * @description:
 * @date 2022-11-10 17:09
 */
public class KafkaProducerFactory {

    public static KafkaProducer<String, String> getProducer() {
        // 1. Create the producer configuration
        Properties properties = new Properties();
        // 2. Connection and serializer settings
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "8.8.80.8:9092");
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        // 3. Custom partitioner (uncomment for the custom-partitioner case)
        // properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, "com.atguigu.springcloud.kafka.partitions.CustomerPartitioner");
        // 4. batch.size: batch size, 16 KB by default
        // properties.put(ProducerConfig.BATCH_SIZE_CONFIG, 16384);
        // 5. linger.ms: wait time, 0 by default
        // properties.put(ProducerConfig.LINGER_MS_CONFIG, 1);
        // 6. buffer.memory: RecordAccumulator buffer size, 32 MB by default
        // properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, 33554432);
        // 7. compression.type: none by default; gzip, snappy, lz4, and zstd are supported
        // properties.put(ProducerConfig.COMPRESSION_TYPE_CONFIG, "snappy");
        // 8. transactional.id: required when using transactions
        // properties.put(ProducerConfig.TRANSACTIONAL_ID_CONFIG, "transaction_id_0");
        // 9. enable.idempotence: transactions require idempotence (true by default)
        // properties.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG, true);
        return new KafkaProducer<>(properties);
    }
}
```

Setting the log level:

Set the log level of the program to INFO. Without a logging configuration file, Logback defaults to DEBUG. Add the following code:

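```java
import ch.qos.logback.classic.Level;
import ch.qos.logback.classic.Logger;
import ch.qos.logback.classic.LoggerContext;
import org.slf4j.LoggerFactory;

import java.util.List;

// Inside the class that contains main(): raise every Logback logger to INFO (the default is DEBUG)
static {
    LoggerContext loggerContext = (LoggerContext) LoggerFactory.getILoggerFactory();
    List<Logger> loggerList = loggerContext.getLoggerList();
    loggerList.forEach(logger -> logger.setLevel(Level.INFO));
}
```

With the default log level, a lot of DEBUG output from org.apache.kafka.clients.NetworkClient is printed, which clutters the console: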

```text
topic: kafka-offset -> partition: 1
21:40:55.386 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Sending PRODUCE request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=6) and timeout 30000 to node 0: {acks=-1,timeout=30000,partitionSizes=[kafka-offset-1=107]}
21:40:55.401 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Received PRODUCE response from node 0 for request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=6): ProduceResponseData(responses=[TopicProduceResponse(name='kafka-offset', partitionResponses=[PartitionProduceResponse(index=1, errorCode=0, baseOffset=2787, logAppendTimeMs=-1, logStartOffset=0, recordErrors=[], errorMessage=null)])], throttleTimeMs=0)
topic: kafka-offset -> partition: 1
21:40:55.486 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Sending PRODUCE request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=7) and timeout 30000 to node 0: {acks=-1,timeout=30000,partitionSizes=[kafka-offset-1=107]}
21:40:55.497 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Received PRODUCE response from node 0 for request with header RequestHeader(apiKey=PRODUCE, apiVersion=9, clientId=producer-1, correlationId=7): ProduceResponseData(responses=[TopicProduceResponse(name='kafka-offset', partitionResponses=[PartitionProduceResponse(index=1, errorCode=0, baseOffset=2788, logAppendTimeMs=-1, logStartOffset=0, recordErrors=[], errorMessage=null)])], throttleTimeMs=0)
topic: kafka-offset -> partition: 1
21:40:55.588 [main] INFO org.apache.kafka.clients.producer.KafkaProducer - [Producer clientId=producer-1] Closing the Kafka producer with timeoutMillis = 9223372036854775807 ms.
21:40:55.589 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.producer.internals.Sender - [Producer clientId=producer-1] Beginning shutdown of Kafka producer I/O thread, sending remaining records.
21:40:55.596 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.producer.internals.Sender - [Producer clientId=producer-1] Shutdown of Kafka producer I/O thread has completed.
21:40:55.596 [main] INFO org.apache.kafka.common.metrics.Metrics - Metrics scheduler closed
```

Log output after the change:

```text
topic: kafka-offset -> partition: 1
topic: kafka-offset -> partition: 1
topic: kafka-offset -> partition: 1
topic: kafka-offset -> partition: 1
topic: kafka-offset -> partition: 1
```

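Case 1: choose the partition with a custom rule. If the value contains "kafka", send it to partition 0.

- Define a class that implements the Partitioner interface.
- Override the partition() method.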

(1) A custom partitioner class that implements the Partitioner interface

```java
package com.atguigu.springcloud.kafka.partitions;

import org.apache.kafka.clients.producer.Partitioner;
import org.apache.kafka.common.Cluster;

import java.util.Map;

/**
 * @author huangdh
 * @version 1.0
 * @description:
 * @date 2022-11-10 17:44
 */
public class CustomerPartitioner implements Partitioner {

    @Override
    public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) {
        // Read the message value (guard against null values)
        String msgValue = value == null ? "" : value.toString();
        // Values containing "kafka" go to partition 0, everything else to partition 1
        // (so the topic needs at least 2 partitions)
        return msgValue.contains("kafka") ? 0 : 1;
    }

    @Override
    public void close() {
    }

    @Override
    public void configure(Map<String, ?> configs) {
    }
}
```

(2) The class CustomProducerCallbackPartitions. It gets its producer from KafkaProducerFactory, so uncomment the PARTITIONER_CLASS_CONFIG line there for CustomerPartitioner to take effect.

import ch.qos.logback.classic.Level;

import ch.qos.logback.classic.Logger;

import ch.qos.logback.classic.LoggerContext;

import org.apache.kafka.clients.producer.Callback;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.slf4j.LoggerFactory;

import java.util.List;

/**

* @author huangdh

* @version 1.0

* @description: 将数据发往指定分区

* @date 2022-11-10 17:06

*/

public class CustomProducerCallbackPartitions {

// Raise the log level to INFO (logback's default level is DEBUG)

static {

LoggerContext loggerContext = (LoggerContext) LoggerFactory.getILoggerFactory();

List<Logger> loggerList = loggerContext.getLoggerList();

loggerList.forEach(logger -> {

logger.setLevel(Level.INFO);

});

}

public static void main(String[] args) throws InterruptedException {

KafkaProducer<String, String> producer = KafkaProducerFactory.getProducer();

for (int i = 0; i < 5; i++) {

String msg = "work hard ";

if (i%2 == 0){

msg = "learn kafka by yourself,第" + i + "day";

}else {

msg = msg + "第" + i + "day";

}

// No partition or key is given, so the configured CustomerPartitioner chooses the partition

producer.send(new ProducerRecord<>("kafka",msg), new Callback() {

@Override

public void onCompletion(RecordMetadata metadata, Exception exception) {

if (exception == null) {

System.out.println("主题:" + metadata.topic() + "->" + "分区:" + metadata.partition());

} else {

exception.printStackTrace();

}

}

});

Thread.sleep(100);

}

// Close the producer (waits for buffered records to be sent)

producer.close();

}

}

(3) Output:

主题:kafka->分区:0

主题:kafka->分区:1

主题:kafka->分区:0

主题:kafka->分区:1

主题:kafka->分区:0
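The producer in case 1 only routes through CustomerPartitioner if its configuration names that class. The KafkaProducerFactory that does this is not shown here. The sketch below assumes the partitioner lives in the package shown above. The configuration key is `partitioner.class`, which is the value of `ProducerConfig.PARTITIONER_CLASS_CONFIG`, and it takes the fully qualified class name. `PartitionerConfigSketch` and the `localhost:9092` address are hypothetical. Only `java.util.Properties` is used, so the sketch runs without the Kafka client on the classpath.

```java
import java.util.Properties;

// Hypothetical helper that builds producer settings naming the custom partitioner.
// In real code the Properties would be passed to new KafkaProducer<>(props).
class PartitionerConfigSketch {
    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Same key as ProducerConfig.PARTITIONER_CLASS_CONFIG; value is the fully qualified class name
        props.put("partitioner.class", "com.atguigu.springcloud.kafka.partitions.CustomerPartitioner");
        return props;
    }

    public static void main(String[] args) {
        Properties props = producerProps("localhost:9092"); // placeholder address
        System.out.println(props.getProperty("partitioner.class"));
    }
}
```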

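Case 2: when no partition is specified but a key is, the partition is the key's hash modulo the number of partitions of the topic.

Describe the "kafka" topic; it has 7 partitions. (The --zookeeper option used below was removed from kafka-topics.sh in Kafka 3.0; newer versions use --bootstrap-server instead.)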

[root@-9930 bin]# ./kafka-topics.sh --describe --zookeeper zookeeper1:2181 --topic kafka

Topic: kafka TopicId: aW-JTB_PQw-aUpXd0hF47A PartitionCount: 7 ReplicationFactor: 1 Configs:

Topic: kafka Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 1 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 2 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 3 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 4 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 5 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 6 Leader: 0 Replicas: 0 Isr: 0

(1) With the key set to "a" (String partition = "a" in the author's code), the records go to:

主题:kafka->分区:5

主题:kafka->分区:5

主题:kafka->分区:5

主题:kafka->分区:5

主题:kafka->分区:5

(2) With the key set to "b" (String partition = "b"), the records go to:

主题:kafka->分区:6

主题:kafka->分区:6

主题:kafka->分区:6

主题:kafka->分区:6

主题:kafka->分区:6

(3) With the key set to "c" (String partition = "c"), the records go to:

主题:kafka->分区:0

主题:kafka->分区:0

主题:kafka->分区:0

主题:kafka->分区:0

主题:kafka->分区:0
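The next sketch makes the "hash of the key modulo the partition count" rule concrete. It is a stand-in and not Kafka's code. Kafka's default partitioner hashes the serialized key bytes with murmur2. The hypothetical `KeyHashPartitionSketch` uses `Arrays.hashCode` instead, so its partition numbers will not match the log above. What carries over is the behaviour: the same key always maps to the same partition, and the result always lies in `0..numPartitions-1`.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Sketch of "partition = hash(key) % numPartitions".
// Assumption: Arrays.hashCode stands in for Kafka's murmur2 hash.
class KeyHashPartitionSketch {
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        int hash = Arrays.hashCode(keyBytes);
        // Clear the sign bit so the modulo result is never negative
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"a", "b", "c"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 7));
        }
    }
}
```

Because the mapping depends only on the key and the partition count, adding partitions to a topic changes where existing keys land.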

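3.4 How to improve producer throughput

- batch.size: batch size, default 16K.

- linger.ms: how long the producer waits for a batch to fill, default 0.

- buffer.memory: size of the RecordAccumulator buffer, default 32M.

- compression.type: compression codec, default none; valid values are gzip, snappy, lz4 and zstd.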

// batch.size: batch size in bytes, default 16K

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,16384);

// linger.ms: how long to wait for more records, default 0 (set to 1 ms here)

properties.put(ProducerConfig.LINGER_MS_CONFIG,1);

// buffer.memory: RecordAccumulator buffer size, default 32M

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,33554432);

// compression.type: compression codec, default none; options gzip, snappy, lz4 and zstd

properties.put(ProducerConfig.COMPRESSION_TYPE_CONFIG,"snappy");
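The next sketch is a rough model of how batch.size and linger.ms interact, written as a hypothetical `BatchingSketch` class. It assumes equally sized records on one partition. A batch counts as sent when it is full, or when the next record arrives after linger.ms has elapsed. The real RecordAccumulator and sender thread are more involved, so read this as an illustration only.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of producer batching for one partition.
// Assumption: all records have the same size; a batch is sent when it is full
// or when the next record arrives more than lingerMs after the batch was opened.
class BatchingSketch {
    static List<Integer> recordsPerBatch(long[] arrivalMs, int recordBytes,
                                         int batchSizeBytes, long lingerMs) {
        List<Integer> batches = new ArrayList<>();
        int count = 0;       // records in the currently open batch
        long openedAt = 0;   // arrival time of the first record in that batch
        for (long t : arrivalMs) {
            boolean full = (count + 1) * recordBytes > batchSizeBytes;
            boolean lingerExpired = t - openedAt > lingerMs;
            if (count > 0 && (full || lingerExpired)) {
                batches.add(count); // send the open batch
                count = 0;
            }
            if (count == 0) {
                openedAt = t;
            }
            count++;
        }
        if (count > 0) {
            batches.add(count); // flush whatever is left
        }
        return batches;
    }

    public static void main(String[] args) {
        long[] arrivals = {0, 1, 2, 3, 4, 5, 6, 7, 8, 9}; // one 4 KB record per ms
        System.out.println(recordsPerBatch(arrivals, 4096, 16384, 0)); // linger.ms = 0
        System.out.println(recordsPerBatch(arrivals, 4096, 16384, 5)); // linger.ms = 5
    }
}
```

In this model, linger.ms=0 sends every record in its own batch (ten batches of one record). With linger.ms=5, four 4 KB records share each 16 KB batch, giving batches of 4, 4 and 2.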

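3.5 How to improve data reliability

3.5.1 How acks works

- acks=0: the producer does not wait for the data to be written before treating it as sent. If the Leader crashes after receiving the message but before writing it to disk, the message is lost. Reliability: data can be lost.

- acks=1: the Leader acknowledges after receiving the data. If the Leader crashes after acknowledging but before any Follower has replicated the data, the new Leader never receives that message. The producer does not resend it, because it already considers the send successful. Reliability: data can be lost.

- acks=-1 (all): the Leader acknowledges only after it and all nodes in the ISR have received the data.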

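Question: with acks=-1 (all), the Leader receives the data and all Followers start replicating it. One Follower, because of some failure, cannot sync with the Leader for a long time. How is this handled?

The Leader maintains a dynamic in-sync replica set (ISR): the Leader plus the Followers that keep up with it (e.g. leader:0, isr:0,1,2). If a Follower sends no fetch request to the Leader, or does not catch up, for too long, it is removed from the ISR. The threshold is set by replica.lag.time.max.ms, default 30s. For example, if replica 2 times out, the state becomes (leader:0, isr:0,1). This way the Leader does not wait for nodes that are unreachable or have failed.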
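The ISR rule can be sketched as a pure function. The hypothetical `IsrSketch` assumes we already know when each Follower was last fully caught up with the Leader. A Follower stays in the ISR only while that moment was no more than replica.lag.time.max.ms ago. Replicas that drop out make up the OSR.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Sketch of ISR membership based on replica.lag.time.max.ms.
// Assumption: lastCaughtUpMs holds, per follower id, the last time it had caught up.
class IsrSketch {
    static Set<Integer> computeIsr(int leaderId, Map<Integer, Long> lastCaughtUpMs,
                                   long nowMs, long replicaLagTimeMaxMs) {
        Set<Integer> isr = new TreeSet<>();
        isr.add(leaderId); // the Leader is always part of its own ISR
        for (Map.Entry<Integer, Long> e : lastCaughtUpMs.entrySet()) {
            if (nowMs - e.getValue() <= replicaLagTimeMaxMs) {
                isr.add(e.getKey()); // caught up recently enough
            }
        }
        return isr;
    }

    public static void main(String[] args) {
        // Leader 0; follower 1 caught up 2 s ago, follower 2 has lagged for 40 s
        Map<Integer, Long> lastCaughtUp = Map.of(1, 98_000L, 2, 60_000L);
        System.out.println(computeIsr(0, lastCaughtUp, 100_000L, 30_000L));
    }
}
```

With the 30 s default, follower 2 is dropped, which reproduces the (leader:0, isr:0,1) example above.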

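Reliability analysis for acks=-1 (all):

- If the partition has only 1 replica, or the minimum number of ISR replicas that must acknowledge (min.insync.replicas, default 1) is 1, the effect is the same as acks=1 and data can still be lost (leader:0, isr:0).

- Condition for fully reliable data = acks set to -1 + partition replicas >= 2 + min.insync.replicas >= 2.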

// Set acks ("all" is equivalent to "-1")

properties.put(ProducerConfig.ACKS_CONFIG, "all");

3.5.2 Reliability summary

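- acks=0: the producer sends the data and does not wait for anything. Low reliability, high efficiency.

- acks=1: the Leader acknowledges the data. Medium reliability, medium efficiency.

- acks=-1: the Leader and all Followers in the ISR acknowledge the data. High reliability, low efficiency.

In production, acks=0 is rarely used. acks=1 is typically used for ordinary logs, where losing an occasional record is acceptable. acks=-1 is typically used for money-related data and other scenarios with high reliability requirements.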

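3.6 Data deduplication

3.6.1 Delivery semantics

- At Least Once = acks set to -1 + partition replicas >= 2 + min.insync.replicas >= 2. Data is not lost, but it may be duplicated.

- At Most Once = acks set to 0. Data is not duplicated, but it may be lost.

- Exactly Once = idempotence + at least once (acks=-1 + partition replicas >= 2 + min.insync.replicas >= 2). Very important data, such as money-related data, must be neither duplicated nor lost.

Kafka 0.11 introduced two major features: idempotence and transactions.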

3.6.2 Idempotence

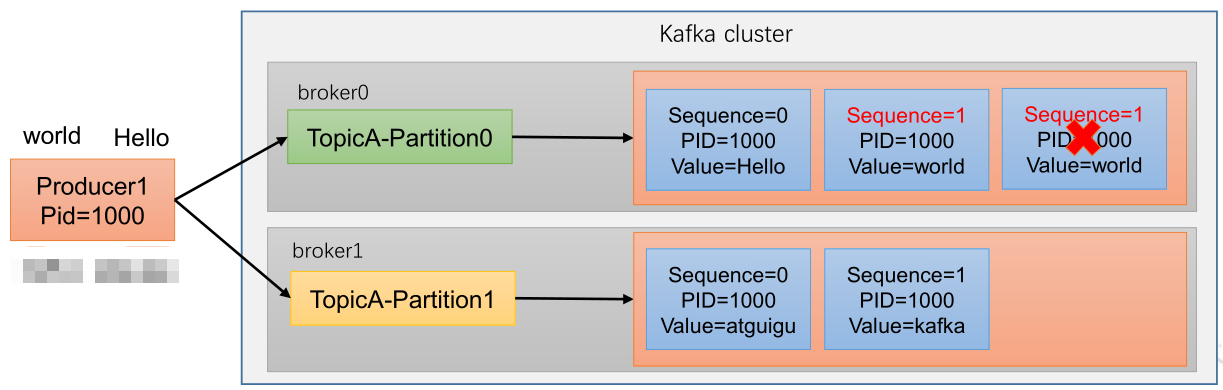

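Idempotence means that however many times the Producer sends the same data to the Broker, the Broker persists only one copy, so there are no duplicates.

How duplicates are detected: among messages with the same <PID, Partition, SeqNumber> key, the Broker persists only one. PID is the producer ID, which Kafka assigns anew every time the producer restarts. Partition is the partition number. SeqNumber (the sequence number) increases monotonically.

Therefore idempotence only guarantees no duplicates within a single partition and a single producer session.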
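The duplicate check can be sketched with a map keyed by (PID, partition). This is a simplification: the hypothetical `IdempotenceSketch` remembers only the last sequence number per key, whereas a real broker tracks a few recent batches per producer. The last call shows why the guarantee is limited to one session. After a restart the producer gets a new PID, so resending the same data is not recognized as a duplicate.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified broker-side duplicate detection for an idempotent producer.
class IdempotenceSketch {
    private final Map<String, Integer> lastSeq = new HashMap<>(); // "pid-partition" -> last sequence

    /** Returns true if the record is persisted, false if it is dropped as a duplicate. */
    boolean append(long pid, int partition, int seq) {
        String key = pid + "-" + partition;
        Integer last = lastSeq.get(key);
        if (last != null && seq <= last) {
            return false; // same <PID, Partition, SeqNumber> already written
        }
        lastSeq.put(key, seq);
        return true;
    }

    public static void main(String[] args) {
        IdempotenceSketch broker = new IdempotenceSketch();
        System.out.println(broker.append(1000L, 0, 0)); // first send: persisted
        System.out.println(broker.append(1000L, 0, 0)); // retry of the same record: dropped
        System.out.println(broker.append(1001L, 0, 0)); // same data after a restart (new PID): persisted again
    }
}
```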

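How do you enable idempotence?

Set enable.idempotence: true enables it and false disables it. Since Kafka 3.0 the default is true.

// 8. Enable idempotence (transactions require it; default true)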

properties.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG,true);

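3.6.3 Producer transactions

1) How Kafka transactions work: to use transactions, idempotence must be enabled.

2) The Kafka transaction API consists of 5 operations (sendOffsetsToTransaction has two overloads, one of them deprecated):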

/**

* See {@link KafkaProducer#initTransactions()}

*/

void initTransactions();

/**

* See {@link KafkaProducer#beginTransaction()}

*/

void beginTransaction() throws ProducerFencedException;

/**

* See {@link KafkaProducer#sendOffsetsToTransaction(Map, String)}

*/

@Deprecated

void sendOffsetsToTransaction(Map<TopicPartition, OffsetAndMetadata> offsets,

String consumerGroupId) throws ProducerFencedException;

/**

* See {@link KafkaProducer#sendOffsetsToTransaction(Map, ConsumerGroupMetadata)}

*/

void sendOffsetsToTransaction(Map<TopicPartition, OffsetAndMetadata> offsets,

ConsumerGroupMetadata groupMetadata) throws ProducerFencedException;

/**

* See {@link KafkaProducer#commitTransaction()}

*/

void commitTransaction() throws ProducerFencedException;

/**

* See {@link KafkaProducer#abortTransaction()}

*/

void abortTransaction() throws ProducerFencedException;

3) Configuration for using Kafka transactions:

// 7. Set the transactional id (required)

properties.put(ProducerConfig.TRANSACTIONAL_ID_CONFIG,"transaction_id_0");

// 8. Enable idempotence (transactions require it; default true)

properties.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG,true);

4) Custom class CustomProducerTransactions implementing a Kafka transaction

import ch.qos.logback.classic.Level;

import ch.qos.logback.classic.Logger;

import ch.qos.logback.classic.LoggerContext;

import org.apache.kafka.clients.producer.Callback;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.slf4j.LoggerFactory;

import java.util.List;

/**

* @author huangdh

* @version 1.0

* @description: sends five records inside a single Kafka transaction

* @date 2022-11-10 21:45

*/

public class CustomProducerTransactions {

// 修改日志打印级别,默认为debug级别

static {

LoggerContext loggerContext = (LoggerContext) LoggerFactory.getILoggerFactory();

List<Logger> loggerList = loggerContext.getLoggerList();

loggerList.forEach(logger -> {

logger.setLevel(Level.INFO);

});

}

public static void main(String[] args) {

KafkaProducer<String, String> producer = KafkaProducerFactory.getProducer();

// 初始化事务

producer.initTransactions();

// 开启事务

producer.beginTransaction();

try {

for (int i = 0; i < 5; i++) {

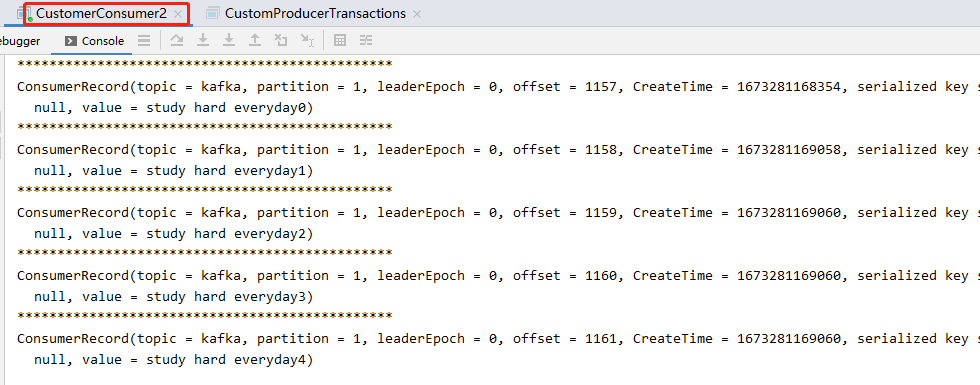

producer.send(new ProducerRecord<>("kafka", "study hard everyday" + i), new Callback() {

@Override

public void onCompletion(RecordMetadata recordMetadata, Exception e) {

if (e == null) {

System.out.println("主题:" + recordMetadata.topic() + "->" + "分区:" + recordMetadata.partition());

} else {

e.printStackTrace();

}

}

});

}

// int i = 1/0;

// 提交事务

producer.commitTransaction();

} catch (Exception e) {

// 终止事务

producer.abortTransaction();

e.printStackTrace();

}finally {

producer.close();

}

}

}执行结果如下:

23:04:15.842 [kafka-producer-network-thread | producer-transaction_id_0] INFO org.apache.kafka.clients.producer.internals.TransactionManager - [Producer clientId=producer-transaction_id_0, transactionalId=transaction_id_0] Discovered transaction coordinator 8.8.80.8:9092 (id: 0 rack: null)

23:04:15.979 [kafka-producer-network-thread | producer-transaction_id_0] INFO org.apache.kafka.clients.producer.internals.TransactionManager - [Producer clientId=producer-transaction_id_0, transactionalId=transaction_id_0] ProducerId set to 1000 with epoch 4

主题:kafka->分区:1

主题:kafka->分区:1

主题:kafka->分区:1

主题:kafka->分区:1

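主题:kafka->分区:1

Records as seen by the consumer:

When an exception is thrown during execution (with int i = 1/0; uncommented), the transaction is aborted: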

23:05:56.610 [main] INFO org.apache.kafka.clients.producer.KafkaProducer - [Producer clientId=producer-transaction_id_0, transactionalId=transaction_id_0] Aborting incomplete transaction

org.apache.kafka.common.errors.TransactionAbortedException: Failing batch since transaction was aborted

org.apache.kafka.common.errors.TransactionAbortedException: Failing batch since transaction was aborted

org.apache.kafka.common.errors.TransactionAbortedException: Failing batch since transaction was aborted

org.apache.kafka.common.errors.TransactionAbortedException: Failing batch since transaction was aborted

org.apache.kafka.common.errors.TransactionAbortedException: Failing batch since transaction was aborted

java.lang.ArithmeticException: / by zero

at com.atguigu.springcloud.kafka.producer.CustomProducerTransactions.main(CustomProducerTransactions.java:53)

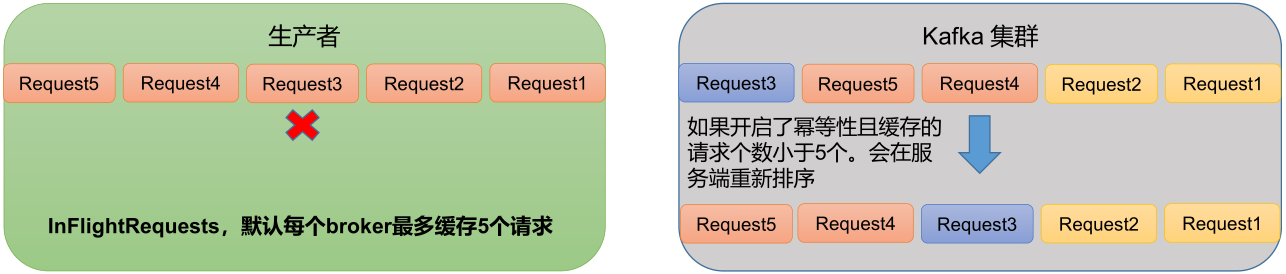

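3.7 Ordered and out-of-order data

- Within a single partition, data is ordered. Across partitions, there is no ordering.

- Before Kafka 1.x, single-partition ordering required:

  - max.in.flight.requests.per.connection=1 (whether or not idempotence is enabled).

- In Kafka 1.x and later, single-partition ordering requires:

  - Idempotence disabled: max.in.flight.requests.per.connection must be set to 1.

  - Idempotence enabled: max.in.flight.requests.per.connection must be less than or equal to 5. Since Kafka 1.x, with idempotence enabled, the broker caches the metadata of the last 5 requests from the producer. It uses their sequence numbers to write them in order, so the data of up to 5 in-flight requests stays ordered.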
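The next sketch shows why sequence numbers keep a partition ordered while several requests are in flight. It is a simplified, hypothetical model and not the broker's code. Three batches (seq 0, 1, 2) are in flight, and the first attempt of batch 1 fails. With sequence checking, batch 2 is rejected until batch 1 is retried. Without it, batch 2 is written first and the retry lands out of order.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model: an idempotent partition only accepts the next expected sequence number.
class InFlightOrderingSketch {
    static final class PartitionLog {
        private final boolean checkSequence; // true models enable.idempotence=true
        private final List<Integer> log = new ArrayList<>();
        private int lastSeq = -1;

        PartitionLog(boolean checkSequence) {
            this.checkSequence = checkSequence;
        }

        /** Appends the batch, or rejects it (returns false) if it skips a sequence number. */
        boolean append(int seq) {
            if (checkSequence && seq != lastSeq + 1) {
                return false;
            }
            log.add(seq);
            lastSeq = seq;
            return true;
        }
    }

    static List<Integer> run(boolean idempotent) {
        PartitionLog partition = new PartitionLog(idempotent);
        List<Integer> retries = new ArrayList<>();
        partition.append(0);          // batch 0 succeeds
        retries.add(1);               // batch 1 fails on the network and must be retried
        if (!partition.append(2)) {   // batch 2 reaches the broker before the retry
            retries.add(2);
        }
        for (int seq : retries) {
            partition.append(seq);    // resend in sequence order
        }
        return partition.log;
    }

    public static void main(String[] args) {
        System.out.println("idempotent:     " + run(true));
        System.out.println("not idempotent: " + run(false));
    }
}
```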

4.Kafka Broker

4.1 Kafka Broker工作流程

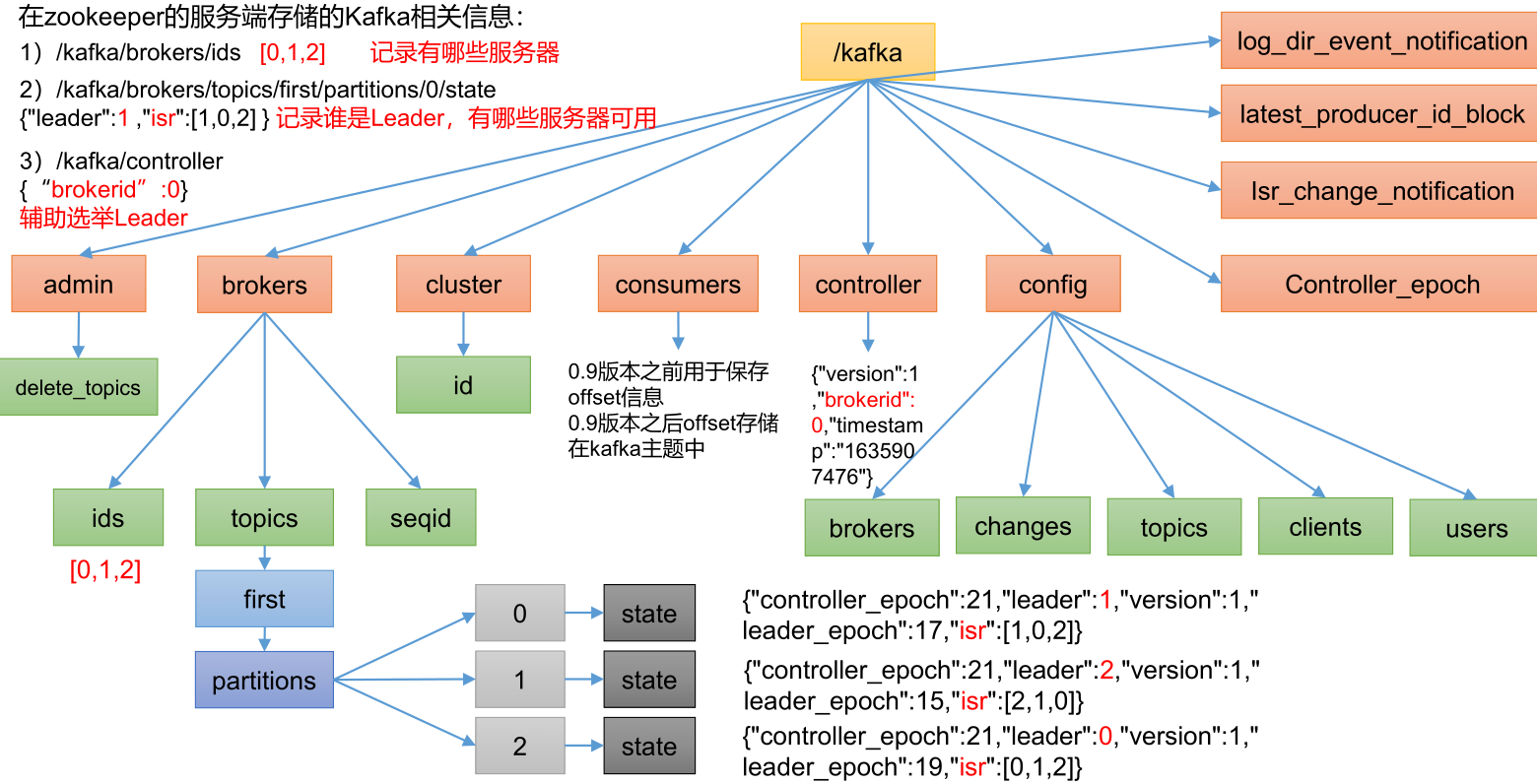

4.1.1 Zookeeper存储的Kafka信息

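- /kafka/brokers/ids: [0,1,2], records which brokers (servers) are registered.

- /kafka/brokers/topics/first/partitions/0/state: {"leader":1,"isr":[1,0,2]}, records which replica is the Leader and which replicas are in sync.

- /kafka/controller: {"brokerid":0}, the controller, which assists Leader election.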

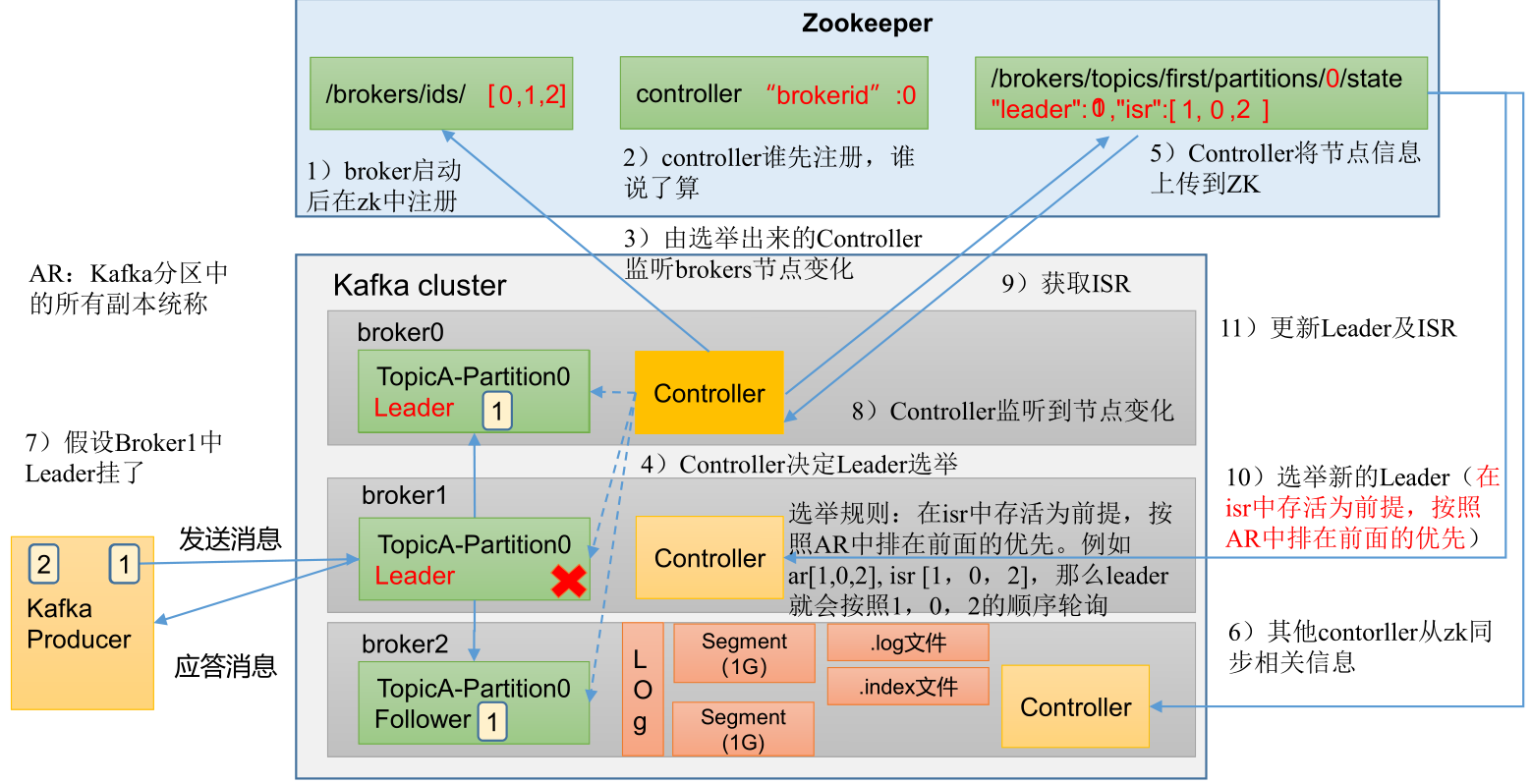

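4.1.2 Kafka Broker workflow in detail

- After starting, each broker registers itself in ZK.

- Whichever broker registers the controller node first becomes the controller.

- The elected controller watches for changes under the brokers node.

- The controller drives Leader election.

- The controller writes the resulting node information to ZK.

- The other brokers' controllers synchronize this information from ZK.

- Suppose the Leader on broker1 goes down.

- The controller detects the node change.

- It fetches the ISR.

- It elects a new Leader. The candidate must be alive and in the ISR, and replicas earlier in the AR are preferred. For example, with AR [1,0,2], replicas 1, 0 and 2 are tried in that order.

- It updates the Leader and the ISR.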
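The election rule above fits in a few lines. The hypothetical `LeaderElectionSketch` walks the AR in order and returns the first replica that is both alive and in the ISR. Unclean election (choosing a replica outside the ISR) is deliberately not modelled.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Sketch of leader election: first replica in AR order that is alive and in the ISR.
class LeaderElectionSketch {
    static Optional<Integer> electLeader(List<Integer> ar, Set<Integer> isr, Set<Integer> alive) {
        for (int replica : ar) {
            if (isr.contains(replica) && alive.contains(replica)) {
                return Optional.of(replica);
            }
        }
        return Optional.empty(); // no eligible replica
    }

    public static void main(String[] args) {
        List<Integer> ar = List.of(1, 0, 2);
        // Broker 1 (the old Leader) is down, so the ISR has shrunk to [0, 2]
        System.out.println(electLeader(ar, Set.of(0, 2), Set.of(0, 2)));
    }
}
```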

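4.1.3 Important broker parameters

| Parameter | Description |
| --- | --- |
| replica.lag.time.max.ms | If a Follower in the ISR sends no fetch request to the Leader, or does not catch up, for longer than this threshold, it is removed from the ISR. Default 30s. |
| auto.leader.rebalance.enable | Default true. Automatically rebalances Leader partitions across brokers. |
| leader.imbalance.per.broker.percentage | Default 10%. The allowed ratio of imbalanced leaders per broker; if a broker exceeds it, the controller triggers a leader rebalance. |
| leader.imbalance.check.interval.seconds | Default 300 seconds. Interval for checking whether leader load is balanced. |
| log.segment.bytes | Kafka stores the log in chunks (segments); this sets the segment size. Default 1G. |
| log.index.interval.bytes | Default 4KB. Each time 4KB of log data (.log) is written, one entry is added to the index file. |
| log.retention.hours | How long Kafka retains data, in hours. Default 7 days. |
| log.retention.minutes | Retention time in minutes. Unset by default. |
| log.retention.ms | Retention time in milliseconds. Unset by default. |
| log.retention.check.interval.ms | How often to check whether data has exceeded the retention time. Default 5 minutes. |
| log.retention.bytes | Default -1 (unlimited). When the total log size exceeds this value, the oldest segment is deleted. |
| log.cleanup.policy | Default delete: the delete policy applies to all data. If set to compact, the compaction policy applies to all data. |
| num.io.threads | Default 8. Number of threads that process requests, including disk writes. Rule of thumb: about 50% of the total number of cores. |
| num.replica.fetchers | Default 1. Number of replica fetcher threads. Rule of thumb: about 1/3 of 50% of the total number of cores. |
| num.network.threads | Default 3. Number of threads that receive requests from the network and send responses. |
| log.flush.interval.messages | Number of messages after which the page cache is forced to flush to disk. Default is Long's maximum value, 9223372036854775807. Changing it is generally not recommended; let the operating system manage flushing. |
| log.flush.interval.ms | How often data is flushed to disk. Default null. Changing it is generally not recommended; let the operating system manage flushing. |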

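4.2 Kafka replicas

4.2.1 Replica basics

- Purpose of replicas: improve data reliability.

- Kafka defaults to 1 replica; production environments usually configure 2 to ensure reliability. Too many replicas increase disk usage and network traffic and reduce efficiency.

- Replicas are divided into a Leader and Followers. Producers send data only to the Leader, and the Followers fetch from the Leader to stay in sync.

- All replicas of a partition are collectively called AR (Assigned Replicas), AR = ISR + OSR.

- ISR: the set of replicas that are in sync with the Leader. If a Follower sends no fetch request to the Leader, or does not catch up, for too long, it is removed from the ISR. The threshold is set by replica.lag.time.max.ms, default 30s. When the Leader fails, the new Leader is elected from the ISR.

- OSR: the Followers that lag too far behind the Leader while syncing.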

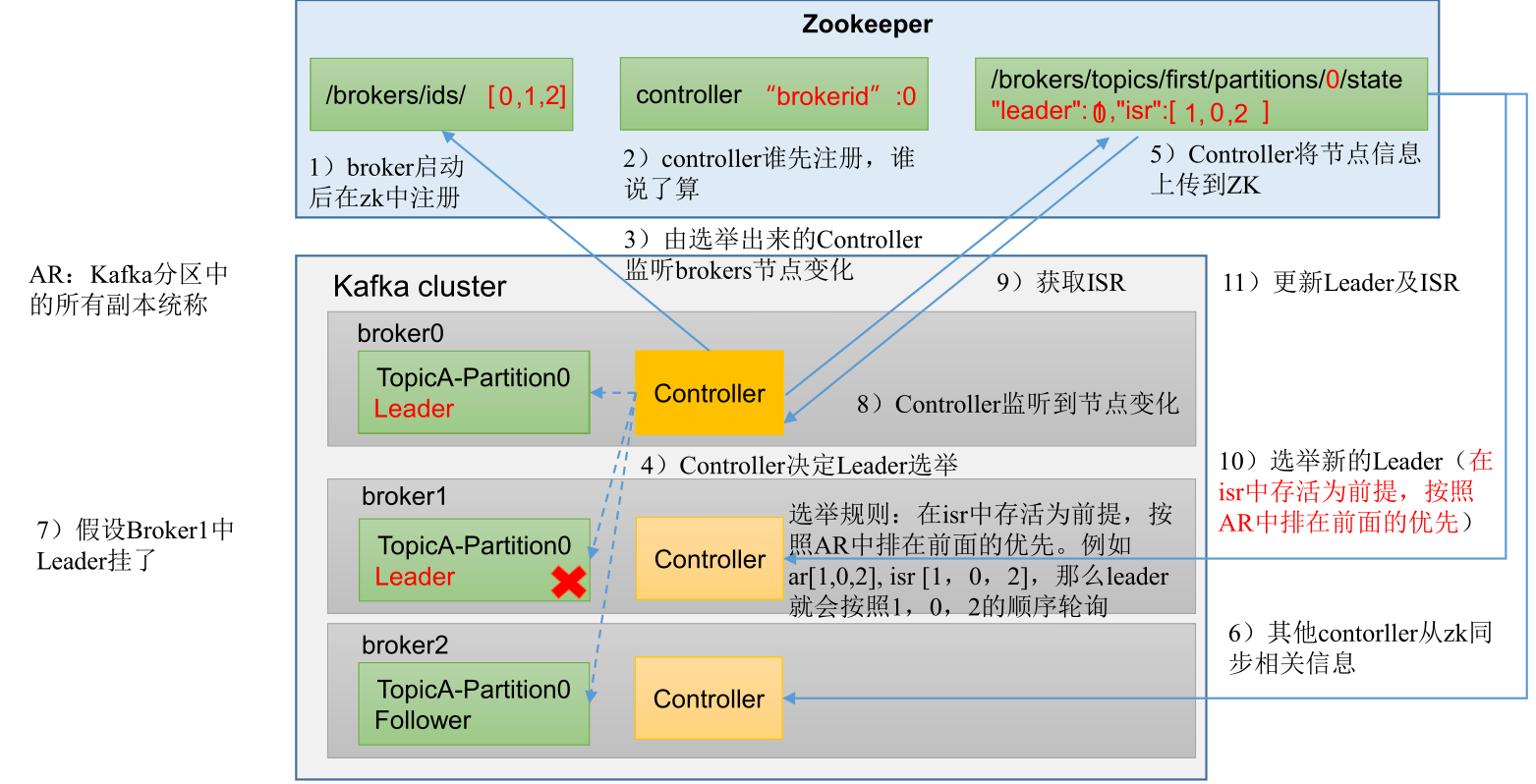

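4.2.2 Leader election process

One broker's Controller in the Kafka cluster is elected Controller Leader. It manages brokers going online and offline, partition replica assignment for all topics, Leader election, and so on. The Controller relies on ZooKeeper to synchronize this information.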

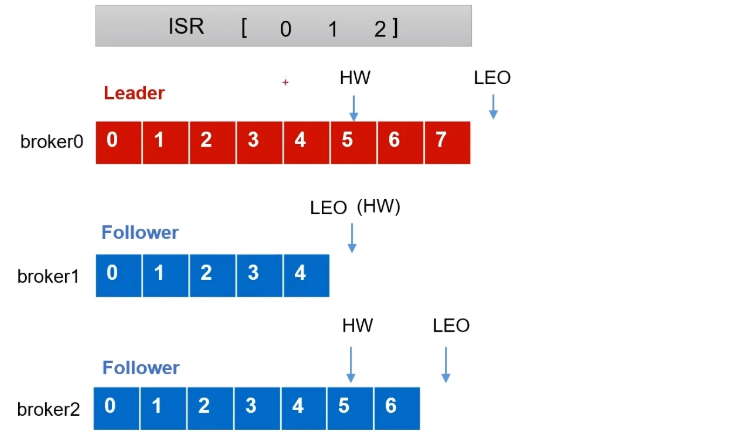

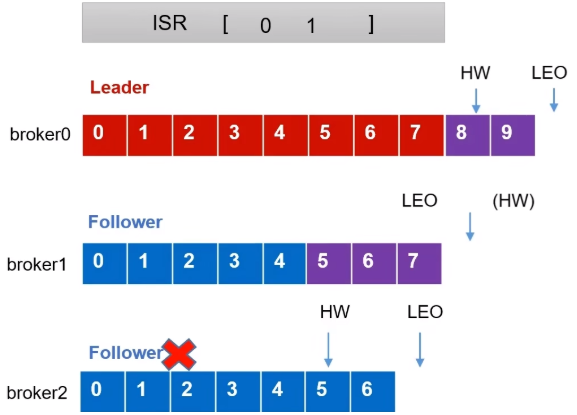

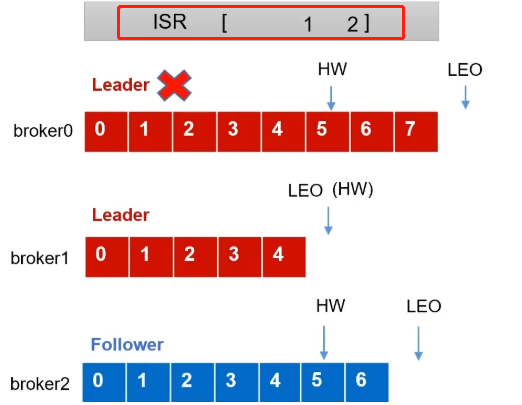

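4.2.3 Leader and Follower failure handling in detail

LEO (Log End Offset): the offset just after the last message of each replica, i.e. the latest offset + 1.

HW (High Watermark): the smallest LEO among the replicas in the ISR. Consumers can only read messages below the HW.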
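The HW rule is a single minimum over the ISR, sketched here with a hypothetical `HighWatermarkSketch` and made-up LEO values. Leader 0 has written offsets 0..9 (LEO 10), and the two Followers lag behind. The HW is 8, so consumers can read offsets 0..7.

```java
import java.util.Map;
import java.util.Set;

// Sketch: HW = smallest LEO among the replicas currently in the ISR.
class HighWatermarkSketch {
    static long highWatermark(Map<Integer, Long> leoByReplica, Set<Integer> isr) {
        long hw = Long.MAX_VALUE;
        for (int replica : isr) {
            hw = Math.min(hw, leoByReplica.get(replica));
        }
        return hw;
    }

    public static void main(String[] args) {
        Map<Integer, Long> leo = Map.of(0, 10L, 1, 8L, 2, 9L); // replica id -> LEO
        System.out.println(highWatermark(leo, Set.of(0, 1, 2)));
    }
}
```

If replica 1 is removed from the ISR, the same data gives an HW of 9. A lagging Follower stops holding back the HW once it leaves the ISR.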

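1) Follower failure

(1) A failed Follower is temporarily removed from the ISR.

(2) Meanwhile, the Leader and the other Followers keep receiving data.

(3) When the Follower recovers, it reads the last HW recorded on its local disk and truncates the part of its log above that HW. It then syncs from the Leader starting at the HW.

(4) Once the Follower's LEO is greater than or equal to the partition's HW, i.e. it has caught up with the Leader, it can rejoin the ISR.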

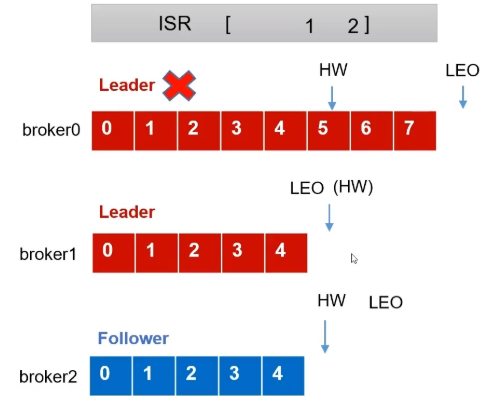

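2) Leader failure

(1) After the Leader fails, a new Leader is chosen from the ISR.

(2) To keep the replicas consistent, the remaining Followers first truncate the part of their logs above the HW, then sync from the new Leader.

Note: this only guarantees consistency between replicas. It does not guarantee that data is never lost or duplicated.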
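The truncate-then-sync step used in both cases can be sketched on plain lists. In the hypothetical `TruncateToHwSketch`, a replica keeps only the records below the HW and then copies the new Leader's records from that point on. Sample record `x3` existed only on the Follower. It disappears, which is exactly the "consistent but not loss-free" caveat in the note above.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: drop everything at or above the HW, then copy the Leader's records from there.
class TruncateToHwSketch {
    static List<String> resync(List<String> replicaLog, List<String> leaderLog, int hw) {
        int keep = Math.min(hw, replicaLog.size());
        List<String> result = new ArrayList<>(replicaLog.subList(0, keep));
        // Copy the Leader's records starting at the truncation point
        result.addAll(leaderLog.subList(Math.min(keep, leaderLog.size()), leaderLog.size()));
        return result;
    }

    public static void main(String[] args) {
        List<String> newLeader = List.of("m0", "m1", "m2", "m3", "m4");
        List<String> follower = List.of("m0", "m1", "m2", "x3"); // x3 never reached the new Leader
        System.out.println(resync(follower, newLeader, 3));
    }
}
```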

4.3 文件存储

4.3.1 文件储存机制

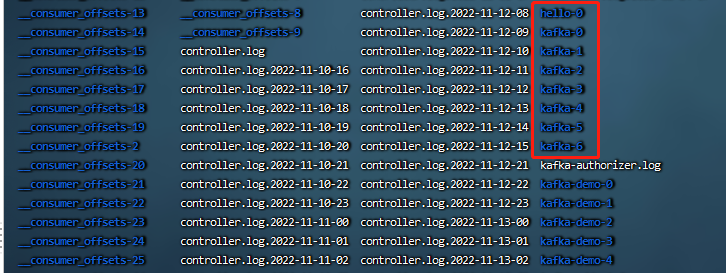

1)Topic数据存储机制

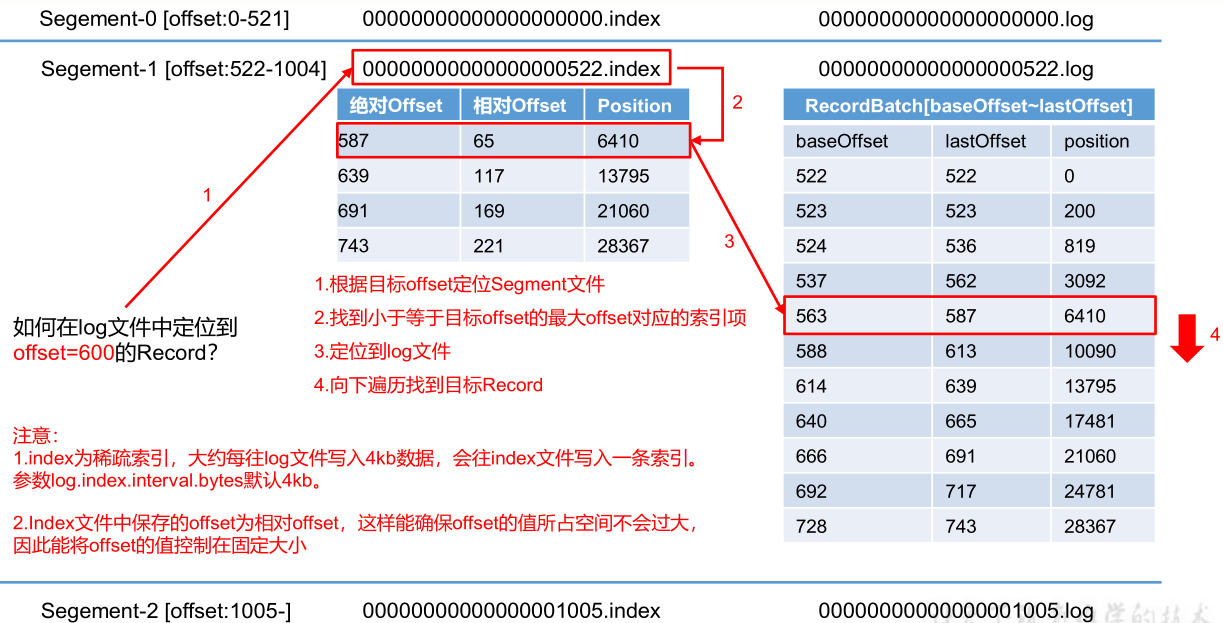

Topic是逻辑上的概念,而partition是物理上的概念,每个partition对应于一个log文件,该log文件中存储的就是Producer生产的数据。Producer生产的数据会被不断追加到该log文件末端,为防止log文件过大导致数据定位效率低下,Kafka采取了分片和索引机制,将每个partition分为多个segment。每个segment包括:“.index”文件、“.log”文件和.timeindex等文件。这些文件位于一个文件夹下,该文件夹的命名规则为:topic名称+分区序号,例如:first-0。

-rw-r--r-- 1 root root 10485760 11月 13 21:05 00000000000000000000.index

-rw-r--r-- 1 root root 245851 11月 13 21:10 00000000000000000000.log

-rw-r--r-- 1 root root 10485756 11月 13 21:05 00000000000000000000.timeindex

-rw-r--r-- 1 root root 10 11月 13 20:51 00000000000000002643.snapshot

-rw-r--r-- 1 root root 8 11月 13 20:51 leader-epoch-checkpoint

- .log: the log (data) file

- .index: the offset index file

- .timeindex: the timestamp index file

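2) log files and index files

Log storage parameters:

| Parameter | Description |
| --- | --- |
| log.segment.bytes | Kafka stores the log in chunks (segments); this sets the segment size. Default 1G. |
| log.index.interval.bytes | Default 4KB. Each time 4KB of data is written to the log (.log), one entry is added to the index file, so the index is sparse. |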
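Locating an offset is a two-step floor lookup, sketched here with a hypothetical `SparseIndexSketch` and made-up index entries. Step one picks the segment whose base offset is the largest one not exceeding the target; segment files are named after that base offset, zero-padded to 20 digits. Step two looks in that segment's sparse index for the largest indexed offset not exceeding the target, and the `.log` is scanned forward from that byte position. Kafka's `.index` actually stores offsets relative to the segment's base offset. This sketch uses absolute offsets for readability.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch of segment selection plus sparse-index lookup (sample data is made up).
class SparseIndexSketch {
    static String segmentFileName(long baseOffset) {
        return String.format("%020d.log", baseOffset); // base offset, zero-padded to 20 digits
    }

    /** Returns "file @ byte position" where the forward scan for target starts. */
    static String locate(TreeMap<Long, TreeMap<Long, Integer>> segments, long target) {
        Long base = segments.floorKey(target);
        if (base == null) {
            throw new IllegalArgumentException("offset " + target + " is before the first segment");
        }
        Map.Entry<Long, Integer> entry = segments.get(base).floorEntry(target);
        int position = entry == null ? 0 : entry.getValue();
        return segmentFileName(base) + " @ byte " + position;
    }

    static TreeMap<Long, TreeMap<Long, Integer>> sample() {
        TreeMap<Long, TreeMap<Long, Integer>> segments = new TreeMap<>();
        // base offset -> sparse index (offset -> byte position), one entry per ~4 KB of log
        segments.put(0L, new TreeMap<>(Map.of(0L, 0, 45L, 4105, 91L, 8230)));
        segments.put(122L, new TreeMap<>(Map.of(122L, 0, 168L, 4121)));
        return segments;
    }

    public static void main(String[] args) {
        System.out.println(locate(sample(), 100)); // scan segment 0 from byte 8230
        System.out.println(locate(sample(), 170)); // scan segment 122 from byte 4121
    }
}
```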

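4.3.2 Log cleanup policies

Kafka retains logs for 7 days by default. The retention period can be changed with the following parameters:

- log.retention.hours: hours, lowest priority, default 7 days.

- log.retention.minutes: minutes.

- log.retention.ms: milliseconds, highest priority.

- log.retention.check.interval.ms: how often retention is checked, default 5 minutes.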
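The priority order of the three retention settings can be sketched as a lookup chain: ms first, then minutes, then hours with the 7-day default. This is a hypothetical `RetentionSketch` that reads plain `Properties`. It assumes positive numeric values and ignores special values such as -1 (unlimited).

```java
import java.util.Properties;
import java.util.concurrent.TimeUnit;

// Sketch of effective retention: log.retention.ms > log.retention.minutes > log.retention.hours.
class RetentionSketch {
    static long effectiveRetentionMs(Properties props) {
        String ms = props.getProperty("log.retention.ms");
        if (ms != null) {
            return Long.parseLong(ms);                                  // highest priority
        }
        String minutes = props.getProperty("log.retention.minutes");
        if (minutes != null) {
            return TimeUnit.MINUTES.toMillis(Long.parseLong(minutes));
        }
        String hours = props.getProperty("log.retention.hours", "168"); // default 7 days
        return TimeUnit.HOURS.toMillis(Long.parseLong(hours));          // lowest priority
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        System.out.println(effectiveRetentionMs(props)); // defaults only: 7 days
        props.setProperty("log.retention.hours", "24");
        props.setProperty("log.retention.minutes", "30");
        System.out.println(effectiveRetentionMs(props)); // minutes override hours
    }
}
```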

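Kafka provides two cleanup policies: delete and compact.

(1) delete: expired data is deleted. log.cleanup.policy = delete applies the delete policy to all data.

a. Time-based: enabled by default. The largest timestamp among all records in a segment is used as the segment's timestamp.

b. Size-based: disabled by default. When the total log size exceeds the configured limit, the oldest segment is deleted. It is configured with log.retention.bytes, default -1 (unlimited).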
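Time-based deletion works per segment, using the newest record in the segment. The hypothetical `TimeRetentionSketch` uses made-up timestamps with a 7-day retention, evaluated on day 10. Segment 1 still contains an 8-day-old record, but its newest record is only 6 days old, so the whole segment is kept.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: a segment is deletable once its largest record timestamp is older than the retention.
class TimeRetentionSketch {
    static List<Integer> deletableSegments(List<long[]> recordTimestamps, long nowMs, long retentionMs) {
        List<Integer> deletable = new ArrayList<>();
        for (int i = 0; i < recordTimestamps.size(); i++) {
            long[] timestamps = recordTimestamps.get(i);
            if (timestamps.length == 0) {
                continue; // nothing to age out
            }
            long segmentTs = Long.MIN_VALUE;
            for (long ts : timestamps) {
                segmentTs = Math.max(segmentTs, ts); // newest record decides the segment's age
            }
            if (nowMs - segmentTs > retentionMs) {
                deletable.add(i);
            }
        }
        return deletable;
    }

    public static void main(String[] args) {
        long day = 86_400_000L;
        List<long[]> segments = List.of(
                new long[] {0, day},           // newest record is 9 days old
                new long[] {2 * day, 4 * day}, // oldest record 8 days old, newest 6 days old
                new long[] {9 * day});         // 1 day old
        System.out.println(deletableSegments(segments, 10 * day, 7 * day));
    }
}
```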

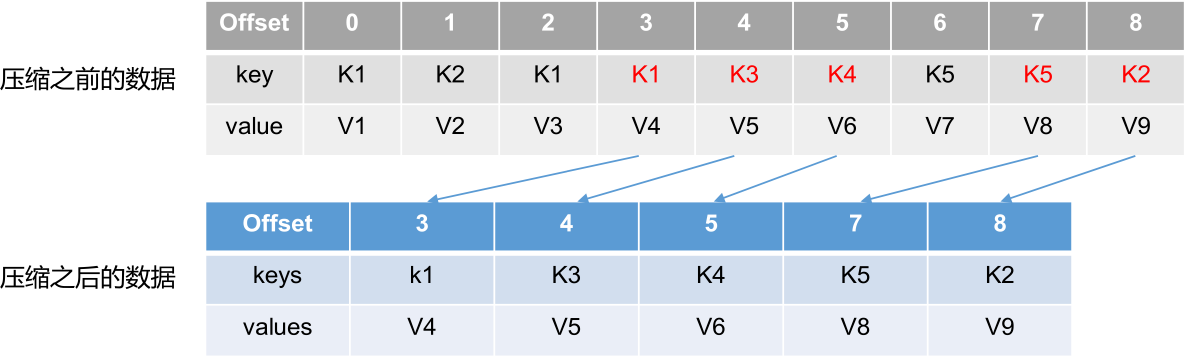

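(2) compact: for different values with the same key, only the latest version is kept. log.cleanup.policy=compact applies the compaction policy to all data.

After compaction, offsets may no longer be contiguous; in the figure above, for example, offset 6 is missing. A consumer that fetches from a missing offset receives the message with the next larger offset, here offset 7, and continues from there.

This policy only suits special scenarios. For example, if the message key is a user ID and the value is the user's profile, compaction keeps the latest profile of every user in the message set.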
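Compaction can be sketched as "keep the highest offset per key". The hypothetical `CompactionSketch` uses made-up user keys, in the spirit of the user-profile example above. Surviving records keep their original offsets, so gaps appear. A fetch from a removed offset continues at the next surviving one, which is what `TreeMap.ceilingKey` shows here.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Sketch of log compaction: for each key only the record with the highest offset survives.
class CompactionSketch {
    /** records.get(i) is the (key, value) written at offset i; returns surviving offset -> "key=value". */
    static TreeMap<Long, String> compact(List<Map.Entry<String, String>> records) {
        Map<String, Long> latestOffsetByKey = new HashMap<>();
        for (int offset = 0; offset < records.size(); offset++) {
            latestOffsetByKey.put(records.get(offset).getKey(), (long) offset); // later offsets win
        }
        TreeMap<Long, String> survivors = new TreeMap<>();
        for (long offset : latestOffsetByKey.values()) {
            Map.Entry<String, String> r = records.get((int) offset);
            survivors.put(offset, r.getKey() + "=" + r.getValue());
        }
        return survivors;
    }

    static List<Map.Entry<String, String>> sample() {
        return List.of(
                Map.entry("user1", "v1"),  // offset 0
                Map.entry("user2", "v1"),  // offset 1
                Map.entry("user1", "v2"),  // offset 2
                Map.entry("user3", "v1"),  // offset 3
                Map.entry("user3", "v2"),  // offset 4
                Map.entry("user2", "v2")); // offset 5
    }

    public static void main(String[] args) {
        TreeMap<Long, String> compacted = compact(sample());
        System.out.println(compacted);                // offsets 2, 4 and 5 survive
        System.out.println(compacted.ceilingKey(3L)); // a fetch from offset 3 starts at 4
    }
}
```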

4.4 高效读写数据

1. Kafka是分布式集群,可以采用分区技术,并行度高。

2. 读数据采用稀疏索引,可以快速定位到要消费的数据。

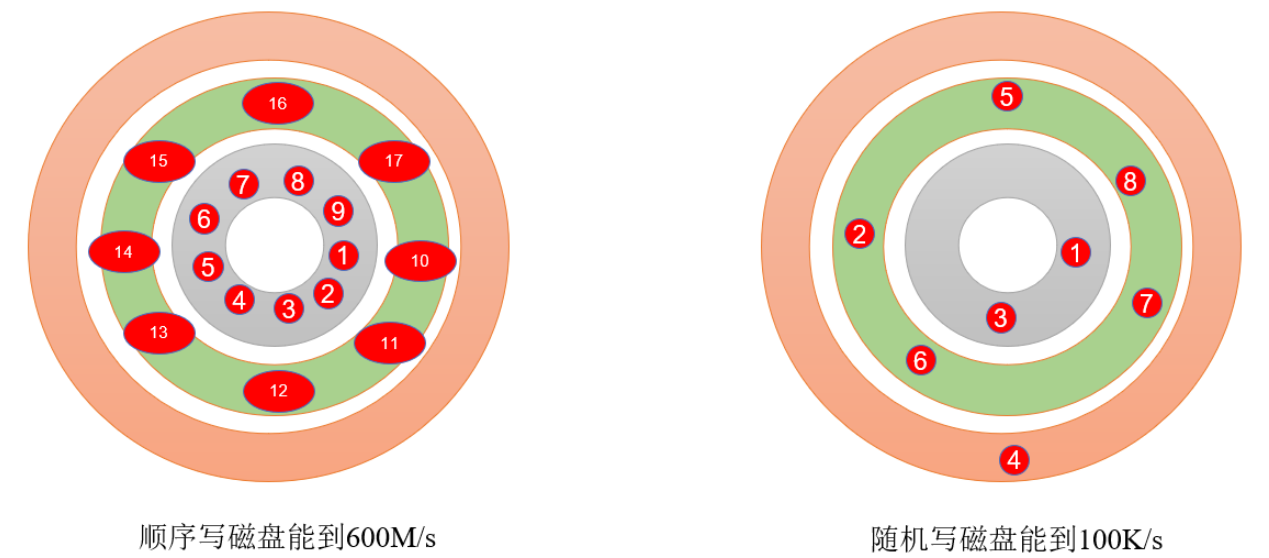

3. 顺序写磁盘。

Kafka的producer生产数据,要写入到log文件中,写的过程是一直追加到文件末端,为顺序写。官网有数据表明,同样的磁盘,顺序写能到600M/s,而随机写只有100K/s。这与磁盘的机械机构有关,顺序写之所以快,是因为其省去了大量磁头寻址的时间。

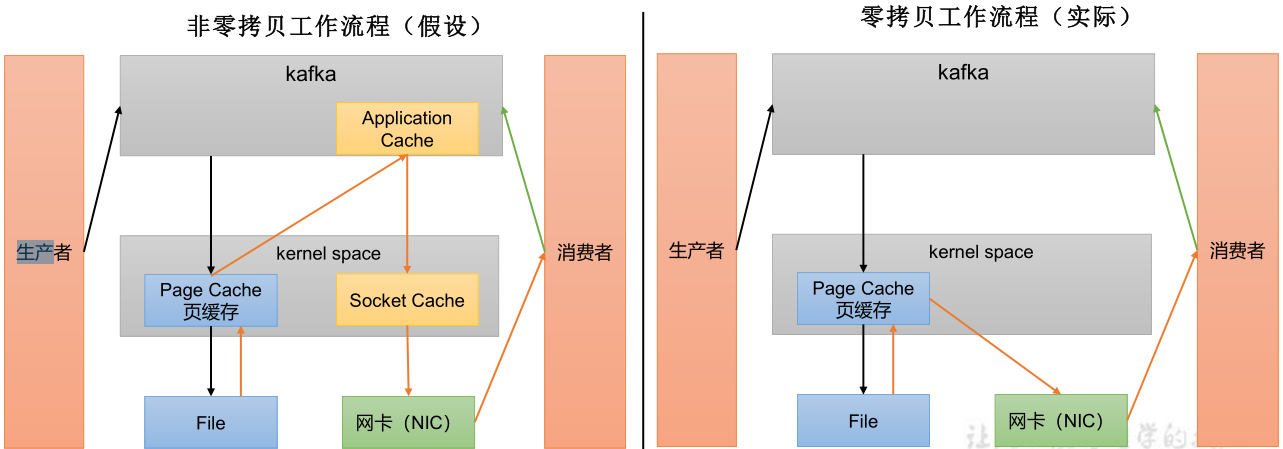

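4. Page cache + zero-copy

Zero-copy: data processing is left to Kafka producers and consumers. The Kafka Broker application layer does not inspect the stored data, so data being sent does not have to pass through the application layer, which makes transfers efficient.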
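On the JVM, the zero-copy path is exposed as `FileChannel.transferTo`. This call asks the operating system to move bytes from a file, served from the page cache, directly into another channel where the platform supports it, without copying them through a user-space buffer. For plaintext connections the broker uses this call when serving fetch requests. The hypothetical `ZeroCopySketch` transfers into a second file instead of a socket so that it runs locally (Java 11+ for `Files.writeString`/`readString`). It illustrates the JDK call, not Kafka's own code.

```java
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Sketch of zero-copy transfer with FileChannel.transferTo (target is a file, not a socket).
class ZeroCopySketch {
    static long copy(Path source, Path target) throws IOException {
        try (FileChannel in = FileChannel.open(source, StandardOpenOption.READ);
             FileChannel out = FileChannel.open(target, StandardOpenOption.WRITE,
                     StandardOpenOption.CREATE, StandardOpenOption.TRUNCATE_EXISTING)) {
            long position = 0;
            long size = in.size();
            // transferTo may move fewer bytes than requested, so loop until everything is sent
            while (position < size) {
                position += in.transferTo(position, size - position, out);
            }
            return position;
        }
    }

    public static void main(String[] args) throws IOException {
        Path src = Files.createTempFile("segment", ".log");
        Path dst = Files.createTempFile("copy", ".log");
        Files.writeString(src, "hello kafka");
        System.out.println(copy(src, dst) + " bytes: " + Files.readString(dst));
    }
}
```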

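PageCache: Kafka relies heavily on the PageCache provided by the operating system. On a write, the OS only writes the data into the PageCache. On a read, the PageCache is checked first, and the disk is read only on a miss. In effect, the PageCache uses as much free memory as possible as a disk cache.

| Parameter | Description |
| --- | --- |
| log.flush.interval.messages | Number of messages after which the page cache is forced to flush to disk. Default is Long's maximum value, 9223372036854775807. Changing it is generally not recommended; let the operating system manage flushing. |
| log.flush.interval.ms | How often data is flushed to disk. Default null. Changing it is generally not recommended; let the operating system manage flushing. |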

5. Kafka消费者

5.1 Kafka消费方式

5.1.1 pull(拉)模式

consumer采用从broker中主动拉取数据。Kafka采用这种方式。pull模式不足之处是,如果Kafka没有数据,消费者可能会陷入循环中,一直返回空数据。

5.1.2 push(推)模式

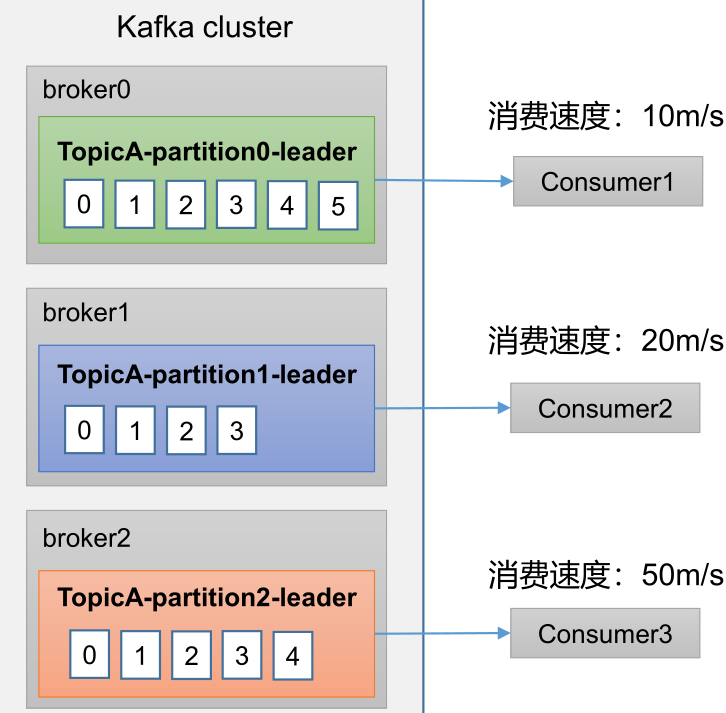

Kafka没有采用这种方式,因为由broker决定消息发送速率,很难适应所有消费者的消费速率。例如推送的速度是50m/s,Consumer1、Consumer2就来不及处理消息。

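5.2 Kafka consumer workflow

5.2.1 Consumer workflow

(1) Consumer group (CG)

ConsumerGroup (CG): a consumer group consists of multiple consumers. Consumers belong to the same group when they all have the same groupid.

- Each consumer in a group consumes data from different partitions; a partition can be consumed by only one consumer in the group.

- Consumer groups do not affect each other. Every consumer belongs to some consumer group, i.e. a consumer group is logically a single subscriber.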

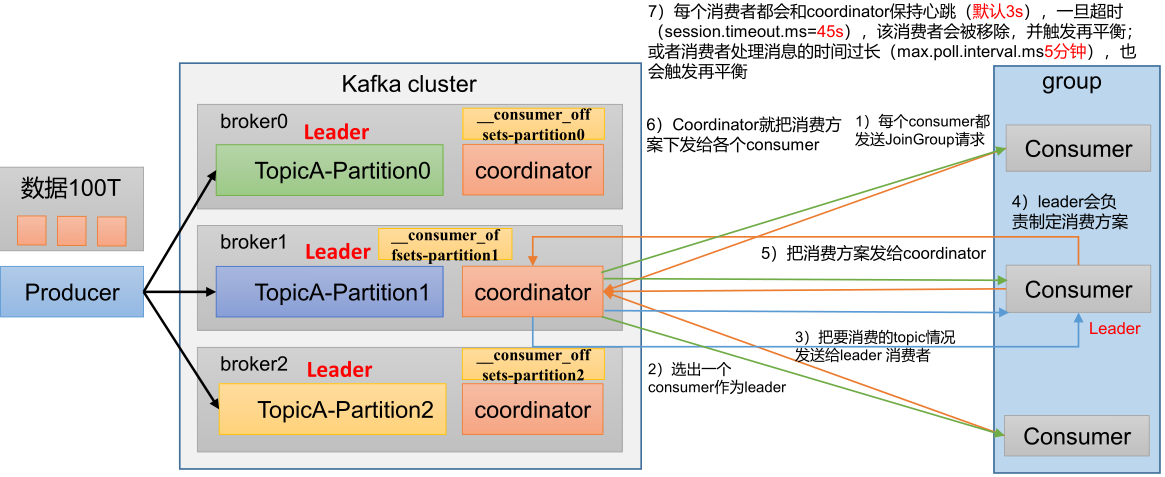

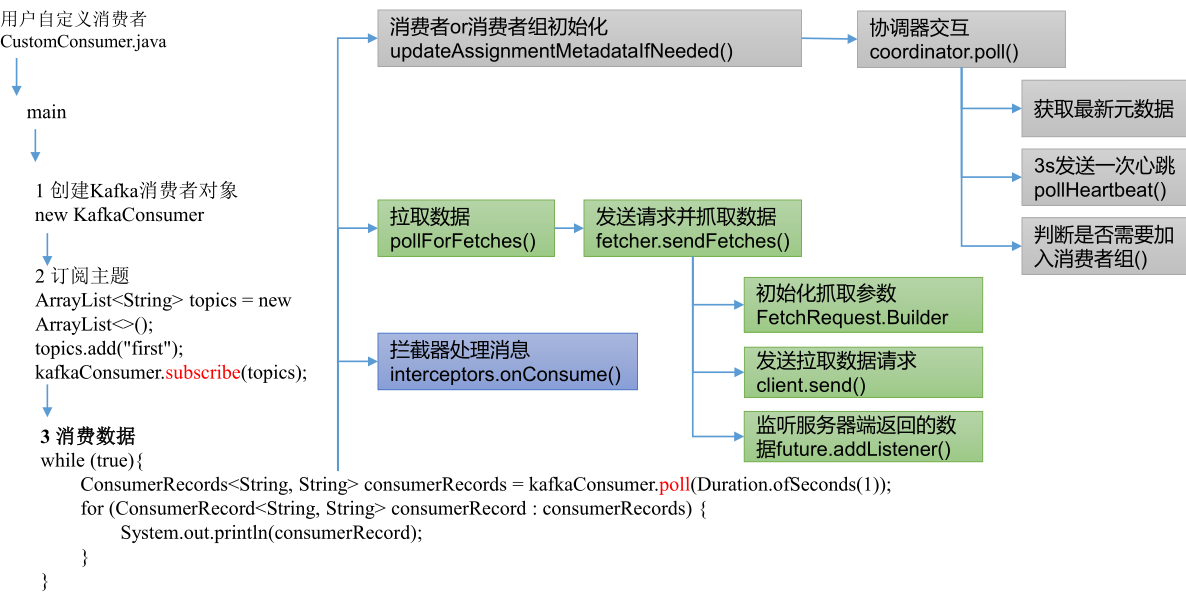

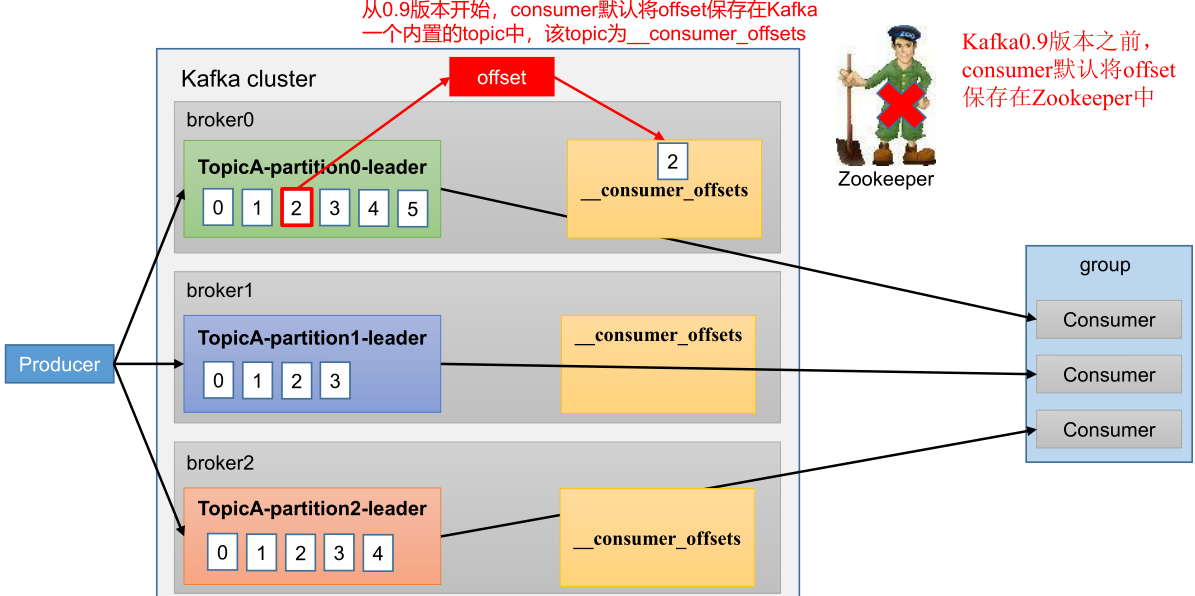

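(2) Consumer group initialization

coordinator: helps initialize the consumer group and assign partitions. The coordinator node is chosen by groupid's hashcode % 50 (the number of partitions of __consumer_offsets).

For example, if groupid's hashcode is 1, then 1 % 50 = 1. The coordinator on the broker that hosts partition 1 of the __consumer_offsets topic then acts as the "boss" of this consumer group. All consumers in the group commit their offsets to that partition.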
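The coordinator choice reduces to one line of arithmetic, sketched with a hypothetical `CoordinatorSketch`. Java's `Math.abs(Integer.MIN_VALUE)` is still negative. The `abs` helper therefore maps that one value to 0, which mirrors, as far as we know, how Kafka's own utility handles it. The broker that leads the resulting `__consumer_offsets` partition is the group's coordinator.

```java
// Sketch: the group's offsets partition is abs(groupId.hashCode()) % offsets partition count.
class CoordinatorSketch {
    static int abs(int n) {
        return n == Integer.MIN_VALUE ? 0 : Math.abs(n); // Math.abs(MIN_VALUE) would stay negative
    }

    static int offsetsPartitionFor(String groupId, int offsetsTopicPartitions) {
        return abs(groupId.hashCode()) % offsetsTopicPartitions;
    }

    public static void main(String[] args) {
        // 50 = default offsets.topic.num.partitions
        System.out.println(offsetsPartitionFor("kafka-consumer-group", 50));
    }
}
```

The single-character string `"\u0001"` has hashCode 1, so it lands on partition 1, matching the worked example above.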

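- Each consumer sends a JoinGroup request.

- One consumer is chosen as the leader.

- The coordinator sends information about the topics to be consumed to the leader consumer.

- The leader creates the consumption (assignment) plan.

- The leader sends the plan to the coordinator.

- The coordinator distributes the plan to every consumer.

- Each consumer keeps a heartbeat with the coordinator (every 3s by default). If it times out (session.timeout.ms=45s), the consumer is removed and a rebalance is triggered. A rebalance is also triggered if a consumer takes too long to process messages (max.poll.interval.ms, 5 minutes).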

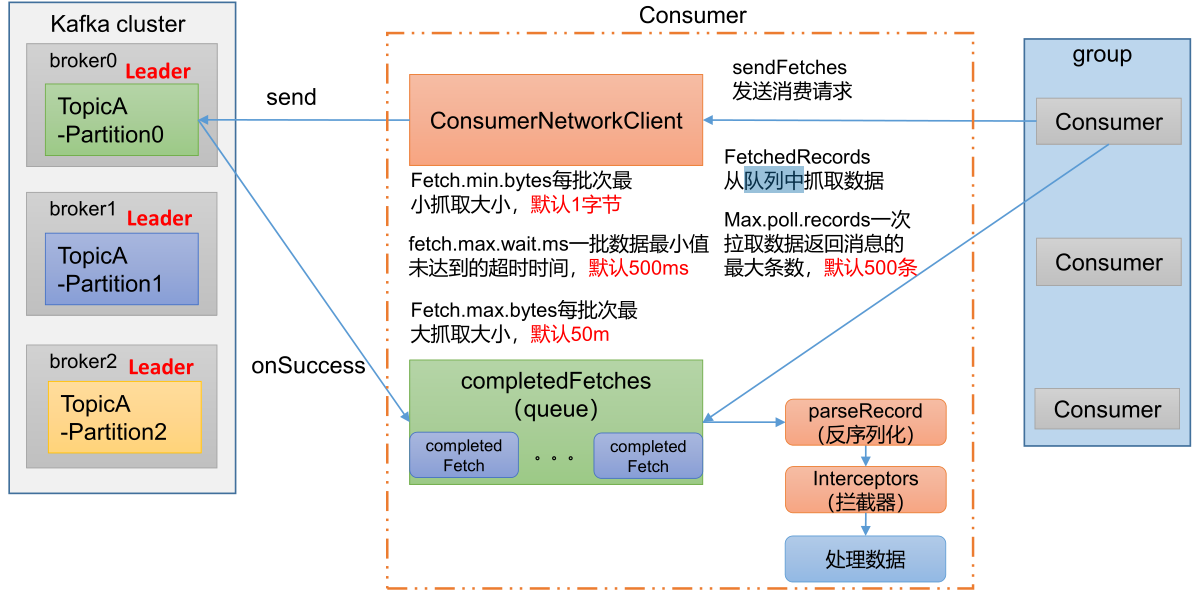

(3) Detailed consumption flow of a consumer group

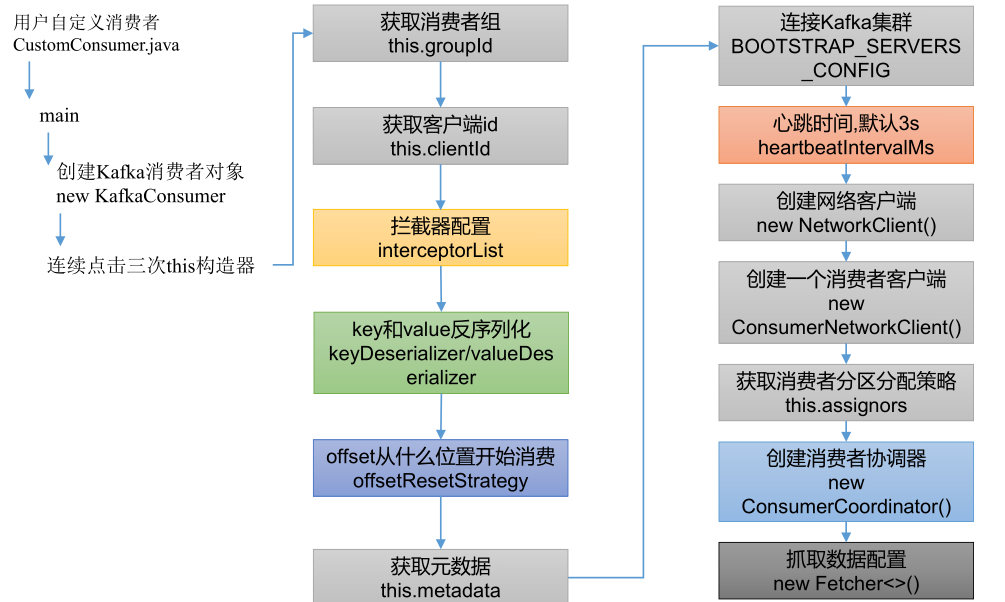

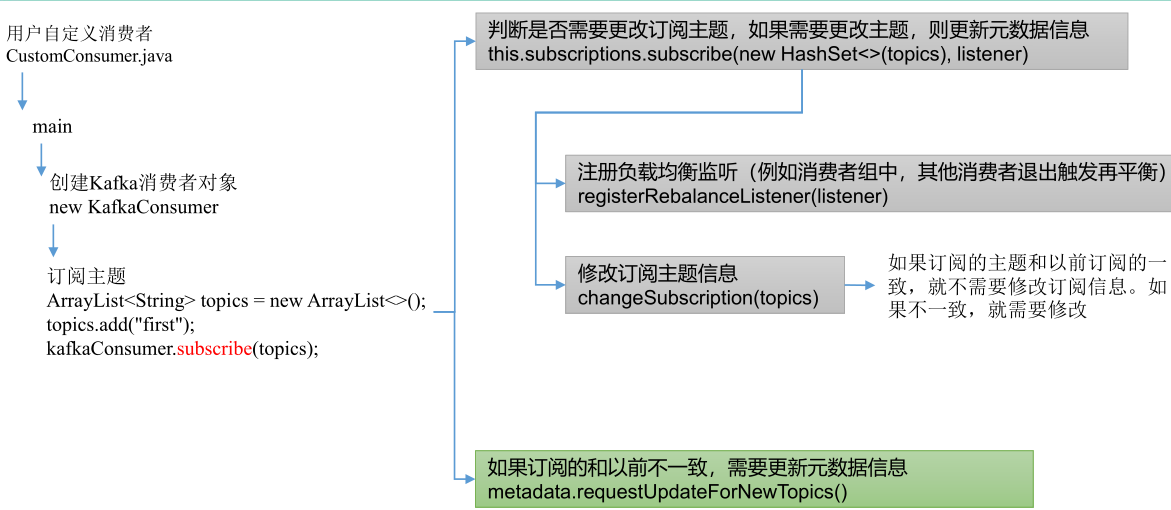

Consumer initialization:

Consumer subscribes to topics:

Consumer fetches and processes data:

5.2.2 Important consumer parameters

| 参数名称 | 描述 |

| bootstrap.servers | 向Kafka集群建立初始连接用到的host/port列表。 |

| key.deserializer/value.deserializer | 指定接收消息的key和value的反序列化类型。一定要写全类名。 |

| group.id | 标记消费者所属的消费者组。 |

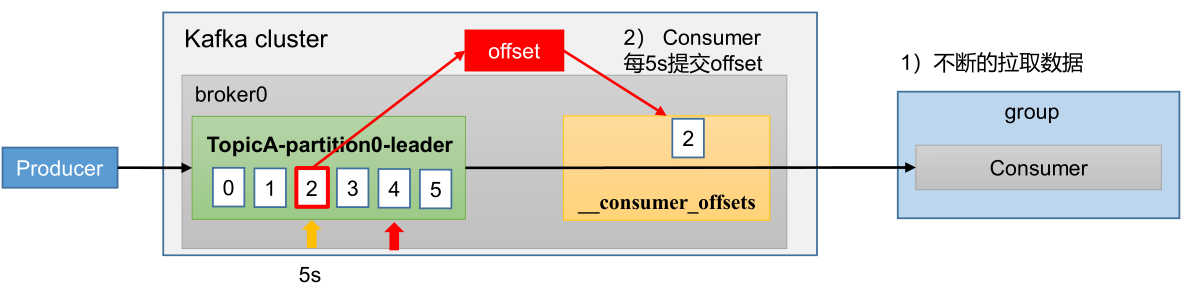

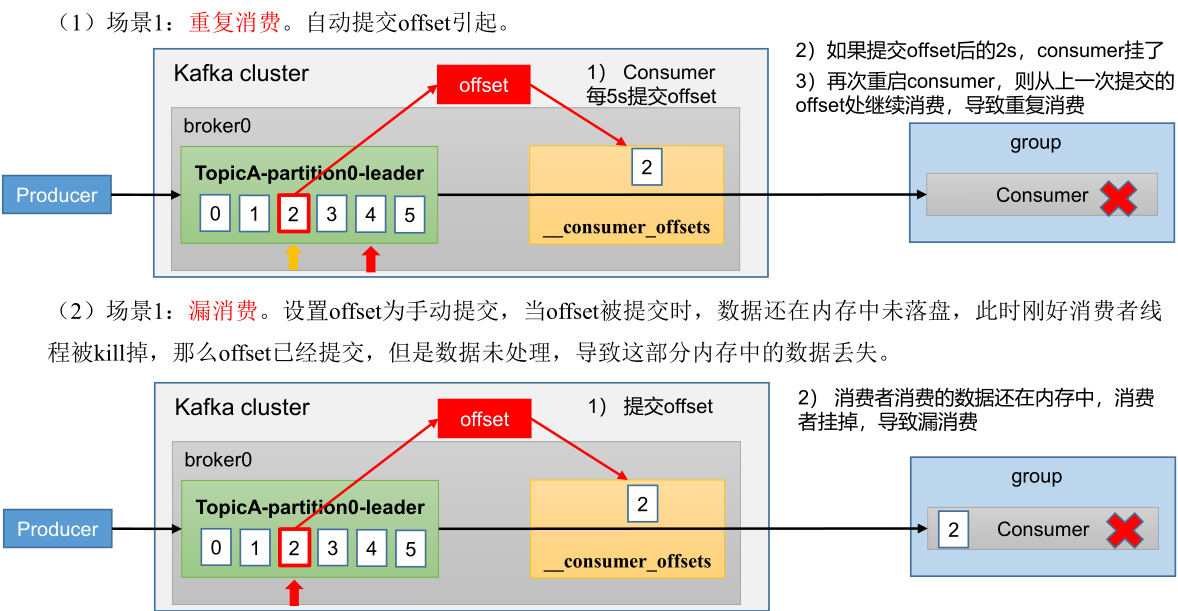

| enable.auto.commit | 默认值为true,消费者会自动周期性地向服务器提交偏移量。 |

| auto.commit.interval.ms | 如果设置了 enable.auto.commit 的值为true, 则该值定义了消费者偏移量向Kafka提交的频率,默认5s。 |

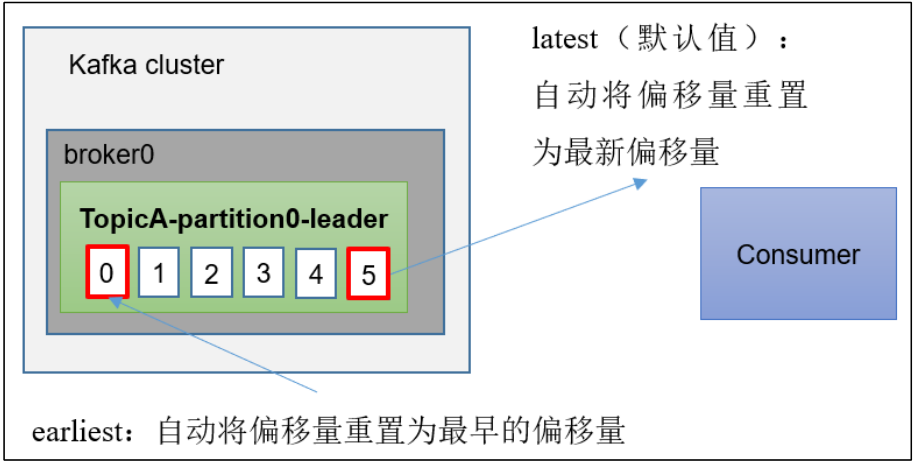

| auto.offset.reset | 当Kafka中没有初始偏移量或当前偏移量在服务器中不存在(如,数据被删除了),该如何处理? earliest:自动重置偏移量到最早的偏移量。 latest:默认,自动重置偏移量为最新的偏移量。 none:如果消费组原来的(previous)偏移量不存在,则向消费者抛异常。 anything:向消费者抛异常。 |

| offsets.topic.num.partitions | __consumer_offsets的分区数,默认是50个分区。 |

| heartbeat.interval.ms | Kafka消费者和coordinator之间的心跳时间,默认3s。 该条目的值必须小于 session.timeout.ms ,也不应该高于 session.timeout.ms 的1/3。 |

| session.timeout.ms | Kafka消费者和coordinator之间连接超时时间,默认45s。超过该值,该消费者被移除,消费者组执行再平衡。 |

| max.poll.interval.ms | 消费者处理消息的最大时长,默认是5分钟。超过该值,该消费者被移除,消费者组执行再平衡。 |

| fetch.min.bytes | 默认1个字节。消费者获取服务器端一批消息最小的字节数。 |

| fetch.max.wait.ms | 默认500ms。如果没有从服务器端获取到一批数据的最小字节数。该时间到,仍然会返回数据。 |

| fetch.max.bytes | 默认Default: 52428800(50 m)。消费者获取服务器端一批消息最大的字节数。如果服务器端一批次的数据大于该值(50m)仍然可以拉取回来这批数据,因此,这不是一个绝对最大值。一批次的大小受message.max.bytes (broker config)or max.message.bytes (topic config)影响。 |

| max.poll.records | 一次poll拉取数据返回消息的最大条数,默认是500条。 |

5.3 Consumer API

***Consumer configuration***

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.clients.consumer.RoundRobinAssignor;

import org.apache.kafka.clients.consumer.StickyAssignor;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.util.Properties;

/**

* @author huangdh

* @version 1.0

* @description: factory for the consumers used in this section

* @date 2022-11-11 18:06

*/

public class KafkaConsumerFactory {

public static KafkaConsumer<String,String> getConsumer(){

// 1. Create the consumer configuration object

Properties properties = new Properties();

// 2. Add settings to the configuration

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"8.8.80.88:9092");

// Deserializers (required)

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

// Consumer group id (any name; required)

properties.put(ConsumerConfig.GROUP_ID_CONFIG,"kafka-consumer-offset-3");

// Partition assignment strategy; Kafka's default is Range + CooperativeSticky

// RoundRobin lists all partitions and all consumers, sorts them by hashcode, and assigns partitions to consumers in turn

// properties.put(ConsumerConfig.PARTITION_ASSIGNMENT_STRATEGY_CONFIG, RoundRobinAssignor.class.getName());

// Sticky: the assignment is "sticky". A new assignment takes the previous one into account and moves as few partitions as possible, which saves a lot of overhead during rebalancing

// properties.put(ConsumerConfig.PARTITION_ASSIGNMENT_STRATEGY_CONFIG, StickyAssignor.class.getName());

// Whether offsets are committed automatically

// properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,true);

// Auto-commit is disabled: offsets are stored only if the application calls commitSync()/commitAsync()

properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,false);

return new KafkaConsumer<String, String>(properties);

}

}
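The RoundRobin comment in the factory can be illustrated with a simplified, hypothetical `RoundRobinSketch`. All partitions and consumers are laid out, and partitions are dealt out one at a time. Only one topic is modelled. Sorting consumers by name is an assumption that stands in for the real RoundRobinAssignor's ordering.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified round-robin assignment for a single topic.
class RoundRobinSketch {
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        List<String> sorted = new ArrayList<>(consumers);
        Collections.sort(sorted); // stand-in for the assignor's ordering
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : sorted) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int p = 0; p < numPartitions; p++) {
            assignment.get(sorted.get(p % sorted.size())).add(p); // deal partitions out in turn
        }
        return assignment;
    }

    public static void main(String[] args) {
        System.out.println(assign(List.of("consumer1", "consumer2", "consumer3"), 7));
    }
}
```

For 7 partitions and 3 consumers this gives consumer1 = [0, 3, 6], consumer2 = [1, 4], consumer3 = [2, 5].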

(1) Custom consumer that consumes the "kafka" topic

import ch.qos.logback.classic.Level;

import ch.qos.logback.classic.LoggerContext;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.slf4j.LoggerFactory;

import java.time.Duration;

import java.util.ArrayList;

import java.util.List;

/**

* @author huangdh

* @version 1.0

* @description: subscribes to the "kafka" topic and prints every record

* @date 2022-11-11 18:35

*/

public class CustomerConsumer1 {

// Raise the log level to INFO (logback's default level is DEBUG)

static {

LoggerContext loggerContext = (LoggerContext) LoggerFactory.getILoggerFactory();

List<ch.qos.logback.classic.Logger> loggerList = loggerContext.getLoggerList();

loggerList.forEach(logger -> {

logger.setLevel(Level.INFO);

});

}

public static void main(String[] args) {

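// 1. Create the consumer

KafkaConsumer<String, String> consumer = KafkaConsumerFactory.getConsumer();

// 2. Subscribe to the topics to consume (several topics can be subscribed at once)

ArrayList<String> topics = new ArrayList<>();

topics.add("kafka");

consumer.subscribe(topics);

// 3. Pull and consume data

while (true) {

// poll() blocks for up to 1 second waiting for records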

ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

for (ConsumerRecord<String, String> record : consumerRecords) {

System.out.println("***********************************************");

System.out.println(record);

System.out.println("***********************************************");

}

}

}

}

Consumer log:

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 484, CreateTime = 1668522493867, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第1天)

***********************************************

ConsumerRecord(topic = kafka, partition = 5, leaderEpoch = 0, offset = 515, CreateTime = 1668522494311, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第5天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 485, CreateTime = 1668522494544, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第7天)

***********************************************

ConsumerRecord(topic = kafka, partition = 4, leaderEpoch = 0, offset = 510, CreateTime = 1668522494878, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第10天)

***********************************************

(2) Consuming a specific partition (partition 1)

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.common.TopicPartition;

import java.time.Duration;

import java.util.ArrayList;

/**

* @author huangdh

* @version 1.0

* @description: consumes only partition 1 of the "kafka" topic

* @date 2022-11-11 18:35

*/

public class CustomerConsumerPartitions {

public static void main(String[] args) {

// 1. Create the consumer

KafkaConsumer<String, String> consumer = KafkaConsumerFactory.getConsumer();

// 2. Assign partitions manually (consume only partition 1); assign() does not take part in group rebalancing

ArrayList<TopicPartition> topicPartitions = new ArrayList<>();

topicPartitions.add(new TopicPartition("kafka",1));

consumer.assign(topicPartitions);

// 3. Pull and consume data

while (true) {

ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

for (ConsumerRecord<String, String> record : consumerRecords) {

System.out.println(record);

System.out.println("***********************************************");

}

}

}

}

Consumer log:

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 673, CreateTime = 1668522780239, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第5天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 674, CreateTime = 1668522781364, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第15天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 675, CreateTime = 1668522782138, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第22天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 676, CreateTime = 1668522782482, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第25天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 677, CreateTime = 1668522782927, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第29天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 678, CreateTime = 1668522784038, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第39天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 679, CreateTime = 1668522784709, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第45天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 680, CreateTime = 1668522786041, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第57天)

(3) Consumer group example

The consumer group configuration is below; all consumers belong to the group "kafka-consumer-group".

// 1. Create the consumer configuration object

Properties properties = new Properties();

// 2. Add settings to the configuration

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"8.8.80.8:9092");

// Deserializers (required)

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

// Consumer group id (any name; required)

properties.put(ConsumerConfig.GROUP_ID_CONFIG,"kafka-consumer-group");

Consumer 1:

import ch.qos.logback.classic.Level;

import ch.qos.logback.classic.LoggerContext;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.slf4j.LoggerFactory;

import java.time.Duration;

import java.util.ArrayList;

import java.util.List;

/**

* @author huangdh

* @version 1.0

* @description: consumer 1 of the group "kafka-consumer-group"

* @date 2022-11-11 18:35

*/

public class CustomerConsumer1 {

// Raise the log level to INFO (logback's default level is DEBUG)

static {

LoggerContext loggerContext = (LoggerContext) LoggerFactory.getILoggerFactory();

List<ch.qos.logback.classic.Logger> loggerList = loggerContext.getLoggerList();

loggerList.forEach(logger -> {

logger.setLevel(Level.INFO);

});

}

public static void main(String[] args) {

// 1. Create the consumer

KafkaConsumer<String, String> consumer = KafkaConsumerFactory.getConsumer();

// 2. Subscribe to the topics to consume (several topics can be subscribed at once)

ArrayList<String> topics = new ArrayList<>();

topics.add("kafka");

consumer.subscribe(topics);

// 3. Pull and consume data

while (true) {

ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

for (ConsumerRecord<String, String> record : consumerRecords) {

System.out.println(record);

System.out.println("***********************************************");

}

}

}

}

Consumers 2 and 3 use the same code as consumer 1; copy the class and rename it. Start consumers 1, 2 and 3. The consumption logs are below.

首先,查看主题:“kafka”的分区数,详情如下:总共7分分区

[root@-9930 bin]# ./kafka-topics.sh --describe --zookeeper zookeeper1:2181 --topic kafka

Topic: kafka TopicId: aW-JTB_PQw-aUpXd0hF47A PartitionCount: 7 ReplicationFactor: 1 Configs:

Topic: kafka Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 1 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 2 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 3 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 4 Leader: 0 Replicas: 0 Isr: 0

Topic: kafka Partition: 5 Leader: 0 Replicas: 0 Isr: 0

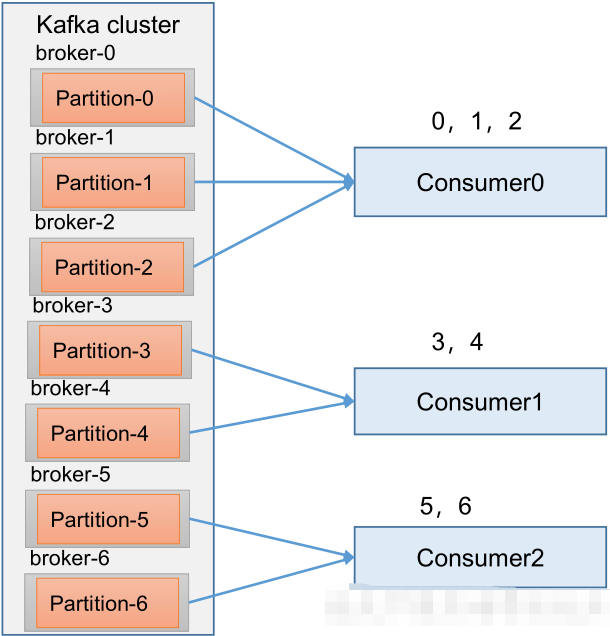

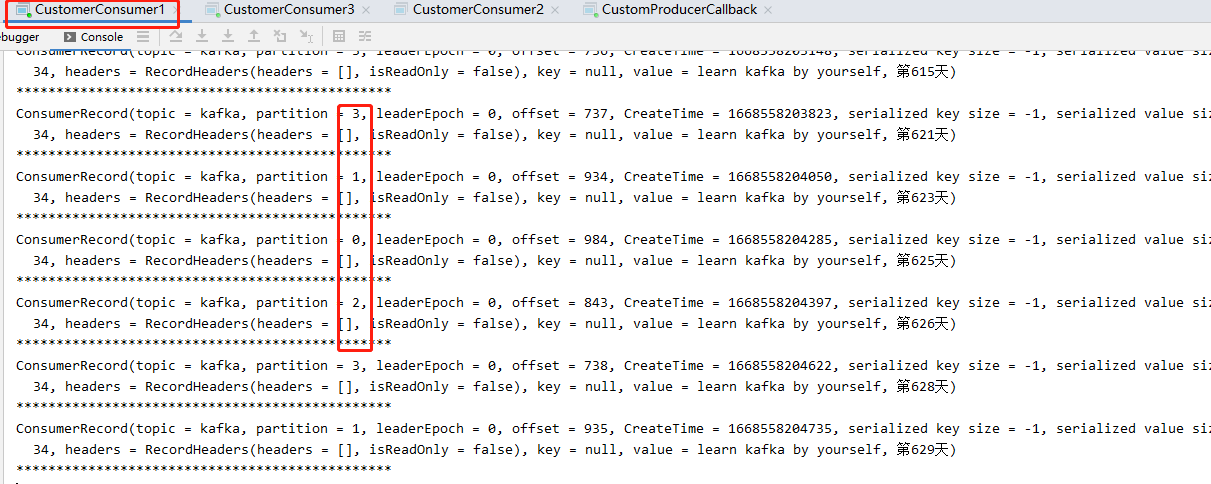

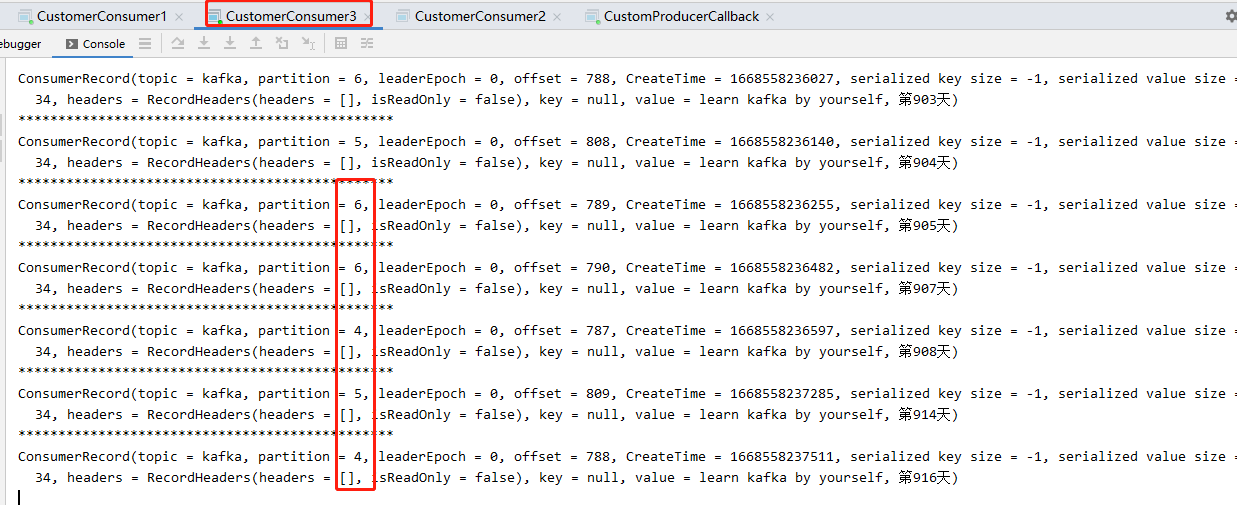

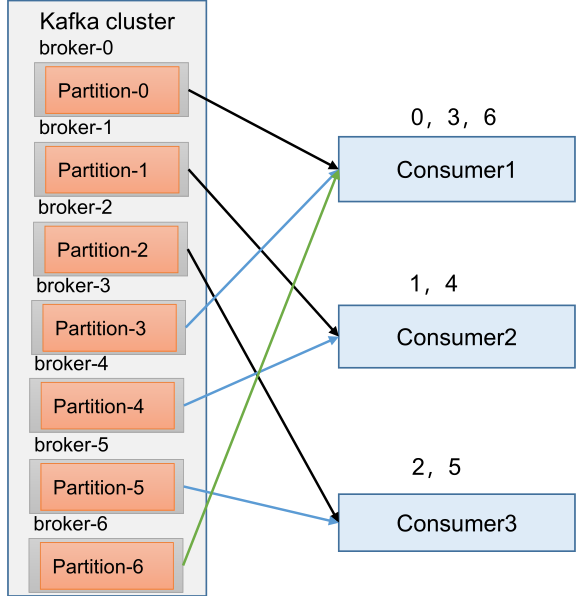

Topic: kafka Partition: 6 Leader: 0 Replicas: 0 Isr: 0consumer1,消费分区为:0/1/2

ConsumerRecord(topic = kafka, partition = 2, leaderEpoch = 0, offset = 613, CreateTime = 1668523256431, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第2天)

***********************************************

ConsumerRecord(topic = kafka, partition = 0, leaderEpoch = 0, offset = 743, CreateTime = 1668523256883, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第6天)

***********************************************

ConsumerRecord(topic = kafka, partition = 2, leaderEpoch = 0, offset = 614, CreateTime = 1668523257114, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第8天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 681, CreateTime = 1668523257688, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第13天)

***********************************************

ConsumerRecord(topic = kafka, partition = 0, leaderEpoch = 0, offset = 744, CreateTime = 1668523258029, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第16天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 682, CreateTime = 1668523258144, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第17天)

***********************************************

ConsumerRecord(topic = kafka, partition = 0, leaderEpoch = 0, offset = 745, CreateTime = 1668523258256, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第18天)

***********************************************

consumer2, consuming partitions 3/4:

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 492, CreateTime = 1668523257454, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第11天)

***********************************************

ConsumerRecord(topic = kafka, partition = 4, leaderEpoch = 0, offset = 530, CreateTime = 1668523257916, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第15天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 493, CreateTime = 1668523258369, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第19天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 494, CreateTime = 1668523258818, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第23天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 495, CreateTime = 1668523259156, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第26天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 496, CreateTime = 1668523259383, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第28天)

***********************************************

ConsumerRecord(topic = kafka, partition = 4, leaderEpoch = 0, offset = 531, CreateTime = 1668523259497, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第29天)

consumer3, consuming partitions 5/6:

***********************************************

ConsumerRecord(topic = kafka, partition = 5, leaderEpoch = 0, offset = 530, CreateTime = 1668523256129, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第0天)

***********************************************

ConsumerRecord(topic = kafka, partition = 5, leaderEpoch = 0, offset = 531, CreateTime = 1668523256543, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第3天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 502, CreateTime = 1668523256769, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第5天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 503, CreateTime = 1668523257573, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第12天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 504, CreateTime = 1668523257801, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第14天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 505, CreateTime = 1668523258594, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第21天)

The logs show that each partition is consumed by only one consumer.

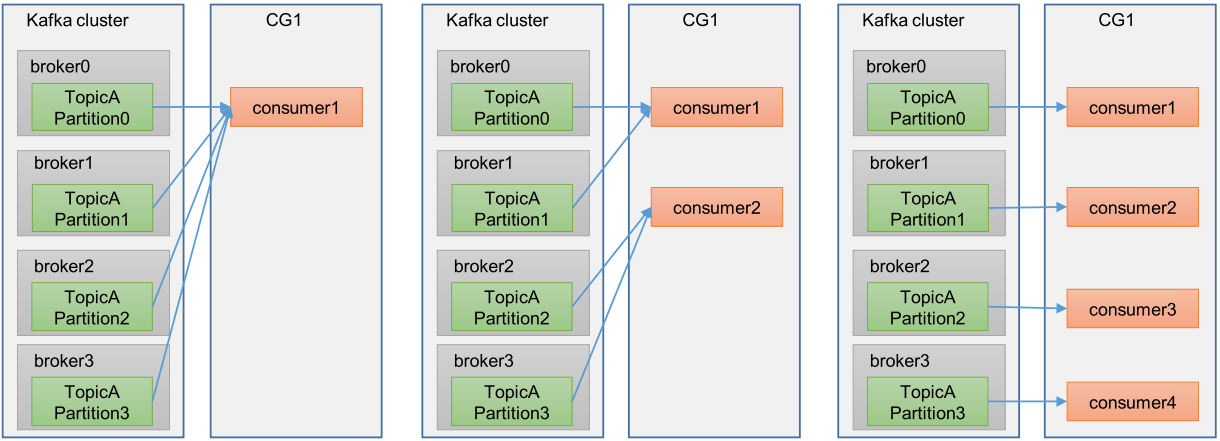

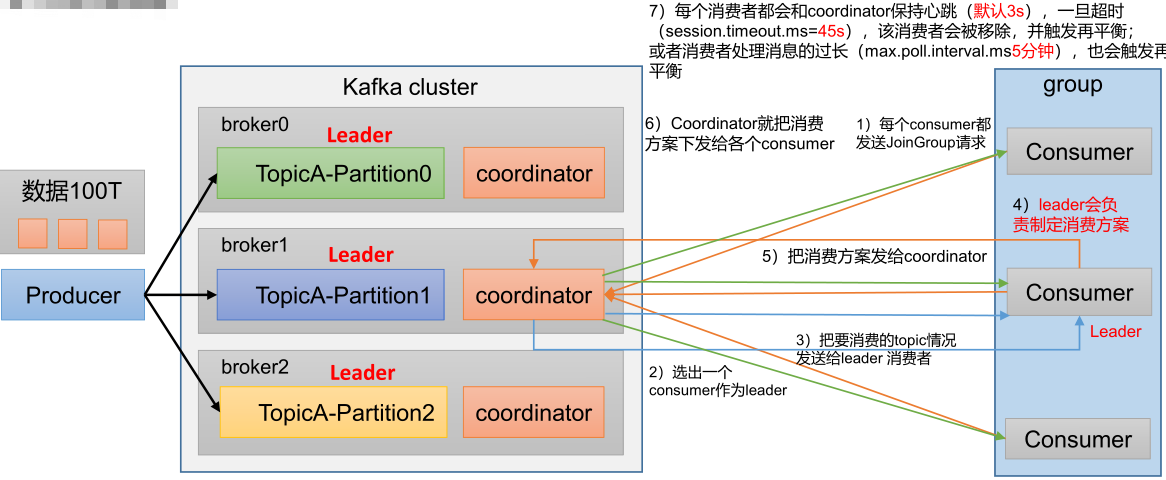

5.4 Partition Assignment and Rebalancing

A consumer group is made up of multiple consumers, and a topic is made up of multiple partitions. This raises the question: which consumer consumes the data of which partition?

Kafka has four mainstream partition assignment strategies: Range, RoundRobin, Sticky, and CooperativeSticky.

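The assignment strategy is changed with the configuration parameter partition.assignment.strategy. The default is Range + CooperativeSticky. Kafka can use several partition assignment strategies at the same time.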
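The following sketch shows how the strategy list might be set for a Java consumer. It builds consumer `Properties` with plain string keys, so it compiles without kafka-clients on the classpath. The assignor class names are the ones shipped in `org.apache.kafka.clients.consumer`. The bootstrap address `localhost:9092`, the group id `test`, and the class and method names are hypothetical placeholders.

```java
import java.util.List;
import java.util.Properties;

// Hypothetical helper: builds consumer properties that select one or more partition assignors.
// Plain string keys are used so this compiles without kafka-clients; real code would pass
// the Properties object to a KafkaConsumer.
public class AssignmentStrategyConfig {

    // Fully-qualified names of the assignors shipped with kafka-clients.
    static final String RANGE = "org.apache.kafka.clients.consumer.RangeAssignor";
    static final String ROUND_ROBIN = "org.apache.kafka.clients.consumer.RoundRobinAssignor";
    static final String STICKY = "org.apache.kafka.clients.consumer.StickyAssignor";
    static final String COOPERATIVE_STICKY = "org.apache.kafka.clients.consumer.CooperativeStickyAssignor";

    // Several strategies are given as a comma-separated list.
    static Properties consumerProps(String bootstrapServers, String groupId, List<String> strategies) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("group.id", groupId);
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("partition.assignment.strategy", String.join(",", strategies));
        return props;
    }

    public static void main(String[] args) {
        // Same strategies as the default (Range + CooperativeSticky); placeholder address and group id.
        Properties props = consumerProps("localhost:9092", "test", List.of(RANGE, COOPERATIVE_STICKY));
        System.out.println(props.getProperty("partition.assignment.strategy"));
    }
}
```

To switch to a single strategy, pass a one-element list, for example `List.of(ROUND_ROBIN)`.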

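| Parameter | Description |
| --- | --- |
| heartbeat.interval.ms | Heartbeat interval between the Kafka consumer and the coordinator; default 3 s. The value must be less than session.timeout.ms and should not be higher than 1/3 of session.timeout.ms. |
| session.timeout.ms | Connection timeout between the Kafka consumer and the coordinator; default 45 s. If it is exceeded, the consumer is removed and the consumer group rebalances. |
| max.poll.interval.ms | Maximum time a consumer may spend processing messages, i.e. between two poll() calls; default 5 minutes. If it is exceeded, the consumer is removed and the consumer group rebalances. |
| partition.assignment.strategy | Consumer partition assignment strategy; default Range + CooperativeSticky. Kafka can use several assignment strategies at the same time. Available strategies: Range, RoundRobin, Sticky, CooperativeSticky. |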
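As a sketch, the three timeout settings from the table can be put into consumer `Properties` at their defaults. The helper `heartbeatWithinLimit` and the class name are hypothetical. The helper enforces the table's 1/3 guideline as a hard rule, which is stricter than the "must be less than session.timeout.ms" requirement, so a real consumer may accept values this helper rejects.

```java
import java.util.Properties;

// Hypothetical helper around the liveness-related consumer settings from the table.
public class ConsumerTimeoutConfig {

    // True when heartbeat.interval.ms is below session.timeout.ms and at most 1/3 of it.
    static boolean heartbeatWithinLimit(long heartbeatMs, long sessionTimeoutMs) {
        return heartbeatMs < sessionTimeoutMs && heartbeatMs * 3 <= sessionTimeoutMs;
    }

    static Properties timeoutProps(long heartbeatMs, long sessionTimeoutMs, long maxPollIntervalMs) {
        if (!heartbeatWithinLimit(heartbeatMs, sessionTimeoutMs)) {
            throw new IllegalArgumentException(
                    "heartbeat.interval.ms should be at most 1/3 of session.timeout.ms");
        }
        Properties props = new Properties();
        props.put("heartbeat.interval.ms", String.valueOf(heartbeatMs));
        props.put("session.timeout.ms", String.valueOf(sessionTimeoutMs));
        props.put("max.poll.interval.ms", String.valueOf(maxPollIntervalMs));
        return props;
    }

    public static void main(String[] args) {
        // Defaults from the table: 3 s heartbeat, 45 s session timeout, 5 min max poll interval.
        Properties props = timeoutProps(3_000, 45_000, 300_000);
        System.out.println(props);
    }
}
```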

5.4.1 Range and Rebalancing

1) How the Range strategy works

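Range works per topic. First, the partitions of a topic are sorted by number and the consumers are sorted lexicographically (by member ID). Suppose there are 7 partitions and 3 consumers: the sorted partitions are 0, 1, 2, 3, 4, 5, 6, and the sorted consumers are C0, C1, C2.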

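The number of partitions each consumer gets is the number of partitions divided by the number of consumers. If the division leaves a remainder, the first few consumers each take 1 extra partition.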

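For example, 7 / 3 = 2 remainder 1, so C0 takes 1 extra partition (C0: 0, 1, 2; C1: 3, 4; C2: 5, 6). Likewise, 8 / 3 = 2 remainder 2, so C0 and C1 each take 1 extra partition.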
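The per-topic Range arithmetic above can be sketched in Java as follows. This is a simplified illustration, not the `RangeAssignor` source. It assumes partitions are numbered from 0 and the consumer list is already sorted, and it returns one list of partitions per consumer index. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of the Range split for a single topic; consumers are identified by their sorted index.
public class RangeAssignmentSketch {

    static List<List<Integer>> assign(int numPartitions, int numConsumers) {
        if (numConsumers <= 0) {
            throw new IllegalArgumentException("need at least one consumer");
        }
        int base = numPartitions / numConsumers;   // partitions every consumer gets
        int extra = numPartitions % numConsumers;  // the first `extra` consumers get one more
        List<List<Integer>> result = new ArrayList<>();
        int next = 0;                              // next partition number to hand out
        for (int c = 0; c < numConsumers; c++) {
            int count = base + (c < extra ? 1 : 0);
            List<Integer> parts = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                parts.add(next++);
            }
            result.add(parts);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(assign(7, 3)); // [[0, 1, 2], [3, 4], [5, 6]]
        System.out.println(assign(8, 3)); // [[0, 1, 2], [3, 4, 5], [6, 7]]
    }
}
```

For 7 partitions and 3 consumers the result matches the consumer 1/2/3 logs in the experiment: partitions 0 to 2, 3 to 4, and 5 to 6.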

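Note: for a single topic, C0 taking one extra partition matters little. With N topics, however, C0 takes one extra partition for every topic, so it ends up with N more partitions than each of the other consumers. In other words, with many topics the Range strategy easily causes data skew.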
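The following sketch makes the skew concrete by summing the Range assignment over several topics. It assumes that every topic has the same number of partitions and the same set of subscribed consumers. The class name is hypothetical.

```java
import java.util.Arrays;

// Sketch: total partitions per consumer when Range is applied independently to each topic.
public class RangeSkewSketch {

    static int[] totals(int topics, int partitionsPerTopic, int consumers) {
        if (consumers <= 0) {
            throw new IllegalArgumentException("need at least one consumer");
        }
        int[] totals = new int[consumers];
        int base = partitionsPerTopic / consumers;
        int extra = partitionsPerTopic % consumers;
        for (int t = 0; t < topics; t++) {
            // Range restarts for every topic, so the same leading consumers get the extra partitions.
            for (int c = 0; c < consumers; c++) {
                totals[c] += base + (c < extra ? 1 : 0);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(totals(10, 7, 3))); // [30, 20, 20]
    }
}
```

With 10 topics of 7 partitions each, C0 ends up with 10 more partitions than C1 or C2, one per topic.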

Coding: start CustomerConsumer1, CustomerConsumer2, and CustomerConsumer3, send 1000 messages to the "kafka" topic, and check how the three consumers consume them.

Consumer 1:

ConsumerRecord(topic = kafka, partition = 2, leaderEpoch = 0, offset = 613, CreateTime = 1668523256431, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第2天)

***********************************************

ConsumerRecord(topic = kafka, partition = 0, leaderEpoch = 0, offset = 743, CreateTime = 1668523256883, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第6天)

***********************************************

ConsumerRecord(topic = kafka, partition = 2, leaderEpoch = 0, offset = 614, CreateTime = 1668523257114, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第8天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 681, CreateTime = 1668523257688, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第13天)

***********************************************

ConsumerRecord(topic = kafka, partition = 0, leaderEpoch = 0, offset = 744, CreateTime = 1668523258029, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第16天)

***********************************************

ConsumerRecord(topic = kafka, partition = 1, leaderEpoch = 0, offset = 682, CreateTime = 1668523258144, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第17天)

***********************************************

ConsumerRecord(topic = kafka, partition = 0, leaderEpoch = 0, offset = 745, CreateTime = 1668523258256, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第18天)

***********************************************

Consumer 2:

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 492, CreateTime = 1668523257454, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第11天)

***********************************************

ConsumerRecord(topic = kafka, partition = 4, leaderEpoch = 0, offset = 530, CreateTime = 1668523257916, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第15天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 493, CreateTime = 1668523258369, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第19天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 494, CreateTime = 1668523258818, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第23天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 495, CreateTime = 1668523259156, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第26天)

***********************************************

ConsumerRecord(topic = kafka, partition = 3, leaderEpoch = 0, offset = 496, CreateTime = 1668523259383, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第28天)

***********************************************

ConsumerRecord(topic = kafka, partition = 4, leaderEpoch = 0, offset = 531, CreateTime = 1668523259497, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第29天)

Consumer 3:

***********************************************

ConsumerRecord(topic = kafka, partition = 5, leaderEpoch = 0, offset = 530, CreateTime = 1668523256129, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第0天)

***********************************************

ConsumerRecord(topic = kafka, partition = 5, leaderEpoch = 0, offset = 531, CreateTime = 1668523256543, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第3天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 502, CreateTime = 1668523256769, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第5天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 503, CreateTime = 1668523257573, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第12天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 504, CreateTime = 1668523257801, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第14天)

***********************************************

ConsumerRecord(topic = kafka, partition = 6, leaderEpoch = 0, offset = 505, CreateTime = 1668523258594, serialized key size = -1, serialized value size = 33, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第21天)

2) Range rebalancing

Stop CustomerConsumer2 and check how CustomerConsumer1 and CustomerConsumer3 consume.

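Note: the consumer group relies on the 45 s session timeout to decide whether a consumer has left, so you have to wait. Once 45 s have passed and the consumer is confirmed to have left, its partitions are reassigned to the remaining consumers.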
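The liveness check can be pictured with the simplified model below. It is a hypothetical sketch, not Kafka's coordinator implementation: a member whose last heartbeat is older than session.timeout.ms is treated as gone, which triggers a rebalance. A consumer that shuts down cleanly with `close()` normally leaves the group right away. The 45 s wait applies when the process is stopped without that, as appears to be the case in this experiment. The class name and timestamps are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of session-timeout expiry (not the real group coordinator).
public class SessionTimeoutSketch {

    static boolean isExpired(long lastHeartbeatMs, long nowMs, long sessionTimeoutMs) {
        return nowMs - lastHeartbeatMs > sessionTimeoutMs;
    }

    // Members that would be removed from the group at time nowMs.
    static List<String> expiredMembers(Map<String, Long> lastHeartbeatMs, long nowMs, long sessionTimeoutMs) {
        List<String> expired = new ArrayList<>();
        for (Map.Entry<String, Long> e : lastHeartbeatMs.entrySet()) {
            if (isExpired(e.getValue(), nowMs, sessionTimeoutMs)) {
                expired.add(e.getKey());
            }
        }
        return expired;
    }

    public static void main(String[] args) {
        long sessionTimeoutMs = 45_000; // default session.timeout.ms
        // Snapshot at t = 50 s: CustomerConsumer2 stopped at t = 10 s, only 40 s ago.
        Map<String, Long> at50s = Map.of("CustomerConsumer1", 48_000L,
                "CustomerConsumer2", 10_000L, "CustomerConsumer3", 49_000L);
        System.out.println(expiredMembers(at50s, 50_000, sessionTimeoutMs)); // []
        // Snapshot at t = 60 s: 50 s without a heartbeat, so CustomerConsumer2 is removed.
        Map<String, Long> at60s = Map.of("CustomerConsumer1", 57_000L,
                "CustomerConsumer2", 10_000L, "CustomerConsumer3", 58_000L);
        System.out.println(expiredMembers(at60s, 60_000, sessionTimeoutMs)); // [CustomerConsumer2]
    }
}
```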

CustomerConsumer1 consumption log: CustomerConsumer1 now consumes data from partition 3:

CustomerConsumer3 consumption log: CustomerConsumer3 now consumes data from partition 4:

Explanation: consumer 2 has been removed from the consumer group, so the partitions are reassigned using Range.
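The reassignment can be checked with the same Range arithmetic. This sketch assumes the members sort in the order CustomerConsumer1, CustomerConsumer2, CustomerConsumer3. Real member IDs are generated by the client, so the class names here serve only as labels. Under that assumption, CustomerConsumer1 picks up partition 3 and CustomerConsumer3 picks up partition 4, which is consistent with the logs described above. The class and helper names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Sketch: Range assignment of the 7-partition "kafka" topic before and after CustomerConsumer2 leaves.
public class RangeRebalanceSketch {

    // Member lists, assumed to be already in the order Range sorts them.
    static final List<String> BEFORE = List.of("CustomerConsumer1", "CustomerConsumer2", "CustomerConsumer3");
    static final List<String> AFTER = List.of("CustomerConsumer1", "CustomerConsumer3");

    // Splits partitions 0..numPartitions-1 over the consumers in the given order.
    static Map<String, List<Integer>> assign(List<String> sortedConsumers, int numPartitions) {
        int n = sortedConsumers.size();
        int base = numPartitions / n;
        int extra = numPartitions % n;
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int next = 0;
        for (int c = 0; c < n; c++) {
            int count = base + (c < extra ? 1 : 0);
            List<Integer> parts = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                parts.add(next++);
            }
            result.put(sortedConsumers.get(c), parts);
        }
        return result;
    }

    // Partitions a consumer owns after the rebalance that it did not own before.
    static List<Integer> gained(Map<String, List<Integer>> before, Map<String, List<Integer>> after, String consumer) {
        List<Integer> result = new ArrayList<>(after.getOrDefault(consumer, List.of()));
        result.removeAll(before.getOrDefault(consumer, List.of()));
        return result;
    }

    public static void main(String[] args) {
        Map<String, List<Integer>> before = assign(BEFORE, 7);
        Map<String, List<Integer>> after = assign(AFTER, 7);
        System.out.println(before); // {CustomerConsumer1=[0, 1, 2], CustomerConsumer2=[3, 4], CustomerConsumer3=[5, 6]}
        System.out.println(after);  // {CustomerConsumer1=[0, 1, 2, 3], CustomerConsumer3=[4, 5, 6]}
        System.out.println(gained(before, after, "CustomerConsumer1")); // [3]
        System.out.println(gained(before, after, "CustomerConsumer3")); // [4]
    }
}
```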

Then start CustomerConsumer2 again and check how CustomerConsumer1/2/3 consume.

Consumer 1:

ConsumerRecord(topic = kafka, partition = 2, leaderEpoch = 0, offset = 613, CreateTime = 1668523256431, serialized key size = -1, serialized value size = 32, headers = RecordHeaders(headers = [], isReadOnly = false), key = null, value = learn kafka by yourself, 第2天)

***********************************************