JAVA多线程-种类及生命周期

3. 多线程种类

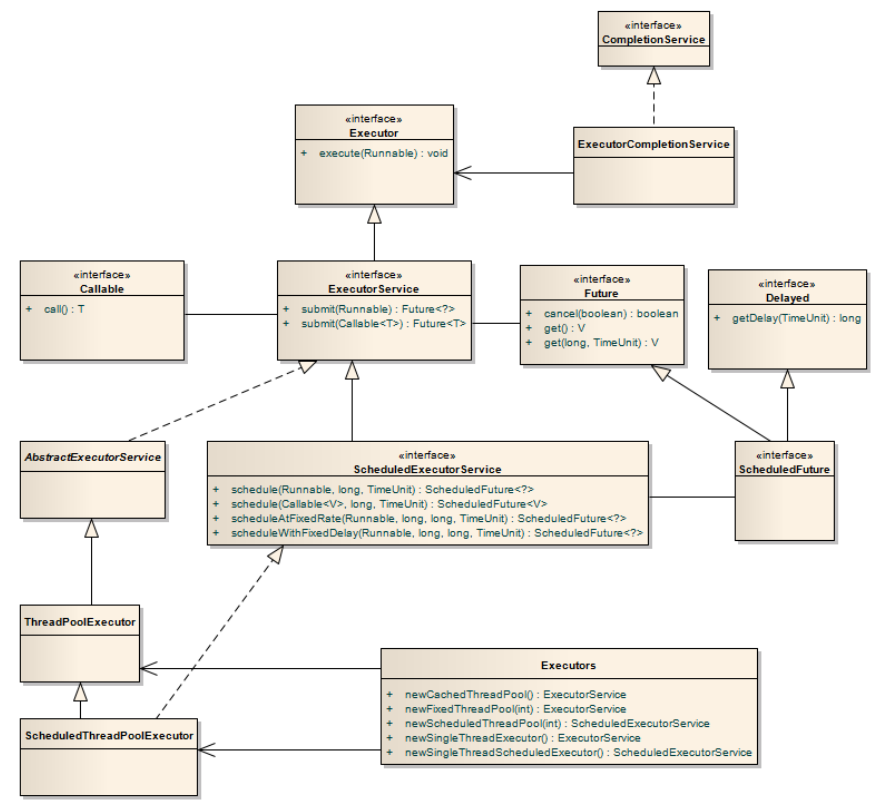

Java中线程池的顶级接口是Executor,严格意义上讲Exccutor并不是一个线程池,而是一个执行线程的工具。真正的线程池接口是ExecutorService。

Executor源码:

点击查看代码

public interface Executor {

/**

* Executes the given command at some time in the future. The command

* may execute in a new thread, in a pooled thread, or in the calling

* thread, at the discretion of the {@code Executor} implementation.

*

* @param command the runnable task

* @throws RejectedExecutionException if this task cannot be

* accepted for execution

* @throws NullPointerException if command is null

*/

void execute(Runnable command);

}

3.1.4

Executor与ExecutorService关系如图所示:

ExecutorService源码:

点击查看代码

/**

* An {@link Executor} that provides methods to manage termination and

* methods that can produce a {@link Future} for tracking progress of

* one or more asynchronous tasks.

*

* <p>An {@code ExecutorService} can be shut down, which will cause

* it to reject new tasks. Two different methods are provided for

* shutting down an {@code ExecutorService}. The {@link #shutdown}

* method will allow previously submitted tasks to execute before

* terminating, while the {@link #shutdownNow} method prevents waiting

* tasks from starting and attempts to stop currently executing tasks.

* Upon termination, an executor has no tasks actively executing, no

* tasks awaiting execution, and no new tasks can be submitted. An

* unused {@code ExecutorService} should be shut down to allow

* reclamation of its resources.

*

* <p>Method {@code submit} extends base method {@link

* Executor#execute(Runnable)} by creating and returning a {@link Future}

* that can be used to cancel execution and/or wait for completion.

* Methods {@code invokeAny} and {@code invokeAll} perform the most

* commonly useful forms of bulk execution, executing a collection of

* tasks and then waiting for at least one, or all, to

* complete. (Class {@link ExecutorCompletionService} can be used to

* write customized variants of these methods.)

*

* <p>The {@link Executors} class provides factory methods for the

* executor services provided in this package.

*

* <h3>Usage Examples</h3>

*

* Here is a sketch of a network service in which threads in a thread

* pool service incoming requests. It uses the preconfigured {@link

* Executors#newFixedThreadPool} factory method:

*

* <pre> {@code

* class NetworkService implements Runnable {

* private final ServerSocket serverSocket;

* private final ExecutorService pool;

*

* public NetworkService(int port, int poolSize)

* throws IOException {

* serverSocket = new ServerSocket(port);

* pool = Executors.newFixedThreadPool(poolSize);

* }

*

* public void run() { // run the service

* try {

* for (;;) {

* pool.execute(new Handler(serverSocket.accept()));

* }

* } catch (IOException ex) {

* pool.shutdown();

* }

* }

* }

*

* class Handler implements Runnable {

* private final Socket socket;

* Handler(Socket socket) { this.socket = socket; }

* public void run() {

* // read and service request on socket

* }

* }}</pre>

*

* The following method shuts down an {@code ExecutorService} in two phases,

* first by calling {@code shutdown} to reject incoming tasks, and then

* calling {@code shutdownNow}, if necessary, to cancel any lingering tasks:

*

* <pre> {@code

* void shutdownAndAwaitTermination(ExecutorService pool) {

* pool.shutdown(); // Disable new tasks from being submitted

* try {

* // Wait a while for existing tasks to terminate

* if (!pool.awaitTermination(60, TimeUnit.SECONDS)) {

* pool.shutdownNow(); // Cancel currently executing tasks

* // Wait a while for tasks to respond to being cancelled

* if (!pool.awaitTermination(60, TimeUnit.SECONDS))

* System.err.println("Pool did not terminate");

* }

* } catch (InterruptedException ie) {

* // (Re-)Cancel if current thread also interrupted

* pool.shutdownNow();

* // Preserve interrupt status

* Thread.currentThread().interrupt();

* }

* }}</pre>

*

* <p>Memory consistency effects: Actions in a thread prior to the

* submission of a {@code Runnable} or {@code Callable} task to an

* {@code ExecutorService}

* <a href="package-summary.html#MemoryVisibility"><i>happen-before</i></a>

* any actions taken by that task, which in turn <i>happen-before</i> the

* result is retrieved via {@code Future.get()}.

*

* @since 1.5

* @author Doug Lea

*/

public interface ExecutorService extends Executor {

/**

* Initiates an orderly shutdown in which previously submitted

* tasks are executed, but no new tasks will be accepted.

* Invocation has no additional effect if already shut down.

*

* <p>This method does not wait for previously submitted tasks to

* complete execution. Use {@link #awaitTermination awaitTermination}

* to do that.

*

* @throws SecurityException if a security manager exists and

* shutting down this ExecutorService may manipulate

* threads that the caller is not permitted to modify

* because it does not hold {@link

* java.lang.RuntimePermission}{@code ("modifyThread")},

* or the security manager's {@code checkAccess} method

* denies access.

*/

void shutdown();

/**

* Attempts to stop all actively executing tasks, halts the

* processing of waiting tasks, and returns a list of the tasks

* that were awaiting execution.

*

* <p>This method does not wait for actively executing tasks to

* terminate. Use {@link #awaitTermination awaitTermination} to

* do that.

*

* <p>There are no guarantees beyond best-effort attempts to stop

* processing actively executing tasks. For example, typical

* implementations will cancel via {@link Thread#interrupt}, so any

* task that fails to respond to interrupts may never terminate.

*

* @return list of tasks that never commenced execution

* @throws SecurityException if a security manager exists and

* shutting down this ExecutorService may manipulate

* threads that the caller is not permitted to modify

* because it does not hold {@link

* java.lang.RuntimePermission}{@code ("modifyThread")},

* or the security manager's {@code checkAccess} method

* denies access.

*/

List<Runnable> shutdownNow();

/**

* Returns {@code true} if this executor has been shut down.

*

* @return {@code true} if this executor has been shut down

*/

boolean isShutdown();

/**

* Returns {@code true} if all tasks have completed following shut down.

* Note that {@code isTerminated} is never {@code true} unless

* either {@code shutdown} or {@code shutdownNow} was called first.

*

* @return {@code true} if all tasks have completed following shut down

*/

boolean isTerminated();

/**

* Blocks until all tasks have completed execution after a shutdown

* request, or the timeout occurs, or the current thread is

* interrupted, whichever happens first.

*

* @param timeout the maximum time to wait

* @param unit the time unit of the timeout argument

* @return {@code true} if this executor terminated and

* {@code false} if the timeout elapsed before termination

* @throws InterruptedException if interrupted while waiting

*/

boolean awaitTermination(long timeout, TimeUnit unit)

throws InterruptedException;

/**

* Submits a value-returning task for execution and returns a

* Future representing the pending results of the task. The

* Future's {@code get} method will return the task's result upon

* successful completion.

*

* <p>

* If you would like to immediately block waiting

* for a task, you can use constructions of the form

* {@code result = exec.submit(aCallable).get();}

*

* <p>Note: The {@link Executors} class includes a set of methods

* that can convert some other common closure-like objects,

* for example, {@link java.security.PrivilegedAction} to

* {@link Callable} form so they can be submitted.

*

* @param task the task to submit

* @param <T> the type of the task's result

* @return a Future representing pending completion of the task

* @throws RejectedExecutionException if the task cannot be

* scheduled for execution

* @throws NullPointerException if the task is null

*/

<T> Future<T> submit(Callable<T> task);

/**

* Submits a Runnable task for execution and returns a Future

* representing that task. The Future's {@code get} method will

* return the given result upon successful completion.

*

* @param task the task to submit

* @param result the result to return

* @param <T> the type of the result

* @return a Future representing pending completion of the task

* @throws RejectedExecutionException if the task cannot be

* scheduled for execution

* @throws NullPointerException if the task is null

*/

<T> Future<T> submit(Runnable task, T result);

/**

* Submits a Runnable task for execution and returns a Future

* representing that task. The Future's {@code get} method will

* return {@code null} upon <em>successful</em> completion.

*

* @param task the task to submit

* @return a Future representing pending completion of the task

* @throws RejectedExecutionException if the task cannot be

* scheduled for execution

* @throws NullPointerException if the task is null

*/

Future<?> submit(Runnable task);

/**

* Executes the given tasks, returning a list of Futures holding

* their status and results when all complete.

* {@link Future#isDone} is {@code true} for each

* element of the returned list.

* Note that a <em>completed</em> task could have

* terminated either normally or by throwing an exception.

* The results of this method are undefined if the given

* collection is modified while this operation is in progress.

*

* @param tasks the collection of tasks

* @param <T> the type of the values returned from the tasks

* @return a list of Futures representing the tasks, in the same

* sequential order as produced by the iterator for the

* given task list, each of which has completed

* @throws InterruptedException if interrupted while waiting, in

* which case unfinished tasks are cancelled

* @throws NullPointerException if tasks or any of its elements are {@code null}

* @throws RejectedExecutionException if any task cannot be

* scheduled for execution

*/

<T> List<Future<T>> invokeAll(Collection<? extends Callable<T>> tasks)

throws InterruptedException;

/**

* Executes the given tasks, returning a list of Futures holding

* their status and results

* when all complete or the timeout expires, whichever happens first.

* {@link Future#isDone} is {@code true} for each

* element of the returned list.

* Upon return, tasks that have not completed are cancelled.

* Note that a <em>completed</em> task could have

* terminated either normally or by throwing an exception.

* The results of this method are undefined if the given

* collection is modified while this operation is in progress.

*

* @param tasks the collection of tasks

* @param timeout the maximum time to wait

* @param unit the time unit of the timeout argument

* @param <T> the type of the values returned from the tasks

* @return a list of Futures representing the tasks, in the same

* sequential order as produced by the iterator for the

* given task list. If the operation did not time out,

* each task will have completed. If it did time out, some

* of these tasks will not have completed.

* @throws InterruptedException if interrupted while waiting, in

* which case unfinished tasks are cancelled

* @throws NullPointerException if tasks, any of its elements, or

* unit are {@code null}

* @throws RejectedExecutionException if any task cannot be scheduled

* for execution

*/

<T> List<Future<T>> invokeAll(Collection<? extends Callable<T>> tasks,

long timeout, TimeUnit unit)

throws InterruptedException;

/**

* Executes the given tasks, returning the result

* of one that has completed successfully (i.e., without throwing

* an exception), if any do. Upon normal or exceptional return,

* tasks that have not completed are cancelled.

* The results of this method are undefined if the given

* collection is modified while this operation is in progress.

*

* @param tasks the collection of tasks

* @param <T> the type of the values returned from the tasks

* @return the result returned by one of the tasks

* @throws InterruptedException if interrupted while waiting

* @throws NullPointerException if tasks or any element task

* subject to execution is {@code null}

* @throws IllegalArgumentException if tasks is empty

* @throws ExecutionException if no task successfully completes

* @throws RejectedExecutionException if tasks cannot be scheduled

* for execution

*/

<T> T invokeAny(Collection<? extends Callable<T>> tasks)

throws InterruptedException, ExecutionException;

/**

* Executes the given tasks, returning the result

* of one that has completed successfully (i.e., without throwing

* an exception), if any do before the given timeout elapses.

* Upon normal or exceptional return, tasks that have not

* completed are cancelled.

* The results of this method are undefined if the given

* collection is modified while this operation is in progress.

*

* @param tasks the collection of tasks

* @param timeout the maximum time to wait

* @param unit the time unit of the timeout argument

* @param <T> the type of the values returned from the tasks

* @return the result returned by one of the tasks

* @throws InterruptedException if interrupted while waiting

* @throws NullPointerException if tasks, or unit, or any element

* task subject to execution is {@code null}

* @throws TimeoutException if the given timeout elapses before

* any task successfully completes

* @throws ExecutionException if no task successfully completes

* @throws RejectedExecutionException if tasks cannot be scheduled

* for execution

*/

<T> T invokeAny(Collection<? extends Callable<T>> tasks,

long timeout, TimeUnit unit)

throws InterruptedException, ExecutionException, TimeoutException;

}

3.1 常用4种线程池

3.1.1 newCachedThreadPool

创建一个可根据需要创建新线程的线程池,但是在之前构造的线程可用时将重用它们。对于执行很多短期异步任务的程序,使用newCacheThreadPool可提高程序的性能。newCachedThreadPool调用execute 将重用以前构造的线程(如果线程可用),如果现有的线程没有可用的,则创建一个新线程并添加到线程池中。终止并从缓存中移除已有60秒中未被使用的线程。因此,长时间保持空闲线程不会使用任何资源。

点击查看代码

/**

* Creates a thread pool that creates new threads as needed, but

* will reuse previously constructed threads when they are

* available. These pools will typically improve the performance

* of programs that execute many short-lived asynchronous tasks.

* Calls to {@code execute} will reuse previously constructed

* threads if available. If no existing thread is available, a new

* thread will be created and added to the pool. Threads that have

* not been used for sixty seconds are terminated and removed from

* the cache. Thus, a pool that remains idle for long enough will

* not consume any resources. Note that pools with similar

* properties but different details (for example, timeout parameters)

* may be created using {@link ThreadPoolExecutor} constructors.

*

* @return the newly created thread pool

*/

public static ExecutorService newCachedThreadPool() {

return new ThreadPoolExecutor(0, Integer.MAX_VALUE,

60L, TimeUnit.SECONDS,

new SynchronousQueue<Runnable>());

}

/**

* Creates a {@code SynchronousQueue} with nonfair access policy.

*/

public SynchronousQueue() {

this(false);

}

/**

* Creates a {@code SynchronousQueue} with the specified fairness policy.

*

* @param fair if true, waiting threads contend in FIFO order for

* access; otherwise the order is unspecified.

*/

public SynchronousQueue(boolean fair) {

transferer = fair ? new TransferQueue<E>() : new TransferStack<E>();

}

SynchronousQueue源码如下:

点击查看代码

/*

* DO NOT ALTER OR REMOVE COPYRIGHT NOTICES OR THIS FILE HEADER.

*

* This code is free software; you can redistribute it and/or modify it

* under the terms of the GNU General Public License version 2 only, as

* published by the Free Software Foundation. Oracle designates this

* particular file as subject to the "Classpath" exception as provided

* by Oracle in the LICENSE file that accompanied this code.

*

* This code is distributed in the hope that it will be useful, but WITHOUT

* ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

* FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License

* version 2 for more details (a copy is included in the LICENSE file that

* accompanied this code).

*

* You should have received a copy of the GNU General Public License version

* 2 along with this work; if not, write to the Free Software Foundation,

* Inc., 51 Franklin St, Fifth Floor, Boston, MA 02110-1301 USA.

*

* Please contact Oracle, 500 Oracle Parkway, Redwood Shores, CA 94065 USA

* or visit www.oracle.com if you need additional information or have any

* questions.

*/

/*

* This file is available under and governed by the GNU General Public

* License version 2 only, as published by the Free Software Foundation.

* However, the following notice accompanied the original version of this

* file:

*

* Written by Doug Lea, Bill Scherer, and Michael Scott with

* assistance from members of JCP JSR-166 Expert Group and released to

* the public domain, as explained at

* http://creativecommons.org/publicdomain/zero/1.0/

*/

package java.util.concurrent;

import java.util.concurrent.locks.LockSupport;

import java.util.concurrent.locks.ReentrantLock;

import java.util.*;

import java.util.Spliterator;

import java.util.Spliterators;

/**

* A {@linkplain BlockingQueue blocking queue} in which each insert

* operation must wait for a corresponding remove operation by another

* thread, and vice versa. A synchronous queue does not have any

* internal capacity, not even a capacity of one. You cannot

* {@code peek} at a synchronous queue because an element is only

* present when you try to remove it; you cannot insert an element

* (using any method) unless another thread is trying to remove it;

* you cannot iterate as there is nothing to iterate. The

* <em>head</em> of the queue is the element that the first queued

* inserting thread is trying to add to the queue; if there is no such

* queued thread then no element is available for removal and

* {@code poll()} will return {@code null}. For purposes of other

* {@code Collection} methods (for example {@code contains}), a

* {@code SynchronousQueue} acts as an empty collection. This queue

* does not permit {@code null} elements.

*

* <p>Synchronous queues are similar to rendezvous channels used in

* CSP and Ada. They are well suited for handoff designs, in which an

* object running in one thread must sync up with an object running

* in another thread in order to hand it some information, event, or

* task.

*

* <p>This class supports an optional fairness policy for ordering

* waiting producer and consumer threads. By default, this ordering

* is not guaranteed. However, a queue constructed with fairness set

* to {@code true} grants threads access in FIFO order.

*

* <p>This class and its iterator implement all of the

* <em>optional</em> methods of the {@link Collection} and {@link

* Iterator} interfaces.

*

* <p>This class is a member of the

* <a href="{@docRoot}/../technotes/guides/collections/index.html">

* Java Collections Framework</a>.

*

* @since 1.5

* @author Doug Lea and Bill Scherer and Michael Scott

* @param <E> the type of elements held in this collection

*/

public class SynchronousQueue<E> extends AbstractQueue<E>

implements BlockingQueue<E>, java.io.Serializable {

private static final long serialVersionUID = -3223113410248163686L;

/*

* This class implements extensions of the dual stack and dual

* queue algorithms described in "Nonblocking Concurrent Objects

* with Condition Synchronization", by W. N. Scherer III and

* M. L. Scott. 18th Annual Conf. on Distributed Computing,

* Oct. 2004 (see also

* http://www.cs.rochester.edu/u/scott/synchronization/pseudocode/duals.html).

* The (Lifo) stack is used for non-fair mode, and the (Fifo)

* queue for fair mode. The performance of the two is generally

* similar. Fifo usually supports higher throughput under

* contention but Lifo maintains higher thread locality in common

* applications.

*

* A dual queue (and similarly stack) is one that at any given

* time either holds "data" -- items provided by put operations,

* or "requests" -- slots representing take operations, or is

* empty. A call to "fulfill" (i.e., a call requesting an item

* from a queue holding data or vice versa) dequeues a

* complementary node. The most interesting feature of these

* queues is that any operation can figure out which mode the

* queue is in, and act accordingly without needing locks.

*

* Both the queue and stack extend abstract class Transferer

* defining the single method transfer that does a put or a

* take. These are unified into a single method because in dual

* data structures, the put and take operations are symmetrical,

* so nearly all code can be combined. The resulting transfer

* methods are on the long side, but are easier to follow than

* they would be if broken up into nearly-duplicated parts.

*

* The queue and stack data structures share many conceptual

* similarities but very few concrete details. For simplicity,

* they are kept distinct so that they can later evolve

* separately.

*

* The algorithms here differ from the versions in the above paper

* in extending them for use in synchronous queues, as well as

* dealing with cancellation. The main differences include:

*

* 1. The original algorithms used bit-marked pointers, but

* the ones here use mode bits in nodes, leading to a number

* of further adaptations.

* 2. SynchronousQueues must block threads waiting to become

* fulfilled.

* 3. Support for cancellation via timeout and interrupts,

* including cleaning out cancelled nodes/threads

* from lists to avoid garbage retention and memory depletion.

*

* Blocking is mainly accomplished using LockSupport park/unpark,

* except that nodes that appear to be the next ones to become

* fulfilled first spin a bit (on multiprocessors only). On very

* busy synchronous queues, spinning can dramatically improve

* throughput. And on less busy ones, the amount of spinning is

* small enough not to be noticeable.

*

* Cleaning is done in different ways in queues vs stacks. For

* queues, we can almost always remove a node immediately in O(1)

* time (modulo retries for consistency checks) when it is

* cancelled. But if it may be pinned as the current tail, it must

* wait until some subsequent cancellation. For stacks, we need a

* potentially O(n) traversal to be sure that we can remove the

* node, but this can run concurrently with other threads

* accessing the stack.

*

* While garbage collection takes care of most node reclamation

* issues that otherwise complicate nonblocking algorithms, care

* is taken to "forget" references to data, other nodes, and

* threads that might be held on to long-term by blocked

* threads. In cases where setting to null would otherwise

* conflict with main algorithms, this is done by changing a

* node's link to now point to the node itself. This doesn't arise

* much for Stack nodes (because blocked threads do not hang on to

* old head pointers), but references in Queue nodes must be

* aggressively forgotten to avoid reachability of everything any

* node has ever referred to since arrival.

*/

/**

* Shared internal API for dual stacks and queues.

*/

abstract static class Transferer<E> {

/**

* Performs a put or take.

*

* @param e if non-null, the item to be handed to a consumer;

* if null, requests that transfer return an item

* offered by producer.

* @param timed if this operation should timeout

* @param nanos the timeout, in nanoseconds

* @return if non-null, the item provided or received; if null,

* the operation failed due to timeout or interrupt --

* the caller can distinguish which of these occurred

* by checking Thread.interrupted.

*/

abstract E transfer(E e, boolean timed, long nanos);

}

/** The number of CPUs, for spin control */

static final int NCPUS = Runtime.getRuntime().availableProcessors();

/**

* The number of times to spin before blocking in timed waits.

* The value is empirically derived -- it works well across a

* variety of processors and OSes. Empirically, the best value

* seems not to vary with number of CPUs (beyond 2) so is just

* a constant.

*/

static final int maxTimedSpins = (NCPUS < 2) ? 0 : 32;

/**

* The number of times to spin before blocking in untimed waits.

* This is greater than timed value because untimed waits spin

* faster since they don't need to check times on each spin.

*/

static final int maxUntimedSpins = maxTimedSpins * 16;

/**

* The number of nanoseconds for which it is faster to spin

* rather than to use timed park. A rough estimate suffices.

*/

static final long spinForTimeoutThreshold = 1000L;

/** Dual stack */

static final class TransferStack<E> extends Transferer<E> {

/*

* This extends Scherer-Scott dual stack algorithm, differing,

* among other ways, by using "covering" nodes rather than

* bit-marked pointers: Fulfilling operations push on marker

* nodes (with FULFILLING bit set in mode) to reserve a spot

* to match a waiting node.

*/

/* Modes for SNodes, ORed together in node fields */

/** Node represents an unfulfilled consumer */

static final int REQUEST = 0;

/** Node represents an unfulfilled producer */

static final int DATA = 1;

/** Node is fulfilling another unfulfilled DATA or REQUEST */

static final int FULFILLING = 2;

/** Returns true if m has fulfilling bit set. */

static boolean isFulfilling(int m) { return (m & FULFILLING) != 0; }

/** Node class for TransferStacks. */

static final class SNode {

volatile SNode next; // next node in stack

volatile SNode match; // the node matched to this

volatile Thread waiter; // to control park/unpark

Object item; // data; or null for REQUESTs

int mode;

// Note: item and mode fields don't need to be volatile

// since they are always written before, and read after,

// other volatile/atomic operations.

SNode(Object item) {

this.item = item;

}

boolean casNext(SNode cmp, SNode val) {

return cmp == next &&

UNSAFE.compareAndSwapObject(this, nextOffset, cmp, val);

}

/**

* Tries to match node s to this node, if so, waking up thread.

* Fulfillers call tryMatch to identify their waiters.

* Waiters block until they have been matched.

*

* @param s the node to match

* @return true if successfully matched to s

*/

boolean tryMatch(SNode s) {

if (match == null &&

UNSAFE.compareAndSwapObject(this, matchOffset, null, s)) {

Thread w = waiter;

if (w != null) { // waiters need at most one unpark

waiter = null;

LockSupport.unpark(w);

}

return true;

}

return match == s;

}

/**

* Tries to cancel a wait by matching node to itself.

*/

void tryCancel() {

UNSAFE.compareAndSwapObject(this, matchOffset, null, this);

}

boolean isCancelled() {

return match == this;

}

// Unsafe mechanics

private static final sun.misc.Unsafe UNSAFE;

private static final long matchOffset;

private static final long nextOffset;

static {

try {

UNSAFE = sun.misc.Unsafe.getUnsafe();

Class<?> k = SNode.class;

matchOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("match"));

nextOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("next"));

} catch (Exception e) {

throw new Error(e);

}

}

}

/** The head (top) of the stack */

volatile SNode head;

boolean casHead(SNode h, SNode nh) {

return h == head &&

UNSAFE.compareAndSwapObject(this, headOffset, h, nh);

}

/**

* Creates or resets fields of a node. Called only from transfer

* where the node to push on stack is lazily created and

* reused when possible to help reduce intervals between reads

* and CASes of head and to avoid surges of garbage when CASes

* to push nodes fail due to contention.

*/

static SNode snode(SNode s, Object e, SNode next, int mode) {

if (s == null) s = new SNode(e);

s.mode = mode;

s.next = next;

return s;

}

/**

* Puts or takes an item.

*/

@SuppressWarnings("unchecked")

E transfer(E e, boolean timed, long nanos) {

/*

* Basic algorithm is to loop trying one of three actions:

*

* 1. If apparently empty or already containing nodes of same

* mode, try to push node on stack and wait for a match,

* returning it, or null if cancelled.

*

* 2. If apparently containing node of complementary mode,

* try to push a fulfilling node on to stack, match

* with corresponding waiting node, pop both from

* stack, and return matched item. The matching or

* unlinking might not actually be necessary because of

* other threads performing action 3:

*

* 3. If top of stack already holds another fulfilling node,

* help it out by doing its match and/or pop

* operations, and then continue. The code for helping

* is essentially the same as for fulfilling, except

* that it doesn't return the item.

*/

SNode s = null; // constructed/reused as needed

int mode = (e == null) ? REQUEST : DATA;

for (;;) {

SNode h = head;

if (h == null || h.mode == mode) { // empty or same-mode

if (timed && nanos <= 0) { // can't wait

if (h != null && h.isCancelled())

casHead(h, h.next); // pop cancelled node

else

return null;

} else if (casHead(h, s = snode(s, e, h, mode))) {

SNode m = awaitFulfill(s, timed, nanos);

if (m == s) { // wait was cancelled

clean(s);

return null;

}

if ((h = head) != null && h.next == s)

casHead(h, s.next); // help s's fulfiller

return (E) ((mode == REQUEST) ? m.item : s.item);

}

} else if (!isFulfilling(h.mode)) { // try to fulfill

if (h.isCancelled()) // already cancelled

casHead(h, h.next); // pop and retry

else if (casHead(h, s=snode(s, e, h, FULFILLING|mode))) {

for (;;) { // loop until matched or waiters disappear

SNode m = s.next; // m is s's match

if (m == null) { // all waiters are gone

casHead(s, null); // pop fulfill node

s = null; // use new node next time

break; // restart main loop

}

SNode mn = m.next;

if (m.tryMatch(s)) {

casHead(s, mn); // pop both s and m

return (E) ((mode == REQUEST) ? m.item : s.item);

} else // lost match

s.casNext(m, mn); // help unlink

}

}

} else { // help a fulfiller

SNode m = h.next; // m is h's match

if (m == null) // waiter is gone

casHead(h, null); // pop fulfilling node

else {

SNode mn = m.next;

if (m.tryMatch(h)) // help match

casHead(h, mn); // pop both h and m

else // lost match

h.casNext(m, mn); // help unlink

}

}

}

}

/**

* Spins/blocks until node s is matched by a fulfill operation.

*

* @param s the waiting node

* @param timed true if timed wait

* @param nanos timeout value

* @return matched node, or s if cancelled

*/

SNode awaitFulfill(SNode s, boolean timed, long nanos) {

/*

* When a node/thread is about to block, it sets its waiter

* field and then rechecks state at least one more time

* before actually parking, thus covering race vs

* fulfiller noticing that waiter is non-null so should be

* woken.

*

* When invoked by nodes that appear at the point of call

* to be at the head of the stack, calls to park are

* preceded by spins to avoid blocking when producers and

* consumers are arriving very close in time. This can

* happen enough to bother only on multiprocessors.

*

* The order of checks for returning out of main loop

* reflects fact that interrupts have precedence over

* normal returns, which have precedence over

* timeouts. (So, on timeout, one last check for match is

* done before giving up.) Except that calls from untimed

* SynchronousQueue.{poll/offer} don't check interrupts

* and don't wait at all, so are trapped in transfer

* method rather than calling awaitFulfill.

*/

final long deadline = timed ? System.nanoTime() + nanos : 0L;

Thread w = Thread.currentThread();

int spins = (shouldSpin(s) ?

(timed ? maxTimedSpins : maxUntimedSpins) : 0);

for (;;) {

if (w.isInterrupted())

s.tryCancel();

SNode m = s.match;

if (m != null)

return m;

if (timed) {

nanos = deadline - System.nanoTime();

if (nanos <= 0L) {

s.tryCancel();

continue;

}

}

if (spins > 0)

spins = shouldSpin(s) ? (spins-1) : 0;

else if (s.waiter == null)

s.waiter = w; // establish waiter so can park next iter

else if (!timed)

LockSupport.park(this);

else if (nanos > spinForTimeoutThreshold)

LockSupport.parkNanos(this, nanos);

}

}

/**

* Returns true if node s is at head or there is an active

* fulfiller.

*/

boolean shouldSpin(SNode s) {

SNode h = head;

return (h == s || h == null || isFulfilling(h.mode));

}

/**

* Unlinks s from the stack.

*/

void clean(SNode s) {

s.item = null; // forget item

s.waiter = null; // forget thread

/*

* At worst we may need to traverse entire stack to unlink

* s. If there are multiple concurrent calls to clean, we

* might not see s if another thread has already removed

* it. But we can stop when we see any node known to

* follow s. We use s.next unless it too is cancelled, in

* which case we try the node one past. We don't check any

* further because we don't want to doubly traverse just to

* find sentinel.

*/

SNode past = s.next;

if (past != null && past.isCancelled())

past = past.next;

// Absorb cancelled nodes at head

SNode p;

while ((p = head) != null && p != past && p.isCancelled())

casHead(p, p.next);

// Unsplice embedded nodes

while (p != null && p != past) {

SNode n = p.next;

if (n != null && n.isCancelled())

p.casNext(n, n.next);

else

p = n;

}

}

// Unsafe mechanics

private static final sun.misc.Unsafe UNSAFE;

private static final long headOffset;

static {

try {

UNSAFE = sun.misc.Unsafe.getUnsafe();

Class<?> k = TransferStack.class;

headOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("head"));

} catch (Exception e) {

throw new Error(e);

}

}

}

/** Dual Queue */

static final class TransferQueue<E> extends Transferer<E> {

/*

* This extends Scherer-Scott dual queue algorithm, differing,

* among other ways, by using modes within nodes rather than

* marked pointers. The algorithm is a little simpler than

* that for stacks because fulfillers do not need explicit

* nodes, and matching is done by CAS'ing QNode.item field

* from non-null to null (for put) or vice versa (for take).

*/

/** Node class for TransferQueue. */

static final class QNode {

volatile QNode next; // next node in queue

volatile Object item; // CAS'ed to or from null

volatile Thread waiter; // to control park/unpark

final boolean isData;

QNode(Object item, boolean isData) {

this.item = item;

this.isData = isData;

}

boolean casNext(QNode cmp, QNode val) {

return next == cmp &&

UNSAFE.compareAndSwapObject(this, nextOffset, cmp, val);

}

boolean casItem(Object cmp, Object val) {

return item == cmp &&

UNSAFE.compareAndSwapObject(this, itemOffset, cmp, val);

}

/**

* Tries to cancel by CAS'ing ref to this as item.

*/

void tryCancel(Object cmp) {

UNSAFE.compareAndSwapObject(this, itemOffset, cmp, this);

}

boolean isCancelled() {

return item == this;

}

/**

* Returns true if this node is known to be off the queue

* because its next pointer has been forgotten due to

* an advanceHead operation.

*/

boolean isOffList() {

return next == this;

}

// Unsafe mechanics

private static final sun.misc.Unsafe UNSAFE;

private static final long itemOffset;

private static final long nextOffset;

static {

try {

UNSAFE = sun.misc.Unsafe.getUnsafe();

Class<?> k = QNode.class;

itemOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("item"));

nextOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("next"));

} catch (Exception e) {

throw new Error(e);

}

}

}

/** Head of queue */

transient volatile QNode head;

/** Tail of queue */

transient volatile QNode tail;

/**

* Reference to a cancelled node that might not yet have been

* unlinked from queue because it was the last inserted node

* when it was cancelled.

*/

transient volatile QNode cleanMe;

TransferQueue() {

QNode h = new QNode(null, false); // initialize to dummy node.

head = h;

tail = h;

}

/**

* Tries to cas nh as new head; if successful, unlink

* old head's next node to avoid garbage retention.

*/

void advanceHead(QNode h, QNode nh) {

if (h == head &&

UNSAFE.compareAndSwapObject(this, headOffset, h, nh))

h.next = h; // forget old next

}

/**

* Tries to cas nt as new tail.

*/

void advanceTail(QNode t, QNode nt) {

if (tail == t)

UNSAFE.compareAndSwapObject(this, tailOffset, t, nt);

}

/**

* Tries to CAS cleanMe slot.

*/

boolean casCleanMe(QNode cmp, QNode val) {

return cleanMe == cmp &&

UNSAFE.compareAndSwapObject(this, cleanMeOffset, cmp, val);

}

/**

* Puts or takes an item.

*/

@SuppressWarnings("unchecked")

E transfer(E e, boolean timed, long nanos) {

/* Basic algorithm is to loop trying to take either of

* two actions:

*

* 1. If queue apparently empty or holding same-mode nodes,

* try to add node to queue of waiters, wait to be

* fulfilled (or cancelled) and return matching item.

*

* 2. If queue apparently contains waiting items, and this

* call is of complementary mode, try to fulfill by CAS'ing

* item field of waiting node and dequeuing it, and then

* returning matching item.

*

* In each case, along the way, check for and try to help

* advance head and tail on behalf of other stalled/slow

* threads.

*

* The loop starts off with a null check guarding against

* seeing uninitialized head or tail values. This never

* happens in current SynchronousQueue, but could if

* callers held non-volatile/final ref to the

* transferer. The check is here anyway because it places

* null checks at top of loop, which is usually faster

* than having them implicitly interspersed.

*/

QNode s = null; // constructed/reused as needed

boolean isData = (e != null);

for (;;) {

QNode t = tail;

QNode h = head;

if (t == null || h == null) // saw uninitialized value

continue; // spin

if (h == t || t.isData == isData) { // empty or same-mode

QNode tn = t.next;

if (t != tail) // inconsistent read

continue;

if (tn != null) { // lagging tail

advanceTail(t, tn);

continue;

}

if (timed && nanos <= 0) // can't wait

return null;

if (s == null)

s = new QNode(e, isData);

if (!t.casNext(null, s)) // failed to link in

continue;

advanceTail(t, s); // swing tail and wait

Object x = awaitFulfill(s, e, timed, nanos);

if (x == s) { // wait was cancelled

clean(t, s);

return null;

}

if (!s.isOffList()) { // not already unlinked

advanceHead(t, s); // unlink if head

if (x != null) // and forget fields

s.item = s;

s.waiter = null;

}

return (x != null) ? (E)x : e;

} else { // complementary-mode

QNode m = h.next; // node to fulfill

if (t != tail || m == null || h != head)

continue; // inconsistent read

Object x = m.item;

if (isData == (x != null) || // m already fulfilled

x == m || // m cancelled

!m.casItem(x, e)) { // lost CAS

advanceHead(h, m); // dequeue and retry

continue;

}

advanceHead(h, m); // successfully fulfilled

LockSupport.unpark(m.waiter);

return (x != null) ? (E)x : e;

}

}

}

/**

* Spins/blocks until node s is fulfilled.

*

* @param s the waiting node

* @param e the comparison value for checking match

* @param timed true if timed wait

* @param nanos timeout value

* @return matched item, or s if cancelled

*/

Object awaitFulfill(QNode s, E e, boolean timed, long nanos) {

/* Same idea as TransferStack.awaitFulfill */

final long deadline = timed ? System.nanoTime() + nanos : 0L;

Thread w = Thread.currentThread();

int spins = ((head.next == s) ?

(timed ? maxTimedSpins : maxUntimedSpins) : 0);

for (;;) {

if (w.isInterrupted())

s.tryCancel(e);

Object x = s.item;

if (x != e)

return x;

if (timed) {

nanos = deadline - System.nanoTime();

if (nanos <= 0L) {

s.tryCancel(e);

continue;

}

}

if (spins > 0)

--spins;

else if (s.waiter == null)

s.waiter = w;

else if (!timed)

LockSupport.park(this);

else if (nanos > spinForTimeoutThreshold)

LockSupport.parkNanos(this, nanos);

}

}

/**

* Gets rid of cancelled node s with original predecessor pred.

*/

void clean(QNode pred, QNode s) {

s.waiter = null; // forget thread

/*

* At any given time, exactly one node on list cannot be

* deleted -- the last inserted node. To accommodate this,

* if we cannot delete s, we save its predecessor as

* "cleanMe", deleting the previously saved version

* first. At least one of node s or the node previously

* saved can always be deleted, so this always terminates.

*/

while (pred.next == s) { // Return early if already unlinked

QNode h = head;

QNode hn = h.next; // Absorb cancelled first node as head

if (hn != null && hn.isCancelled()) {

advanceHead(h, hn);

continue;

}

QNode t = tail; // Ensure consistent read for tail

if (t == h)

return;

QNode tn = t.next;

if (t != tail)

continue;

if (tn != null) {

advanceTail(t, tn);

continue;

}

if (s != t) { // If not tail, try to unsplice

QNode sn = s.next;

if (sn == s || pred.casNext(s, sn))

return;

}

QNode dp = cleanMe;

if (dp != null) { // Try unlinking previous cancelled node

QNode d = dp.next;

QNode dn;

if (d == null || // d is gone or

d == dp || // d is off list or

!d.isCancelled() || // d not cancelled or

(d != t && // d not tail and

(dn = d.next) != null && // has successor

dn != d && // that is on list

dp.casNext(d, dn))) // d unspliced

casCleanMe(dp, null);

if (dp == pred)

return; // s is already saved node

} else if (casCleanMe(null, pred))

return; // Postpone cleaning s

}

}

private static final sun.misc.Unsafe UNSAFE;

private static final long headOffset;

private static final long tailOffset;

private static final long cleanMeOffset;

static {

try {

UNSAFE = sun.misc.Unsafe.getUnsafe();

Class<?> k = TransferQueue.class;

headOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("head"));

tailOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("tail"));

cleanMeOffset = UNSAFE.objectFieldOffset

(k.getDeclaredField("cleanMe"));

} catch (Exception e) {

throw new Error(e);

}

}

}

/**

* The transferer. Set only in constructor, but cannot be declared

* as final without further complicating serialization. Since

* this is accessed only at most once per public method, there

* isn't a noticeable performance penalty for using volatile

* instead of final here.

*/

private transient volatile Transferer<E> transferer;

/**

* Creates a {@code SynchronousQueue} with nonfair access policy.

*/

public SynchronousQueue() {

this(false);

}

/**

* Creates a {@code SynchronousQueue} with the specified fairness policy.

*

* @param fair if true, waiting threads contend in FIFO order for

* access; otherwise the order is unspecified.

*/

public SynchronousQueue(boolean fair) {

transferer = fair ? new TransferQueue<E>() : new TransferStack<E>();

}

/**

* Adds the specified element to this queue, waiting if necessary for

* another thread to receive it.

*

* @throws InterruptedException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

*/

public void put(E e) throws InterruptedException {

if (e == null) throw new NullPointerException();

if (transferer.transfer(e, false, 0) == null) {

Thread.interrupted();

throw new InterruptedException();

}

}

/**

* Inserts the specified element into this queue, waiting if necessary

* up to the specified wait time for another thread to receive it.

*

* @return {@code true} if successful, or {@code false} if the

* specified waiting time elapses before a consumer appears

* @throws InterruptedException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

*/

public boolean offer(E e, long timeout, TimeUnit unit)

throws InterruptedException {

if (e == null) throw new NullPointerException();

if (transferer.transfer(e, true, unit.toNanos(timeout)) != null)

return true;

if (!Thread.interrupted())

return false;

throw new InterruptedException();

}

/**

* Inserts the specified element into this queue, if another thread is

* waiting to receive it.

*

* @param e the element to add

* @return {@code true} if the element was added to this queue, else

* {@code false}

* @throws NullPointerException if the specified element is null

*/

public boolean offer(E e) {

if (e == null) throw new NullPointerException();

return transferer.transfer(e, true, 0) != null;

}

/**

* Retrieves and removes the head of this queue, waiting if necessary

* for another thread to insert it.

*

* @return the head of this queue

* @throws InterruptedException {@inheritDoc}

*/

public E take() throws InterruptedException {

E e = transferer.transfer(null, false, 0);

if (e != null)

return e;

Thread.interrupted();

throw new InterruptedException();

}

/**

* Retrieves and removes the head of this queue, waiting

* if necessary up to the specified wait time, for another thread

* to insert it.

*

* @return the head of this queue, or {@code null} if the

* specified waiting time elapses before an element is present

* @throws InterruptedException {@inheritDoc}

*/

public E poll(long timeout, TimeUnit unit) throws InterruptedException {

E e = transferer.transfer(null, true, unit.toNanos(timeout));

if (e != null || !Thread.interrupted())

return e;

throw new InterruptedException();

}

/**

* Retrieves and removes the head of this queue, if another thread

* is currently making an element available.

*

* @return the head of this queue, or {@code null} if no

* element is available

*/

public E poll() {

return transferer.transfer(null, true, 0);

}

/**

* Always returns {@code true}.

* A {@code SynchronousQueue} has no internal capacity.

*

* @return {@code true}

*/

public boolean isEmpty() {

return true;

}

/**

* Always returns zero.

* A {@code SynchronousQueue} has no internal capacity.

*

* @return zero

*/

public int size() {

return 0;

}

/**

* Always returns zero.

* A {@code SynchronousQueue} has no internal capacity.

*

* @return zero

*/

public int remainingCapacity() {

return 0;

}

/**

* Does nothing.

* A {@code SynchronousQueue} has no internal capacity.

*/

public void clear() {

}

/**

* Always returns {@code false}.

* A {@code SynchronousQueue} has no internal capacity.

*

* @param o the element

* @return {@code false}

*/

public boolean contains(Object o) {

return false;

}

/**

* Always returns {@code false}.

* A {@code SynchronousQueue} has no internal capacity.

*

* @param o the element to remove

* @return {@code false}

*/

public boolean remove(Object o) {

return false;

}

/**

* Returns {@code false} unless the given collection is empty.

* A {@code SynchronousQueue} has no internal capacity.

*

* @param c the collection

* @return {@code false} unless given collection is empty

*/

public boolean containsAll(Collection<?> c) {

return c.isEmpty();

}

/**

* Always returns {@code false}.

* A {@code SynchronousQueue} has no internal capacity.

*

* @param c the collection

* @return {@code false}

*/

public boolean removeAll(Collection<?> c) {

return false;

}

/**

* Always returns {@code false}.

* A {@code SynchronousQueue} has no internal capacity.

*

* @param c the collection

* @return {@code false}

*/

public boolean retainAll(Collection<?> c) {

return false;

}

/**

* Always returns {@code null}.

* A {@code SynchronousQueue} does not return elements

* unless actively waited on.

*

* @return {@code null}

*/

public E peek() {

return null;

}

/**

* Returns an empty iterator in which {@code hasNext} always returns

* {@code false}.

*

* @return an empty iterator

*/

public Iterator<E> iterator() {

return Collections.emptyIterator();

}

/**

* Returns an empty spliterator in which calls to

* {@link java.util.Spliterator#trySplit()} always return {@code null}.

*

* @return an empty spliterator

* @since 1.8

*/

public Spliterator<E> spliterator() {

return Spliterators.emptySpliterator();

}

/**

* Returns a zero-length array.

* @return a zero-length array

*/

public Object[] toArray() {

return new Object[0];

}

/**

* Sets the zeroeth element of the specified array to {@code null}

* (if the array has non-zero length) and returns it.

*

* @param a the array

* @return the specified array

* @throws NullPointerException if the specified array is null

*/

public <T> T[] toArray(T[] a) {

if (a.length > 0)

a[0] = null;

return a;

}

/**

* @throws UnsupportedOperationException {@inheritDoc}

* @throws ClassCastException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

* @throws IllegalArgumentException {@inheritDoc}

*/

public int drainTo(Collection<? super E> c) {

if (c == null)

throw new NullPointerException();

if (c == this)

throw new IllegalArgumentException();

int n = 0;

for (E e; (e = poll()) != null;) {

c.add(e);

++n;

}

return n;

}

/**

* @throws UnsupportedOperationException {@inheritDoc}

* @throws ClassCastException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

* @throws IllegalArgumentException {@inheritDoc}

*/

public int drainTo(Collection<? super E> c, int maxElements) {

if (c == null)

throw new NullPointerException();

if (c == this)

throw new IllegalArgumentException();

int n = 0;

for (E e; n < maxElements && (e = poll()) != null;) {

c.add(e);

++n;

}

return n;

}

/*

* To cope with serialization strategy in the 1.5 version of

* SynchronousQueue, we declare some unused classes and fields

* that exist solely to enable serializability across versions.

* These fields are never used, so are initialized only if this

* object is ever serialized or deserialized.

*/

@SuppressWarnings("serial")

static class WaitQueue implements java.io.Serializable { }

static class LifoWaitQueue extends WaitQueue {

private static final long serialVersionUID = -3633113410248163686L;

}

static class FifoWaitQueue extends WaitQueue {

private static final long serialVersionUID = -3623113410248163686L;

}

private ReentrantLock qlock;

private WaitQueue waitingProducers;

private WaitQueue waitingConsumers;

/**

* Saves this queue to a stream (that is, serializes it).

* @param s the stream

* @throws java.io.IOException if an I/O error occurs

*/

private void writeObject(java.io.ObjectOutputStream s)

throws java.io.IOException {

boolean fair = transferer instanceof TransferQueue;

if (fair) {

qlock = new ReentrantLock(true);

waitingProducers = new FifoWaitQueue();

waitingConsumers = new FifoWaitQueue();

}

else {

qlock = new ReentrantLock();

waitingProducers = new LifoWaitQueue();

waitingConsumers = new LifoWaitQueue();

}

s.defaultWriteObject();

}

/**

* Reconstitutes this queue from a stream (that is, deserializes it).

* @param s the stream

* @throws ClassNotFoundException if the class of a serialized object

* could not be found

* @throws java.io.IOException if an I/O error occurs

*/

private void readObject(java.io.ObjectInputStream s)

throws java.io.IOException, ClassNotFoundException {

s.defaultReadObject();

if (waitingProducers instanceof FifoWaitQueue)

transferer = new TransferQueue<E>();

else

transferer = new TransferStack<E>();

}

// Unsafe mechanics

static long objectFieldOffset(sun.misc.Unsafe UNSAFE,

String field, Class<?> klazz) {

try {

return UNSAFE.objectFieldOffset(klazz.getDeclaredField(field));

} catch (NoSuchFieldException e) {

// Convert Exception to corresponding Error

NoSuchFieldError error = new NoSuchFieldError(field);

error.initCause(e);

throw error;

}

}

}

3.1.2 newFixedThreadPool

创建一个可重用的固定线程数的线程池,以共享的无界队列方式来运行这些线程。在任意点,大多数线程nThreads线程会处于任务的活动状态。如果所有的线程处于活动状态的时候提交附加任务,则在有可用线程之前,附加任务将在队列中等待。如果在关闭前的执行期间由于失败导致任何线程终止,那么一个新线程将代替它执行后续的任务(如果需要)。在某个线程被显示地关闭之前,线程池中将一直存在。

点击查看代码

/**

* Creates a thread pool that reuses a fixed number of threads

* operating off a shared unbounded queue. At any point, at most

* {@code nThreads} threads will be active processing tasks.

* If additional tasks are submitted when all threads are active,

* they will wait in the queue until a thread is available.

* If any thread terminates due to a failure during execution

* prior to shutdown, a new one will take its place if needed to

* execute subsequent tasks. The threads in the pool will exist

* until it is explicitly {@link ExecutorService#shutdown shutdown}.

*

* @param nThreads the number of threads in the pool

* @return the newly created thread pool

* @throws IllegalArgumentException if {@code nThreads <= 0}

*/

public static ExecutorService newFixedThreadPool(int nThreads) {

return new ThreadPoolExecutor(nThreads, nThreads,

0L, TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<Runnable>());

}

/**

* Creates a {@code LinkedBlockingQueue} with a capacity of

* {@link Integer#MAX_VALUE}.

*/

public LinkedBlockingQueue() {

this(Integer.MAX_VALUE);

}

/**

* Creates a {@code LinkedBlockingQueue} with the given (fixed) capacity.

*

* @param capacity the capacity of this queue

* @throws IllegalArgumentException if {@code capacity} is not greater

* than zero

*/

public LinkedBlockingQueue(int capacity) {

if (capacity <= 0) throw new IllegalArgumentException();

this.capacity = capacity;

last = head = new Node<E>(null);

}

LinkedBlockingQueue阻塞队列源码如下:

点击查看代码

/*

* DO NOT ALTER OR REMOVE COPYRIGHT NOTICES OR THIS FILE HEADER.

*

* This code is free software; you can redistribute it and/or modify it

* under the terms of the GNU General Public License version 2 only, as

* published by the Free Software Foundation. Oracle designates this

* particular file as subject to the "Classpath" exception as provided

* by Oracle in the LICENSE file that accompanied this code.

*

* This code is distributed in the hope that it will be useful, but WITHOUT

* ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or

* FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License

* version 2 for more details (a copy is included in the LICENSE file that

* accompanied this code).

*

* You should have received a copy of the GNU General Public License version

* 2 along with this work; if not, write to the Free Software Foundation,

* Inc., 51 Franklin St, Fifth Floor, Boston, MA 02110-1301 USA.

*

* Please contact Oracle, 500 Oracle Parkway, Redwood Shores, CA 94065 USA

* or visit www.oracle.com if you need additional information or have any

* questions.

*/

/*

* This file is available under and governed by the GNU General Public

* License version 2 only, as published by the Free Software Foundation.

* However, the following notice accompanied the original version of this

* file:

*

* Written by Doug Lea with assistance from members of JCP JSR-166

* Expert Group and released to the public domain, as explained at

* http://creativecommons.org/publicdomain/zero/1.0/

*/

package java.util.concurrent;

import java.util.concurrent.atomic.AtomicInteger;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.ReentrantLock;

import java.util.AbstractQueue;

import java.util.Collection;

import java.util.Iterator;

import java.util.NoSuchElementException;

import java.util.Spliterator;

import java.util.Spliterators;

import java.util.function.Consumer;

/**

* An optionally-bounded {@linkplain BlockingQueue blocking queue} based on

* linked nodes.

* This queue orders elements FIFO (first-in-first-out).

* The <em>head</em> of the queue is that element that has been on the

* queue the longest time.

* The <em>tail</em> of the queue is that element that has been on the

* queue the shortest time. New elements

* are inserted at the tail of the queue, and the queue retrieval

* operations obtain elements at the head of the queue.

* Linked queues typically have higher throughput than array-based queues but

* less predictable performance in most concurrent applications.

*

* <p>The optional capacity bound constructor argument serves as a

* way to prevent excessive queue expansion. The capacity, if unspecified,

* is equal to {@link Integer#MAX_VALUE}. Linked nodes are

* dynamically created upon each insertion unless this would bring the

* queue above capacity.

*

* <p>This class and its iterator implement all of the

* <em>optional</em> methods of the {@link Collection} and {@link

* Iterator} interfaces.

*

* <p>This class is a member of the

* <a href="{@docRoot}/../technotes/guides/collections/index.html">

* Java Collections Framework</a>.

*

* @since 1.5

* @author Doug Lea

* @param <E> the type of elements held in this collection

*/

public class LinkedBlockingQueue<E> extends AbstractQueue<E>

implements BlockingQueue<E>, java.io.Serializable {

private static final long serialVersionUID = -6903933977591709194L;

/*

* A variant of the "two lock queue" algorithm. The putLock gates

* entry to put (and offer), and has an associated condition for

* waiting puts. Similarly for the takeLock. The "count" field

* that they both rely on is maintained as an atomic to avoid

* needing to get both locks in most cases. Also, to minimize need

* for puts to get takeLock and vice-versa, cascading notifies are

* used. When a put notices that it has enabled at least one take,

* it signals taker. That taker in turn signals others if more

* items have been entered since the signal. And symmetrically for

* takes signalling puts. Operations such as remove(Object) and

* iterators acquire both locks.

*

* Visibility between writers and readers is provided as follows:

*

* Whenever an element is enqueued, the putLock is acquired and

* count updated. A subsequent reader guarantees visibility to the

* enqueued Node by either acquiring the putLock (via fullyLock)

* or by acquiring the takeLock, and then reading n = count.get();

* this gives visibility to the first n items.

*

* To implement weakly consistent iterators, it appears we need to

* keep all Nodes GC-reachable from a predecessor dequeued Node.

* That would cause two problems:

* - allow a rogue Iterator to cause unbounded memory retention

* - cause cross-generational linking of old Nodes to new Nodes if

* a Node was tenured while live, which generational GCs have a

* hard time dealing with, causing repeated major collections.

* However, only non-deleted Nodes need to be reachable from

* dequeued Nodes, and reachability does not necessarily have to

* be of the kind understood by the GC. We use the trick of

* linking a Node that has just been dequeued to itself. Such a

* self-link implicitly means to advance to head.next.

*/

/**

* Linked list node class

*/

static class Node<E> {

E item;

/**

* One of:

* - the real successor Node

* - this Node, meaning the successor is head.next

* - null, meaning there is no successor (this is the last node)

*/

Node<E> next;

Node(E x) { item = x; }

}

/** The capacity bound, or Integer.MAX_VALUE if none */

private final int capacity;

/** Current number of elements */

private final AtomicInteger count = new AtomicInteger();

/**

* Head of linked list.

* Invariant: head.item == null

*/

transient Node<E> head;

/**

* Tail of linked list.

* Invariant: last.next == null

*/

private transient Node<E> last;

/** Lock held by take, poll, etc */

private final ReentrantLock takeLock = new ReentrantLock();

/** Wait queue for waiting takes */

private final Condition notEmpty = takeLock.newCondition();

/** Lock held by put, offer, etc */

private final ReentrantLock putLock = new ReentrantLock();

/** Wait queue for waiting puts */

private final Condition notFull = putLock.newCondition();

/**

* Signals a waiting take. Called only from put/offer (which do not

* otherwise ordinarily lock takeLock.)

*/

private void signalNotEmpty() {

final ReentrantLock takeLock = this.takeLock;

takeLock.lock();

try {

notEmpty.signal();

} finally {

takeLock.unlock();

}

}

/**

* Signals a waiting put. Called only from take/poll.

*/

private void signalNotFull() {

final ReentrantLock putLock = this.putLock;

putLock.lock();

try {

notFull.signal();

} finally {

putLock.unlock();

}

}

/**

* Links node at end of queue.

*

* @param node the node

*/

private void enqueue(Node<E> node) {

// assert putLock.isHeldByCurrentThread();

// assert last.next == null;

last = last.next = node;

}

/**

* Removes a node from head of queue.

*

* @return the node

*/

private E dequeue() {

// assert takeLock.isHeldByCurrentThread();

// assert head.item == null;

Node<E> h = head;

Node<E> first = h.next;

h.next = h; // help GC

head = first;

E x = first.item;

first.item = null;

return x;

}

/**

* Locks to prevent both puts and takes.

*/

void fullyLock() {

putLock.lock();

takeLock.lock();

}

/**

* Unlocks to allow both puts and takes.

*/

void fullyUnlock() {

takeLock.unlock();

putLock.unlock();

}

// /**

// * Tells whether both locks are held by current thread.

// */

// boolean isFullyLocked() {

// return (putLock.isHeldByCurrentThread() &&

// takeLock.isHeldByCurrentThread());

// }

/**

* Creates a {@code LinkedBlockingQueue} with a capacity of

* {@link Integer#MAX_VALUE}.

*/

public LinkedBlockingQueue() {

this(Integer.MAX_VALUE);

}

/**

* Creates a {@code LinkedBlockingQueue} with the given (fixed) capacity.

*

* @param capacity the capacity of this queue

* @throws IllegalArgumentException if {@code capacity} is not greater

* than zero

*/

public LinkedBlockingQueue(int capacity) {

if (capacity <= 0) throw new IllegalArgumentException();

this.capacity = capacity;

last = head = new Node<E>(null);

}

/**

* Creates a {@code LinkedBlockingQueue} with a capacity of

* {@link Integer#MAX_VALUE}, initially containing the elements of the

* given collection,

* added in traversal order of the collection's iterator.

*

* @param c the collection of elements to initially contain

* @throws NullPointerException if the specified collection or any

* of its elements are null

*/

public LinkedBlockingQueue(Collection<? extends E> c) {

this(Integer.MAX_VALUE);

final ReentrantLock putLock = this.putLock;

putLock.lock(); // Never contended, but necessary for visibility

try {

int n = 0;

for (E e : c) {

if (e == null)

throw new NullPointerException();

if (n == capacity)

throw new IllegalStateException("Queue full");

enqueue(new Node<E>(e));

++n;

}

count.set(n);

} finally {

putLock.unlock();

}

}

// this doc comment is overridden to remove the reference to collections

// greater in size than Integer.MAX_VALUE

/**

* Returns the number of elements in this queue.

*

* @return the number of elements in this queue

*/

public int size() {

return count.get();

}

// this doc comment is a modified copy of the inherited doc comment,

// without the reference to unlimited queues.

/**

* Returns the number of additional elements that this queue can ideally

* (in the absence of memory or resource constraints) accept without

* blocking. This is always equal to the initial capacity of this queue

* less the current {@code size} of this queue.

*

* <p>Note that you <em>cannot</em> always tell if an attempt to insert

* an element will succeed by inspecting {@code remainingCapacity}

* because it may be the case that another thread is about to

* insert or remove an element.

*/

public int remainingCapacity() {

return capacity - count.get();

}

/**

* Inserts the specified element at the tail of this queue, waiting if

* necessary for space to become available.

*

* @throws InterruptedException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

*/

public void put(E e) throws InterruptedException {

if (e == null) throw new NullPointerException();

// Note: convention in all put/take/etc is to preset local var

// holding count negative to indicate failure unless set.

int c = -1;

Node<E> node = new Node<E>(e);

final ReentrantLock putLock = this.putLock;

final AtomicInteger count = this.count;

putLock.lockInterruptibly();

try {

/*

* Note that count is used in wait guard even though it is

* not protected by lock. This works because count can

* only decrease at this point (all other puts are shut

* out by lock), and we (or some other waiting put) are

* signalled if it ever changes from capacity. Similarly

* for all other uses of count in other wait guards.

*/

while (count.get() == capacity) {

notFull.await();

}

enqueue(node);

c = count.getAndIncrement();

if (c + 1 < capacity)

notFull.signal();

} finally {

putLock.unlock();

}

if (c == 0)

signalNotEmpty();

}

/**

* Inserts the specified element at the tail of this queue, waiting if

* necessary up to the specified wait time for space to become available.

*

* @return {@code true} if successful, or {@code false} if

* the specified waiting time elapses before space is available

* @throws InterruptedException {@inheritDoc}

* @throws NullPointerException {@inheritDoc}

*/

public boolean offer(E e, long timeout, TimeUnit unit)

throws InterruptedException {

if (e == null) throw new NullPointerException();

long nanos = unit.toNanos(timeout);

int c = -1;

final ReentrantLock putLock = this.putLock;

final AtomicInteger count = this.count;

putLock.lockInterruptibly();

try {

while (count.get() == capacity) {

if (nanos <= 0)

return false;

nanos = notFull.awaitNanos(nanos);

}

enqueue(new Node<E>(e));

c = count.getAndIncrement();

if (c + 1 < capacity)

notFull.signal();

} finally {

putLock.unlock();

}

if (c == 0)

signalNotEmpty();

return true;

}

/**

* Inserts the specified element at the tail of this queue if it is

* possible to do so immediately without exceeding the queue's capacity,

* returning {@code true} upon success and {@code false} if this queue

* is full.

* When using a capacity-restricted queue, this method is generally

* preferable to method {@link BlockingQueue#add add}, which can fail to

* insert an element only by throwing an exception.

*

* @throws NullPointerException if the specified element is null

*/

public boolean offer(E e) {

if (e == null) throw new NullPointerException();

final AtomicInteger count = this.count;

if (count.get() == capacity)

return false;

int c = -1;

Node<E> node = new Node<E>(e);

final ReentrantLock putLock = this.putLock;

putLock.lock();

try {

if (count.get() < capacity) {

enqueue(node);

c = count.getAndIncrement();

if (c + 1 < capacity)

notFull.signal();

}

} finally {

putLock.unlock();

}

if (c == 0)

signalNotEmpty();

return c >= 0;

}

public E take() throws InterruptedException {

E x;

int c = -1;

final AtomicInteger count = this.count;

final ReentrantLock takeLock = this.takeLock;

takeLock.lockInterruptibly();

try {

while (count.get() == 0) {

notEmpty.await();

}

x = dequeue();

c = count.getAndDecrement();

if (c > 1)

notEmpty.signal();

} finally {

takeLock.unlock();

}

if (c == capacity)

signalNotFull();

return x;

}

public E poll(long timeout, TimeUnit unit) throws InterruptedException {

E x = null;

int c = -1;

long nanos = unit.toNanos(timeout);

final AtomicInteger count = this.count;

final ReentrantLock takeLock = this.takeLock;

takeLock.lockInterruptibly();

try {

while (count.get() == 0) {

if (nanos <= 0)

return null;

nanos = notEmpty.awaitNanos(nanos);

}

x = dequeue();

c = count.getAndDecrement();

if (c > 1)

notEmpty.signal();

} finally {

takeLock.unlock();

}

if (c == capacity)

signalNotFull();

return x;

}

public E poll() {

final AtomicInteger count = this.count;

if (count.get() == 0)

return null;

E x = null;

int c = -1;

final ReentrantLock takeLock = this.takeLock;

takeLock.lock();

try {

if (count.get() > 0) {

x = dequeue();

c = count.getAndDecrement();

if (c > 1)

notEmpty.signal();

}

} finally {

takeLock.unlock();

}

if (c == capacity)

signalNotFull();

return x;

}

public E peek() {

if (count.get() == 0)

return null;

final ReentrantLock takeLock = this.takeLock;

takeLock.lock();

try {

Node<E> first = head.next;

if (first == null)

return null;

else

return first.item;

} finally {

takeLock.unlock();

}

}

/**

* Unlinks interior Node p with predecessor trail.

*/

void unlink(Node<E> p, Node<E> trail) {

// assert isFullyLocked();

// p.next is not changed, to allow iterators that are

// traversing p to maintain their weak-consistency guarantee.

p.item = null;

trail.next = p.next;

if (last == p)

last = trail;

if (count.getAndDecrement() == capacity)

notFull.signal();

}

/**

* Removes a single instance of the specified element from this queue,

* if it is present. More formally, removes an element {@code e} such

* that {@code o.equals(e)}, if this queue contains one or more such

* elements.

* Returns {@code true} if this queue contained the specified element

* (or equivalently, if this queue changed as a result of the call).

*

* @param o element to be removed from this queue, if present

* @return {@code true} if this queue changed as a result of the call

*/

public boolean remove(Object o) {