Compiling Hadoop 3.3.4 on CentOS 7

1. Background

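I have been learning Hadoop recently, and this post is a short record of building Hadoop from source. The reason to rebuild from source is that Hadoop's native libraries have to match the operating system and architecture they run on, and a prebuilt release may not match the local environment.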

2. Building from source

2.1 Download and extract the source

[root@hadoop01 ~]# mkdir /opt/hadoop

[root@hadoop01 ~]# cd /opt/hadoop/

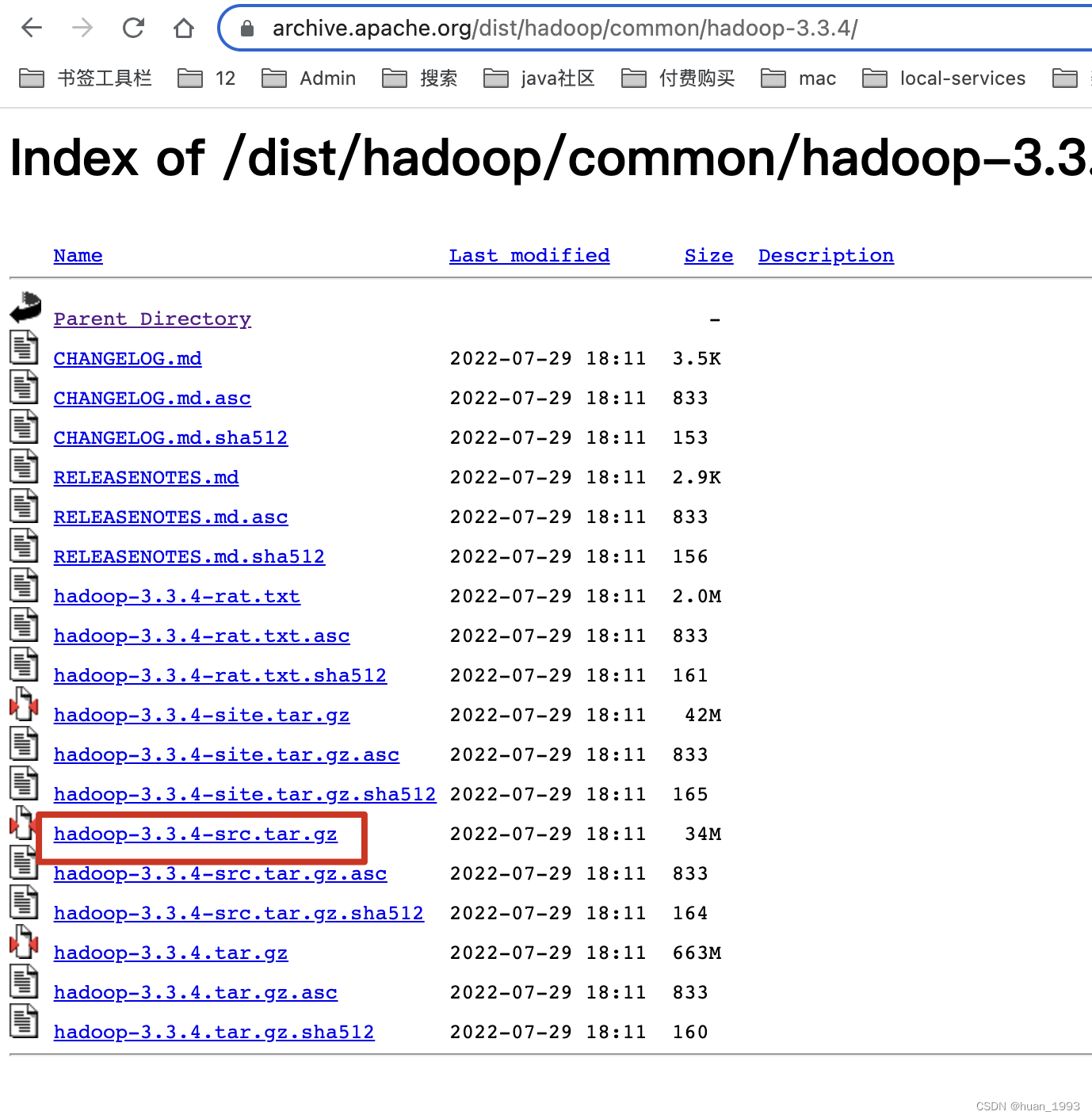

[root@hadoop01 hadoop]# wget https://archive.apache.org/dist/hadoop/common/hadoop-3.3.4/hadoop-3.3.4-src.tar.gz

[root@hadoop01 hadoop]# tar -zxvf hadoop-3.3.4-src.tar.gz

[root@hadoop01 hadoop]# rm -rvf hadoop-3.3.4-src.tar.gz
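Before extracting or deleting the tarball, it can be worth checking that the download is intact. The following is a minimal sketch, not part of the original steps: `verify_sha512` is a hypothetical helper name, and it assumes Apache publishes a `.sha512` file next to the tarball from which the expected digest can be copied.

```shell
# Compare a file's SHA-512 digest with an expected hex string.
# verify_sha512 is a hypothetical helper, not part of Hadoop or coreutils.
verify_sha512() {
  local file="$1" want got
  # Normalise to lower case, since published digests may be upper-case.
  want="$(printf '%s' "$2" | tr 'A-F' 'a-f')"
  got="$(sha512sum "$file" | awk '{print $1}')"
  if [ "$got" = "$want" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    return 1
  fi
}

# Usage (assumed: the digest is copied by hand from the published .sha512 file):
# verify_sha512 hadoop-3.3.4-src.tar.gz "<digest from hadoop-3.3.4-src.tar.gz.sha512>"
```

The function returns a non-zero status on a mismatch, so it can gate the `tar` step with `&&`.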

2.2 Check the environment required to build Hadoop

[root@hadoop01 hadoop]# pwd

/opt/hadoop

[root@hadoop01 hadoop]# cd hadoop-3.3.4-src/

[root@hadoop01 hadoop-3.3.4-src]# cat BUILDING.txt

Build instructions for Hadoop

----------------------------------------------------------------------------------

Requirements:

* Unix System

* JDK 1.8

* Maven 3.3 or later

* Protocol Buffers 3.7.1 (if compiling native code)

* CMake 3.1 or newer (if compiling native code)

* Zlib devel (if compiling native code)

* Cyrus SASL devel (if compiling native code)

* One of the compilers that support thread_local storage: GCC 4.8.1 or later, Visual Studio,

Clang (community version), Clang (version for iOS 9 and later) (if compiling native code)

* openssl devel (if compiling native hadoop-pipes and to get the best HDFS encryption performance)

* Linux FUSE (Filesystem in Userspace) version 2.6 or above (if compiling fuse_dfs)

* Doxygen ( if compiling libhdfspp and generating the documents )

* Internet connection for first build (to fetch all Maven and Hadoop dependencies)

* python (for releasedocs)

* bats (for shell code testing)

* Node.js / bower / Ember-cli (for YARN UI v2 building)

----------------------------------------------------------------------------------

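As the list shows, the build requires JDK 1.8, Maven 3.3 or later, and several other tools, most of which are only needed when compiling native code.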
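The minimum versions in BUILDING.txt can be checked mechanically. The sketch below is an illustration only: `version_ge` is a hypothetical helper that relies on GNU `sort -V`, and the tool list is just a subset of the requirements above.

```shell
# True when version $1 is greater than or equal to version $2.
# Hypothetical helper; relies on GNU sort -V for version ordering.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Report which of the main build tools are already on PATH.
for tool in java mvn cmake protoc gcc; do
  if command -v "$tool" >/dev/null 2>&1; then
    echo "found:   $tool"
  else
    echo "missing: $tool"
  fi
done

# Example comparisons against the minimums listed in BUILDING.txt.
version_ge 3.9.0 3.3 && echo "Maven 3.9.0 satisfies >= 3.3"
version_ge 3.25.2 3.1 && echo "CMake 3.25.2 satisfies >= 3.1"
```

Running this before and after the installation steps gives a quick view of what is still missing.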

2.3 Install the JDK

Pay attention to the JDK version; see this document: https://cwiki.apache.org/confluence/display/HADOOP/Hadoop+Java+Versions
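This section does not list any commands, so here is a hedged sketch of what the JDK setup might look like. The path `/usr/local/jdk8` is an assumption inferred from the `mvn -version` output in section 2.4, and `java_major` is a hypothetical helper for reading the major version.

```shell
# Assumed JDK location (inferred from the mvn -version output in section 2.4).
export JAVA_HOME=/usr/local/jdk8
export PATH="${JAVA_HOME}/bin:${PATH}"

# Map a version string such as "1.8.0_333" or "11.0.18" to its major number.
# Hypothetical helper for illustration.
java_major() {
  case "$1" in
    1.*) echo "$1" | cut -d. -f2 ;;
    *)   echo "$1" | cut -d. -f1 ;;
  esac
}

if command -v java >/dev/null 2>&1; then
  # java -version writes to stderr; the version is the first quoted string.
  ver="$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
  echo "Java major version: $(java_major "$ver")"
else
  echo "java not found on PATH"
fi
```

For a permanent setup, the two `export` lines would go into `/etc/profile`, in the same way as the Maven variables in the next section.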

2.4 Install Maven

[root@hadoop01 hadoop]# wget https://dlcdn.apache.org/maven/maven-3/3.9.0/binaries/apache-maven-3.9.0-bin.tar.gz

[root@hadoop01 hadoop]# tar -zxvf apache-maven-3.9.0-bin.tar.gz -C /usr/local

# Edit the environment variables

[root@hadoop01 hadoop]# vim /etc/profile

# Configure Maven

export M2_HOME=/usr/local/apache-maven-3.9.0

export PATH=${M2_HOME}/bin:$PATH

[root@hadoop01 hadoop]# source /etc/profile

# Check the Maven version

[root@hadoop01 apache-maven-3.9.0]# mvn -version

Apache Maven 3.9.0 (9b58d2bad23a66be161c4664ef21ce219c2c8584)

Maven home: /usr/local/apache-maven-3.9.0

Java version: 1.8.0_333, vendor: Oracle Corporation, runtime: /usr/local/jdk8/jre

Default locale: zh_CN, platform encoding: UTF-8

OS name: "linux", version: "5.11.12-300.el7.aarch64", arch: "aarch64", family: "unix"

# Configure the Aliyun mirror to speed up dependency downloads

[root@hadoop01 hadoop]# vim /usr/local/apache-maven-3.9.0/conf/settings.xml

<mirrors>

<mirror>

<id>alimaven</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

</mirrors>
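The `<mirrors>` fragment above has to sit inside the `<settings>` root element. As an alternative to editing the global file, the same mirror can be placed in a per-user settings file. The sketch below is one way to do that: `write_mirror_settings` is a hypothetical helper, and the mirror URL is copied unchanged from the fragment above.

```shell
# Write a complete settings.xml containing only the Aliyun mirror.
# Hypothetical helper; it overwrites the destination file.
write_mirror_settings() {
  # $1: destination path, for example "$HOME/.m2/settings.xml"
  mkdir -p "$(dirname "$1")"
  cat > "$1" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
  <mirrors>
    <mirror>
      <id>alimaven</id>
      <name>aliyun maven</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
      <mirrorOf>central</mirrorOf>
    </mirror>
  </mirrors>
</settings>
EOF
}

# Usage (back up any existing file first, since it will be overwritten):
# write_mirror_settings "$HOME/.m2/settings.xml"
```

After changing the settings, `mvn help:effective-settings` shows what Maven actually resolves, which is a convenient way to confirm the mirror is picked up.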

2.5 Install the build dependencies

[root@hadoop01 hadoop]# yum install gcc gcc-c++ make autoconf automake libtool curl lzo-devel zlib-devel openssl openssl-devel ncurses-devel snappy snappy-devel bzip2 bzip2-devel lzo lzo-devel lzop libXtst zlib doxygen cyrus-sasl* saslwrapper-devel* -y

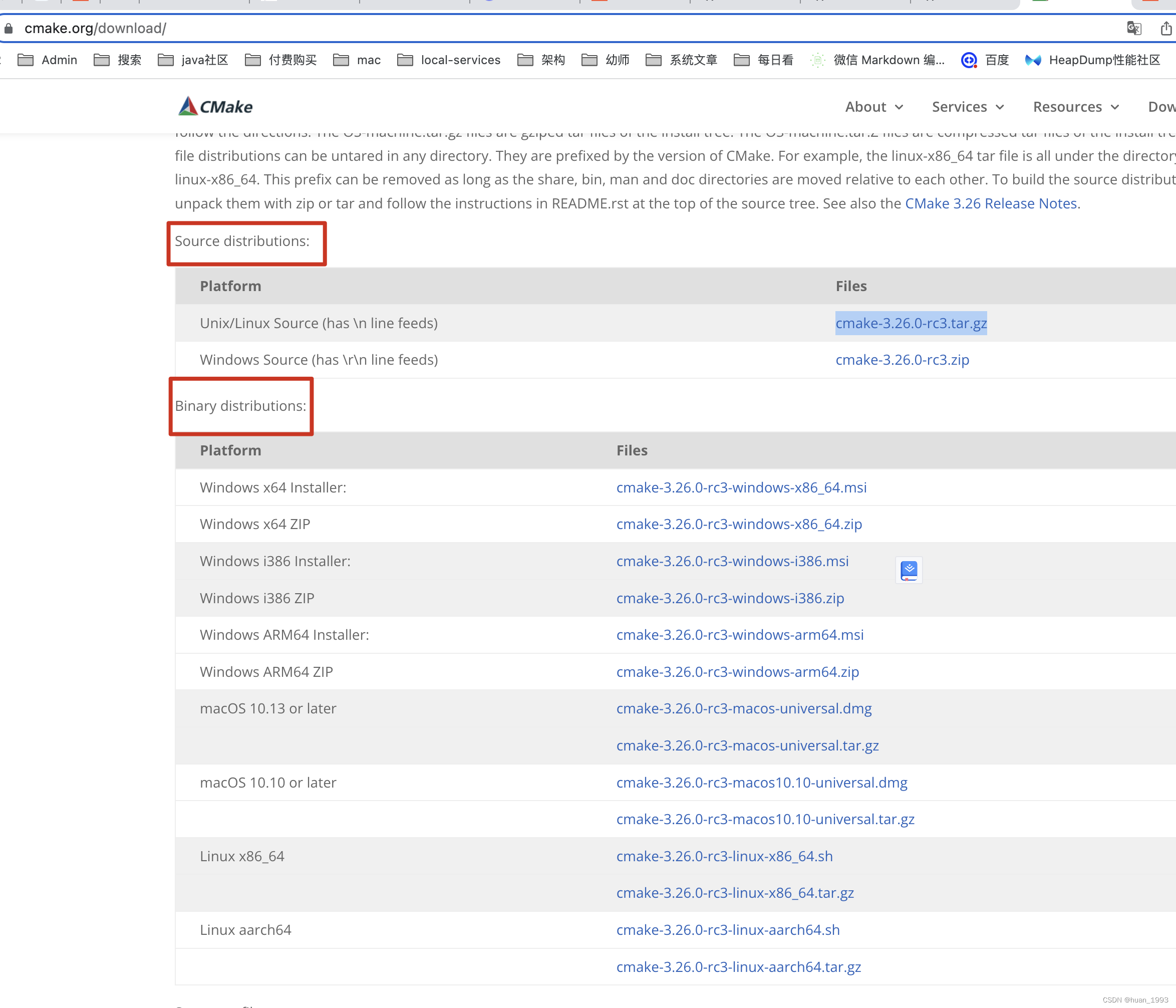

2.6 Install CMake

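BUILDING.txt asks for "CMake 3.1 or newer (if compiling native code)", so the CMake version must be 3.1 or later. CMake is only needed for the native build; since section 2.9 builds with the native profile, it is installed here.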

# Remove the existing CMake

[root@hadoop01 hadoop]# yum erase cmake

# Download CMake (choose the download that matches your own operating system)

[root@hadoop01 hadoop]# wget https://github.com/Kitware/CMake/releases/download/v3.25.2/cmake-3.25.2.tar.gz

[root@hadoop01 hadoop]# tar -zxvf cmake-3.25.2.tar.gz

# Build and install CMake

[root@hadoop01 hadoop]# cd cmake-3.25.2/ && ./configure && make && make install

# Check the CMake version

[root@hadoop01 cmake-3.25.2]# cmake -version

cmake version 3.25.2

CMake suite maintained and supported by Kitware (kitware.com/cmake).

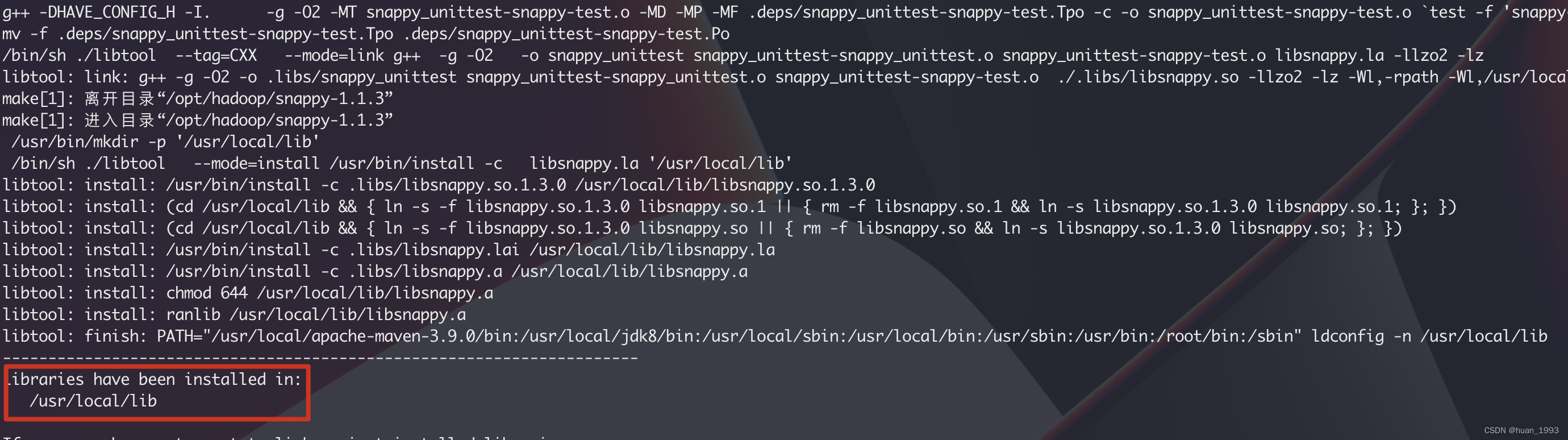
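Building CMake from source with a plain `make` can take a while on a single core. A small sketch of running the same steps in parallel follows; `build_jobs` is a hypothetical helper, and the printed command is only a suggestion, not what the original steps ran.

```shell
# Number of parallel jobs for make; falls back to 1 when nproc is unavailable.
# Hypothetical helper for illustration.
build_jobs() {
  nproc 2>/dev/null || echo 1
}

# Print the command rather than running it, so the sketch has no side effects.
echo "suggested: ./configure && make -j$(build_jobs) && make install"
```

The same `-j` value can be reused for the Snappy and Protocol Buffers builds in the following sections.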

2.7 Install Snappy

* Snappy compression (only used for hadoop-mapreduce-client-nativetask)

# Remove any previously installed Snappy libraries

[root@hadoop01 hadoop]# rm -rf /usr/local/lib/libsnappy* && rm -rf /lib64/libsnappy*

[root@hadoop01 hadoop]# wget https://src.fedoraproject.org/repo/pkgs/snappy/snappy-1.1.3.tar.gz/7358c82f133dc77798e4c2062a749b73/snappy-1.1.3.tar.gz

[root@hadoop01 snappy]# tar -zxvf snappy-1.1.3.tar.gz

[root@hadoop01 snappy]# cd snappy-1.1.3/ && ./configure && make && make install


2.8 Install Protocol Buffers

BUILDING.txt asks for "Protocol Buffers 3.7.1 (if compiling native code)", so version 3.7.1 is installed here.

[root@hadoop01 hadoop]# wget https://github.com/protocolbuffers/protobuf/releases/download/v3.7.1/protobuf-java-3.7.1.tar.gz

[root@hadoop01 hadoop]# tar -zxvf protobuf-java-3.7.1.tar.gz

# Build and install

[root@hadoop01 hadoop]# cd protobuf-3.7.1/ && ./autogen.sh && ./configure && make && make install

# Verify the installation

[root@hadoop01 protobuf-3.7.1]# protoc --version

libprotoc 3.7.1

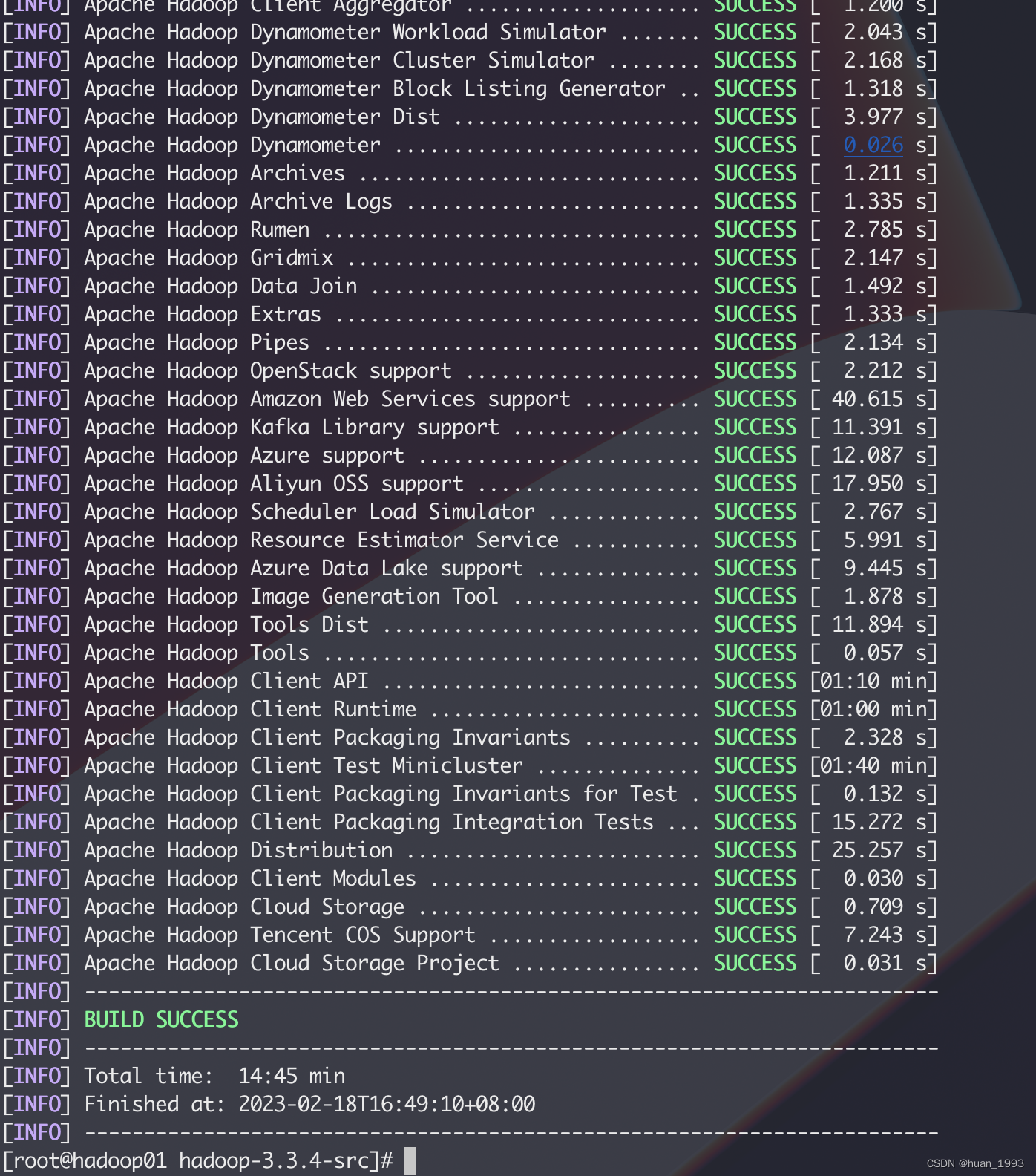
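If `protoc --version` fails with a "cannot open shared object file" style error instead of printing the version, one common cause is that `/usr/local/lib`, where `make install` places the libraries by default, is not in the dynamic linker cache. The sketch below only reports the situation; `lib_in_cache` is a hypothetical helper, and the suggested fix is an assumption about a typical CentOS setup rather than a step from the original post.

```shell
# Succeeds when a library name appears in `ldconfig -p` style output on stdin.
# Hypothetical helper for illustration.
lib_in_cache() {
  # $1: library name without suffix, for example libprotoc
  grep -q "^[[:space:]]*$1\.so"
}

if command -v ldconfig >/dev/null 2>&1; then
  if ldconfig -p | lib_in_cache libprotoc; then
    echo "libprotoc is in the linker cache"
  else
    echo "libprotoc is not in the linker cache"
    echo "one option: add /usr/local/lib to a file under /etc/ld.so.conf.d/ and run ldconfig"
  fi
fi
```

The same check applies to `libsnappy` from the previous section.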

2.9 Build Hadoop

[root@hadoop01 hadoop-3.3.4-src]# pwd

/opt/hadoop/hadoop-3.3.4-src

[root@hadoop01 hadoop-3.3.4-src]# export MAVEN_OPTS="-Xms3072m -Xmx3072m" && mvn clean package -Pdist,native -DskipTests -Dtar -Dbundle.snappy -Dsnappy.lib=/usr/local/lib -e

The mvn command used here can also be found in the BUILDING.txt file.
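The full build runs for a long time and prints a lot of output, so it can help to keep a log and check its final status. A minimal sketch follows: `build_status` and the log file name `build.log` are hypothetical, while the `BUILD SUCCESS` and `BUILD FAILURE` markers are what Maven prints at the end of a run.

```shell
# Print SUCCESS, FAILURE, or UNKNOWN based on the status line in a Maven log.
# Hypothetical helper for illustration.
build_status() {
  if grep -q 'BUILD SUCCESS' "$1"; then
    echo SUCCESS
  elif grep -q 'BUILD FAILURE' "$1"; then
    echo FAILURE
  else
    echo UNKNOWN
  fi
}

# Usage (the mvn command is the one from this section, with output saved to a log):
# mvn clean package -Pdist,native -DskipTests -Dtar -Dbundle.snappy \
#     -Dsnappy.lib=/usr/local/lib -e 2>&1 | tee build.log
# build_status build.log
```

When a build fails partway through, Maven usually prints a hint for resuming with `-rf :<module>`, which avoids rebuilding the modules that already succeeded.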

2.10 Location of the built package

hadoop-3.3.4-src/hadoop-dist/target/hadoop-3.3.4.tar.gz

2.11 Check the native libraries

[root@hadoop01 hadoop]# tar -zxvf hadoop-3.3.4.tar.gz

[root@hadoop01 hadoop]# cd hadoop-3.3.4/bin

[root@hadoop01 bin]# ./hadoop checknative -a

2023-02-18 16:58:39,698 INFO bzip2.Bzip2Factory: Successfully loaded & initialized native-bzip2 library system-native

2023-02-18 16:58:39,700 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library

2023-02-18 16:58:39,700 WARN erasurecode.ErasureCodeNative: ISA-L support is not available in your platform... using builtin-java codec where applicable

2023-02-18 16:58:39,760 INFO nativeio.NativeIO: The native code was built without PMDK support.

Native library checking:

hadoop: true /opt/hadoop/hadoop-3.3.4/lib/native/libhadoop.so.1.0.0

zlib: true /lib64/libz.so.1

zstd : false

bzip2: true /lib64/libbz2.so.1

openssl: true /lib64/libcrypto.so

ISA-L: false libhadoop was built without ISA-L support

PMDK: false The native code was built without PMDK support.

2023-02-18 16:58:39,764 INFO util.ExitUtil: Exiting with status 1: ExitException

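Several entries above still show false (zstd, ISA-L, and PMDK), but this does not prevent Hadoop from being used. To resolve them, install the corresponding dependencies and then rebuild Hadoop.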
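To see at a glance which libraries still need attention, the `checknative` output can be filtered. This is a small sketch, with `missing_native` as a hypothetical helper name; the sample lines are copied from the output above.

```shell
# Print the names of native libraries that checknative reports as false.
# Hypothetical helper; reads checknative output on stdin and ignores log lines.
missing_native() {
  sed -n 's/^[[:space:]]*\([A-Za-z0-9-]*\)[[:space:]]*:[[:space:]]*false.*/\1/p'
}

# Demo with lines taken from the output in this section.
missing_native <<'EOF'
hadoop: true /opt/hadoop/hadoop-3.3.4/lib/native/libhadoop.so.1.0.0
zlib: true /lib64/libz.so.1
zstd : false
bzip2: true /lib64/libbz2.so.1
openssl: true /lib64/libcrypto.so
ISA-L: false libhadoop was built without ISA-L support
PMDK: false The native code was built without PMDK support.
EOF
# prints: zstd, ISA-L, PMDK (one per line)

# Usage against a real installation:
# ./hadoop checknative -a 2>&1 | missing_native
```

Note that, as the last log line above shows, `checknative -a` exits with status 1 when any library is unavailable, so a script should not treat that exit code alone as a broken installation.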

3. References

1. https://www.vvave.net/archives/how-to-build-hadoop-334-native-libraries-full-kit-on-amd64.html

2. https://cwiki.apache.org/confluence/display/HADOOP/Hadoop+Java+Versions

This article is from cnblogs (博客园), author: huan1993. When reposting, please cite the original link: https://www.cnblogs.com/huan1993/p/17136933.html