ELK Log Analysis System

I. Introduction to ELK

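ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. A fourth component, Beats, has since been added. Beats is a lightweight log collection agent that uses few resources, which makes it well suited to gathering logs on each server and forwarding them to Logstash; it is also the tool officially recommended for this job. Because Beats joined the original ELK Stack, the stack has been renamed the Elastic Stack.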

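Elasticsearch is an open-source distributed search engine that provides three main functions: collecting, analyzing, and storing data. Its features include distributed operation, zero configuration, automatic discovery, automatic index sharding, index replicas, a RESTful interface, multiple data sources, and automatic search load balancing.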

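Logstash is a tool for collecting, parsing, and filtering logs, and it supports a large number of input methods. It usually runs in a client/server architecture: the client is installed on each host whose logs are to be collected, and the server filters and modifies the logs received from every node and then forwards them to Elasticsearch.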

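Kibana is also a free, open-source tool. It provides a friendly web interface for analyzing the logs handled by Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.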

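Beats serves here as a lightweight log shipper; the Beats family actually has six members. Early ELK architectures used Logstash to collect and parse logs, but Logstash consumes a relatively large amount of memory, CPU, and I/O. Compared with Logstash, the CPU and memory used by Beats are almost negligible.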

II. Basic Environment Setup

1. Virtual machines

The plan uses three nodes: one master node and two data nodes:

| Node IP | Node role | Hostname |
|---|---|---|
| 192.168.100.11 | Elasticsearch + Kibana (master) | elk_1 |
| 192.168.100.12 | Elasticsearch + Logstash (data) | elk_2 |
| 192.168.100.13 | Elasticsearch (data) | elk_3 |

2. Set the hostnames

On the elk_1 node:

[root@localhost ~]# hostnamectl set-hostname elk_1

[root@localhost ~]# bash

[root@elk_1 ~]#

On the elk_2 node:

[root@localhost ~]# hostnamectl set-hostname elk_2

[root@localhost ~]# bash

[root@elk_2 ~]#

On the elk_3 node:

[root@localhost ~]# hostnamectl set-hostname elk_3

[root@localhost ~]# bash

[root@elk_3 ~]#

3. Configure the hosts file

On the elk_1 node:

[root@elk_1 ~]# vi /etc/hosts

[root@elk_1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.11 elk-1

192.168.100.12 elk-2

192.168.100.13 elk-3

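Copy the hosts file from elk_1 to elk_2. The hosts file defines the names elk-1, elk-2, and elk-3, so the hyphenated names are the ones that resolve:

[root@elk_1 ~]# scp /etc/hosts elk-2:/etc/hosts

root@elk-2's password:******

Do the same for elk_3:

[root@elk_1 ~]# scp /etc/hosts elk-3:/etc/hosts

root@elk-3's password:******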
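Before copying the file around, it can be worth confirming that every node name really is present in it. The following is a minimal sketch rather than part of the original procedure; the function name `hosts_has_names` is hypothetical.

```shell
#!/bin/sh
# hosts_has_names FILE NAME...
# Succeeds only if every NAME appears as a hostname or alias in FILE
# (hosts format: IP address first, then one or more names).
hosts_has_names() {
    file=$1
    shift
    for name in "$@"; do
        # Skip comment lines, then look for NAME in any field after the IP.
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit(found ? 0 : 1) }
        ' "$file" || { echo "missing: $name" >&2; return 1; }
    done
}
```

For example, `hosts_has_names /etc/hosts elk-1 elk-2 elk-3` should succeed on a node that received the file above, while a name that is spelled differently from the entries in the file (such as one with an underscore) is reported as missing.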

4. Install packages with yum

ELK requires JDK 1.8 or later. We use OpenJDK 1.8 and install it on all three nodes (elk_1 is shown as the example).

On the elk_1 node:

[root@elk_1 ~]# yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel vim net-tools wget

[root@elk_1 ~]# java -version

openjdk version "1.8.0_242"

OpenJDK Runtime Environment (build 1.8.0_242-b08)

OpenJDK 64-Bit Server VM (build 25.242-b08, mixed mode)

The JDK must also be installed on elk_2 and elk_3.
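Since the same check has to be repeated on three nodes, a small helper can make it scriptable. This is a sketch under the assumption that the version banner has the usual `version "..."` form shown above; the function name `java_is_8_or_newer` is hypothetical.

```shell
#!/bin/sh
# java_is_8_or_newer: reads the text of `java -version` on stdin and
# succeeds if the reported version is 1.8 / 8 or newer.
java_is_8_or_newer() {
    # Pull out the quoted version string, e.g. 1.8.0_242 or 11.0.2
    ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
    [ -n "$ver" ] || return 2          # no version banner found
    major=${ver%%.*}
    if [ "$major" = "1" ]; then
        # Legacy numbering: 1.8.0_242 means Java 8
        rest=${ver#1.}
        major=${rest%%.*}
    fi
    [ "$major" -ge 8 ]
}
```

Note that `java -version` writes to standard error, so a typical call is `java -version 2>&1 | java_is_8_or_newer && echo "JDK OK"`.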

III. Deploy Elasticsearch

1. Download Elasticsearch

Download the elasticsearch-6.0.0.rpm package:

[root@elk_1 ~]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.0.0.rpm

[root@elk_1 ~]# ls

anaconda-ks.cfg elasticsearch-6.0.0.rpm

Copy the elasticsearch-6.0.0.rpm package from elk_1 to elk_2 (using the name elk-2 defined in /etc/hosts):

[root@elk_1 ~]# scp elasticsearch-6.0.0.rpm elk-2:/root

root@elk-2's password:******

Repeat the same step to copy the package to elk_3.

2. Install Elasticsearch

Install the package on all three nodes; elk_2 and elk_3 need it as well.

[root@elk_1 ~]# rpm -ivh elasticsearch-6.0.0.rpm

// Option meanings: i installs the package, v prints verbose output, h prints hash marks as a progress indicator

warning: elasticsearch-6.0.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Updating / installing...

1:elasticsearch-0:6.0.0-1 ################################# [100%]

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

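3. Configure Elasticsearch

Edit the Elasticsearch configuration file, /etc/elasticsearch/elasticsearch.yml.

On the elk_1 node, set the uncommented options shown below. Lines beginning with // are explanations and must not be copied into the file; settings that are not used here have been omitted. Pay attention to the IP address.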

[root@elk_1 ~]# vi /etc/elasticsearch/elasticsearch.yml

# ======= Elasticsearch Configuration ===========

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ------------------Cluster --------------------

# Use a descriptive name for your cluster:

cluster.name: ELK

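// The cluster name; the default is elasticsearch. A node only joins a cluster with the same name, so if several clusters run on the same network segment, this setting keeps them apart.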

# ------------------------Node -----------------

# Use a descriptive name for the node:

node.name: elk-1

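// The node name. If it is not set, Elasticsearch 6.x uses the first seven characters of the node's randomly generated ID (older releases picked a random name from a built-in list instead).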

node.master: true

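// Whether this node is eligible to be elected master; the default is true. By default the first node in the cluster becomes master, and if it fails a new master is elected. Set this to false on the other two nodes.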

node.data: false

// Whether this node stores index data; the default is true. Set this to true on the other two nodes.

# ----------------- Paths ----------------

# Path to directory where to store the data (separate multiple locations by comma):

path.data: /var/lib/elasticsearch

// Where index data is stored (keep the default value; no change is needed)

# Path to log files:

path.logs: /var/log/elasticsearch

// Where log files are stored; if unset, the default is the logs folder under the Elasticsearch home directory

# --------------- Network ------------------

# Set the bind address to a specific IP (IPv4 or IPv6):

network.host: 192.168.100.11

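// The IP address to bind to, either IPv4 or IPv6. If this is not set, Elasticsearch binds only to loopback addresses, so other nodes cannot reach it.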

# Set a custom port for HTTP:

http.port: 9200

// The HTTP port on which Elasticsearch accepts external requests; the default is 9200

# For more information, consult the network module documentation.

# --------------------Discovery ----------------

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#discovery.zen.ping.unicast.hosts: ["host1", "host2"]

discovery.zen.ping.unicast.hosts: ["elk-1","elk-2","elk-3"]

// The initial list of hosts in the cluster; these hosts are contacted to discover nodes that newly join the cluster.

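The file can be copied to elk_2 and elk_3 with scp as shown earlier, but node.name, node.master, node.data, and network.host must then be changed on each node, because elk_1 is the master node and its configuration differs from that of elk_2 and elk_3.

For example, on elk_2: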

[root@elk_2 ~]# vi /etc/elasticsearch/elasticsearch.yml

cluster.name: ELK

node.name: elk-2

node.master: false

node.data: true

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 192.168.100.12

http.port: 9200

discovery.zen.ping.unicast.hosts: ["elk-1","elk-2","elk-3"]
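Because the three files differ only in four values, they can be rendered from one template instead of being edited by hand. The sketch below is one possible way to do that and is not part of the original procedure; the function name `render_es_config` and its argument order are hypothetical, while every setting is taken from the configuration shown above.

```shell
#!/bin/sh
# render_es_config NODE_NAME NODE_IP IS_MASTER IS_DATA
# Prints an elasticsearch.yml for one node of the ELK cluster to stdout.
render_es_config() {
    cat <<EOF
cluster.name: ELK
node.name: $1
node.master: $3
node.data: $4
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: $2
http.port: 9200
discovery.zen.ping.unicast.hosts: ["elk-1","elk-2","elk-3"]
EOF
}
```

For instance, `render_es_config elk-3 192.168.100.13 false true` prints the settings for the third node; redirect the output to a scratch file and review it before replacing /etc/elasticsearch/elasticsearch.yml.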

4. Start the service

Start the Elasticsearch service, then use ps to confirm that the process exists or netstat to confirm that the ports are listening.

[root@elk_1 ~]# systemctl start elasticsearch

[root@elk_1 ~]# ps -ef |grep elasticsearch

elastic+ 19280 1 0 09:00 ? 00:00:54 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -server -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/var/lib/elasticsearch -Des.path.home=/usr/share/elasticsearch -Des.path.conf=/etc/elasticsearch -cp /usr/share/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -p /var/run/elasticsearch/elasticsearch.pid --quiet

root 19844 19230 0 10:54 pts/0 00:00:00 grep --color=auto elasticsearch

[root@elk_1 ~]# netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1446/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1994/master

tcp6 0 0 192.168.100.11:9200 :::* LISTEN 19280/java

tcp6 0 0 192.168.100.11:9300 :::* LISTEN 19280/java

tcp6 0 0 :::22 :::* LISTEN 1446/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1994/master

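If these ports (9200 and 9300) or the process are present, Elasticsearch has started successfully.

The service must also be started on elk_2 and elk_3.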
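The port check can be automated when it has to be repeated on each node. The following sketch assumes the column layout of `netstat -lntp` shown above (local address in the fourth column, state in the sixth); the function name `es_ports_listening` is hypothetical.

```shell
#!/bin/sh
# es_ports_listening: reads `netstat -lntp` output on stdin and succeeds
# only if both the HTTP port (9200) and the transport port (9300) are
# in the LISTEN state.
es_ports_listening() {
    awk '
        $6 == "LISTEN" {
            if ($4 ~ /:9200$/) http = 1
            if ($4 ~ /:9300$/) transport = 1
        }
        END { exit((http && transport) ? 0 : 1) }
    '
}
```

A typical call would be `netstat -lntp | es_ports_listening && echo "Elasticsearch is listening"`.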

5. Check the cluster status

Use curl to check the state of the cluster.

On the elk_1 node:

[root@elk_1 ~]# curl '192.168.100.11:9200/_cluster/health?pretty'

{

"cluster_name" : "ELK",

"status" : "green",

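// green means the cluster is healthy; yellow means some replica shards are unassigned, and red means some primary shards are unassigned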

"timed_out" : false,

// Whether the request timed out

"number_of_nodes" : 3,

// Number of nodes in the cluster

"number_of_data_nodes" : 2,

// Number of data nodes in the cluster

"active_primary_shards" : 1,

"active_shards" : 2,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
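For use in scripts, the status field can be extracted from the health response without reading the whole document. This is a minimal sketch that relies only on the `"status"` field shown above and uses sed rather than a JSON parser; the function names `cluster_status` and `cluster_is_green` are hypothetical.

```shell
#!/bin/sh
# cluster_status: reads a _cluster/health JSON document on stdin and
# prints the value of its "status" field (green, yellow or red).
cluster_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# cluster_is_green: succeeds only when the status read from stdin is green.
cluster_is_green() {
    [ "$(cluster_status)" = "green" ]
}
```

For example, `curl -s '192.168.100.11:9200/_cluster/health' | cluster_is_green || echo "cluster needs attention"`.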

IV. Deploy Kibana

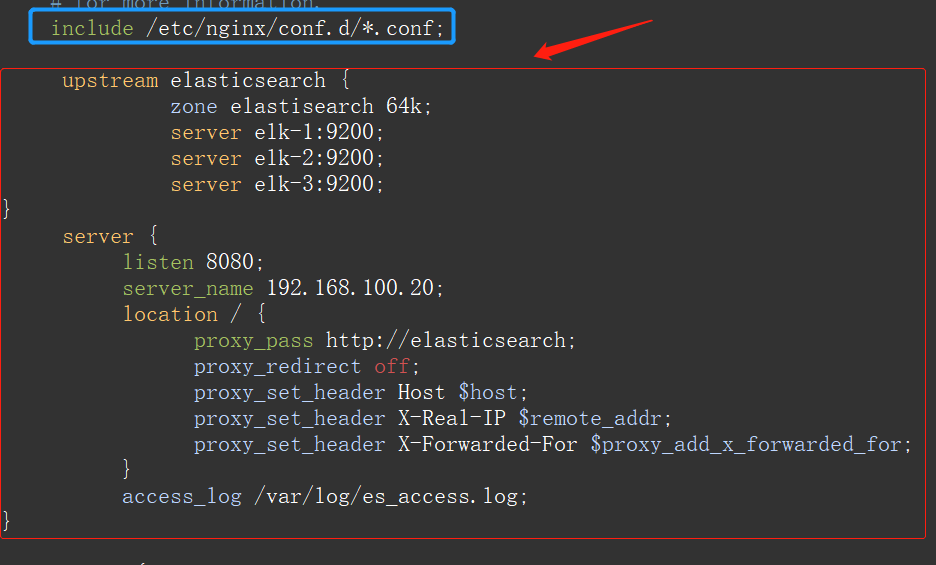

Install and deploy nginx to load-balance requests across the Elasticsearch nodes:

[root@elk-1 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@elk-1 ~]# yum install nginx -y

[root@elk-1 ~]# systemctl start nginx

[root@elk-1 ~]# vim /etc/nginx/nginx.conf

upstream elasticsearch {

zone elasticsearch 64K;

server elk-1:9200;

server elk-2:9200;

server elk-3:9200;

}

server {

listen 8080;

server_name 192.168.100.11;

location / {

proxy_pass http://elasticsearch;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

access_log /var/log/es_access.log;

}

1. Download Kibana

Kibana only needs to be installed on the master node.

[root@elk_1 ~]# wget https://artifacts.elastic.co/downloads/kibana/kibana-6.0.0-x86_64.rpm

2. Install Kibana

[root@elk_1 ~]# rpm -ivh kibana-6.0.0-x86_64.rpm

warning: kibana-6.0.0-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:kibana-6.0.0-1 ################################# [100%]

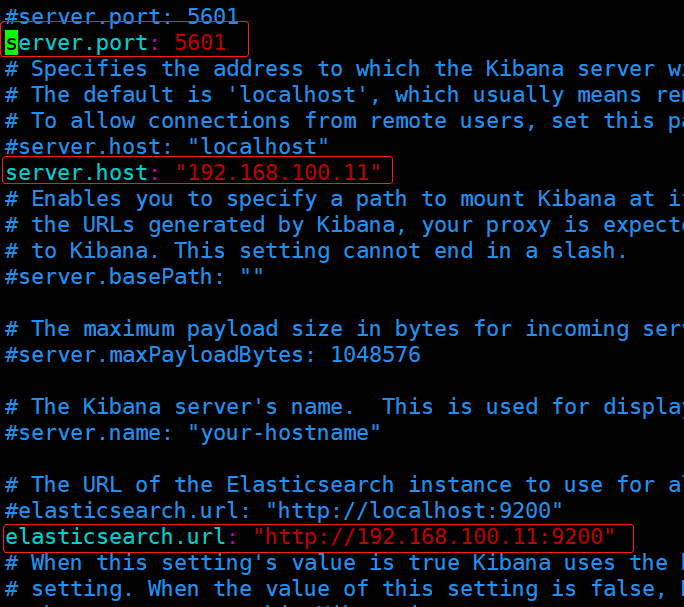

3. Configure Kibana

[root@elk_1 ~]# vim /etc/kibana/kibana.yml

server.port: 5601

server.host: "192.168.100.11"

elasticsearch.url: "http://192.168.100.11:9200"

Set each of the options above at its corresponding position in the configuration file.

4. Start Kibana

[root@elk_1 ~]# systemctl start kibana

[root@elk_1 ~]# ps -ef |grep kibana

kibana 19958 1 41 11:26 ? 00:00:03 /usr/share/kibana/bin/../node/bin/node --no-warnings /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.yml

root 19970 19230 0 11:26 pts/0 00:00:00 grep --color=auto kibana

[root@elk-1 ~]# netstat -lntp |grep node

tcp 0 0 192.168.100.11:5601 0.0.0.0:* LISTEN 19958/node

Once Kibana has started, open http://192.168.100.11:5601 in a browser; the Kibana page should load.

V. Deploy Logstash

1. Download Logstash

[root@elk_2 ~]# wget https://artifacts.elastic.co/downloads/logstash/logstash-6.0.0.rpm

2. Install Logstash

[root@elk_2 ~]# rpm -ivh logstash-6.0.0.rpm

warning: logstash-6.0.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:logstash-1:6.0.0-1 ################################# [100%]

Using provided startup.options file: /etc/logstash/startup.options

3. Configure Logstash

Edit /etc/logstash/logstash.yml and add the following setting:

[root@elk_2 ~]# vim /etc/logstash/logstash.yml

http.host: "192.168.100.12"

Configure Logstash to collect the system log:

[root@elk_2 ~]# vim /etc/logstash/conf.d/syslog.conf

input {

file {

path => "/var/log/messages"

type => "systemlog"

start_position => "beginning"

stat_interval => "3"

}

}

output {

elasticsearch {

hosts => ["192.168.100.11:9200","192.168.100.12:9200","192.168.100.13:9200"]

index => "system-log-%{+YYYY.MM.dd}"

}

}

Check the configuration file for errors:

[root@elk_2 ~]# ln -s /usr/share/logstash/bin/logstash /usr/bin

// Create a symbolic link so that the logstash command is on the PATH

[root@elk_2 ~]# logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

Configuration OK

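// "Configuration OK" means the file has no errors

Option meanings:

1. --path.settings : the directory that contains the Logstash settings files

2. -f : the path of the pipeline configuration file to check

3. --config.test_and_exit : exit after checking the configuration; without it Logstash would start running immediately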
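When conf.d holds more than one pipeline file, the same check can be run over all of them. The following is a sketch only; the function name `check_logstash_configs` and the `LOGSTASH_BIN` override variable are hypothetical, while the flags are the ones explained above.

```shell
#!/bin/sh
# check_logstash_configs [DIR]
# Runs the config test on every *.conf file in DIR (default:
# /etc/logstash/conf.d) and stops at the first file that fails.
# LOGSTASH_BIN may point at a different logstash executable.
check_logstash_configs() {
    dir=${1:-/etc/logstash/conf.d}
    bin=${LOGSTASH_BIN:-logstash}
    for f in "$dir"/*.conf; do
        [ -e "$f" ] || continue        # the glob matched nothing
        echo "checking $f"
        "$bin" --path.settings /etc/logstash/ -f "$f" --config.test_and_exit || return 1
    done
}
```

Each check starts a JVM, so expect the loop to take some time per file.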

4. Set permissions

[root@elk_2 ~]# chmod 755 /var/log/messages

[root@elk_2 ~]# chown -R logstash /var/lib/logstash/

5. Start Logstash

Once the configuration file has passed the check, start the Logstash service:

[root@elk_2 ~]# systemctl start logstash

Use ps to check the process:

[root@elk_2 ~]# ps -ef |grep logstash

logstash 21835 1 12 16:45 ? 00:03:01 /bin/java -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+DisableExplicitGC -Djava.awt.headless=true -Dfile.encoding=UTF-8 -XX:+HeapDumpOnOutOfMemoryError -Xmx1g -Xms256m -Xss2048k -Djffi.boot.library.path=/usr/share/logstash/vendor/jruby/lib/jni -Xbootclasspath/a:/usr/share/logstash/vendor/jruby/lib/jruby.jar -classpath : -Djruby.home=/usr/share/logstash/vendor/jruby -Djruby.lib=/usr/share/logstash/vendor/jruby/lib -Djruby.script=jruby -Djruby.shell=/bin/sh org.jruby.Main /usr/share/logstash/lib/bootstrap/environment.rb logstash/runner.rb --path.settings /etc/logstash

root 21957 20367 0 17:10 pts/2 00:00:00 grep --color=auto logstash

Use netstat to check the ports the process is listening on:

[root@elk_2 ~]# netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 957/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1152/master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 7390/nginx: master

tcp6 0 0 :::10514 :::* LISTEN 7403/java

tcp6 0 0 192.168.100.12:9300 :::* LISTEN 6418/java

tcp6 0 0 :::22 :::* LISTEN 957/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1152/master

tcp6 0 0 192.168.100.12:9600 :::* LISTEN 7403/java

tcp6 0 0 192.168.100.12:9200 :::* LISTEN 6418/java

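The port to look for is 9600.

If something goes wrong:

(1) After the service starts, the process exists but the port is not listening. First check the log:

[root@elk_2 ~]# cat /var/log/logstash/logstash-plain.log | grep que

(2) The log shows a permissions problem. Because Logstash was started earlier from the terminal as root, the files it created are owned by user root and group root: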

[root@elk_2 ~]# ll /var/lib/logstash/

total 4

drwxr-xr-x. 2 root root 6 Dec 6 15:45 dead_letter_queue

drwxr-xr-x. 2 root root 6 Dec 6 15:45 queue

-rw-r--r--. 1 root root 36 Dec 6 15:45 uuid

(3) Change the owner of the /var/lib/logstash/ directory to logstash and restart the service:

[root@elk_2 ~]# chown -R logstash /var/lib/logstash/

[root@elk_2 ~]# systemctl restart logstash

// The problem should be resolved once the service has restarted
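To confirm that no root-owned files remain after the chown, the directory can be searched for entries that belong to anyone else. This is a small sketch; the function name `not_owned_by` is hypothetical.

```shell
#!/bin/sh
# not_owned_by USER DIR
# Prints every file or directory under DIR whose owner is not USER.
# Empty output means the ownership is consistent.
not_owned_by() {
    user=$1
    dir=$2
    find "$dir" ! -user "$user" -print
}
```

For example, `not_owned_by logstash /var/lib/logstash/` should print nothing once the ownership has been fixed.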

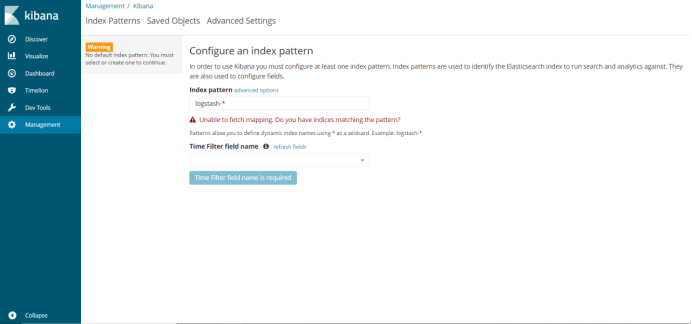

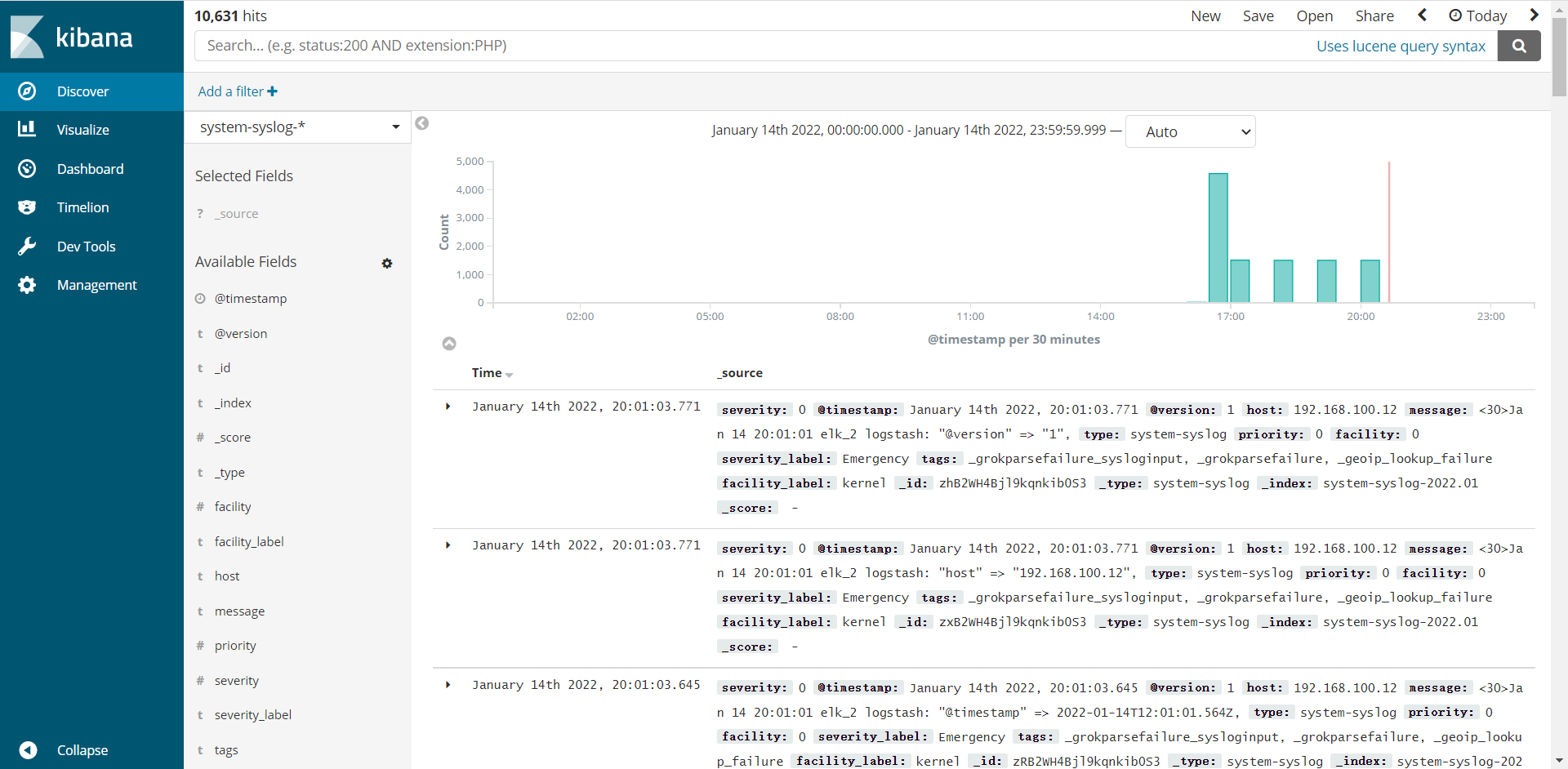

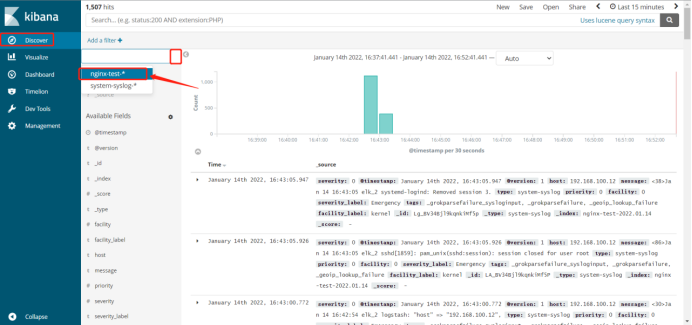

6. View the logs in Kibana

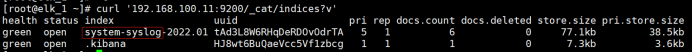

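When Kibana was deployed earlier there were no logs to search yet. Now that Logstash is deployed, go back to the Kibana server and list the log indices with the following command: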

[root@elk_1 ~]# curl '192.168.100.11:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open system-syslog-2022.01 tAd3L8W6RHqDeRDOvOdrTA 5 1 10631 0 2.8mb 1.3mb

green open .kibana HJ8wt6BuQaeVcc5Vf1zbcg 1 1 3 0 26.1kb 13kb

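# To delete log indices (the host and index names in this command are examples only):

curl -XDELETE -u elastic:changeme http://192.168.100.30:9200/acc-apply-2018.08.09,acc-apply-2018.08.10

(Tip: the index takes some time to appear, possibly a long while. If it never shows up, check the configuration.)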
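When looking for a particular index, or collecting names to pass to a delete request, the listing can be filtered by prefix. The sketch below assumes the `_cat/indices?v` layout shown above, with a header row and the index name in the third column; the function name `indices_with_prefix` is hypothetical.

```shell
#!/bin/sh
# indices_with_prefix PREFIX
# Reads `_cat/indices?v` output on stdin and prints the names of the
# indices that start with PREFIX, one per line.
indices_with_prefix() {
    # NR > 1 skips the header row that the ?v parameter adds.
    awk -v p="$1" 'NR > 1 && index($3, p) == 1 { print $3 }'
}
```

For example, `curl -s '192.168.100.11:9200/_cat/indices?v' | indices_with_prefix system-` lists only the system log indices.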

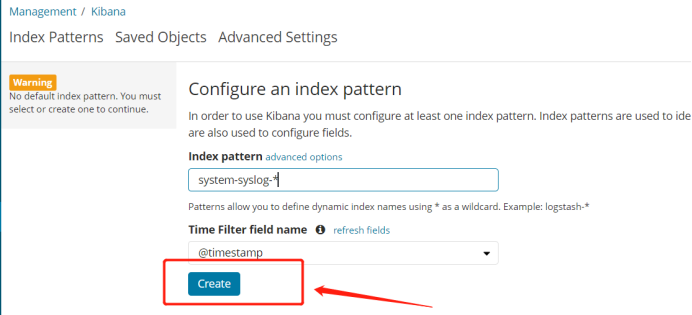

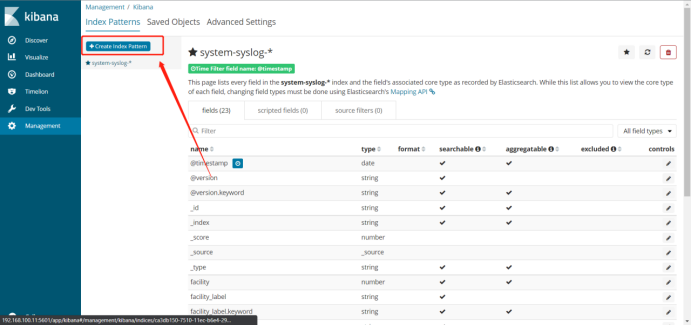

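7. Configure the web interface

Open 192.168.100.11:5601 in a browser and configure the index in Kibana:

Copy the index name highlighted in red in the screenshot into the web page.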

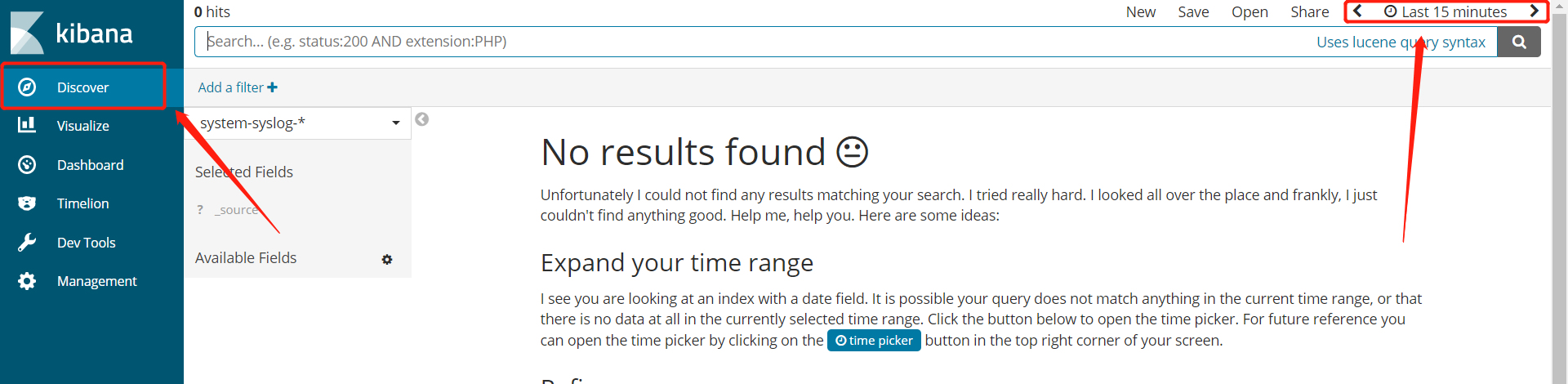

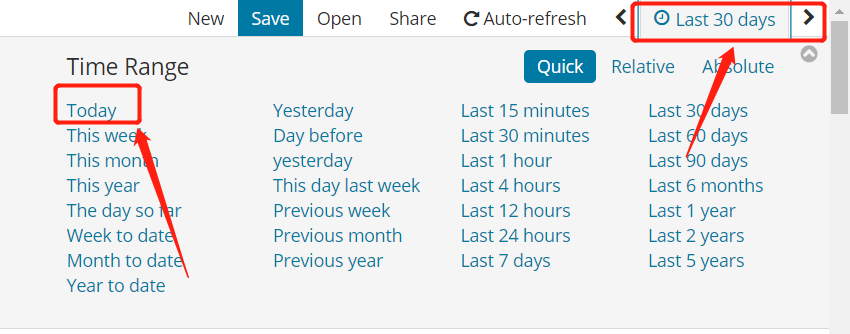

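After that, click Discover. What appears at first is a garbled display.

Follow the figure below to fix the garbled display.

This is what the normal page looks like.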

VI. Collect Nginx Logs with Logstash

1. Download Nginx

[root@elk_2 ~]# wget http://nginx.org/packages/centos/7/x86_64/RPMS/nginx-1.16.1-1.el7.ngx.x86_64.rpm

2. Install Nginx

[root@elk_2 ~]# rpm -ivh nginx-1.16.1-1.el7.ngx.x86_64.rpm

warning: nginx-1.16.1-1.el7.ngx.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID 7bd9bf62: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:nginx-1:1.16.1-1.el7.ngx ################################# [100%]

----------------------------------------------------------------------

Thanks for using nginx!

Please find the official documentation for nginx here:

* http://nginx.org/en/docs/

Please subscribe to nginx-announce mailing list to get

the most important news about nginx:

* http://nginx.org/en/support.html

Commercial subscriptions for nginx are available on:

* http://nginx.com/products/

----------------------------------------------------------------------

3. Configure Logstash

On elk_2, create the Logstash pipeline configuration for the Nginx log and add the following:

[root@elk_2 ~]# vim /etc/logstash/conf.d/nginx.conf

input {

file {

path => "/tmp/elk_access.log"

start_position => "beginning"

type => "nginx"

}

}

filter {

grok {

match => { "message" => "%{IPORHOST:http_host} %{IPORHOST:clientip} - %{USERNAME:remote_user} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:http_verb} %{NOTSPACE:http_request}(?: HTTP/%{NUMBER:http_version})?|%{DATA:raw_http_request})\" %{NUMBER:response} (?:%{NUMBER:bytes_read}|-) %{QS:referrer} %{QS:agent} %{QS:xforwardedfor} %{NUMBER:request_time:float}"}

}

geoip {

source => "clientip"

}

}

output {

stdout { codec => rubydebug }

elasticsearch {

hosts => ["192.168.100.12:9200"]

index => "nginx-test-%{+YYYY.MM.dd}"

}

}

Use the logstash command to check the file for errors:

[root@elk_2 ~]# logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/nginx.conf --config.test_and_exit

Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

Configuration OK

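Create the Nginx virtual host configuration whose access log will be collected, and add the following:

# Move default.conf away first; otherwise elk.conf cannot take effect, because default.conf also listens on port 80.

[root@elk_2 ~]# mv /etc/nginx/conf.d/default.conf /tmp/

[root@elk_2 ~]# vim /etc/nginx/conf.d/elk.conf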

server {

listen 80;

server_name elk.com;

location / {

proxy_pass http://192.168.100.11:5601;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

access_log /tmp/elk_access.log main2;

}

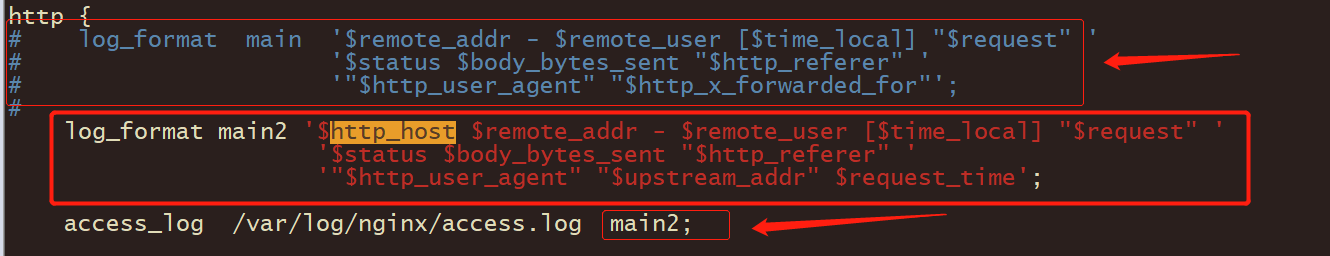

Edit the main Nginx configuration file and add the log format below (mind the Nginx configuration syntax):

[root@elk_2 ~]# vim /etc/nginx/nginx.conf

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

# log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

# Insert the following log_format and access_log lines

log_format main2 '$http_host $remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$upstream_addr" $request_time';

access_log /var/log/nginx/access.log main2;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

[root@elk_2 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful
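The grok filter only parses lines written in the main2 layout, so it can help to confirm that the access log really has that shape before looking at Logstash. The sketch below uses a regular expression that approximates the main2 format (it is looser than the grok pattern, not an equivalent of it); the function name `has_main2_line` is hypothetical.

```shell
#!/bin/sh
# has_main2_line: reads log lines on stdin and succeeds if at least one
# line has the field layout of the main2 log_format:
#   host client - user [time] "request" status bytes "referer" "agent" "upstream" request_time
has_main2_line() {
    grep -Eq '^[^ ]+ [^ ]+ - [^ ]+ \[[^]]+\] "[^"]*" [0-9]+ ([0-9]+|-) "[^"]*" "[^"]*" "[^"]*" [0-9.]+$'
}
```

For example, `tail -n 20 /tmp/elk_access.log | has_main2_line || echo "log is not in main2 format"`. Lines written in the default main format lack the leading host field and the trailing request time, so they do not match.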

Restart Logstash:

[root@elk-2 ~]# systemctl restart logstash

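Add the following entry to the /etc/hosts file so that elk.com resolves to elk_2, where this Nginx server runs:

192.168.100.12 elk.com

Open the site in a browser and check that log entries are produced.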

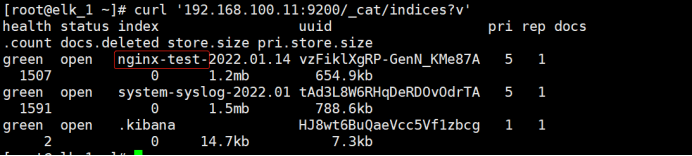

4. Configure the web page

Open 192.168.100.11:5601 in a browser and configure the index in Kibana.

First check on the elk_1 master node whether the index has arrived:

[root@elk_1 ~]# curl '192.168.100.11:9200/_cat/indices?v'

health status index uuid pri rep d

green open system-syslog-2022.01 tAd3L8W6RHqDeRDOvOdrTA 5 1

green open nginx-test-2022.01.14 vzFiklXgRP-GenN_KMe87A 5 1

green open .kibana HJ8wt6BuQaeVcc5Vf1zbcg 1 1

Then click through the pages as before. If the display is garbled, adjust the time setting.

VII. Collect Logs with Beats

1. Download and install Beats

Download and install Beats on the elk_3 host:

[root@elk_3 ~]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.0.0-x86_64.rpm

--2020-03-30 22:41:52-- https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.0.0-x86_64.rpm

Resolving artifacts.elastic.co (artifacts.elastic.co)... 151.101.230.222, 2a04:4e42:1a::734

Connecting to artifacts.elastic.co (artifacts.elastic.co)|151.101.230.222|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 11988378 (11M) [binary/octet-stream]

Saving to: ‘filebeat-6.0.0-x86_64.rpm.1’

100%[===================================>] 11,988,378 390KB/s in 30s

2020-03-30 22:42:24 (387 KB/s) - ‘filebeat-6.0.0-x86_64.rpm.1’ saved [11988378/11988378]

[root@elk_3 ~]# rpm -ivh filebeat-6.0.0-x86_64.rpm

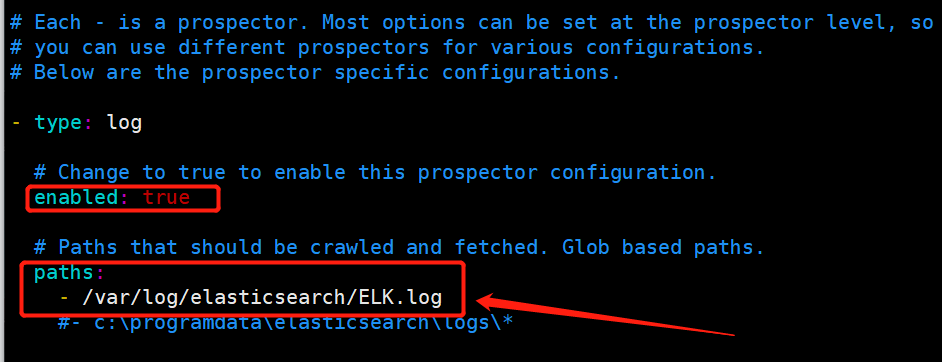

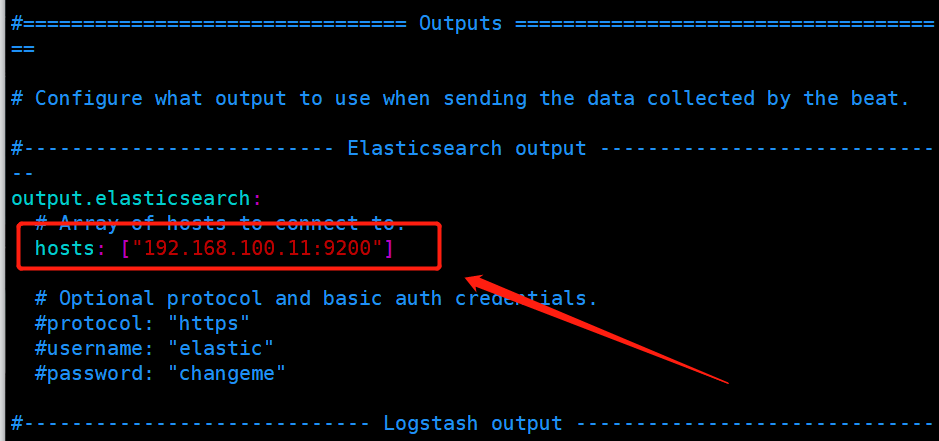

2. Configuration file

Edit the configuration as shown in the figure below:

[root@elk_3 ~]# vim /etc/filebeat/filebeat.yml

filebeat.prospectors:

- type: log

  paths:

    - /var/log/elasticsearch/elk.log   # change this to whichever log file you want to monitor

output.elasticsearch:

  hosts: ["192.168.100.11:9200"]

# Start the service

[root@elk_3 ~]# systemctl start filebeat

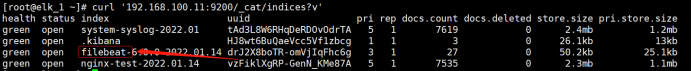

On the elk_1 host, run curl '192.168.100.11:9200/_cat/indices?v' to check whether the logs from the elk_3 host are being received (an index whose name contains filebeat indicates success):

[root@elk_1 ~]# curl '192.168.100.11:9200/_cat/indices?v'

health status index uuid pri rep d

green open system-syslog-2022.01 tAd3L8W6RHqDeRDOvOdrTA 5 1

green open .kibana HJ8wt6BuQaeVcc5Vf1zbcg 1 1

green open filebeat-6.0.0-2022.01.14 drJ2X8boTR-omVjIqFhc6g 3 1

green open nginx-test-2022.01.14 vzFiklXgRP-GenN_KMe87A 5 1

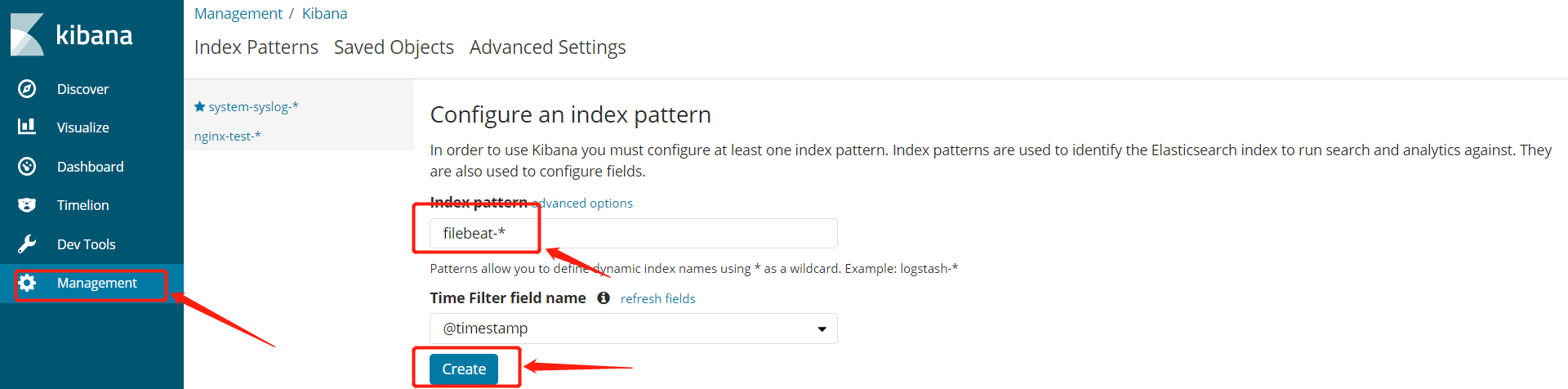

3. Configure the web interface

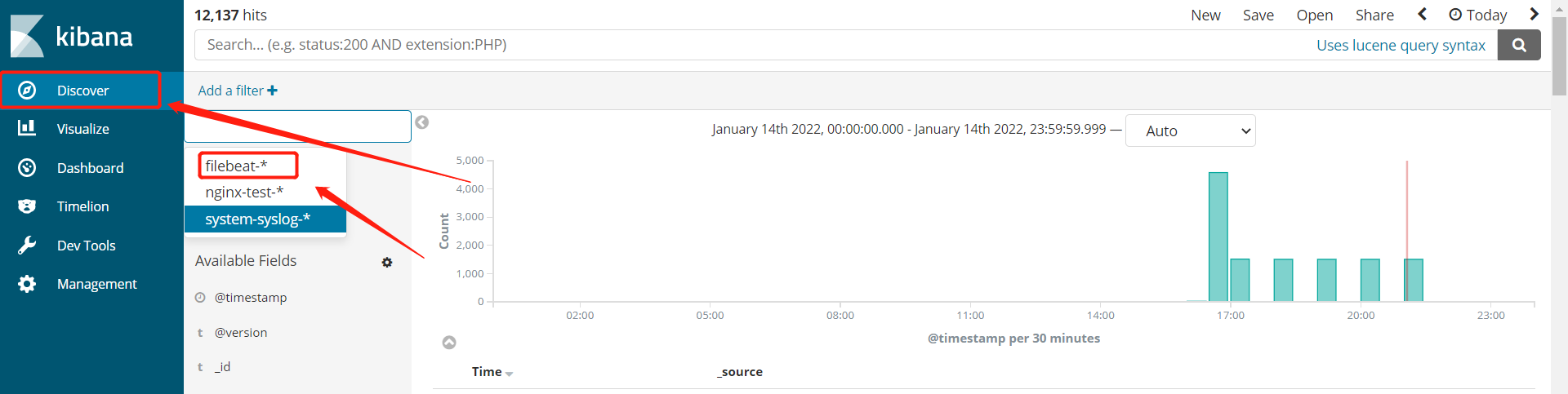

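Following the same steps as in the previous section, add the filebeat index in the browser to monitor the Filebeat logs.

That completes the setup.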