根据训练好的Transformer模型,得到注意力矩阵,并对注意力进行可视化

首先安装:tensorflow 1.13.1 + tensor2tensor 1.13.1

可视化,请在Jupyter notebook中运行。该代码根据tensor2tensor/tensor2tensor/visualization/visualization.py修改得到

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 | # coding=utf-8# Copyright 2020 The Tensor2Tensor Authors.## Licensed under the Apache License, Version 2.0 (the "License");# you may not use this file except in compliance with the License.# You may obtain a copy of the License at## http://www.apache.org/licenses/LICENSE-2.0## Unless required by applicable law or agreed to in writing, software# distributed under the License is distributed on an "AS IS" BASIS,# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.# See the License for the specific language governing permissions and# limitations under the License."""Shared code for visualizing transformer attentions."""from __future__ import absolute_importfrom __future__ import divisionfrom __future__ import print_functionimport numpy as np# To register the hparams setfrom tensor2tensor import models # pylint: disable=unused-importfrom tensor2tensor import problemsfrom tensor2tensor.utils import registryfrom tensor2tensor.utils import trainer_libimport tensorflow.compat.v1 as tffrom tensor2tensor.utils import usr_dirEOS_ID = 1class AttentionVisualizer2(object): """Helper object for creating Attention visualizations.""" def __init__( self, hparams_set,hparams,t2t_usr_dir, model_name, data_dir, problem_name, beam_size=1): inputs, targets, samples, att_mats = build_model( hparams_set,hparams, t2t_usr_dir, model_name, data_dir, problem_name, beam_size=beam_size) # Fetch the problem ende_problem = problems.problem(problem_name) encoders = ende_problem.feature_encoders(data_dir) self.inputs = inputs self.targets = targets self.att_mats = att_mats self.samples = samples self.encoders = encoders def encode(self, input_str): """Input str to features dict, ready for inference.""" inputs = self.encoders["inputs"].encode(input_str) + [EOS_ID] batch_inputs = np.reshape(inputs, [1, -1, 1, 1]) # Make it 3D. return batch_inputs def decode(self, integers): """List of ints to str.""" integers = list(np.squeeze(integers)) return self.encoders["targets"].decode(integers) def encode_list(self, integers): """List of ints to list of str.""" integers = list(np.squeeze(integers)) return self.encoders["inputs"].decode_list(integers) def decode_list(self, integers): """List of ints to list of str.""" integers = list(np.squeeze(integers)) return self.encoders["targets"].decode_list(integers) def get_vis_data_from_string(self, sess, input_string): """Constructs the data needed for visualizing attentions. Args: sess: A tf.Session object. input_string: The input sentence to be translated and visualized. Returns: Tuple of ( output_string: The translated sentence. input_list: Tokenized input sentence. output_list: Tokenized translation. att_mats: Tuple of attention matrices; ( enc_atts: Encoder self attention weights. A list of `num_layers` numpy arrays of size (batch_size, num_heads, inp_len, inp_len) dec_atts: Decoder self attention weights. A list of `num_layers` numpy arrays of size (batch_size, num_heads, out_len, out_len) encdec_atts: Encoder-Decoder attention weights. A list of `num_layers` numpy arrays of size (batch_size, num_heads, out_len, inp_len) ) """ encoded_inputs = self.encode(input_string) # Run inference graph to get the translation. out = sess.run(self.samples, { self.inputs: encoded_inputs, }) # Run the decoded translation through the training graph to get the # attention tensors. att_mats = sess.run(self.att_mats, { self.inputs: encoded_inputs, self.targets: np.reshape(out, [1, -1, 1, 1]), }) output_string = self.decode(out) input_list = self.encode_list(encoded_inputs) output_list = self.decode_list(out) return output_string, input_list, output_list, att_matsdef build_model(hparams_set, hparams,t2t_usr_dir, model_name, data_dir, problem_name, beam_size=1): """Build the graph required to fetch the attention weights. Args: hparams_set: HParams set to build the model with. model_name: Name of model. data_dir: Path to directory containing training data. problem_name: Name of problem. beam_size: (Optional) Number of beams to use when decoding a translation. If set to 1 (default) then greedy decoding is used. Returns: Tuple of ( inputs: Input placeholder to feed in ids to be translated. targets: Targets placeholder to feed to translation when fetching attention weights. samples: Tensor representing the ids of the translation. att_mats: Tensors representing the attention weights. ) """ print(model_name) usr_dir.import_usr_dir(t2t_usr_dir) hparams = trainer_lib.create_hparams( hparams_set,hparams, data_dir=data_dir, problem_name=problem_name) # print(hparams) translate_model = registry.model(model_name)( hparams, tf.estimator.ModeKeys.EVAL) inputs = tf.placeholder(tf.int32, shape=(1, None, 1, 1), name="inputs") targets = tf.placeholder(tf.int32, shape=(1, None, 1, 1), name="targets") translate_model({ "inputs": inputs, "targets": targets, }) # Must be called after building the training graph, so that the dict will # have been filled with the attention tensors. BUT before creating the # inference graph otherwise the dict will be filled with tensors from # inside a tf.while_loop from decoding and are marked unfetchable. atts = get_att_mats(translate_model,model_name) with tf.variable_scope(tf.get_variable_scope(), reuse=True): samples = translate_model.infer({ "inputs": inputs, }, beam_size=beam_size)["outputs"] return inputs, targets, samples, attsdef get_att_mats(translate_model,model_name): """Get's the tensors representing the attentions from a build model. The attentions are stored in a dict on the Transformer object while building the graph. Args: translate_model: Transformer object to fetch the attention weights from. Returns: Tuple of attention matrices; ( enc_atts: Encoder self attention weights. A list of `num_layers` numpy arrays of size (batch_size, num_heads, inp_len, inp_len) dec_atts: Decoder self attetnion weights. A list of `num_layers` numpy arrays of size (batch_size, num_heads, out_len, out_len) encdec_atts: Encoder-Decoder attention weights. A list of `num_layers` numpy arrays of size (batch_size, num_heads, out_len, inp_len) ) """ enc_atts = [] dec_atts = [] encdec_atts = [] prefix = "%s/body/"%(model_name) postfix_self_attention = "/multihead_attention/dot_product_attention" if translate_model.hparams.self_attention_type == "dot_product_relative": postfix_self_attention = ("/multihead_attention/" "dot_product_attention_relative") postfix_encdec = "/multihead_attention/dot_product_attention" for i in range(translate_model.hparams.num_hidden_layers): enc_att = translate_model.attention_weights[ "%sencoder/layer_%i/self_attention%s" % (prefix, i, postfix_self_attention)] dec_att = translate_model.attention_weights[ "%sdecoder/layer_%i/self_attention%s" % (prefix, i, postfix_self_attention)] encdec_att = translate_model.attention_weights[ "%sdecoder/layer_%i/encdec_attention%s" % (prefix, i, postfix_encdec)] enc_atts.append(enc_att) dec_atts.append(dec_att) encdec_atts.append(encdec_att) return enc_atts, dec_atts, encdec_attsfrom IPython.display import displaydef call_html(): import IPython display(IPython.core.display.HTML(''' <script src="/static/components/requirejs/require.js"></script> <script> requirejs.config({ paths: { base: '/static/base', "d3": "https://cdnjs.cloudflare.com/ajax/libs/d3/3.5.8/d3.min", jquery: '//ajax.googleapis.com/ajax/libs/jquery/2.0.0/jquery.min', }, }); </script> '''))import osfrom tensor2tensor import problemsfrom tensor2tensor.bin import t2t_decoder # To register the hparams set# from tensor2tensor.utils import registryfrom tensor2tensor.utils import trainer_libfrom tensor2tensor.visualization import attention# from src.visualization import visualizationos.environ["CUDA_VISIBLE_DEVICES"] = "0,1"# HParamsproblem_name = 'translate_ende_wmt32k' #数据data_dir = os.path.expanduser('/home/usrname/collaboration/t2t_data/%s'%(problem_name)) #数据路径model_name = "collaboration" #模型名称hparams_set = "collaboration_base" #模型类型hparams = 'max_length=128,num_hidden_layers=6,usedegray=1.0,reuse_n=0' #自定义参数 (根据自己需求)t2t_usr_dir = './src/' #用户自定义模型model的路径visualizer = AttentionVisualizer2(hparams_set,hparams, t2t_usr_dir,model_name, data_dir, problem_name, beam_size=1)tf.Variable(0, dtype=tf.int64, trainable=False, name='global_step') |

接着继续运行:

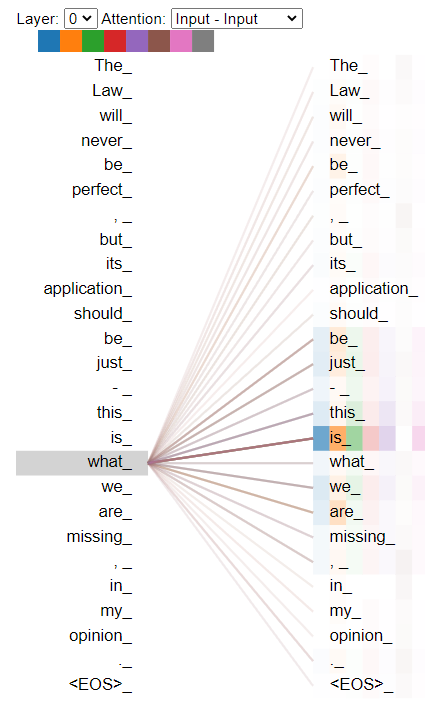

1 2 3 4 5 6 7 8 9 10 11 12 13 | saver = tf.train.Saver()with tf.Session() as sess: ckpt = 'averaged.ckpt-0' #checkpoint路径 print(ckpt) saver.restore(sess, ckpt)<br> #可视化样本# input_sentence = "It is in this spirit that a majority of American governments have passed new laws since 2009 making the registration or voting process more difficult." input_sentence = "The Law will never be perfect, but its application should be just - this is what we are missing, in my opinion." output_string, inp_text, out_text, att_mats = visualizer.get_vis_data_from_string(sess, input_sentence) print(output_string) call_html() attention.show(inp_text, out_text, *att_mats) |

可视化结果:

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· Linux系列:如何用 C#调用 C方法造成内存泄露

· AI与.NET技术实操系列(二):开始使用ML.NET

· 记一次.NET内存居高不下排查解决与启示

· 阿里最新开源QwQ-32B,效果媲美deepseek-r1满血版,部署成本又又又降低了!

· 开源Multi-agent AI智能体框架aevatar.ai,欢迎大家贡献代码

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧