1 Introduction (Part 1)

1 Introduction

1.1 Overview

NVM Express (NVMe) is an interface that allows host software to communicate with a non-volatile memory subsystem. This interface is optimized for Enterprise and Client solid state drives, typically attached as a register level interface to the PCI Express interface.

Note: During development, this specification was referred to as Enterprise NVMHCI. However, the name was modified to NVM Express prior to specification completion. This interface is targeted for use in both Client and Enterprise systems.

For an overview of changes from revision 1.2.1 to revision 1.3, refer to nvmexpress.org/changes for a document that describes the new features, including mandatory requirements for a controller to comply with revision 1.3.

1.1.1 NVMe over PCIe and NVMe over Fabrics

NVM Express 1.3 and prior revisions define a register level interface for host software to communicate with a non-volatile memory subsystem over PCI Express (NVMe over PCIe). The NVMe over Fabrics specification defines a protocol interface and related extensions to NVMe that enable operation over other interconnects (e.g., Ethernet, InfiniBand™, Fibre Channel). The NVMe over Fabrics specification has an NVMe Transport binding for each NVMe Transport (either within that specification or by reference).

In this specification a requirement/feature may be documented as specific to NVMe over Fabrics or to a particular NVMe Transport binding. In addition, support requirements for features and functionality may differ between NVMe over PCIe and NVMe over Fabrics.

To comply with NVM Express 1.2.1, a controller shall support the NVM Subsystem NVMe Qualified Name in the Identify Controller data structure in Figure 109.

1.2 Scope

The specification defines a register interface for communication with a non-volatile memory subsystem. It also defines a standard command set for use with the NVM subsystem.

1.3 Outside of Scope

The register interface and command set are specified apart from any usage model for the NVM; this specification defines only the communication interface to the NVM subsystem. Thus, this specification does not specify whether the non-volatile memory system is used as a solid state drive, a main memory, a cache memory, a backup memory, a redundant memory, etc. Specific usage models are outside the scope, optional, and not licensed.

This interface is specified above any non-volatile memory management, like wear leveling. Erases and other management tasks for NVM technologies like NAND are abstracted.

This specification does not contain any information on caching algorithms or techniques.

The implementation or use of other published specifications referred to in this specification, even if required for compliance with the specification, is outside the scope of this specification (for example, PCI, PCI Express and PCI-X).

1.4 Theory of Operation

NVM Express is a scalable host controller interface designed to address the needs of Enterprise and Client systems that utilize PCI Express based solid state drives. The interface provides optimized command submission and completion paths. It includes support for parallel operation by supporting up to 65,535 I/O Queues with up to 64K outstanding commands per I/O Queue. Additionally, support has been added for many Enterprise capabilities like end-to-end data protection (compatible with SCSI Protection Information, commonly known as T10 DIF, and SNIA DIX standards), enhanced error reporting, and virtualization.

The interface has the following key attributes:

- Does not require uncacheable / MMIO register reads in the command submission or completion path.
- A maximum of one MMIO register write is necessary in the command submission path.
- Support for up to 65,535 I/O queues, with each I/O queue supporting up to 64K outstanding commands.
- Priority associated with each I/O queue with well-defined arbitration mechanism.
- All information to complete a 4KB read request is included in the 64B command itself, ensuring efficient small I/O operation.
- Efficient and streamlined command set.
- Support for MSI/MSI-X and interrupt aggregation.
- Support for multiple namespaces.
- Efficient support for I/O virtualization architectures like SR-IOV.
- Robust error reporting and management capabilities.
- Support for multi-path I/O and namespace sharing.

This specification defines a streamlined set of registers whose functionality includes:

- Indication of controller capabilities
- Status for controller failures (command status is processed via CQ directly)
- Admin Queue configuration (I/O Queue configuration processed via Admin commands)
- Doorbell registers for scalable number of Submission and Completion Queues

An NVM Express controller is associated with a single PCI Function. The capabilities and settings that apply to the entire controller are indicated in the Controller Capabilities (CAP) register and the Identify Controller data structure.

A namespace is a quantity of non-volatile memory that may be formatted into logical blocks. An NVM Express controller may support multiple namespaces that are referenced using a namespace ID. Namespaces may be created and deleted using the Namespace Management and Namespace Attachment commands. The Identify Namespace data structure indicates capabilities and settings that are specific to a particular namespace. The capabilities and settings that are common to all namespaces are reported by the Identify Namespace data structure for namespace ID FFFFFFFFh.

NVM Express is based on a paired Submission and Completion Queue mechanism. Commands are placed by host software into a Submission Queue. Completions are placed into the associated Completion Queue by the controller. Multiple Submission Queues may utilize the same Completion Queue. Submission and Completion Queues are allocated in memory.

An Admin Submission and associated Completion Queue exist for the purpose of controller management and control (e.g., creation and deletion of I/O Submission and Completion Queues, aborting commands, etc.). Only commands that are part of the Admin Command Set may be submitted to the Admin Submission Queue.

An I/O Command Set is used with an I/O queue pair. This specification defines one I/O Command Set, named the NVM Command Set. The host selects one I/O Command Set that is used for all I/O queue pairs.

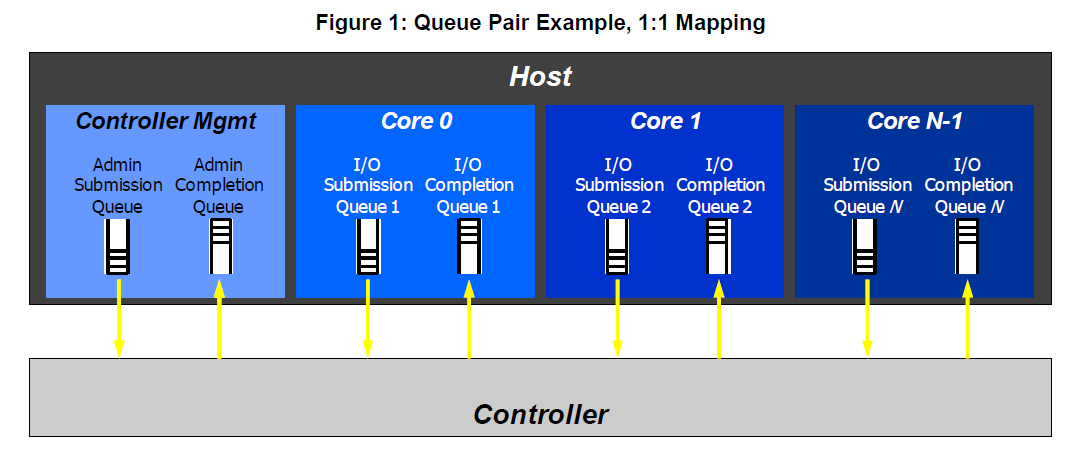

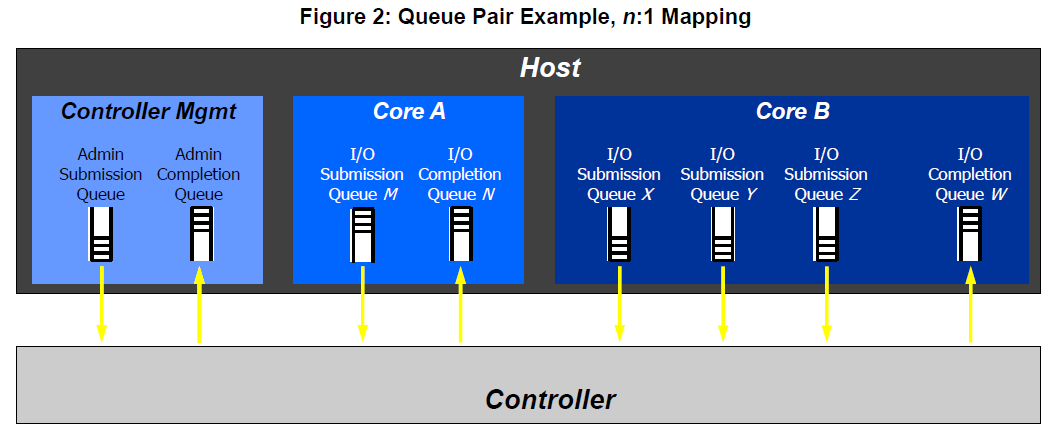

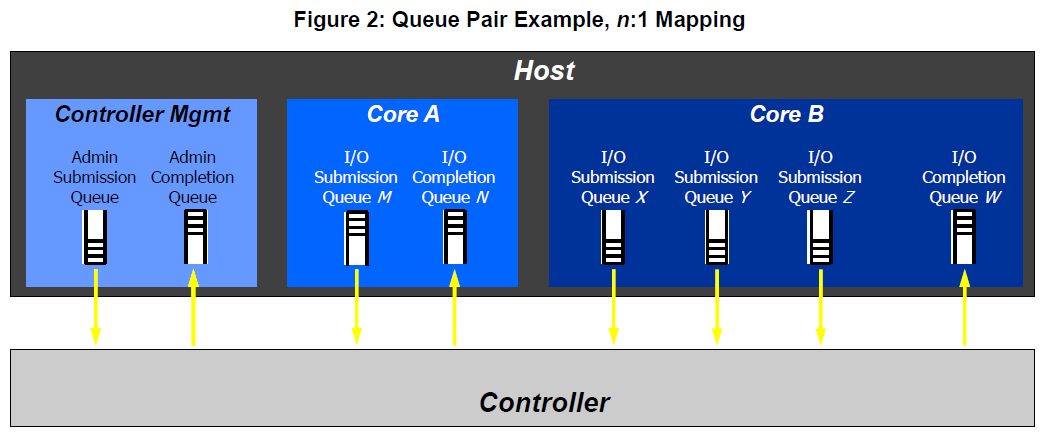

Host software creates queues, up to the maximum supported by the controller. Typically the number of command queues created is based on the system configuration and anticipated workload. For example, on a four core processor based system, there may be a queue pair per core to avoid locking and ensure data structures are created in the appropriate processor core’s cache. Figure 1 provides a graphical representation of the queue pair mechanism, showing a 1:1 mapping between Submission Queues and Completion Queues. Figure 2 shows an example where multiple I/O Submission Queues utilize the same I/O Completion Queue on Core B. Figure 1 and Figure 2 show that there is always a 1:1 mapping between the Admin Submission Queue and Admin Completion Queue.
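The per-core layout described above can be sketched in Python. This is a minimal illustration rather than anything defined by the specification: `QueuePair` and `plan_queue_pairs` are hypothetical names, and the round-robin fallback for systems with more cores than available I/O queues is an assumption. Queue identifier 0 belongs to the Admin queue pair, so I/O queue identifiers start at 1.

```python
from dataclasses import dataclass

ADMIN_QID = 0  # the Admin Submission/Completion Queue pair uses identifier 0


@dataclass(frozen=True)
class QueuePair:
    """Hypothetical record tying a CPU core to one I/O queue pair."""
    core: int
    sqid: int
    cqid: int


def plan_queue_pairs(num_cores, max_io_queues):
    """Assign one I/O queue pair per core, up to the controller's limit.

    If there are more cores than queues (an assumed fallback policy),
    cores share queue pairs round-robin, which reintroduces locking.
    """
    if num_cores < 1 or max_io_queues < 1:
        raise ValueError("need at least one core and one I/O queue")
    n = min(num_cores, max_io_queues)
    return [
        QueuePair(core=c, sqid=(c % n) + 1, cqid=(c % n) + 1)
        for c in range(num_cores)
    ]


if __name__ == "__main__":
    # Four-core system, controller supports plenty of queues: 1:1 mapping.
    for pair in plan_queue_pairs(num_cores=4, max_io_queues=65535):
        print(pair)
```

The sketch keeps the 1:1 Submission Queue to Completion Queue mapping of Figure 1. The shared Completion Queue arrangement of Figure 2 would instead give several pairs the same `cqid`.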

A Submission Queue (SQ) is a circular buffer with a fixed slot size that the host software uses to submit commands for execution by the controller. The host software updates the appropriate SQ Tail doorbell register when there are one to n new commands to execute. The previous SQ Tail value is overwritten in the controller when there is a new doorbell register write. The controller fetches SQ entries in order from the Submission Queue; however, it may then execute those commands in any order.
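The tail-doorbell behaviour can be modelled with a small ring buffer. The sketch below is a software-only simulation under stated assumptions: `SubmissionQueue`, `submit`, and `controller_fetch` are hypothetical names, the doorbell is a plain attribute rather than an MMIO register, and the "one slot always left empty" rule used to detect a full queue is taken from the queue definition elsewhere in the specification.

```python
class SubmissionQueue:
    """Toy model of an SQ: host advances tail, controller advances head."""

    def __init__(self, size):
        if size < 2:
            raise ValueError("queue needs at least two slots")
        self.size = size
        self.slots = [None] * size
        self.tail = 0      # next free slot, owned by the host
        self.head = 0      # next slot to fetch, owned by the controller
        self.doorbell = 0  # last value written to the SQ Tail doorbell

    def free_slots(self):
        # One slot is always left unused so that head == tail means "empty".
        return (self.head - self.tail - 1) % self.size

    def submit(self, commands):
        """Place 1..n commands, then ring the doorbell exactly once."""
        if len(commands) > self.free_slots():
            raise BufferError("submission queue is full")
        for cmd in commands:
            self.slots[self.tail] = cmd
            self.tail = (self.tail + 1) % self.size
        # A single doorbell write covers the whole batch; the new tail
        # simply overwrites the previous value held by the controller.
        self.doorbell = self.tail

    def controller_fetch(self):
        """Fetch entries in order up to the doorbell value."""
        fetched = []
        while self.head != self.doorbell:
            fetched.append(self.slots[self.head])
            self.head = (self.head + 1) % self.size
        return fetched


if __name__ == "__main__":
    sq = SubmissionQueue(size=4)
    sq.submit(["read A", "write B"])
    print(sq.controller_fetch())
```

Fetching is in order, but nothing in this model constrains the order in which the fetched commands would then execute.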

Each Submission Queue entry is a command. Commands are 64 bytes in size. The memory locations to use for data transfers are specified using Physical Region Page (PRP) entries or Scatter Gather Lists. Each command may include two PRP entries or one Scatter Gather List (SGL) segment. If more than two PRP entries are necessary to describe the data buffer, then a pointer to a PRP List that describes a list of PRP entries is provided. If more than one SGL segment is necessary to describe the data buffer, then the SGL segment provides a pointer to the next SGL segment.
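To make the "more than two PRP entries" rule concrete, the following sketch counts how many memory pages a data buffer touches and reports whether a PRP List would be needed. The helper names are hypothetical, and the 4 KiB memory page size is an assumption for illustration; the real page size is a controller configuration setting. Only the first PRP entry may carry an offset into its page, which is why the starting offset is added before rounding up.

```python
def prp_entries_needed(addr, length, page_size=4096):
    """Number of PRP entries (one per touched page) for a buffer.

    addr      -- starting physical address of the buffer
    length    -- transfer length in bytes (must be positive)
    page_size -- assumed memory page size in bytes
    """
    if length <= 0:
        raise ValueError("length must be positive")
    offset = addr % page_size            # offset into the first page
    return (offset + length + page_size - 1) // page_size


def needs_prp_list(addr, length, page_size=4096):
    """True when the two PRP entries in the command are not enough."""
    return prp_entries_needed(addr, length, page_size) > 2


if __name__ == "__main__":
    for addr, length in [(0x1000, 4096), (0x1200, 4096), (0x0, 12288)]:
        n = prp_entries_needed(addr, length)
        print(hex(addr), length, n, needs_prp_list(addr, length))
```

An aligned 4 KiB read needs a single entry, which matches the earlier claim that everything required for a 4KB read fits in the 64B command itself.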

A Completion Queue (CQ) is a circular buffer with a fixed slot size used to post status for completed commands. A completed command is uniquely identified by a combination of the associated SQ identifier and command identifier that is assigned by host software. Multiple Submission Queues may be associated with a single Completion Queue. This feature may be used where a single worker thread processes all command completions via one Completion Queue even when those commands originated from multiple Submission Queues. The CQ Head pointer is updated by host software after it has processed completion queue entries indicating the last free CQ slot. A Phase Tag (P) bit is defined in the completion queue entry to indicate whether an entry has been newly posted without consulting a register. This enables host software to determine whether the new entry was posted as part of the previous or current round of completion notifications. Specifically, each round through the Completion Queue entries, the controller inverts the Phase Tag bit.
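The Phase Tag mechanism can be illustrated with a short simulation. This is a sketch under assumptions: `CompletionQueue`, `controller_post`, and `host_reap` are hypothetical names, entries are reduced to `(phase, sqid, cid)` tuples, and the model assumes entries start with the Phase Tag cleared so that the controller's first pass posts entries with the bit set to 1. It does not model the controller waiting for free slots.

```python
class CompletionQueue:
    """Toy CQ: the host detects new entries by Phase Tag alone."""

    def __init__(self, size):
        self.size = size
        # (phase, sqid, cid); phase 0 marks "never posted" initially.
        self.entries = [(0, None, None)] * size
        # Controller-side state.
        self.ctrl_tail = 0
        self.ctrl_phase = 1
        # Host-side state.
        self.head = 0
        self.expected_phase = 1

    def controller_post(self, sqid, cid):
        """Controller writes a completion and inverts phase on wrap."""
        self.entries[self.ctrl_tail] = (self.ctrl_phase, sqid, cid)
        self.ctrl_tail += 1
        if self.ctrl_tail == self.size:
            self.ctrl_tail = 0
            self.ctrl_phase ^= 1

    def host_reap(self):
        """Consume all newly posted entries without reading a register."""
        done = []
        while self.entries[self.head][0] == self.expected_phase:
            _, sqid, cid = self.entries[self.head]
            done.append((sqid, cid))  # (SQ identifier, command identifier)
            self.head += 1
            if self.head == self.size:
                self.head = 0
                self.expected_phase ^= 1
        # A real host would now write self.head to the CQ Head doorbell.
        return done


if __name__ == "__main__":
    cq = CompletionQueue(size=2)
    cq.controller_post(sqid=1, cid=10)
    print(cq.host_reap())
```

Because the expected phase flips each time the host wraps, a stale entry from the previous pass never matches and the loop stops at the first slot the controller has not yet rewritten.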

1.4.1 Multi-Path I/O and Namespace Sharing

This section provides an overview of multi-path I/O and namespace sharing. Multi-path I/O refers to two or more completely independent PCI Express paths between a single host and a namespace while namespace sharing refers to the ability for two or more hosts to access a common shared namespace using different NVM Express controllers. Both multi-path I/O and namespace sharing require that the NVM subsystem contain two or more controllers. Concurrent access to a shared namespace by two or more hosts requires some form of coordination between hosts. The procedure used to coordinate these hosts is outside the scope of this specification.

Figure 3 shows an NVM subsystem that contains a single NVM Express controller and a single PCI Express port. Since this is a single Function PCI Express device, the NVM Express controller shall be associated with PCI Function 0. A controller may support multiple namespaces. The controller in Figure 3 supports two namespaces labeled NS A and NS B. Associated with each controller namespace is a namespace ID, labeled as NSID 1 and NSID 2, that is used by the controller to reference a specific namespace. The namespace ID is distinct from the namespace itself and is the handle a host and controller use to specify a particular namespace in a command. The selection of a controller’s namespace IDs is outside the scope of this specification. In this example namespace ID 1 is associated with namespace A and namespace ID 2 is associated with namespace B. Both namespaces are private to the controller and this configuration supports neither multi-path I/O nor namespace sharing.

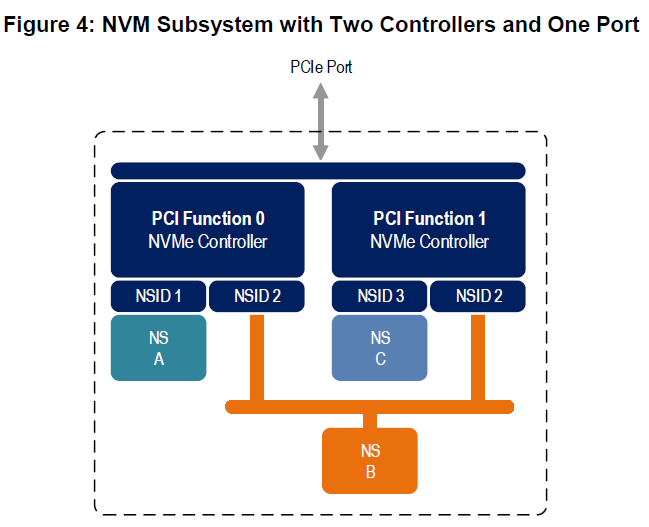

Figure 4 shows a multi-Function NVM Subsystem with a single PCI Express port containing two controllers; one controller is associated with PCI Function 0 and the other controller is associated with PCI Function 1. Each controller supports a single private namespace and access to shared namespace B. The namespace ID shall be the same in all controllers that have access to a particular shared namespace. In this example both controllers use namespace ID 2 to access shared namespace B.

There is a unique Identify Controller data structure for each controller and a unique Identify Namespace data structure for each namespace. Controllers with access to a shared namespace return the Identify Namespace data structure associated with that shared namespace (i.e., the same data structure contents are returned by all controllers with access to the same shared namespace). A globally unique identifier is associated with the namespace itself and may be used to determine when there are multiple paths to the same shared namespace. Refer to section 7.10.
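As an illustration of how a host might use the globally unique identifier, the sketch below groups discovered (controller, namespace ID) pairs by that identifier and reports those reachable through more than one controller. Everything here is hypothetical: the function name, the controller labels, and the identifier strings are invented for the example, and a real host would obtain the identifier from the Identify Namespace data.

```python
from collections import defaultdict


def find_shared_namespaces(paths):
    """Group paths by namespace unique identifier.

    paths -- iterable of (controller, nsid, unique_id) tuples
    Returns {unique_id: [(controller, nsid), ...]} for identifiers
    seen through two or more controllers.
    """
    by_id = defaultdict(list)
    for controller, nsid, unique_id in paths:
        by_id[unique_id].append((controller, nsid))
    return {uid: seen for uid, seen in by_id.items() if len(seen) > 1}


if __name__ == "__main__":
    # Made-up discovery results loosely modelled on a two-controller
    # subsystem: each controller has one private namespace, and both
    # reach the same shared namespace through namespace ID 2.
    discovered = [
        ("ctrl0", 1, "guid-ns-a"),
        ("ctrl0", 2, "guid-ns-b"),
        ("ctrl1", 3, "guid-ns-c"),
        ("ctrl1", 2, "guid-ns-b"),
    ]
    print(find_shared_namespaces(discovered))
```

Matching on the identifier rather than on the namespace ID alone matters because two private namespaces on different controllers are not guaranteed to have distinct namespace IDs.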

Controllers associated with a shared namespace may operate on the namespace concurrently. Operations performed by individual controllers are atomic to the shared namespace at the write atomicity level of the controller to which the command was submitted (refer to section 6.4). The write atomicity level is not required to be the same across controllers that share a namespace. If there are any ordering requirements between commands issued to different controllers that access a shared namespace, then host software or an associated application is required to enforce these ordering requirements.

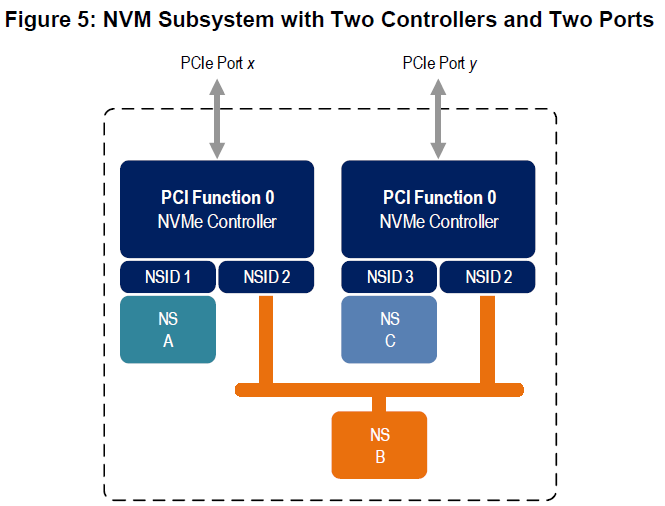

Figure 5 illustrates an NVM Subsystem with two PCI Express ports, each with an associated controller. Both controllers map to PCI Function 0 of the corresponding port. Each PCI Express port in this example is completely independent and has its own PCI Express Fundamental Reset and reference clock input. A reset of a port only affects the controller associated with that port and has no impact on the other controller, shared namespace, or operations performed by the other controller on the shared namespace. The functional behavior of this example is otherwise the same as that illustrated in Figure 4.

The two ports shown in Figure 5 may be associated with the same Root Complex or with different Root Complexes and may be used to implement both multi-path I/O and I/O sharing architectures. System-level architectural aspects and use of multiple ports in a PCI Express fabric are beyond the scope of this specification.

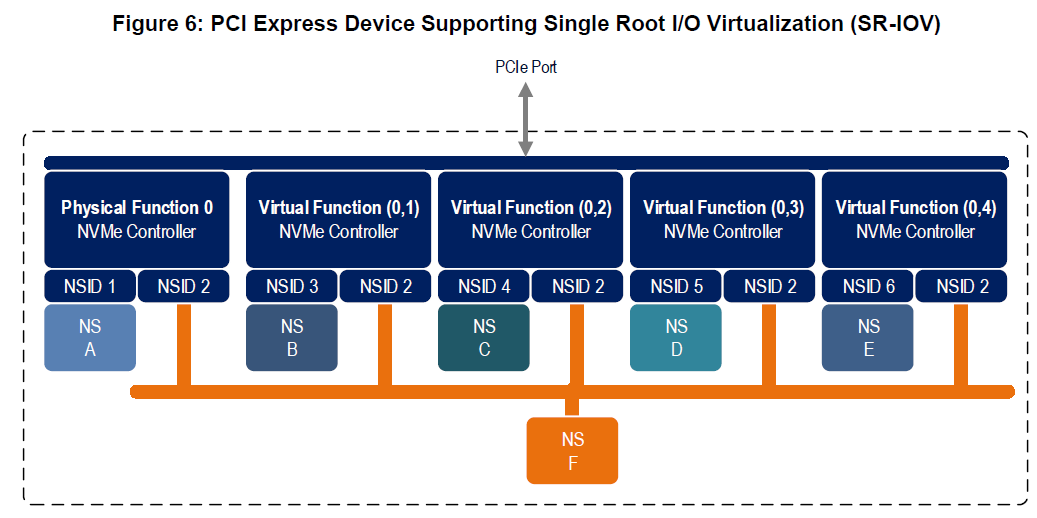

Figure 6 illustrates an NVM subsystem that supports Single Root I/O Virtualization (SR-IOV) and has one Physical Function and four Virtual Functions. An NVM Express controller is associated with each Function, with each controller having a private namespace and access to a namespace shared by all controllers, labeled NS F. The behavior of the controllers in this example parallels that of the other examples in this section. Refer to section 8.5.4 for more information on SR-IOV.

Examples provided in this section are meant to illustrate concepts and are not intended to enumerate all possible configurations. For example, an NVM subsystem may contain multiple PCI Express ports with each port supporting SR-IOV.
