Manually Deploying OpenStack Yoga (Ubuntu 22)


Base environment#

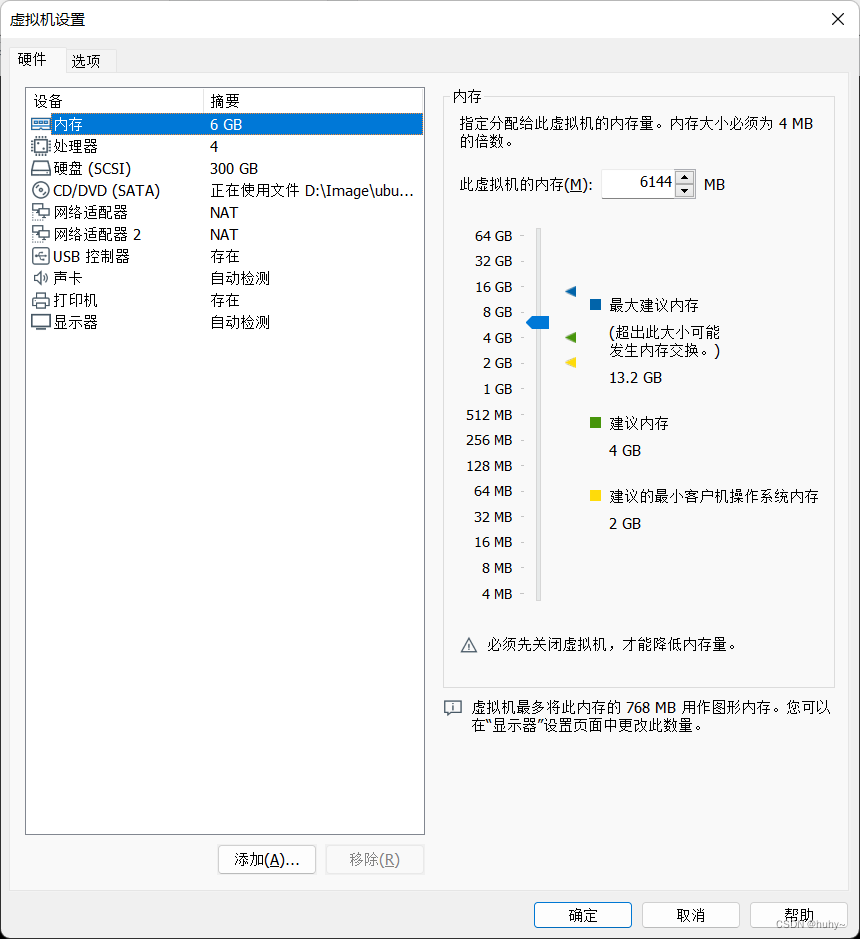

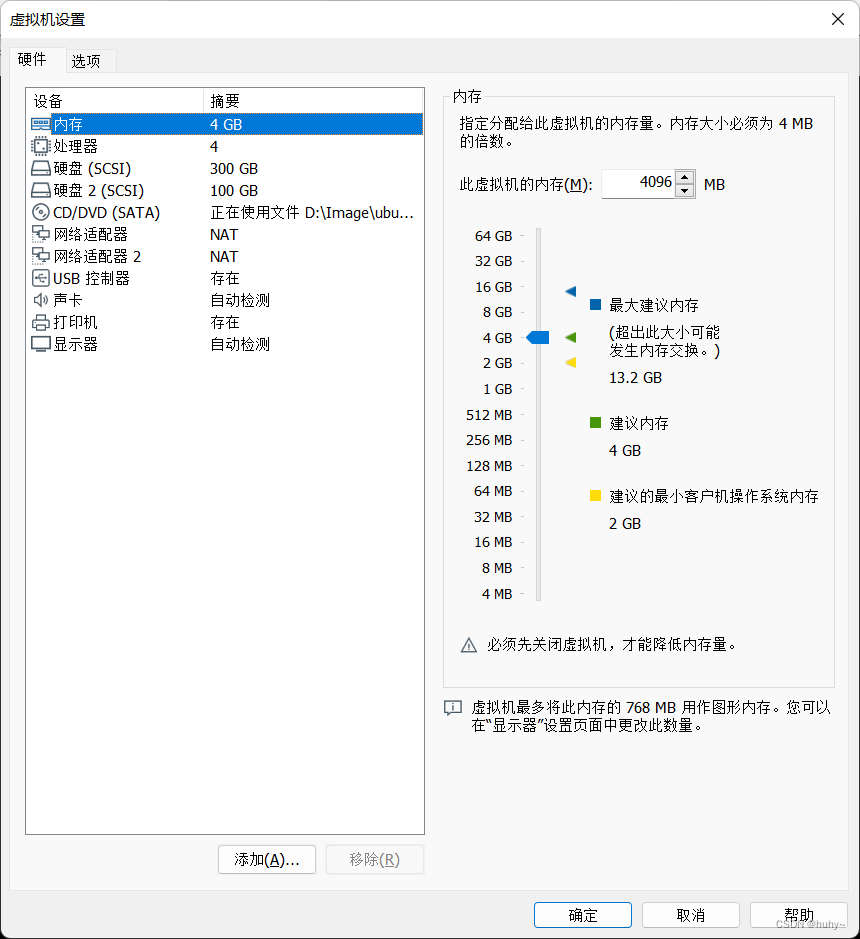

Ubuntu 22 system, VMware 15

controller node

compute node

| Node | IP |
|---|---|
| controller | 192.168.200.40 |
| compute | 192.168.200.50 |

Network interface configuration

root@controller:~# cat /etc/netplan/00-installer-config.yaml
# This is the network config written by 'subiquity'
network:
  ethernets:
    ens33:
      dhcp4: no
      addresses: [192.168.200.40/24]
      #gateway4: 192.168.200.2
      routes:
        - to: default
          via: 192.168.200.2
      nameservers:
        addresses: [114.114.114.114,8.8.8.8]
    ens38:
      dhcp4: no
  version: 2
root@controller:~#

root@compute:~# cat /etc/netplan/00-installer-config.yaml
# This is the network config written by 'subiquity'
network:
  ethernets:
    ens33:
      dhcp4: no
      addresses: [192.168.200.50/24]
      #gateway4: 192.168.200.2
      routes:
        - to: default
          via: 192.168.200.2
      nameservers:
        addresses: [114.114.114.114,8.8.8.8]
    ens38:
      dhcp4: no
  version: 2
root@compute:~#

Set the hostname (first command on the controller node, second on the compute node)

hostnamectl set-hostname controller

bash

hostnamectl set-hostname compute

bash

Set up the hostname mapping

cat >> /etc/hosts << EOF

192.168.200.40 controller

192.168.200.50 compute

EOF
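
Because the heredoc above appends with `>>`, running it a second time leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent alternative follows; the helper name `add_host_entry` is hypothetical and not part of the original steps.

```shell
#!/bin/bash
# add_host_entry FILE IP NAME (hypothetical helper):
# append "IP NAME" to FILE only when that exact line is not already present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

It could be called as `add_host_entry /etc/hosts 192.168.200.40 controller` and `add_host_entry /etc/hosts 192.168.200.50 compute`.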

Time settings

Enable NTP time synchronization

timedatectl set-ntp true

Set the time zone to Shanghai

timedatectl set-timezone Asia/Shanghai

Write the system time to the hardware clock

hwclock --systohc

Configure the offline environment#

Extract the archive

tar zxvf openstackyoga.tar.gz -C /opt/

Back up the original file

cp /etc/apt/sources.list{,.bak}

Configure the offline source

cat > /etc/apt/sources.list << EOF

deb [trusted=yes] file:// /opt/openstackyoga/debs/

EOF

Clear the cache

apt clean

Load the source

apt update

Time synchronization (both nodes)#

Install the package

apt install chrony -y

Configuration file on the controller node

vim /etc/chrony/chrony.conf

server controller iburst maxsources 2

allow all

local stratum 10

Configuration file on the compute node

vim /etc/chrony/chrony.conf

pool controller iburst maxsources 4

Restart the service

systemctl restart chronyd

Test

chronyc sources
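
In `chronyc sources` output, the line for the currently selected source starts with `^*`. As a rough sketch, that check can be scripted; `has_synced_source` is a hypothetical helper name, and it only inspects text passed on stdin.

```shell
#!/bin/bash
# has_synced_source (hypothetical helper): read `chronyc sources` output on stdin
# and succeed when some line starts with "^*", the marker of the selected source.
has_synced_source() {
    grep -q '^\^\*'
}
```

On the compute node it might be used as `chronyc sources | has_synced_source && echo "time is synchronized"`.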

Install the OpenStack client#

controller node

apt install -y python3-openstackclient

Database service#

apt install -y mariadb-server python3-pymysql

Write the MariaDB configuration file

cat > /etc/mysql/mariadb.conf.d/99-openstack.cnf << EOF

[mysqld]

bind-address = 0.0.0.0

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

EOF

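Restart the service

service mysql restart

Initialize the database

mysql_secure_installation

Enter the current database password: press Enter

Switch to unix_socket authentication (the root account is already protected): n

Set a root password: n

Remove anonymous users: y

Disallow remote root login: n

Remove the test database: y

Reload the privilege tables: y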

Message queue service#

controller node

apt install -y rabbitmq-server

Create the openstack user

Username: openstack

Password: openstackhuhy

rabbitmqctl add_user openstack openstackhuhy

Grant the openstack user configure, write, and read permissions

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Cache service#

controller node

apt install -y memcached python3-memcache

Configure the listen address

vim /etc/memcached.conf

-l 0.0.0.0

Restart the service

service memcached restart

Keystone service deployment#

controller node

mysql -uroot -p000000

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'keystonehuhy';
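
The same CREATE DATABASE / GRANT pair recurs for glance, placement, nova, and neutron later on. A small sketch that prints the SQL for any service is shown below; the function name `service_db_sql` is made up for illustration, and `IF NOT EXISTS` is an addition that the original statements do not use.

```shell
#!/bin/bash
# service_db_sql DB USER PASSWORD (hypothetical helper):
# print the SQL that creates DB and grants USER full access from any host.
service_db_sql() {
    local db="$1" user="$2" pass="$3"
    # IF NOT EXISTS is an assumption added here so the statement can be re-run
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    # %% prints a literal percent sign (the MariaDB wildcard host)
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
}
```

The output can be reviewed first and then piped into the client, for example `service_db_sql glance glance glancehuhy | mysql -uroot -p000000`.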

Install the service

apt install -y keystone

Configure the keystone file

Back up the file

cp /etc/keystone/keystone.conf{,.bak}

Strip comments and blank lines, then overwrite the file

grep -Ev "^$|#" /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf
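
Note that the pattern `^$|#` drops every line that contains a `#` anywhere, not only comment lines, so a value containing `#` (a password, for instance) would be silently lost. A hedged alternative is sketched below, assuming comments in these files always begin the line, optionally after whitespace; `strip_comments` is a hypothetical name.

```shell
#!/bin/bash
# strip_comments SRC (hypothetical helper): print SRC without blank lines and
# without lines whose first non-blank character is '#'.
# Lines that merely contain '#' inside a value are kept.
strip_comments() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

For example: `strip_comments /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf`.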

Configure

vim /etc/keystone/keystone.conf

The file is overwritten wholesale, which keeps it concise and easier to troubleshoot

[DEFAULT]

log_dir = /var/log/keystone

[application_credential]

[assignment]

[auth]

[cache]

[catalog]

[cors]

[credential]

[database]

connection = mysql+pymysql://keystone:keystonehuhy@controller/keystone

[domain_config]

[endpoint_filter]

[endpoint_policy]

[eventlet_server]

[extra_headers]

Distribution = Ubuntu

[federation]

[fernet_receipts]

[fernet_tokens]

[healthcheck]

[identity]

[identity_mapping]

[jwt_tokens]

[ldap]

[memcache]

[oauth1]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[policy]

[profiler]

[receipt]

[resource]

[revoke]

[role]

[saml]

[security_compliance]

[shadow_users]

[token]

provider = fernet

[tokenless_auth]

[totp]

[trust]

[unified_limit]

[wsgi]

Populate the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet and credential key repositories for the keystone user and group

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

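Before the Queens release, keystone had to run on two separate ports to accommodate the Identity v2 API, which usually ran a separate admin-only service on port 35357. With the v2 API removed, keystone can run on the same port, 5000, for all interfaces.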

keystone-manage bootstrap --bootstrap-password 000000 --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

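Edit /etc/apache2/apache2.conf and set the ServerName option to reference the controller node

echo "ServerName controller" >> /etc/apache2/apache2.conf

Restart the Apache service to apply the configuration

service apache2 restart

Configure the OpenStack authentication environment variables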

cat > /etc/keystone/admin-openrc.sh << EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=000000

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

Load the environment variables

source /etc/keystone/admin-openrc.sh
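
Most `openstack` commands in the rest of this guide fail with authentication errors if the file has not been sourced in the current shell. A minimal sanity check might look like this; `check_openrc` is a hypothetical helper, and the variable list simply mirrors the file written above.

```shell
#!/bin/bash
# check_openrc (hypothetical helper): report every OS_* variable from
# admin-openrc.sh that is unset or empty; return non-zero if any is missing.
check_openrc() {
    local var val missing=0
    for var in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
               OS_USERNAME OS_PASSWORD OS_AUTH_URL \
               OS_IDENTITY_API_VERSION OS_IMAGE_API_VERSION; do
        # Look up the value of the variable whose name is stored in $var
        eval "val=\${$var:-}"
        if [ -z "$val" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Running `check_openrc && openstack token issue` is one way to use it.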

Create the service project; later components will use this project

openstack project create --domain default --description "Service Project" service

Verify

openstack token issue

Glance service deployment#

Enter the database

mysql -uroot -p000000

Create the database

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'glancehuhy';

Create the user and grant the role

openstack user create --domain default --password glance glance; \

openstack role add --project service --user glance admin; \

openstack service create --name glance --description "OpenStack Image" image

Create the Image service API endpoints

openstack endpoint create --region RegionOne image public http://controller:9292; \

openstack endpoint create --region RegionOne image internal http://controller:9292; \

openstack endpoint create --region RegionOne image admin http://controller:9292
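
Every service in this guide registers the same URL three times, once per interface (public, internal, admin). The loop below is a sketch that only prints the commands so they can be reviewed before running; `endpoint_commands` is a hypothetical helper name.

```shell
#!/bin/bash
# endpoint_commands SERVICE_TYPE URL (hypothetical helper):
# print one `openstack endpoint create` command per interface.
endpoint_commands() {
    local service_type="$1" url="$2" iface
    for iface in public internal admin; do
        echo "openstack endpoint create --region RegionOne $service_type $iface $url"
    done
}
```

For example, `endpoint_commands placement http://controller:8778` prints the three commands used in the placement section.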

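Install the glance image service

apt install -y glance

Configure the glance configuration file

Back up the configuration file

cp /etc/glance/glance-api.conf{,.bak}

Strip comments and blank lines, then overwrite the configuration file

grep -Ev "^$|#" /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

Configuration file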

vim /etc/glance/glance-api.conf

[DEFAULT]

[barbican]

[barbican_service_user]

[cinder]

[cors]

[database]

connection = mysql+pymysql://glance:glancehuhy@controller/glance

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[image_format]

disk_formats = ami,ari,aki,vhd,vhdx,vmdk,raw,qcow2,vdi,iso,ploop.root-tar

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = glance

[paste_deploy]

flavor = keystone

Populate the database

su -s /bin/sh -c "glance-manage db_sync" glance

Restart the glance service to apply the configuration

service glance-api restart

Placement service deployment#

Enter the database

mysql -uroot -p000000

Create the database

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'placementhuhy';

Create the user and grant the role

openstack user create --domain default --password placement placement; \

openstack role add --project service --user placement admin; \

openstack service create --name placement --description "Placement API" placement

Create the Placement API service endpoints

openstack endpoint create --region RegionOne placement public http://controller:8778; \

openstack endpoint create --region RegionOne placement internal http://controller:8778; \

openstack endpoint create --region RegionOne placement admin http://controller:8778

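Install the placement service

apt install -y placement-api

Configure the placement file

Back up the configuration file

cp /etc/placement/placement.conf{,.bak}

Strip comments and blank lines, then overwrite the file

grep -Ev "^$|#" /etc/placement/placement.conf.bak > /etc/placement/placement.conf

Configuration file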

vim /etc/placement/placement.conf

[DEFAULT]

[api]

auth_strategy = keystone

[cors]

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = placement

[placement_database]

connection = mysql+pymysql://placement:placementhuhy@controller/placement

Populate the database

su -s /bin/sh -c "placement-manage db sync" placement

Restart the service

service apache2 restart

Verify

root@controller:~# placement-status upgrade check

+-------------------------------------------+

| Upgrade Check Results |

+-------------------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Policy File JSON to YAML Migration |

| Result: Success |

| Details: None |

+-------------------------------------------+

root@controller:~#

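Nova service deployment#

controller#

Create the databases and the user for nova

Enter the database

mysql -uroot -p000000

Stores nova API interaction data

CREATE DATABASE nova_api;

Stores nova resource data

CREATE DATABASE nova;

Stores nova metadata

CREATE DATABASE nova_cell0;

Create the user that manages the nova_api database

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'novahuhy';

Create the user that manages the nova database

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'novahuhy';

Create the user that manages the nova_cell0 database

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'novahuhy';

Create the nova entity and grant the user its role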

openstack user create --domain default --password nova nova; \

openstack role add --project service --user nova admin; \

openstack service create --name nova --description "OpenStack Compute" compute

Create the Compute API service endpoints

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1; \

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1; \

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

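Install the nova services

apt install -y nova-api nova-conductor nova-novncproxy nova-scheduler

Configure the nova file

Back up the configuration file

cp /etc/nova/nova.conf{,.bak}

Strip comments and blank lines from the file

grep -Ev "^$|#" /etc/nova/nova.conf.bak > /etc/nova/nova.conf

Configuration file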

vim /etc/nova/nova.conf

[DEFAULT]

log_dir = /var/log/nova

lock_path = /var/lock/nova

state_path = /var/lib/nova

transport_url = rabbit://openstack:openstackhuhy@controller:5672/

my_ip = 192.168.200.40

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:novahuhy@controller/nova_api

[barbican]

[barbican_service_user]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[cyborg]

[database]

connection = mysql+pymysql://nova:novahuhy@controller/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[image_cache]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = placement

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[workarounds]

[wsgi]

[zvm]

[cells]

enable = False

[os_region_name]

openstack =

Populate the nova_api database

su -s /bin/sh -c "nova-manage api_db sync" nova

Register the cell0 database

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

Populate the nova database

su -s /bin/sh -c "nova-manage db sync" nova

Verify that nova, cell0, and cell1 are registered correctly

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

Restart the services

cat > /root/nova-restart.sh <<EOF

#!/bin/bash

# API service

service nova-api restart

# Scheduler service

service nova-scheduler restart

# Conductor service (database access)

service nova-conductor restart

# VNC remote console proxy service

service nova-novncproxy restart

EOF

bash /root/nova-restart.sh

compute#

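Install the nova-compute service

apt install -y nova-compute

Configure the nova file

Back up the configuration file

cp /etc/nova/nova.conf{,.bak}

Strip comments and blank lines, then overwrite the configuration file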

grep -Ev "^$|#" /etc/nova/nova.conf.bak > /etc/nova/nova.conf

vi /etc/nova/nova.conf

[DEFAULT]

log_dir = /var/log/nova

lock_path = /var/lock/nova

state_path = /var/lib/nova

transport_url = rabbit://openstack:openstackhuhy@controller

my_ip = 192.168.200.50

[api]

auth_strategy = keystone

[api_database]

[barbican]

[barbican_service_user]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[cyborg]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[image_cache]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = placement

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://192.168.200.40:6080/vnc_auto.html

[workarounds]

[wsgi]

[zvm]

[cells]

enable = False

[os_region_name]

openstack =

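Check whether hardware acceleration is supported

# Determine whether the compute node supports hardware acceleration for virtual machines

egrep -c '(vmx|svm)' /proc/cpuinfo

# If the result is "0", configure the following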

vim /etc/nova/nova-compute.conf

[libvirt]

virt_type = qemu
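
The decision above can be sketched as a small function. This is only an illustration: `pick_virt_type` is a hypothetical name, and the optional file argument exists so the logic can be tried against a saved copy of /proc/cpuinfo.

```shell
#!/bin/bash
# pick_virt_type [CPUINFO] (hypothetical helper): print "kvm" when the CPU flags
# include vmx (Intel) or svm (AMD), otherwise print "qemu".
pick_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}" count
    # grep -c exits non-zero when the count is 0, hence the "|| true"
    count=$(grep -Ec '(vmx|svm)' "$cpuinfo" || true)
    if [ "$count" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}
```

Inside a VMware guest without nested virtualization enabled, this would typically print `qemu`, which matches the `virt_type = qemu` setting shown above.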

Restart the service to apply the nova configuration

service nova-compute restart

Run host discovery on the controller

List the available compute nodes

openstack compute service list --service nova-compute

Discover the compute hosts

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Configure host discovery to run every 5 minutes

vim /etc/nova/nova.conf

'''

[scheduler]

discover_hosts_in_cells_interval = 300

'''

Verify the nova services

root@controller:~# openstack compute service list

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| 3b7fba5d-52e7-45af-a399-bdd20dcd95cd | nova-scheduler | controller | internal | enabled | up | 2022-10-23T06:54:56.000000 |

| f9d3c86c-16a8-42fe-9abb-d8290cf61f9b | nova-conductor | controller | internal | enabled | up | 2022-10-23T06:54:56.000000 |

| bd9c8e22-bd5f-4319-868f-c33ca5ddc586 | nova-compute | compute | nova | enabled | up | 2022-10-23T06:54:53.000000 |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

root@controller:~#
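
Every row in the table above should show `up` in the State column. As a sketch, the table can be scanned for rows that are not up; `down_services` is a hypothetical helper that only parses the table text piped into it.

```shell
#!/bin/bash
# down_services (hypothetical helper): read `openstack compute service list`
# table output on stdin and print "Binary Host" for every row whose State
# column is not "up". Border lines and the header row are skipped.
down_services() {
    awk -F'|' 'NF >= 8 && $2 !~ /^ *ID *$/ {
        gsub(/ /, "", $3); gsub(/ /, "", $4); gsub(/ /, "", $7)
        if ($7 != "up") print $3, $4
    }'
}
```

Usage: `openstack compute service list | down_services`. Empty output means every listed service reported `up`.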

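Neutron service deployment#

controller node#

Configure the OVS-based Neutron networking service

Create the database and the user for neutron

Enter the database

mysql -uroot -p000000

Create the database

CREATE DATABASE neutron;

Create the user

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutronhuhy';

Create the neutron entity and grant the user its role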

openstack user create --domain default --password neutron neutron; \

openstack role add --project service --user neutron admin; \

openstack service create --name neutron --description "OpenStack Networking" network

Create the neutron API endpoints

openstack endpoint create --region RegionOne network public http://controller:9696; \

openstack endpoint create --region RegionOne network internal http://controller:9696; \

openstack endpoint create --region RegionOne network admin http://controller:9696

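Configure kernel parameters

cat >> /etc/sysctl.conf << EOF

# Disable reverse-path filtering (source address validation of incoming packets)

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

# Pass bridged (layer 2) traffic through iptables

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

EOF

modprobe br_netfilter

sysctl -p

Install the neutron services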

apt install -y neutron-server neutron-plugin-ml2 neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent neutron-openvswitch-agent

Configure the neutron.conf file#

Back up the configuration file

cp /etc/neutron/neutron.conf{,.bak}

Strip comments and blank lines from the configuration file

grep -Ev "^$|#" /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

auth_strategy = keystone

state_path = /var/lib/neutron

dhcp_agent_notification = true

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

transport_url = rabbit://openstack:openstackhuhy@controller

[agent]

root_helper = "sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf"

[database]

connection = mysql+pymysql://neutron:neutronhuhy@controller/neutron

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure the ml2_conf.ini file#

Back up the configuration file

cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

Strip comments and blank lines, then overwrite the file

grep -Ev "^$|#" /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan,vxlan,gre

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = physnet1

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

[ml2_type_vxlan]

vni_ranges = 1:1000

[ovs_driver]

[securitygroup]

enable_ipset = true

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[sriov_driver]

Configure the openvswitch_agent.ini file#

Back up the file

cp /etc/neutron/plugins/ml2/openvswitch_agent.ini{,.bak}

Strip comments and blank lines, then overwrite the file

grep -Ev "^$|#" /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak > /etc/neutron/plugins/ml2/openvswitch_agent.ini

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

[DEFAULT]

[agent]

l2_population = True

tunnel_types = vxlan

prevent_arp_spoofing = True

[dhcp]

[network_log]

[ovs]

local_ip = 192.168.200.40

bridge_mappings = physnet1:br-ens38

[securitygroup]

Configure the l3_agent.ini file#

Back up the file

cp /etc/neutron/l3_agent.ini{,.bak}

Strip comments and blank lines, then overwrite the file

grep -Ev "^$|#" /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

vim /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

external_network_bridge =

[agent]

[network_log]

[ovs]

Configure the dhcp_agent.ini file#

Back up the file

cp /etc/neutron/dhcp_agent.ini{,.bak}

Strip comments and blank lines, then overwrite the file

grep -Ev "^$|#" /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

[agent]

[ovs]

Configure the metadata_agent.ini file#

Back up the file

cp /etc/neutron/metadata_agent.ini{,.bak}

Strip comments and blank lines, then overwrite the file

grep -Ev "^$|#" /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = huhy

[agent]

[cache]

Configure the nova file#

vim /etc/nova/nova.conf

'''

[DEFAULT]

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = true

metadata_proxy_shared_secret = huhy

'''
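
The `metadata_proxy_shared_secret` in the `[neutron]` section of nova.conf has to match the value in metadata_agent.ini, otherwise metadata requests from instances are rejected. A rough way to compare the two is sketched below; `ini_get` is a hypothetical helper, not an OpenStack tool, and it assumes simple `key = value` lines.

```shell
#!/bin/bash
# ini_get FILE SECTION KEY (hypothetical helper):
# print the value of KEY inside [SECTION] of an INI-style file.
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        # A section header switches the "inside the wanted section" flag
        /^\[/ { in_section = ($0 == section); next }
        in_section {
            split($0, kv, "=")
            k = kv[1]; gsub(/^[ \t]+|[ \t]+$/, "", k)
            if (k == key) {
                # Everything after the first "=" is the value
                v = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", v)
                print v
                exit
            }
        }
    ' "$1"
}
```

For example, the outputs of `ini_get /etc/nova/nova.conf neutron metadata_proxy_shared_secret` and `ini_get /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret` should be identical.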

Populate the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the nova-api service to apply the neutron configuration

service nova-api restart

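Create an external network bridge

ovs-vsctl add-br br-ens38

Attach the external network bridge to the network interface

The second NIC, which carries tenant traffic, is bound here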

ovs-vsctl add-port br-ens38 ens38

Restart the neutron services to apply the configuration

cat > neutron-restart.sh <<EOF

#!/bin/bash

# Neutron server

service neutron-server restart

# OVS agent

service neutron-openvswitch-agent restart

# DHCP agent (dynamic address assignment)

service neutron-dhcp-agent restart

# Metadata agent

service neutron-metadata-agent restart

# Layer 3 agent

service neutron-l3-agent restart

EOF

bash neutron-restart.sh

compute#

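Configure kernel forwarding

cat >> /etc/sysctl.conf << EOF

# Disable reverse-path filtering (source address validation of incoming packets)

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

# Pass bridged (layer 2) traffic through iptables

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

EOF

modprobe br_netfilter

sysctl -p

Install the package

apt install -y neutron-openvswitch-agent

Configure the neutron file#

Back up the file

cp /etc/neutron/neutron.conf{,.bak}

Strip comments and blank lines from the file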

grep -Ev "^$|#" /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

auth_strategy = keystone

state_path = /var/lib/neutron

allow_overlapping_ips = true

transport_url = rabbit://openstack:openstackhuhy@controller

[agent]

root_helper = "sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf"

[cache]

[cors]

[database]

[healthcheck]

[ironic]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[nova]

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[placement]

[privsep]

[quotas]

[ssl]

Configure the openvswitch_agent.ini file#

Back up the file

cp /etc/neutron/plugins/ml2/openvswitch_agent.ini{,.bak}

Strip comments and blank lines from the file

grep -Ev "^$|#" /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak > /etc/neutron/plugins/ml2/openvswitch_agent.ini

Full configuration

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

[DEFAULT]

[agent]

l2_population = True

tunnel_types = vxlan

prevent_arp_spoofing = True

[dhcp]

[network_log]

[ovs]

local_ip = 192.168.200.50

bridge_mappings = physnet1:br-ens38

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Configure the nova file to use neutron#

vim /etc/nova/nova.conf

'''

[DEFAULT]

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

vif_plugging_is_fatal = true

vif_plugging_timeout = 300

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

'''

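Restart the nova service so that it picks up the network configuration

service nova-compute restart

Create an external network bridge

ovs-vsctl add-br br-ens38

Attach the external network bridge to the network interface

The second NIC, which carries tenant traffic, is bound here

ovs-vsctl add-port br-ens38 ens38

Restart the service to load the OVS configuration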

service neutron-openvswitch-agent restart

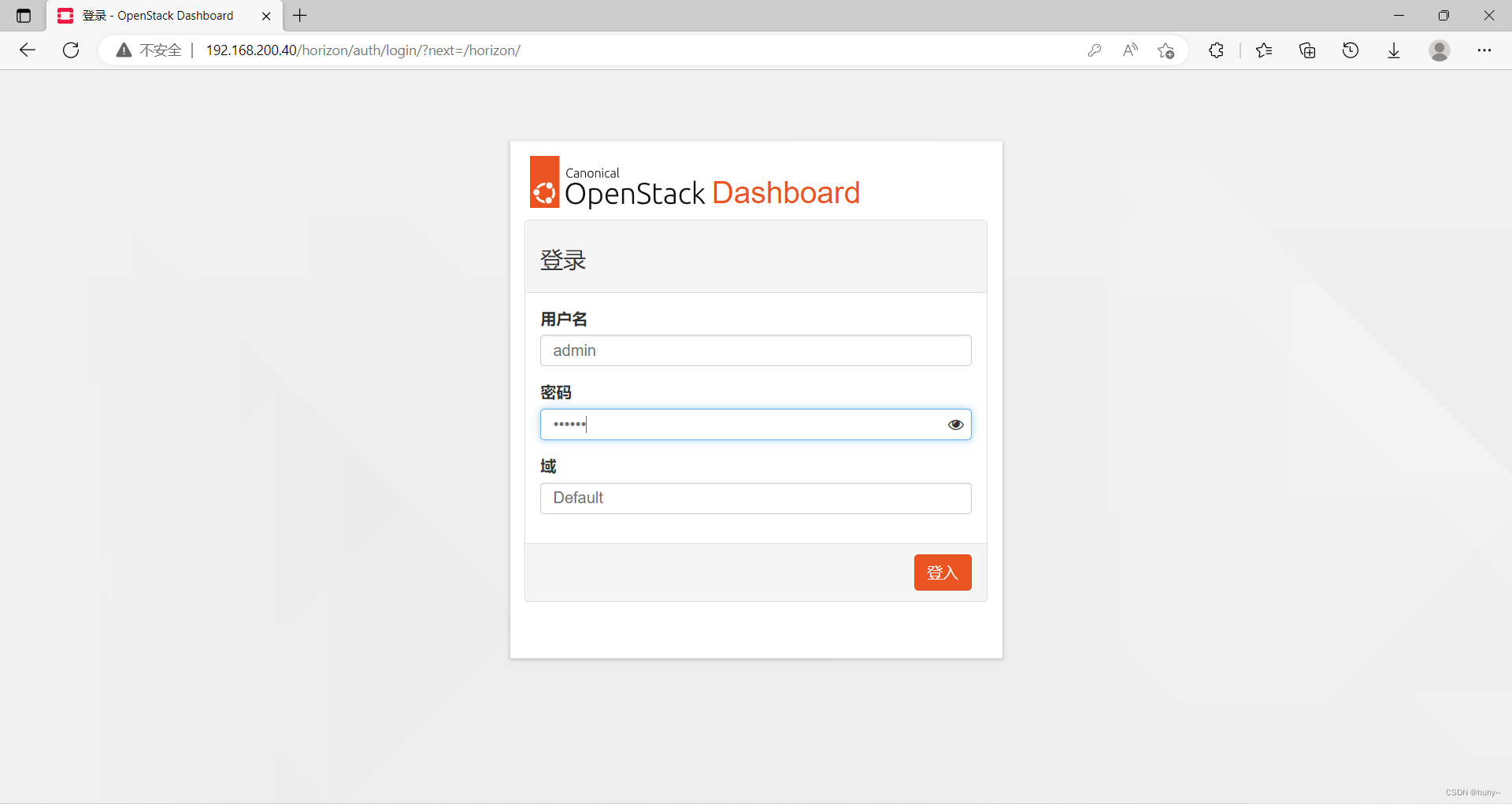

Dashboard service deployment#

controller node

Install the package

apt install -y openstack-dashboard

Configure the local_settings.py file#

vim /etc/openstack-dashboard/local_settings.py

'''

# Point the dashboard at the OpenStack services on the controller node
OPENSTACK_HOST = "controller"

# In the Dashboard configuration section, allow hosts to access the dashboard
ALLOWED_HOSTS = ["*"]

# Configure the memcached session storage service
SESSION_ENGINE = 'django.contrib.sessions.backends.cache'
CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    }
}

# Enable Identity API version 3
OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

# Enable support for domains
OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

# Configure the API versions
OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 3,
}

# Set Default as the default domain for users created through the dashboard
OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

# Set user as the default role for users created through the dashboard
OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

# Enable volume backups
OPENSTACK_CINDER_FEATURES = {
    'enable_backup': True,
}

# Configure the time zone
TIME_ZONE = "Asia/Shanghai"

'''

Reload the web service

systemctl reload apache2

Web access: http://controller/horizon

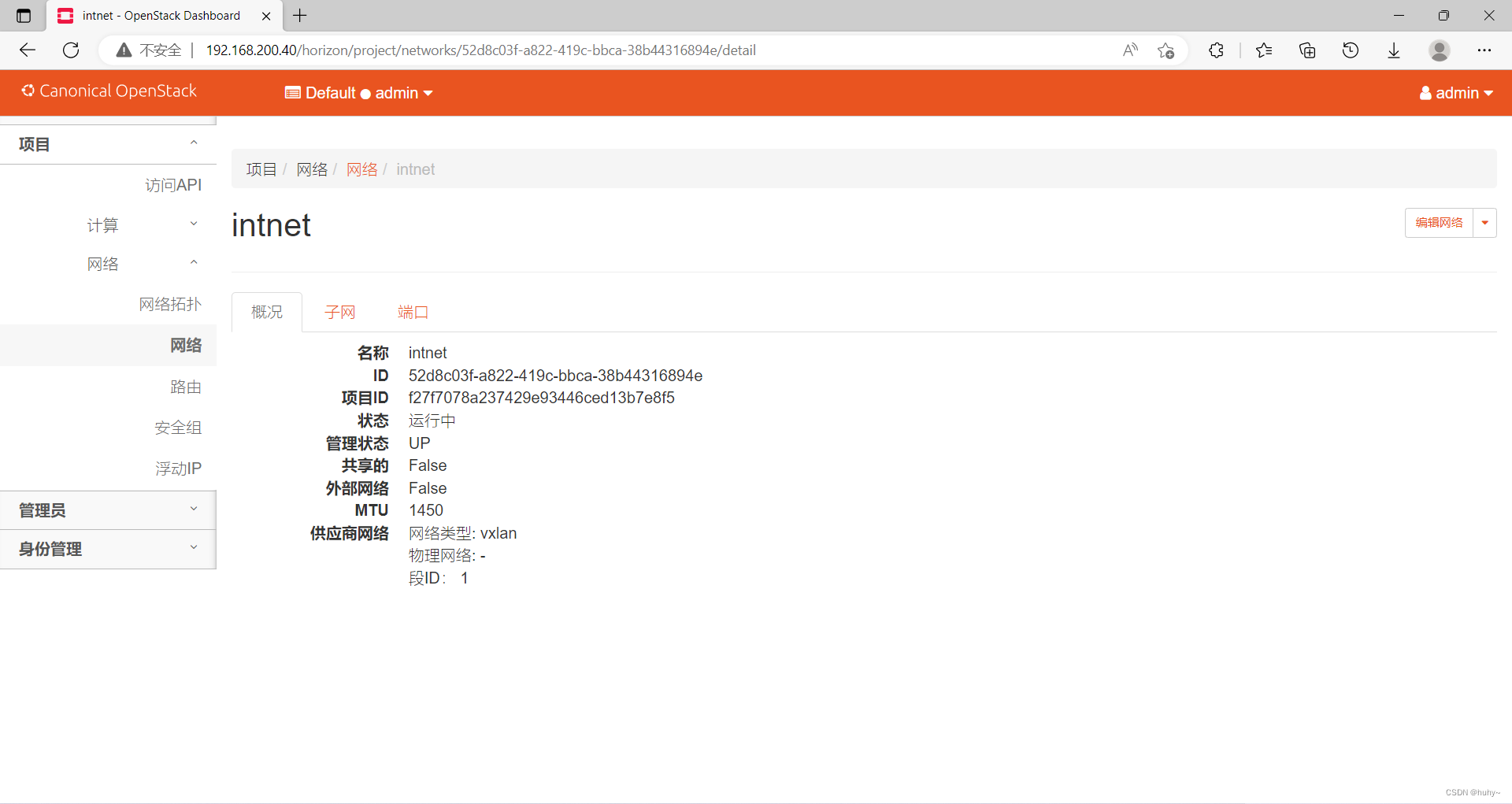

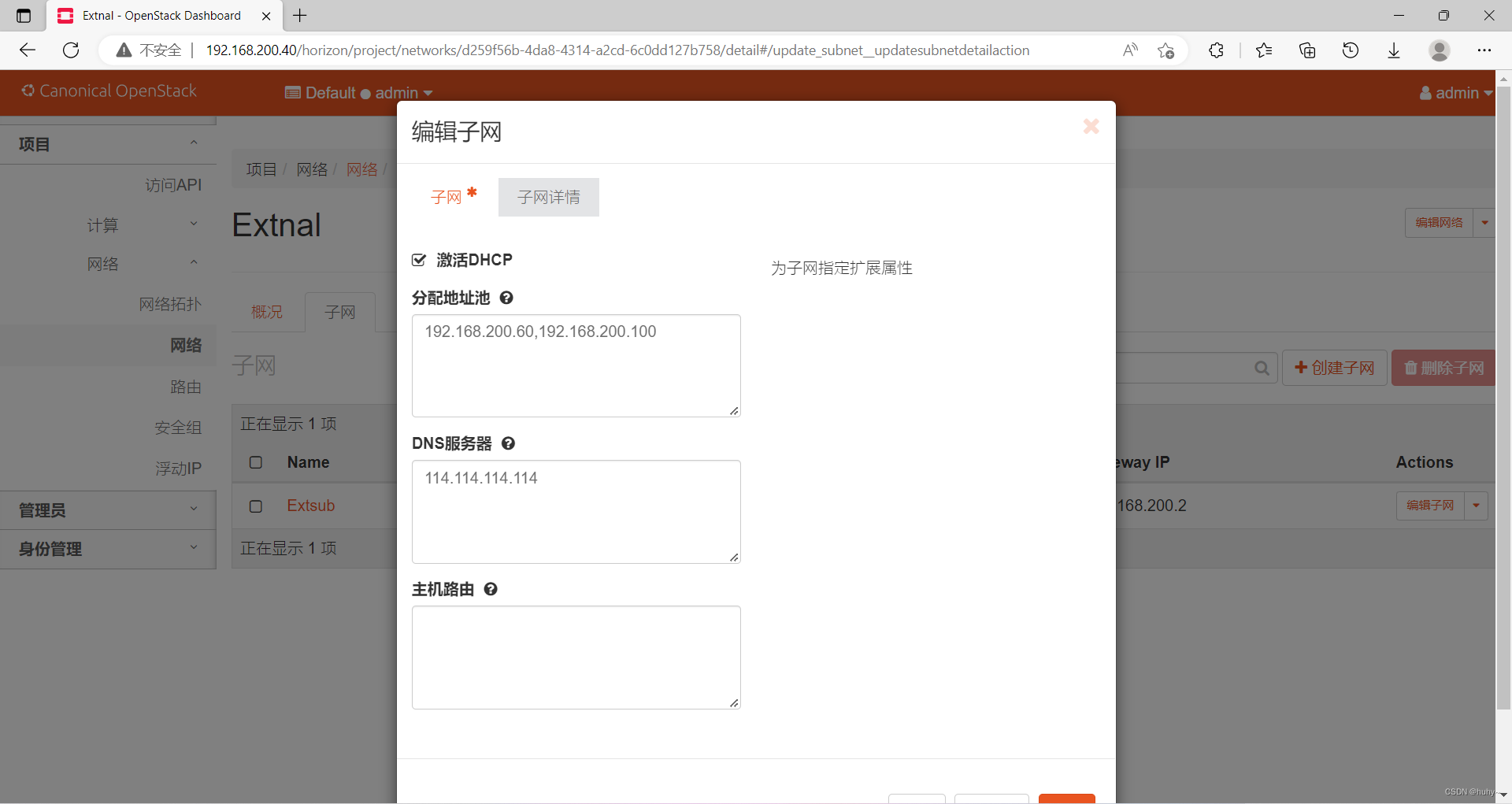

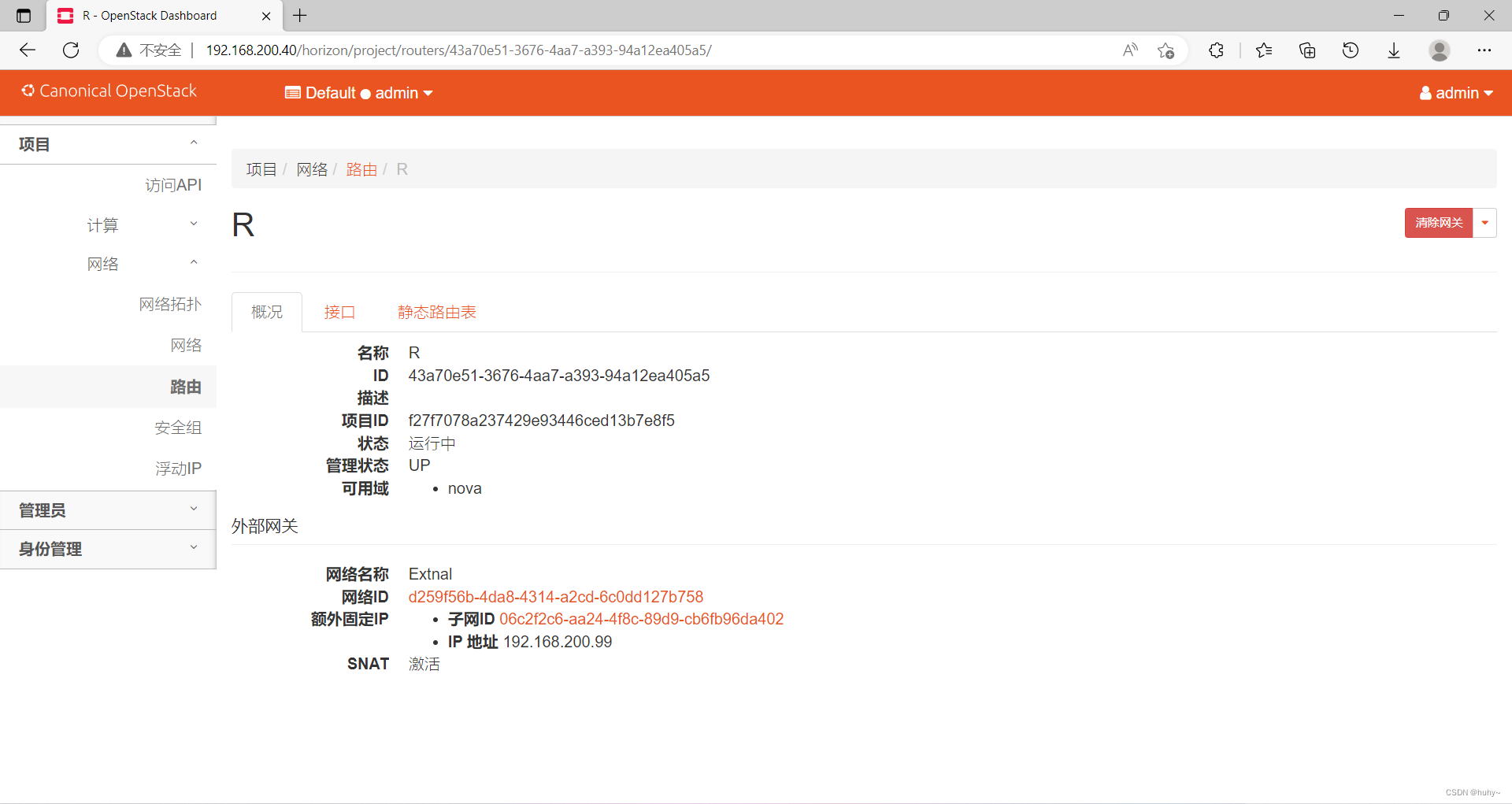

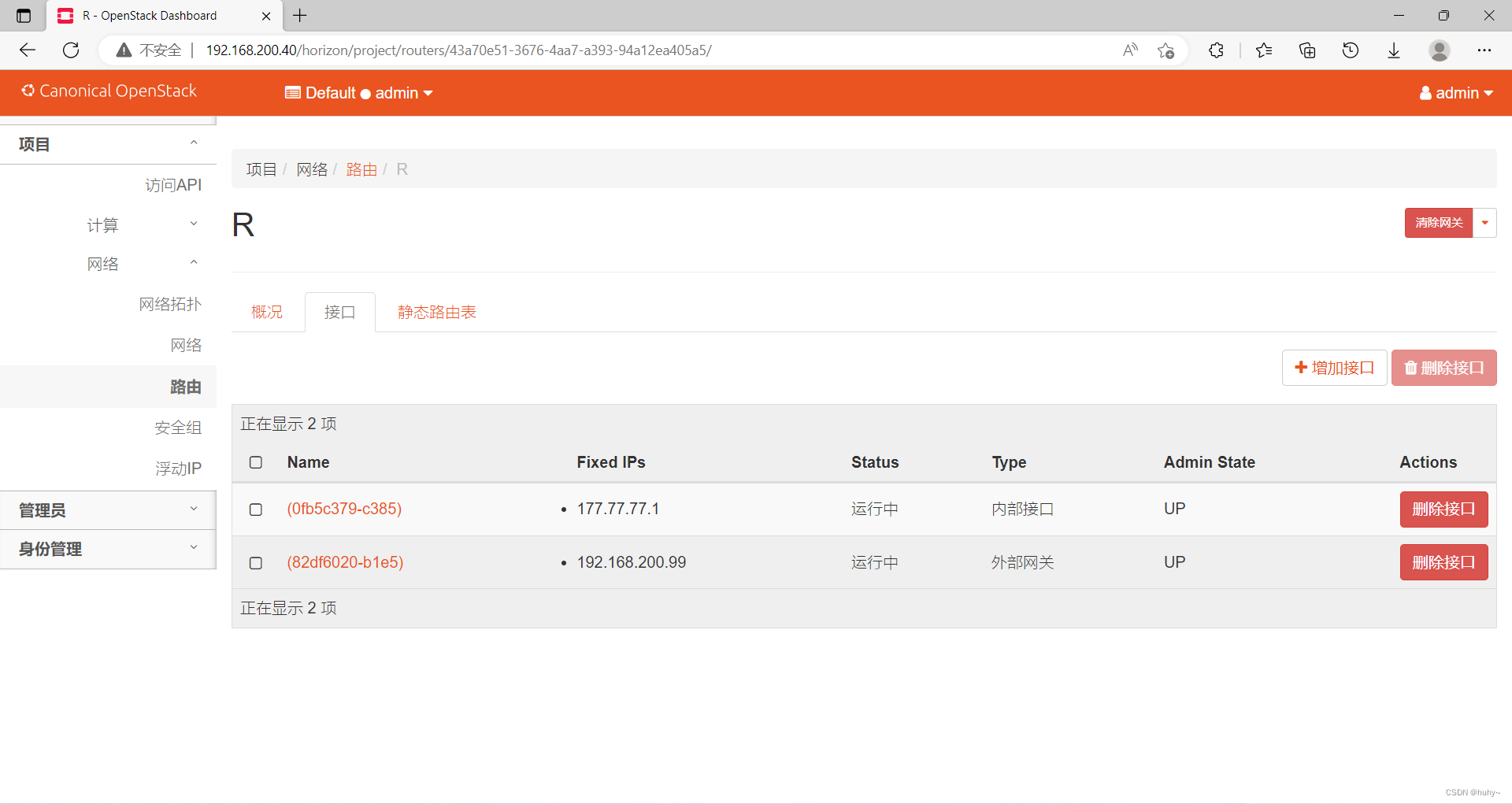

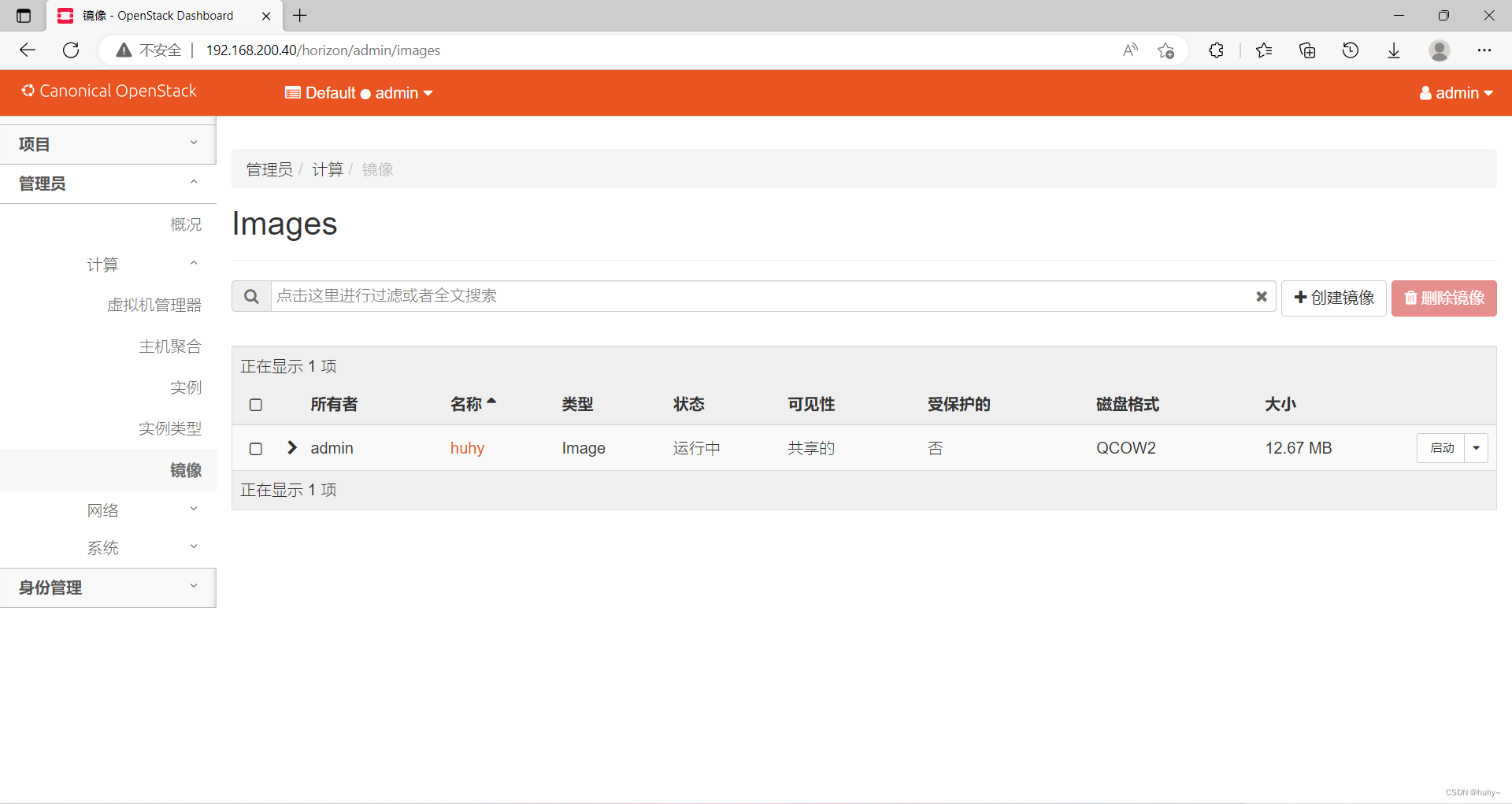

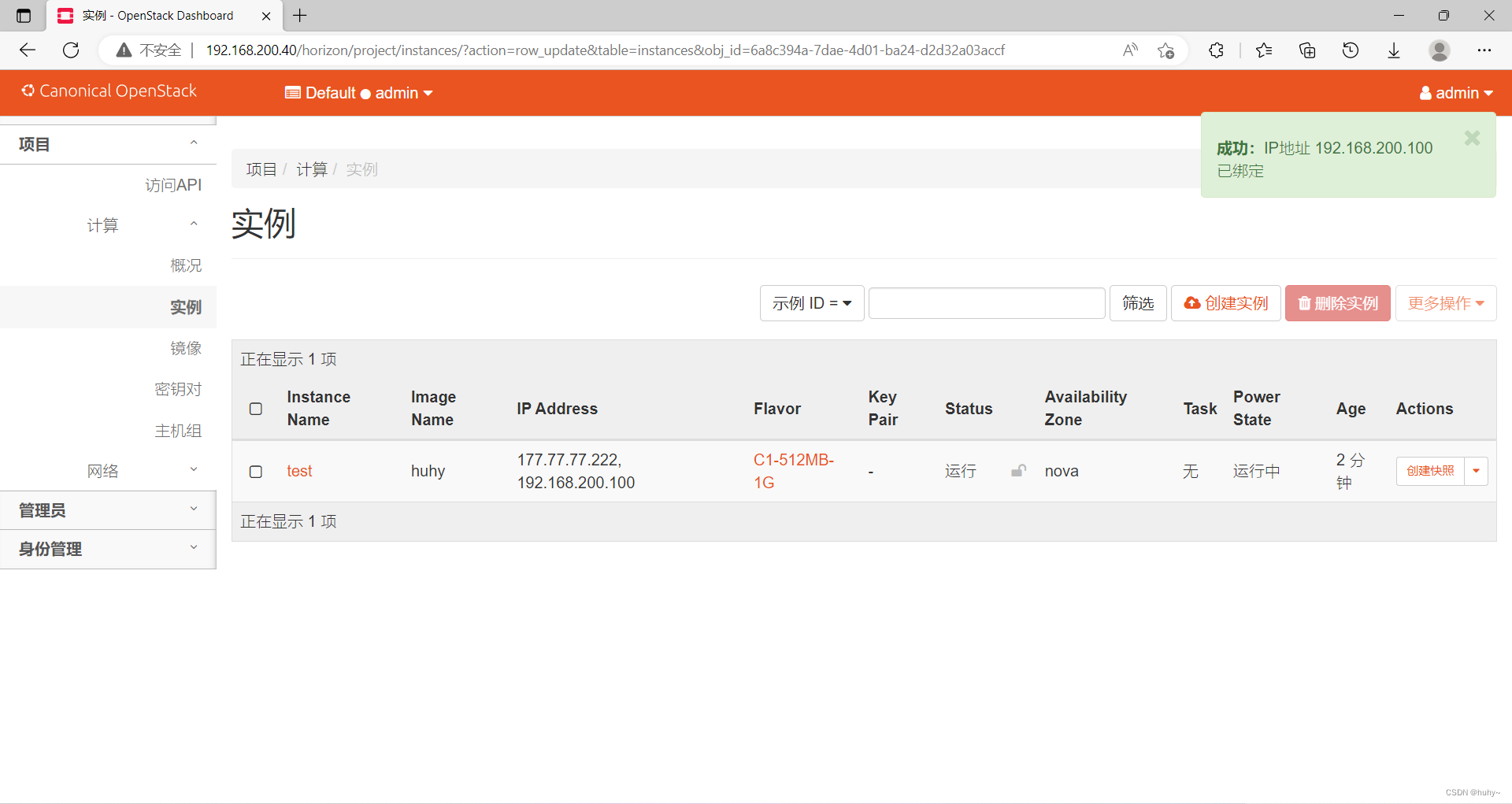

Create an instance (web UI)#

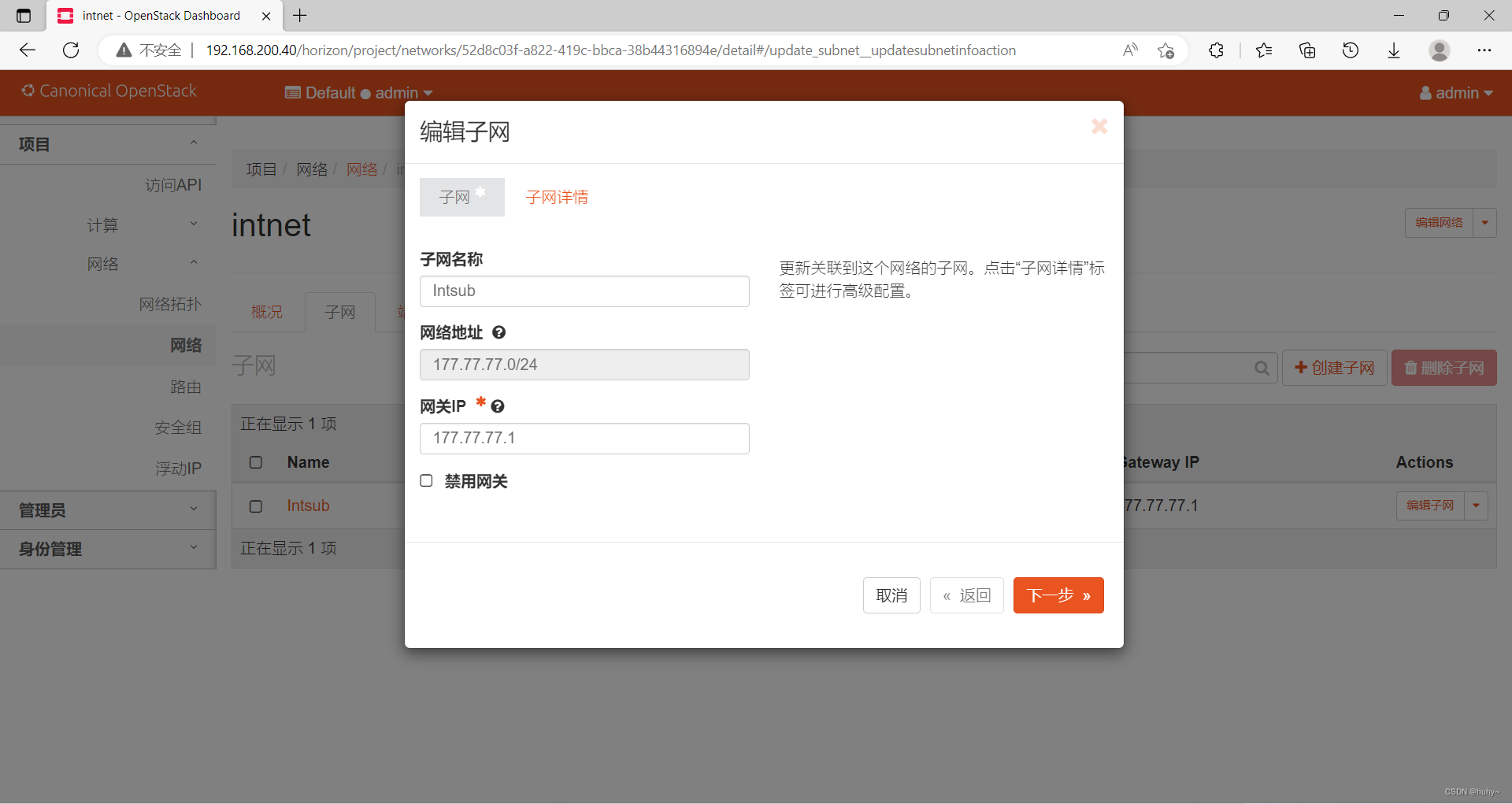

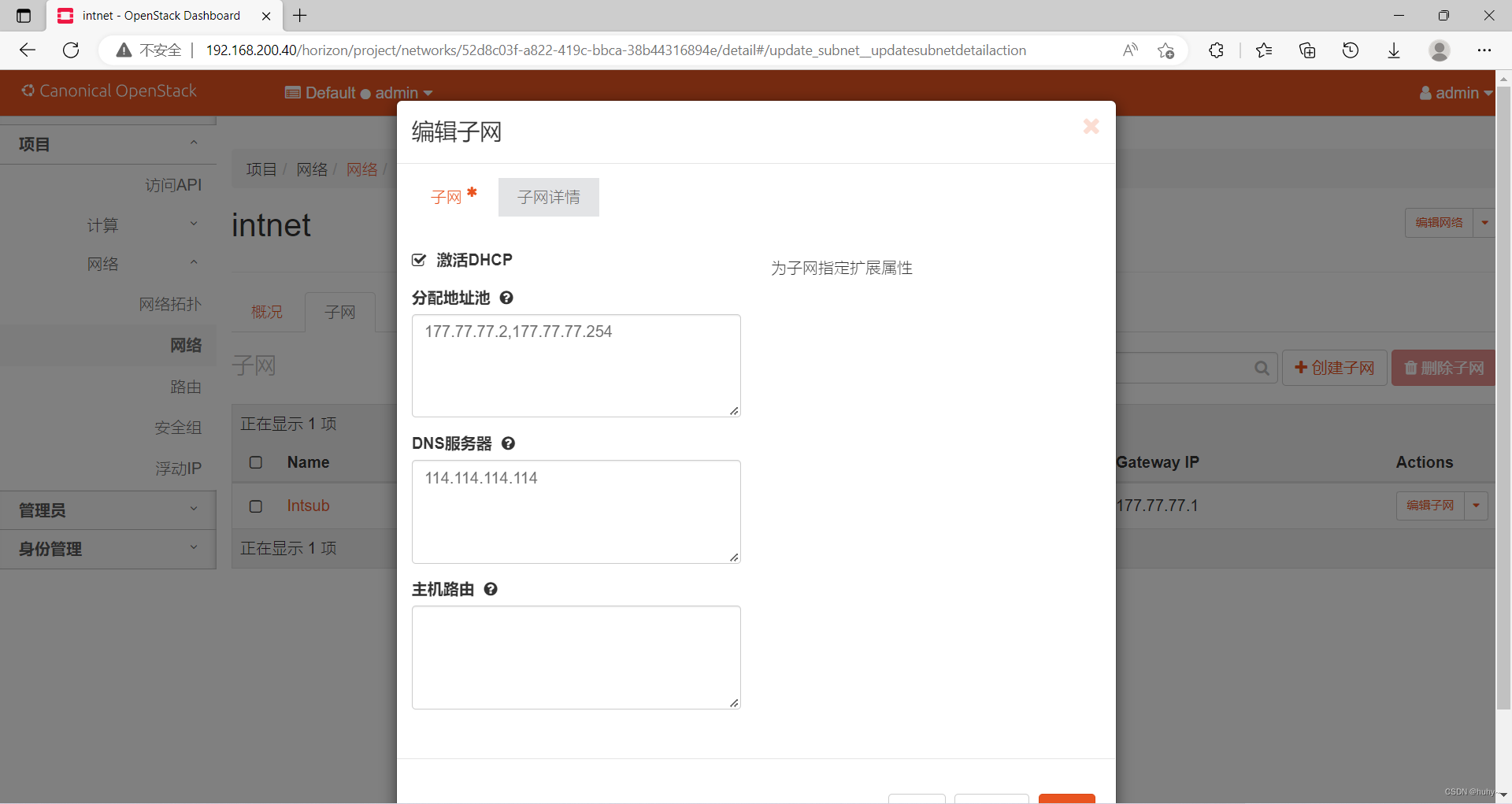

Create the internal VXLAN network. Any subnet range works, the gateway is optional, and the DNS server is 114.114.114.114

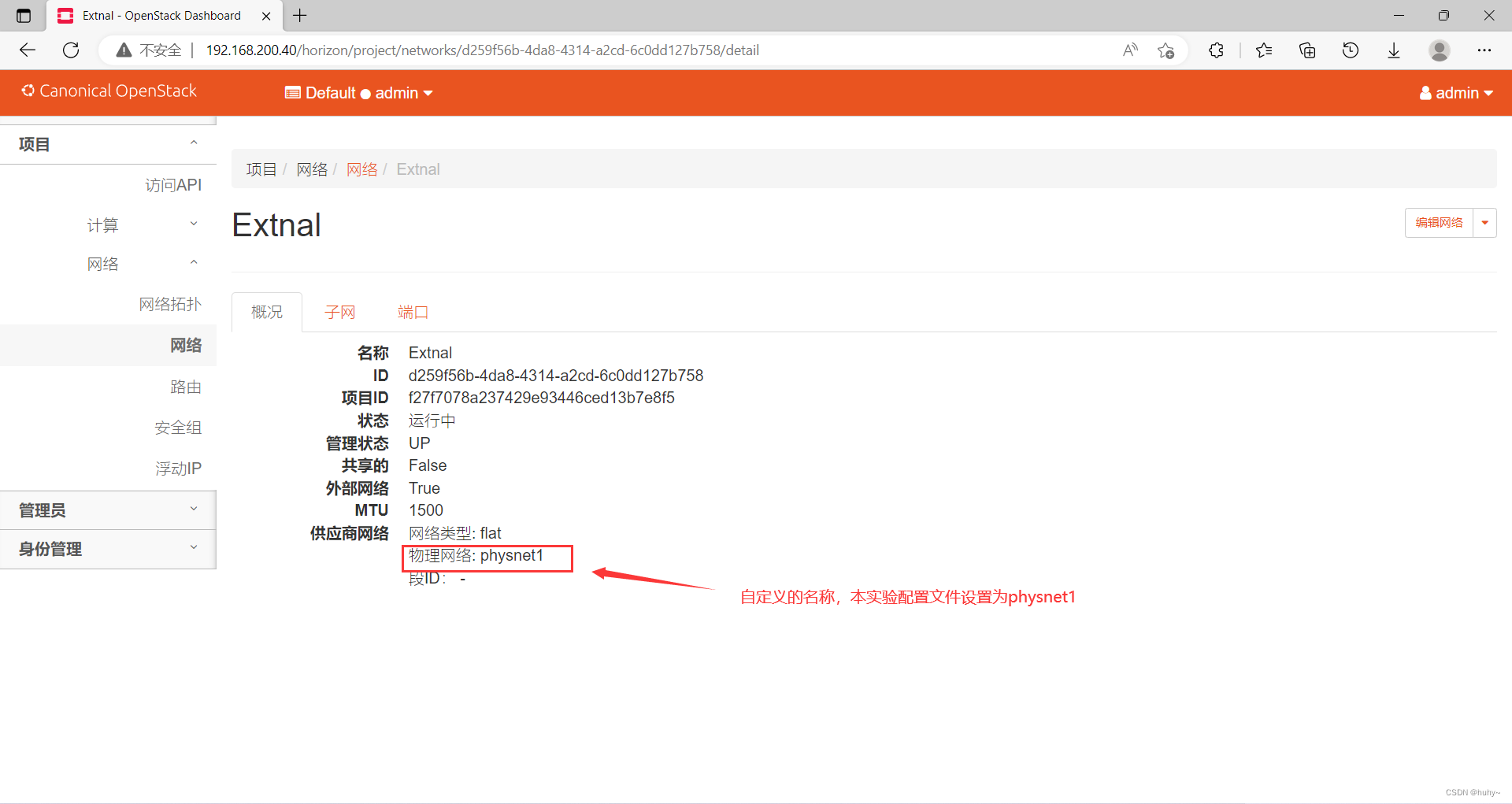

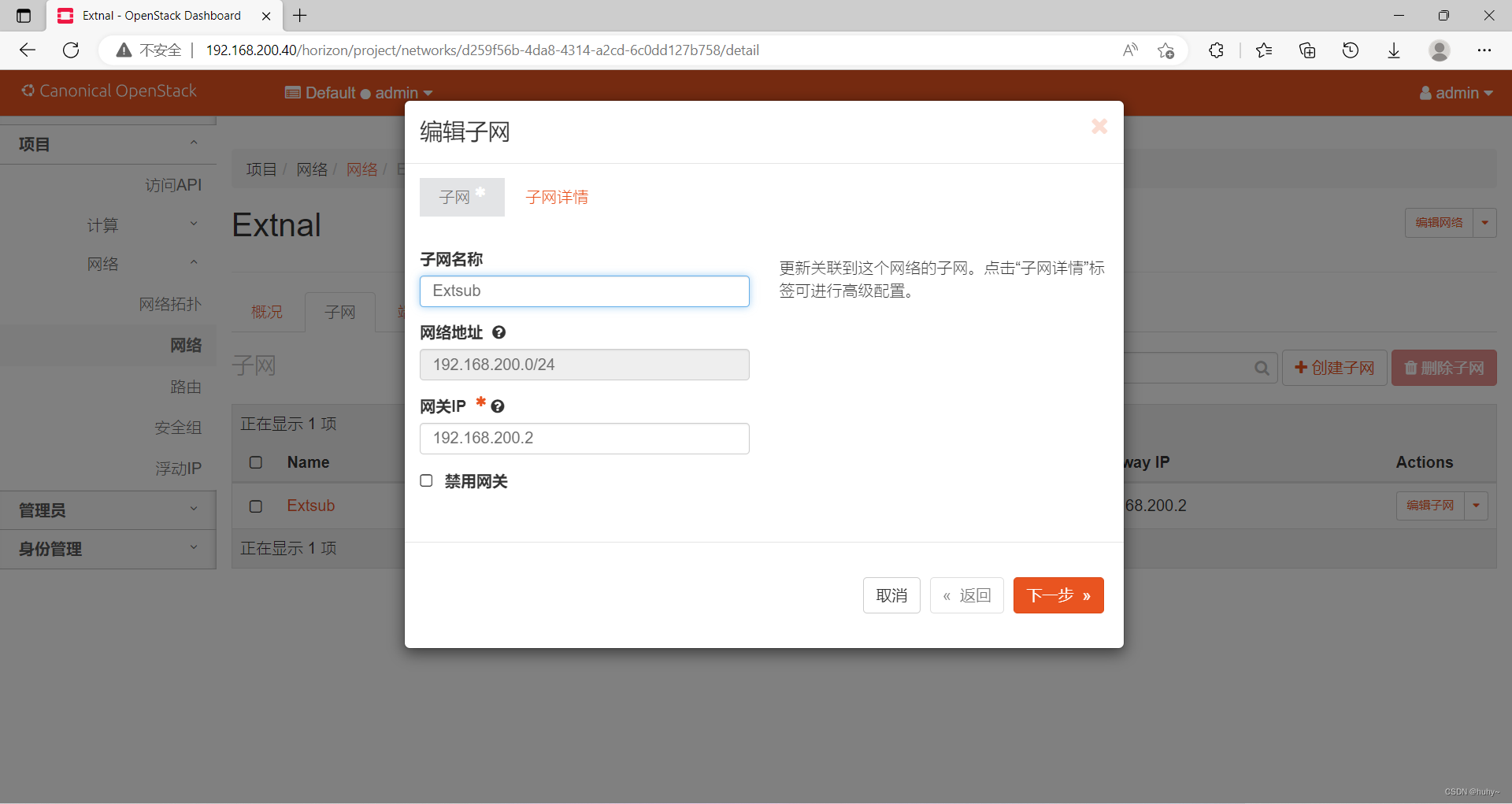

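Create the external network: type flat, subnet range set to the physical network's range, gateway set to the gateway on the physical network, DNS server 114.114.114.114

Configure the router

Create the cirros image

Create the flavor

Configure the security group

Create the instance

Associate a floating IP

Create an instance from the command line#

Load the OpenStack environment variables

source /etc/keystone/admin-openrc.sh

Create the router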

openstack router create Ext-Router

root@controller:~# openstack router create Ext-Router

+-------------------------+--------------------------------------+

| Field | Value |

+-------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2022-10-23T12:12:33Z |

| description | |

| distributed | False |

| external_gateway_info | null |

| flavor_id | None |

| ha | False |

| id | 867b0bcf-8759-4d15-8333-adfe9bbfbda6 |

| name | Ext-Router |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| revision_number | 1 |

| routes | |

| status | ACTIVE |

| tags | |

| updated_at | 2022-10-23T12:12:33Z |

+-------------------------+--------------------------------------+

root@controller:~#

Create the VXLAN internal network

openstack network create --internal --provider-network-type vxlan int-net

root@controller:~# openstack network create --internal --provider-network-type vxlan int-net

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2022-10-23T12:15:39Z |

| description | |

| dns_domain | None |

| id | 3ab6b9ab-8cdd-4c79-8e6d-aa3a4e45a03c |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| mtu | 1450 |

| name | int-net |

| port_security_enabled | True |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| provider:network_type | vxlan |

| provider:physical_network | None |

| provider:segmentation_id | 143 |

| qos_policy_id | None |

| revision_number | 1 |

| router:external | Internal |

| segments | None |

| shared | False |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2022-10-23T12:15:39Z |

+---------------------------+--------------------------------------+

root@controller:~#

Create the VXLAN subnet

openstack subnet create int-net-sub --network int-net --subnet-range 177.77.77.0/24 --gateway 177.77.77.1 --dns-nameserver 114.114.114.114

root@controller:~# openstack subnet create int-net-sub --network int-net --subnet-range 177.77.77.0/24 --gateway 177.77.77.1 --dns-nameserver 114.114.114.114

+----------------------+--------------------------------------+

| Field | Value |

+----------------------+--------------------------------------+

| allocation_pools | 177.77.77.2-177.77.77.254 |

| cidr | 177.77.77.0/24 |

| created_at | 2022-10-23T12:16:55Z |

| description | |

| dns_nameservers | 114.114.114.114 |

| dns_publish_fixed_ip | None |

| enable_dhcp | True |

| gateway_ip | 177.77.77.1 |

| host_routes | |

| id | 5eeeeae8-a3c0-4bb1-90bd-f09e6fd8d473 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | int-net-sub |

| network_id | 3ab6b9ab-8cdd-4c79-8e6d-aa3a4e45a03c |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2022-10-23T12:16:55Z |

+----------------------+--------------------------------------+

root@controller:~#

Attach the internal subnet to the router

openstack router add subnet Ext-Router int-net-sub

Create the flat external network

openstack network create --provider-physical-network physnet1 --provider-network-type flat --external ext-net

root@controller:~# openstack network create --provider-physical-network physnet1 --provider-network-type flat --external ext-net

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2022-10-23T12:18:23Z |

| description | |

| dns_domain | None |

| id | ff412b9f-56c9-403d-b823-3aa8d0140344 |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| mtu | 1500 |

| name | ext-net |

| port_security_enabled | True |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| provider:network_type | flat |

| provider:physical_network | physnet1 |

| provider:segmentation_id | None |

| qos_policy_id | None |

| revision_number | 1 |

| router:external | External |

| segments | None |

| shared | False |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2022-10-23T12:18:23Z |

+---------------------------+--------------------------------------+

root@controller:~#

Create the flat subnet

openstack subnet create ext-net-sub --network ext-net --subnet-range 192.168.200.0/24 --allocation-pool start=192.168.200.30,end=192.168.200.200 --gateway 192.168.200.2 --dns-nameserver 114.114.114.114 --no-dhcp

root@controller:~# openstack subnet create ext-net-sub --network ext-net --subnet-range 192.168.200.40/24 --allocation-pool start=192.168.200.30,end=192.168.200.200 --gateway 192.168.200.2 --dns-nameserver 114.114.114.114 --no-dhcp

+----------------------+--------------------------------------+

| Field | Value |

+----------------------+--------------------------------------+

| allocation_pools | 192.168.200.30-192.168.200.200 |

| cidr | 192.168.200.0/24 |

| created_at | 2022-10-23T12:20:07Z |

| description | |

| dns_nameservers | 114.114.114.114 |

| dns_publish_fixed_ip | None |

| enable_dhcp | False |

| gateway_ip | 192.168.200.2 |

| host_routes | |

| id | 01a9f546-fb55-43b1-92aa-b7e1608e2bbd |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | ext-net-sub |

| network_id | ff412b9f-56c9-403d-b823-3aa8d0140344 |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2022-10-23T12:20:07Z |

+----------------------+--------------------------------------+

root@controller:~#
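
The allocation pool 192.168.200.30-192.168.200.200 includes the node addresses 192.168.200.40 and 192.168.200.50, so it may be worth checking that addresses already in use on the physical network are not handed out as floating IPs. A minimal sketch of such a check, using only shell arithmetic; `ip_to_int` and `ip_in_pool` are hypothetical helper names.

```shell
#!/bin/bash
# ip_to_int A.B.C.D (hypothetical helper): print the IPv4 address as an integer.
ip_to_int() {
    local a b c d
    # Split the dotted quad on "." into its four octets
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# ip_in_pool IP START END (hypothetical helper): succeed when START <= IP <= END.
ip_in_pool() {
    local ip start end
    ip=$(ip_to_int "$1")
    start=$(ip_to_int "$2")
    end=$(ip_to_int "$3")
    [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}
```

For example, `ip_in_pool 192.168.200.40 192.168.200.30 192.168.200.200 && echo "controller address is inside the pool"`.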

Set the router's gateway interface

openstack router set Ext-Router --external-gateway ext-net

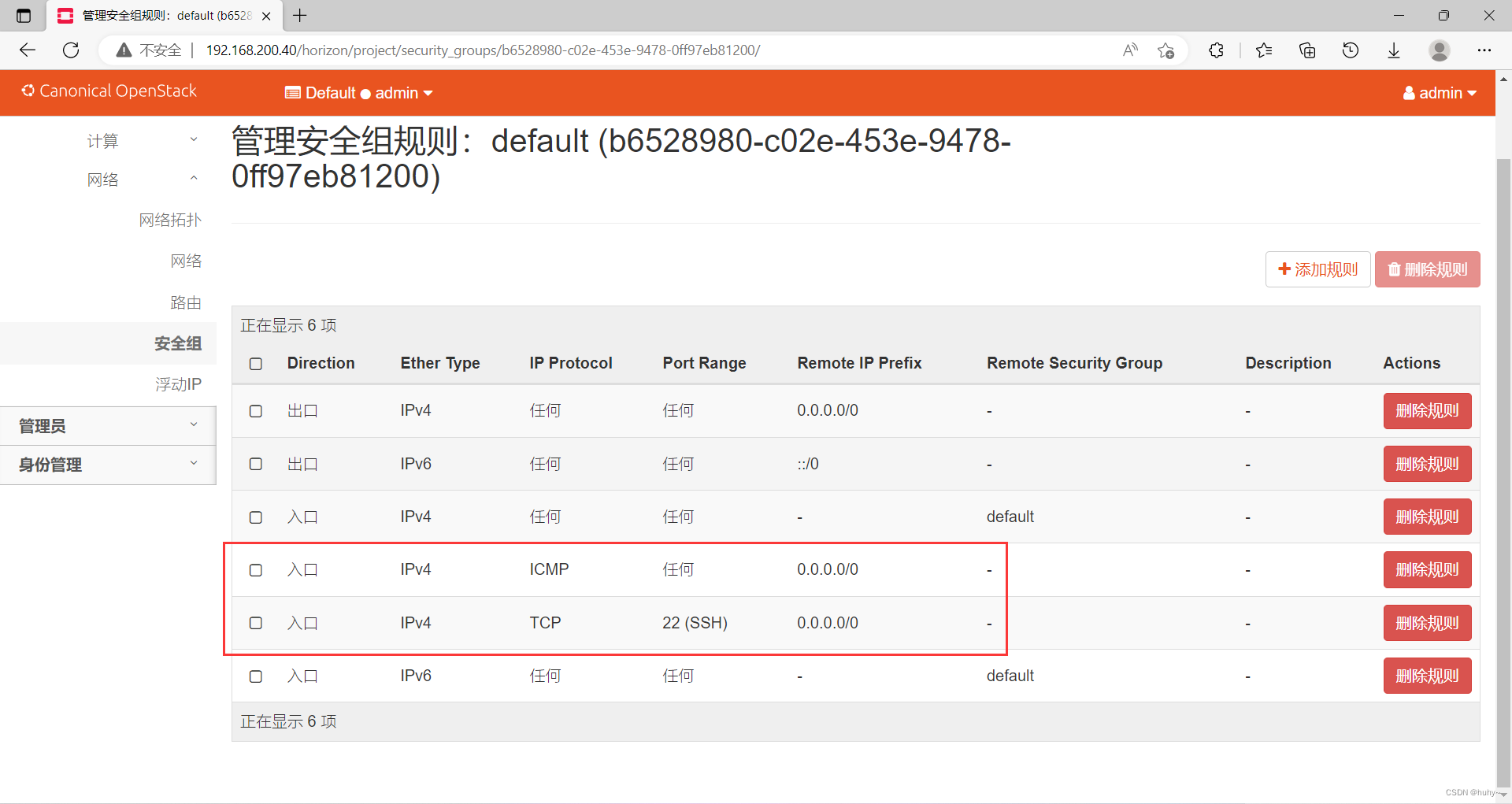

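Open up the security group

List the security groups. An extra one appears because of what was created earlier; the first security group is the one configured here, selected by its ID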

root@controller:~# openstack security group list

+--------------------------------------+---------+------------------------+----------------------------------+------+

| ID | Name | Description | Project | Tags |

+--------------------------------------+---------+------------------------+----------------------------------+------+

| b6528980-c02e-453e-9478-0ff97eb81200 | default | Default security group | f27f7078a237429e93446ced13b7e8f5 | [] |

| f49f6599-f08d-40fc-a43a-459f99e0d271 | default | Default security group | c1399386e66e48f69d014a19ac377a97 | [] |

+--------------------------------------+---------+------------------------+----------------------------------+------+

root@controller:~#

Allow ICMP

openstack security group rule create --proto icmp b6528980-c02e-453e-9478-0ff97eb81200

root@controller:~# openstack security group rule create --proto icmp b6528980-c02e-453e-9478-0ff97eb81200

+-------------------------+--------------------------------------+

| Field | Value |

+-------------------------+--------------------------------------+

| created_at | 2022-10-23T12:23:28Z |

| description | |

| direction | ingress |

| ether_type | IPv4 |

| id | 15dc7ad0-77ea-4e98-a023-0b394de4f388 |

| name | None |

| port_range_max | None |

| port_range_min | None |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| protocol | icmp |

| remote_address_group_id | None |

| remote_group_id | None |

| remote_ip_prefix | 0.0.0.0/0 |

| revision_number | 0 |

| security_group_id | b6528980-c02e-453e-9478-0ff97eb81200 |

| tags | [] |

| tenant_id | f27f7078a237429e93446ced13b7e8f5 |

| updated_at | 2022-10-23T12:23:28Z |

+-------------------------+--------------------------------------+

root@controller:~#

Allow TCP port 22 (SSH)

openstack security group rule create --proto tcp --dst-port 22:22 b6528980-c02e-453e-9478-0ff97eb81200

root@controller:~# openstack security group rule create --proto tcp --dst-port 22:22 b6528980-c02e-453e-9478-0ff97eb81200

+-------------------------+--------------------------------------+

| Field | Value |

+-------------------------+--------------------------------------+

| created_at | 2022-10-23T12:25:58Z |

| description | |

| direction | ingress |

| ether_type | IPv4 |

| id | 40805581-e83f-4da1-86f9-8a6ec1b08ab1 |

| name | None |

| port_range_max | 22 |

| port_range_min | 22 |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| protocol | tcp |

| remote_address_group_id | None |

| remote_group_id | None |

| remote_ip_prefix | 0.0.0.0/0 |

| revision_number | 0 |

| security_group_id | b6528980-c02e-453e-9478-0ff97eb81200 |

| tags | [] |

| tenant_id | f27f7078a237429e93446ced13b7e8f5 |

| updated_at | 2022-10-23T12:25:58Z |

+-------------------------+--------------------------------------+

root@controller:~#

List the security group rules

openstack security group rule list

root@controller:~# openstack security group rule list

+--------------------------------------+-------------+-----------+-----------+------------+-----------+--------------------------------------+----------------------+--------------------------------------+

| ID | IP Protocol | Ethertype | IP Range | Port Range | Direction | Remote Security Group | Remote Address Group | Security Group |

+--------------------------------------+-------------+-----------+-----------+------------+-----------+--------------------------------------+----------------------+--------------------------------------+

| 0d39daac-07e0-4db8-8e9b-5e0768dc78f6 | None | IPv6 | ::/0 | | ingress | f49f6599-f08d-40fc-a43a-459f99e0d271 | None | f49f6599-f08d-40fc-a43a-459f99e0d271 |

| 112b75f7-85e9-4a4b-9a05-77a9dcb900a9 | None | IPv4 | 0.0.0.0/0 | | egress | None | None | b6528980-c02e-453e-9478-0ff97eb81200 |

| 15dc7ad0-77ea-4e98-a023-0b394de4f388 | icmp | IPv4 | 0.0.0.0/0 | | ingress | None | None | b6528980-c02e-453e-9478-0ff97eb81200 |

| 40805581-e83f-4da1-86f9-8a6ec1b08ab1 | tcp | IPv4 | 0.0.0.0/0 | 22:22 | ingress | None | None | b6528980-c02e-453e-9478-0ff97eb81200 |

| 5513c7c9-6b02-4b70-bd71-9d4522a53d65 | None | IPv4 | 0.0.0.0/0 | | ingress | f49f6599-f08d-40fc-a43a-459f99e0d271 | None | f49f6599-f08d-40fc-a43a-459f99e0d271 |

| 6b3132e3-3acc-463f-9762-2d565b04af9a | None | IPv6 | ::/0 | | egress | None | None | b6528980-c02e-453e-9478-0ff97eb81200 |

| 735ca074-f37a-4359-93cb-71628e038108 | None | IPv4 | 0.0.0.0/0 | | ingress | b6528980-c02e-453e-9478-0ff97eb81200 | None | b6528980-c02e-453e-9478-0ff97eb81200 |

| 777acd23-10d2-4322-916a-092dc32adc0d | None | IPv4 | 0.0.0.0/0 | | egress | None | None | f49f6599-f08d-40fc-a43a-459f99e0d271 |

| da2c2aba-27f8-4fd8-b3cf-c9b21927f708 | None | IPv6 | ::/0 | | ingress | b6528980-c02e-453e-9478-0ff97eb81200 | None | b6528980-c02e-453e-9478-0ff97eb81200 |

| f873ba00-f57d-422a-8dcc-52ccb645d3f7 | None | IPv6 | ::/0 | | egress | None | None | f49f6599-f08d-40fc-a43a-459f99e0d271 |

+--------------------------------------+-------------+-----------+-----------+------------+-----------+--------------------------------------+----------------------+--------------------------------------+

root@controller:~#
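With two groups both named default, copying the ID by hand is easy to get wrong. A minimal sketch that picks the default group for a given project out of the table printed by `openstack security group list`; `default_sg_for_project` is a hypothetical helper name, and the column positions are assumed to match the table shown above.

```shell
#!/usr/bin/env bash
# Print the ID of the security group named "default" that belongs to the
# project ID given as $1. Reads the ASCII table printed by
# `openstack security group list` on stdin.
default_sg_for_project() {
  awk -F'|' -v project="$1" '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    # Data rows have the layout: | ID | Name | Description | Project | Tags |
    NF >= 5 && trim($3) == "default" && trim($5) == project { print trim($2) }'
}

# Demo using the two rows from the listing above.
default_sg_for_project f27f7078a237429e93446ced13b7e8f5 <<'EOF'
| b6528980-c02e-453e-9478-0ff97eb81200 | default | Default security group | f27f7078a237429e93446ced13b7e8f5 | [] |
| f49f6599-f08d-40fc-a43a-459f99e0d271 | default | Default security group | c1399386e66e48f69d014a19ac377a97 | [] |
EOF
```

On the controller the same function can be fed directly: `openstack security group list | default_sg_for_project <project ID>`.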

Upload an image

openstack image create cirros-test --disk-format qcow2 --file cirros-0.3.4-x86_64-disk.img

root@controller:~# openstack image create cirros-test --disk-format qcow2 --file cirros-0.3.4-x86_64-disk.img

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------+

| container_format | bare |

| created_at | 2022-10-23T12:28:14Z |

| disk_format | qcow2 |

| file | /v2/images/6c3b4c83-eb48-4182-a436-99e94548942c/file |

| id | 6c3b4c83-eb48-4182-a436-99e94548942c |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros-test |

| owner | f27f7078a237429e93446ced13b7e8f5 |

| properties | os_hidden='False', owner_specified.openstack.md5='', owner_specified.openstack.object='images/cirros-test', owner_specified.openstack.sha256='' |

| protected | False |

| schema | /v2/schemas/image |

| status | queued |

| tags | |

| updated_at | 2022-10-23T12:28:14Z |

| visibility | shared |

+------------------+-------------------------------------------------------------------------------------------------------------------------------------------------+

root@controller:~#

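Create an instance

Generate an SSH key pair (the key type and path are given explicitly so the command does not prompt and matches the public key path used in the next step)

ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa

Register the public key as a keypair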

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

root@controller:~# openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

+-------------+-------------------------------------------------+

| Field | Value |

+-------------+-------------------------------------------------+

| created_at | None |

| fingerprint | a6:0f:55:76:1d:9b:39:ec:89:9a:c4:45:80:ee:b1:c6 |

| id | mykey |

| is_deleted | None |

| name | mykey |

| type | ssh |

| user_id | cb56cef9f3f64ff8a036efc922d36b5b |

+-------------+-------------------------------------------------+

root@controller:~#

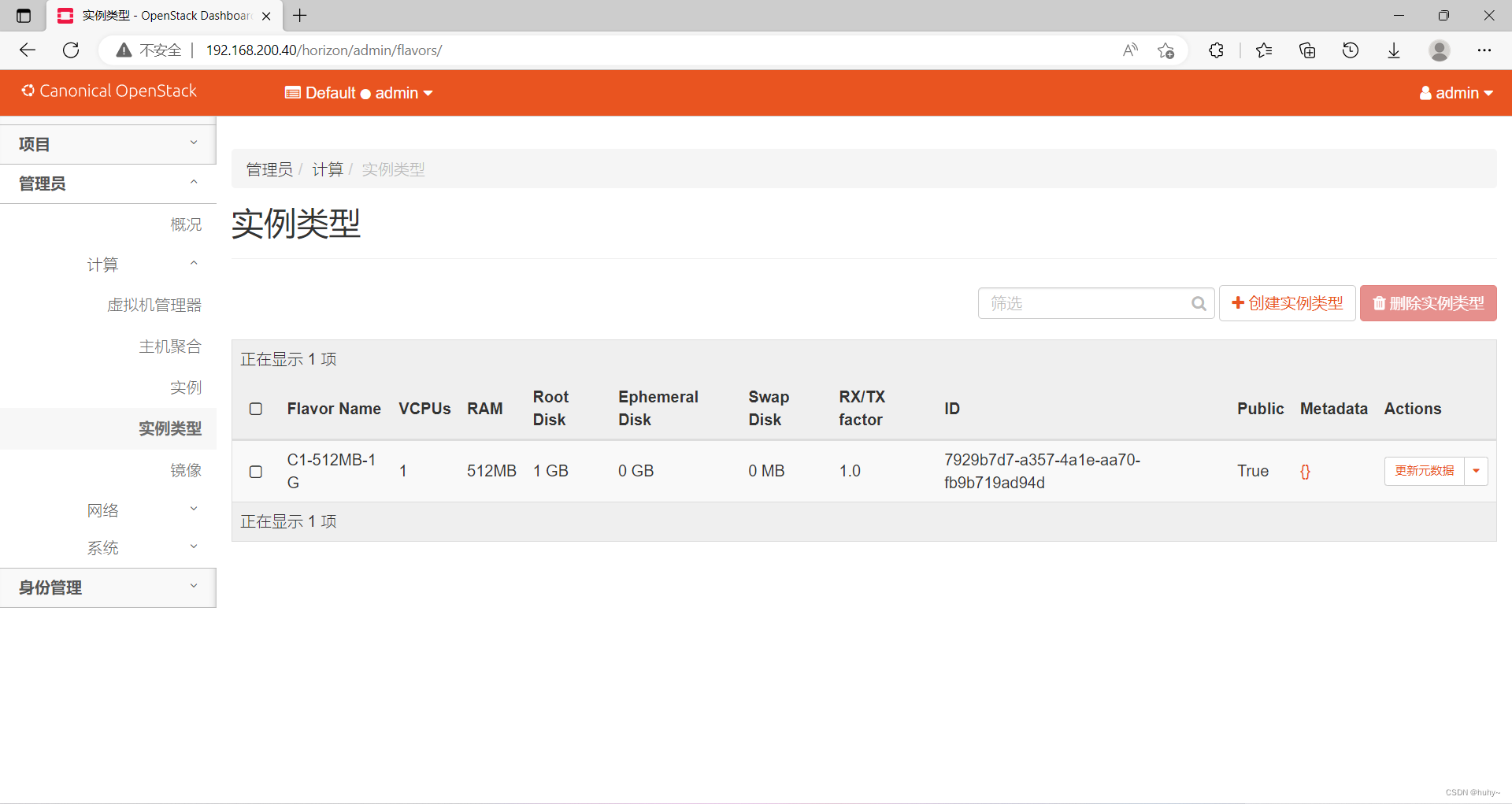

Create a flavor

openstack flavor create --vcpus 1 --ram 512 --disk 1 C1-512MB-1G

root@controller:~# openstack flavor create --vcpus 1 --ram 512 --disk 1 C1-512MB-1G

+----------------------------+--------------------------------------+

| Field | Value |

+----------------------------+--------------------------------------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| description | None |

| disk | 1 |

| id | 9728d3fb-d35a-4fbd-9b8f-9e7b60234f78 |

| name | C1-512MB-1G |

| os-flavor-access:is_public | True |

| properties | |

| ram | 512 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 1 |

+----------------------------+--------------------------------------+

root@controller:~#

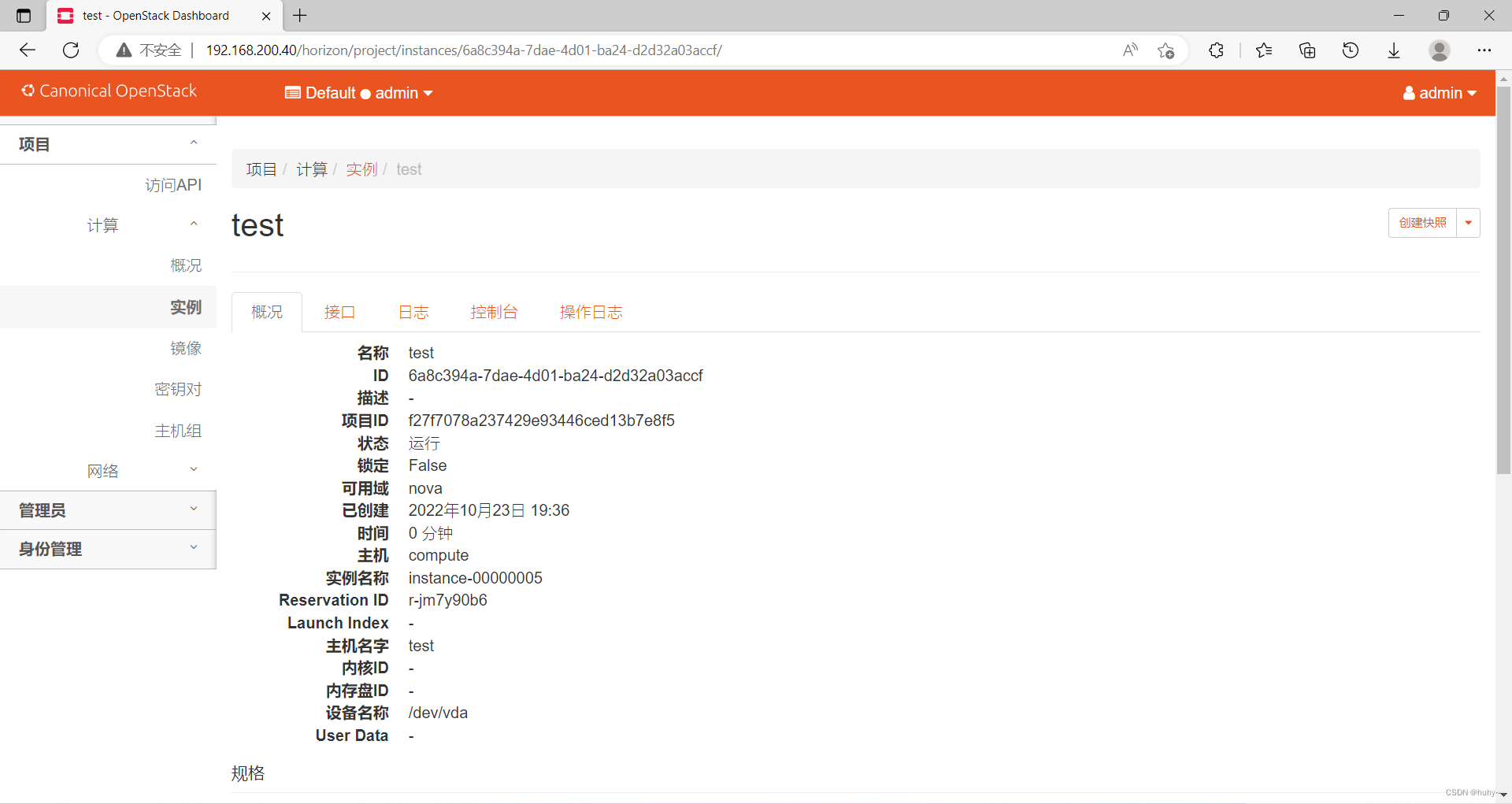

Create the instance

openstack server create --flavor C1-512MB-1G --image cirros-test --security-group b6528980-c02e-453e-9478-0ff97eb81200 --nic net-id=int-net --key-name mykey vm-test

root@controller:~# openstack server create --flavor C1-512MB-1G --image cirros-test --security-group b6528980-c02e-453e-9478-0ff97eb81200 --nic net-id=int-net --key-name mykey vm-test

+-------------------------------------+----------------------------------------------------+

| Field | Value |

+-------------------------------------+----------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | |

| OS-EXT-SRV-ATTR:host | None |

| OS-EXT-SRV-ATTR:hypervisor_hostname | None |

| OS-EXT-SRV-ATTR:instance_name | |

| OS-EXT-STS:power_state | NOSTATE |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | None |

| OS-SRV-USG:terminated_at | None |

| accessIPv4 | |

| accessIPv6 | |

| addresses | |

| adminPass | X23cQfafzJht |

| config_drive | |

| created | 2022-10-23T12:31:06Z |

| flavor | C1-512MB-1G (9728d3fb-d35a-4fbd-9b8f-9e7b60234f78) |

| hostId | |

| id | ae9cd673-002a-48dc-b2f4-0d86395d869e |

| image | cirros-test (6c3b4c83-eb48-4182-a436-99e94548942c) |

| key_name | mykey |

| name | vm-test |

| progress | 0 |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| properties | |

| security_groups | name='b6528980-c02e-453e-9478-0ff97eb81200' |

| status | BUILD |

| updated | 2022-10-23T12:31:06Z |

| user_id | cb56cef9f3f64ff8a036efc922d36b5b |

| volumes_attached | |

+-------------------------------------+----------------------------------------------------+

root@controller:~#
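The output above shows the instance still in status BUILD, so it can help to wait for ACTIVE before attaching a floating IP. A minimal polling sketch; `wait_for` and `server_status` are hypothetical helper names, and the retry count and delay in the usage line are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# wait_for <expected> <max_tries> <delay_seconds> <command...>
# Runs <command...> repeatedly until it prints <expected>.
# Returns 0 on success, 2 if the command prints ERROR, 1 on timeout.
wait_for() {
  local expected=$1 tries=$2 delay=$3 i out
  shift 3
  for (( i = 0; i < tries; i++ )); do
    out=$("$@" 2>/dev/null) || out=""
    if [ "$out" = "$expected" ]; then return 0; fi
    if [ "$out" = "ERROR" ]; then return 2; fi
    sleep "$delay"
  done
  return 1
}

# Print only the status field of the server named in $1.
server_status() {
  openstack server show "$1" -f value -c status
}

# Local demo that needs no cloud: the "command" is just echo.
wait_for ACTIVE 1 0 echo ACTIVE && echo "demo: expected status reached"

# Usage on the controller (not run here):
#   wait_for ACTIVE 30 2 server_status vm-test && echo "vm-test is ACTIVE"
```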

Allocate a floating IP

openstack floating ip create ext-net

root@controller:~# openstack floating ip create ext-net

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| created_at | 2022-10-23T12:31:49Z |

| description | |

| dns_domain | None |

| dns_name | None |

| fixed_ip_address | None |

| floating_ip_address | 192.168.200.191 |

| floating_network_id | ff412b9f-56c9-403d-b823-3aa8d0140344 |

| id | 9d31a716-0991-4986-b5ce-525610946bf6 |

| name | 192.168.200.191 |

| port_details | None |

| port_id | None |

| project_id | f27f7078a237429e93446ced13b7e8f5 |

| qos_policy_id | None |

| revision_number | 0 |

| router_id | None |

| status | DOWN |

| subnet_id | None |

| tags | [] |

| updated_at | 2022-10-23T12:31:49Z |

+---------------------+--------------------------------------+

root@controller:~#

List the allocated floating IPs

openstack floating ip list

root@controller:~# openstack floating ip list

+--------------------------------------+---------------------+------------------+------+--------------------------------------+----------------------------------+

| ID | Floating IP Address | Fixed IP Address | Port | Floating Network | Project |

+--------------------------------------+---------------------+------------------+------+--------------------------------------+----------------------------------+

| 9d31a716-0991-4986-b5ce-525610946bf6 | 192.168.200.191 | None | None | ff412b9f-56c9-403d-b823-3aa8d0140344 | f27f7078a237429e93446ced13b7e8f5 |

+--------------------------------------+---------------------+------------------+------+--------------------------------------+----------------------------------+

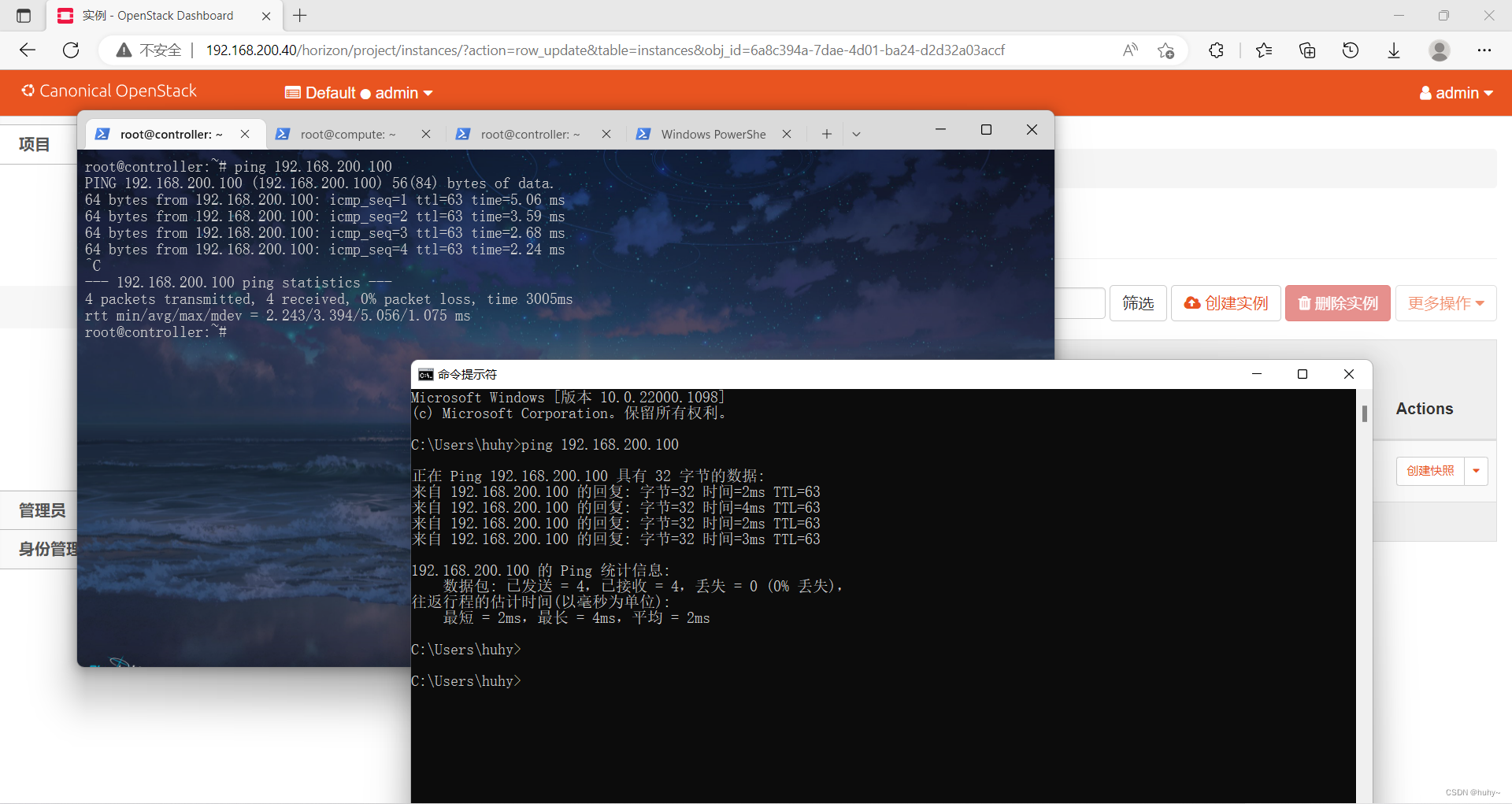

Attach the allocated floating IP to the instance

openstack server add floating ip vm-test <allocated floating IP>

root@controller:~# openstack server add floating ip vm-test 192.168.200.191

root@controller:~#
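The allocate and attach steps can be chained so the address does not have to be copied by hand. A minimal sketch, assuming the `floating_ip_address` field name shown in the create output above; `attach_new_floating_ip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Allocate a floating IP on external network $1 and attach it to server $2.
# Prints the allocated address on success.
attach_new_floating_ip() {
  local network=$1 server=$2 fip
  # -f value -c <field> prints just that one field of the result.
  fip=$(openstack floating ip create "$network" -f value -c floating_ip_address) || return 1
  [ -n "$fip" ] || return 1
  openstack server add floating ip "$server" "$fip" || return 1
  echo "$fip"
}

# Usage on the controller (not run here):
#   attach_new_floating_ip ext-net vm-test
```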

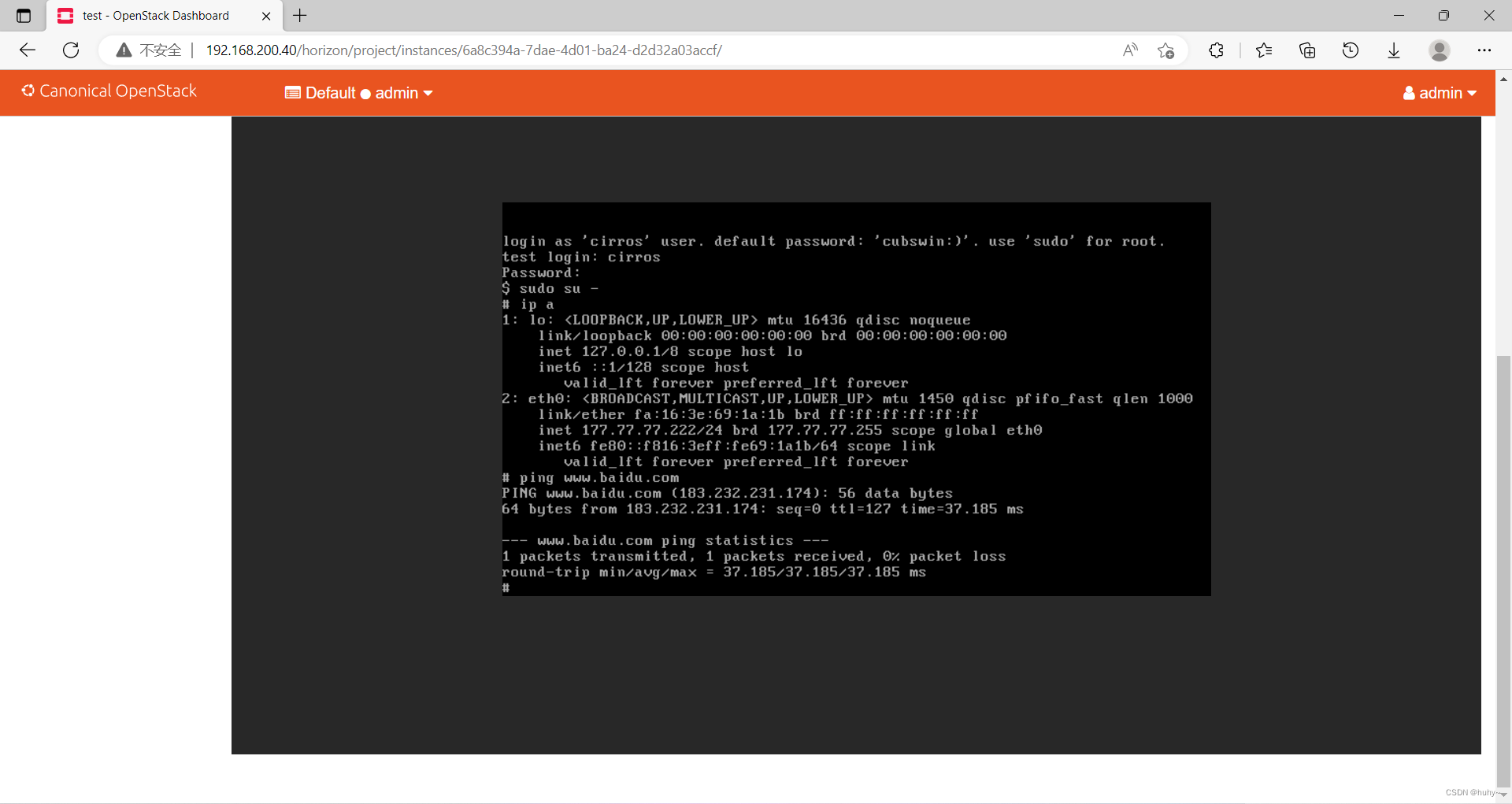

Test connectivity

root@controller:~# ping 192.168.200.191 -c4

PING 192.168.200.191 (192.168.200.191) 56(84) bytes of data.

64 bytes from 192.168.200.191: icmp_seq=1 ttl=63 time=7.43 ms

64 bytes from 192.168.200.191: icmp_seq=2 ttl=63 time=3.29 ms

64 bytes from 192.168.200.191: icmp_seq=3 ttl=63 time=3.09 ms

64 bytes from 192.168.200.191: icmp_seq=4 ttl=63 time=2.48 ms

--- 192.168.200.191 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3006ms

rtt min/avg/max/mdev = 2.480/4.073/7.430/1.960 ms

root@controller:~#

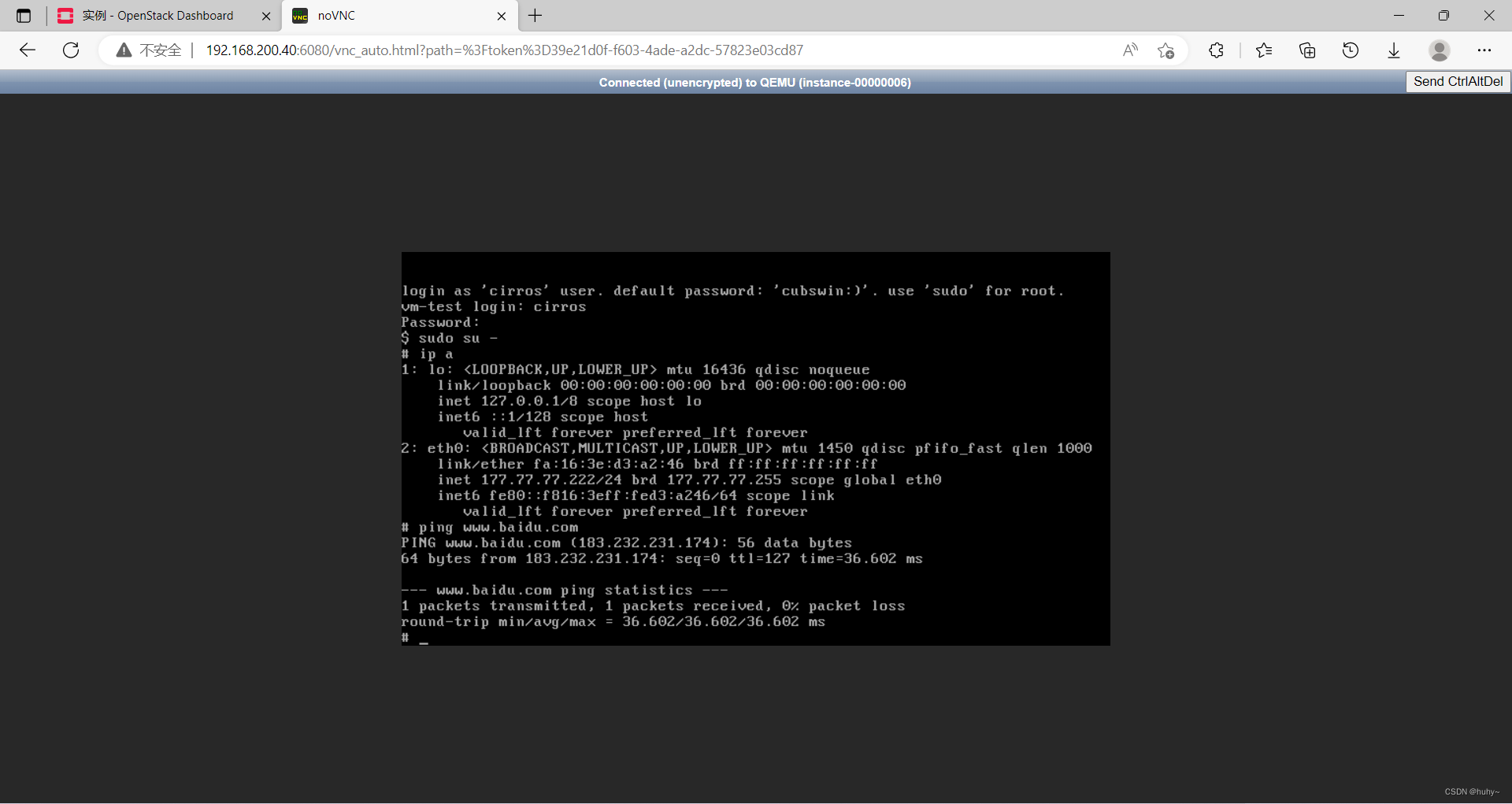

Get the VNC console URL

openstack console url show vm-test

root@controller:~# openstack console url show vm-test

+----------+-----------------------------------------------------------------------------------------------+

| Field | Value |

+----------+-----------------------------------------------------------------------------------------------+

| protocol | vnc |

| type | novnc |

| url | http://192.168.200.40:6080/vnc_auto.html?path=%3Ftoken%3D39e21d0f-f603-4ade-a2dc-57823e03cd87 |

+----------+-----------------------------------------------------------------------------------------------+

root@controller:~#

http://192.168.200.40:6080/vnc_auto.html?path=%3Ftoken%3D39e21d0f-f603-4ade-a2dc-57823e03cd87

Cinder service configuration#

controller#

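Create the database and database user for the Cinder component

Log in to the database

mysql -uroot -p000000

Create the cinder database

CREATE DATABASE cinder;

Create the cinder database user and grant it privileges

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinderhuhy';

Create the cinder user in Keystone, give it the admin role in the service project, and create the service entity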

openstack user create --domain default --password cinder cinder; \

openstack role add --project service --user cinder admin; \

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

Create the Cinder service API endpoints

openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(project_id\)s; \

openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(project_id\)s; \

openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(project_id\)s

Install the Cinder packages

apt install -y cinder-api cinder-scheduler

Configure cinder.conf#

Back up the file

cp /etc/cinder/cinder.conf{,.bak}

Strip comments and blank lines, overwriting the file

grep -Ev "^$|#" /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

vim /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:openstackhuhy@controller

auth_strategy = keystone

my_ip = 192.168.200.40

[database]

connection = mysql+pymysql://cinder:cinderhuhy@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Populate the database

su -s /bin/sh -c "cinder-manage db sync" cinder

Configure Nova to use Cinder

vim /etc/nova/nova.conf

'''

[cinder]

os_region_name = RegionOne

'''

Restart the services

cat > cinder-restart.sh <<EOF

#!/bin/bash

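# Restart the Nova API so the Cinder settings take effect

service nova-api restart

# Restart the Block Storage scheduler

service cinder-scheduler restart

# Gracefully reload Apache so the Cinder API and dashboard pages are picked up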

service apache2 reload

EOF

bash cinder-restart.sh

compute#

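Install the packages

apt install -y lvm2 thin-provisioning-tools

Create the LVM physical volume (substitute the name of your own disk)

pvcreate /dev/sdb

Create the LVM volume group cinder-volumes

vgcreate cinder-volumes /dev/sdb

Edit lvm.conf

Purpose: add a filter that accepts the /dev/sdb device and rejects all other devices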

vim /etc/lvm/lvm.conf

devices {

...

filter = [ "a/sdb/", "r/.*/"]
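LVM tries the filter patterns in order, and the first regular expression that matches a device path decides whether the device is accepted (a) or rejected (r). The sketch below is a simplified emulation of that rule for illustration only: `lvm_filter_decision` is a hypothetical helper, it handles only the `a/regex/` and `r/regex/` forms, and unlike LVM it looks at a single path rather than all aliases of a device.

```shell
#!/usr/bin/env bash
# lvm_filter_decision <device path> <pattern>...
# Simplified emulation: patterns are tried in order and the first regex
# that matches decides accept (a) or reject (r).
lvm_filter_decision() {
  local dev=$1 rule action regex
  shift
  for rule in "$@"; do
    action=${rule:0:1}                 # leading "a" or "r"
    regex=${rule:2:${#rule}-3}         # text between the two "/" delimiters
    if [[ $dev =~ $regex ]]; then
      if [ "$action" = a ]; then echo accept; else echo reject; fi
      return 0
    fi
  done
  echo accept   # a device that matches no pattern is accepted
}

# The filter from this guide applied to a few example paths.
for dev in /dev/sdb /dev/sdb1 /dev/sda; do
  echo "$dev: $(lvm_filter_decision "$dev" 'a/sdb/' 'r/.*/')"
done
```

Because `sdb` is unanchored, it also matches partitions such as /dev/sdb1. If the compute node's operating system disk itself uses LVM, that disk has to be accepted by the filter as well, for example with an extra `a/sda/` entry before the reject rule.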

Install the Cinder packages

apt install -y cinder-volume tgt

Configure cinder.conf

Back up the configuration file

cp /etc/cinder/cinder.conf{,.bak}

Strip comments and blank lines, overwriting the file

grep -Ev "^$|#" /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

vim /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:openstackhuhy@controller

auth_strategy = keystone

my_ip = 192.168.200.50

enabled_backends = lvm

glance_api_servers = http://controller:9292

[database]

connection = mysql+pymysql://cinder:cinderhuhy@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = tgtadm

volume_backend_name = lvm

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Point tgt at the Cinder volumes directory

vim /etc/tgt/conf.d/tgt.conf

include /var/lib/cinder/volumes/*

Restart the Block Storage volume service and its dependencies

service tgt restart;service cinder-volume restart

Verify Cinder from the controller node

openstack volume service list

root@controller:~# openstack volume service list

+------------------+-------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+-------------+------+---------+-------+----------------------------+

| cinder-scheduler | controller | nova | enabled | up | 2022-10-23T13:00:51.000000 |

| cinder-volume | compute@lvm | nova | enabled | up | 2022-10-23T13:01:00.000000 |

+------------------+-------------+------+---------+-------+----------------------------+

root@controller:~#
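Both services should report State up before volumes are created. A minimal sketch that scans the table printed by `openstack volume service list` for anything that is not up; `cinder_services_down` is a hypothetical helper name, and the column order is assumed to match the listing above.

```shell
#!/usr/bin/env bash
# Read the ASCII table from `openstack volume service list` on stdin and
# print "<binary> on <host>" for every service whose State is not "up".
# Exit status is 1 if any such service was found, 0 otherwise.
cinder_services_down() {
  awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    # Rows: | Binary | Host | Zone | Status | State | Updated At |
    NF >= 7 && trim($2) != "Binary" {
      if (trim($6) != "up") { print trim($2) " on " trim($3); bad = 1 }
    }
    END { exit (bad ? 1 : 0) }'
}

# Demo using the two rows from the listing above.
if cinder_services_down <<'EOF'
| cinder-scheduler | controller | nova | enabled | up | 2022-10-23T13:00:51.000000 |
| cinder-volume | compute@lvm | nova | enabled | up | 2022-10-23T13:01:00.000000 |
EOF
then
  echo "all cinder services are up"
fi
```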

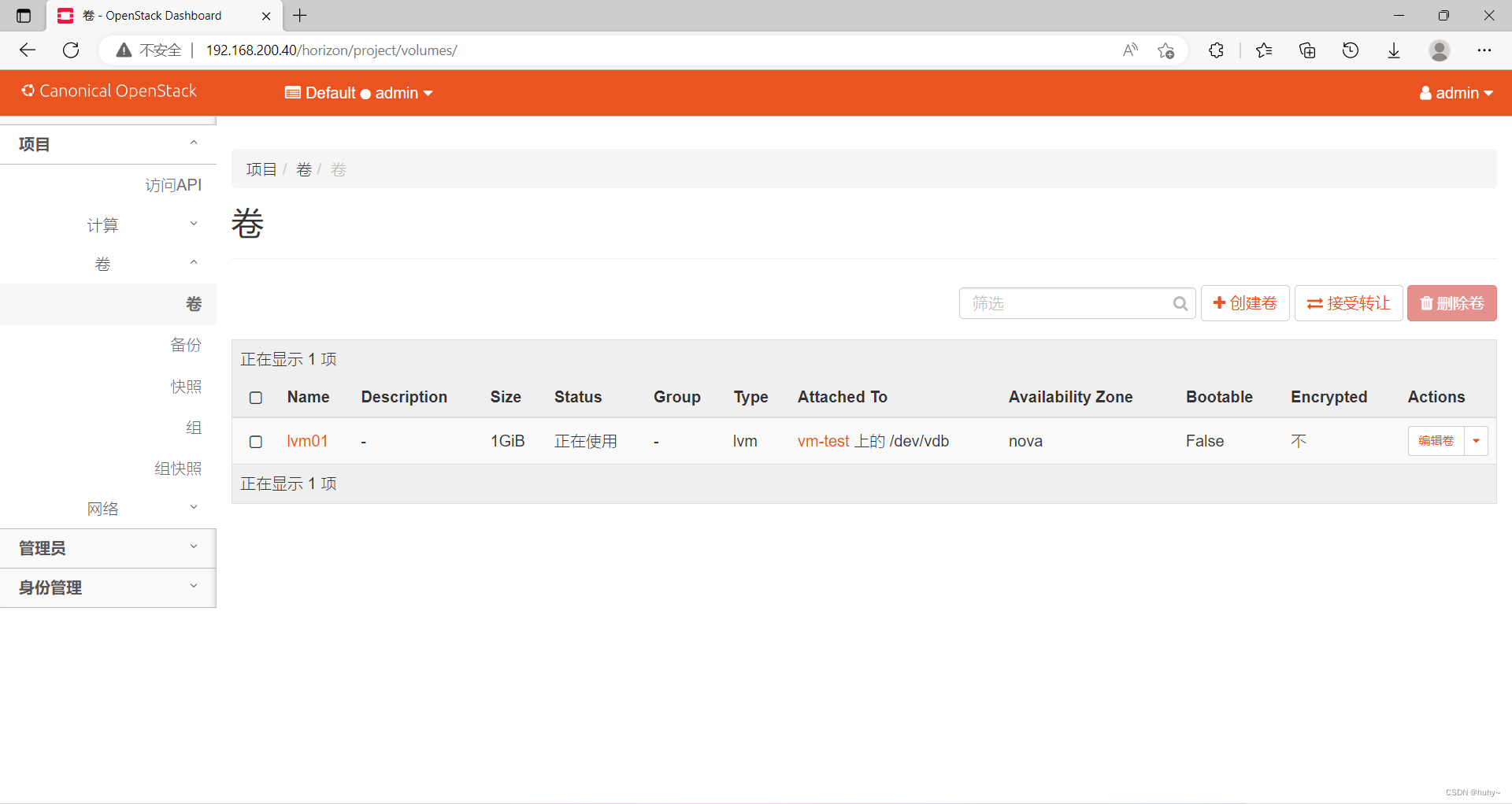

Attach a volume to the instance#

Create a volume type

openstack volume type create lvm

root@controller:~# openstack volume type create lvm

+-------------+--------------------------------------+

| Field | Value |

+-------------+--------------------------------------+

| description | None |

| id | ea3939ab-dfb6-4702-a079-741a9e0e6ca0 |

| is_public | True |

| name | lvm |

+-------------+--------------------------------------+

root@controller:~#

Add metadata to the volume type

cinder --os-username admin --os-tenant-name admin type-key lvm set volume_backend_name=lvm

List the volume types

openstack volume type list

root@controller:~# cinder --os-username admin --os-tenant-name admin type-key lvm set volume_backend_name=lvm

root@controller:~# openstack volume type list

+--------------------------------------+-------------+-----------+

| ID | Name | Is Public |

+--------------------------------------+-------------+-----------+

| ea3939ab-dfb6-4702-a079-741a9e0e6ca0 | lvm | True |

| 876e0deb-2da5-40fa-9e56-dd6dc26efd30 | __DEFAULT__ | True |

+--------------------------------------+-------------+-----------+

root@controller:~#

Create a volume with the lvm volume type

openstack volume create lvm01 --type lvm --size 1

root@controller:~# openstack volume create lvm01 --type lvm --size 1

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | 2022-10-23T13:04:38.137848 |

| description | None |

| encrypted | False |

| id | 2778b6fe-6e24-43b8-b3e2-dd5e0845e885 |

| migration_status | None |

| multiattach | False |

| name | lvm01 |

| properties | |

| replication_status | None |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| type | lvm |

| updated_at | None |

| user_id | cb56cef9f3f64ff8a036efc922d36b5b |

+---------------------+--------------------------------------+

root@controller:~#

Attach the volume to the instance

nova volume-attach vm-test <volume ID>

root@controller:~# nova volume-attach vm-test 2778b6fe-6e24-43b8-b3e2-dd5e0845e885

+-----------------------+--------------------------------------+

| Property | Value |

+-----------------------+--------------------------------------+

| delete_on_termination | False |

| device | /dev/vdb |

| id | 2778b6fe-6e24-43b8-b3e2-dd5e0845e885 |

| serverId | ae9cd673-002a-48dc-b2f4-0d86395d869e |

| tag | - |

| volumeId | 2778b6fe-6e24-43b8-b3e2-dd5e0845e885 |

+-----------------------+--------------------------------------+

root@controller:~#
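This section uses the legacy `cinder` and `nova` clients for two steps. The unified `openstack` client has commands intended to do the same thing, sketched below. The `run` wrapper and the two function names are hypothetical; with DRY_RUN left at its default of 1 the commands are only printed, so the block is safe to run anywhere.

```shell
#!/usr/bin/env bash
# Print the command (DRY_RUN=1, the default here) or execute it (DRY_RUN=0).
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Counterpart of: cinder type-key <type> set volume_backend_name=<backend>
set_backend_name() {   # set_backend_name <volume type> <backend name>
  run openstack volume type set --property "volume_backend_name=$2" "$1"
}

# Counterpart of: nova volume-attach <server> <volume ID>
attach_volume() {      # attach_volume <server> <volume name or ID>
  run openstack server add volume "$1" "$2"
}

set_backend_name lvm lvm
attach_volume vm-test lvm01
```

Afterwards, `openstack volume list` should show lvm01 as in-use and attached to vm-test.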

Tags: ubuntu22, VMware15, OVS networking
