2020-2021-1 20209304《Linux内核原理与分析》第三周作业

实验内容和收获

1.实验内容:完成一个简单的时间片轮转多道程序内核代码

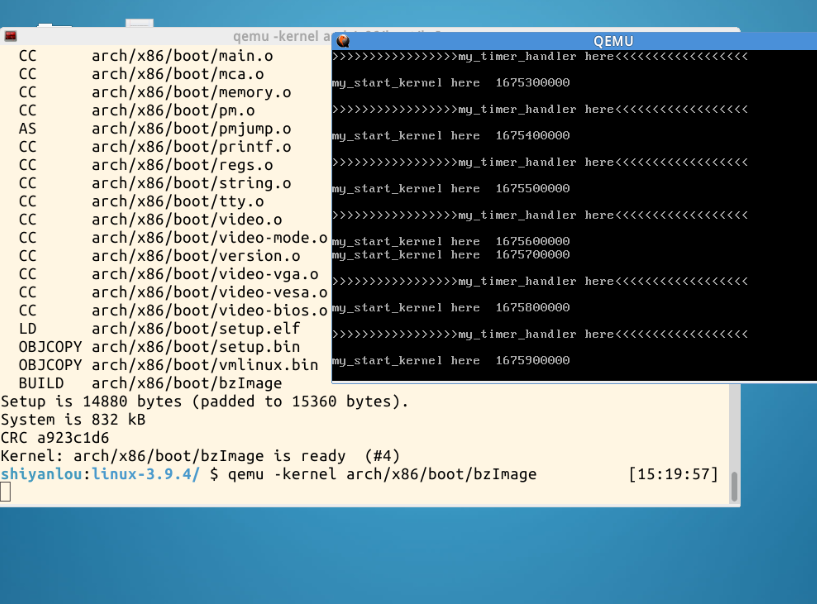

内核启动效果:

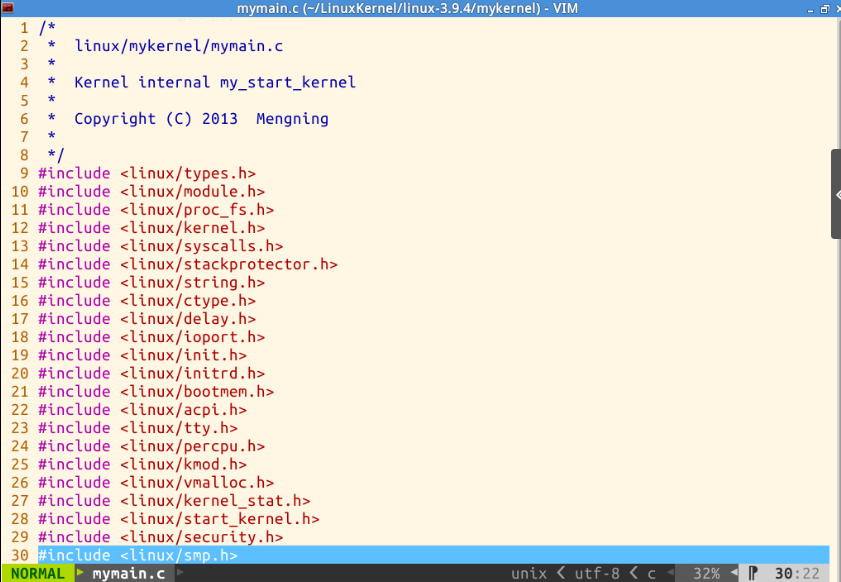

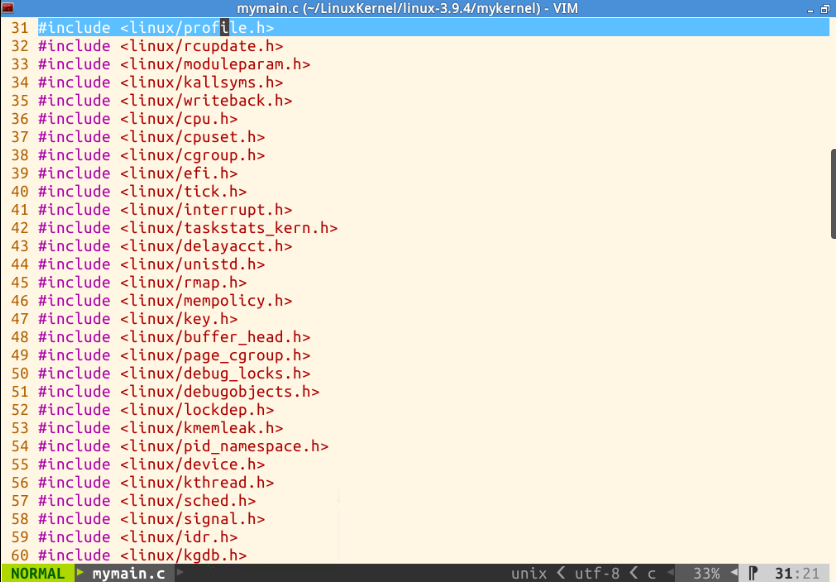

mymain.c的代码:

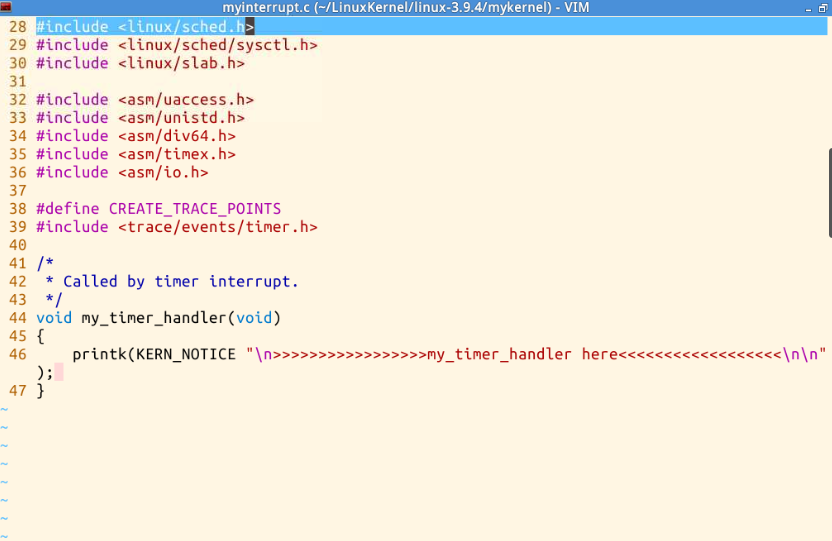

myinterrupt.c的代码:

#define MAX_TASK_NUM 4

#define KERNEL_STACK_SIZE 1024*8

增加用来定义进程控制块PCB的头文件mypcb.h,代码如下:

struct Thread {

unsigned long ip;

unsigned long sp;

};

typedef struct PCB{

int pid;

volatile long state;

char stack[KERNEL_STACK_SIZE];

struct Thread thread;

unsigned long task_entry;

struct PCB *next;

}tPCB;

void my_schedule(void);

mymain.c修改后的代码:

#include <linux/types.h>

#include <linux/string.h>

#include <linux/ctype.h>

#include <linux/tty.h>

#include <linux/vmalloc.h>

#include "mypcb.h"

tPCB task[MAX_TASK_NUM];

tPCB * my_current_task = NULL;

volatile int my_need_sched = 0;

void my_process(void);

void __init my_start_kernel(void)

{

int pid = 0;

int i;

/* Initialize process 0*/

task[pid].pid = pid;

task[pid].state = 0;/* -1 unrunnable, 0 runnable, >0 stopped */

task[pid].task_entry = task[pid].thread.ip = (unsigned long)my_process;

task[pid].thread.sp = (unsigned long)&task[pid].stack[KERNEL_STACK_SIZE-1];

task[pid].next = &task[pid];

/*fork more process */

for(i=1;i<MAX_TASK_NUM;i++)

{

memcpy(&task[i],&task[0],sizeof(tPCB));

task[i].pid = i;

task[i].state = -1;

task[i].thread.sp = (unsigned long)&task[i].stack[KERNEL_STACK_SIZE-1];

task[i].next = task[i-1].next;

task[i-1].next = &task[i];

}

/* start process 0 by task[0] */

pid = 0;

my_current_task = &task[pid];

asm volatile(

"movl %1,%%esp\n\t" /* set task[pid].thread.sp to esp */

"pushl %1\n\t" /* push ebp */

"pushl %0\n\t" /* push task[pid].thread.ip */

"ret\n\t" /* pop task[pid].thread.ip to eip */

"popl %%ebp\n\t"

:

: "c" (task[pid].thread.ip),"d" (task[pid].thread.sp) /* input c or d mean %ecx/%edx*/

);

}

void my_process(void)

{

int i = 0;

while(1)

{

i++;

if(i%10000000 == 0)

{

printk(KERN_NOTICE "this is process %d -\n",my_current_task->pid);

if(my_need_sched == 1)

{

my_need_sched = 0;

my_schedule();

}

printk(KERN_NOTICE "this is process %d +\n",my_current_task->pid);

}

}

}

修改后myinterrupt.c中的代码:

#include <linux/types.h>

#include <linux/string.h>

#include <linux/ctype.h>

#include <linux/tty.h>

#include <linux/vmalloc.h>

#include "mypcb.h"

extern tPCB task[MAX_TASK_NUM];

extern tPCB * my_current_task;

extern volatile int my_need_sched;

volatile int time_count = 0;

void my_timer_handler(void)

{

#if 1

if(time_count%1000 == 0 && my_need_sched != 1)

{

printk(KERN_NOTICE ">>>my_timer_handler here<<<\n");

my_need_sched = 1;

}

time_count ++ ;

#endif

return;

}

void my_schedule(void)

{

tPCB * next;

tPCB * prev;

if(my_current_task == NULL

|| my_current_task->next == NULL)

{

return;

}

printk(KERN_NOTICE ">>>my_schedule<<<\n");

/* schedule */

next = my_current_task->next;

prev = my_current_task;

if(next->state == 0)

{

my_current_task = next;

printk(KERN_NOTICE ">>>switch %d to %d<<<\n",prev->pid,next->pid);

/* 切换进程 */

asm volatile(

"pushl %%ebp\n\t" /* save ebp */

"movl %%esp,%0\n\t" /* save esp */

"movl %2,%%esp\n\t" /* restore esp */

"movl $1f,%1\n\t" /* save eip */

"pushl %3\n\t"

"ret\n\t" /* restore eip */

"1:\t" /* next process start here */

"popl %%ebp\n\t"

: "=m" (prev->thread.sp),"=m" (prev->thread.ip)

: "m" (next->thread.sp),"m" (next->thread.ip)

);

}

else

{

next->state = 0;

my_current_task = next;

printk(KERN_NOTICE ">>>switch %d to %d<<<\n",prev->pid,next->pid);

/* switch to new process */

asm volatile(

"pushl %%ebp\n\t" /* save ebp */

"movl %%esp,%0\n\t" /* save esp */

"movl %2,%%esp\n\t" /* restore esp */

"movl %2,%%ebp\n\t" /* restore ebp */

"movl $1f,%1\n\t" /* save eip */

"pushl %3\n\t"

"ret\n\t" /* restore eip */

: "=m" (prev->thread.sp),"=m" (prev->thread.ip)

: "m" (next->thread.sp),"m" (next->thread.ip)

);

}

return;

}

2.学习收获

1.CS:EIP总是指向下一条的指令地址,而在跳转/分支时,值会根据程序的需要修改;

2.在32位x86的Linux系统中,__stdcall函数调用方式,参数压栈的方向为从右向左;

3.有了中断,才有了多道程序。当一个中断信号发生时,CPU把正在执行的程序的CS:EIP寄存器和ESP寄存器等都压到内核堆栈,然后把CS:EIP指向一个中断处理程序的入口,保存现场,之后继续执行其他程序。等中断前执行的程序重新回来时,再恢复现场CS:EIP及ESP寄存器等,继续执行。