Scraping HDU OJ data with Python

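Summer training camp is mostly about grinding problems on HDU OJ: battling algorithms by day, and hoping to do something I actually enjoy by night!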

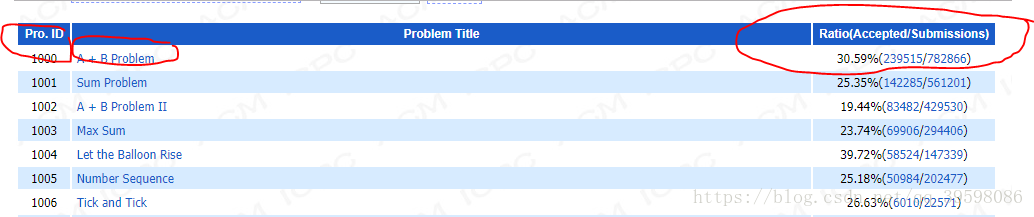

First, decide which data to scrape:

As shown in the figure above, the problem ID, title, accepted count and submission count are all useful.

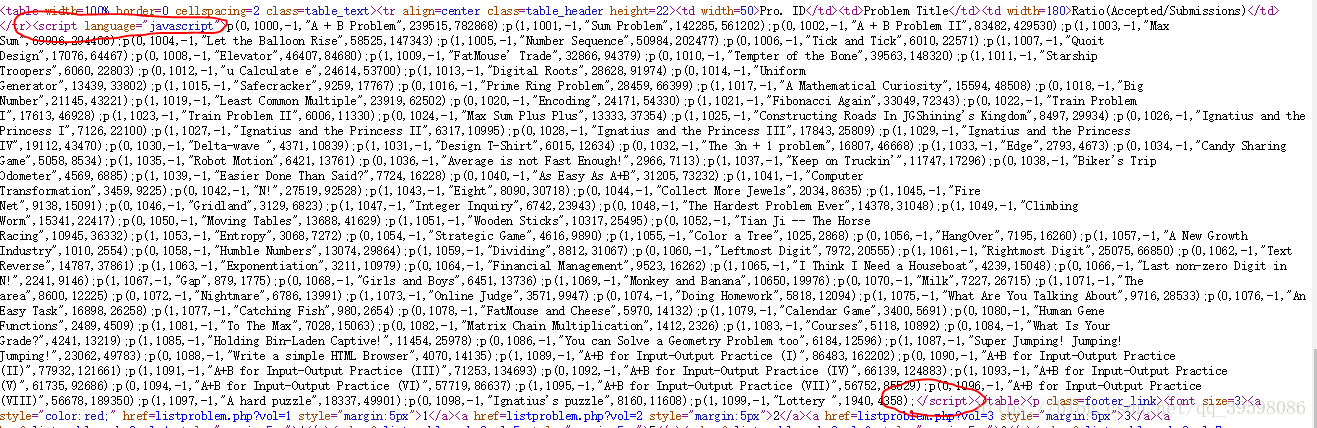

Viewing the page source shows that:

all of the data sits inside a single `script` tag.

Approach: use BeautifulSoup to locate that tag, then pull the fields out with regular expressions.
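The extraction step can be sketched on its own, independent of any network access. This is a minimal sketch under an assumption: each problem in the script is written as a call shaped like `p(<flag>,<id>,<flag>,"<title>",<accepted>,<submissions>);`. That shape is only inferred from which fields the scraping code picks out (ID at index 1, title at index 3, accepted and submissions last) and has not been checked against the live page. `parse_problems` and the sample string are hypothetical. Matching the quoted title with one pattern, rather than splitting on commas, keeps a comma inside a title from shifting the other fields.

```python
import re

# ASSUMED record shape (not verified against the live page):
#   p(<flag>,<id>,<flag>,"<title>",<accepted>,<submissions>);
# The title is matched non-greedily inside quotes, so commas in it are harmless.
RECORD = re.compile(r'p\(([^,]*),(\d+),([^,]*),"(.*?)",(\d+),(\d+)\);')


def parse_problems(script_text):
    """Return a list of (id, title, accepted, submissions) tuples."""
    rows = []
    for _flag1, pid, _flag2, title, ac, sub in RECORD.findall(script_text):
        rows.append((pid, title, int(ac), int(sub)))
    return rows


# Hypothetical script content in the assumed format
sample = 'p(0,1000,-1,"A + B Problem",10,20);p(0,1001,-1,"Sum, Problem",5,9);'
print(parse_problems(sample))
# [('1000', 'A + B Problem', 10, 20), ('1001', 'Sum, Problem', 5, 9)]
```

In the real scraper, `script_text` would be the `.string` of the `script` tag found with BeautifulSoup.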

Without further ado, here is the scraping code:

```python
import re
import time
import random

import requests
from bs4 import BeautifulSoup
from requests.exceptions import RequestException

prbm_id = []
prbm_name = []
prbm_ac = []
prbm_sub = []

VOLUMES = 55  # number of problem-list volumes to crawl (vol=1..55)


def get_html(url):  # fetch a page and return its HTML
    try:
        kv = {'user-agent': 'Mozilla/5.0'}
        r = requests.get(url, timeout=5, headers=kv)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        time.sleep(random.randint(1, 3))  # crude anti-scraping measure: sleep 1 to 3 seconds
        return r.text
    except RequestException as e:  # print the error and carry on
        print(e)
        return ""


def get_hdu():
    for i in range(1, VOLUMES + 1):
        url = "http://acm.hdu.edu.cn/listproblem.php?vol=" + str(i)
        html = get_html(url)
        soup = BeautifulSoup(html, "html.parser")
        scripts = soup.find_all("script")
        # the problem list lives in the 5th <script> tag of the page
        if len(scripts) >= 5 and scripts[4].string:
            list_pro = re.split(r"p\(|\);", scripts[4].string)  # split on "p(" and ");"
            for its in list_pro:
                if its.strip() == "":
                    continue  # skip the empty pieces between records
                temp = its.split(',')
                prbm_id.append(temp[1])
                prbm_name.append(temp[3])
                prbm_ac.append(temp[-2])   # the last two fields are
                prbm_sub.append(temp[-1])  # accepted and submissions
        # end='' belongs to print(), not format(); \r redraws the same line
        print('\rProgress: {:.2f}%'.format(i * 100 / VOLUMES), end='')


def main():
    get_hdu()
    root = "F://爬取的资源//hdu题目数据爬取2.txt"
    # open once; 'w' so that re-running does not append duplicate lines
    with open(root, 'w', encoding='utf-8') as f:
        for i in range(len(prbm_id)):
            f.write("hdu" + prbm_id[i] + "," + prbm_name[i] + "," + prbm_ac[i] + "," + prbm_sub[i] + '\n')


if __name__ == '__main__':
    main()
```

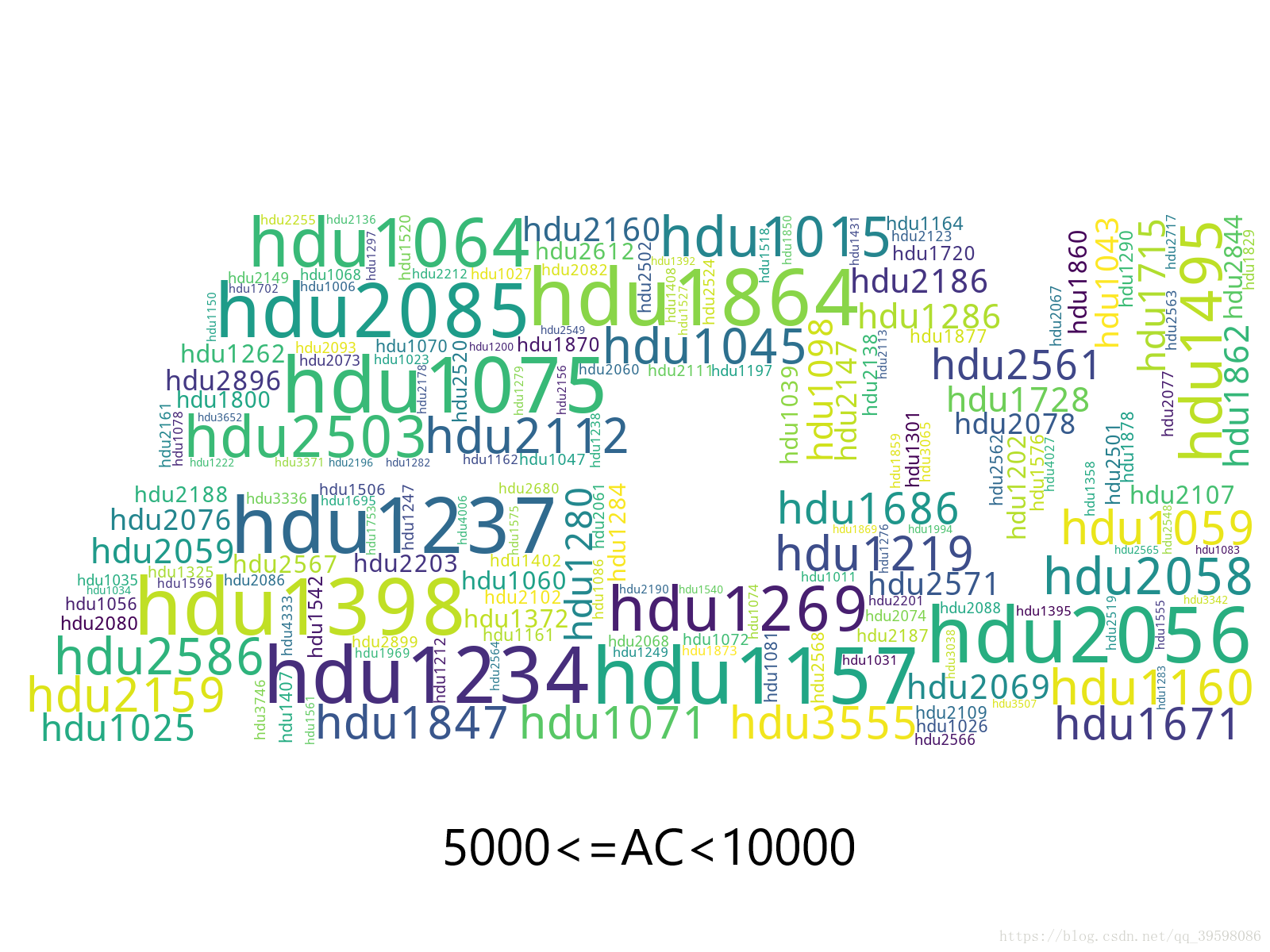

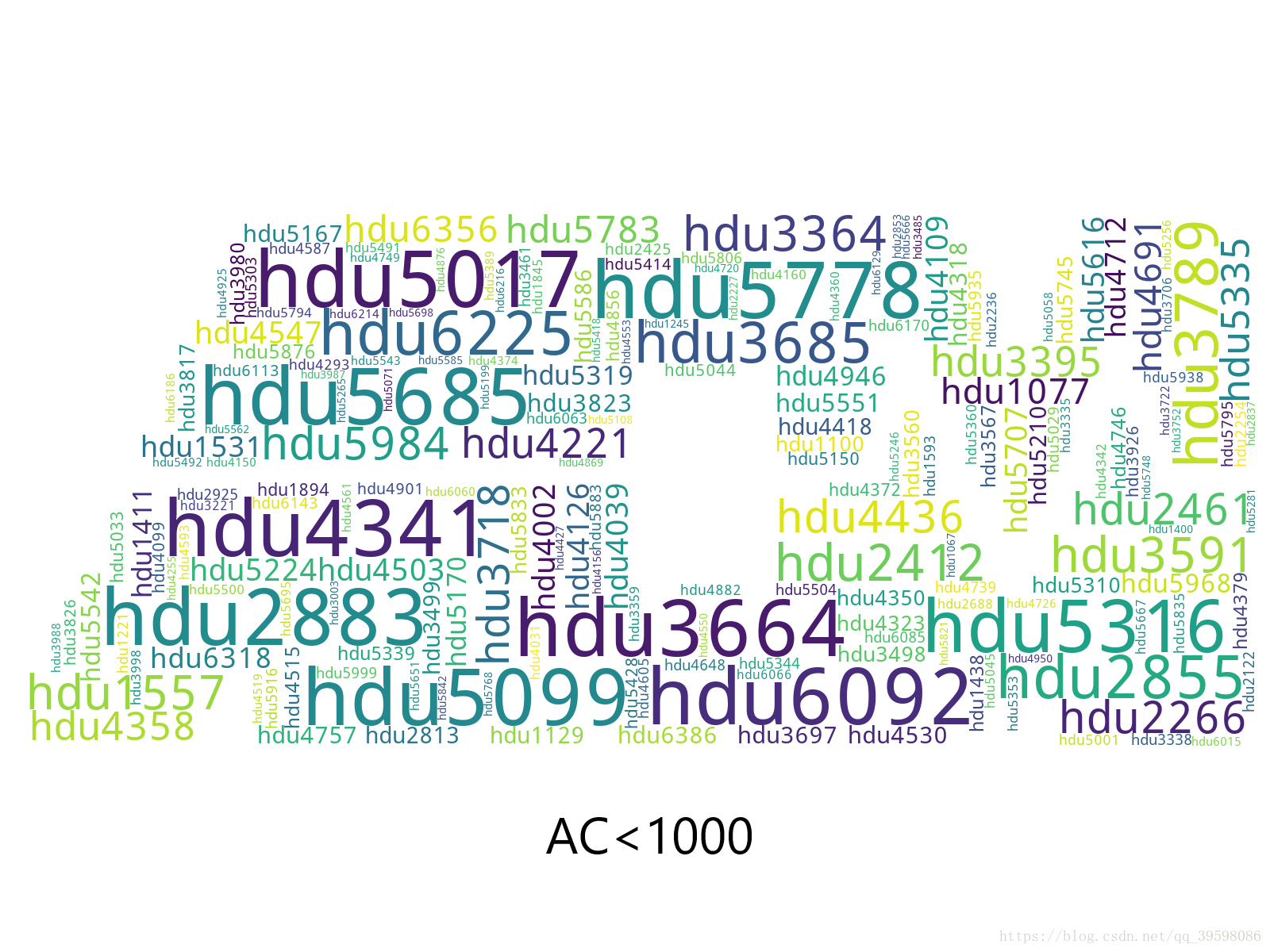
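One weak spot of the comma-joined output file is that a title containing a comma produces extra fields when the file is later split on `,`. A minimal sketch of an alternative, not what the scripts here use: Python's standard `csv` module quotes such fields automatically on write and unquotes them on read. `save_rows` and `load_rows` are hypothetical helper names, and the rows are made up.

```python
import csv
import io


def save_rows(fp, rows):
    """Write (id, title, accepted, submissions) rows; csv quotes titles containing commas."""
    writer = csv.writer(fp)
    writer.writerows(rows)


def load_rows(fp):
    """Read rows back, converting the two counters to int."""
    return [(pid, title, int(ac), int(sub)) for pid, title, ac, sub in csv.reader(fp)]


# In-memory round trip with made-up rows; for a real file, open it with
# open(path, 'w', newline='', encoding='utf-8'), as the csv docs recommend.
buf = io.StringIO()
save_rows(buf, [("hdu1000", "A + B Problem", 10, 20),
                ("hdu1001", "Sum, Problem", 5, 9)])
buf.seek(0)
rows = load_rows(buf)
print(rows)
# [('hdu1000', 'A + B Problem', 10, 20), ('hdu1001', 'Sum, Problem', 5, 9)]
```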

With the data scraped, I decided to visualize it as word clouds.

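My skills are limited, so I only grouped the problems by AC count and generated a separate word cloud for each group. Here is the data-analysis code: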

```python
import os

import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from wordcloud import WordCloud

prbm_id = []
prbm_name = []
prbm_ac = []
prbm_sub = []

DATA_FILE = "F://爬取的资源//hdu题目数据爬取2.txt"
WORDS_FILE = "F://爬取的资源//词语统计.txt"


def read():
    with open(DATA_FILE, "r", encoding="utf-8") as f:
        for line in f:
            parts = line.rstrip('\n').split(',')
            if len(parts) < 4:
                continue  # skip blank or malformed lines
            prbm_id.append(parts[0])
            prbm_name.append(','.join(parts[1:-2]))  # a title may itself contain commas
            prbm_ac.append(parts[-2])
            prbm_sub.append(parts[-1])


def data_Process():
    # Write each problem ID (AC count // 100) times: WordCloud turns repetition
    # into word frequency, so more ACs means a bigger word.
    with open(WORDS_FILE, 'w', encoding='utf-8') as f:
        for it in range(len(prbm_ac)):
            num1 = int(prbm_ac[it])
            # num2 = int(prbm_ac[it])*1.0/int(prbm_sub[it])
            if 5000 <= num1 <= 10000:  # category: 5000 to 10000 ACs
                # adjust the divisor to suit the chosen AC range
                f.write((prbm_id[it] + ' ') * (num1 // 100))


def main():
    read()
    data_Process()
    with open(WORDS_FILE, "r", encoding='utf-8') as f:
        text = f.read()

    # scipy.misc.imread no longer exists in current SciPy; load the mask with Pillow
    back_color = np.array(Image.open('F://爬取的资源//acm.jpg'))
    w = WordCloud(background_color='white',  # background colour
                  mask=back_color,  # draw inside this shape; width and height are ignored when a mask is set
                  width=300,
                  height=100,
                  collocations=False)  # no two-word phrases, so repeated IDs are not paired up
    w.generate(text)

    plt.imshow(w)
    plt.axis("off")
    plt.show()

    os.remove(WORDS_FILE)  # the temporary word file is no longer needed
    w.to_file("F://爬取的资源//hdu热度词云5.png")


if __name__ == '__main__':
    main()
```

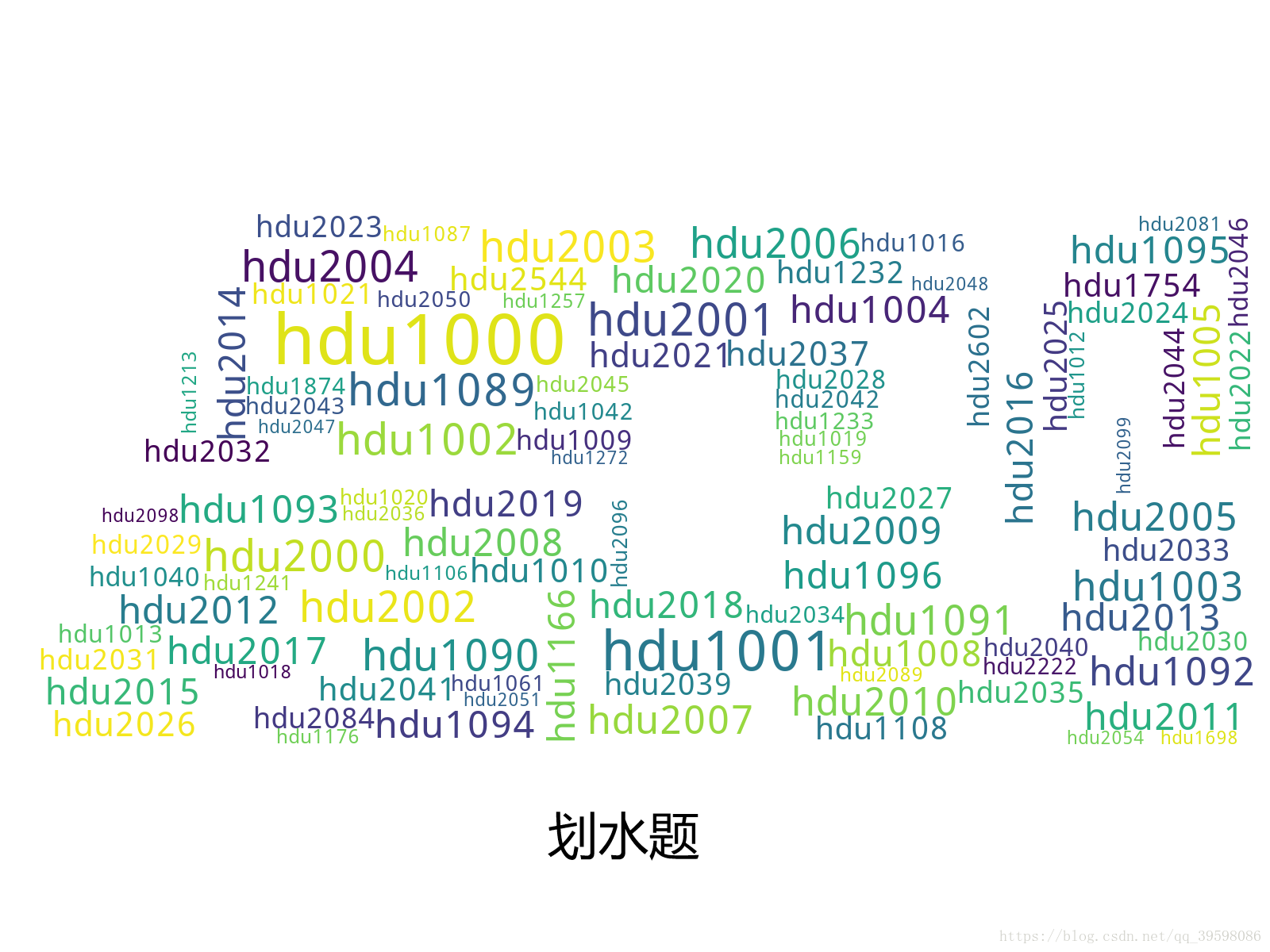

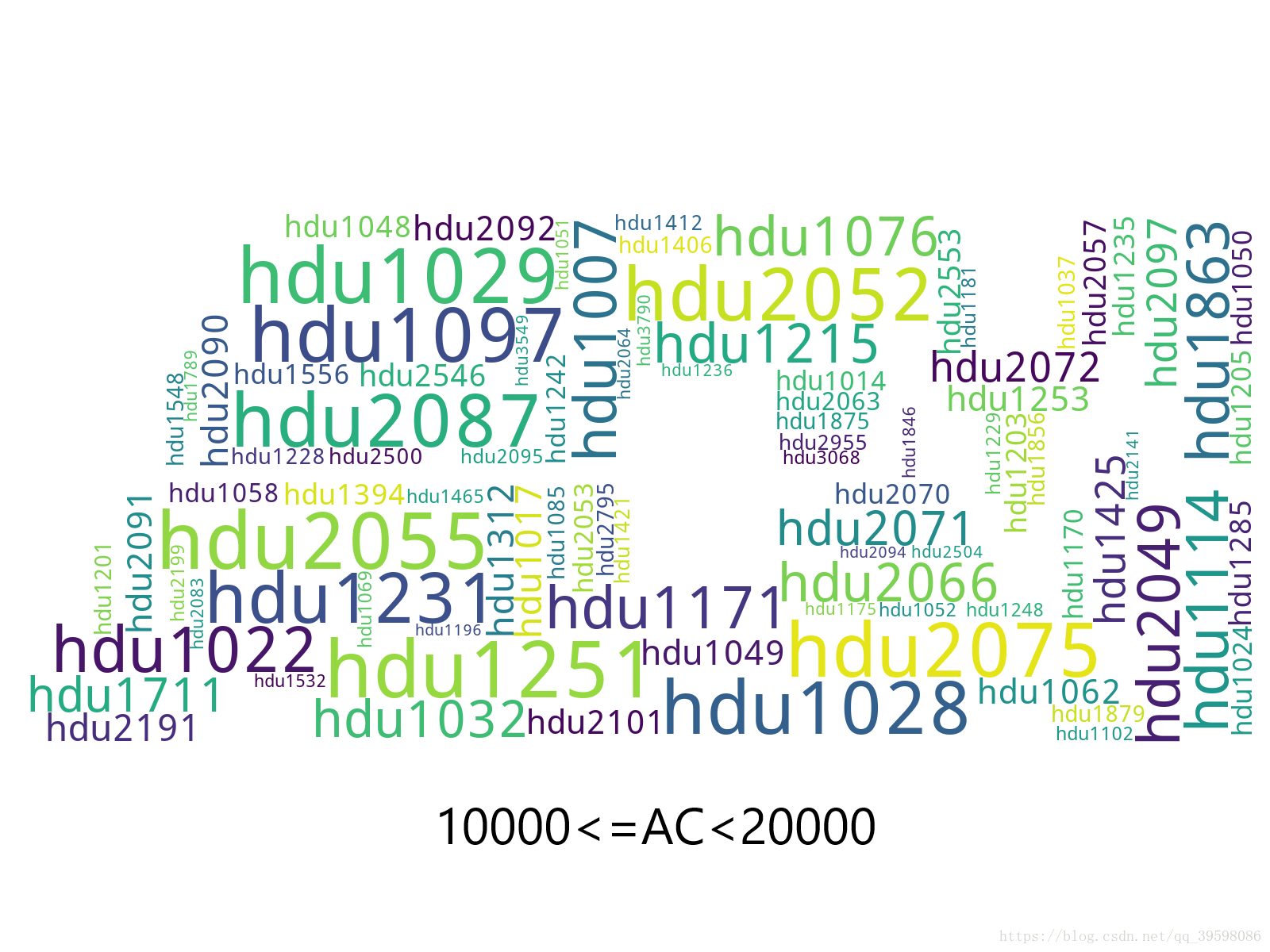

The generated images look like this:

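Each word cloud is built from the AC counts of the problems in one category: the more ACs a problem has, the larger its ID appears.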
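The bucketing done by `data_Process` can also be expressed without the temporary text file: compute one weight per problem ID and pass the dictionary to `WordCloud.generate_from_frequencies`, a method the wordcloud library provides. Below is a minimal sketch of the bucketing part only. `ac_frequencies` and its parameters are hypothetical names, the defaults simply mirror the 5000 to 10000 range and divisor of 100 used above, and the rows are made up.

```python
def ac_frequencies(rows, low=5000, high=10000, scale=100):
    """Map problem ID -> weight for problems whose AC count lies in [low, high].

    rows: iterable of (problem_id, title, accepted, submissions), accepted as int.
    The weight equals how many times data_Process would repeat the ID.
    """
    freqs = {}
    for pid, _title, ac, _sub in rows:
        if low <= ac <= high:
            freqs[pid] = ac // scale
    return freqs


# One dictionary per category (made-up sample rows)
rows = [("hdu1000", "A + B Problem", 8000, 20000),
        ("hdu1001", "Sum Problem", 3000, 9000),
        ("hdu1002", "A + B Problem II", 10000, 12000)]
print(ac_frequencies(rows))                                 # {'hdu1000': 80, 'hdu1002': 100}
print(ac_frequencies(rows, low=1000, high=4999, scale=10))  # {'hdu1001': 300}
```

With the wordcloud package installed, something like `WordCloud(background_color='white', mask=back_color).generate_from_frequencies(freqs)` would then take the place of writing and re-reading 词语统计.txt.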

With this, I salute all the long-suffering ACMers grinding away on HDU. May every ACMer forge ahead and cut through every obstacle.