[Machine Learning] Fitting a Quadratic Function in One Variable

Implementing Polynomial Regression

Objective

Building on the single-variable linear regression model, fit a polynomial function (here, a quadratic):

$$y = ax^2 + bx + c$$

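Functions to implement

- compute_model_output: computes the value of $y = ax^2 + bx + c$
- compute_cost: computes the mean squared error for a given set of a, b, c
- compute_gradient: computes the partial derivatives
- gradient_descent: the gradient descent algorithm
- Plotting the results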

Mathematical derivation

Model:

$$f_{a,b,c}(x) = ax^2 + bx + c \tag{1}$$
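As a minimal sketch of equation (1), compute_model_output could be written with NumPy as follows. The argument order (x first, then a, b, c) is an assumption, since the article's main.py is not reproduced here.

```python
import numpy as np

def compute_model_output(x, a, b, c):
    """Evaluate f(x) = a*x^2 + b*x + c element-wise (assumed signature)."""
    x = np.asarray(x, dtype=float)
    return a * x**2 + b * x + c

# f(x) = x^2 + 2x + 3 evaluated at x = 0, 1, 2 gives the values 3, 6, 11
y_hat = compute_model_output([0.0, 1.0, 2.0], a=1.0, b=2.0, c=3.0)
print(y_hat)
```

Because the expression is vectorised, the same function works for a single number or for the whole training set at once.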

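Loss function

As in single-variable linear regression, the mean squared error is used as the loss function:

$$J(a,b,c) = \frac{1}{2m} \sum_{i=0}^{m-1} \left(f_{a,b,c}(x^{(i)}) - y^{(i)}\right)^2 \tag{2}$$

where the prediction for the i-th sample is

$$f_{a,b,c}(x^{(i)}) = a\,(x^{(i)})^2 + b\,x^{(i)} + c \tag{3}$$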
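Equation (2) translates almost line for line into NumPy. The sketch below is one possible compute_cost; the signature is an assumption, and the three sample points are made up for illustration rather than taken from single_data.txt.

```python
import numpy as np

def compute_cost(x, y, a, b, c):
    """Mean squared error cost J(a, b, c) from equation (2) (assumed signature)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    m = x.shape[0]
    residual = a * x**2 + b * x + c - y   # f(x_i) - y_i for every sample
    return float(np.sum(residual**2) / (2 * m))

x = np.array([0.0, 1.0, 2.0])
y = np.array([3.0, 6.0, 11.0])            # generated by f(x) = x^2 + 2x + 3

cost_exact = compute_cost(x, y, 1.0, 2.0, 3.0)   # perfect fit: cost is 0
cost_shift = compute_cost(x, y, 1.0, 2.0, 4.0)   # every residual is 1: 3 / (2 * 3) = 0.5
print(cost_exact, cost_shift)
```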

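Gradient computation

$$
\begin{align}
\frac{\partial J(a,b,c)}{\partial a} &= \frac{1}{m} \sum_{i=0}^{m-1} \left(f_{a,b,c}(x^{(i)}) - y^{(i)}\right) (x^{(i)})^2 \tag{4} \\
\frac{\partial J(a,b,c)}{\partial b} &= \frac{1}{m} \sum_{i=0}^{m-1} \left(f_{a,b,c}(x^{(i)}) - y^{(i)}\right) x^{(i)} \tag{5} \\
\frac{\partial J(a,b,c)}{\partial c} &= \frac{1}{m} \sum_{i=0}^{m-1} \left(f_{a,b,c}(x^{(i)}) - y^{(i)}\right) \tag{6}
\end{align}
$$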
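The three partial derivatives (4) to (6) share the same residual term, so a compute_gradient sketch only needs to compute it once. The signature and the return order (a, b, c) are assumptions, and the sample points are illustrative.

```python
import numpy as np

def compute_gradient(x, y, a, b, c):
    """Partial derivatives of J with respect to a, b, c (equations 4 to 6)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    m = x.shape[0]
    residual = a * x**2 + b * x + c - y   # shared by all three derivatives
    dj_da = np.sum(residual * x**2) / m
    dj_db = np.sum(residual * x) / m
    dj_dc = np.sum(residual) / m
    return dj_da, dj_db, dj_dc

x = np.array([0.0, 1.0, 2.0])
y = np.array([3.0, 6.0, 11.0])            # generated by f(x) = x^2 + 2x + 3

# With c = 4 every residual equals 1, so the gradients reduce to
# mean(x^2) = 5/3, mean(x) = 1 and 1.
dj_da, dj_db, dj_dc = compute_gradient(x, y, 1.0, 2.0, 4.0)
print(dj_da, dj_db, dj_dc)
```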

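Gradient descent algorithm

$$
\begin{aligned}
&\text{repeat until convergence: } \{ \\
&\quad a = a - \alpha \frac{\partial J(a,b,c)}{\partial a} \\
&\quad b = b - \alpha \frac{\partial J(a,b,c)}{\partial b} \\
&\quad c = c - \alpha \frac{\partial J(a,b,c)}{\partial c} \\
&\}
\end{aligned} \tag{7}
$$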
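Update rule (7) can be sketched as a short loop. This version makes two simplifying choices that are assumptions rather than the article's code: it runs a fixed number of iterations instead of testing for convergence, and it evaluates all three partial derivatives before changing any parameter. The training data is synthetic and noise-free, not single_data.txt.

```python
import numpy as np

def gradient_descent(x, y, a, b, c, alpha, num_iters):
    """Apply update rule (7) num_iters times and return the final (a, b, c)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    m = x.shape[0]
    for _ in range(num_iters):
        residual = a * x**2 + b * x + c - y
        # Evaluate every partial derivative at the current point first
        dj_da = np.sum(residual * x**2) / m
        dj_db = np.sum(residual * x) / m
        dj_dc = np.sum(residual) / m
        # Then move each parameter against its gradient
        a -= alpha * dj_da
        b -= alpha * dj_db
        c -= alpha * dj_dc
    return a, b, c

# Synthetic data generated from a = 2, b = -1, c = 0.5
x_train = np.linspace(-1.0, 1.0, 21)
y_train = 2.0 * x_train**2 - 1.0 * x_train + 0.5

a_fit, b_fit, c_fit = gradient_descent(
    x_train, y_train, 0.0, 0.0, 0.0, alpha=0.1, num_iters=5000
)
print(a_fit, b_fit, c_fit)
```

On this small, well-scaled example the fitted values approach the generating parameters; on data with larger x values the learning rate would need to be much smaller.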

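Problems encountered

- In doTrain, the p_hist argument is not a NumPy array when it is passed in, so the slice p_hist[:,0] cannot be used to pull out a whole column of values unless it is first converted with p_hist = np.array(p_hist).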

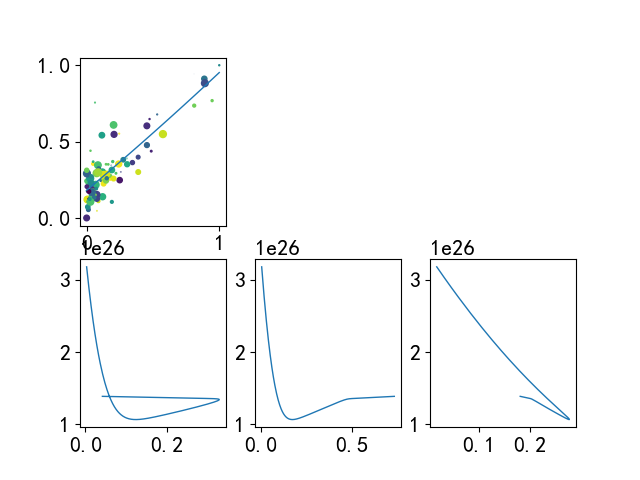

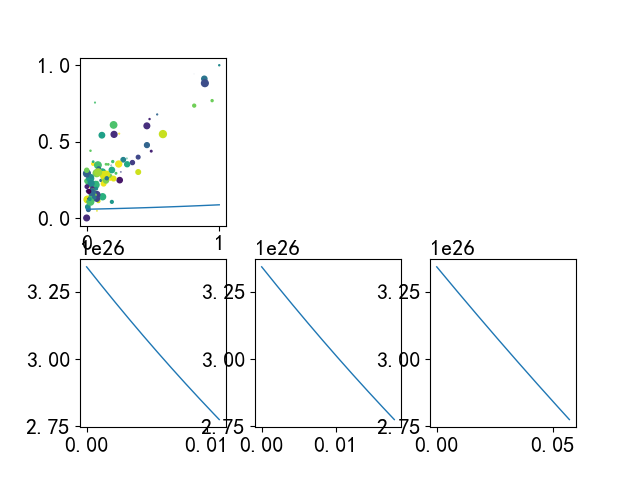

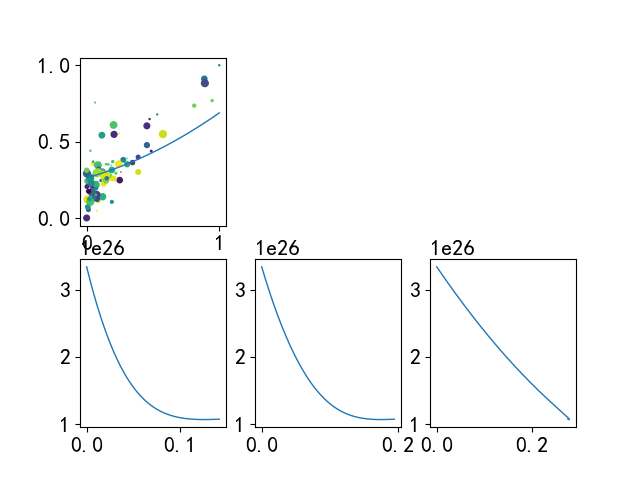

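- This was my first real taste of parameter tuning in AI: I kept changing the number of iterations, watched how the three parameters a, b and c converged, and then adjusted the learning rate accordingly.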
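Both points above can be reproduced in a few lines. The sketch below uses a hypothetical helper, descent_with_history, that records [a, b, c] after every step in a plain list, shows that the list rejects p_hist[:, 0] until it is converted with np.array, and then compares how close a few learning rates get after the same number of iterations. The data is synthetic, and the learning rates are illustrative rather than the values used in the article.

```python
import numpy as np

def descent_with_history(x, y, alpha, num_iters):
    """Gradient descent from (0, 0, 0); returns a list with one [a, b, c] per step."""
    a = b = c = 0.0
    m = x.shape[0]
    p_hist = []
    for _ in range(num_iters):
        residual = a * x**2 + b * x + c - y
        dj_da = np.sum(residual * x**2) / m
        dj_db = np.sum(residual * x) / m
        dj_dc = np.sum(residual) / m
        a, b, c = a - alpha * dj_da, b - alpha * dj_db, c - alpha * dj_dc
        p_hist.append([a, b, c])
    return p_hist

# Synthetic data generated from a = 2, b = -1, c = 0.5
x = np.linspace(-1.0, 1.0, 21)
y = 2.0 * x**2 - 1.0 * x + 0.5
true_params = np.array([2.0, -1.0, 0.5])

p_hist = descent_with_history(x, y, alpha=0.1, num_iters=200)
try:
    p_hist[:, 0]                  # a list of lists does not accept tuple indices
    list_slice_ok = True
except TypeError:
    list_slice_ok = False

p_hist = np.array(p_hist)         # shape (200, 3): one row per iteration
a_hist, b_hist, c_hist = p_hist[:, 0], p_hist[:, 1], p_hist[:, 2]

# Distance from the generating parameters after 200 steps, per learning rate
final_error = {}
for alpha in (0.01, 0.1, 0.5):
    hist = np.array(descent_with_history(x, y, alpha, num_iters=200))
    final_error[alpha] = float(np.linalg.norm(hist[-1] - true_params))
    print(f"alpha={alpha}: a, b, c = {hist[-1]}, error = {final_error[alpha]:.4f}")
```

Plotting a_hist, b_hist and c_hist against the iteration index gives the kind of convergence curves that make it easier to judge whether to change the learning rate or the iteration count.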

Code and data

main.py

hoppz_plt_util.py: a custom module for plotting

single_data.txt

6.1101 17.592 5.5277 9.1302 8.5186 13.662 7.0032 11.854 5.8598 6.8233 8.3829 11.886 7.4764 4.3483 8.5781 12 6.4862 6.5987 5.0546 3.8166 5.7107 3.2522 14.164 15.505 5.734 3.1551 8.4084 7.2258 5.6407 0.71618 5.3794 3.5129 6.3654 5.3048 5.1301 0.56077 6.4296 3.6518 7.0708 5.3893 6.1891 3.1386 20.27 21.767 5.4901 4.263 6.3261 5.1875 5.5649 3.0825 18.945 22.638 12.828 13.501 10.957 7.0467 13.176 14.692 22.203 24.147 5.2524 -1.22 6.5894 5.9966 9.2482 12.134 5.8918 1.8495 8.2111 6.5426 7.9334 4.5623 8.0959 4.1164 5.6063 3.3928 12.836 10.117 6.3534 5.4974 5.4069 0.55657 6.8825 3.9115 11.708 5.3854 5.7737 2.4406 7.8247 6.7318 7.0931 1.0463 5.0702 5.1337 5.8014 1.844 11.7 8.0043 5.5416 1.0179 7.5402 6.7504 5.3077 1.8396 7.4239 4.2885 7.6031 4.9981 6.3328 1.4233 6.3589 -1.4211 6.2742 2.4756 5.6397 4.6042 9.3102 3.9624 9.4536 5.4141 8.8254 5.1694 5.1793 -0.74279 21.279 17.929 14.908 12.054 18.959 17.054 7.2182 4.8852 8.2951 5.7442 10.236 7.7754 5.4994 1.0173 20.341 20.992 10.136 6.6799 7.3345 4.0259 6.0062 1.2784 7.2259 3.3411 5.0269 -2.6807 6.5479 0.29678 7.5386 3.8845 5.0365 5.7014 10.274 6.7526 5.1077 2.0576 5.7292 0.47953 5.1884 0.20421 6.3557 0.67861 9.7687 7.5435 6.5159 5.3436 8.5172 4.2415 9.1802 6.7981 6.002 0.92695 5.5204 0.152 5.0594 2.8214 5.7077 1.8451 7.6366 4.2959 5.8707 7.2029 5.3054 1.9869 8.2934 0.14454 13.394 9.0551 5.4369 0.61705
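One way to turn a listing like the one above into training arrays is sketched below. It assumes the numbers alternate x, y, x, y, which is how the listing reads but is not stated in the article; the layout of the actual file on disk (one pair per line, comma or space separated) is also unknown. Only the first three pairs are used here.

```python
import numpy as np

# First three pairs copied from the listing above
raw = "6.1101 17.592 5.5277 9.1302 8.5186 13.662"

# Assumption: values alternate x, y, so consecutive tokens form one sample
values = np.array([float(token) for token in raw.split()])
data = values.reshape(-1, 2)

x_train = data[:, 0]   # first column: inputs
y_train = data[:, 1]   # second column: targets
print(x_train, y_train)
```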


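Author: Hoppz

Article link: https://www.cnblogs.com/hoppz/p/16557958.html

About the author: comments and private messages are answered as soon as possible; you can also message me directly.

License: unless otherwise stated, all articles on this blog are licensed under BY-NC-SA. Please credit the source when reposting!

Support the author: if you found this article helpful, you can click [Recommend] at the bottom right of the post. Your encouragement is my biggest motivation!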
