Computer Architecture and Practical Designs (CAPD)

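Outline

- Instruction sets and simple CPU processor design

- Complex CPU pipelines and out-of-order execution (instruction-level parallelism)

- Caches, main memory, and storage (the memory hierarchy)

- Multithreading and multicore systems (thread-level parallelism)

- Vector processors and GPUs (data-level parallelism)

- Microcode and very long instruction word (VLIW) processors

- Networks-on-chip

- Warehouse-scale computers, cloud computing, and distributed systems

- System evaluation and performance analysis

- Hardware security, hardware virtualization, and acceleration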

Instruction sets and simple CPU processor design

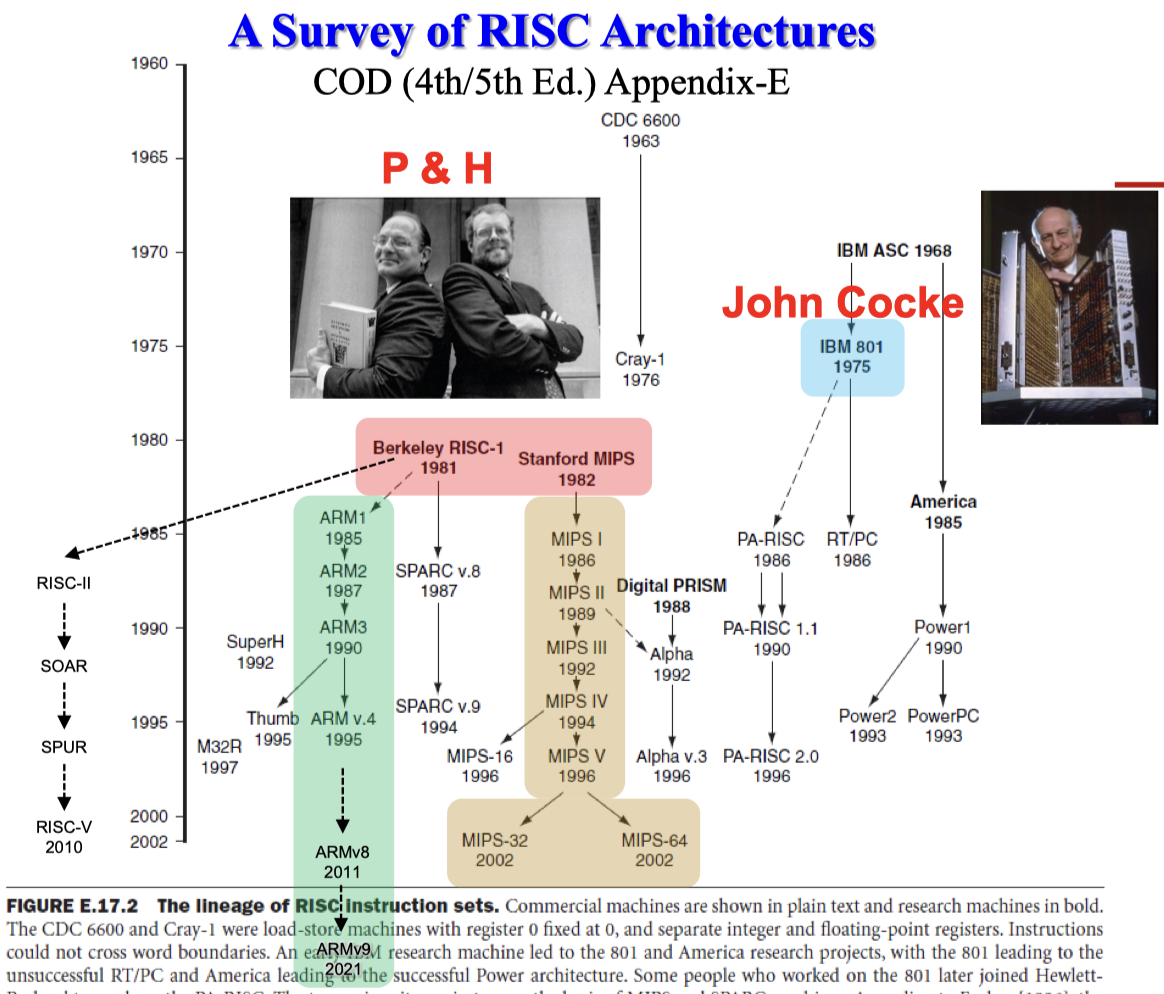

Timeline and relationships of RISC-V, ARM, and MIPS

Complex CPU pipelines and out-of-order execution

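How the number of pipeline stages matters: within practical limits, the more finely an instruction's execution is divided (that is, the more stages there are), the more instructions can be executing in parallel.

Intuitively, if we ignore the communication and synchronization delay between stages, more stages allow a shorter clock cycle. Since the CPI of a single-issue pipeline is at best 1, a shorter clock cycle reduces the average execution time per instruction. Keep in mind that this is an idealized picture that ignores many other factors.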
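To see why the inter-stage overhead matters, here is a minimal Python sketch with made-up numbers (the 1000 ps logic delay and the 20 ps per-stage latch overhead are assumptions, not course data). It divides the logic evenly over k stages and compares the ideal time per instruction with the time once overhead is included.

```python
# A minimal sketch with hypothetical numbers (not from the course):
# 1000 ps of combinational logic split evenly into k stages, plus a fixed
# latch/synchronization overhead added to every stage.
LOGIC_DELAY_PS = 1000.0   # assumed total logic delay of one instruction
LATCH_OVERHEAD_PS = 20.0  # assumed per-stage pipeline-register overhead


def clock_period_ps(stages: int, overhead_ps: float = LATCH_OVERHEAD_PS) -> float:
    """Cycle time when the logic is divided evenly across `stages` stages."""
    return LOGIC_DELAY_PS / stages + overhead_ps


def time_per_instruction_ps(stages: int, cpi: float = 1.0,
                            overhead_ps: float = LATCH_OVERHEAD_PS) -> float:
    """Average time per instruction = CPI x cycle time (ideal pipeline: CPI = 1)."""
    return cpi * clock_period_ps(stages, overhead_ps)


if __name__ == "__main__":
    for k in (1, 5, 10, 20, 50):
        ideal = time_per_instruction_ps(k, overhead_ps=0.0)
        real = time_per_instruction_ps(k)
        print(f"k={k:2d}  ideal={ideal:7.1f} ps  with overhead={real:7.1f} ps")
```

With these assumed numbers, going from 5 to 50 stages shrinks the ideal time per instruction from 200 ps to 20 ps, but with overhead only from 220 ps to 40 ps, because the fixed overhead becomes a larger share of each shorter cycle.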

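How to think about pipeline stages: executing an instruction involves several phases. If these phases are subdivided into more stages, while still guaranteeing that instructions execute correctly, and the stages can operate at the same time as one another, then several "lanes" can be introduced and multiple instructions can execute simultaneously, each in a different stage. Absent other effects (for example, a stall after every few instructions), the number of stages is the pipeline depth, which is also the number of instructions in the pipeline at the same time.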
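The following minimal sketch prints a space-time diagram for an ideal pipeline (the IF/ID/EX/MEM/WB stage names and the no-stall assumption are illustrative). Counting the occupied stages in each cycle shows that, once the pipeline is full, the number of instructions in flight equals the number of stages.

```python
# Sketch assuming the classic 5-stage pipeline and no stalls: instruction i
# enters IF in cycle i and advances one stage per cycle.
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def stage_of(instr: int, cycle: int, depth: int):
    """Index of the stage instruction `instr` occupies in `cycle`, or None."""
    s = cycle - instr
    return s if 0 <= s < depth else None


def in_flight(cycle: int, n_instr: int, depth: int) -> int:
    """Number of instructions occupying some stage in `cycle`."""
    return sum(1 for i in range(n_instr) if stage_of(i, cycle, depth) is not None)


def diagram(n_instr: int, stages=STAGES) -> str:
    """Space-time diagram: one row per instruction, one column per cycle."""
    depth = len(stages)
    total_cycles = n_instr + depth - 1
    rows = []
    for i in range(n_instr):
        cells = []
        for c in range(total_cycles):
            s = stage_of(i, c, depth)
            cells.append(f"{stages[s]:>4}" if s is not None else "   .")
        rows.append(f"I{i}:" + "".join(cells))
    return "\n".join(rows)


if __name__ == "__main__":
    print(diagram(6))
    print("max in flight:", max(in_flight(c, 6, len(STAGES)) for c in range(10)))
```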

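Note that in some calculation problems the pipeline is not analyzed starting from its initial empty state; most problems look at the pipeline while it is already running (the steady state), e.g., when computing the pipeline's CPI.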
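A small worked calculation shows why the starting state matters. Assuming an ideal k-stage pipeline with no stalls, n instructions take k + n - 1 cycles from an empty pipeline, so the CPI including fill time is (k + n - 1) / n, which approaches the steady-state value of 1 as n grows. The function names below are hypothetical.

```python
from fractions import Fraction


def total_cycles(n_instr: int, depth: int) -> int:
    """Cycles to finish n instructions on an ideal pipeline that starts empty:
    the first instruction needs `depth` cycles, each later one finishes 1 cycle after."""
    return depth + n_instr - 1


def cpi_from_empty(n_instr: int, depth: int) -> Fraction:
    """CPI including the pipeline fill time."""
    return Fraction(total_cycles(n_instr, depth), n_instr)


STEADY_STATE_CPI = 1  # ideal pipeline: one instruction completes per cycle

if __name__ == "__main__":
    for n in (1, 5, 100, 10_000):
        print(n, float(cpi_from_empty(n, 5)))
```

For k = 5, a single instruction gives CPI 5, 100 instructions give 1.04, and 10,000 give 1.0004.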

Pipeline hazards

Structural hazards

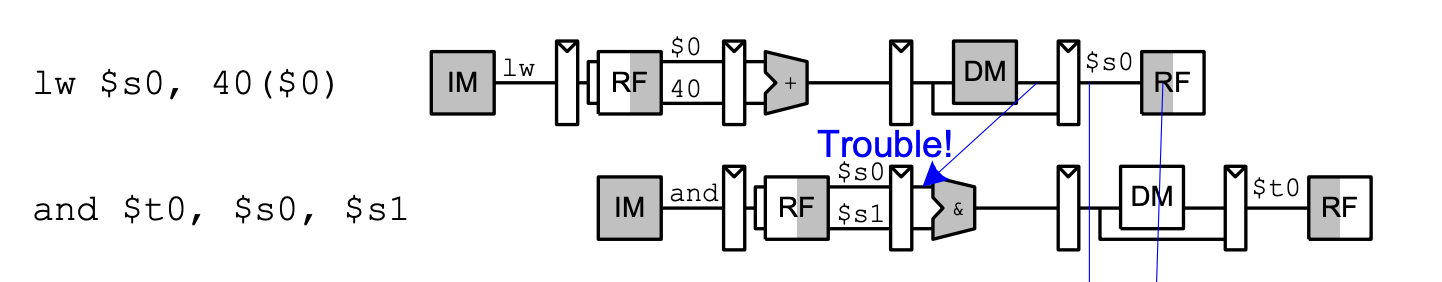

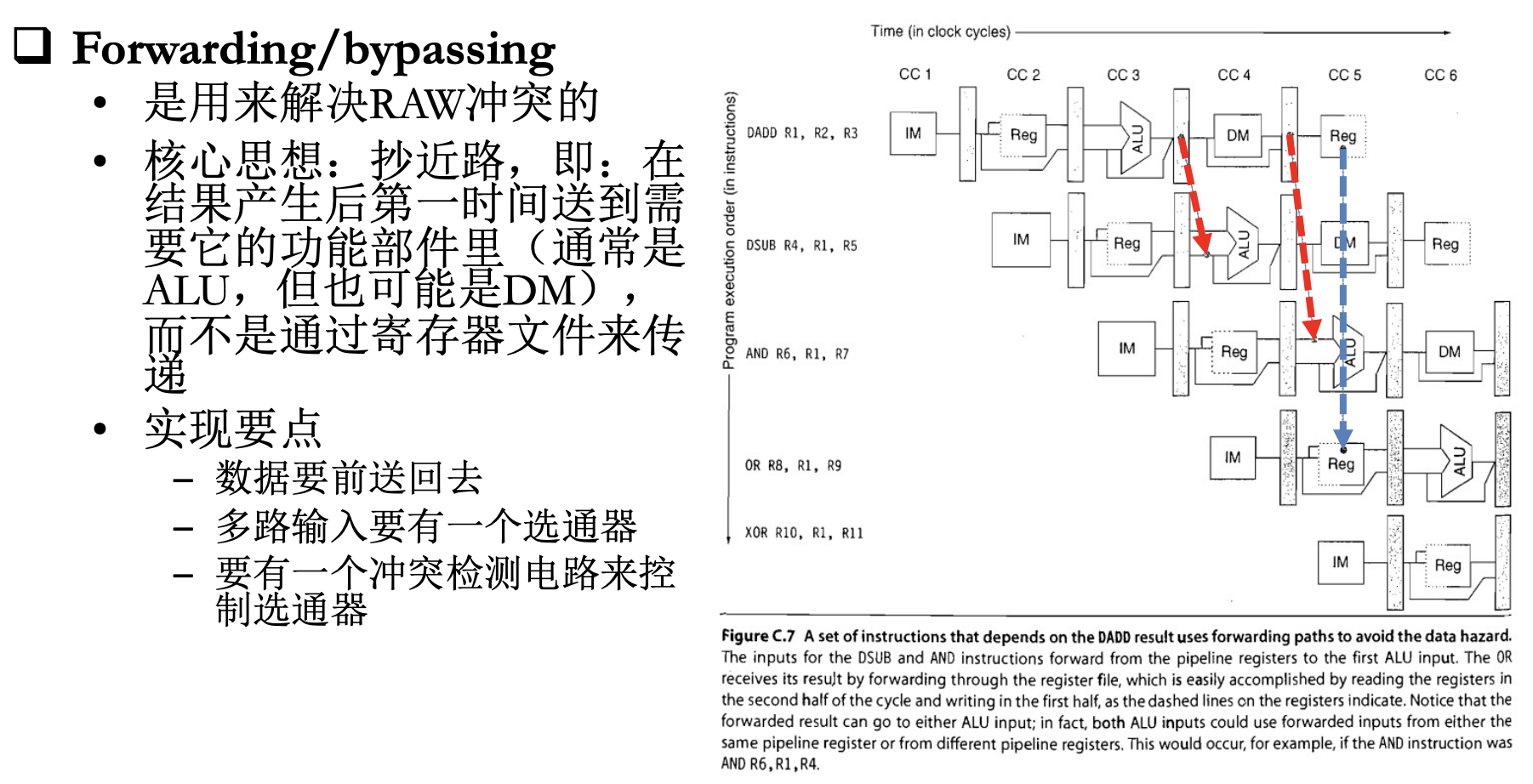

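Data hazards

- Data forwarding (bypassing)

Suppose a register's new value is known once the producing instruction finishes its EX stage, but it is not written to the register file until the WB stage. In the pipeline, the next instruction needs that value before the previous instruction reaches WB, so the value produced at the end of the previous instruction's EX stage is passed through a bypass path directly to the point where the next instruction uses it.

Note that forwarding can only pass data from an earlier cycle of the producing instruction to a later cycle of the consuming instruction; it cannot send data backward in time, as in the trouble case below.

Writing a register in the first half of a cycle and reading the same register in the second half of that cycle (split-cycle register file access) is also counted as data forwarding in these notes.

- Stalling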
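To make the "no time travel" rule concrete, here is a sketch that counts stall cycles in the classic 5-stage pipeline (IF ID EX MEM WB, one instruction per cycle; this is an assumption about the pipeline the notes have in mind). A value can only be forwarded to a stage that runs after it is produced; a well-known case where forwarding alone is not enough is a load followed immediately by an instruction that uses the loaded value.

```python
# Sketch assuming the classic 5-stage pipeline (IF ID EX MEM WB), one issue per
# cycle. Cycle numbers are relative to the producer's IF (cycle 0); a consumer
# `distance` instructions later runs each stage `distance` cycles later.
IF, ID, EX, MEM, WB = range(5)


def stall_cycles(distance: int, producer_is_load: bool,
                 forwarding: bool, split_cycle_rf: bool = True) -> int:
    """Stalls needed before a consumer can use the producer's result.

    distance = 1 means the consumer is the very next instruction.
    """
    if forwarding:
        # The value exists at the end of EX (ALU result) or MEM (loaded data)
        # and is needed at the start of the consumer's EX. Forwarding can only
        # move it to a later cycle, never an earlier one.
        ready_after = MEM if producer_is_load else EX
        needed_in = distance + EX
        return max(0, ready_after + 1 - needed_in)
    # Without forwarding the consumer must read the register file in ID after WB.
    # A split-cycle register file (write first half, read second half) lets the
    # read happen in the same cycle as WB.
    earliest_read = WB if split_cycle_rf else WB + 1
    return max(0, earliest_read - (distance + ID))
```

Under these assumptions, an ALU result forwarded to the next instruction needs no stall, a load followed directly by a dependent instruction still needs 1 stall, and without forwarding a dependent next instruction waits 2 cycles with a split-cycle register file (3 without it).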

Branch/control hazards

- Freezing/flushing the pipeline

- Predict-taken scheme

- Predict-not-taken scheme

- Delayed branch
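The cost of each branch-handling scheme can be compared with the usual effective-CPI formula, CPI = base CPI + branch frequency x wasted cycles per branch. The sketch below uses a deliberately simplified model (assumptions: a fixed penalty per stalled or mispredicted branch, and for predict-taken, that the target address is available early enough; the branch statistics in the example are made up).

```python
def branch_cpi(scheme: str, branch_freq: float, taken_frac: float,
               penalty: int, fill_frac: float = 0.0, base_cpi: float = 1.0) -> float:
    """Effective CPI = base CPI + branch frequency x wasted cycles per branch.

    Simplified model (assumption): every wrong guess, or every branch under
    freeze/flush, costs `penalty` cycles; a delayed branch has `penalty` delay
    slots, of which a fraction `fill_frac` hold useful instructions.
    """
    if scheme == "freeze":               # stall/flush on every branch
        wasted = penalty
    elif scheme == "predict_not_taken":  # only taken branches pay
        wasted = taken_frac * penalty
    elif scheme == "predict_taken":      # only not-taken branches pay (target assumed known in time)
        wasted = (1.0 - taken_frac) * penalty
    elif scheme == "delayed":            # unfilled delay slots become nops
        wasted = (1.0 - fill_frac) * penalty
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    return base_cpi + branch_freq * wasted


if __name__ == "__main__":
    for s in ("freeze", "predict_not_taken", "predict_taken", "delayed"):
        print(s, round(branch_cpi(s, 0.2, 0.6, 1, fill_frac=0.5), 3))
```

With 20% branches, 60% of them taken, and a 1-cycle penalty, this assumed model gives CPI 1.2 for freeze/flush, 1.12 for predict-not-taken, 1.08 for predict-taken, and 1.1 for a delayed branch whose slot is filled half the time.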

Caches, main memory, and storage

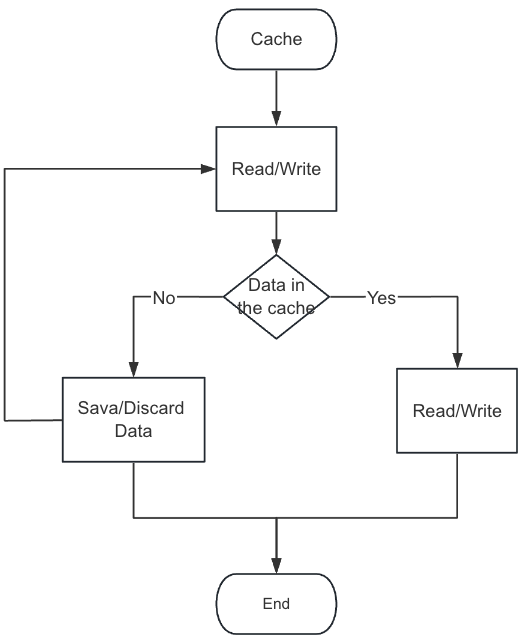

A cache is designed to hold data temporarily as it is transferred between the CPU and main memory, so it constantly exchanges data with memory. To make these transfers efficient, memory and cache share a common logical unit, the block: every transfer moves one whole block of data.
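Because data moves in whole blocks, one miss also brings in the neighbouring bytes. This minimal sketch assumes a 16-byte block and an unbounded cache (no evictions), reads bytes sequentially, and counts misses; the block size and function names are assumptions for illustration.

```python
BLOCK_SIZE = 16  # bytes per block (assumed for illustration)


def block_number(addr: int, block_size: int = BLOCK_SIZE) -> int:
    """Which block an address belongs to."""
    return addr // block_size


def block_offset(addr: int, block_size: int = BLOCK_SIZE) -> int:
    """Position of the byte inside its block."""
    return addr % block_size


def misses_for_sequential_reads(start: int, n_bytes: int,
                                block_size: int = BLOCK_SIZE) -> int:
    """Read n_bytes one byte at a time; each miss fetches the whole block.

    Assumes an unbounded cache, so a fetched block is never evicted.
    """
    cached_blocks = set()
    misses = 0
    for addr in range(start, start + n_bytes):
        b = block_number(addr, block_size)
        if b not in cached_blocks:
            misses += 1
            cached_blocks.add(b)
    return misses
```

Reading 64 consecutive bytes from address 0 then costs 4 misses instead of 64, which is the payoff of transferring a block at a time.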

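Cache design key points

A cache design needs to solve three problems:

- Storing data (and discarding it)

  - Placement: where to put a block, and how to find it

  - Replacement: when a replacement is needed, which block in the cache to evict

  - Granularity of management: how large a block should be

- Reading data

  - Instructions/Data: should the instruction cache and the data cache be separate?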

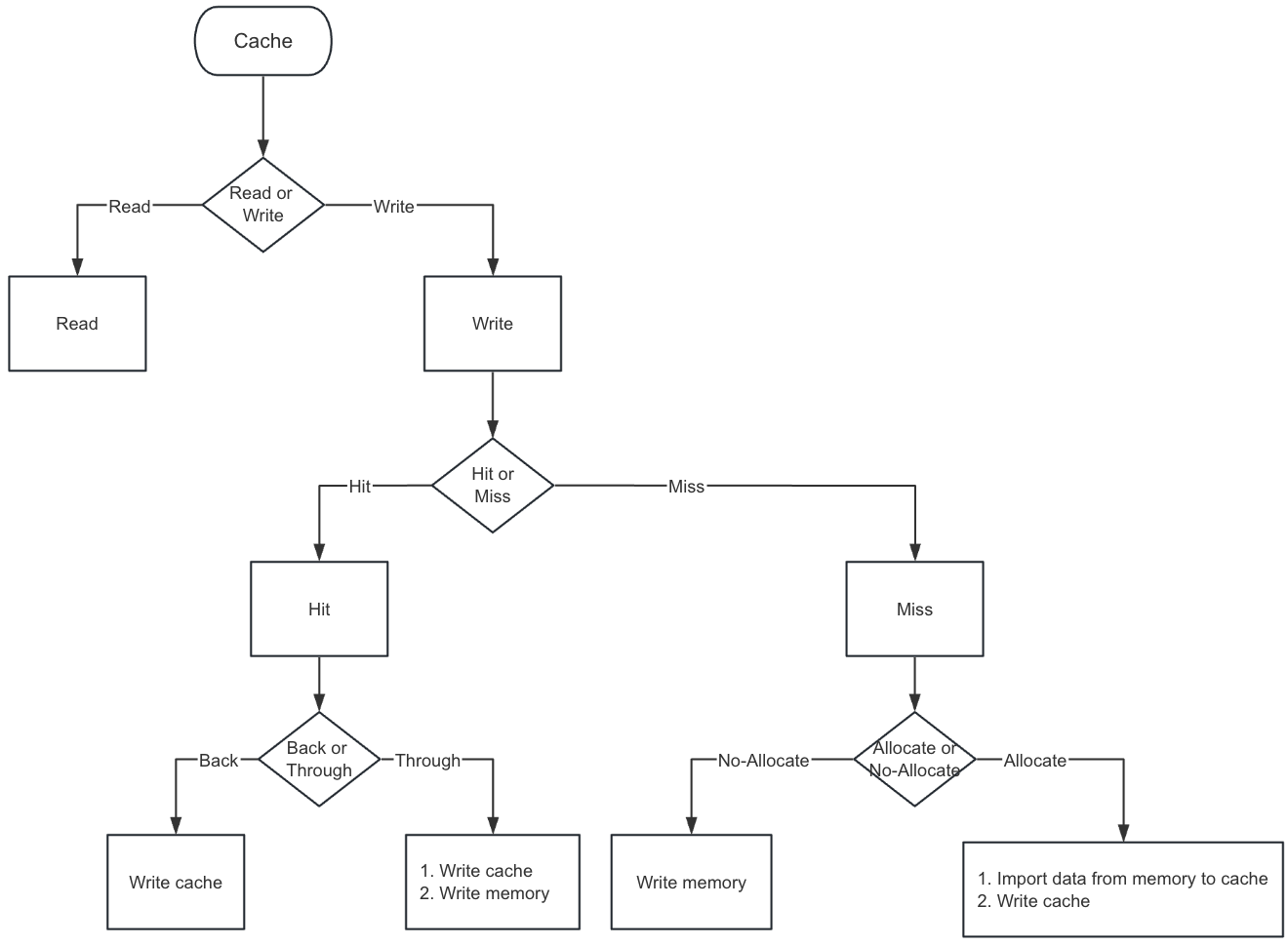

- Writing (modifying) data

  - Write policy

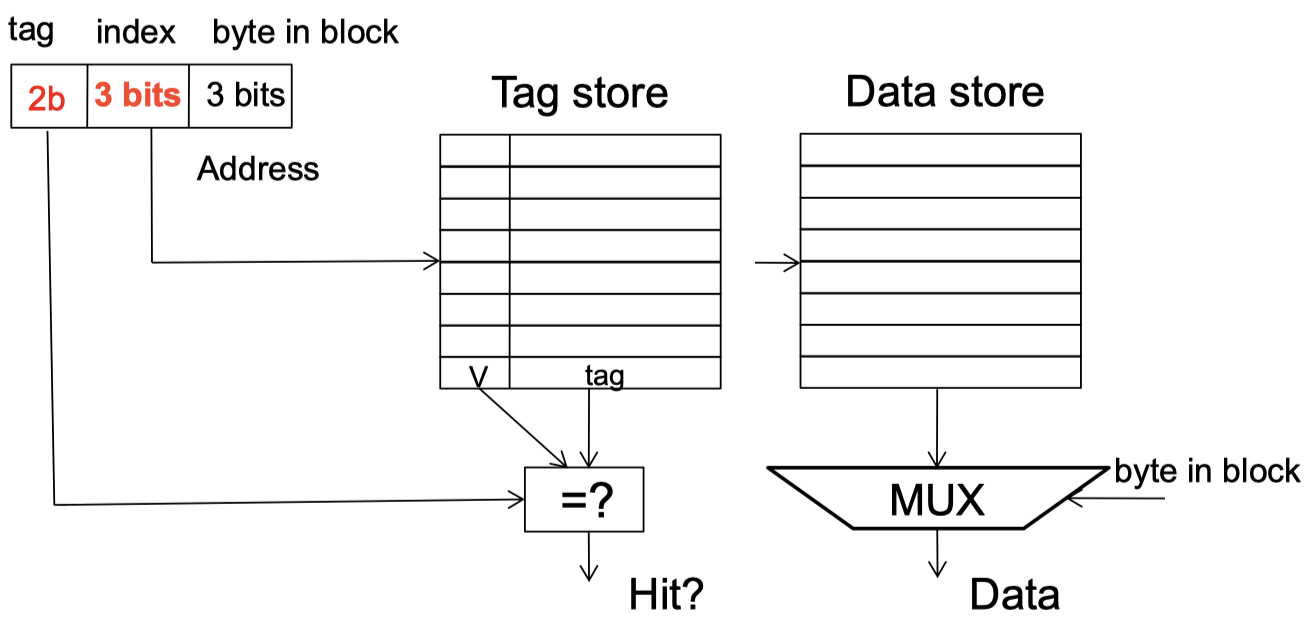

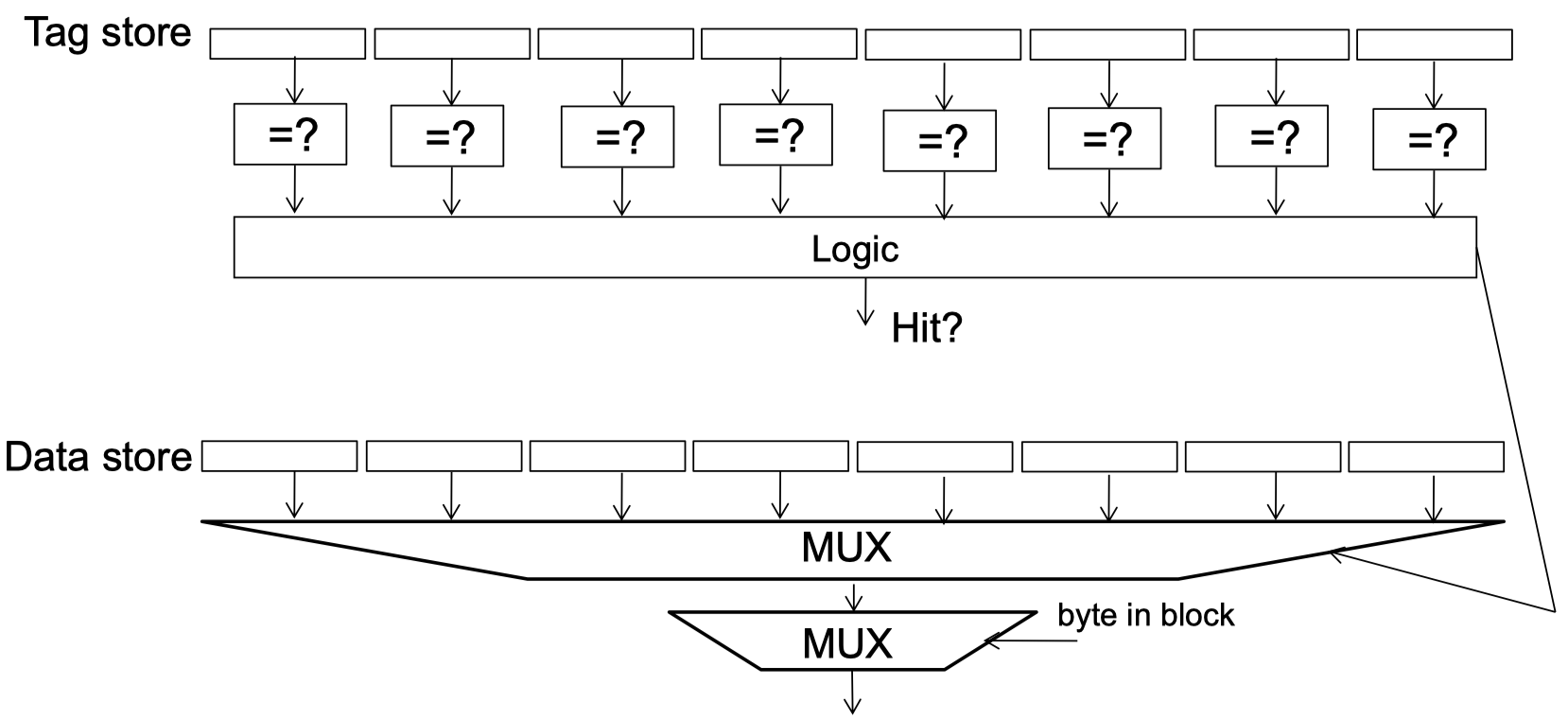

Placement: cache mapping schemes

- Direct-mapped

The problem with this scheme is that if we alternately access two blocks that have the same index but different tags, every access becomes a cache miss, because each block evicts the other from the only location its index maps to.
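This ping-pong effect can be reproduced with a toy direct-mapped cache. It is a sketch with assumed parameters (8 lines of 16 bytes), not a model of any particular processor: addresses 0 and 128 both map to index 0 with different tags, so alternating between them misses every time.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that only tracks tags (no data)."""

    def __init__(self, num_lines: int, block_size: int):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines   # None marks an invalid line

    def access(self, addr: int) -> bool:
        """Return True on a hit; on a miss, load the block (evicting the old one)."""
        block = addr // self.block_size
        index = block % self.num_lines   # the single line this block may use
        tag = block // self.num_lines
        if self.tags[index] == tag:
            return True
        self.tags[index] = tag
        return False


if __name__ == "__main__":
    cache = DirectMappedCache(num_lines=8, block_size=16)
    print([cache.access(a) for a in [0, 128] * 4])  # ping-pong on index 0
```

By contrast, alternating between addresses 0 and 16, which map to different indices, misses only on the first two accesses.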

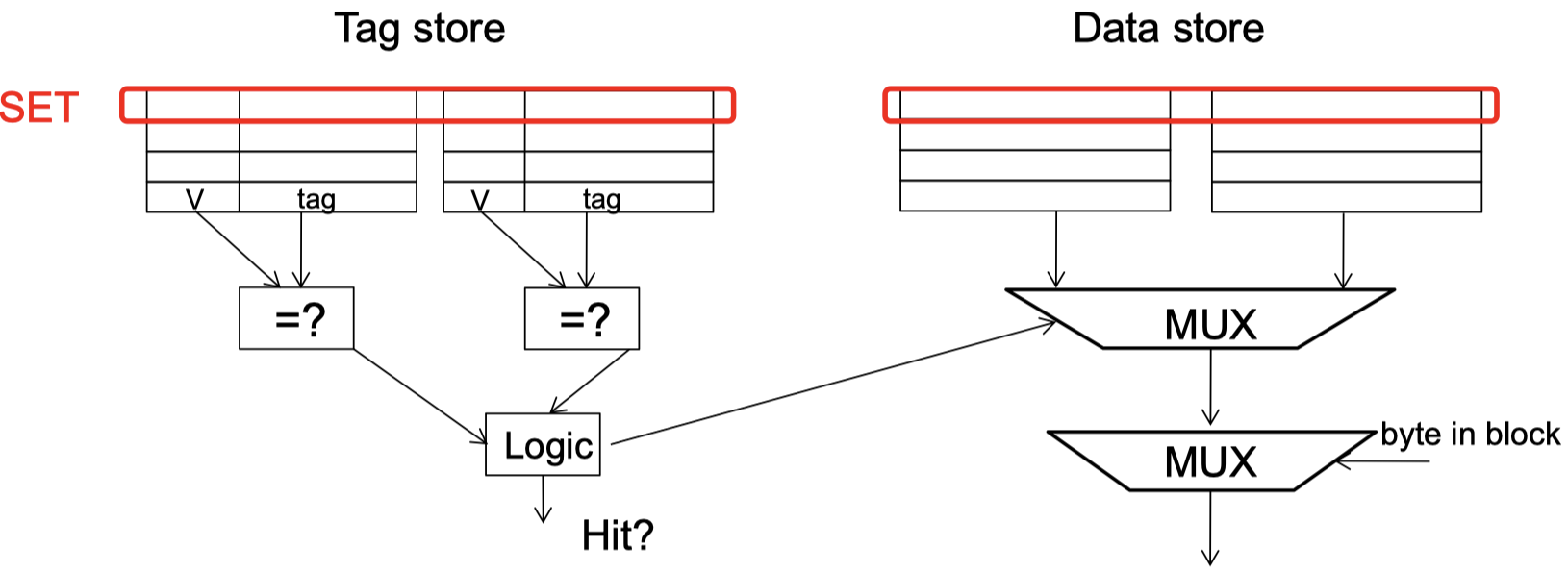

- Set Associativity

The weakness of direct mapping is that each index maps to only one location.

One way to fix this is to increase the number of locations an index maps to.

The index bits select a set, and the cache can place the block in either of the two locations in that set (this uses the 2-way example in the picture above; in practice, many other associativities exist).

The advantage of this scheme is that an index maps to multiple locations, rather than just one as in a direct-mapped cache, so it lowers the probability of a cache miss.

The disadvantage is that the cache needs more tag comparison circuits (one comparator per way, all checked in parallel) and a larger tag store, because for the same capacity fewer index bits means more tag bits per block.
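Here is the same capacity (8 blocks of 16 bytes) organized as a 2-way set-associative cache with 4 sets. The sketch assumes LRU replacement within a set, which is one common choice rather than the only one; the tag is compared against every way of the selected set, which is where the extra comparators come from.

```python
class SetAssociativeCache:
    """Toy set-associative cache; each set keeps its tags ordered LRU -> MRU."""

    def __init__(self, num_sets: int, ways: int, block_size: int):
        self.num_sets = num_sets
        self.ways = ways
        self.block_size = block_size
        self.sets = [[] for _ in range(num_sets)]

    def access(self, addr: int) -> bool:
        """Return True on a hit, False on a miss (the block is then loaded)."""
        block = addr // self.block_size
        index = block % self.num_sets   # index bits pick the set
        tag = block // self.num_sets    # remaining high bits form the tag
        lines = self.sets[index]
        if tag in lines:                # compare with every way in the set
            lines.remove(tag)
            lines.append(tag)           # move to the MRU position
            return True
        if len(lines) == self.ways:
            lines.pop(0)                # evict the least recently used block
        lines.append(tag)
        return False
```

The alternating 0/128 pattern now misses only twice, since both blocks fit in set 0. Cycling through three blocks that map to the same set (0, 128, 256) still misses every time under LRU, so associativity reduces conflicts without eliminating them.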

- Full Associativity

If we keep increasing the associativity, we eventually reach full associativity. For a cache that can store eight blocks, full associativity is equivalent to eight-way set associativity.

Because there is only one set, which contains every block location, a block can be placed in any location. Using index bits to select a location is therefore meaningless: the address is split into just a tag and a block offset.
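The effect of associativity on the address fields can be computed directly. The sketch below assumes 32-bit addresses, an 8-block cache, and 16-byte blocks (all power-of-two sizes) and splits an address into tag, index, and offset bits; the helper names are hypothetical.

```python
def log2_exact(n: int) -> int:
    """log2 of a power of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


def address_fields(addr_bits: int, cache_blocks: int, block_size: int, ways: int):
    """Return (tag_bits, index_bits, offset_bits) for a set-associative cache.

    ways == 1 is direct-mapped; ways == cache_blocks is fully associative.
    """
    if cache_blocks % ways:
        raise ValueError("ways must divide the number of blocks")
    num_sets = cache_blocks // ways
    offset_bits = log2_exact(block_size)
    index_bits = log2_exact(num_sets)  # 0 bits when there is a single set
    tag_bits = addr_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


if __name__ == "__main__":
    for ways in (1, 2, 8):
        tag, index, offset = address_fields(32, 8, 16, ways)
        print(f"{ways}-way: tag={tag} index={index} offset={offset} tag store={8 * tag} bits")
```

Direct-mapped gives 25/3/4 bits, 2-way gives 26/2/4, and fully associative gives 28/0/4. The total tag store grows from 8 x 25 = 200 bits to 8 x 28 = 224 bits, consistent with the larger tag store that comes with higher associativity.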

Replacement: cache priority

We have to consider how the priorities of cache blocks change in the following three scenarios:

- cache fill

- cache hit

- cache miss
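One common way to track priority is LRU with a small age counter per way (0 = most recently used). The sketch below is a hypothetical illustration for a single set; it reads "cache fill" as placing a new block into an empty way and "cache miss" as a miss in a full set that forces a replacement, which is an interpretation of the three scenarios above.

```python
class LRUSet:
    """One cache set; age[w] is the LRU priority of way w (0 = most recent)."""

    def __init__(self, ways: int):
        self.tags = [None] * ways  # None marks an empty way
        self.age = [0] * ways

    def _make_mru(self, way: int, old_age: int) -> None:
        # Every valid way that was more recent than `way` becomes one step older.
        for w, tag in enumerate(self.tags):
            if w != way and tag is not None and self.age[w] < old_age:
                self.age[w] += 1
        self.age[way] = 0

    def access(self, tag) -> str:
        if tag in self.tags:                     # cache hit: promote to MRU
            way = self.tags.index(tag)
            self._make_mru(way, self.age[way])
            return "hit"
        if None in self.tags:                    # cache fill: use an empty way
            way = self.tags.index(None)
            self.tags[way] = tag
            self._make_mru(way, len(self.tags))  # the new block ages every valid way
            return "fill"
        way = self.age.index(max(self.age))      # cache miss: evict the LRU way
        self.tags[way] = tag
        self._make_mru(way, self.age[way])
        return "miss"
```

For a 2-way set, the sequence A, B, A, C produces fill, fill, hit, miss, and C replaces B because the hit on A made B the least recently used block.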

Write
