Docker 搭建Spark 依赖singularities/spark:2.2镜像

singularities/spark:2.2版本中

Hadoop版本:2.8.2

Spark版本: 2.2.1

Scala版本:2.11.8

Java版本:1.8.0_151

拉取镜像:

[root@localhost docker-spark-2.1.0]# docker pull singularities/spark

查看:

[root@localhost docker-spark-2.1.0]# docker image ls REPOSITORY TAG IMAGE ID CREATED SIZE docker.io/singularities/spark latest 84222b254621 6 months ago 1.39 GB

创建docker-compose.yml文件

[root@localhost home]# mkdir singularitiesCR

[root@localhost home]# cd singularitiesCR

[root@localhost singularitiesCR]# touch docker-compose.yml

内容:

version: "2" services: master: image: singularities/spark command: start-spark master hostname: master ports: - "6066:6066" - "7070:7070" - "8080:8080" - "50070:50070" worker: image: singularities/spark command: start-spark worker master environment: SPARK_WORKER_CORES: 1 SPARK_WORKER_MEMORY: 2g links: - master

执行docker-compose up即可启动一个单工作节点的standlone模式下运行的spark集群

[root@localhost singularitiesCR]# docker-compose up -d

Creating singularitiescr_master_1 ... done

Creating singularitiescr_worker_1 ... done

查看容器:

[root@localhost singularitiesCR]# docker-compose ps Name Command State Ports -------------------------------------------------------------------------------------------------------------------------------------------------------- singularitiescr_master_1 start-spark master Up 10020/tcp, 13562/tcp, 14000/tcp, 19888/tcp, 50010/tcp, 50020/tcp, 0.0.0.0:50070->50070/tcp, 50075/tcp, 50090/tcp, 50470/tcp, 50475/tcp, 0.0.0.0:6066->6066/tcp, 0.0.0.0:7070->7070/tcp, 7077/tcp, 8020/tcp, 0.0.0.0:8080->8080/tcp, 8081/tcp, 9000/tcp singularitiescr_worker_1 start-spark worker master Up 10020/tcp, 13562/tcp, 14000/tcp, 19888/tcp, 50010/tcp, 50020/tcp, 50070/tcp, 50075/tcp, 50090/tcp, 50470/tcp, 50475/tcp, 6066/tcp, 7077/tcp, 8020/tcp, 8080/tcp, 8081/tcp, 9000/tcp

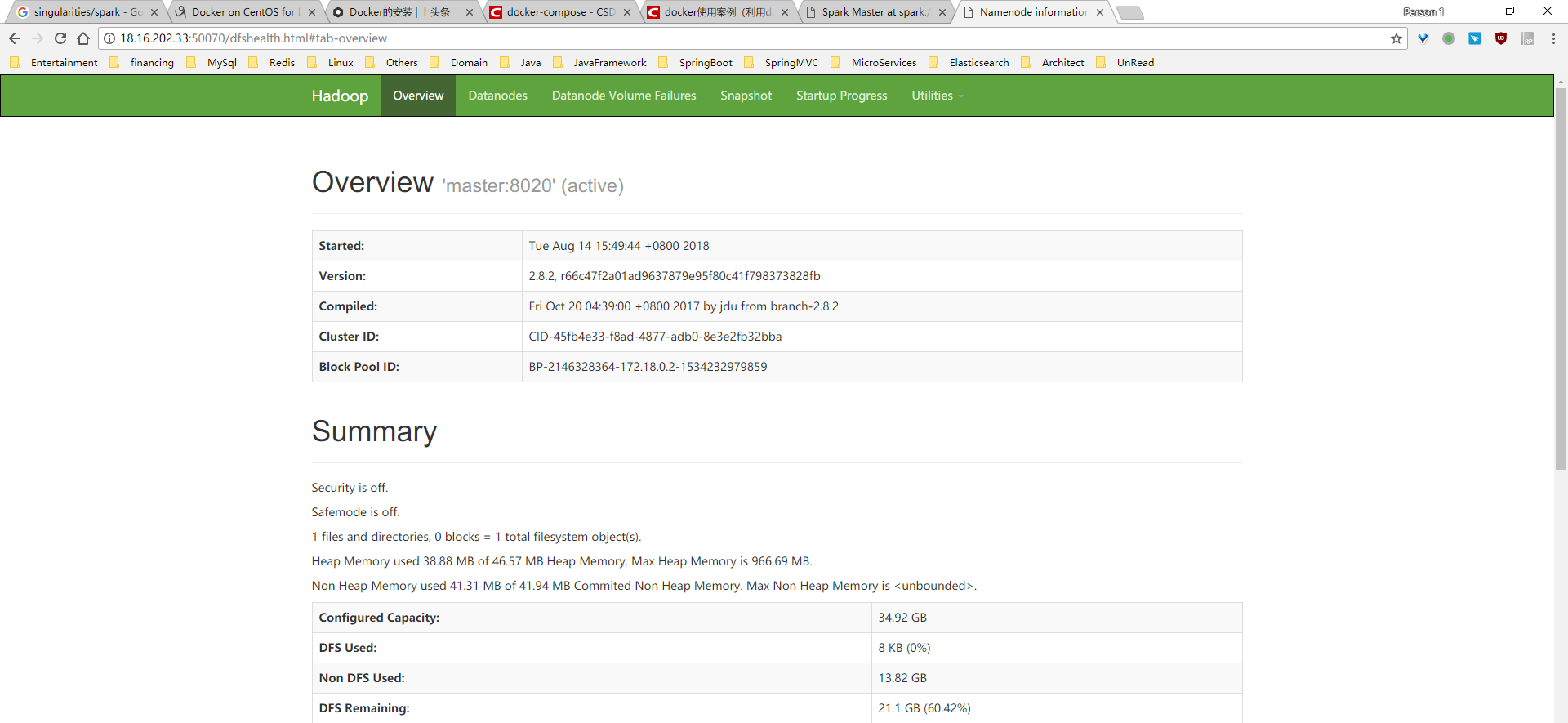

查看结果:

停止容器:

[root@localhost singularitiesCR]# docker-compose stop Stopping singularitiescr_worker_1 ... done Stopping singularitiescr_master_1 ... done [root@localhost singularitiesCR]# docker-compose ps Name Command State Ports ----------------------------------------------------------------------- singularitiescr_master_1 start-spark master Exit 137 singularitiescr_worker_1 start-spark worker master Exit 137

删除容器:

[root@localhost singularitiesCR]# docker-compose rm Going to remove singularitiescr_worker_1, singularitiescr_master_1 Are you sure? [yN] y Removing singularitiescr_worker_1 ... done Removing singularitiescr_master_1 ... done [root@localhost singularitiesCR]# docker-compose ps Name Command State Ports ------------------------------

进入master容器查看版本:

[root@localhost singularitiesCR]# docker exec -it 497 /bin/bash root@master:/# hadoop version Hadoop 2.8.2 Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 66c47f2a01ad9637879e95f80c41f798373828fb Compiled by jdu on 2017-10-19T20:39Z Compiled with protoc 2.5.0 From source with checksum dce55e5afe30c210816b39b631a53b1d This command was run using /usr/local/hadoop-2.8.2/share/hadoop/common/hadoop-common-2.8.2.jar root@master:/# which is hadoop /usr/local/hadoop-2.8.2/bin/hadoop root@master:/# spark-shell Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 18/08/14 09:20:44 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable Spark context Web UI available at http://172.18.0.2:4040 Spark context available as 'sc' (master = local[*], app id = local-1534238447256). Spark session available as 'spark'. Welcome to ____ __ / __/__ ___ _____/ /__ _\ \/ _ \/ _ `/ __/ '_/ /___/ .__/\_,_/_/ /_/\_\ version 2.2.1 /_/ Using Scala version 2.11.8 (OpenJDK 64-Bit Server VM, Java 1.8.0_151) Type in expressions to have them evaluated. Type :help for more information.

参考:

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· Linux系列:如何用 C#调用 C方法造成内存泄露

· AI与.NET技术实操系列(二):开始使用ML.NET

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下

· 【杭电多校比赛记录】2025“钉耙编程”中国大学生算法设计春季联赛(1)