OP-TEE: Interaction Between the CA and the TA

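This article walks through how a CA (client application) and a TA (trusted application) interact in OP-TEE. It draws on a number of existing articles, listed below.

CA-TA interaction touches many areas and is quite complex. This article only traces the function-call flow and leaves out many details such as memory management and interrupt handling, which may be covered later.

References: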

https://www.zhihu.com/column/c_1513796671003271168

https://www.cnblogs.com/arnoldlu/p/14175126.html

https://icyshuai.blog.csdn.net/category_6909494_2.html

https://www.jianshu.com/p/557f9903e895

https://blog.csdn.net/qq_42878531/article/details/121783732

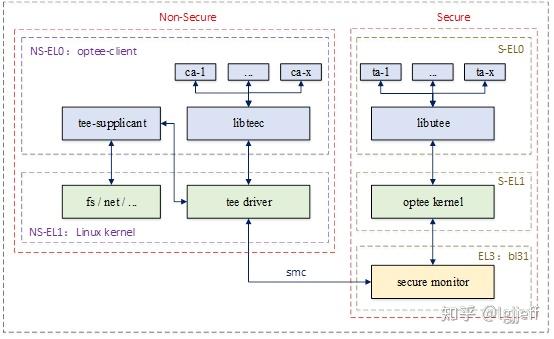

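Overall OP-TEE architecture

The overall OP-TEE architecture is shown in the figure.

(Image source: https://zhuanlan.zhihu.com/p/553611279 )

API calls on the client application side

As the example code (tab: example-hello_world) shows, a CA (client application) calls the TEE Client API in the following order: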

- TEEC_InitializeContext()

- TEEC_OpenSession()

- TEEC_InvokeCommand()

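- TEEC_CloseSession()

- TEEC_FinalizeContext()

These APIs share one trait (tab: tee_client_api): they issue their requests through the ioctl() system call. (open() and close() open and close the TEE device /dev/tee0; the details of that device are left for later study.)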

TEEC_InitializeContext() -> ioctl(fd, TEE_IOC_VERSION, &vers)

TEEC_OpenSession() -> ioctl(ctx->fd, TEE_IOC_OPEN_SESSION, &buf_data)

TEEC_CloseSession() -> ioctl(session->ctx->fd, TEE_IOC_CLOSE_SESSION, &arg)

TEEC_InvokeCommand() -> ioctl(session->ctx->fd, TEE_IOC_INVOKE, &buf_data)

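The TEE_IOC_xxx ioctl commands thus define the operations a CA can ask the TA side to perform through ioctl(). They are defined in optee_client/libteec/include/linux/tee.h (tab: ioctl_cmd). How does the kernel handle these commands?

Kernel handling

tee_core.c defines tee_fops, a variable of type struct file_operations for the TEE device. Its .unlocked_ioctl and .compat_ioctl methods both point to tee_ioctl(). (How tee_fops is bound to operations on the TEE device file is covered in the next section.)

tee_ioctl() dispatches on the cmd passed in from user space. For TEE_IOC_VERSION, it calls tee_ioctl_version(), which in turn calls the .get_version member of the device's struct tee_driver_ops, namely optee_get_version(), to obtain the version information.

Binding tee_fops to the TEE device file

When a user process performs an operation such as read or write on a device file, the system call locates the device driver through the file's major device number, reads the matching function pointer from the driver's file_operations structure, and transfers control to that function. (This description comes from https://www.cnblogs.com/chen-farsight/p/6181341.html ; it should be broadly correct, though the details were not examined here.)

The TEE driver is loaded through subsys_initcall(tee_init) and module_init(optee_driver_init) (see https://icyshuai.blog.csdn.net/article/details/72934531 for details). optee_driver_init() calls optee_probe(), which in turn makes the following two calls: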

teedev = tee_device_alloc(&optee_desc, NULL, pool, optee);

teedev = tee_device_alloc(&optee_supp_desc, NULL, pool, optee);

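Inside tee_device_alloc(), the call cdev_init(&teedev->cdev, &tee_fops); associates tee_fops with the character device behind the TEE device file. Both /dev/tee0 (used by libteec) and /dev/teepriv0 (used by tee-supplicant) are therefore bound to the same file_operations.

How, then, do the two devices differ? The answer is also in tee_device_alloc(): the assignment teedev->desc = teedesc; stores optee_desc and optee_supp_desc in the .desc member of the struct tee_device for /dev/tee0 and /dev/teepriv0 respectively.

optee_desc and optee_supp_desc are defined as follows: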

static const struct tee_driver_ops optee_ops = {

.get_version = optee_get_version,

.open = optee_open,

.release = optee_release,

.open_session = optee_open_session,

.close_session = optee_close_session,

.invoke_func = optee_invoke_func,

.cancel_req = optee_cancel_req,

.shm_register = optee_shm_register,

.shm_unregister = optee_shm_unregister,

};

static const struct tee_desc optee_desc = {

.name = DRIVER_NAME "-clnt",

.ops = &optee_ops,

.owner = THIS_MODULE,

};

static const struct tee_driver_ops optee_supp_ops = {

.get_version = optee_get_version,

.open = optee_open,

.release = optee_release,

.supp_recv = optee_supp_recv,

.supp_send = optee_supp_send,

.shm_register = optee_shm_register_supp,

.shm_unregister = optee_shm_unregister_supp,

};

static const struct tee_desc optee_supp_desc = {

.name = DRIVER_NAME "-supp",

.ops = &optee_supp_ops,

.owner = THIS_MODULE,

.flags = TEE_DESC_PRIVILEGED,

};

In this way, the kernel handler tee_ioctl_version() selects the operation that belongs to the device in question through the following statement:

ctx->teedev->desc->ops->get_version(ctx->teedev, &vers);
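The idea of one shared ioctl entry point with per-device operation tables can be sketched in plain C. This is a simplified model, not kernel code: every model_* name, the MODEL_IOC_* constants, and the return values are made up for illustration, and the supplicant table leaves open_session unset in the same way optee_supp_ops does above.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-ins for the kernel types; not the real definitions. */
struct model_dev;

struct model_ops {
	int (*get_version)(const struct model_dev *dev);
	int (*open_session)(const struct model_dev *dev); /* NULL if unsupported */
};

struct model_desc {
	const char *name;
	const struct model_ops *ops;
};

struct model_dev {
	const struct model_desc *desc;
};

enum model_cmd { MODEL_IOC_VERSION, MODEL_IOC_OPEN_SESSION };

/* Made-up handlers that return fixed values so the dispatch is visible. */
static int model_get_version(const struct model_dev *dev) { (void)dev; return 1; }
static int model_open_session(const struct model_dev *dev) { (void)dev; return 42; }

static const struct model_ops clnt_ops = {
	.get_version = model_get_version,
	.open_session = model_open_session,
};

static const struct model_ops supp_ops = {
	.get_version = model_get_version,
	/* .open_session stays NULL: this device does not open sessions */
};

static const struct model_desc clnt_desc = { "clnt", &clnt_ops };
static const struct model_desc supp_desc = { "supp", &supp_ops };

/* One entry point for every device, in the spirit of tee_ioctl(). */
static int model_ioctl(const struct model_dev *dev, enum model_cmd cmd)
{
	assert(dev && dev->desc && dev->desc->ops);
	switch (cmd) {
	case MODEL_IOC_VERSION:
		return dev->desc->ops->get_version(dev);
	case MODEL_IOC_OPEN_SESSION:
		if (!dev->desc->ops->open_session)
			return -1; /* operation not provided by this device */
		return dev->desc->ops->open_session(dev);
	}
	return -1;
}
```

Two model_dev instances that point at clnt_desc and supp_desc go through the same model_ioctl() function, yet only the first one can open a session. That is the whole difference between the two device nodes as far as the shared file_operations is concerned.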

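Handling on the trusted application side

The TEE_IOC_VERSION example above involves neither a switch between the secure and non-secure worlds nor any TA (trusted application) processing: the version information is obtained entirely inside the Linux kernel and returned to user space. That is a special case. To see the TA side, take TEE_IOC_OPEN_SESSION as the next example.

After TEEC_OpenSession() calls ioctl(ctx->fd, TEE_IOC_OPEN_SESSION, &buf_data), execution again enters kernel mode and runs tee_ioctl(). The cmd is now TEE_IOC_OPEN_SESSION, so tee_ioctl_open_session() runs and eventually calls ctx->teedev->desc->ops->open_session(ctx, &arg, params), which resolves to the device's operation optee_open_session().

optee_open_session() is defined in drivers/tee/optee/call.c: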


int optee_open_session(struct tee_context *ctx,

struct tee_ioctl_open_session_arg *arg,

struct tee_param *param)

{

struct optee_context_data *ctxdata = ctx->data;

int rc;

struct tee_shm *shm;

struct optee_msg_arg *msg_arg;

phys_addr_t msg_parg;

struct optee_session *sess = NULL;

/* +2 for the meta parameters added below */

shm = get_msg_arg(ctx, arg->num_params + 2, &msg_arg, &msg_parg);

if (IS_ERR(shm))

return PTR_ERR(shm);

msg_arg->cmd = OPTEE_MSG_CMD_OPEN_SESSION; // set cmd to OPTEE_MSG_CMD_OPEN_SESSION

msg_arg->cancel_id = arg->cancel_id;

/*

* Initialize and add the meta parameters needed when opening a

* session.

*/

msg_arg->params[0].attr = OPTEE_MSG_ATTR_TYPE_VALUE_INPUT |

OPTEE_MSG_ATTR_META;

msg_arg->params[1].attr = OPTEE_MSG_ATTR_TYPE_VALUE_INPUT |

OPTEE_MSG_ATTR_META;

memcpy(&msg_arg->params[0].u.value, arg->uuid, sizeof(arg->uuid)); // copy the TA UUID into params

memcpy(&msg_arg->params[1].u.value, arg->uuid, sizeof(arg->clnt_uuid));

msg_arg->params[1].u.value.c = arg->clnt_login;

rc = optee_to_msg_param(msg_arg->params + 2, arg->num_params, param);

if (rc)

goto out;

sess = kzalloc(sizeof(*sess), GFP_KERNEL);

if (!sess) {

rc = -ENOMEM;

goto out;

}

if (optee_do_call_with_arg(ctx, msg_parg)) { // key function: issues the SMC to the secure world and handles the RPC requests the secure world sends back

msg_arg->ret = TEEC_ERROR_COMMUNICATION;

msg_arg->ret_origin = TEEC_ORIGIN_COMMS;

}

if (msg_arg->ret == TEEC_SUCCESS) {

/* A new session has been created, add it to the list. */

sess->session_id = msg_arg->session;

mutex_lock(&ctxdata->mutex);

list_add(&sess->list_node, &ctxdata->sess_list);

mutex_unlock(&ctxdata->mutex);

} else {

kfree(sess);

}

if (optee_from_msg_param(param, arg->num_params, msg_arg->params + 2)) {

arg->ret = TEEC_ERROR_COMMUNICATION;

arg->ret_origin = TEEC_ORIGIN_COMMS;

/* Close session again to avoid leakage */

optee_close_session(ctx, msg_arg->session);

} else {

arg->session = msg_arg->session;

arg->ret = msg_arg->ret;

arg->ret_origin = msg_arg->ret_origin;

}

out:

tee_shm_free(shm);

return rc;

}

The most important function here is optee_do_call_with_arg(), defined as follows:

u32 optee_do_call_with_arg(struct tee_context *ctx, phys_addr_t parg)

{

struct optee *optee = tee_get_drvdata(ctx->teedev);

struct optee_call_waiter w;

struct optee_rpc_param param = { };

struct optee_call_ctx call_ctx = { };

u32 ret;

param.a0 = OPTEE_SMC_CALL_WITH_ARG;

reg_pair_from_64(&param.a1, &param.a2, parg);

/* Initialize waiter */

optee_cq_wait_init(&optee->call_queue, &w);

while (true) {

struct arm_smccc_res res;

optee->invoke_fn(param.a0, param.a1, param.a2, param.a3, // switch to the secure world through hvc/smc and continue there

param.a4, param.a5, param.a6, param.a7,

&res);

if (res.a0 == OPTEE_SMC_RETURN_ETHREAD_LIMIT) {

/*

* Out of threads in secure world, wait for a thread

* become available.

*/

optee_cq_wait_for_completion(&optee->call_queue, &w);

} else if (OPTEE_SMC_RETURN_IS_RPC(res.a0)) { // handle an RPC request sent by the secure world

if (need_resched())

cond_resched();

param.a0 = res.a0;

param.a1 = res.a1;

param.a2 = res.a2;

param.a3 = res.a3;

optee_handle_rpc(ctx, &param, &call_ctx);

} else {

ret = res.a0;

break;

}

}

optee_rpc_finalize_call(&call_ctx);

/*

* We're done with our thread in secure world, if there's any

* thread waiters wake up one.

*/

optee_cq_wait_final(&optee->call_queue, &w);

return ret;

}

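optee_do_call_with_arg() calls optee->invoke_fn() to switch to the secure world and continue execution there. invoke_fn is assigned in get_invoke_func() when the OP-TEE driver in the Linux kernel is initialized: depending on whether the "method" property in the device tree is "hvc" or "smc", invoke_fn points to optee_smccc_hvc or optee_smccc_smc. (Virtualization is out of scope here, so hvc is ignored.)

optee_smccc_smc is a thin wrapper around __arm_smccc_smc, which is defined in arch/arm64/kernel/smccc-call.S: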
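The control flow of the loop in optee_do_call_with_arg() can be modeled without any hardware. The sketch below replaces the SMC with a function pointer that replays a scripted list of return codes. The RET_* values, struct call_stats, and scripted_invoke() are illustrative assumptions and do not match the real OPTEE_SMC_RETURN_* encoding.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical return codes standing in for the OPTEE_SMC_RETURN_* values. */
enum { RET_OK = 0, RET_ETHREAD_LIMIT = 1, RET_RPC = 2 };

struct call_stats {
	unsigned waits; /* times the caller had to wait for a secure thread */
	unsigned rpcs;  /* RPC requests serviced in the normal world */
};

/* Type of the world-switch primitive; plays the role of optee->invoke_fn. */
typedef uint32_t (*invoke_fn_t)(void *secure_state);

/* Re-enter the "secure world" until it reports a final result. */
static uint32_t do_call(invoke_fn_t invoke, void *secure_state,
			struct call_stats *stats)
{
	assert(invoke && stats);
	for (;;) {
		uint32_t a0 = invoke(secure_state);

		if (a0 == RET_ETHREAD_LIMIT)
			stats->waits++; /* the driver sleeps on the call queue here */
		else if (a0 == RET_RPC)
			stats->rpcs++;  /* the driver runs optee_handle_rpc() here */
		else
			return a0;      /* final result of the call */
	}
}

/* Scripted "secure world": replays a fixed sequence of return codes. */
struct script {
	const uint32_t *codes;
	unsigned pos;
};

static uint32_t scripted_invoke(void *state)
{
	struct script *s = state;

	return s->codes[s->pos++];
}
```

The point of the model is that a single logical call from the CA may cross the world boundary several times: each RPC return is serviced in the normal world and followed by another entry into the secure world, and only a non-RPC return code ends the loop.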

.macro SMCCC instr

.cfi_startproc

\instr #0 // the core step: smc #0 traps to EL3 to switch to the secure world

ldr x4, [sp]

stp x0, x1, [x4, #ARM_SMCCC_RES_X0_OFFS]

stp x2, x3, [x4, #ARM_SMCCC_RES_X2_OFFS]

ldr x4, [sp, #8]

cbz x4, 1f /* no quirk structure */

ldr x9, [x4, #ARM_SMCCC_QUIRK_ID_OFFS]

cmp x9, #ARM_SMCCC_QUIRK_QCOM_A6

b.ne 1f

str x6, [x4, ARM_SMCCC_QUIRK_STATE_OFFS]

1: ret

.cfi_endproc

.endm

/*

* void arm_smccc_smc(unsigned long a0, unsigned long a1, unsigned long a2,

* unsigned long a3, unsigned long a4, unsigned long a5,

* unsigned long a6, unsigned long a7, struct arm_smccc_res *res,

* struct arm_smccc_quirk *quirk)

*/

ENTRY(__arm_smccc_smc)

SMCCC smc

ENDPROC(__arm_smccc_smc)

EXPORT_SYMBOL(__arm_smccc_smc)

After __arm_smccc_smc is called, the CPU continues execution in the secure monitor. For how the switch to the secure world works, see https://blog.csdn.net/shuaifengyun/article/details/73118852 and https://icyshuai.blog.csdn.net/article/details/72794556

Once in the secure world, execution eventually reaches tee_entry_std(), defined in optee_os/core/arch/arm/tee/entry_std.c:

/* Note: this function is weak to let platforms add special handling */

uint32_t __weak tee_entry_std(struct optee_msg_arg *arg, uint32_t num_params)

{

return __tee_entry_std(arg, num_params);

}

/*

* If tee_entry_std() is overridden, it's still supposed to call this

* function.

*/

uint32_t __tee_entry_std(struct optee_msg_arg *arg, uint32_t num_params)

{

uint32_t rv = OPTEE_SMC_RETURN_OK;

/* Enable foreign interrupts for STD calls */

thread_set_foreign_intr(true);

switch (arg->cmd) {

case OPTEE_MSG_CMD_OPEN_SESSION:

entry_open_session(arg, num_params);

break;

case OPTEE_MSG_CMD_CLOSE_SESSION:

entry_close_session(arg, num_params);

break;

case OPTEE_MSG_CMD_INVOKE_COMMAND:

entry_invoke_command(arg, num_params);

break;

case OPTEE_MSG_CMD_CANCEL:

entry_cancel(arg, num_params);

break;

#ifndef CFG_CORE_FFA

#ifdef CFG_CORE_DYN_SHM

case OPTEE_MSG_CMD_REGISTER_SHM:

register_shm(arg, num_params);

break;

case OPTEE_MSG_CMD_UNREGISTER_SHM:

unregister_shm(arg, num_params);

break;

#endif

#endif

default:

EMSG("Unknown cmd 0x%x", arg->cmd);

rv = OPTEE_SMC_RETURN_EBADCMD;

}

return rv;

}

Here the cmd carried in the argument is examined. optee_open_session() set cmd to OPTEE_MSG_CMD_OPEN_SESSION, so entry_open_session() is executed.

static void entry_open_session(struct optee_msg_arg *arg, uint32_t num_params)

{

TEE_Result res;

TEE_ErrorOrigin err_orig = TEE_ORIGIN_TEE;

struct tee_ta_session *s = NULL;

TEE_Identity clnt_id;

TEE_UUID uuid;

struct tee_ta_param param;

size_t num_meta;

uint64_t saved_attr[TEE_NUM_PARAMS] = { 0 };

res = get_open_session_meta(num_params, arg->params, &num_meta, &uuid,

&clnt_id);

if (res != TEE_SUCCESS)

goto out;

res = copy_in_params(arg->params + num_meta, num_params - num_meta,

&param, saved_attr);

if (res != TEE_SUCCESS)

goto cleanup_shm_refs;

res = tee_ta_open_session(&err_orig, &s, &tee_open_sessions, &uuid,

&clnt_id, TEE_TIMEOUT_INFINITE, &param);

if (res != TEE_SUCCESS)

s = NULL;

copy_out_param(&param, num_params - num_meta, arg->params + num_meta,

saved_attr);

/*

* The occurrence of open/close session command is usually

* un-predictable, using this property to increase randomness

* of prng

*/

plat_prng_add_jitter_entropy(CRYPTO_RNG_SRC_JITTER_SESSION,

&session_pnum);

cleanup_shm_refs:

cleanup_shm_refs(saved_attr, &param, num_params - num_meta);

out:

if (s)

arg->session = s->id;

else

arg->session = 0;

arg->ret = res;

arg->ret_origin = err_orig;

}

Execution continues in tee_ta_open_session(), defined in optee_os/core/kernel/tee_ta_manager.c. The two most important calls in it are tee_ta_init_session() and ts_ctx->ops->enter_open_session().

TEE_Result tee_ta_open_session(TEE_ErrorOrigin *err,

struct tee_ta_session **sess,

struct tee_ta_session_head *open_sessions,

const TEE_UUID *uuid,

const TEE_Identity *clnt_id,

uint32_t cancel_req_to,

struct tee_ta_param *param)

{

TEE_Result res = TEE_SUCCESS;

struct tee_ta_session *s = NULL;

struct tee_ta_ctx *ctx = NULL;

struct ts_ctx *ts_ctx = NULL;

bool panicked = false;

bool was_busy = false;

res = tee_ta_init_session(err, open_sessions, uuid, &s);

if (res != TEE_SUCCESS) {

DMSG("init session failed 0x%x", res);

return res;

}

if (!check_params(s, param))

return TEE_ERROR_BAD_PARAMETERS;

ts_ctx = s->ts_sess.ctx;

if (ts_ctx)

ctx = ts_to_ta_ctx(ts_ctx);

if (!ctx || ctx->panicked) {

DMSG("panicked, call tee_ta_close_session()");

tee_ta_close_session(s, open_sessions, KERN_IDENTITY);

*err = TEE_ORIGIN_TEE;

return TEE_ERROR_TARGET_DEAD;

}

*sess = s;

/* Save identity of the owner of the session */

s->clnt_id = *clnt_id;

if (tee_ta_try_set_busy(ctx)) {

s->param = param;

set_invoke_timeout(s, cancel_req_to);

res = ts_ctx->ops->enter_open_session(&s->ts_sess);

tee_ta_clear_busy(ctx);

} else {

/* Deadlock avoided */

res = TEE_ERROR_BUSY;

was_busy = true;

}

panicked = ctx->panicked;

s->param = NULL;

tee_ta_put_session(s);

if (panicked || (res != TEE_SUCCESS))

tee_ta_close_session(s, open_sessions, KERN_IDENTITY);

/*

* Origin error equal to TEE_ORIGIN_TRUSTED_APP for "regular" error,

* apart from panicking.

*/

if (panicked || was_busy)

*err = TEE_ORIGIN_TEE;

else

*err = s->err_origin;

if (res != TEE_SUCCESS)

EMSG("Failed. Return error 0x%x", res);

return res;

}

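tee_ta_init_session() first uses the UUID to check whether the TA for which a session is being opened is already loaded (all loaded TA contexts are kept in tee_ctxes). If it is a new TA, the function looks for a matching TA image among the following three kinds of TA, in order: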

- secure partition

- pseudo TA

- user TA

We are mainly interested in loading a user TA, so the session init functions of the first two kinds are skipped for now.
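The lookup order can be modeled as a chain of initializers in which the next one is tried only when the previous one reports "not found". The sketch below is a simplified model: UUIDs are reduced to plain integers, and the RES_* codes and the four back-end functions are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical result codes; the real ones are TEE_SUCCESS and TEE_ERROR_*. */
enum { RES_SUCCESS = 0, RES_ITEM_NOT_FOUND = 1, RES_OTHER_ERROR = 2 };

typedef int (*init_fn_t)(int uuid);

/*
 * Try each initializer in order. Any result other than "not found",
 * success or a hard error, ends the search. *used reports which
 * entry produced the final result.
 */
static int init_session_chain(const init_fn_t *chain, size_t n, int uuid,
			      size_t *used)
{
	int res = RES_ITEM_NOT_FOUND;

	assert(chain && used);
	for (size_t i = 0; i < n; i++) {
		res = chain[i](uuid);
		*used = i;
		if (res != RES_ITEM_NOT_FOUND)
			break;
	}
	return res;
}

/* Made-up back ends, each knowing a single UUID. */
static int loaded_ctx(int uuid) { return uuid == 1 ? RES_SUCCESS : RES_ITEM_NOT_FOUND; }
static int secure_partition(int uuid) { (void)uuid; return RES_ITEM_NOT_FOUND; }
static int pseudo_ta(int uuid) { return uuid == 2 ? RES_SUCCESS : RES_ITEM_NOT_FOUND; }
static int user_ta(int uuid) { return uuid == 3 ? RES_SUCCESS : RES_OTHER_ERROR; }

/* Already-loaded contexts first, then the three kinds of TA in order. */
static const init_fn_t session_chain[] = {
	loaded_ctx, secure_partition, pseudo_ta, user_ta,
};
```

A hard error from an earlier stage stops the search instead of falling through, which is the behavior the condition res == TEE_SUCCESS || res != TEE_ERROR_ITEM_NOT_FOUND expresses in tee_ta_init_session().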

static TEE_Result tee_ta_init_session(TEE_ErrorOrigin *err,

struct tee_ta_session_head *open_sessions,

const TEE_UUID *uuid,

struct tee_ta_session **sess)

{

TEE_Result res;

struct tee_ta_session *s = calloc(1, sizeof(struct tee_ta_session));

*err = TEE_ORIGIN_TEE;

if (!s)

return TEE_ERROR_OUT_OF_MEMORY;

s->cancel_mask = true;

condvar_init(&s->refc_cv);

condvar_init(&s->lock_cv);

s->lock_thread = THREAD_ID_INVALID;

s->ref_count = 1;

mutex_lock(&tee_ta_mutex);

s->id = new_session_id(open_sessions);

if (!s->id) {

res = TEE_ERROR_OVERFLOW;

goto err_mutex_unlock;

}

TAILQ_INSERT_TAIL(open_sessions, s, link);

/* Look for already loaded TA */

res = tee_ta_init_session_with_context(s, uuid);

mutex_unlock(&tee_ta_mutex);

if (res == TEE_SUCCESS || res != TEE_ERROR_ITEM_NOT_FOUND)

goto out;

/* Look for secure partition */

res = stmm_init_session(uuid, s);

if (res == TEE_SUCCESS || res != TEE_ERROR_ITEM_NOT_FOUND)

goto out;

/* Look for pseudo TA */

res = tee_ta_init_pseudo_ta_session(uuid, s);

if (res == TEE_SUCCESS || res != TEE_ERROR_ITEM_NOT_FOUND)

goto out;

/* Look for user TA */

res = tee_ta_init_user_ta_session(uuid, s);

out:

if (!res) {

*sess = s;

return TEE_SUCCESS;

}

mutex_lock(&tee_ta_mutex);

TAILQ_REMOVE(open_sessions, s, link);

err_mutex_unlock:

mutex_unlock(&tee_ta_mutex);

free(s);

return res;

}

tee_ta_init_user_ta_session() is implemented in optee_os/core/arch/arm/kernel/user_ta.c:

TEE_Result tee_ta_init_user_ta_session(const TEE_UUID *uuid,

struct tee_ta_session *s)

{

TEE_Result res = TEE_SUCCESS;

struct user_ta_ctx *utc = NULL;

utc = calloc(1, sizeof(struct user_ta_ctx));

if (!utc)

return TEE_ERROR_OUT_OF_MEMORY;

utc->ta_ctx.initializing = true;

utc->uctx.is_initializing = true;

TAILQ_INIT(&utc->open_sessions);

TAILQ_INIT(&utc->cryp_states);

TAILQ_INIT(&utc->objects);

TAILQ_INIT(&utc->storage_enums);

condvar_init(&utc->ta_ctx.busy_cv);

utc->ta_ctx.ref_count = 1;

utc->uctx.ts_ctx = &utc->ta_ctx.ts_ctx;

/*

* Set context TA operation structure. It is required by generic

* implementation to identify userland TA versus pseudo TA contexts.

*/

set_ta_ctx_ops(&utc->ta_ctx);

utc->ta_ctx.ts_ctx.uuid = *uuid;

res = vm_info_init(&utc->uctx);

if (res)

goto out;

mutex_lock(&tee_ta_mutex);

s->ts_sess.ctx = &utc->ta_ctx.ts_ctx;

s->ts_sess.handle_svc = s->ts_sess.ctx->ops->handle_svc;

/*

* Another thread trying to load this same TA may need to wait

* until this context is fully initialized. This is needed to

* handle single instance TAs.

*/

TAILQ_INSERT_TAIL(&tee_ctxes, &utc->ta_ctx, link);

mutex_unlock(&tee_ta_mutex);

/*

* We must not hold tee_ta_mutex while allocating page tables as

* that may otherwise lead to a deadlock.

*/

ts_push_current_session(&s->ts_sess);

res = ldelf_load_ldelf(&utc->uctx);

if (!res)

res = ldelf_init_with_ldelf(&s->ts_sess, &utc->uctx);

ts_pop_current_session();

mutex_lock(&tee_ta_mutex);

if (!res) {

utc->uctx.is_initializing = false;

} else {

s->ts_sess.ctx = NULL;

TAILQ_REMOVE(&tee_ctxes, &utc->ta_ctx, link);

}

/* The state has changed for the context, notify eventual waiters. */

condvar_broadcast(&tee_ta_init_cv);

mutex_unlock(&tee_ta_mutex);

out:

if (res) {

condvar_destroy(&utc->ta_ctx.busy_cv);

pgt_flush_ctx(&utc->ta_ctx.ts_ctx);

free_utc(utc);

}

return res;

}

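The user TA is located and loaded by the ldelf program, which runs at secure EL0. ldelf itself is only loaded into memory when ldelf_load_ldelf() is called (its code and data segments are embedded in ldelf_hex.c, a file generated by the gen_ldelf_hex.py script). ldelf_load_ldelf() also assigns the ldelf entry address, given by ldelf_entry, to uctx->entry_func.

ldelf_init_with_ldelf() then enters secure EL0 through thread_enter_user_mode() to run ldelf.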

TEE_Result ldelf_load_ldelf(struct user_mode_ctx *uctx)

{

TEE_Result res = TEE_SUCCESS;

vaddr_t stack_addr = 0;

vaddr_t code_addr = 0;

vaddr_t rw_addr = 0;

uctx->is_32bit = is_arm32;

res = alloc_and_map_ldelf_fobj(uctx, LDELF_STACK_SIZE,

TEE_MATTR_URW | TEE_MATTR_PRW,

&stack_addr);

if (res)

return res;

uctx->ldelf_stack_ptr = stack_addr + LDELF_STACK_SIZE;

res = alloc_and_map_ldelf_fobj(uctx, ldelf_code_size, TEE_MATTR_PRW,

&code_addr);

if (res)

return res;

uctx->entry_func = code_addr + ldelf_entry;

rw_addr = ROUNDUP(code_addr + ldelf_code_size, SMALL_PAGE_SIZE);

res = alloc_and_map_ldelf_fobj(uctx, ldelf_data_size,

TEE_MATTR_URW | TEE_MATTR_PRW, &rw_addr);

if (res)

return res;

vm_set_ctx(uctx->ts_ctx);

memcpy((void *)code_addr, ldelf_data, ldelf_code_size);

memcpy((void *)rw_addr, ldelf_data + ldelf_code_size, ldelf_data_size);

res = vm_set_prot(uctx, code_addr,

ROUNDUP(ldelf_code_size, SMALL_PAGE_SIZE),

TEE_MATTR_URX);

if (res)

return res;

DMSG("ldelf load address %#"PRIxVA, code_addr);

return TEE_SUCCESS;

}

TEE_Result ldelf_init_with_ldelf(struct ts_session *sess,

struct user_mode_ctx *uctx)

{

TEE_Result res = TEE_SUCCESS;

struct ldelf_arg *arg = NULL;

uint32_t panic_code = 0;

uint32_t panicked = 0;

uaddr_t usr_stack = 0;

usr_stack = uctx->ldelf_stack_ptr;

usr_stack -= ROUNDUP(sizeof(*arg), STACK_ALIGNMENT);

arg = (struct ldelf_arg *)usr_stack;

memset(arg, 0, sizeof(*arg));

arg->uuid = uctx->ts_ctx->uuid;

sess->handle_svc = ldelf_handle_svc; // switch the syscall handler to ldelf_handle_svc so that system calls made while ldelf runs are handled correctly

res = thread_enter_user_mode((vaddr_t)arg, 0, 0, 0,

usr_stack, uctx->entry_func,

is_arm32, &panicked, &panic_code);

sess->handle_svc = sess->ctx->ops->handle_svc;

thread_user_clear_vfp(uctx);

ldelf_sess_cleanup(sess);

if (panicked) {

abort_print_current_ta();

EMSG("ldelf panicked");

return TEE_ERROR_GENERIC;

}

if (res) {

EMSG("ldelf failed with res: %#"PRIx32, res);

return res;

}

res = vm_check_access_rights(uctx,

TEE_MEMORY_ACCESS_READ |

TEE_MEMORY_ACCESS_ANY_OWNER,

(uaddr_t)arg, sizeof(*arg));

if (res)

return res;

if (is_user_ta_ctx(uctx->ts_ctx)) {

/*

* This is already checked by the elf loader, but since it runs

* in user mode we're not trusting it entirely.

*/

if (arg->flags & ~TA_FLAGS_MASK)

return TEE_ERROR_BAD_FORMAT;

to_user_ta_ctx(uctx->ts_ctx)->ta_ctx.flags = arg->flags;

}

uctx->is_32bit = arg->is_32bit;

uctx->entry_func = arg->entry_func;

uctx->stack_ptr = arg->stack_ptr;

uctx->dump_entry_func = arg->dump_entry;

#ifdef CFG_FTRACE_SUPPORT

uctx->ftrace_entry_func = arg->ftrace_entry;

sess->fbuf = arg->fbuf;

#endif

uctx->dl_entry_func = arg->dl_entry;

return TEE_SUCCESS;

}

The entry point of the ldelf program is specified in ldelf/ldelf.ld.S and defined in ldelf/start_a64.S; it eventually branches to the ldelf() function.

//ldelf.ld.S

ENTRY(_ldelf_start)

//start_a64.S

FUNC _ldelf_start , :

/*

* First ldelf needs to be relocated. The binary is compiled to

* contain only a minimal number of R_AARCH64_RELATIVE relocations

* in read/write memory, leaving read-only and executeble memory

* untouched.

*/

adr x4, reloc_begin_rel

ldr w5, reloc_begin_rel

ldr w6, reloc_end_rel

add x5, x5, x4

add x6, x6, x4

cmp x5, x6

beq 2f

adr x4, _ldelf_start /* Get the load offset */

/* Loop over the relocations (Elf64_Rela) and process all entries */

1: ldp x7, x8, [x5], #16 /* x7 = r_offset, x8 = r_info */

ldr x9, [x5], #8 /* x9 = r_addend */

and x8, x8, #0xffffffff

cmp x8, #R_AARCH64_RELATIVE

/* We're currently only supporting R_AARCH64_RELATIVE relocations */

bne 3f

/*

* Update the pointer at r_offset + load_offset with r_addend +

* load_offset.

*/

add x7, x7, x4

add x9, x9, x4

str x9, [x7]

cmp x5, x6

blo 1b

2: bl ldelf

mov x0, #0

bl _ldelf_return

3: mov x0, #0

bl _ldelf_panic

reloc_begin_rel:

.word __reloc_begin - reloc_begin_rel

reloc_end_rel:

.word __reloc_end - reloc_end_rel

END_FUNC _ldelf_start
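The relocation loop in _ldelf_start can be restated in C to make its intent easier to follow. This is a sketch under stated assumptions: struct model_rela mirrors the layout of Elf64_Rela but is redefined locally, the function name is hypothetical, and unlike the assembly the image lives in an ordinary buffer while the load address is passed in separately. The bounds check is an addition for safety and is not present in the assembly.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MODEL_R_AARCH64_RELATIVE 1027u /* relocation type from the AArch64 ELF ABI */

/* Same field layout as Elf64_Rela, redefined so no <elf.h> is needed. */
struct model_rela {
	uint64_t r_offset;
	uint64_t r_info;
	int64_t r_addend;
};

/*
 * For every entry, store (load_addr + r_addend) at image + r_offset.
 * Returns 0 on success and -1 for an unsupported relocation type or an
 * out-of-range offset (the assembly calls _ldelf_panic for the former).
 */
static int apply_relative_relocs(uint8_t *image, size_t image_size,
				 uint64_t load_addr,
				 const struct model_rela *rela, size_t count)
{
	assert(image && (rela || count == 0));
	for (size_t i = 0; i < count; i++) {
		uint64_t value;

		/* The low 32 bits of r_info hold the relocation type. */
		if ((rela[i].r_info & 0xffffffffu) != MODEL_R_AARCH64_RELATIVE)
			return -1;
		if (image_size < sizeof(value) ||
		    rela[i].r_offset > image_size - sizeof(value))
			return -1;
		value = load_addr + (uint64_t)rela[i].r_addend;
		memcpy(image + rela[i].r_offset, &value, sizeof(value));
	}
	return 0;
}
```

Because only relative relocations are supported, every patched pointer is simply the load address plus a constant, which is why the assembly needs nothing more than an add and a store per entry.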

ldelf() is defined in ldelf/main.c. Its main job is to load the user TA through the call chain ta_elf_load_main() -> load_main() -> init_elf().

void ldelf(struct ldelf_arg *arg)

{

TEE_Result res = TEE_SUCCESS;

struct ta_elf *elf = NULL;

DMSG("Loading TA %pUl", (void *)&arg->uuid);

res = sys_map_zi(mpool_size, 0, &mpool_base, 0, 0);

if (res) {

EMSG("sys_map_zi(%zu): result %"PRIx32, mpool_size, res);

panic();

}

malloc_add_pool((void *)mpool_base, mpool_size);

/* Load the main binary and get a list of dependencies, if any. */

ta_elf_load_main(&arg->uuid, &arg->is_32bit, &arg->stack_ptr,

&arg->flags);

/*

* Load binaries, ta_elf_load() may add external libraries to the

* list, so the loop will end when all the dependencies are

* satisfied.

*/

TAILQ_FOREACH(elf, &main_elf_queue, link)

ta_elf_load_dependency(elf, arg->is_32bit);

TAILQ_FOREACH(elf, &main_elf_queue, link) {

ta_elf_relocate(elf);

ta_elf_finalize_mappings(elf);

}

ta_elf_finalize_load_main(&arg->entry_func);

arg->ftrace_entry = 0;

#ifdef CFG_FTRACE_SUPPORT

if (ftrace_init(&arg->fbuf))

arg->ftrace_entry = (vaddr_t)(void *)ftrace_dump;

#endif

TAILQ_FOREACH(elf, &main_elf_queue, link)

DMSG("ELF (%pUl) at %#"PRIxVA,

(void *)&elf->uuid, elf->load_addr);

#if TRACE_LEVEL >= TRACE_ERROR

arg->dump_entry = (vaddr_t)(void *)dump_ta_state;

#else

arg->dump_entry = 0;

#endif

arg->dl_entry = (vaddr_t)(void *)dl_entry;

sys_return_cleanup();

}

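init_elf() is defined in ldelf/ta_elf.c. It calls sys_open_ta_bin(), which is a system call. Before ldelf_init_with_ldelf() entered secure EL0 through thread_enter_user_mode(), it switched the system call handler to ldelf_handle_svc (whose system call table is in core/arch/arm/tee/arch_svc.c), so the system call number of sys_open_ta_bin is dispatched to the handler ldelf_syscall_open_bin().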

static void init_elf(struct ta_elf *elf)

{

TEE_Result res = TEE_SUCCESS;

vaddr_t va = 0;

uint32_t flags = LDELF_MAP_FLAG_SHAREABLE;

size_t sz = 0;

res = sys_open_ta_bin(&elf->uuid, &elf->handle);

if (res)

err(res, "sys_open_ta_bin(%pUl)", (void *)&elf->uuid);

/*

* Map it read-only executable when we're loading a library where

* the ELF header is included in a load segment.

*/

if (!elf->is_main)

flags |= LDELF_MAP_FLAG_EXECUTABLE;

res = sys_map_ta_bin(&va, SMALL_PAGE_SIZE, flags, elf->handle, 0, 0, 0);

if (res)

err(res, "sys_map_ta_bin");

elf->ehdr_addr = va;

if (!elf->is_main) {

elf->load_addr = va;

elf->max_addr = va + SMALL_PAGE_SIZE;

elf->max_offs = SMALL_PAGE_SIZE;

}

if (!IS_ELF(*(Elf32_Ehdr *)va))

err(TEE_ERROR_BAD_FORMAT, "TA is not an ELF");

res = e32_parse_ehdr(elf, (void *)va);

if (res == TEE_ERROR_BAD_FORMAT)

res = e64_parse_ehdr(elf, (void *)va);

if (res)

err(res, "Cannot parse ELF");

if (MUL_OVERFLOW(elf->e_phnum, elf->e_phentsize, &sz) ||

ADD_OVERFLOW(sz, elf->e_phoff, &sz))

err(TEE_ERROR_BAD_FORMAT, "Program headers size overflow");

if (sz > SMALL_PAGE_SIZE)

err(TEE_ERROR_NOT_SUPPORTED, "Cannot read program headers");

elf->phdr = (void *)(va + elf->e_phoff);

}

ldelf_syscall_open_bin() is defined in core/kernel/ldelf_syscalls.c:

TEE_Result ldelf_syscall_open_bin(const TEE_UUID *uuid, size_t uuid_size,

uint32_t *handle)

{

TEE_Result res = TEE_SUCCESS;

struct ts_session *sess = ts_get_current_session();

struct user_mode_ctx *uctx = to_user_mode_ctx(sess->ctx);

struct system_ctx *sys_ctx = sess->user_ctx;

struct bin_handle *binh = NULL;

uint8_t tag[FILE_TAG_SIZE] = { 0 };

unsigned int tag_len = sizeof(tag);

int h = 0;

res = vm_check_access_rights(uctx,

TEE_MEMORY_ACCESS_READ |

TEE_MEMORY_ACCESS_ANY_OWNER,

(uaddr_t)uuid, sizeof(TEE_UUID));

if (res)

return res;

res = vm_check_access_rights(uctx,

TEE_MEMORY_ACCESS_WRITE |

TEE_MEMORY_ACCESS_ANY_OWNER,

(uaddr_t)handle, sizeof(uint32_t));

if (res)

return res;

if (uuid_size != sizeof(*uuid))

return TEE_ERROR_BAD_PARAMETERS;

if (!sys_ctx) {

sys_ctx = calloc(1, sizeof(*sys_ctx));

if (!sys_ctx)

return TEE_ERROR_OUT_OF_MEMORY;

sess->user_ctx = sys_ctx;

}

binh = calloc(1, sizeof(*binh));

if (!binh)

return TEE_ERROR_OUT_OF_MEMORY;

SCATTERED_ARRAY_FOREACH(binh->op, ta_stores, struct ts_store_ops) {

DMSG("Lookup user TA ELF %pUl (%s)",

(void *)uuid, binh->op->description);

res = binh->op->open(uuid, &binh->h);

DMSG("res=%#"PRIx32, res);

if (res != TEE_ERROR_ITEM_NOT_FOUND &&

res != TEE_ERROR_STORAGE_NOT_AVAILABLE)

break;

}

if (res)

goto err;

res = binh->op->get_size(binh->h, &binh->size_bytes);

if (res)

goto err;

res = binh->op->get_tag(binh->h, tag, &tag_len);

if (res)

goto err;

binh->f = file_get_by_tag(tag, tag_len);

if (!binh->f)

goto err_oom;

h = handle_get(&sys_ctx->db, binh);

if (h < 0)

goto err_oom;

*handle = h;

return TEE_SUCCESS;

err_oom:

res = TEE_ERROR_OUT_OF_MEMORY;

err:

bin_close(binh);

return res;

}

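ldelf_syscall_open_bin() first walks the registered ta_stores in priority order, checking each of the three TA locations for a TA image with the requested UUID.

There are three kinds of TA store: early_ta, ree_fs_ta, and secstor_ta: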

- in optee_os/core/arch/arm/kernel/early_ta.c

```
REGISTER_TA_STORE(2) = {
	.description = "early TA",
	.open = early_ta_open,
	.get_size = emb_ts_get_size,
	.get_tag = emb_ts_get_tag,
	.read = emb_ts_read,
	.close = emb_ts_close,
};
```

- in optee_os/core/arch/arm/kernel/secstor_ta.c

```
REGISTER_TA_STORE(4) = {
	.description = "Secure Storage TA",
	.open = secstor_ta_open,
	.get_size = secstor_ta_get_size,
	.get_tag = secstor_ta_get_tag,
	.read = secstor_ta_read,
	.close = secstor_ta_close,
};
```

- in optee_os/core/arch/arm/kernel/ree_fs_ta.c

```
#ifndef CFG_REE_FS_TA_BUFFERED
REGISTER_TA_STORE(9) = {
	.description = "REE",
	.open = ree_fs_ta_open,
	.get_size = ree_fs_ta_get_size,
	.get_tag = ree_fs_ta_get_tag,
	.read = ree_fs_ta_read,
	.close = ree_fs_ta_close,
};
#endif
```

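We are mainly interested in loading an REE FS TA, which is only looked up after neither the early TA store nor the Secure Storage TA store has a TA with the requested UUID.

binh->op->open then resolves to ree_fs_ta_open(), which opens the TA. It is defined in core/arch/arm/kernel/ree_fs_ta.c: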
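The priority-ordered search over the TA stores can be sketched as follows. Note the swapped-in technique: OP-TEE collects the stores in a scattered array ordered at build time, while this model simply sorts a regular array with qsort(). The ST_* codes, struct model_store, and the three open_* back ends are hypothetical, and UUIDs are reduced to integers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical result codes; the real ones are TEE_SUCCESS and TEE_ERROR_*. */
enum { ST_OK, ST_NOT_FOUND, ST_STORAGE_NOT_AVAILABLE };

struct model_store {
	int prio;              /* lower value is tried first */
	const char *description;
	int (*open)(int uuid); /* the UUID is modeled as a plain int */
};

/* Made-up back ends: each one "contains" at most a single TA. */
static int open_early(int uuid) { return uuid == 10 ? ST_OK : ST_NOT_FOUND; }
static int open_secstor(int uuid) { (void)uuid; return ST_STORAGE_NOT_AVAILABLE; }
static int open_ree(int uuid) { return uuid == 20 ? ST_OK : ST_NOT_FOUND; }

static int cmp_prio(const void *a, const void *b)
{
	const struct model_store *sa = a;
	const struct model_store *sb = b;

	return (sa->prio > sb->prio) - (sa->prio < sb->prio);
}

/* Returns the store that opened the TA, or NULL; *res holds the last result. */
static const struct model_store *find_ta(struct model_store *stores, size_t n,
					 int uuid, int *res)
{
	assert(stores && res);
	qsort(stores, n, sizeof(*stores), cmp_prio);
	*res = ST_NOT_FOUND;
	for (size_t i = 0; i < n; i++) {
		*res = stores[i].open(uuid);
		/* Keep searching only if this store simply lacks the TA. */
		if (*res != ST_NOT_FOUND && *res != ST_STORAGE_NOT_AVAILABLE)
			return *res == ST_OK ? &stores[i] : NULL;
	}
	return NULL;
}
```

With priorities 2, 4, and 9 as in the registrations above, a TA that exists only in the REE file system is found last, after the two earlier stores have reported that they do not have it or are unavailable.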

static TEE_Result ree_fs_ta_open(const TEE_UUID *uuid,

struct ts_store_handle **h)

{

struct ree_fs_ta_handle *handle;

struct shdr *shdr = NULL;

struct mobj *mobj = NULL;

void *hash_ctx = NULL;

struct shdr *ta = NULL;

size_t ta_size = 0;

TEE_Result res;

size_t offs;

struct shdr_bootstrap_ta *bs_hdr = NULL;

struct shdr_encrypted_ta *ehdr = NULL;

handle = calloc(1, sizeof(*handle));

if (!handle)

return TEE_ERROR_OUT_OF_MEMORY;

/* Request TA from tee-supplicant */

res = rpc_load(uuid, &ta, &ta_size, &mobj);

if (res != TEE_SUCCESS)

goto error;

/* Make secure copy of signed header */

shdr = shdr_alloc_and_copy(ta, ta_size);

if (!shdr) {

res = TEE_ERROR_SECURITY;

goto error_free_payload;

}

/* Validate header signature */

res = shdr_verify_signature(shdr);

if (res != TEE_SUCCESS)

goto error_free_payload;

if (shdr->img_type != SHDR_TA && shdr->img_type != SHDR_BOOTSTRAP_TA &&

shdr->img_type != SHDR_ENCRYPTED_TA) {

res = TEE_ERROR_SECURITY;

goto error_free_payload;

}

/*

* Initialize a hash context and run the algorithm over the signed

* header (less the final file hash and its signature of course)

*/

res = crypto_hash_alloc_ctx(&hash_ctx,

TEE_DIGEST_HASH_TO_ALGO(shdr->algo));

if (res != TEE_SUCCESS)

goto error_free_payload;

res = crypto_hash_init(hash_ctx);

if (res != TEE_SUCCESS)

goto error_free_hash;

res = crypto_hash_update(hash_ctx, (uint8_t *)shdr, sizeof(*shdr));

if (res != TEE_SUCCESS)

goto error_free_hash;

offs = SHDR_GET_SIZE(shdr);

if (shdr->img_type == SHDR_BOOTSTRAP_TA ||

shdr->img_type == SHDR_ENCRYPTED_TA) {

TEE_UUID bs_uuid;

if (ta_size < SHDR_GET_SIZE(shdr) + sizeof(*bs_hdr)) {

res = TEE_ERROR_SECURITY;

goto error_free_hash;

}

bs_hdr = malloc(sizeof(*bs_hdr));

if (!bs_hdr) {

res = TEE_ERROR_OUT_OF_MEMORY;

goto error_free_hash;

}

memcpy(bs_hdr, (uint8_t *)ta + offs, sizeof(*bs_hdr));

/*

* There's a check later that the UUID embedded inside the

* ELF is matching, but since we now have easy access to

* the expected uuid of the TA we check it a bit earlier

* here.

*/

tee_uuid_from_octets(&bs_uuid, bs_hdr->uuid);

if (memcmp(&bs_uuid, uuid, sizeof(TEE_UUID))) {

res = TEE_ERROR_SECURITY;

goto error_free_hash;

}

res = crypto_hash_update(hash_ctx, (uint8_t *)bs_hdr,

sizeof(*bs_hdr));

if (res != TEE_SUCCESS)

goto error_free_hash;

offs += sizeof(*bs_hdr);

handle->bs_hdr = bs_hdr;

}

if (shdr->img_type == SHDR_ENCRYPTED_TA) {

struct shdr_encrypted_ta img_ehdr;

if (ta_size < SHDR_GET_SIZE(shdr) +

sizeof(struct shdr_bootstrap_ta) + sizeof(img_ehdr)) {

res = TEE_ERROR_SECURITY;

goto error_free_hash;

}

memcpy(&img_ehdr, ((uint8_t *)ta + offs), sizeof(img_ehdr));

ehdr = malloc(SHDR_ENC_GET_SIZE(&img_ehdr));

if (!ehdr) {

res = TEE_ERROR_OUT_OF_MEMORY;

goto error_free_hash;

}

memcpy(ehdr, ((uint8_t *)ta + offs),

SHDR_ENC_GET_SIZE(&img_ehdr));

res = crypto_hash_update(hash_ctx, (uint8_t *)ehdr,

SHDR_ENC_GET_SIZE(ehdr));

if (res != TEE_SUCCESS)

goto error_free_hash;

res = tee_ta_decrypt_init(&handle->enc_ctx, ehdr,

shdr->img_size);

if (res != TEE_SUCCESS)

goto error_free_hash;

offs += SHDR_ENC_GET_SIZE(ehdr);

handle->ehdr = ehdr;

}

if (ta_size != offs + shdr->img_size) {

res = TEE_ERROR_SECURITY;

goto error_free_hash;

}

handle->nw_ta = ta;

handle->nw_ta_size = ta_size;

handle->offs = offs;

handle->hash_ctx = hash_ctx;

handle->shdr = shdr;

handle->mobj = mobj;

*h = (struct ts_store_handle *)handle;

return TEE_SUCCESS;

error_free_hash:

crypto_hash_free_ctx(hash_ctx);

error_free_payload:

thread_rpc_free_payload(mobj);

error:

free(ehdr);

free(bs_hdr);

shdr_free(shdr);

free(handle);

return res;

}

Because an REE FS TA lives in the REE-side file system, rpc_load() is needed to ask tee-supplicant to load it. rpc_load() is also defined in core/arch/arm/kernel/ree_fs_ta.c:

static TEE_Result rpc_load(const TEE_UUID *uuid, struct shdr **ta,

size_t *ta_size, struct mobj **mobj)

{

TEE_Result res;

struct thread_param params[2];

if (!uuid || !ta || !mobj || !ta_size)

return TEE_ERROR_BAD_PARAMETERS;

memset(params, 0, sizeof(params));

params[0].attr = THREAD_PARAM_ATTR_VALUE_IN;

tee_uuid_to_octets((void *)&params[0].u.value, uuid);

params[1].attr = THREAD_PARAM_ATTR_MEMREF_OUT;

res = thread_rpc_cmd(OPTEE_RPC_CMD_LOAD_TA, 2, params);

if (res != TEE_SUCCESS)

return res;

*mobj = thread_rpc_alloc_payload(params[1].u.memref.size);

if (!*mobj)

return TEE_ERROR_OUT_OF_MEMORY;

if ((*mobj)->size < params[1].u.memref.size) {

res = TEE_ERROR_SHORT_BUFFER;

goto exit;

}

*ta = mobj_get_va(*mobj, 0);

/* We don't expect NULL as thread_rpc_alloc_payload() was successful */

assert(*ta);

*ta_size = params[1].u.memref.size;

params[0].attr = THREAD_PARAM_ATTR_VALUE_IN;

tee_uuid_to_octets((void *)&params[0].u.value, uuid);

params[1].attr = THREAD_PARAM_ATTR_MEMREF_OUT;

params[1].u.memref.offs = 0;

params[1].u.memref.mobj = *mobj;

res = thread_rpc_cmd(OPTEE_RPC_CMD_LOAD_TA, 2, params);

exit:

if (res != TEE_SUCCESS)

thread_rpc_free_payload(*mobj);

return res;

}
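Judging from the listing, rpc_load() issues the same RPC twice: once with an empty memory reference to learn the image size, and once more with a buffer of that size to receive the image. The sketch below models this query-then-fetch pattern in plain C. rpc_load_ta(), load_ta(), and the ta_file contents are made up, malloc() stands in for thread_rpc_alloc_payload(), and the way the model reports the size is an assumption about the normal-world side.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical normal-world side: the "TA file" it can hand out. */
static const char ta_file[] = "fake-ta-image";

/*
 * Models one load-TA round trip. The required size is always written
 * back to *size; the data is copied only if the caller's buffer fits.
 */
static int rpc_load_ta(void *buf, size_t *size)
{
	size_t have;

	assert(size);
	have = buf ? *size : 0;
	*size = sizeof(ta_file);
	if (have >= sizeof(ta_file))
		memcpy(buf, ta_file, sizeof(ta_file));
	return 0;
}

/* Two-pass load: query the size, allocate, then fetch. */
static int load_ta(void **out, size_t *out_size)
{
	size_t size = 0;
	size_t got;
	void *buf;

	assert(out && out_size);
	rpc_load_ta(NULL, &size); /* pass 1: no buffer, learn the size */
	buf = malloc(size);       /* stands in for thread_rpc_alloc_payload() */
	if (!buf)
		return -1;
	got = size;
	rpc_load_ta(buf, &got);   /* pass 2: same request, real buffer */
	if (got != size) {
		free(buf);
		return -1;
	}
	*out = buf;
	*out_size = size;
	return 0;
}
```

The caller owns the returned buffer and releases it with free(), just as ree_fs_ta_open() releases its payload with thread_rpc_free_payload() on the error path.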

After storing the UUID in params, rpc_load() calls thread_rpc_cmd(OPTEE_RPC_CMD_LOAD_TA, 2, params) to perform the RPC. thread_rpc_cmd() is defined in core/arch/arm/kernel/thread_optee_smc.c:

uint32_t thread_rpc_cmd(uint32_t cmd, size_t num_params,

struct thread_param *params)

{

uint32_t rpc_args[THREAD_RPC_NUM_ARGS] = { OPTEE_SMC_RETURN_RPC_CMD };

void *arg = NULL;

uint64_t carg = 0;

uint32_t ret = 0;

/* The source CRYPTO_RNG_SRC_JITTER_RPC is safe to use here */

plat_prng_add_jitter_entropy(CRYPTO_RNG_SRC_JITTER_RPC,

&thread_rpc_pnum);

ret = get_rpc_arg(cmd, num_params, params, &arg, &carg);

if (ret)

return ret;

reg_pair_from_64(carg, rpc_args + 1, rpc_args + 2);

thread_rpc(rpc_args);

return get_rpc_arg_res(arg, num_params, params);

}
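thread_rpc_cmd passes the 64-bit value carg to the normal world in two 32-bit registers. A minimal sketch of that split is given here, assuming the high word goes into the first register, which matches the argument order of the reg_pair_from_64(carg, rpc_args + 1, rpc_args + 2) call in the listing. The demo_ names are hypothetical.

```c
#include <stdint.h>

/* Split a 64-bit value into two 32-bit register values (high word first). */
void demo_reg_pair_from_64(uint64_t val, uint32_t *reg0, uint32_t *reg1)
{
    *reg0 = (uint32_t)(val >> 32);
    *reg1 = (uint32_t)val;
}

/* Recombine the two 32-bit register values into the 64-bit value. */
uint64_t demo_reg_pair_to_64(uint32_t reg0, uint32_t reg1)
{
    return ((uint64_t)reg0 << 32) | reg1;
}
```

Splitting the value this way keeps the register interface identical for 32-bit and 64-bit callers, which is presumably why the SMC arguments are treated as 32-bit quantities here.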

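The thread_rpc function it calls is defined in core/arch/arm/kernel/thread_optee_smc_a64.S. Its main job is to switch to the normal world with the smc instruction so that execution continues there.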


/* void thread_rpc(uint32_t rv[THREAD_RPC_NUM_ARGS]) */

FUNC thread_rpc , :

/* Read daif and create an SPSR */

mrs x1, daif

orr x1, x1, #(SPSR_64_MODE_EL1 << SPSR_64_MODE_EL_SHIFT)

/* Mask all maskable exceptions before switching to temporary stack */

msr daifset, #DAIFBIT_ALL

push x0, xzr

push x1, x30

bl thread_get_ctx_regs

ldr x30, [sp, #8]

store_xregs x0, THREAD_CTX_REGS_X19, 19, 30

mov x19, x0

bl thread_get_tmp_sp

pop x1, xzr /* Match "push x1, x30" above */

mov x2, sp

str x2, [x19, #THREAD_CTX_REGS_SP]

ldr x20, [sp] /* Get pointer to rv[] */

mov sp, x0 /* Switch to tmp stack */

/*

* We need to read rv[] early, because thread_state_suspend

* can invoke virt_unset_guest() which will unmap pages,

* where rv[] resides

*/

load_wregs x20, 0, 21, 23 /* Load rv[] into w20-w22 */

adr x2, .thread_rpc_return

mov w0, #THREAD_FLAGS_COPY_ARGS_ON_RETURN

bl thread_state_suspend

mov x4, x0 /* Supply thread index */

ldr w0, =TEESMC_OPTEED_RETURN_CALL_DONE

mov x1, x21

mov x2, x22

mov x3, x23

smc #0

b . /* SMC should not return */

.thread_rpc_return:

/*

* At this point has the stack pointer been restored to the value

* stored in THREAD_CTX above.

*

* Jumps here from thread_resume above when RPC has returned. The

* IRQ and FIQ bits are restored to what they where when this

* function was originally entered.

*/

pop x16, xzr /* Get pointer to rv[] */

store_wregs x16, 0, 0, 3 /* Store w0-w3 into rv[] */

ret

END_FUNC thread_rpc

DECLARE_KEEP_PAGER thread_rpc

After the switch to the normal world, the CPU resumes in optee_do_call_with_arg. Because thread_rpc_cmd set the return value (res.a0) to OPTEE_SMC_RETURN_RPC_CMD, optee_handle_rpc, defined in drivers/tee/optee/rpc.c, is executed. It then calls handle_rpc_func_cmd -> handle_rpc_supp_cmd -> optee_supp_thrd_req to send the request to tee-supplicant.


void optee_handle_rpc(struct tee_context *ctx, struct optee_rpc_param *param,

struct optee_call_ctx *call_ctx)

{

struct tee_device *teedev = ctx->teedev;

struct optee *optee = tee_get_drvdata(teedev);

struct tee_shm *shm;

phys_addr_t pa;

switch (OPTEE_SMC_RETURN_GET_RPC_FUNC(param->a0)) {

case OPTEE_SMC_RPC_FUNC_ALLOC:

shm = tee_shm_alloc(ctx, param->a1, TEE_SHM_MAPPED);

if (!IS_ERR(shm) && !tee_shm_get_pa(shm, 0, &pa)) {

reg_pair_from_64(&param->a1, &param->a2, pa);

reg_pair_from_64(&param->a4, &param->a5,

(unsigned long)shm);

} else {

param->a1 = 0;

param->a2 = 0;

param->a4 = 0;

param->a5 = 0;

}

break;

case OPTEE_SMC_RPC_FUNC_FREE:

shm = reg_pair_to_ptr(param->a1, param->a2);

tee_shm_free(shm);

break;

case OPTEE_SMC_RPC_FUNC_FOREIGN_INTR:

/*

* A foreign interrupt was raised while secure world was

* executing, since they are handled in Linux a dummy RPC is

* performed to let Linux take the interrupt through the normal

* vector.

*/

break;

case OPTEE_SMC_RPC_FUNC_CMD:

shm = reg_pair_to_ptr(param->a1, param->a2);

handle_rpc_func_cmd(ctx, optee, shm, call_ctx);

break;

default:

pr_warn("Unknown RPC func 0x%x\n",

(u32)OPTEE_SMC_RETURN_GET_RPC_FUNC(param->a0));

break;

}

param->a0 = OPTEE_SMC_CALL_RETURN_FROM_RPC;

}
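The interplay between optee_do_call_with_arg and optee_handle_rpc is a loop: enter the secure world, and if it comes back asking for an RPC, serve the request and enter again with a "return from RPC" code. The sketch below models only that control flow with a simulated secure world. Every name and constant in it is hypothetical and stands in for the real OPTEE_SMC_* values and driver functions.

```c
#include <stdint.h>

/* Hypothetical codes standing in for the OPTEE_SMC_* constants. */
enum {
    DEMO_RETURN_OK = 0,
    DEMO_CALL_WITH_ARG = 1,
    DEMO_CALL_RETURN_FROM_RPC = 2,
    DEMO_RETURN_RPC_CMD = 0x100
};

struct demo_world {
    int pending_rpcs; /* RPCs the simulated secure side still wants served */
    int served_rpcs;  /* RPCs the normal side has handled so far */
};

/* Stand-in for the smc-based invoke_fn: one entry into the "secure world". */
uint32_t demo_invoke_fn(struct demo_world *w, uint32_t a0)
{
    (void)a0; /* the real call starts or resumes a secure thread based on a0 */
    if (w->pending_rpcs > 0) {
        w->pending_rpcs--;
        return DEMO_RETURN_RPC_CMD; /* thread suspended, help requested */
    }
    return DEMO_RETURN_OK; /* call completed */
}

/* Stand-in for optee_handle_rpc: serve the request, choose the next a0. */
uint32_t demo_handle_rpc(struct demo_world *w)
{
    w->served_rpcs++;
    return DEMO_CALL_RETURN_FROM_RPC;
}

/* Keep re-entering the secure world until it reports a final result. */
uint32_t demo_do_call_with_arg(struct demo_world *w)
{
    uint32_t a0 = DEMO_CALL_WITH_ARG;

    for (;;) {
        uint32_t ret = demo_invoke_fn(w, a0);

        if (ret != DEMO_RETURN_RPC_CMD)
            return ret;
        a0 = demo_handle_rpc(w);
    }
}
```

With pending_rpcs set to 2, the loop models the two OPTEE_RPC_CMD_LOAD_TA round trips that rpc_load issues; the real flow also contains shared memory allocation RPCs, which this sketch ignores.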

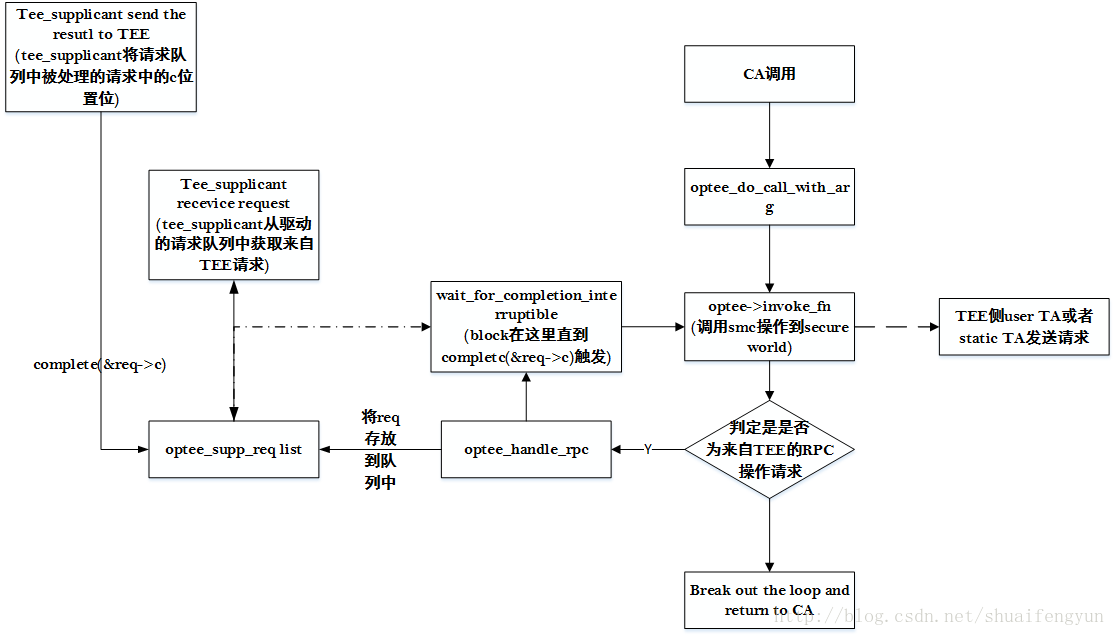

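Once started, tee-supplicant sits waiting for requests (not covered in detail here; the figure gives the rough flow) until complete(&supp->reqs_c) is called in optee_supp_thrd_req. tee-supplicant then takes the request off the queue and parses it to find out which command the TEE side wants executed. Meanwhile, optee_supp_thrd_req calls wait_for_completion_interruptible(&req->c) to wait for tee-supplicant to finish the command and return.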

(Image source: https://icyshuai.blog.csdn.net/article/details/73061002 )

Because the request set by the TEE side is OPTEE_RPC_CMD_LOAD_TA, which has the same value as OPTEE_MSG_RPC_CMD_LOAD_TA on the tee-supplicant side, the load_ta function is executed.


//tee-supplicant/src/tee_supplicant.c

static bool process_one_request(struct thread_arg *arg)

{

size_t num_params = 0;

size_t num_meta = 0;

struct tee_ioctl_param *params = NULL;

uint32_t func = 0;

uint32_t ret = 0;

union tee_rpc_invoke request;

memset(&request, 0, sizeof(request));

DMSG("looping");

request.recv.num_params = RPC_NUM_PARAMS;

/* Let it be known that we can deal with meta parameters */

params = (struct tee_ioctl_param *)(&request.send + 1);

params->attr = TEE_IOCTL_PARAM_ATTR_META;

num_waiters_inc(arg);

if (!read_request(arg->fd, &request))

return false;

if (!find_params(&request, &func, &num_params, &params, &num_meta))

return false;

if (num_meta && !num_waiters_dec(arg) && !spawn_thread(arg))

return false;

switch (func) {

case OPTEE_MSG_RPC_CMD_LOAD_TA:

ret = load_ta(num_params, params);

break;

case OPTEE_MSG_RPC_CMD_FS:

ret = tee_supp_fs_process(num_params, params);

break;

case OPTEE_MSG_RPC_CMD_RPMB:

ret = process_rpmb(num_params, params);

break;

case OPTEE_MSG_RPC_CMD_SHM_ALLOC:

ret = process_alloc(arg, num_params, params);

break;

case OPTEE_MSG_RPC_CMD_SHM_FREE:

ret = process_free(num_params, params);

break;

case OPTEE_MSG_RPC_CMD_GPROF:

ret = prof_process(num_params, params, "gmon-");

break;

case OPTEE_MSG_RPC_CMD_SOCKET:

ret = tee_socket_process(num_params, params);

break;

case OPTEE_MSG_RPC_CMD_FTRACE:

ret = prof_process(num_params, params, "ftrace-");

break;

default:

EMSG("Cmd [0x%" PRIx32 "] not supported", func);

/* Not supported. */

ret = TEEC_ERROR_NOT_SUPPORTED;

break;

}

request.send.ret = ret;

return write_response(arg->fd, &request);

}

static uint32_t load_ta(size_t num_params, struct tee_ioctl_param *params)

{

int ta_found = 0;

size_t size = 0;

struct param_value *val_cmd = NULL;

TEEC_UUID uuid;

TEEC_SharedMemory shm_ta;

memset(&uuid, 0, sizeof(uuid));

memset(&shm_ta, 0, sizeof(shm_ta));

if (num_params != 2 || get_value(num_params, params, 0, &val_cmd) ||

get_param(num_params, params, 1, &shm_ta))

return TEEC_ERROR_BAD_PARAMETERS;

uuid_from_octets(&uuid, (void *)val_cmd);

size = shm_ta.size;

ta_found = TEECI_LoadSecureModule(ta_dir, &uuid, shm_ta.buffer, &size);

if (ta_found != TA_BINARY_FOUND) {

EMSG(" TA not found");

return TEEC_ERROR_ITEM_NOT_FOUND;

}

MEMREF_SIZE(params + 1) = size;

/*

* If a buffer wasn't provided, just tell which size it should be.

* If it was provided but isn't big enough, report an error.

*/

if (shm_ta.buffer && size > shm_ta.size)

return TEEC_ERROR_SHORT_BUFFER;

return TEEC_SUCCESS;

}

//drivers/tee/optee/supp.c

u32 optee_supp_thrd_req(struct tee_context *ctx, u32 func, size_t num_params,

struct tee_param *param)

{

struct optee *optee = tee_get_drvdata(ctx->teedev);

struct optee_supp *supp = &optee->supp;

struct optee_supp_req *req;

bool interruptable;

u32 ret;

/*

* Return in case there is no supplicant available and

* non-blocking request.

*/

if (!supp->ctx && ctx->supp_nowait)

return TEEC_ERROR_COMMUNICATION;

req = kzalloc(sizeof(*req), GFP_KERNEL);

if (!req)

return TEEC_ERROR_OUT_OF_MEMORY;

init_completion(&req->c);

req->func = func;

req->num_params = num_params;

req->param = param;

/* Insert the request in the request list */

mutex_lock(&supp->mutex);

list_add_tail(&req->link, &supp->reqs);

req->in_queue = true;

mutex_unlock(&supp->mutex);

/* Tell an eventual waiter there's a new request */

complete(&supp->reqs_c);

/*

* Wait for supplicant to process and return result, once we've

* returned from wait_for_completion(&req->c) successfully we have

* exclusive access again.

*/

while (wait_for_completion_interruptible(&req->c)) {

mutex_lock(&supp->mutex);

interruptable = !supp->ctx;

if (interruptable) {

/*

* There's no supplicant available and since the

* supp->mutex currently is held none can

* become available until the mutex released

* again.

*

* Interrupting an RPC to supplicant is only

* allowed as a way of slightly improving the user

* experience in case the supplicant hasn't been

* started yet. During normal operation the supplicant

* will serve all requests in a timely manner and

* interrupting then wouldn't make sense.

*/

if (req->in_queue) {

list_del(&req->link);

req->in_queue = false;

}

}

mutex_unlock(&supp->mutex);

if (interruptable) {

req->ret = TEEC_ERROR_COMMUNICATION;

break;

}

}

ret = req->ret;

kfree(req);

return ret;

}

int optee_supp_recv(struct tee_context *ctx, u32 *func, u32 *num_params,

struct tee_param *param)

{

struct tee_device *teedev = ctx->teedev;

struct optee *optee = tee_get_drvdata(teedev);

struct optee_supp *supp = &optee->supp;

struct optee_supp_req *req = NULL;

int id;

size_t num_meta;

int rc;

rc = supp_check_recv_params(*num_params, param, &num_meta);

if (rc)

return rc;

while (true) {

mutex_lock(&supp->mutex);

req = supp_pop_entry(supp, *num_params - num_meta, &id);

mutex_unlock(&supp->mutex);

if (req) {

if (IS_ERR(req))

return PTR_ERR(req);

break;

}

/*

* If we didn't get a request we'll block in

* wait_for_completion() to avoid needless spinning.

*

* This is where supplicant will be hanging most of

* the time, let's make this interruptable so we

* can easily restart supplicant if needed.

*/

if (wait_for_completion_interruptible(&supp->reqs_c))

return -ERESTARTSYS;

}

if (num_meta) {

/*

* tee-supplicant support meta parameters -> requsts can be

* processed asynchronously.

*/

param->attr = TEE_IOCTL_PARAM_ATTR_TYPE_VALUE_INOUT |

TEE_IOCTL_PARAM_ATTR_META;

param->u.value.a = id;

param->u.value.b = 0;

param->u.value.c = 0;

} else {

mutex_lock(&supp->mutex);

supp->req_id = id;

mutex_unlock(&supp->mutex);

}

*func = req->func;

*num_params = req->num_params + num_meta;

memcpy(param + num_meta, req->param,

sizeof(struct tee_param) * req->num_params);

return 0;

}

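load_ta calls TEECI_LoadSecureModule, which tries to find and open the TA image named <uuid>.ta under /lib/optee_armtz. When rpc_load was first called on the TEE side, the TEE did not yet know the size of the TA image and had not allocated memory for it, so the size passed in was 0. On this first call TEECI_LoadSecureModule therefore does not read the image into a buffer; it opens the file only to measure it and returns the size.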


static int try_load_secure_module(const char* prefix,

const char* dev_path,

const TEEC_UUID *destination, void *ta,

size_t *ta_size)

{

char fname[PATH_MAX] = { 0 };

FILE *file = NULL;

bool first_try = true;

size_t s = 0;

int n = 0;

if (!ta_size || !destination) {

printf("wrong inparameter to TEECI_LoadSecureModule\n");

return TA_BINARY_NOT_FOUND;

}

/*

* We expect the TA binary to be named after the UUID as per RFC4122,

* that is: xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx.ta

* If the file cannot be open, try the deprecated format:

* xxxxxxxx-xxxx-xxxx-xxxxxxxxxxxxxxxx.ta

*/

again:

n = snprintf(fname, PATH_MAX,

"%s/%s/%08x-%04x-%04x-%02x%02x%s%02x%02x%02x%02x%02x%02x.ta",

prefix, dev_path,

destination->timeLow,

destination->timeMid,

destination->timeHiAndVersion,

destination->clockSeqAndNode[0],

destination->clockSeqAndNode[1],

first_try ? "-" : "",

destination->clockSeqAndNode[2],

destination->clockSeqAndNode[3],

destination->clockSeqAndNode[4],

destination->clockSeqAndNode[5],

destination->clockSeqAndNode[6],

destination->clockSeqAndNode[7]);

DMSG("Attempt to load %s", fname);

if ((n < 0) || (n >= PATH_MAX)) {

EMSG("wrong TA path [%s]", fname);

return TA_BINARY_NOT_FOUND;

}

file = fopen(fname, "r");

if (file == NULL) {

DMSG("failed to open the ta %s TA-file", fname);

if (first_try) {

first_try = false;

goto again;

}

return TA_BINARY_NOT_FOUND;

}

if (fseek(file, 0, SEEK_END) != 0) {

fclose(file);

return TA_BINARY_NOT_FOUND;

}

s = ftell(file);

if (s > *ta_size || !ta) {

/*

* Buffer isn't large enough, return the required size to

* let the caller increase the size of the buffer and try

* again.

*/

goto out;

}

if (fseek(file, 0, SEEK_SET) != 0) {

fclose(file);

return TA_BINARY_NOT_FOUND;

}

if (s != fread(ta, 1, s, file)) {

printf("error fread TA file\n");

fclose(file);

return TA_BINARY_NOT_FOUND;

}

out:

*ta_size = s;

fclose(file);

return TA_BINARY_FOUND;

}

int TEECI_LoadSecureModule(const char* dev_path,

const TEEC_UUID *destination, void *ta,

size_t *ta_size)

{

#ifdef TEEC_TEST_LOAD_PATH

int res = 0;

res = try_load_secure_module(TEEC_TEST_LOAD_PATH,

dev_path, destination, ta, ta_size);

if (res != TA_BINARY_NOT_FOUND)

return res;

#endif

return try_load_secure_module(TEEC_LOAD_PATH,

dev_path, destination, ta, ta_size);

}
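The file name that try_load_secure_module builds on its first attempt can be reproduced in isolation. The sketch below formats only the <uuid>.ta part (no prefix or directory), using a hypothetical demo_ta_uuid struct with the same fields as TEEC_UUID.

```c
#include <stdint.h>
#include <stdio.h>

/* Hypothetical stand-in for TEEC_UUID (same field layout, demo names). */
struct demo_ta_uuid {
    uint32_t time_low;
    uint16_t time_mid;
    uint16_t time_hi_and_version;
    uint8_t clock_seq_and_node[8];
};

/*
 * Write "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx.ta" into buf.
 * Returns 0 on success, -1 if the name does not fit in len bytes.
 */
int demo_ta_file_name(char *buf, size_t len, const struct demo_ta_uuid *u)
{
    int n = snprintf(buf, len,
                     "%08x-%04x-%04x-%02x%02x-%02x%02x%02x%02x%02x%02x.ta",
                     (unsigned int)u->time_low,
                     (unsigned int)u->time_mid,
                     (unsigned int)u->time_hi_and_version,
                     (unsigned int)u->clock_seq_and_node[0],
                     (unsigned int)u->clock_seq_and_node[1],
                     (unsigned int)u->clock_seq_and_node[2],
                     (unsigned int)u->clock_seq_and_node[3],
                     (unsigned int)u->clock_seq_and_node[4],
                     (unsigned int)u->clock_seq_and_node[5],
                     (unsigned int)u->clock_seq_and_node[6],
                     (unsigned int)u->clock_seq_and_node[7]);

    /* snprintf reports the length it wanted; treat truncation as failure. */
    if (n < 0 || (size_t)n >= len)
        return -1;
    return 0;
}
```

The same truncation check appears in the original listing as `(n < 0) || (n >= PATH_MAX)`; checking it matters because a silently truncated path could name a different file.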

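After load_ta returns, process_one_request calls write_response to send the result back to the TEE side. This eventually reaches optee_supp_send, which executes complete(&req->c), so the wait_for_completion_interruptible(&req->c) that optee_supp_thrd_req was blocked on returns, and execution finally comes back to optee_do_call_with_arg. By then param->a0 has already been set to OPTEE_SMC_CALL_RETURN_FROM_RPC in optee_handle_rpc.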


//tee-supplicant/src/tee_supplicant.c

static bool write_response(int fd, union tee_rpc_invoke *request)

{

struct tee_ioctl_buf_data data;

memset(&data, 0, sizeof(data));

data.buf_ptr = (uintptr_t)&request->send;

data.buf_len = sizeof(struct tee_iocl_supp_send_arg) +

sizeof(struct tee_ioctl_param) *

(__u64)request->send.num_params;

if (ioctl(fd, TEE_IOC_SUPPL_SEND, &data)) {

EMSG("TEE_IOC_SUPPL_SEND: %s", strerror(errno));

return false;

}

return true;

}

//drivers/tee/optee/supp.c

int optee_supp_send(struct tee_context *ctx, u32 ret, u32 num_params,

struct tee_param *param)

{

struct tee_device *teedev = ctx->teedev;

struct optee *optee = tee_get_drvdata(teedev);

struct optee_supp *supp = &optee->supp;

struct optee_supp_req *req;

size_t n;

size_t num_meta;

mutex_lock(&supp->mutex);

req = supp_pop_req(supp, num_params, param, &num_meta);

mutex_unlock(&supp->mutex);

if (IS_ERR(req)) {

/* Something is wrong, let supplicant restart. */

return PTR_ERR(req);

}

/* Update out and in/out parameters */

for (n = 0; n < req->num_params; n++) {

struct tee_param *p = req->param + n;

switch (p->attr & TEE_IOCTL_PARAM_ATTR_TYPE_MASK) {

case TEE_IOCTL_PARAM_ATTR_TYPE_VALUE_OUTPUT:

case TEE_IOCTL_PARAM_ATTR_TYPE_VALUE_INOUT:

p->u.value.a = param[n + num_meta].u.value.a;

p->u.value.b = param[n + num_meta].u.value.b;

p->u.value.c = param[n + num_meta].u.value.c;

break;

case TEE_IOCTL_PARAM_ATTR_TYPE_MEMREF_OUTPUT:

case TEE_IOCTL_PARAM_ATTR_TYPE_MEMREF_INOUT:

p->u.memref.size = param[n + num_meta].u.memref.size;

break;

default:

break;

}

}

req->ret = ret;

/* Let the requesting thread continue */

complete(&req->c);

return 0;

}

optee_do_call_with_arg calls optee->invoke_fn again, switching to the secure world with the smc instruction, and execution resumes in rpc_load.

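Now the result of the first thread_rpc_cmd has come back, and params holds the size of the user TA that was found. The matching memory can therefore be allocated, and thread_rpc_cmd is called a second time to actually load the TA into memory through tee-supplicant.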
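This "ask for the size first, then allocate and ask again" pattern is common in C APIs and can be sketched without any TEE involved. In the sketch below, demo_load plays the role of the tee-supplicant side and demo_load_two_pass plays the role of rpc_load. All names and the image contents are hypothetical.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical data standing in for a TA image on the REE file system. */
static const char demo_image[] = "demo TA image bytes";

/*
 * Stand-in for the loader side. It always reports the required size in
 * *size. With buf == NULL it is a pure size query; with a buffer that is
 * too small it fails; otherwise it copies the data.
 */
int demo_load(void *buf, size_t *size)
{
    size_t avail = *size;
    size_t need = sizeof(demo_image);

    *size = need;
    if (!buf)
        return 0;  /* pass 1: size query only */
    if (avail < need)
        return -1; /* buffer supplied but too short */
    memcpy(buf, demo_image, need);
    return 0;
}

/* Caller side: query the size, allocate, then load for real. */
void *demo_load_two_pass(size_t *out_size)
{
    size_t size = 0;
    void *buf = NULL;

    if (demo_load(NULL, &size)) /* first call: learn the size */
        return NULL;
    buf = malloc(size);
    if (!buf)
        return NULL;
    if (demo_load(buf, &size)) { /* second call: fill the buffer */
        free(buf);
        return NULL;
    }
    *out_size = size;
    return buf; /* caller frees */
}
```

The cost of this design is a second round trip, which in the real flow means a second world switch. In exchange, the caller never has to guess a buffer size for an image it has not seen.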

After rpc_load returns, ree_fs_ta_open goes on to check the TA header signature and, depending on whether the TA image type is ENCRYPTED_TA, decides whether to decrypt it.

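Once verification succeeds, the system call ends and execution returns to init_elf in ldelf, which parses the loaded TA ELF file and obtains its entry address and other related information. In ta_elf_finalize_load_main the TA entry address is assigned to arg->entry_func.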

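When ldelf finishes, execution returns to ldelf_init_with_ldelf in OP-TEE OS. It first restores the SVC handler to user_ta_handle_svc, then saves the TA entry address and the other necessary information returned in the arguments into uctx. This information eventually ends up in tee_ctxes, the queue of all open TEE contexts.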

Stepping back up to tee_ta_open_session, execution continues with ts_ctx->ops->enter_open_session(&s->ts_sess). For a user TA, enter_open_session is user_ta_enter_open_session, defined in core/arch/arm/kernel/user_ta.c. user_ta_enter_open_session also calls thread_enter_user_mode to switch to secure EL0, but this time the entry address is utc->uctx.entry_func, that is, the entry address of the user TA.


static TEE_Result user_ta_enter_open_session(struct ts_session *s)

{

return user_ta_enter(s, UTEE_ENTRY_FUNC_OPEN_SESSION, 0);

}

static TEE_Result user_ta_enter(struct ts_session *session,

enum utee_entry_func func, uint32_t cmd)

{

TEE_Result res = TEE_SUCCESS;

struct utee_params *usr_params = NULL;

uaddr_t usr_stack = 0;

struct user_ta_ctx *utc = to_user_ta_ctx(session->ctx);

struct tee_ta_session *ta_sess = to_ta_session(session);

struct ts_session *ts_sess __maybe_unused = NULL;

void *param_va[TEE_NUM_PARAMS] = { NULL };

if (!inc_recursion()) {

/* Using this error code since we've run out of resources. */

res = TEE_ERROR_OUT_OF_MEMORY;

goto out_clr_cancel;

}

if (ta_sess->param) {

/* Map user space memory */

res = vm_map_param(&utc->uctx, ta_sess->param, param_va);

if (res != TEE_SUCCESS)

goto out;

}

/* Switch to user ctx */

ts_push_current_session(session);

/* Make room for usr_params at top of stack */

usr_stack = utc->uctx.stack_ptr;

usr_stack -= ROUNDUP(sizeof(struct utee_params), STACK_ALIGNMENT);

usr_params = (struct utee_params *)usr_stack;

if (ta_sess->param)

init_utee_param(usr_params, ta_sess->param, param_va);

else

memset(usr_params, 0, sizeof(*usr_params));

res = thread_enter_user_mode(func, kaddr_to_uref(session),

(vaddr_t)usr_params, cmd, usr_stack,

utc->uctx.entry_func, utc->uctx.is_32bit,

&utc->ta_ctx.panicked,

&utc->ta_ctx.panic_code);

thread_user_clear_vfp(&utc->uctx);

if (utc->ta_ctx.panicked) {

abort_print_current_ta();

DMSG("tee_user_ta_enter: TA panicked with code 0x%x",

utc->ta_ctx.panic_code);

res = TEE_ERROR_TARGET_DEAD;

} else {

/*

* According to GP spec the origin should allways be set to

* the TA after TA execution

*/

ta_sess->err_origin = TEE_ORIGIN_TRUSTED_APP;

}

if (ta_sess->param) {

/* Copy out value results */

update_from_utee_param(ta_sess->param, usr_params);

/*

* Clear out the parameter mappings added with

* vm_clean_param() above.

*/

vm_clean_param(&utc->uctx);

}

ts_sess = ts_pop_current_session();

assert(ts_sess == session);

out:

dec_recursion();

out_clr_cancel:

/*

* Clear the cancel state now that the user TA has returned. The next

* time the TA will be invoked will be with a new operation and should

* not have an old cancellation pending.

*/

ta_sess->cancel = false;

return res;

}

The user TA's entry address is __ta_entry, which is specified in ta/arch/arm/link.mk:

link-ldflags = -e__ta_entry -pie

The function is defined in ta/arch/arm/user_ta_header.c and calls __utee_entry():

void __noreturn _C_FUNCTION(__ta_entry)(unsigned long func,

unsigned long session_id,

struct utee_params *up,

unsigned long cmd_id)

{

TEE_Result res = __utee_entry(func, session_id, up, cmd_id);

#if defined(CFG_FTRACE_SUPPORT)

/*

* __ta_entry is the first TA API called from TEE core. As it being

* __noreturn API, we need to call ftrace_return in this API just

* before _utee_return syscall to get proper ftrace call graph.

*/

ftrace_return();

#endif

_utee_return(res);

}

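__utee_entry is defined in lib/libutee/arch/arm/user_ta_entry.c. It runs the function that matches the func passed in. For open session it runs entry_open_session, which in turn calls TA_OpenSessionEntryPoint(), the interface function that each user TA defines.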


TEE_Result __utee_entry(unsigned long func, unsigned long session_id,

struct utee_params *up, unsigned long cmd_id)

{

TEE_Result res;

switch (func) {

case UTEE_ENTRY_FUNC_OPEN_SESSION:

res = entry_open_session(session_id, up);

break;

case UTEE_ENTRY_FUNC_CLOSE_SESSION:

res = entry_close_session(session_id);

break;

case UTEE_ENTRY_FUNC_INVOKE_COMMAND:

res = entry_invoke_command(session_id, up, cmd_id);

break;

default:

res = 0xffffffff;

TEE_Panic(0);

break;

}

ta_header_save_params(0, NULL);

return res;

}

/* Called by __utee_entry() above for UTEE_ENTRY_FUNC_OPEN_SESSION. */

static TEE_Result entry_open_session(unsigned long session_id,

struct utee_params *up)

{

TEE_Result res;

struct ta_session *session;

uint32_t param_types;

TEE_Param params[TEE_NUM_PARAMS];

res = ta_header_add_session(session_id);

if (res != TEE_SUCCESS)

return res;

session = ta_header_get_session(session_id);

if (!session)

return TEE_ERROR_BAD_STATE;

from_utee_params(params, &param_types, up);

ta_header_save_params(param_types, params);

res = TA_OpenSessionEntryPoint(param_types, params,

&session->session_ctx);

to_utee_params(up, param_types, params);

if (res != TEE_SUCCESS)

ta_header_remove_session(session_id);

return res;

}
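The dispatch performed by __utee_entry can be modelled in a few lines of standalone C. The sketch below is a simplified mock: the demo_ types, the error values, and the single-session state are all hypothetical, and an unknown func returns an error here, whereas the listing above calls TEE_Panic(0).

```c
#include <stdint.h>

/* Hypothetical function selectors standing in for UTEE_ENTRY_FUNC_*. */
enum demo_entry_func {
    DEMO_ENTRY_OPEN_SESSION,
    DEMO_ENTRY_CLOSE_SESSION,
    DEMO_ENTRY_INVOKE_COMMAND
};

/* Demo result codes, not the real TEE_Result values. */
#define DEMO_SUCCESS             0u
#define DEMO_ERROR_BAD_STATE     1u
#define DEMO_ERROR_NOT_SUPPORTED 2u

/* Minimal TA-side state: one session and the last command seen. */
struct demo_ta {
    int session_open;
    uint32_t last_cmd;
};

/* Route one entry from the "TEE core" to the matching TA handler. */
uint32_t demo_utee_entry(struct demo_ta *ta, enum demo_entry_func func,
                         uint32_t cmd_id)
{
    switch (func) {
    case DEMO_ENTRY_OPEN_SESSION:
        ta->session_open = 1; /* where TA_OpenSessionEntryPoint would run */
        return DEMO_SUCCESS;
    case DEMO_ENTRY_CLOSE_SESSION:
        ta->session_open = 0; /* where TA_CloseSessionEntryPoint would run */
        return DEMO_SUCCESS;
    case DEMO_ENTRY_INVOKE_COMMAND:
        if (!ta->session_open)
            return DEMO_ERROR_BAD_STATE;
        ta->last_cmd = cmd_id; /* where TA_InvokeCommandEntryPoint would run */
        return DEMO_SUCCESS;
    default:
        return DEMO_ERROR_NOT_SUPPORTED;
    }
}
```

Routing every call through one entry point means the TEE core only needs to know a single address per TA, namely the one recorded in entry_func, with the func argument selecting the operation.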

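With that, the complete call flow from CA to TA has been traced. The flow for TEEC_InvokeCommand() should be much the same as for TEEC_OpenSession(), so it is not analyzed further here.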
