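Problems encountered when scraping data with an asynchronous crawler

While fetching data with aiohttp, the asynchronous HTTP client library, I ran into some odd errors. (The localized Windows message 信号灯超时时间已到 in the output below reads "The semaphore timeout period has expired" in English.)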

OSError: [WinError 121] 信号灯超时时间已到

aiohttp.client_exceptions.ClientConnectorError: Cannot connect to host file.nicegirl.in:443 ssl:default [信号灯超时时间已到]

aiohttp.client_exceptions.ClientConnectorError: Cannot connect to host nicegirl.in:443 ssl:default [None]

ConnectionAbortedError: SSL handshake is taking longer than 60.0 seconds: aborting the connection

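Examples

1. Scraping galleries from nicegirl

Download the site's latest galleries; the number of galleries to fetch is read from user input.

Source code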

import asyncio
import os.path

import aiofiles
import aiohttp
import requests
from lxml import etree
from tqdm.asyncio import tqdm
from fake_user_agent import user_agent

headers = {'User-Agent': user_agent('Chrome', False)}


async def get_page_content(category_name, category_link):
    # Each gallery opens its own session, fetches the gallery page,
    # and extracts the image URLs from it.
    async with aiohttp.ClientSession(trust_env=True) as session:
        async with session.get(category_link, headers=headers) as pics_source:
            pic_urls = etree.HTML(await pics_source.text()).xpath('//p/img/@src')
        await download(session, category_name, pic_urls)


async def download(session, category_name, pic_urls):
    dir_name = os.path.join('.', 'pics', category_name)
    # makedirs also creates the parent "pics" directory if it is missing
    os.makedirs(dir_name, exist_ok=True)
    for pic_url in pic_urls:
        pic_name = pic_url.split('/')[-1]
        file_path = os.path.join(dir_name, pic_name)
        # Skip images that were already downloaded
        if not os.path.exists(file_path):
            async with session.get(pic_url, headers=headers) as pic_source:
                async with aiofiles.open(file_path, mode='wb') as f:
                    await f.write(await pic_source.read())
                    print('Downloaded: %s' % pic_name)
    print('================ Finished downloading images in %s ================' % category_name)


async def main(url):
    # The index is fetched once, synchronously, before any coroutine is scheduled
    categories_source = requests.get(url, headers=headers)
    posts = categories_source.json()['posts']
    categories_source.close()
    nums = input('Number of galleries to download: ').strip()
    categories_info = posts[-1 - int(nums):-1]
    tasks = []
    for category in categories_info:
        category_name = category['title']
        category_link = 'https://nicegirl.in' + category['link']
        tasks.append(get_page_content(category_name, category_link))
    # All galleries start at once; nothing limits the number of concurrent connections
    await tqdm.gather(*tasks)


if __name__ == '__main__':
    url = 'https://nicegirl.in/content.json'
    asyncio.run(main(url))
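The slice used in `main` to pick the galleries is easy to misread, so here is a small sketch of what it selects. The helper name `latest_slice` and the sample list are made up for illustration; only the slice expression itself comes from the script. Note that the final entry of the list is never included. The source does not say whether that is intentional.

```python
def latest_slice(posts, nums):
    """Return the same window that main() takes from the 'posts' list."""
    return posts[-1 - nums:-1]


# Hypothetical stand-in for the 'posts' array of content.json
posts = ['p0', 'p1', 'p2', 'p3', 'p4', 'p5']

# Asking for 3 galleries yields the 3 entries just before the last one
print(latest_slice(posts, 3))   # ['p2', 'p3', 'p4']

# Asking for more than exist yields everything except the last entry
print(latest_slice(posts, 10))  # ['p0', 'p1', 'p2', 'p3', 'p4']
```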

2. Scraping free resume templates from 站长素材 (sc.chinaz.com)

Source code

import asyncio
import os.path
import re

import aiofiles
import aiohttp
import requests
from fake_user_agent import user_agent
from lxml import etree
from tqdm.asyncio import tqdm

headers = {'User-Agent': user_agent('Chrome', False)}


async def main(url):
    resumes_page = requests.get(url, headers=headers)
    # The total page count is embedded in the onclick handler of the "go" button
    pages_in_onclick = etree.HTML(resumes_page.text).xpath('//input[@id="btngo"]/@onclick')[0]
    total_pages = re.findall(r'\d+', pages_in_onclick)[0]
    dir_name = os.path.join('.', 'resume')
    os.makedirs(dir_name, exist_ok=True)
    pages = input('%s pages in total; how many pages do you want to download? ' % total_pages).strip()
    tasks = []
    for num in range(1, int(pages) + 1):
        tasks.append(get_one_page_resume(num, dir_name))
    await tqdm.gather(*tasks)


async def get_one_page_resume(num, dir_name):
    # Page 1 is free.html; later pages are free_2.html, free_3.html, ...
    resumes_url = 'https://sc.chinaz.com/jianli/free%s.html' \
        % ('' if num == 1 else '_' + str(num))
    async with aiohttp.ClientSession() as session:  # trust_env=True,
        async with session.get(resumes_url, headers=headers) as resumes_source:
            urls = etree.HTML(await resumes_source.text()).xpath('//div[@id="main"]//p/a/@href')
        for resume_url in urls:
            # Open each resume's detail page to find its name and download link
            async with session.get(resume_url, headers=headers) as url_source:
                resume_page_source = etree.HTML(await url_source.text())
            resume_name = resume_page_source.xpath('//div[@class="ppt_tit clearfix"]/h1/text()')[0]
            cur_resume_url = resume_page_source.xpath('//div[@class="clearfix mt20 downlist"]//a/@href')[0]
            await download(session, cur_resume_url, dir_name, resume_name)
    print('============= Page %d of resumes downloaded =============' % num)


async def download(session, url, dir_name, resume_name):
    file_path = os.path.join(dir_name, resume_name + '.rar')
    # Skip archives that were already downloaded
    if not os.path.exists(file_path):
        async with session.get(url, headers=headers) as resume_source:
            rar = await resume_source.read()
        async with aiofiles.open(file_path, 'wb') as f:
            await f.write(rar)
        print('%s\tsaved' % resume_name)


if __name__ == '__main__':
    url = 'https://sc.chinaz.com/jianli/free.html'
    asyncio.run(main(url))
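The listing-page URL rule in `get_one_page_resume` can be checked on its own without touching the network. The sketch below pulls the same expression into a helper; the name `resume_page_url` is made up for illustration, while the URL pattern is the one used in the script.

```python
def resume_page_url(num):
    """Build the listing URL for page `num`: page 1 has no suffix, later pages use _N."""
    suffix = '' if num == 1 else '_' + str(num)
    return 'https://sc.chinaz.com/jianli/free%s.html' % suffix


print(resume_page_url(1))  # https://sc.chinaz.com/jianli/free.html
print(resume_page_url(3))  # https://sc.chinaz.com/jianli/free_3.html
```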

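Solutions

# 1. Set the event loop policy to WindowsSelectorEventLoopPolicy
policy = asyncio.WindowsSelectorEventLoopPolicy()
asyncio.set_event_loop_policy(policy)

# 2. Keep the number of concurrent requests below 64,
#    for example by limiting the number of running coroutines with a Semaphore
semaphore = asyncio.Semaphore(16)

# 3. Pass trust_env=True to aiohttp.ClientSession(); this may slow downloads down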
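To see the first two settings working together without any network access, here is a minimal standard-library sketch. It is an illustration under assumptions, not the crawler itself: `fake_request`, `run_all`, and `use_selector_loop_on_windows` are made-up names, `asyncio.sleep` stands in for an HTTP request, and the platform check is an addition so the snippet also runs outside Windows, where `WindowsSelectorEventLoopPolicy` does not exist. The `trust_env=True` setting is not covered because it needs aiohttp.

```python
import asyncio
import sys

LIMIT = 16  # same value as the Semaphore in the list above


def use_selector_loop_on_windows():
    """Apply the selector policy only on Windows; return True if it was applied."""
    if sys.platform == 'win32':
        asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())
        return True
    return False


async def fake_request(semaphore, stats):
    # Only LIMIT coroutines can be inside this block at the same time
    async with semaphore:
        stats['running'] += 1
        stats['peak'] = max(stats['peak'], stats['running'])
        await asyncio.sleep(0.01)  # stand-in for the real HTTP request
        stats['running'] -= 1


async def run_all(n_tasks):
    # Created inside the running loop, so it is bound to the loop that uses it
    semaphore = asyncio.Semaphore(LIMIT)
    stats = {'running': 0, 'peak': 0}
    await asyncio.gather(*(fake_request(semaphore, stats) for _ in range(n_tasks)))
    return stats['peak']


if __name__ == '__main__':
    use_selector_loop_on_windows()
    peak = asyncio.run(run_all(100))
    print('peak concurrency:', peak)  # never exceeds LIMIT
```

Creating the semaphore inside `run_all` rather than at module level is a deliberate choice: on Python 3.9 and earlier, a semaphore created before `asyncio.run()` is bound to a different event loop than the one that ends up running the tasks.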

Applying the fixes above to example 1 gives the following code:

Source code

import asyncio
import os.path

import aiofiles
import aiohttp
import requests
from lxml import etree
from tqdm.asyncio import tqdm
from fake_user_agent import user_agent

headers = {'User-Agent': user_agent('Chrome', False)}

# Fix 1: use the selector event loop instead of the Windows default
policy = asyncio.WindowsSelectorEventLoopPolicy()
asyncio.set_event_loop_policy(policy)


async def get_page_content(semaphore, category_name, category_link):
    # Fix 2: at most 16 galleries are processed at the same time
    async with semaphore:
        # connector = aiohttp.TCPConnector(force_close=True)  # disables HTTP keep-alive
        # Fix 3: trust_env=True
        async with aiohttp.ClientSession(trust_env=True) as session:
            async with session.get(category_link, headers=headers) as pics_source:
                pic_urls = etree.HTML(await pics_source.text()).xpath('//p/img/@src')
            await download(session, category_name, pic_urls)


async def download(session, category_name, pic_urls):
    dir_name = os.path.join('.', 'pics', category_name)
    # makedirs also creates the parent "pics" directory if it is missing
    os.makedirs(dir_name, exist_ok=True)
    for pic_url in pic_urls:
        pic_name = pic_url.split('/')[-1]
        file_path = os.path.join(dir_name, pic_name)
        # Skip images that were already downloaded
        if not os.path.exists(file_path):
            async with session.get(pic_url, headers=headers) as pic_source:
                async with aiofiles.open(file_path, mode='wb') as f:
                    await f.write(await pic_source.read())
                    print('Downloaded: %s' % pic_name)
    print('================ Finished downloading images in %s ================' % category_name)


async def main(url):
    # Create the semaphore inside the running loop: on Python 3.9 and earlier,
    # a module-level Semaphore is bound to a different loop than the one
    # asyncio.run() creates.
    semaphore = asyncio.Semaphore(16)
    categories_source = requests.get(url, headers=headers)
    posts = categories_source.json()['posts']
    categories_source.close()
    nums = input('Number of galleries to download: ').strip()
    categories_info = posts[-1 - int(nums):-1]
    tasks = []
    for category in categories_info:
        category_name = category['title']
        category_link = 'https://nicegirl.in' + category['link']
        tasks.append(get_page_content(semaphore, category_name, category_link))
    await tqdm.gather(*tasks)


if __name__ == '__main__':
    url = 'https://nicegirl.in/content.json'
    asyncio.run(main(url))

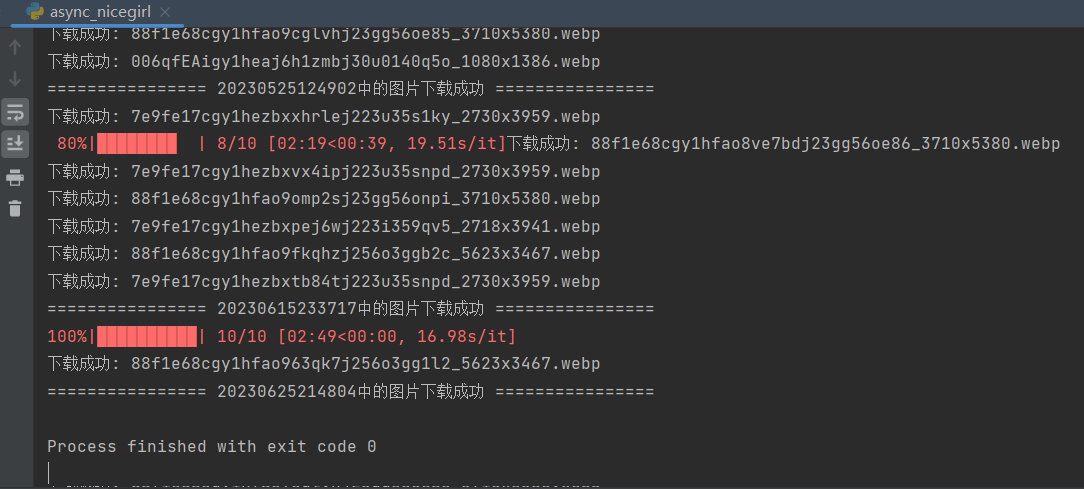

运行结果:

