Math Notes: Convex Optimization A

- 1. Introduction

- 2. Convex sets

- affine set

- convex set

- convex combination and convex hull

- convex cone

- hyperplanes and halfspaces

- euclidean balls and ellipsoids

- norm balls and cones

- polyhedra

- positive semidefinite cone

- operations that preserve convexity

- intersection

- affine function

- perspective and linear-fractional function

- generalized inequalities

- minimum and minimal elements

- separating hyperplane theorem

- supporting hyperplane theorem

- dual cones and generalized inequalities

- minimum and minimal elements via dual inequality

- Convex functions

- definition

- examples on $\mathbf{R}$

- examples on $\mathbf{R}^n$ and $\mathbf{R}^{m\times n}$

- restriction of a convex function to a line

- extended-value extension

- first-order condition

- second-order condition

- examples

- epigraph and sublevel set

- Jensen's inequality

- operations that preserve convexity

- positive weighted sum & composition with affine function

- pointwise maximum

- pointwise supremum

- composition with scalar functions

- vector composition

- minimization

- perspective

- the conjugate function

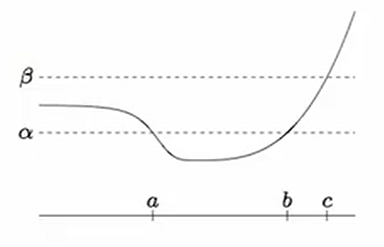

- quasiconvex functions

- examples

- properties

- log-concave and log-convex functions

- properties of log-concave functions

- consequences of integration property

- convexity with respect to generalized inequalities

1. Introduction

- mathematical optimization

- least-squares and linear programming

- convex optimization

- example

- course goals and topics

- nonlinear optimization

- brief history of convex optimization

mathematical optimization

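optimization problem

$$\begin{array}{ll}\text{minimize} & f_0(x)\\ \text{subject to} & f_i(x)\le b_i,\quad i=1,\dots,m\end{array}$$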

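- $x=(x_1,\dots,x_n)$: optimization variables

- $f_0:\mathbf{R}^n\to\mathbf{R}$: objective function

- $f_i:\mathbf{R}^n\to\mathbf{R},\ i=1,\dots,m$: constraint functions

optimal solution $x^\star$ has the smallest value of $f_0$ among all vectors that satisfy the constraints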

examples

portfolio optimization

- variables: amounts invested in different assets

- constraints: budget, max./min. investment per asset, minimum return

- objective:overall risk or return variance

device sizing in electronic circuits

- variables: device widths and lengths

- constraints: manufacturing limits, timing requirements, maximum area

- objective: power consumption

data fitting

- variables: model parameters

- constraints: prior information, parameter limits

- objective: measure of misfit or prediction error

solving optimization problems

general optimization problem

- very difficult to solve

- methods involve some compromise, e.g., very long computation time or not always finding the solution

exceptions: certain problem classes can be solved efficiently and reliably

- least-squares problems

- linear programming problems

- convex optimization problems

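least-squares

$$\text{minimize}\quad\|Ax-b\|_2^2$$

solving least-squares problems

- analytical solution: $x^\star=(A^TA)^{-1}A^Tb$

- reliable and efficient algorithms and software

- computation time proportional to $n^2k$ ($A\in\mathbf{R}^{k\times n}$); less if structured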

- a mature technology

using least-squares

- least-squares problems are easy to recognize

- a few standard techniques increase flexibility (e.g., including weights, adding regularization terms)
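The analytical solution can be sketched for the smallest interesting case. The code below assumes a hypothetical straight-line model $y\approx x_1+x_2t$, so $n=2$ and $A$ has rows $(1,t_i)$. It solves the $2\times2$ normal equations $(A^TA)x=A^Tb$ by Cramer's rule, using only the Python standard library. This is an illustration, not production code: practical least-squares software factors $A$ (e.g., with a QR decomposition) instead of forming $A^TA$.

```python
def fit_line(ts, ys):
    """Least-squares fit y ~ x1 + x2*t via the normal equations (A^T A) x = A^T y."""
    # Rows of A are (1, t_i); build the entries of A^T A and A^T y directly.
    k = len(ts)
    s_t = sum(ts)
    s_tt = sum(t * t for t in ts)
    s_y = sum(ys)
    s_ty = sum(t * y for t, y in zip(ts, ys))
    # A^T A = [[k, s_t], [s_t, s_tt]],  A^T y = [s_y, s_ty]
    det = k * s_tt - s_t * s_t
    if det == 0:
        raise ValueError("A has rank < 2; the least-squares solution is not unique")
    x1 = (s_tt * s_y - s_t * s_ty) / det
    x2 = (k * s_ty - s_t * s_y) / det
    return x1, x2

def residual_norm_sq(ts, ys, x1, x2):
    """Objective ||Ax - b||_2^2 for the line model."""
    return sum((x1 + x2 * t - y) ** 2 for t, y in zip(ts, ys))

ts = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]      # exactly y = 1 + 2t
print(fit_line(ts, ys))        # (1.0, 2.0)
```

As a sanity check, perturbing the fitted parameters in any direction can only increase $\|Ax-b\|_2^2$.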

linear programming problem

$$\begin{array}{ll}\text{minimize} & c^Tx\\ \text{subject to} & a_i^Tx\le b_i,\quad i=1,\dots,m\end{array}$$

solving linear programs

- no analytical formula for solution

- reliable and efficient algorithms and software

- computation time proportional to $n^2m$ if $m\ge n$; less with structure

- a mature technology

using linear programming

- not as easy to recognize as least-squares problems

- a few standard tricks used to convert problems into linear programs (e.g., problems involving $\ell_1$- or $\ell_\infty$-norms, piecewise-linear functions)

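convex optimization problem

$$\begin{array}{ll}\text{minimize} & f_0(x)\\ \text{subject to} & f_i(x)\le b_i,\quad i=1,\dots,m\end{array}$$

- objective and constraint functions are convex:

$$f_i(\alpha x+\beta y)\le\alpha f_i(x)+\beta f_i(y)$$

if $\alpha+\beta=1$, $\alpha\ge0$, $\beta\ge0$

- includes least-squares problems and linear programs as special cases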

solving convex optimization problems

- no analytical solution

- reliable and efficient algorithms

- computation time (roughly) proportional to $\max\{n^3,n^2m,F\}$, where $F$ is the cost of evaluating the $f_i$'s and their first and second derivatives

- almost a technology

using convex optimization

- often difficult to recognize

- many tricks for transforming problems into convex form

- surprisingly many problems can be solved via convex optimization

example

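$m$ lamps illuminating $n$ (small, flat) patches

intensity $I_k$ at patch $k$ depends linearly on lamp powers $p_j$:

$$I_k=\sum_{j=1}^m a_{kj}p_j,\qquad a_{kj}=r_{kj}^{-2}\max\{\cos\theta_{kj},0\}$$

problem: achieve desired illumination $I_{\rm des}$ with bounded lamp powers

$$\begin{array}{ll}\text{minimize} & \max_{k=1,\dots,n}|\log I_k-\log I_{\rm des}|\\ \text{subject to} & 0\le p_j\le p_{\max},\quad j=1,\dots,m\end{array}$$

solution

- use uniform power: $p_j=p$, vary $p$

- use least-squares:

$$\text{minimize}\quad\sum_{k=1}^n(I_k-I_{\rm des})^2$$

round $p_j$ if $p_j>p_{\max}$ or $p_j<0$

- use weighted least-squares:

$$\text{minimize}\quad\sum_{k=1}^n(I_k-I_{\rm des})^2+\sum_{j=1}^m w_j(p_j-p_{\max}/2)^2$$

iteratively adjust weights $w_j$ until $0\le p_j\le p_{\max}$

- use linear programming:

$$\begin{array}{ll}\text{minimize} & \max_{k=1,\dots,n}|I_k-I_{\rm des}|\\ \text{subject to} & 0\le p_j\le p_{\max},\quad j=1,\dots,m\end{array}$$

which can be solved via linear programming

- use convex optimization: problem is equivalent to

$$\begin{array}{ll}\text{minimize} & f_0(p)=\max_{k=1,\dots,n}h(I_k/I_{\rm des})\\ \text{subject to} & 0\le p_j\le p_{\max},\quad j=1,\dots,m\end{array}$$

with $h(u)=\max\{u,1/u\}$

$f_0$ is convex because the maximum of convex functions is convex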

additional constraints:

Does adding (1) or (2) below complicate the problem?

- (1) no more than 50% of the total power is in any 10 lamps

- (2) no more than half of the lamps are on ($p_j>0$)

- answer: with (1), still easy to solve; with (2), extremely difficult

- moral: (untrained) intuition doesn't always work; without the proper background, very easy problems can appear quite similar to very difficult problems

course goals and topics

goals

- recognize/formulate problems (such as illumination problem) as convex optimization problems

- develop code for problems of moderate size (1000 lamps, 5000 patches)

- characterize optimal solution (optimal power distribution), give limits of performance, etc.

topics

- convex sets, functions, optimization problems

- examples and applications

- algorithms

nonlinear optimization

...

2. Convex sets

- affine and convex sets

- some important examples

- operations that preserve convexity

- generalized inequalities

- separating and supporting hyperplanes

- dual cones and generalized inequalities

affine set

line through $x_1$, $x_2$: all points

$$x=\theta x_1+(1-\theta)x_2\qquad(\theta\in\mathbf{R})$$

affine set: contains the line through any two distinct points in the set

example: solution set of linear equations $\{x\mid Ax=b\}$

(conversely, every affine set can be expressed as the solution set of a system of linear equations)

convex set

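line segment between $x_1$ and $x_2$: all points

$$x=\theta x_1+(1-\theta)x_2$$

with $0\le\theta\le1$

convex set: contains the line segment between any two points in the set

$$x_1,x_2\in C,\quad0\le\theta\le1\quad\Longrightarrow\quad\theta x_1+(1-\theta)x_2\in C$$

examples:

(omitted)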

convex combination and convex hull

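convex combination of $x_1,\dots,x_k$: any point $x$ of the form

$$x=\theta_1x_1+\theta_2x_2+\cdots+\theta_kx_k$$

with $\theta_1+\cdots+\theta_k=1$, $\theta_i\ge0$

convex hull $\mathbf{conv}\,S$: set of all convex combinations of points in $S$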
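For a finite set $S\subset\mathbf{R}^2$, $\mathbf{conv}\,S$ is a convex polygon whose vertices belong to $S$. The following sketch computes those vertices with Andrew's monotone-chain algorithm. That algorithm is a standard computational-geometry technique chosen here for illustration; the notes do not prescribe one. The sample points are made up.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Vertices of conv S for a finite S in R^2, in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:                      # build the lower chain left to right
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()                # drop points that are not strict left turns
        lower.append(p)
    for p in reversed(pts):            # build the upper chain right to left
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other one.
    return lower[:-1] + upper[:-1]

S = [(0, 0), (2, 0), (2, 2), (0, 2), (1, 1), (1, 0)]
print(convex_hull(S))   # [(0, 0), (2, 0), (2, 2), (0, 2)]
```

The dropped points are convex combinations of the returned vertices, e.g., $(1,1)=\tfrac12(0,0)+\tfrac12(2,2)$ and $(1,0)=\tfrac12(0,0)+\tfrac12(2,0)$.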

convex cone

conic (nonnegative) combination of $x_1$ and $x_2$: any point of the form

$$x=\theta_1x_1+\theta_2x_2$$

with $\theta_1\ge0$, $\theta_2\ge0$

convex cone: set that contains all conic combinations of points in the set

hyperplanes and halfspaces

hyperplane: set of the form $\{x\mid a^Tx=b\}$ ($a\ne0$)

halfspace: set of the form $\{x\mid a^Tx\le b\}$ ($a\ne0$)

- $a$ is the normal vector

- hyperplanes are affine and convex; halfspaces are convex

euclidean balls and ellipsoids

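(Euclidean) ball with center $x_c$ and radius $r$:

$$B(x_c,r)=\{x\mid\|x-x_c\|_2\le r\}=\{x_c+ru\mid\|u\|_2\le1\}$$

ellipsoid: set of the form

$$\{x\mid(x-x_c)^TP^{-1}(x-x_c)\le1\}$$

with $P\in\mathbf{S}^n_{++}$ (i.e., $P$ symmetric positive definite)

other representation: $\{x_c+Au\mid\|u\|_2\le1\}$ with $A$ square and nonsingular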

norm balls and cones

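norm: a function $\|\cdot\|$ that satisfies

- $\|x\|\ge0$; $\|x\|=0$ if and only if $x=0$

- $\|tx\|=|t|\,\|x\|$ for $t\in\mathbf{R}$

- $\|x+y\|\le\|x\|+\|y\|$

notation: $\|\cdot\|$ is a general (unspecified) norm; $\|\cdot\|_{\rm symb}$ is a particular norm

norm ball with center $x_c$ and radius $r$: $\{x\mid\|x-x_c\|\le r\}$

norm cone: $\{(x,t)\mid\|x\|\le t\}$

the Euclidean norm cone is called the second-order cone

norm balls and cones are convex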

polyhedra

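solution set of finitely many linear inequalities and equalities

$$Ax\preceq b,\qquad Cx=d$$

($A\in\mathbf{R}^{m\times n}$, $C\in\mathbf{R}^{p\times n}$, $\preceq$ is componentwise inequality)

a polyhedron is the intersection of a finite number of halfspaces and hyperplanes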

positive semidefinite cone

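notation:

- $\mathbf{S}^n$ is the set of symmetric $n\times n$ matrices

- $\mathbf{S}^n_+=\{X\in\mathbf{S}^n\mid X\succeq0\}$: positive semidefinite $n\times n$ matrices

$$X\in\mathbf{S}^n_+\iff z^TXz\ge0\text{ for all }z$$

$\mathbf{S}^n_+$ is a convex cone

- $\mathbf{S}^n_{++}=\{X\in\mathbf{S}^n\mid X\succ0\}$: positive definite $n\times n$ matrices

example: $\begin{bmatrix}x&y\\y&z\end{bmatrix}\in\mathbf{S}^2_+$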
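For the $2\times2$ example, $\begin{bmatrix}x&y\\y&z\end{bmatrix}\in\mathbf{S}^2_+$ holds exactly when $x\ge0$, $z\ge0$ and $xz\ge y^2$. The sketch below uses this test on two arbitrarily chosen PSD matrices. It spot-checks that convex combinations and nonnegative multiples remain in the cone; this is a numerical check, not a proof.

```python
def in_psd_cone_2x2(x, y, z):
    """True if the symmetric matrix [[x, y], [y, z]] is positive semidefinite.

    For 2x2 symmetric matrices this is equivalent to x >= 0, z >= 0, x*z >= y**2.
    """
    return x >= 0 and z >= 0 and x * z - y * y >= 0

A = (1.0, 1.0, 1.0)     # [[1, 1], [1, 1]]: eigenvalues 0 and 2
B = (4.0, -1.0, 1.0)    # [[4, -1], [-1, 1]]: determinant 3

# Convex combinations theta*A + (1-theta)*B stay in the cone ...
for k in range(11):
    theta = k / 10
    C = tuple(theta * a + (1 - theta) * b for a, b in zip(A, B))
    assert in_psd_cone_2x2(*C), C
# ... and so do nonnegative multiples (S^2_+ is a cone).
assert in_psd_cone_2x2(*(2.5 * b for b in B))
print(in_psd_cone_2x2(1.0, 2.0, 1.0))   # False: determinant 1 - 4 < 0
```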

operations that preserve convexity

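practical methods for establishing convexity of a set $C$

- apply definition

$$x_1,x_2\in C,\quad0\le\theta\le1\quad\Longrightarrow\quad\theta x_1+(1-\theta)x_2\in C$$

- show that $C$ is obtained from simple convex sets (hyperplanes, halfspaces, norm balls, ...) by operations that preserve convexity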

- intersection

- affine function

- perspective function

- linear-fractional function

intersection

the intersection of (any number of) convex sets is convex

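example:

$$S=\{x\in\mathbf{R}^m\mid|p(t)|\le1\text{ for }|t|\le\pi/3\}$$

where $p(t)=x_1\cos t+x_2\cos2t+\cdots+x_m\cos mt$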

affine function

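suppose $f:\mathbf{R}^n\to\mathbf{R}^m$ is affine ($f(x)=Ax+b$ with $A\in\mathbf{R}^{m\times n}$, $b\in\mathbf{R}^m$)

- the image of a convex set under $f$ is convex

$$S\subseteq\mathbf{R}^n\text{ convex}\implies f(S)=\{f(x)\mid x\in S\}\text{ convex}$$

- the inverse image $f^{-1}(C)$ of a convex set under $f$ is convex

$$C\subseteq\mathbf{R}^m\text{ convex}\implies f^{-1}(C)=\{x\in\mathbf{R}^n\mid f(x)\in C\}\text{ convex}$$

examples:

- scaling, translation, projection

- solution set of linear matrix inequality $\{x\mid x_1A_1+\cdots+x_mA_m\preceq B\}$ (with $A_i,B\in\mathbf{S}^p$)

- hyperbolic cone $\{x\mid x^TPx\le(c^Tx)^2,\ c^Tx\ge0\}$ (with $P\in\mathbf{S}^n_+$)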

perspective and linear-fractional function

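perspective function $P:\mathbf{R}^{n+1}\to\mathbf{R}^n$:

$$P(x,t)=x/t,\qquad\mathbf{dom}\,P=\{(x,t)\mid t>0\}$$

images and inverse images of convex sets under perspective are convex

linear-fractional function $f:\mathbf{R}^n\to\mathbf{R}^m$:

$$f(x)=\frac{Ax+b}{c^Tx+d},\qquad\mathbf{dom}\,f=\{x\mid c^Tx+d>0\}$$

images and inverse images of convex sets under linear-fractional functions are convex

example of a linear-fractional function

$$f(x)=\frac{1}{x_1+x_2+1}x$$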

generalized inequalities

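a convex cone $K\subseteq\mathbf{R}^n$ is a proper cone if

- $K$ is closed (contains its boundary)

- $K$ is solid (has nonempty interior)

- $K$ is pointed (contains no line)

examples

- nonnegative orthant $K=\mathbf{R}^n_+=\{x\in\mathbf{R}^n\mid x_i\ge0,\ i=1,\dots,n\}$

- positive semidefinite cone $K=\mathbf{S}^n_+$

- nonnegative polynomials on $[0,1]$:

$$K=\{x\in\mathbf{R}^n\mid x_1+x_2t+x_3t^2+\cdots+x_nt^{n-1}\ge0\text{ for }t\in[0,1]\}$$

generalized inequality defined by a proper cone $K$:

$$x\preceq_Ky\iff y-x\in K,\qquad x\prec_Ky\iff y-x\in\mathbf{int}\,K$$

examples

- componentwise inequality ($K=\mathbf{R}^n_+$): $x\preceq_{\mathbf{R}^n_+}y\iff x_i\le y_i,\ i=1,\dots,n$

- matrix inequality ($K=\mathbf{S}^n_+$): $X\preceq_{\mathbf{S}^n_+}Y\iff Y-X$ positive semidefinite

these two types are so common that we drop the subscript in $\preceq_K$

properties: many properties of $\preceq_K$ are similar to those of $\le$ on $\mathbf{R}$, e.g.,

$$x\preceq_Ky,\ u\preceq_Kv\implies x+u\preceq_Ky+v$$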

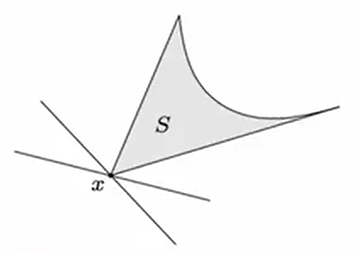

minimum and minimal elements

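$\preceq_K$ is not in general a linear ordering: we can have $x\not\preceq_Ky$ and $y\not\preceq_Kx$

$x\in S$ is the minimum element of $S$ with respect to $\preceq_K$ if

$$y\in S\implies x\preceq_Ky$$

$x\in S$ is a minimal element of $S$ with respect to $\preceq_K$ if

$$y\in S,\ y\preceq_Kx\implies y=x$$

example ($K=\mathbf{R}^2_+$)

$x_1$ is the minimum element of $S_1$

$x_2$ is a minimal element of $S_2$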

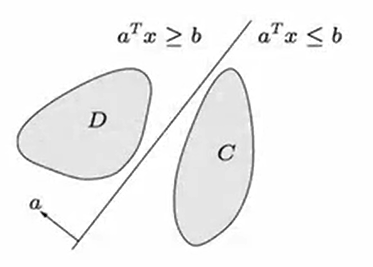
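For $K=\mathbf{R}^2_+$ and a finite set, both definitions can be checked by brute force. In the sketch below, `S1` and `S2` are hypothetical point sets, not the sets drawn in the figure. $S_1$ has a minimum element, while $S_2$ has several minimal elements but no minimum element.

```python
def preceq(x, y):
    """Componentwise inequality x <= y, i.e. y - x lies in the nonnegative orthant."""
    return all(xi <= yi for xi, yi in zip(x, y))

def minimum_element(S):
    """Return x in S with x <= y for every y in S, or None if no such element exists."""
    for x in S:
        if all(preceq(x, y) for y in S):
            return x
    return None

def minimal_elements(S):
    """Elements x of S such that (y in S and y <= x) implies y == x."""
    return [x for x in S if not any(preceq(y, x) and y != x for y in S)]

S1 = [(1, 1), (2, 3), (3, 1), (1, 4)]
S2 = [(1, 3), (2, 2), (3, 1), (3, 3), (2, 4)]
print(minimum_element(S1), minimal_elements(S1))   # (1, 1) [(1, 1)]
print(minimum_element(S2), minimal_elements(S2))   # None [(1, 3), (2, 2), (3, 1)]
```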

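separating hyperplane theorem

if $C$ and $D$ are disjoint convex sets, then there exist $a\ne0$, $b$ such that

$$a^Tx\le b\text{ for }x\in C,\qquad a^Tx\ge b\text{ for }x\in D$$

the hyperplane $\{x\mid a^Tx=b\}$ separates $C$ and $D$

strict separation requires additional assumptions (e.g., $C$ is closed, $D$ is a singleton)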

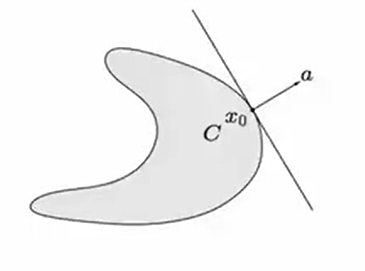

supporting hyperplane theorem

supporting hyperplane to set $C$ at boundary point $x_0$:

$$\{x\mid a^Tx=a^Tx_0\}$$

where $a\ne0$ and $a^Tx\le a^Tx_0$ for all $x\in C$

supporting hyperplane theorem: if $C$ is convex, then there exists a supporting hyperplane at every boundary point of $C$

dual cones and generalized inequalities

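dual cone of a cone $K$:

$$K^*=\{y\mid y^Tx\ge0\text{ for all }x\in K\}$$

examples

- $K=\mathbf{R}^n_+$: $K^*=\mathbf{R}^n_+$

- $K=\mathbf{S}^n_+$: $K^*=\mathbf{S}^n_+$

- $K=\{(x,t)\mid\|x\|_2\le t\}$: $K^*=\{(x,t)\mid\|x\|_2\le t\}$

- $K=\{(x,t)\mid\|x\|_1\le t\}$: $K^*=\{(x,t)\mid\|x\|_\infty\le t\}$

the first three examples are self-dual cones

dual cones of proper cones are proper, hence define generalized inequalities:

$$y\succeq_{K^*}0\iff y^Tx\ge0\text{ for all }x\succeq_K0$$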

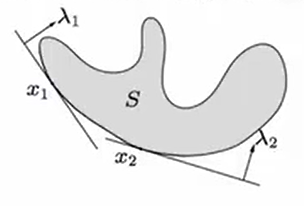

minimum and minimal elements via dual inequality

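minimum element w.r.t. $\preceq_K$

$x$ is the minimum element of $S$ if and only if for all $\lambda\succ_{K^*}0$, $x$ is the unique minimizer of $\lambda^Tz$ over $S$

minimal element w.r.t. $\preceq_K$

- if $x$ minimizes $\lambda^Tz$ over $S$ for some $\lambda\succ_{K^*}0$, then $x$ is minimal

- if $x$ is a minimal element of a convex set $S$, then there exists a nonzero $\lambda\succeq_{K^*}0$ such that $x$ minimizes $\lambda^Tz$ over $S$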

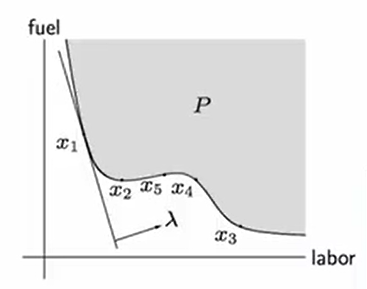

optimal production frontier

- different production methods use different amounts of resources

- production set $P$: resource vectors $x$ for all possible production methods

- efficient (Pareto optimal) methods correspond to resource vectors $x$ that are minimal w.r.t. $\mathbf{R}^n_+$

example ($n=2$)

$x_1,x_2,x_3$ are efficient; $x_4,x_5$ are not
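The scalarization property (a minimizer of $\lambda^Tx$ over $P$ with $\lambda\succ0$ is minimal, i.e., efficient) can be illustrated on a small production set. The (fuel, labor) vectors below are invented for illustration. They are not the points $x_1,\dots,x_5$ of the figure.

```python
def weighted_cost(lam, x):
    """lambda^T x: total cost of resource vector x at (positive) prices lam."""
    return sum(p * xi for p, xi in zip(lam, x))

def is_minimal(x, P):
    """True if no other y in P uses at most as much of every resource as x."""
    return not any(y != x and all(yi <= xi for yi, xi in zip(y, x)) for y in P)

# Hypothetical production set: (fuel, labor) required by five methods.
P = [(1.0, 5.0), (2.0, 3.0), (4.0, 1.5), (3.0, 4.0), (5.0, 2.0)]
efficient = [x for x in P if is_minimal(x, P)]
print("efficient:", efficient)

# Each price vector lam > 0 selects a minimizer of lam^T x, which is efficient.
for lam in [(1.0, 1.0), (1.0, 3.0), (3.0, 1.0)]:
    best = min(P, key=lambda x: weighted_cost(lam, x))
    print(lam, "->", best, best in efficient)
```

The converse needs convexity: for a nonconvex production set, some efficient points may not minimize $\lambda^Tx$ for any $\lambda\succ0$.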

Convex functions

- basic properties and examples

- operations that preserve convexity

- the conjugate function

- quasiconvex functions

- log-concave and log-convex functions

- convexity with respect to generalized inequalities

definition

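$f:\mathbf{R}^n\to\mathbf{R}$ is convex if $\mathbf{dom}\,f$ is a convex set and

$$f(\theta x+(1-\theta)y)\le\theta f(x)+(1-\theta)f(y)$$

for all $x,y\in\mathbf{dom}\,f$, $0\le\theta\le1$

- $f$ is concave if $-f$ is convex

- $f$ is strictly convex if $\mathbf{dom}\,f$ is convex and

$$f(\theta x+(1-\theta)y)<\theta f(x)+(1-\theta)f(y)$$

for $x,y\in\mathbf{dom}\,f$, $x\ne y$, $0<\theta<1$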
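The definition suggests a simple numerical test: sample points of $\mathbf{dom}\,f$ and values of $\theta$, and look for a violation of the inequality. A violation disproves convexity, while finding none is only evidence. The sketch below applies the test to functions from the examples on $\mathbf{R}$; the sample range, tolerance and seed are arbitrary choices.

```python
import math
import random

def violates_convexity(f, xs, thetas):
    """Search for (x, y, theta) with f(theta x + (1-theta) y) > theta f(x) + (1-theta) f(y).

    Returns the first violation found, or None. None is evidence, not a proof:
    only finitely many points are checked.
    """
    for x in xs:
        for y in xs:
            for t in thetas:
                lhs = f(t * x + (1 - t) * y)
                rhs = t * f(x) + (1 - t) * f(y)
                if lhs > rhs + 1e-12 * (1 + abs(rhs)):   # small relative tolerance for rounding
                    return (x, y, t)
    return None

random.seed(0)
xs = [random.uniform(0.1, 5.0) for _ in range(40)]   # sample points in R_++
thetas = [i / 10 for i in range(11)]
print(violates_convexity(lambda x: x * math.log(x), xs, thetas))   # negative entropy
print(violates_convexity(math.sqrt, xs, thetas) is not None)       # sqrt is concave
```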

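examples on $\mathbf{R}$

convex:

- affine: $ax+b$ on $\mathbf{R}$, for any $a,b\in\mathbf{R}$

- exponential: $e^{ax}$, for any $a\in\mathbf{R}$

- powers: $x^\alpha$ on $\mathbf{R}_{++}$, for $\alpha\ge1$ or $\alpha\le0$

- powers of absolute value: $|x|^p$ on $\mathbf{R}$, for $p\ge1$

- negative entropy: $x\log x$ on $\mathbf{R}_{++}$

concave:

- affine: $ax+b$ on $\mathbf{R}$, for any $a,b\in\mathbf{R}$

- powers: $x^\alpha$ on $\mathbf{R}_{++}$, for $0\le\alpha\le1$

- logarithm: $\log x$ on $\mathbf{R}_{++}$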

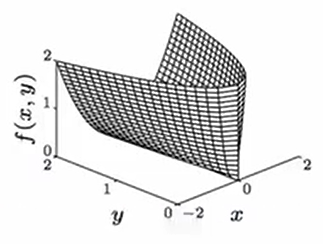

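examples on $\mathbf{R}^n$ and $\mathbf{R}^{m\times n}$

affine functions are convex and concave; all norms are convex

examples on $\mathbf{R}^n$

- affine function $f(x)=a^Tx+b$

- norms: $\|x\|_p=\left(\sum_{i=1}^n|x_i|^p\right)^{1/p}$ for $p\ge1$; $\|x\|_\infty=\max_k|x_k|$

examples on $\mathbf{R}^{m\times n}$ ($m\times n$ matrices)

- affine function

$$f(X)=\mathbf{tr}(A^TX)+b=\sum_{i=1}^m\sum_{j=1}^nA_{ij}X_{ij}+b$$

- spectral (maximum singular value) norm

$$f(X)=\|X\|_2=\sigma_{\max}(X)=\left(\lambda_{\max}(X^TX)\right)^{1/2}$$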

restriction of a convex function to a line

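$f:\mathbf{R}^n\to\mathbf{R}$ is convex if and only if the function $g:\mathbf{R}\to\mathbf{R}$,

$$g(t)=f(x+tv),\qquad\mathbf{dom}\,g=\{t\mid x+tv\in\mathbf{dom}\,f\}$$

is convex (in $t$) for any $x\in\mathbf{dom}\,f$, $v\in\mathbf{R}^n$

can check convexity of $f$ by checking convexity of functions of one variable

example: $f:\mathbf{S}^n\to\mathbf{R}$ with $f(X)=\log\det X$, $\mathbf{dom}\,f=\mathbf{S}^n_{++}$

$$g(t)=\log\det(X+tV)=\log\det X+\sum_{i=1}^n\log(1+t\lambda_i)$$

where $\lambda_i$ are the eigenvalues of $X^{-1/2}VX^{-1/2}$

$g$ is concave in $t$ (for any choice of $X\succ0$, $V$); hence $f$ is concave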
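The line-restriction argument can be spot-checked numerically for $n=2$. The sketch evaluates $g(t)=\log\det(X+tV)$ for one arbitrarily chosen pair $X\succ0$, $V\in\mathbf{S}^2$, with each symmetric matrix $\begin{bmatrix}a&b\\b&c\end{bmatrix}$ stored as the tuple $(a,b,c)$. It then verifies that the second differences of $g$ are negative on an interval where $X+tV\succ0$.

```python
import math

def logdet_2x2(a, b, c):
    """log det [[a, b], [b, c]]; the matrix must be positive definite."""
    det = a * c - b * b
    if a <= 0 or det <= 0:
        raise ValueError("matrix is not positive definite")
    return math.log(det)

# Illustrative choices: X is positive definite, V is an arbitrary symmetric direction.
X = (2.0, 0.5, 1.0)
V = (1.0, -1.0, 0.5)

def g(t):
    """Restriction of f(X) = log det X to the line X + tV."""
    a, b, c = (xi + t * vi for xi, vi in zip(X, V))
    return logdet_2x2(a, b, c)

# For a concave g, the second difference g(t+h) - 2 g(t) + g(t-h) is <= 0.
h = 1e-2
ts = [k * 0.05 for k in range(-8, 9)]   # t in [-0.4, 0.4], where X + tV stays positive definite
second_diffs = [g(t + h) - 2 * g(t) + g(t - h) for t in ts]
print(all(d < 0 for d in second_diffs))
```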

extended-value extension

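(Chinese term for extended-value extension: 拓展值延伸)

extended-value extension $\tilde f$ of $f$ is

$$\tilde f(x)=f(x),\ x\in\mathbf{dom}\,f,\qquad\tilde f(x)=\infty,\ x\notin\mathbf{dom}\,f$$

often simplifies notation; for example, the condition

$$0\le\theta\le1\implies\tilde f(\theta x+(1-\theta)y)\le\theta\tilde f(x)+(1-\theta)\tilde f(y)$$

(as an inequality in $\mathbf{R}\cup\{\infty\}$) means the same as the two conditions

- $\mathbf{dom}\,f$ is convex

- for $x,y\in\mathbf{dom}\,f$, $0\le\theta\le1\implies f(\theta x+(1-\theta)y)\le\theta f(x)+(1-\theta)f(y)$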

first-order condition

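$f$ is differentiable if $\mathbf{dom}\,f$ is open and the gradient

$$\nabla f(x)=\left(\frac{\partial f(x)}{\partial x_1},\frac{\partial f(x)}{\partial x_2},\dots,\frac{\partial f(x)}{\partial x_n}\right)$$

exists at each $x\in\mathbf{dom}\,f$

1st-order condition: differentiable $f$ with convex domain is convex if and only if

$$f(y)\ge f(x)+\nabla f(x)^T(y-x)\quad\text{for all }x,y\in\mathbf{dom}\,f$$

the first-order approximation of $f$ is a global underestimator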
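The first-order condition can be checked on the quadratic-over-linear function $f(x,y)=x^2/y$ (one of the second-order examples), whose gradient is $\nabla f(x,y)=(2x/y,\,-x^2/y^2)$. With $p=(x,y)$ and $q=(x',y')$, the gap $f(q)-f(p)-\nabla f(p)^T(q-p)$ simplifies to $\left(x'/\sqrt{y'}-x\sqrt{y'}/y\right)^2\ge0$. The sketch confirms this numerically on random points; the seed and ranges are arbitrary.

```python
import random

def f(x, y):
    """Quadratic-over-linear function x^2 / y on dom f = {(x, y) : y > 0}."""
    return x * x / y

def grad_f(x, y):
    return (2 * x / y, -x * x / (y * y))

def first_order_gap(p, q):
    """f(q) - [f(p) + grad f(p)^T (q - p)]; nonnegative for convex f."""
    gx, gy = grad_f(*p)
    return f(*q) - (f(*p) + gx * (q[0] - p[0]) + gy * (q[1] - p[1]))

random.seed(1)
pts = [(random.uniform(-3, 3), random.uniform(0.1, 3)) for _ in range(200)]
gaps = [first_order_gap(p, q) for p in pts for q in pts]
print(min(gaps) >= -1e-9)   # tolerance only absorbs floating-point rounding
```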

second-order condition

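$f$ is twice differentiable if $\mathbf{dom}\,f$ is open and the Hessian $\nabla^2f(x)\in\mathbf{S}^n$,

$$\nabla^2f(x)_{ij}=\frac{\partial^2f(x)}{\partial x_i\partial x_j},\qquad i,j=1,\dots,n,$$

exists at each $x\in\mathbf{dom}\,f$

2nd-order conditions: for twice differentiable $f$ with convex domain

- $f$ is convex if and only if $\nabla^2f(x)\succeq0$ for all $x\in\mathbf{dom}\,f$

- if $\nabla^2f(x)\succ0$ for all $x\in\mathbf{dom}\,f$, then $f$ is strictly convex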

examples

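quadratic function: $f(x)=(1/2)x^TPx+q^Tx+r$ (with $P\in\mathbf{S}^n$)

$$\nabla f(x)=Px+q,\qquad\nabla^2f(x)=P$$

convex if $P\succeq0$

least-squares objective: $f(x)=\|Ax-b\|_2^2$

$$\nabla f(x)=2A^T(Ax-b),\qquad\nabla^2f(x)=2A^TA$$

convex (for any $A$)

quadratic-over-linear: $f(x,y)=x^2/y$

$$\nabla^2f(x,y)=\frac{2}{y^3}\begin{bmatrix}y\\-x\end{bmatrix}\begin{bmatrix}y\\-x\end{bmatrix}^T\succeq0$$

convex for $y>0$

log-sum-exp: $f(x)=\log\sum_{k=1}^n\exp x_k$ is convex

$$\nabla^2f(x)=\frac{1}{\mathbf{1}^Tz}\mathbf{diag}(z)-\frac{1}{(\mathbf{1}^Tz)^2}zz^T\qquad(z_k=\exp x_k)$$

to show $\nabla^2f(x)\succeq0$, we must verify that $v^T\nabla^2f(x)v\ge0$ for all $v$:

$$v^T\nabla^2f(x)v=\frac{\left(\sum_kz_kv_k^2\right)\left(\sum_kz_k\right)-\left(\sum_kv_kz_k\right)^2}{\left(\sum_kz_k\right)^2}\ge0$$

since $\left(\sum_kv_kz_k\right)^2\le\left(\sum_kz_kv_k^2\right)\left(\sum_kz_k\right)$ (from the Cauchy-Schwarz inequality applied to the vectors with components $\sqrt{z_k}\,v_k$ and $\sqrt{z_k}$)

geometric mean: $f(x)=\left(\prod_{k=1}^nx_k\right)^{1/n}$ on $\mathbf{R}^n_{++}$ is concave (similar proof as for log-sum-exp)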

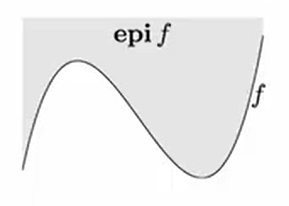
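The quadratic-form expression for log-sum-exp can be evaluated directly. The sketch below computes $v^T\nabla^2f(x)v$ with that formula at random points (seed and ranges are arbitrary). It first shifts $x$ by $\max_kx_k$ so that $\exp$ cannot overflow; the shift multiplies every $z_k$ by the same constant, which cancels in the ratio. The direction $v=\mathbf{1}$ gives zero curvature, since $f(x+t\mathbf{1})=f(x)+t$.

```python
import math
import random

def lse(x):
    """log(sum_k exp(x_k)), computed stably by factoring out max_k x_k."""
    m = max(x)
    return m + math.log(sum(math.exp(xi - m) for xi in x))

def lse_hessian_quadform(x, v):
    """v^T (Hessian of lse at x) v, using
    ((sum_k z_k v_k^2)(sum_k z_k) - (sum_k v_k z_k)^2) / (sum_k z_k)^2 with z_k = exp(x_k)."""
    m = max(x)
    z = [math.exp(xi - m) for xi in x]   # the common factor exp(-m) cancels in the ratio
    s = sum(z)
    s_zv2 = sum(zi * vi * vi for zi, vi in zip(z, v))
    s_zv = sum(zi * vi for zi, vi in zip(z, v))
    return (s_zv2 * s - s_zv * s_zv) / (s * s)

random.seed(2)
samples = []
for _ in range(1000):
    x = [random.uniform(-50, 50) for _ in range(4)]
    v = [random.gauss(0, 1) for _ in range(4)]
    samples.append(lse_hessian_quadform(x, v))
print(min(samples) >= -1e-12)
print(lse_hessian_quadform([1.0, 2.0, 3.0], [1.0, 1.0, 1.0]))   # direction in which lse is affine
```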

epigraph and sublevel set

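(Chinese terms: epigraph 上境图; sublevel set 下水平集)

$\alpha$-sublevel set of $f:\mathbf{R}^n\to\mathbf{R}$:

$$C_\alpha=\{x\in\mathbf{dom}\,f\mid f(x)\le\alpha\}$$

sublevel sets of convex functions are convex (the converse is false)

epigraph of $f:\mathbf{R}^n\to\mathbf{R}$:

$$\mathbf{epi}\,f=\{(x,t)\in\mathbf{R}^{n+1}\mid x\in\mathbf{dom}\,f,\ f(x)\le t\}$$

$f$ is convex if and only if $\mathbf{epi}\,f$ is a convex set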

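Jensen's inequality

basic inequality: if $f$ is convex, then for $0\le\theta\le1$,

$$f(\theta x+(1-\theta)y)\le\theta f(x)+(1-\theta)f(y)$$

extension: if $f$ is convex, then

$$f(\mathbf{E}\,z)\le\mathbf{E}\,f(z)$$

for any random variable $z$

the basic inequality is the special case with discrete distribution

$$\mathbf{prob}(z=x)=\theta,\qquad\mathbf{prob}(z=y)=1-\theta$$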
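Jensen's inequality for a discrete distribution is easy to confirm numerically. The sketch uses a hypothetical four-point distribution and three convex functions from the earlier examples ($e^u$, $u^2$, $-\log u$).

```python
import math

def expectation(values, probs, f=lambda u: u):
    """E f(z) for a discrete z with prob(z = values[i]) = probs[i]."""
    return sum(p * f(v) for v, p in zip(values, probs))

# Hypothetical discrete distribution (probabilities sum to 1).
values = [0.5, 1.0, 2.0, 4.0]
probs = [0.1, 0.4, 0.3, 0.2]

convex_fns = {"exp": math.exp, "square": lambda u: u * u, "-log": lambda u: -math.log(u)}
for name, f in convex_fns.items():
    lhs = f(expectation(values, probs))   # f(E z)
    rhs = expectation(values, probs, f)   # E f(z)
    print(f"{name}: f(E z) = {lhs:.4f} <= E f(z) = {rhs:.4f} -> {lhs <= rhs}")
```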

operations that preserve convexity

practical methods for establishing convexity of a function

- verify the definition (often simplified by restricting to a line<1>)

- for twice differentiable functions, show $\nabla^2f(x)\succeq0$

- show that $f$ is obtained from simple convex functions by operations that preserve convexity

- nonnegative weighted sum

- composition with affine function

- pointwise maximum or supremum

- composition

- minimization

- perspective

<1>: A function is convex if and only if it is convex when restricted to every line that intersects its domain. "Restricting a function to a line" simply means drawing a line in the domain of that function and evaluating the function along that line.

positive weighted sum & composition with affine function

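nonnegative multiple: $\alpha f$ is convex if $f$ is convex, $\alpha\ge0$

sum: $f_1+f_2$ is convex if $f_1,f_2$ are convex (extends to infinite sums and integrals)

composition with affine function: $f(Ax+b)$ is convex if $f$ is convex

examples:

- log barrier for linear inequalities

$$f(x)=-\sum_{i=1}^m\log(b_i-a_i^Tx),\qquad\mathbf{dom}\,f=\{x\mid a_i^Tx<b_i,\ i=1,\dots,m\}$$

- (any) norm of affine function: $f(x)=\|Ax+b\|$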

pointwise maximum

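if $f_1,\dots,f_m$ are convex, then $f(x)=\max\{f_1(x),\dots,f_m(x)\}$ is convex

examples

- piecewise-linear function: $f(x)=\max_{i=1,\dots,m}(a_i^Tx+b_i)$ is convex

- sum of $r$ largest components of $x\in\mathbf{R}^n$:

$$f(x)=x_{[1]}+x_{[2]}+\cdots+x_{[r]}$$

is convex ($x_{[i]}$ is the $i$th largest component of $x$)

proof:

$$f(x)=\max\{x_{i_1}+x_{i_2}+\cdots+x_{i_r}\mid1\le i_1<i_2<\cdots<i_r\le n\}$$

i.e., $f$ is the pointwise maximum of $n!/(r!(n-r)!)$ linear functions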
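The proof writes $f$ as a maximum of $\binom{n}{r}$ linear functions. The sketch below computes $f$ in two ways, by sorting and as that pointwise maximum over index subsets via `itertools.combinations`, and checks that they agree. The input vector is arbitrary.

```python
from itertools import combinations

def sum_r_largest(x, r):
    """f(x) = x_[1] + ... + x_[r]: sort in decreasing order and add the first r entries."""
    return sum(sorted(x, reverse=True)[:r])

def sum_r_largest_as_max(x, r):
    """The same f written as a pointwise maximum of linear functions
    x_{i1} + ... + x_{ir} over all index sets i1 < ... < ir."""
    return max(sum(x[i] for i in idx) for idx in combinations(range(len(x)), r))

x = [3.0, -1.0, 4.0, 1.5, -2.0]
print(sum_r_largest(x, 2), sum_r_largest_as_max(x, 2))   # 7.0 7.0
```

Sorting costs $O(n\log n)$, whereas the max-of-linear-functions form enumerates $\binom{n}{r}$ subsets. That form is useful for the convexity argument, not for computation.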

pointwise supremum

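(supremum: least upper bound)

if $f(x,y)$ is convex in $x$ for each $y\in\mathcal{A}$, then

$$g(x)=\sup_{y\in\mathcal{A}}f(x,y)$$

is convex

examples

- support function of a set $C$: $S_C(x)=\sup_{y\in C}y^Tx$ is convex

- distance to farthest point in a set $C$:

$$f(x)=\sup_{y\in C}\|x-y\|$$

- maximum eigenvalue of symmetric matrix: for $X\in\mathbf{S}^n$,

$$\lambda_{\max}(X)=\sup_{\|y\|_2=1}y^TXy$$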

composition with scalar functions

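composition of $g:\mathbf{R}^n\to\mathbf{R}$ and $h:\mathbf{R}\to\mathbf{R}$:

$$f(x)=h(g(x))$$

$f$ is convex if:

$g$ convex, $h$ convex, $\tilde h$ nondecreasing;

$g$ concave, $h$ convex, $\tilde h$ nonincreasing

- proof (for $n=1$, differentiable $g,h$)

$$f''(x)=h''(g(x))g'(x)^2+h'(g(x))g''(x)$$

- note: monotonicity must hold for the extended-value extension $\tilde h$

examples

- $\exp g(x)$ is convex if $g$ is convex

- $1/g(x)$ is convex if $g$ is concave and positive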

vector composition

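composition of $g:\mathbf{R}^n\to\mathbf{R}^k$ and $h:\mathbf{R}^k\to\mathbf{R}$:

$$f(x)=h(g(x))=h(g_1(x),g_2(x),\dots,g_k(x))$$

$f$ is convex if

$g_i$ convex, $h$ convex, $\tilde h$ nondecreasing in each argument

$g_i$ concave, $h$ convex, $\tilde h$ nonincreasing in each argument

proof (for $n=1$, differentiable $g,h$)

$$f''(x)=g'(x)^T\nabla^2h(g(x))g'(x)+\nabla h(g(x))^Tg''(x)$$

examples

- $\sum_{i=1}^m\log g_i(x)$ is concave if the $g_i$ are concave and positive

- $\log\sum_{i=1}^m\exp g_i(x)$ is convex if the $g_i$ are convex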

minimization

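(infimum: greatest lower bound; Schur complement: https://blog.csdn.net/sheagu/article/details/115771184)

if $f(x,y)$ is convex in $(x,y)$ and $C$ is a convex set, then

$$g(x)=\inf_{y\in C}f(x,y)$$

is convex

examples

- $f(x,y)=x^TAx+2x^TBy+y^TCy$ with

$$\begin{bmatrix}A&B\\B^T&C\end{bmatrix}\succeq0,\qquad C\succ0$$

minimizing over $y$ gives $g(x)=\inf_yf(x,y)=x^T(A-BC^{-1}B^T)x$; $g$ is convex, hence the Schur complement $A-BC^{-1}B^T\succeq0$

- distance to a set: $\mathbf{dist}(x,S)=\inf_{y\in S}\|x-y\|$ is convex if $S$ is convex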
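In the scalar case ($x,y\in\mathbf{R}$, $A=a$, $B=b$, $C=c>0$) the minimization can be done by hand: $y^\star=-bx/c$ and $g(x)=(a-b^2/c)x^2$. The sketch checks this against a direct evaluation of $f$, using arbitrarily chosen $a,b,c$ with $\begin{bmatrix}a&b\\b&c\end{bmatrix}\succeq0$.

```python
def f(x, y, a, b, c):
    """f(x, y) = a x^2 + 2 b x y + c y^2 (scalar case of x^T A x + 2 x^T B y + y^T C y)."""
    return a * x * x + 2 * b * x * y + c * y * y

def g(x, a, b, c):
    """inf_y f(x, y) for c > 0: the minimizer is y* = -b x / c, giving (a - b^2 / c) x^2."""
    y_star = -b * x / c
    return f(x, y_star, a, b, c)

a, b, c = 2.0, 1.0, 1.0        # [[a, b], [b, c]] is PSD and c > 0
schur = a - b * b / c          # Schur complement A - B C^{-1} B^T (a scalar here)
print(schur, g(3.0, a, b, c), schur * 3.0 ** 2)   # 1.0 9.0 9.0
```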

perspective

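the perspective of a function $f:\mathbf{R}^n\to\mathbf{R}$ is the function $g:\mathbf{R}^n\times\mathbf{R}\to\mathbf{R}$,

$$g(x,t)=tf(x/t),\qquad\mathbf{dom}\,g=\{(x,t)\mid x/t\in\mathbf{dom}\,f,\ t>0\}$$

$g$ is convex if $f$ is convex

examples

- $f(x)=x^Tx$ is convex; hence $g(x,t)=x^Tx/t$ is convex for $t>0$

- negative logarithm $f(x)=-\log x$ is convex; hence relative entropy $g(x,t)=t\log t-t\log x$ is convex on $\mathbf{R}^2_{++}$

- if $f$ is convex, then

$$g(x)=(c^Tx+d)\,f\left((Ax+b)/(c^Tx+d)\right)$$

is convex on $\{x\mid c^Tx+d>0,\ (Ax+b)/(c^Tx+d)\in\mathbf{dom}\,f\}$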

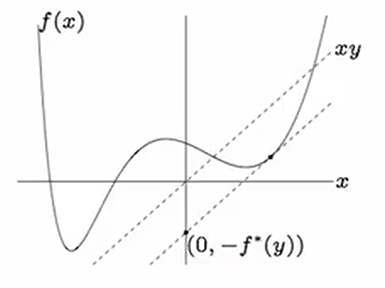

the conjugate function

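the conjugate of a function $f$ is

$$f^*(y)=\sup_{x\in\mathbf{dom}\,f}\left(y^Tx-f(x)\right)$$

- $f^*$ is convex (even if $f$ is not)

- will be useful in chapter 5

examples

- negative logarithm $f(x)=-\log x$

$$f^*(y)=\sup_{x>0}(xy+\log x)=\begin{cases}-1-\log(-y)&y<0\\\infty&\text{otherwise}\end{cases}$$

- strictly convex quadratic $f(x)=(1/2)x^TQx$ with $Q\in\mathbf{S}^n_{++}$

$$f^*(y)=\sup_x\left(y^Tx-\tfrac12x^TQx\right)=\tfrac12y^TQ^{-1}y$$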
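The conjugate of $f(x)=-\log x$ can be approximated by replacing the supremum with a maximum over a grid of $x>0$. The sketch compares that approximation with the closed form for a few $y<0$; the grid and test points are arbitrary. For $y\ge0$ the supremum is $+\infty$, which no finite grid can show.

```python
import math

def conjugate_numeric(f, y, xs):
    """Approximate f*(y) = sup_x (y*x - f(x)) by a maximum over the grid xs."""
    return max(y * x - f(x) for x in xs)

def neg_log_conjugate(y):
    """Closed form for f(x) = -log x: f*(y) = -1 - log(-y) for y < 0, +inf otherwise."""
    return -1.0 - math.log(-y) if y < 0 else math.inf

xs = [k / 1000 for k in range(1, 20001)]   # grid on (0, 20]
for y in [-0.25, -1.0, -2.0]:
    approx = conjugate_numeric(lambda x: -math.log(x), y, xs)
    print(y, approx, neg_log_conjugate(y))
```

The grid is chosen so that the exact maximizer $x=-1/y$ lies on it for the listed $y$; for other $y$ the approximation is only as good as the grid spacing.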

quasiconvex functions

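$f:\mathbf{R}^n\to\mathbf{R}$ is quasiconvex if $\mathbf{dom}\,f$ is convex and the sublevel sets

$$S_\alpha=\{x\in\mathbf{dom}\,f\mid f(x)\le\alpha\}$$

are convex for all $\alpha$

- $f$ is quasiconcave if $-f$ is quasiconvex

- $f$ is quasilinear if it is both quasiconvex and quasiconcave

(Chinese term for quasiconvex: 拟凸)

examples

- $\sqrt{|x|}$ is quasiconvex on $\mathbf{R}$

- $\mathbf{ceil}(x)=\inf\{z\in\mathbf{Z}\mid z\ge x\}$ is quasilinear

- $\log x$ is quasilinear on $\mathbf{R}_{++}$

- $f(x_1,x_2)=x_1x_2$ is quasiconcave on $\mathbf{R}^2_{++}$

- linear-fractional function

$$f(x)=\frac{a^Tx+b}{c^Tx+d},\qquad\mathbf{dom}\,f=\{x\mid c^Tx+d>0\}$$

is quasilinear

- distance ratio

$$f(x)=\frac{\|x-a\|_2}{\|x-b\|_2},\qquad\mathbf{dom}\,f=\{x\mid\|x-a\|_2\le\|x-b\|_2\}$$

is quasiconvex

(Chinese term for distance ratio: 距离比)

internal rate of return

(omitted)

(Chinese term for internal rate of return: 内部收益率)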

properties

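modified Jensen inequality: for quasiconvex $f$

$$0\le\theta\le1\implies f(\theta x+(1-\theta)y)\le\max\{f(x),f(y)\}$$

first-order condition: differentiable $f$ with convex domain is quasiconvex if and only if

$$f(y)\le f(x)\implies\nabla f(x)^T(y-x)\le0$$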

sums of quasiconvex functions are not necessarily quasiconvex
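The modified Jensen inequality gives a sampled test for quasiconvexity. The sketch applies it to $\sqrt{|x|}$ and $\mathbf{ceil}$ from the examples. It then shows why sums can fail, using a counterexample chosen for illustration (it is not from the original notes): $h(x)=\sqrt{|x|}+\sqrt{|x-2|}$ is a sum of two quasiconvex functions, yet $h(1)=2>\max\{h(0),h(2)\}=\sqrt2$. So the sublevel set $\{x\mid h(x)\le1.5\}$ contains $0$ and $2$ but not $1$.

```python
import math

def modified_jensen_holds(f, x, y, thetas):
    """Check f(theta x + (1-theta) y) <= max(f(x), f(y)) for the given thetas."""
    bound = max(f(x), f(y))
    # y + t*(x - y) never leaves [min(x, y), max(x, y)] in floating point for 0 <= t <= 1
    return all(f(y + t * (x - y)) <= bound + 1e-12 for t in thetas)

thetas = [i / 20 for i in range(21)]
pts = [k / 4 for k in range(-12, 13)]   # sample points in [-3, 3]

quasi = {"sqrt|x|": lambda u: math.sqrt(abs(u)), "ceil": math.ceil}
for name, f in quasi.items():
    ok = all(modified_jensen_holds(f, x, y, thetas) for x in pts for y in pts)
    print(name, ok)

# A sum of two quasiconvex functions that is not quasiconvex:
h = lambda u: math.sqrt(abs(u)) + math.sqrt(abs(u - 2))
print(h(0.0), h(1.0), h(2.0))   # h(1) = 2 exceeds max(h(0), h(2)) = sqrt(2)
print(modified_jensen_holds(h, 0.0, 2.0, [0.5]))
```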

log-concave and log-convex functions

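a positive function $f$ is log-concave if $\log f$ is concave:

$$f(\theta x+(1-\theta)y)\ge f(x)^\theta f(y)^{1-\theta}\quad\text{for }0\le\theta\le1$$

$f$ is log-convex if $\log f$ is convex

- powers: $x^a$ on $\mathbf{R}_{++}$ is log-convex for $a\le0$, log-concave for $a\ge0$

- many common probability densities are log-concave, e.g., normal:

$$f(x)=\frac{1}{\sqrt{(2\pi)^n\det\Sigma}}e^{-\frac12(x-\bar x)^T\Sigma^{-1}(x-\bar x)}$$

(this is the density of the multivariate normal distribution with mean $\bar x$ and covariance matrix $\Sigma$; its logarithm is a concave quadratic plus a constant)

- the cumulative Gaussian distribution function $\Phi$ is log-concave

$$\Phi(x)=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^xe^{-u^2/2}\,du$$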
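Here is a numerical spot-check of the log-concavity of $\Phi$. It writes $\Phi(x)=\tfrac12\,\mathrm{erfc}(-x/\sqrt2)$ with Python's `math.erfc`, because the complementary form keeps $\Phi$ accurate far into the left tail. Checking finitely many points is evidence, not a proof; the grid and tolerance are arbitrary.

```python
import math

def Phi(x):
    """Standard normal CDF via the complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def log_concave_on_samples(f, xs, thetas, tol=1e-12):
    """Check log f(theta x + (1-theta) y) >= theta log f(x) + (1-theta) log f(y) on samples."""
    for x in xs:
        for y in xs:
            for t in thetas:
                u = y + t * (x - y)
                lhs = math.log(f(u))
                rhs = t * math.log(f(x)) + (1 - t) * math.log(f(y))
                if lhs < rhs - tol:
                    return False
    return True

xs = [k / 2 for k in range(-20, 21)]   # grid on [-10, 10]
thetas = [i / 10 for i in range(11)]
print(log_concave_on_samples(Phi, xs, thetas))
```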

properties of log-concave functions

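- twice differentiable $f$ with convex domain is log-concave if and only if

$$f(x)\nabla^2f(x)\preceq\nabla f(x)\nabla f(x)^T$$

for all $x\in\mathbf{dom}\,f$

- product of log-concave functions is log-concave

- sum of log-concave functions is not always log-concave

- integration: if $f:\mathbf{R}^n\times\mathbf{R}^m\to\mathbf{R}$ is log-concave, then

$$g(x)=\int f(x,y)\,dy$$

is log-concave (not easy to show)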

consequences of integration property

- convolution of log-concave functions is log-concave

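- if $C\subseteq\mathbf{R}^n$ is convex and $y$ is a random variable with log-concave pdf, then

$$f(x)=\mathbf{prob}(x+y\in C)$$

is log-concave

proof: write $f(x)$ as integral of product of log-concave functions

$$f(x)=\int g(x+y)p(y)\,dy,\qquad g(u)=\begin{cases}1&u\in C\\0&u\notin C\end{cases}$$

$p$ is the pdf of $y$

Note: pdf = probability density function; $\mathbf{prob}(\cdot)$ denotes the probability of an event.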

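example: yield function

$$Y(x)=\mathbf{prob}(x+w\in S)$$

- $x\in\mathbf{R}^n$: nominal parameter values for product

- $w\in\mathbf{R}^n$: random variations of parameters in manufactured product

- $S$: set of acceptable values

if $S$ is convex and $w$ has a log-concave pdf, then

- $Y$ is log-concave

- yield regions $\{x\mid Y(x)\ge\alpha\}$ are convex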

convexity with respect to generalized inequalities

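$f:\mathbf{R}^n\to\mathbf{R}^m$ is $K$-convex if $\mathbf{dom}\,f$ is convex and

$$f(\theta x+(1-\theta)y)\preceq_K\theta f(x)+(1-\theta)f(y)$$

for $x,y\in\mathbf{dom}\,f$, $0\le\theta\le1$

example: $f:\mathbf{S}^m\to\mathbf{S}^m$, $f(X)=X^2$ is $\mathbf{S}^m_+$-convex

proof: for fixed $z\in\mathbf{R}^m$, $z^TX^2z=\|Xz\|_2^2$ is convex in $X$, i.e.,

$$z^T(\theta X+(1-\theta)Y)^2z\le\theta z^TX^2z+(1-\theta)z^TY^2z$$

for $X,Y\in\mathbf{S}^m$, $0\le\theta\le1$

therefore $(\theta X+(1-\theta)Y)^2\preceq\theta X^2+(1-\theta)Y^2$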
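The inequality $(\theta X+(1-\theta)Y)^2\preceq\theta X^2+(1-\theta)Y^2$ can be spot-checked for $2\times2$ symmetric matrices. Expanding the square shows that the gap equals $\theta(1-\theta)(X-Y)^2$, which is PSD because $X-Y$ is symmetric. The sketch below uses pure Python and arbitrarily chosen $X$, $Y$. It computes the gap entrywise and tests it with the $2\times2$ PSD criterion (nonnegative diagonal and determinant).

```python
def matmul2(A, B):
    """Product of 2x2 matrices stored as ((a11, a12), (a21, a22))."""
    return tuple(tuple(sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)) for i in range(2))

def comb(t, A, B):
    """Entrywise t*A + (1-t)*B."""
    return tuple(tuple(t * A[i][j] + (1 - t) * B[i][j] for j in range(2)) for i in range(2))

def is_psd_2x2(M, tol=1e-12):
    """Symmetric 2x2 M is PSD iff both diagonal entries and the determinant are >= 0."""
    a, b, c = M[0][0], M[0][1], M[1][1]
    return a >= -tol and c >= -tol and a * c - b * b >= -tol

def gap(t, X, Y):
    """t X^2 + (1-t) Y^2 - (t X + (1-t) Y)^2, which equals t(1-t)(X - Y)^2."""
    lhs = comb(t, matmul2(X, X), matmul2(Y, Y))
    Z = comb(t, X, Y)
    Z2 = matmul2(Z, Z)
    return tuple(tuple(lhs[i][j] - Z2[i][j] for j in range(2)) for i in range(2))

X = ((1.0, 2.0), (2.0, -1.0))    # arbitrary symmetric matrices
Y = ((0.0, -1.0), (-1.0, 3.0))
print(all(is_psd_2x2(gap(k / 10, X, Y)) for k in range(11)))
```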

This article is from cnblogs (博客园); author: 工大鸣猪. Please credit the original link when reposting: https://www.cnblogs.com/hit-ztx/p/17904123.html
