Kubernetes Controllers for CRDs: A Worked Example and Using Rancher RKE

Preface

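The article "CRD Implementation in Rancher Based on Kubernetes" described and analyzed how Rancher extends Kubernetes for its own features, and explained the implementation in terms of the Kubernetes controller programming paradigm, mainly by analyzing the overall structure of the code. This article instead uses a concrete example to show how to write a custom Kubernetes controller. The example code is at https://github.com/alena1108/kubecon2018. It is the demo from the talk "Writing Kubernetes controllers for CRDs", given by Alena Prokharchyk, Principal Software Engineer at Rancher Labs, at KubeCon 2018, organized by the CNCF. The demo uses Rancher's Cluster custom resource as its example of how to write a controller (it is not how controllers are implemented in Rancher's own code base). Because of time constraints, the talk explained and demonstrated the demo only briefly and skipped many details, so you will run into quite a few problems when actually trying to run it. These problems are described later in this article.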

Custom Resource Structure

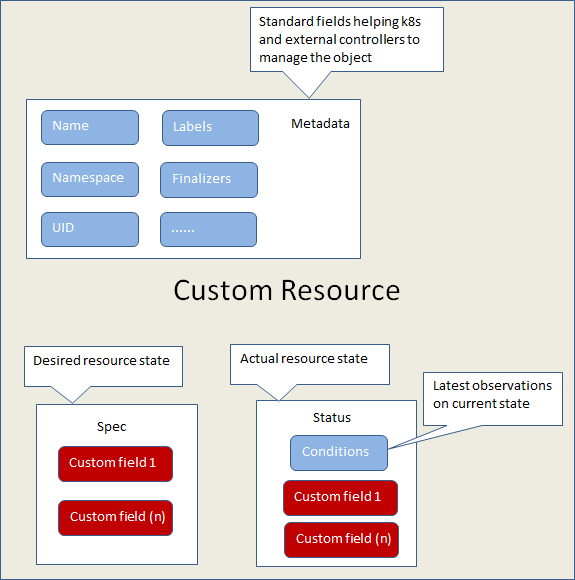

一个典型的Custom Resource结构如下图所示:

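A Custom Resource generally contains Metadata, Spec, and Status. Metadata is the object's metadata, such as name, namespace, and uid. Spec is the state of the resource that the user desires, and it is required. Status is the actual state of the resource; the Conditions in Status represent the latest available observations of the resource's current state. Through the Kubernetes API we can create, update, or delete resource objects, and whenever an object's actual state differs from the state the user desires, the difference has to be handled by driving the object toward the desired state.

For example, a Kubernetes Deployment object can represent an application running in the cluster. When you create a Deployment, you might set its spec to say that three replicas of the application should be running. The Kubernetes system reads the Deployment spec and starts three instances of the application, updating the status to match the spec. If any of those instances fails (a change in status), the Kubernetes system responds to the difference between spec and status by correcting it, in this case by starting a new instance to replace the failed one.

In the figure, the blue parts are Kubernetes fields, while the red Custom field parts are written by the user and are implemented and used by the custom controller.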
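The desired-versus-actual loop described above can be sketched in a few lines of Go. This is a minimal illustration, not Kubernetes code: the `Deployment`, `DeploymentSpec`, and `DeploymentStatus` types and the `reconcile` function are hypothetical stand-ins that model only a replica count.

```go
package main

import "fmt"

// Hypothetical, simplified stand-ins for a resource's Spec and Status;
// they are not the real k8s.io/api types.
type DeploymentSpec struct {
	Replicas int // desired number of instances
}

type DeploymentStatus struct {
	ReadyReplicas int // observed number of running instances
}

type Deployment struct {
	Name   string
	Spec   DeploymentSpec
	Status DeploymentStatus
}

// reconcile compares the desired state (Spec) with the observed state
// (Status) and returns how many instances must be started (positive)
// or stopped (negative) to converge.
func reconcile(d *Deployment) int {
	diff := d.Spec.Replicas - d.Status.ReadyReplicas
	if diff != 0 {
		// A real controller would create or delete Pods here; the sketch
		// assumes the action succeeds and records the new observed state.
		d.Status.ReadyReplicas = d.Spec.Replicas
	}
	return diff
}

func main() {
	d := &Deployment{Name: "web", Spec: DeploymentSpec{Replicas: 3}}
	fmt.Println(reconcile(d)) // prints 3

	// One instance fails: the observed state drifts away from the spec.
	d.Status.ReadyReplicas = 2
	fmt.Println(reconcile(d)) // prints 1
	fmt.Println(reconcile(d)) // prints 0 (already converged)
}
```

The key property is that `reconcile` looks only at the current spec and status, not at the event that triggered it, so running it again after convergence is harmless.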

Using Code Generation

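Why code generation? When implementing Kubernetes CRDs (CustomResourceDefinitions) with client-go, you need generated code. More specifically, client-go requires every runtime.Object type to have deep-copy methods (custom resources written in Go must implement the runtime.Object interface, which includes a DeepCopyObject() method). Code generation provides these methods through deepcopy-gen.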
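To see why a generated deep copy matters, consider a type whose status holds a slice. A plain struct assignment copies only the slice header, so the copy and the original share one backing array, and mutating one silently changes the other (for example, an object held in an informer cache). The sketch below hand-writes the shape of method that deepcopy-gen produces; the `Status` and `Condition` types are simplified, hypothetical versions of this demo's types, and the real generated code lives in `zz_generated.deepcopy.go`.

```go
package main

import "fmt"

// Simplified, hypothetical versions of the demo's status types.
type Condition struct {
	Type   string
	Status string
}

type Status struct {
	AppliedConfig string
	Conditions    []Condition
}

// DeepCopyInto copies the receiver into out, allocating a new slice so
// that out shares no memory with in.
func (in *Status) DeepCopyInto(out *Status) {
	*out = *in // copies scalar fields and the slice header
	if in.Conditions != nil {
		out.Conditions = make([]Condition, len(in.Conditions))
		copy(out.Conditions, in.Conditions) // Condition has only value fields
	}
}

// DeepCopy returns a new, independent copy of the receiver.
func (in *Status) DeepCopy() *Status {
	if in == nil {
		return nil
	}
	out := new(Status)
	in.DeepCopyInto(out)
	return out
}

func main() {
	orig := Status{Conditions: []Condition{{Type: "Ready", Status: "False"}}}

	shallow := orig // shares the Conditions backing array with orig
	deep := orig.DeepCopy()

	shallow.Conditions[0].Status = "True"
	fmt.Println(orig.Conditions[0].Status) // prints True: original mutated
	fmt.Println(deep.Conditions[0].Status) // prints False: deep copy unaffected
}
```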

When defining a CRD, the following code generators can be used:

- deepcopy-gen: creates a method `func (t *T) DeepCopy() *T` for each type T

- client-gen: creates typed clientsets for CustomResource APIGroups

- informer-gen: creates informers for CustomResources, which offer an event-based interface to react to changes of CustomResources on the server

- lister-gen: creates listers for CustomResources, which offer a read-only caching layer for GET and LIST requests.
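The lister's role in the list above, a read-only cache that answers GET and LIST locally instead of calling the API server, can be illustrated with a small thread-safe map. This is a conceptual sketch only: the `Cluster` type and `clusterLister` are hypothetical, and generated listers are actually backed by a `cache.Indexer` that an informer keeps up to date.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Cluster is a hypothetical, minimal object type.
type Cluster struct {
	Name       string
	ConfigPath string
}

// clusterLister is a hypothetical read-only view over a local cache.
// In client-go an informer fills the cache from watch events; here the
// update method plays that role.
type clusterLister struct {
	mu    sync.RWMutex
	items map[string]*Cluster
}

func newClusterLister() *clusterLister {
	return &clusterLister{items: map[string]*Cluster{}}
}

// update is called by the (simulated) informer, never by controller logic.
func (l *clusterLister) update(c *Cluster) {
	l.mu.Lock()
	defer l.mu.Unlock()
	l.items[c.Name] = c
}

// Get serves a GET from memory, without contacting the API server.
func (l *clusterLister) Get(name string) (*Cluster, error) {
	l.mu.RLock()
	defer l.mu.RUnlock()
	c, ok := l.items[name]
	if !ok {
		return nil, fmt.Errorf("cluster %q not found", name)
	}
	return c, nil
}

// List serves a LIST from memory, sorted by name for stable output.
func (l *clusterLister) List() []*Cluster {
	l.mu.RLock()
	defer l.mu.RUnlock()
	out := make([]*Cluster, 0, len(l.items))
	for _, c := range l.items {
		out = append(out, c)
	}
	sort.Slice(out, func(i, j int) bool { return out[i].Name < out[j].Name })
	return out
}

func main() {
	l := newClusterLister()
	l.update(&Cluster{Name: "clusteraws", ConfigPath: "/tmp/cluster_aws.yml"})
	c, err := l.Get("clusteraws")
	fmt.Println(c.Name, err)   // prints clusteraws <nil>
	fmt.Println(len(l.List())) // prints 1
}
```

Because objects returned by a lister are shared with the cache, controller code should modify a DeepCopy of them rather than the returned pointer, which is one practical reason the generated deep-copy methods exist.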

First, here is the directory layout prepared for code generation:

```
[root@localhost kubecon2018]# tree ./pkg
./pkg
└── apis
    └── clusterprovisioner
        ├── register.go
        └── v1alpha1
            ├── doc.go
            ├── register.go
            └── types.go
```

Start with register.go under pkg/apis/clusterprovisioner, which only defines the constant GroupName:

```go
package clusterprovisioner

const (
	GroupName = "clusterprovisioner.rke.io"
)
```

The file pkg/apis/clusterprovisioner/v1alpha1/doc.go contains:

```go
// +k8s:deepcopy-gen=package,register
// Package v1alpha1 is the v1alpha1 version of the API.
// +groupName=clusterprovisioner.rke.io
package v1alpha1
```

The part to focus on is the comments: they contain the tags used during code generation. Tags generally take the form // +tag-name or // +tag-name=value, and there are two kinds:

- Global tags above `package` in doc.go

- Local tags above a type that is processed

Global tags are written in doc.go, typically located at pkg/apis/<apigroup>/<version>/doc.go. In this file:

// +k8s:deepcopy-gen=package,register tells deepcopy-gen to generate deepcopy methods for every type in the package by default. A type that does not need them can opt out with the local tag // +k8s:deepcopy-gen=false.

// +groupName=clusterprovisioner.rke.io defines the API group name.
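The tag syntax described above, `// +tag-name` or `// +tag-name=value`, is simple enough to parse by hand. The sketch below only illustrates the format; the real generators rely on the k8s.io/gengo library for this, and the `parseTags` function here is hypothetical. It splits on the first `=`, so a value such as `package,register` comes through unchanged.

```go
package main

import (
	"fmt"
	"strings"
)

// parseTags extracts "+name" and "+name=value" tags from Go comment lines.
// Non-tag comments are ignored; repeated names accumulate their values.
func parseTags(lines []string) map[string][]string {
	tags := map[string][]string{}
	for _, line := range lines {
		line = strings.TrimSpace(line)
		if !strings.HasPrefix(line, "//") {
			continue
		}
		body := strings.TrimSpace(strings.TrimPrefix(line, "//"))
		if !strings.HasPrefix(body, "+") {
			continue // ordinary comment, e.g. package documentation
		}
		body = strings.TrimPrefix(body, "+")
		name, value := body, ""
		if i := strings.Index(body, "="); i >= 0 {
			name, value = body[:i], body[i+1:]
		}
		tags[name] = append(tags[name], value)
	}
	return tags
}

func main() {
	docGo := []string{
		"// +k8s:deepcopy-gen=package,register",
		"// Package v1alpha1 is the v1alpha1 version of the API.",
		"// +groupName=clusterprovisioner.rke.io",
	}
	tags := parseTags(docGo)
	fmt.Println(tags["k8s:deepcopy-gen"]) // prints [package,register]
	fmt.Println(tags["groupName"])        // prints [clusterprovisioner.rke.io]
}
```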

pkg/apis/clusterprovisioner/v1alpha1/register.go registers the types with the scheme and the API group/version:

```go
package v1alpha1

import (
	"github.com/rancher/kubecon2018/pkg/apis/clusterprovisioner"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/apimachinery/pkg/runtime/schema"
)

var (
	SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)
	AddToScheme   = SchemeBuilder.AddToScheme
)

var SchemeGroupVersion = schema.GroupVersion{Group: clusterprovisioner.GroupName, Version: "v1alpha1"}

func Resource(resource string) schema.GroupResource {
	return SchemeGroupVersion.WithResource(resource).GroupResource()
}

func addKnownTypes(scheme *runtime.Scheme) error {
	scheme.AddKnownTypes(SchemeGroupVersion,
		&Cluster{},
		&ClusterList{},
	)
	metav1.AddToGroupVersion(scheme, SchemeGroupVersion)
	return nil
}
```

Finally, the resource types are defined in pkg/apis/clusterprovisioner/v1alpha1/types.go:

```go
package v1alpha1

import (
	"github.com/rancher/norman/condition"
	"k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

type ClusterConditionType string

const (
	// ClusterConditionReady Cluster ready to serve API (healthy when true, unhealthy when false)
	ClusterConditionReady condition.Cond = "Ready"
	// ClusterConditionProvisioned Cluster is provisioned by RKE
	ClusterConditionProvisioned condition.Cond = "Provisioned"
)

// +genclient
// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
// +resource:path=cluster
// +genclient:noStatus
// +genclient:nonNamespaced
type Cluster struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec   ClusterSpec   `json:"spec"`
	Status ClusterStatus `json:"status"`
}

// +genclient
// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
// +resource:path=kubeconfig
// +genclient:noStatus
// +genclient:nonNamespaced
type Kubeconfig struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec KubeconfigSpec `json:"spec"`
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
// +resource:path=clusters
type ClusterList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata"`

	Items []Cluster `json:"items"`
}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object
// +resource:path=kubeconfigs
type KubeconfigList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata"`

	Items []Kubeconfig `json:"items"`
}

type KubeconfigSpec struct {
	// Tag written without spaces: a tag like `json: "configPath, omitempty"`
	// is not recognized by reflect.StructTag.Get, so encoding/json ignores it.
	ConfigPath string `json:"configPath,omitempty"`
}

type ClusterSpec struct {
	ConfigPath string `json:"configPath,omitempty"`
}

type ClusterStatus struct {
	AppliedConfig string `json:"appliedConfig,omitempty"`
	// Conditions represent the latest available observations of an object's current state:
	// More info: https://github.com/kubernetes/community/blob/master/contributors/devel/api-conventions.md#typical-status-properties
	Conditions []ClusterCondition `json:"conditions,omitempty"`
}

type ClusterCondition struct {
	// Type of cluster condition.
	Type ClusterConditionType `json:"type"`
	// Status of the condition, one of True, False, Unknown.
	Status v1.ConditionStatus `json:"status"`
	// The last time this condition was updated.
	LastUpdateTime string `json:"lastUpdateTime,omitempty"`
	// Last time the condition transitioned from one status to another.
	LastTransitionTime string `json:"lastTransitionTime,omitempty"`
	// The reason for the condition's last transition.
	Reason string `json:"reason,omitempty"`
	// Human-readable message indicating details about last transition
	Message string `json:"message,omitempty"`
}
```

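Taking the Cluster resource as an example, its definition includes Metadata, Spec, and Status, as well as the conditions used in Status. We also see local tags in use here: +genclient asks client-gen to generate a client for the type, +genclient:nonNamespaced makes that client cluster-scoped, and +genclient:noStatus omits the UpdateStatus method.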

Next comes the code generation itself. The generation script, update-codegen.sh under the repository's scripts directory, uses the generators mentioned earlier:

```bash
#!/bin/bash

APIS_DIR="github.com/rancher/kubecon2018/pkg/apis/clusterprovisioner"
VERSION="v1alpha1"
APIS_VERSION_DIR="${APIS_DIR}/${VERSION}"
OUTPUT_DIR="github.com/rancher/kubecon2018/pkg/client"
CLIENTSET_DIR="${OUTPUT_DIR}/clientset"
LISTERS_DIR="${OUTPUT_DIR}/listers"
INFORMERS_DIR="${OUTPUT_DIR}/informers"

echo Generating deepcopy
deepcopy-gen --input-dirs ${APIS_VERSION_DIR} \
  -O zz_generated.deepcopy --bounding-dirs ${APIS_DIR}

echo Generating clientset
client-gen --clientset-name versioned \
  --input-base '' --input ${APIS_VERSION_DIR} \
  --clientset-path ${CLIENTSET_DIR}

echo Generating lister
lister-gen --input-dirs ${APIS_VERSION_DIR} \
  --output-package ${LISTERS_DIR}

echo Generating informer
informer-gen --input-dirs ${APIS_VERSION_DIR} \
  --versioned-clientset-package "${CLIENTSET_DIR}/versioned" \
  --listers-package ${LISTERS_DIR} \
  --output-package ${INFORMERS_DIR}
```

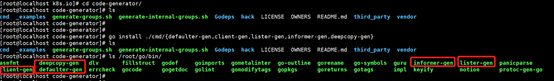

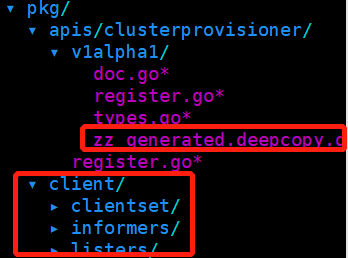

介绍下如何获取这些代码生成工具,在$GOPATH/src路径下创建k8s.io目录,进入k8s.io目录,git clone https://github.com/kubernetes/code-generator.git,进入code-generator目录,选择分支,这里应用release-1.8(对应kubernetes1.8版本系列),然后执行安装命令go install ./cmd/{defaulter-gen,client-gen,lister-gen,informer-gen,deepcopy-gen},在$GOPATH/bin下会生成相应的执行程序,如下图所示:

有了必要的工具后,就可以执行代码生成脚本了,执行update-codegen.sh时可能会发现报错,提示需要依赖kubernetes和apimachinery这两个项目,github上下载这两个项目到$GOPATH/src/k8s.io路径下,并切换为release-1.8分支,再次执行即可,最终得到生成代码后的路径信息如下:

Installing RKE

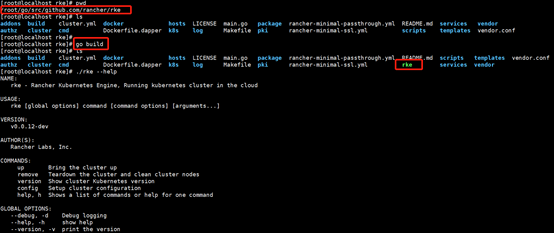

运行demo还需要使用RKE工具,那么什么是RKE?这里还是给出官方的说明:Rancher Kubernetes Engine, an extremely simple, lightning fast Kubernetes installer that works everywhere.

在$GOPATH/src/ github.com/rancher/下git clone https://github.com/rancher/rke.git,go build生成rke执行文件,如下图所示:

Running the Code

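With all the tools in place, we can now run the code. Alena Prokharchyk's demo code is concise and easy to follow, so it is not analyzed in detail here. The main thing to look at is the YAML file for the Cluster CRD. When the code runs, it applies this file with kubectl apply -f, which registers the Cluster resource type: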

```yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clusters.clusterprovisioner.rke.io
spec:
  group: clusterprovisioner.rke.io
  version: v1alpha1
  names:
    kind: Cluster
    plural: clusters
  scope: Cluster
```
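The metadata.name of a CustomResourceDefinition is not free-form: the API server requires it to be `<spec.names.plural>.<spec.group>`, which is why the file above uses clusters.clusterprovisioner.rke.io. A tiny helper that checks this before a manifest is applied might look like the following; `crdName` and `validateCRDName` are hypothetical names, not part of the demo.

```go
package main

import "fmt"

// crdName builds the name the API server expects for a CRD.
func crdName(plural, group string) string {
	return plural + "." + group
}

// validateCRDName reports an error if name does not match plural.group.
func validateCRDName(name, plural, group string) error {
	if want := crdName(plural, group); name != want {
		return fmt.Errorf("CRD name %q must be %q", name, want)
	}
	return nil
}

func main() {
	fmt.Println(validateCRDName("clusters.clusterprovisioner.rke.io", "clusters", "clusterprovisioner.rke.io"))
	// prints <nil>
	fmt.Println(validateCRDName("cluster.clusterprovisioner.rke.io", "clusters", "clusterprovisioner.rke.io"))
	// prints CRD name "cluster.clusterprovisioner.rke.io" must be "clusters.clusterprovisioner.rke.io"
}
```

Note also that `scope: Cluster` makes Cluster objects cluster-scoped, which is consistent with the `+genclient:nonNamespaced` tag in types.go.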

Next, look at the controller code, focusing on the informer callbacks and on what the worker does once an object is in the work queue. The callbacks are:

```go
// ......
controller.syncQueue = util.NewTaskQueue(controller.sync)
controller.clusterInformer.AddEventHandler(cache.ResourceEventHandlerFuncs{
	AddFunc: func(obj interface{}) {
		controller.syncQueue.Enqueue(obj)
	},
	UpdateFunc: func(old, cur interface{}) {
		controller.syncQueue.Enqueue(cur)
	},
})
// ......
```

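The worker is shown below. After getting a key from the work queue, it looks up the cluster and, depending on its DeletionTimestamp metadata field, either removes or adds (provisions) the cluster. Both the remove and the add code paths call RKE.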

```go
func (c *Controller) sync(key string) {
	cluster, err := c.clusterLister.Get(key)
	if err != nil {
		c.syncQueue.Requeue(key, err)
		return
	}
	if cluster.DeletionTimestamp != nil {
		err = c.handleClusterRemove(cluster)
	} else {
		err = c.handleClusterAdd(cluster)
	}
	if err != nil {
		c.syncQueue.Requeue(key, err)
		return
	}
}
```

Running the demo also requires a few code changes to match your own environment:

1) config/rkespec/cluster_aws.yml

```yaml
nodes:
  - address: 10.18.74.175
    user: tht
    role: [controlplane,worker,etcd]
```

2) config/spec/cluster.yml

```yaml
apiVersion: clusterprovisioner.rke.io/v1alpha1
kind: Cluster
metadata:
  name: clusteraws
  labels:
    rke: "true"
spec:
  # example of cluster.yml file for RKE can be found here: https://github.com/rancher/rke/blob/master/cluster.yml
  configPath: /root/go/src/github.com/rancher/kubecon2018/config/rkespec/cluster_aws.yml
```

3) controllers/provisioner/provisioner.go

```go
func removeCluster(cluster *types.Cluster) (err error) {
	cmdName := "/root/go/src/github.com/rancher/rke/rke"
	cmdArgs := []string{"remove", "--force", "--config", cluster.Spec.ConfigPath}
	return executeCommand(cmdName, cmdArgs)
}

func provisionCluster(cluster *types.Cluster) (err error) {
	cmdName := "/root/go/src/github.com/rancher/rke/rke"
	cmdArgs := []string{"up", "--config", cluster.Spec.ConfigPath}
	return executeCommand(cmdName, cmdArgs)
}
```

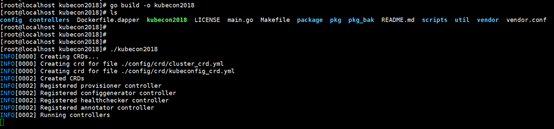

go build之后就可以执行了,在执行程序的--kubeconfig参数中可以使用我们已有的kubernetes环境中的kube config文件。demo主要演示了CRD资源的创建,controller的运行,以及通过kubernetes api创建或删除一个cluster object后,controller的处理,包括RKE的调用(在一个节点上创建一个all-in-one的kubernetes环境)

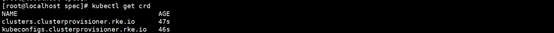

获取crd信息:

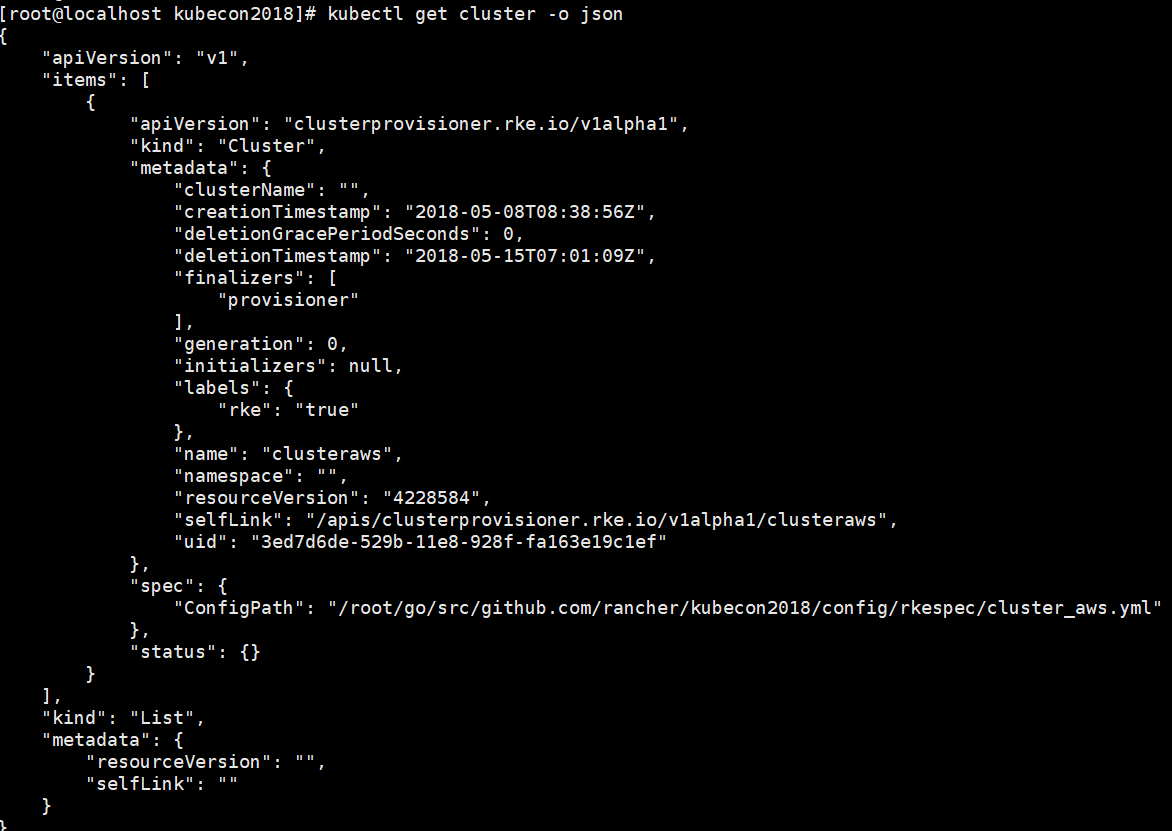

创建的cluster信息:

Problems Encountered

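1) With user set to root in config/rkespec/cluster_aws.yml, calling rke failed with an SSH tunnel error. From what I could find, this problem occurs only on CentOS, where you must use a non-root user and also perform the following steps (a reply from Rancher):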

If you run rke on CentOS 7, you should not use the root user to open the SSH tunnel. You can try the following steps to run rke.

On all nodes:

- update OpenSSH to 7.4 and Docker to v1.12.6

- add "AllowTcpForwarding yes" and "PermitTunnel yes" to /etc/ssh/sshd_config, then restart the sshd service

- make sure the host that runs rke can SSH to all nodes without a password

- run "groupadd docker" to create the docker group if it does not exist

- run "useradd -g docker yourusername" to create the user yourusername with docker as its group

- set MountFlags=shared in docker.service (vi /xxx/xxx/docker.service)

- run "su yourusername" to switch to that user, then restart the docker service, so that in the yourusername session docker.sock is created at /var/run/docker.sock

- in cluster.yml, set the SSH user to yourusername (in setup hosts):

```yaml
nodes:
  - address: x.x.x.x
    ...
    user: yourusername
  - address: x.x.x.x
    ...
    user: yourusername
```

- in cluster.yml, set the kubelet to use the systemd cgroup driver (in setup hosts):

```yaml
services:
  kubelet:
    image: rancher/k8s:v1.8.3-rancher2
    extra_args: {"cgroup-driver":"systemd","fail-swap-on":"false"}
```

Now you can run "rke -d up" to set up your k8s cluster.
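Checking the two sshd settings from the steps above on every node is easy to script. The sketch below is a hypothetical helper, not part of RKE: it parses sshd_config text, skips comments, compares keywords case-insensitively, and keeps the first value seen for each keyword (sshd uses the first obtained value for most keywords). It assumes whitespace-separated keywords and values and does not handle Match blocks or Include directives.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// sshdSettings returns the first value for each keyword (lower-cased)
// found outside comments in the given sshd_config text.
func sshdSettings(config string) map[string]string {
	settings := map[string]string{}
	sc := bufio.NewScanner(strings.NewReader(config))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		fields := strings.Fields(line)
		if len(fields) < 2 {
			continue
		}
		key := strings.ToLower(fields[0])
		if _, seen := settings[key]; !seen {
			settings[key] = strings.ToLower(fields[1])
		}
	}
	return settings
}

// missingTunnelSettings lists the settings from the steps above that are
// not explicitly set to "yes".
func missingTunnelSettings(config string) []string {
	s := sshdSettings(config)
	var missing []string
	for _, k := range []string{"AllowTcpForwarding", "PermitTunnel"} {
		if s[strings.ToLower(k)] != "yes" {
			missing = append(missing, k)
		}
	}
	return missing
}

func main() {
	cfg := `# sample
AllowTcpForwarding yes
#PermitTunnel yes
PermitTunnel no
`
	fmt.Println(missingTunnelSettings(cfg)) // prints [PermitTunnel]
}
```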

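2) The first time I created a Cluster object (kubectl create -f ./config/spec/cluster.yml), it failed because RKE was not set up correctly. I then deleted the object (kubectl delete -f ./config/spec/cluster.yml), which reported success, but the program kept printing "Removing cluster clusteraws" in a loop. After adding log output to the code, the cause turned out to be the error "error removing cluster clusteraws the server does not allow this method on the requested resource (put clusters.clusterprovisioner.rke.io clusteraws)". Running kubectl create -f ./config/spec/cluster.yml again produced the same kind of error. The reason is still unclear.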

3) While error (2) was occurring, the node deployed by RKE kept creating new volumes under /var/lib/docker/volumes/ until the file system was full.

Using Rancher RKE

First, create the configuration file RKE needs. Here an all-in-one environment is deployed onto a single node running CentOS:

```yaml
nodes:
  - address: 10.18.74.175
    user: tht
    ssh_key_path: /home/tht/.ssh/id_rsa
    ssh_key: |-
      -----BEGIN RSA PRIVATE KEY-----
      MIIEowIBAAKCAQEA2OvoeczZdB2/3gvY+sHKVp5AFDBBJ6Gc2TcNqn1PuahtuZR2
      zdV5Q32x2VUz/H2qXkcQVNcfCnWH4j557Aj580acRHzjCPjVJbW5E3++QW8KXWMS
      XP+BfVqE4XuJAlXaK0utBBcPuPvzB7Y71tpFFFWIHZZQs9x1xDwwB3DNouI/JP8H
      dHAICuFm4vZkfpWNvOeAL1hvTCg6okv5ChPEP3Rk83Gq4TfiFDtMF/QrgtkKFTCS
      039/mlxeKpeJENyfzKK2DrmVpFxd1MWrUCVjvV2Ht8fzeOMXqlbccWAthnrlNCbn
      jU0GdThvRKL0azBxkiuNpIs0+oqG0HSVmg99PwIDAQABAoIBAHmfY3wPIAkbuPz9
      dY265ADGv7TSDWX0FiYv2OizU+ULi2HW3PmxbEksC3CIdhpmNwSfIYgACXZqyWJP
      lzqBGeuNtoYr43ufUJrRFdDZ+clkQdJ0ftJHq8ml3AU0p2/4xNcrmflGGNml4fB7
      +3cOcFbjUesM4XjG7fy1plQ1qgZdfXW6rODw1u2aQKt6GDmcw2L2mAUWlWuTre5k
      iZCTuhkdCCgQpeW3ZFZ83h4EYAGhZPXFR4V5Y3WWBnjimfKhdkOB7ajDxWkBoKr7
      fiwArFSo5y3e2RWysDj+wqLX4JZqiUmNHzzlFAHNgPEK/hj5UQMepNxtd4ND8F9p
      y1us/fkCgYEA9pSUxWK10NexNdyRBb4nPjGoqzLayRAu+aLGZ5ZFo8vhr1QCCP8f
      S9tnPXyaT90ufEuylVubo1NFGbtDTWUVxGRc2HgjjpN4uPaXTcD5pof3mDe923co
      1hIOxWSAroXoy5+Y+l0YRq94oS/tI9DJSQ51ORI5xCJn1etjO5TA680CgYEA4TVH
      l+XILaNjs/+OW9IVz0xq8lxxM+8CXPS4QL8hMFZRadmI4XtWteiPlPGujEJF7SKp
      B6C5UiFq1A1IaU9NQ45lBwsq8AjrNl45q/YxJmvAqPuAEnigeN9qA72e3ilasadn
      cVDrqqnZnojkKjZKzY0ZXY7zaJOOjHLEYCX6uTsCgYB8Ar3Ph5VpMxEsxYEqIjga
      T19Euo7OEBWP9w1Ri4H6ns8iHl3nqGdU/0Ms6T2ybMq0OF3YP/pGadqW1ldC1VPd
      MZyAQeugCQrt+xadRDBKUJd1NpOFjKg9AVfsbl9JZo9t2RZW0/shkZ5ZcoERQi/5
      TgwmZ8QloCgYrgl6LZXZAQKBgDHo2OD075QNrb7qV+ZJfMPgL6NekUftJBztrxfK
      Q9SujIRkzU0LRIAz9f4QQZqb5VtUXxltqSRme4JbHz0XcgwStpkFBJMFpvr5jtZp
      TSMyphPNCOkPCqE/AgOqNlcN2yeb7fTS9idwVOYpeEdSmOlM594wHAmFCgZeON8G
      C7aZAoGBAI1DD0bSjdErvb/Aq4PzLxkj/JF5GMbphX9eOJG5RpEuqW6AmKyDFJKp
      1RL/X7Md8MqI5KfsLPUp9N3SWrqln46NfPi0dFZwqAE2/OMQQd5L9kkPrwMnDz1e
      CpPDrwMxRa75FbZ6i1WzpqTb3cvTRrndGC5jrmU5mWbkoOY4oRlR
      -----END RSA PRIVATE KEY-----
    role:
      - controlplane
      - etcd
      - worker
services:
  kubelet:
    extra_args: {"cgroup-driver":"systemd","fail-swap-on":"false"}
```
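In the file above, the single node carries all three roles (controlplane, etcd, worker), which is what makes this an all-in-one deployment. Below is a hedged sketch of a pre-flight check that every role is covered by at least one node. The `node` type and `uncoveredRoles` function are hypothetical, mirror only the address, user, and role fields of cluster.yml, and do not reproduce RKE's own validation.

```go
package main

import "fmt"

// node mirrors a few fields of an RKE cluster.yml nodes entry (hypothetical type).
type node struct {
	Address string
	User    string
	Roles   []string
}

// uncoveredRoles returns the roles, in a fixed order, that no node provides.
func uncoveredRoles(nodes []node) []string {
	covered := map[string]bool{}
	for _, n := range nodes {
		for _, r := range n.Roles {
			covered[r] = true
		}
	}
	var missing []string
	for _, r := range []string{"controlplane", "etcd", "worker"} {
		if !covered[r] {
			missing = append(missing, r)
		}
	}
	return missing
}

func main() {
	allInOne := []node{{Address: "10.18.74.175", User: "tht", Roles: []string{"controlplane", "etcd", "worker"}}}
	fmt.Println(len(uncoveredRoles(allInOne))) // prints 0

	workerOnly := []node{{Address: "10.0.0.2", User: "tht", Roles: []string{"worker"}}}
	fmt.Println(uncoveredRoles(workerOnly)) // prints [controlplane etcd]
}
```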

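Run `./rke --debug up --config ../kubecon2018/config/rkespec/cluster_rke.yml`. The single-node Kubernetes environment was deployed in a short time, which is very convenient. Note, however, that the configuration here is simple and the environment is a single node. RKE can do far more than this, and its GitHub repository has detailed documentation.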

Summary

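Using the demo by Alena Prokharchyk, Principal Software Engineer at Rancher Labs, this article walked through a concrete example of implementing a Kubernetes CRD and writing a custom controller, and took a brief hands-on look at the Rancher RKE tool.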

References

https://blog.openshift.com/kubernetes-deep-dive-code-generation-customresources/

https://kubernetes.io/cn/docs/concepts/overview/working-with-objects/kubernetes-objects/

https://github.com/kubernetes/community/blob/master/contributors/devel/api-conventions.md

Posted on 2018-05-15 14:49 by Hindsight_Tan

浙公网安备 33010602011771号
