Kafka producer with a custom partitioner and a multi-threaded consumer

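To balance load and preserve message ordering, a Kafka producer can apply a distribution strategy that sends each message to a specific partition. The Kafka Java client ships with a default Partitioner that spreads records roughly evenly across the partitions of the target topic (records that carry a key are placed by hashing the key). To control how messages are distributed yourself, there are two options. The first is to specify the partition when creating the ProducerRecord before sending, which applies to a single message. The second is to write your own algorithm based on the key: implement the Partitioner interface and its partition method, then register the class with the producer property props.put("partitioner.class", "com.example.demo.MyPartitioner"). Sample code follows: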

The custom partitioner

    package com.example.demo;

    import java.util.Map;

    import org.apache.kafka.clients.producer.Partitioner;
    import org.apache.kafka.common.Cluster;

    // Routes records by their numeric key; assumes the topic has at least 3 partitions.
    public class MyPartitioner implements Partitioner {

        @Override
        public void configure(Map<String, ?> configs) {
        }

        @Override
        public int partition(String topic, Object key, byte[] keyBytes,
                             Object value, byte[] valueBytes, Cluster cluster) {
            // The key must be a String holding an integer; a null or non-numeric key throws.
            int remainder = Integer.parseInt((String) key) % 3;
            if (remainder == 1)
                return 0;
            else if (remainder == 2)
                return 1;
            else
                return 2;
        }

        @Override
        public void close() {
        }
    }
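To see which keys end up in which partition without starting a broker, the mapping rule can be tried in isolation. The following is a minimal sketch with no Kafka dependency; the class name `PartitionMappingDemo` and its helper methods are hypothetical and only mirror the arithmetic of `MyPartitioner.partition`, assuming keys are the strings "0" to "14" as in the 15-message test run.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper, not part of the Kafka client: replays the routing rule locally.
class PartitionMappingDemo {

    // Same rule as MyPartitioner.partition(): remainder 1 -> 0, remainder 2 -> 1, otherwise 2.
    static int partitionFor(String key) {
        int remainder = Integer.parseInt(key) % 3;
        if (remainder == 1) return 0;
        if (remainder == 2) return 1;
        return 2;
    }

    // Groups the keys "0".."count-1" by the partition they would be sent to.
    static Map<Integer, List<String>> group(int count) {
        Map<Integer, List<String>> byPartition = new TreeMap<>();
        for (int i = 0; i < count; i++) {
            String key = Integer.toString(i);
            byPartition.computeIfAbsent(partitionFor(key), p -> new ArrayList<>()).add(key);
        }
        return byPartition;
    }

    public static void main(String[] args) {
        group(15).forEach((partition, keys) ->
                System.out.println("partition " + partition + " <- keys " + keys));
    }
}
```

With 15 keys, each partition receives five of them, and a given key always maps to the same partition, which is what keeps messages with the same key in order.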

Specifying partitioner.class in the producer class

    package com.example.demo;

    import java.util.Properties;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.Producer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    public class MyProducer {

        public static void main(String[] args) {
            Properties props = new Properties();
            props.put("bootstrap.servers", "192.168.1.124:9092");
            props.put("acks", "all");
            props.put("retries", 0);
            props.put("batch.size", 16384);
            props.put("linger.ms", 1);
            // Register the custom partitioner by its fully qualified class name.
            props.put("partitioner.class", "com.example.demo.MyPartitioner");
            props.put("buffer.memory", 33554432);
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

            Producer<String, String> producer = new KafkaProducer<>(props);
            // The key is the loop index as a string; MyPartitioner parses it to pick the partition.
            for (int i = 0; i < 100; i++)
                producer.send(new ProducerRecord<String, String>("powerTopic", Integer.toString(i), Integer.toString(i)));

            // close() flushes any records still buffered before releasing resources.
            producer.close();
        }
    }

The test consumer

    package com.example.demo;

    import java.util.Arrays;
    import java.util.Properties;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    public class MyAutoCommitConsumer {

        public static void main(String[] args) {
            Properties props = new Properties();
            props.put("bootstrap.servers", "192.168.1.124:9092");
            props.put("group.id", "test");
            // Offsets are committed automatically once per second.
            props.put("enable.auto.commit", "true");
            props.put("auto.commit.interval.ms", "1000");
            props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

            @SuppressWarnings("resource")
            KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
            consumer.subscribe(Arrays.asList("powerTopic"));

            while (true) {
                // On kafka-clients 2.0 and later, prefer poll(Duration.ofMillis(100));
                // poll(long) is deprecated there.
                ConsumerRecords<String, String> records = consumer.poll(100);
                for (ConsumerRecord<String, String> record : records)
                    System.out.printf("partition = %d,offset = %d, key = %s, value = %s%n",
                            record.partition(), record.offset(), record.key(), record.value());
            }
        }
    }

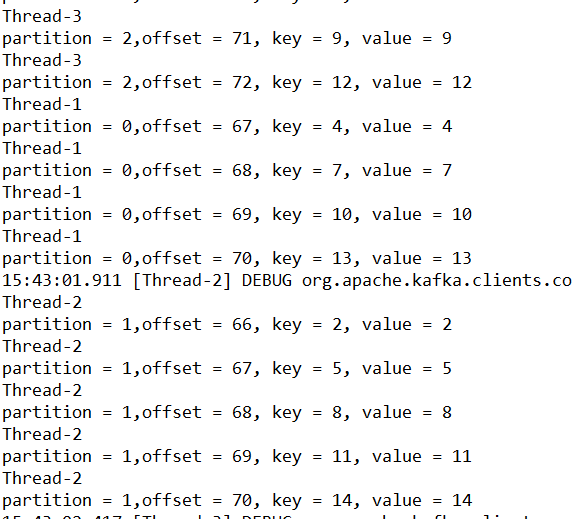

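Start ZooKeeper and Kafka, then use the command line to create a topic named powerTopic with 3 partitions. To make the output easier to read, set the producer's loop count to 15, then run the consumer and producer code. The result is shown in the screenshot above.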

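Although the topic has three partitions, the consumer group contains only one consumer, so that single consumer reads the messages of all three partitions one after another. Next we implement a consumer group whose consumers each run on their own thread. Sample code follows: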

The ConsumerThread class

    package com.example.demo;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    import java.util.Arrays;
    import java.util.Properties;

    public class ConsumerThread implements Runnable {

        // KafkaConsumer is not thread-safe, so each thread owns its own instance.
        private final KafkaConsumer<String, String> kafkaConsumer;
        private final String topic;

        public ConsumerThread(String brokers, String groupId, String topic) {
            Properties properties = buildKafkaProperty(brokers, groupId);
            this.topic = topic;
            this.kafkaConsumer = new KafkaConsumer<String, String>(properties);
            this.kafkaConsumer.subscribe(Arrays.asList(this.topic));
        }

        private static Properties buildKafkaProperty(String brokers, String groupId) {
            Properties properties = new Properties();
            properties.put("bootstrap.servers", brokers);
            properties.put("group.id", groupId);
            properties.put("enable.auto.commit", "true");
            properties.put("auto.commit.interval.ms", "1000");
            properties.put("session.timeout.ms", "30000");
            // Start from the earliest offset when the group has no committed offset yet.
            properties.put("auto.offset.reset", "earliest");
            properties.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            properties.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            return properties;
        }

        @Override
        public void run() {
            while (true) {
                ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(100);
                for (ConsumerRecord<String, String> item : consumerRecords) {
                    // Print the thread name so the output shows which thread handled which partition.
                    System.out.println(Thread.currentThread().getName());
                    System.out.printf("partition = %d,offset = %d, key = %s, value = %s%n",
                            item.partition(), item.offset(), item.key(), item.value());
                }
            }
        }
    }

The ConsumerGroup class

    package com.example.demo;

    import java.util.ArrayList;
    import java.util.List;

    public class ConsumerGroup {

        private List<ConsumerThread> consumerThreadList = new ArrayList<ConsumerThread>();

        public ConsumerGroup(String brokers, String groupId, String topic, int consumerNumber) {
            for (int i = 0; i < consumerNumber; i++) {
                ConsumerThread consumerThread = new ConsumerThread(brokers, groupId, topic);
                consumerThreadList.add(consumerThread);
            }
        }

        public void start() {
            for (ConsumerThread item : consumerThreadList) {
                Thread thread = new Thread(item);
                thread.start();
            }
        }
    }

The consumer group launcher class, ConsumerGroupMain

    package com.example.demo;

    public class ConsumerGroupMain {

        public static void main(String[] args) {
            String brokers = "192.168.1.124:9092";
            String groupId = "group01";
            String topic = "powerTopic";
            int consumerNumber = 3;

            ConsumerGroup consumerGroup = new ConsumerGroup(brokers, groupId, topic, consumerNumber);
            consumerGroup.start();
        }
    }

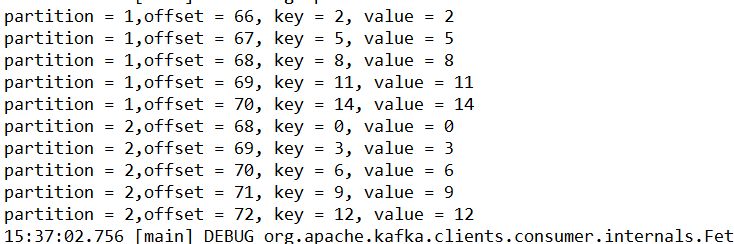

Start the consumers and the producer. Each partition is now handled by a different thread, as the screenshot above shows.
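Why one consumer received all three partitions earlier, while three consumers receive one each, can be illustrated with a simplified model of how partitions are divided among group members. The sketch below is an assumption-laden illustration in the spirit of a range-style assignment, not the Kafka client's actual code: the class `RangeAssignmentSketch` and its `assign` method are hypothetical, and the real assignment is negotiated by the group and depends on the consumer's `partition.assignment.strategy` setting.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model, not Kafka code: divides partitions among the consumers of one group.
class RangeAssignmentSketch {

    // Splits partitions 0..numPartitions-1 into contiguous ranges, one per consumer.
    // The first (numPartitions % numConsumers) consumers receive one extra partition.
    static List<List<Integer>> assign(int numPartitions, int numConsumers) {
        List<List<Integer>> assignment = new ArrayList<>();
        int base = numPartitions / numConsumers;
        int extra = numPartitions % numConsumers;
        int next = 0;
        for (int c = 0; c < numConsumers; c++) {
            int size = base + (c < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int i = 0; i < size; i++) {
                owned.add(next++);
            }
            assignment.add(owned);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // powerTopic has 3 partitions; try groups of 1, 3 and 4 consumers.
        for (int consumers : new int[] {1, 3, 4}) {
            System.out.println(consumers + " consumer(s): " + assign(3, consumers));
        }
    }
}
```

Under this model a fourth consumer in the group would receive an empty list, which matches the general rule that a partition is read by at most one consumer of a group, so running more consumer threads than partitions leaves some of them idle.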