项目总结一:情感分类项目(emojify)

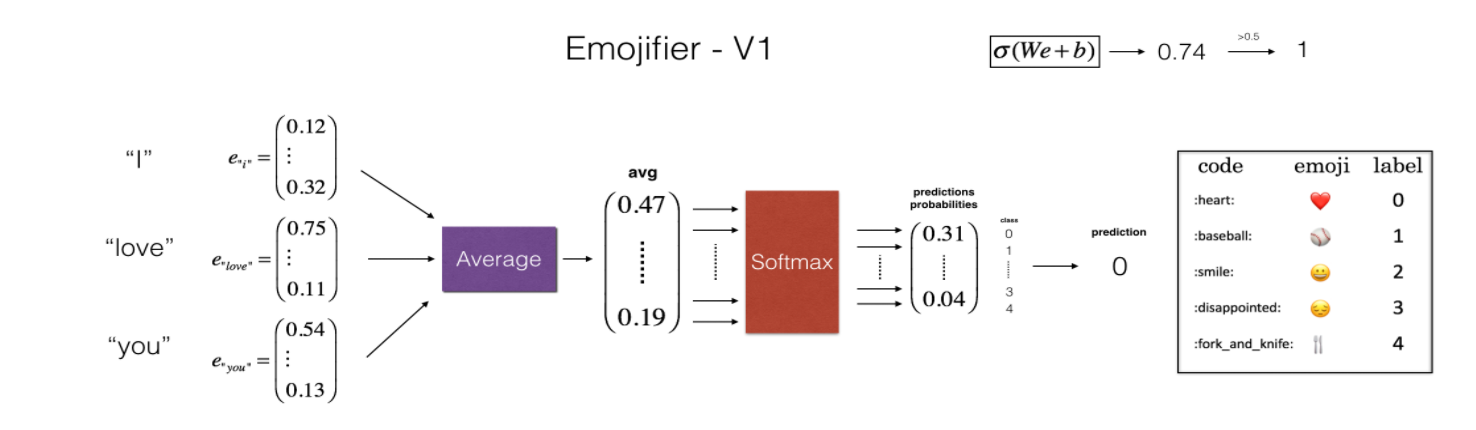

一、Emojifier-V1 模型

1、 模型

(1)前向传播过程:

(2)损失函数:计算the cross-entropy cost

(3)反向传播过程:计算dW,db

dz = a - Y_oh[i] dW = np.dot(dz.reshape(n_y,1), avg.reshape(1, n_h)) db = dz

(4) 参数更新: the stochastic gradient descent algorithm

W = W - learning_rate * dW b = b - learning_rate * db

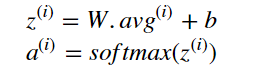

2、输入输出数据类型

(1)输入输出数据

输入数据X:句子

输出数据Y:表情

例如:

I am proud of your achievements 😄

(2)训练样本、检验样本

训练样本:X contains 127 sentences (strings),Y contains a integer label between 0 and 4 corresponding to an emoji for each sentence

检验样本:X contains 56 sentences (strings),Y contains a integer label between 0 and 4 corresponding to an emoji for each sentence

3、结果

(1)训练与检验结果

Training set: Accuracy: 0.977272727273 #训练集准确率 Test set: Accuracy: 0.857142857143 #检验集准确率

(2)测试结果:

i adore you ❤️ i love you ❤️ funny lol 😄 lets play with a ball ⚾ food is ready 🍴 not feeling happy 😄 #错误(1)the word "adore" does not appear in the training set.Because adore has a similar embedding as love, the algorithm has generalized correctly even to a word it has never seen before. Words such as heart, dear, beloved or adore have embedding vectors similar to love.

(2)ote though that it doesn't get "not feeling happy" correct. This algorithm ignores word ordering, so is not good at understanding phrases like "not happy."

4、总结:

- Even with a 127 training examples, you can get a reasonably good model for Emojifying. This is due to the generalization power word vectors gives you.

- Emojify-V1 will perform poorly on sentences such as "This movie is not good and not enjoyable" because it doesn't understand combinations of words--it just averages all the words' embedding vectors together, without paying attention to the ordering of words. You will build a better algorithm in the next part.

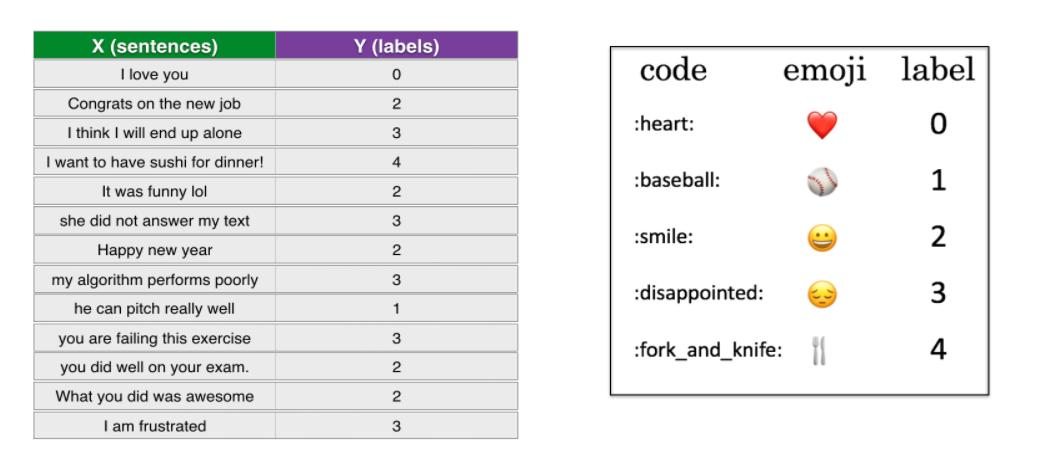

二、Emojifier-V2 模型

1、 模型

(1)Keras and mini-batching

most deep learning frameworks require that all sequences in the same mini-batch have the same length.The common solution to this is to use padding. Specifically, set a maximum sequence length, and pad all sequences to the same length.

(2)The Embedding layer

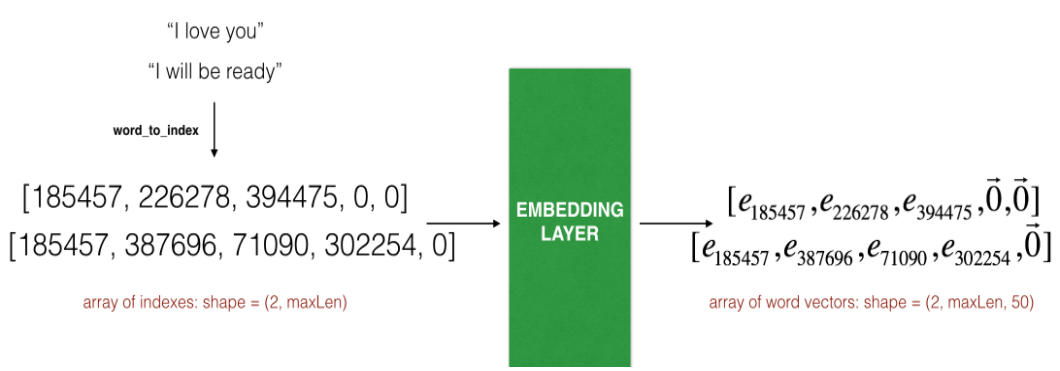

Embedding layer. This example shows the propagation of two examples through the embedding layer. Both have been zero-padded to a length of max_len=5. The final dimension of the representation is (2,max_len,50) because the word embeddings we are using are 50 dimensional.

(3)Building the Emojifier-V2

【Building model】

# GRADED FUNCTION: Emojify_V2

def Emojify_V2(input_shape, word_to_vec_map, word_to_index):

"""

Function creating the Emojify-v2 model's graph.

Arguments:

input_shape -- shape of the input, usually (max_len,)

word_to_vec_map -- dictionary mapping every word in a vocabulary into its 50-dimensional vector representation

word_to_index -- dictionary mapping from words to their indices in the vocabulary (400,001 words)

Returns:

model -- a model instance in Keras

"""

### START CODE HERE ###

# Define sentence_indices as the input of the graph, it should be of shape input_shape and dtype 'int32' (as it contains indices).

sentence_indices = Input(shape = input_shape, dtype =np.int32)

# Create the embedding layer pretrained with GloVe Vectors (≈1 line)

embedding_layer = pretrained_embedding_layer(word_to_vec_map, word_to_index)

# Propagate sentence_indices through your embedding layer, you get back the embeddings

embeddings = embedding_layer(sentence_indices)

# Propagate the embeddings through an LSTM layer with 128-dimensional hidden state

# Be careful, the returned output should be a batch of sequences.

# return_sequences: Boolean. Whether to return the last output in the output sequence, or the full sequence.

X = LSTM(128, return_sequences=True)(embeddings)

# Add dropout with a probability of 0.5

X = Dropout(rate=0.5)(X)

# Propagate X trough another LSTM layer with 128-dimensional hidden state

# Be careful, the returned output should be a single hidden state, not a batch of sequences.

# units: Positive integer, dimensionality of the output space.

# return_sequences: Boolean. Whether to return the last output in the output sequence, or the full sequence.

X = LSTM(128, return_sequences=False)(X)

# Add dropout with a probability of 0.5

X = Dropout(rate=0.5)(X)

# Propagate X through a Dense layer with softmax activation to get back a batch of 5-dimensional vectors.

#units: Positive integer, dimensionality of the output space.

X = Dense(5)(X)

# Add a softmax activation

X =Activation('softmax')(X)

# Create Model instance which converts sentence_indices into X.

model = Model(inputs=sentence_indices, outputs=X)

### END CODE HERE ###

return model

【model summary】

Layer (type) Output Shape Param # ================================================================= input_4 (InputLayer) (None, 10) 0 _________________________________________________________________ embedding_5 (Embedding) (None, 10, 50) 20000050 _________________________________________________________________ lstm_5 (LSTM) (None, 10, 128) 91648 _________________________________________________________________ dropout_4 (Dropout) (None, 10, 128) 0 _________________________________________________________________ lstm_6 (LSTM) (None, 128) 131584 _________________________________________________________________ dropout_5 (Dropout) (None, 128) 0 _________________________________________________________________ dense_2 (Dense) (None, 5) 645 _________________________________________________________________ activation_2 (Activation) (None, 5) 0 ================================================================= Total params: 20,223,927 Trainable params: 223,877 Non-trainable params: 20,000,050 _________________________________________________________________

【model compile】

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

【model fit】

model.fit(X_train_indices, Y_train_oh, epochs = 50, batch_size = 32, shuffle=True)

(1)输入输出数据

输入数据X:句子

输出数据Y:表情

例如:

I am proud of your achievements 😄

(2)训练样本、检验样本

训练样本:X contains 127 sentences (strings),Y contains a integer label between 0 and 4 corresponding to an emoji for each sentence

检验样本:X contains 56 sentences (strings),Y contains a integer label between 0 and 4 corresponding to an emoji for each sentence

3、结果

(1)训练与检验结果

train accuracy Epoch 50/50 132/132 [==============================] - 0s - loss: 0.0931 - acc: 0.9773 Test accuracy Test accuracy = 0.857142865658

(2)测试结果:

#错误的结果

Expected emoji:😞 prediction: work is hard 😄 Expected emoji:😞 prediction: This girl is messing with me ❤️ Expected emoji:😞 prediction: work is horrible 😄 Expected emoji:🍴 prediction: any suggestions for dinner 😄 Expected emoji:😄 prediction: you brighten my day ❤️ Expected emoji:😞 prediction: she is a bully ❤️ Expected emoji:😞 prediction: My life is so boring ❤️ Expected emoji:😄 prediction: will you be my valentine ❤️

#可以识别not feeling happy

not feeling happy 😞

4、总结:

- If you have an NLP task where the training set is small, using word embeddings can help your algorithm significantly. Word embeddings allow your model to work on words in the test set that may not even have appeared in your training set.

- Training sequence models in Keras (and in most other deep learning frameworks) requires a few important details:

- To use mini-batches, the sequences need to be padded so that all the examples in a mini-batch have the same length.

- An

Embedding()layer can be initialized with pretrained values. These values can be either fixed or trained further on your dataset. If however your labeled dataset is small, it's usually not worth trying to train a large pre-trained set of embeddings. LSTM()has a flag calledreturn_sequencesto decide if you would like to return every hidden states or only the last one.- You can use

Dropout()right afterLSTM()to regularize your network.