NFS 学习笔记

Sun's Network File System

[TOC]

A client application issues system calls to the client-side file system (such as open(), read(), write(), close(), mkdir(), etc.) in order to access files which are stored on the server.

客户端文件系统的作用就是执行这些系统调用所需要执行的动作。比如,客户端发出一个read()请求,客户端文件系统可能需要向服务端文件系统发送一个消息,请求读取某个特定的block;服务端然后就从磁盘中读取对应的块,然后向客户端返回所读到的内容。客户端文件系统然后就把数据复制进提供给read()系统调用的buffer。

On To NFS

在设计NFS时,SUN使用了一种不寻常的策略:SUN并没有开发一套封闭的、具有专利的系统,而是开发了一套开放的协议,这套协议规定了客户端和服务端进行通信所需要使用的数据格式。不同的群体可以各自开发他们自己的NFS服务器。

Focus: Simple And Fast Server Crash Recovery

在NFSv2中,协议设计的主要目标是:simple and fast server crasg recovery. 在于给多客户端单服务端环境中,这个目标具有非常重大的意义。

Key To Fast Crash Recovery: Statelessness

服务器不记录客户端上发生的任何事。比如,服务端不会知道哪个客户端正在caching哪个block,或者哪个文件当前正在被哪个客户端打开。协议被设计成,向每个请求传递它所需要的所有信息。

比如考虑无状态协议中的 open() 系统调用。

char buffer[MAX];

int fd = open("foo", O_RDONLY); // get descriptor "fd"

read(fd, buffer, MAX); // read MAX bytes from foo (via fd)

read(fd, buffer, MAX); // read MAX bytes from foo

...

read(fd, buffer, MAX); // read MAX bytes from foo

close(fd); // close file

上面的代码例子中,open()返回的是服务端上某个文件的文件描述符,这个文件描述符之后被用于read()等系统调用。

在这个例子中,返回的文件描述符就是一种 shared state between server and client.

假设server在第一次read结束之后就崩溃了。在server重启止呕,客户端继续执行之后的read。然而,此时服务端并不知道之后接收到的fd代表的是哪个文件。为了解决这种问题,客户端和服务端需要使用某种重启策略,客户端需要保证在其内存中保存足够的信息,以便它能够将足够的信息传递给服务器。

甚至还有客户端崩溃的情况需要考虑。假设客户端在打开要给文件之后就崩溃了,那么服务端永远不会知道何时去close这个文件。

NFS采用的策略:每个客户端操作都包含了为了完成请求所需要的所有信息。

The NFSv2 Protocol

像open这样的系统调用明显是 stateful 的。但是用户想要使用这种系统调用。所以问题的核心变成了:如何设计出无状态的、支持 POSIX 接口的协议。

理解 NFS 协议的关键在于理解 file handle. File handle 用于唯一地描述某个操作的实施对象。

可以把 file handle 理解为具有三个重要组件: a volume identifier, an inode number, and a generation number.

Volume identifier 告知服务器请求涉及到的文件系统是哪个;

Inode number 告诉服务器请求的是分区内的哪个文件;

当 inode number 被重复使用时,需要 generation number,每当 inode number 被重复使用,generation number都会加一,服务端由此保证使用旧 file handle 的客户端不会恰巧访问新创建的文件。

First, the LOOKUP protocol message is used to obtain a file handle, which is then subsequently used to access file data. The client passes a directory file handle and name of a file to look up, and the handle to that file (or directory) plus its attributes are passed back to the client from the server.

比如,假设客户端已经获得了根目录 / 的 fh(通过NFS mount protocol来获得)。如果一个客户端通过 NFS 打开 /foo.txt,那么客户端会向服务端发送一个lookup request,参数为根目录的 fh 和文件名 foo.txt;如果成功,那么 foo.txt 的 fh 和 attribute 将会被返回。

From Protocol To Distributed File System

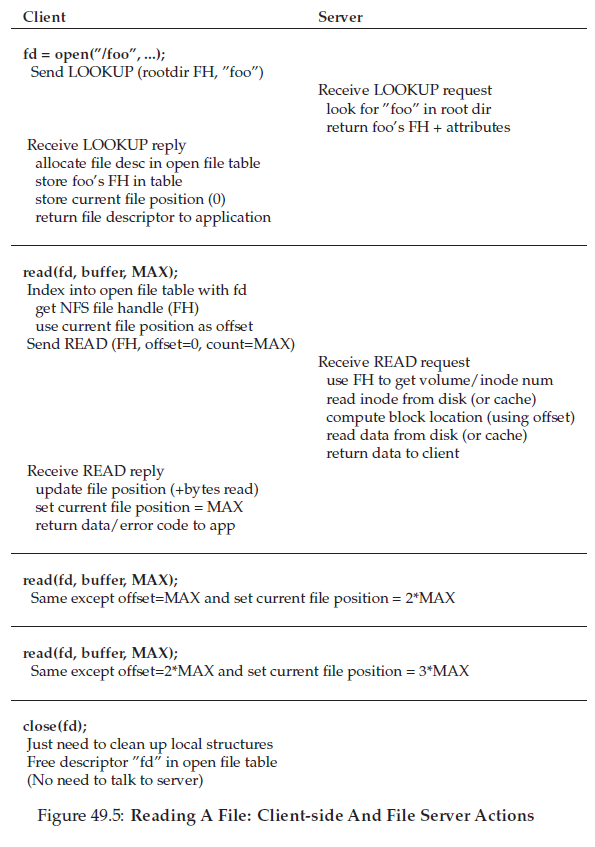

客户端如何记录文件访问相关的信息:维护一个 mapping from a integer file descriptor to an NFS file handle as well as the current file pointer. 客户端将每个read request转换成适当格式的 read protocol message which tells the server exactly which bytes from the file to read. 在一次成功 read 之后,客户端 update the current file position; 后续的read将会使用同一个fh,但是使用不同的offset

当文件第一次打开时,客户端文件系统发送一个 LOOKUP request message. Indeed, if a long pathname must be traversed (e.g., /home/remzi/foo.txt), the client would send three LOOKUPs: one to look up home in the directory /, one to look up remzi in home, and finally one to look up foo.txt in remzi.

Each server request has all the information needed to complete the request in its entirety.

Handling Server FailureWith Idempotent Operations

客户端有一个计时器。发送请求,当计时器耗尽,客户端直接执行resend。

The ability of the client to simply retry the request (regardless of what caused the failure) is due to an important property of most NFS requests: they are idempotent(幂等) 如果某个操作被执行多次的效果与被执行一次的效果相同,那么它就被称为是幂等的。

The heart of the design of crash recovery in NFS is the idempotency of most common operations. LOOKUP and READ requests are trivially idempotent, as they only read information from the file server and do not update it.

More interestingly, WRITE requests are also idempotent. If, for example, a WRITE fails, the client can simply retry it. The WRITE message contains the data, the count, and (importantly) the exact offset to write the data to. Thus, it can be repeated with the knowledge that the outcome of multiple writes is the same as the outcome of a single one.

Improving Performance: Client-side Caching

客户端文件系统缓存 file data and metadata that it has read from the server.

The cache also serves as a temporary buffer for writes.

The Cache Consistency Problem

假设 客户端C1 读文件 F,并且在C1本地内存中缓存了一份拷贝。同时C2 overwrites the file F, thus changing its contents.

第一个问题:

C2修改F之后会将file保存在自己的write buffer中,而不是立即就写回服务器。这期间从服务器获得的file将会是旧版本。Thus, by buffering writes at the client, other clientsmay get stale versions of the file, which may be undesirable. 这类问题被称为 update visibility

第二个问题:stale cache

in this case, C2 has finally flushed its writes to the file server, and thus the server has the latest version (F[v2]). However, C1 still has F[v1] in its cache; if a program running on C1 reads file F, it will get a stale version (F[v1]) and not the most recent copy (F[v2]), which is (often) undesirable.

First, to address update visibility, clients implement what is sometimes called flush-on-close (a.k.a., close-to-open) consistency semantics; specifically, when a file is written to and subsequently closed by a client application, the client flushes all updates to the server/

Second, to address the stale-cache problem, NFSv2 clients first check to see whether a file has changed before using its cached contents.Specifically, before using a cached block, the client-side file system will issue a GETATTR request to the server to fetch the file’s attributes.

实际实现中发现,NFS server was flooded with GETATTR requests. To remedy this situation, an attribute cache was added to each client. Attribute cache 每三秒刷新一次。

Implications On Server-Side Write Buffering

只有当写请求被彻底写入磁盘之后,NFS服务器才会向客户端返回success信息。如果仅仅在写入write buffer之后就返回success,那么可能会出问题:

Imagine the following sequence of writes as issued by a client:

write(fd, a_buffer, size); // fill first block with a’s

write(fd, b_buffer, size); // fill second block with b’s

write(fd, c_buffer, size); // fill third block with c’s

These writes overwrite the three blocks of a file with a block of a’s, then b’s, and then c’s. Thus, if the file initially looked like this:

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

yyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy

zzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzz

We might expect the final result after these writes to be like this, with the x’s, y’s, and z’s, would be overwritten with a’s, b’s, and c’s, respectively.

aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa

bbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbbb

cccccccccccccccccccccccccccccccccccccccccccccccccccccccccccc

Now let’s assume for the sake of the example that these three client writes were issued to the server as three distinct WRITE protocol messages. Assume the first WRITE message is received by the server and issued to the disk, and the client informed of its success. Now assume the second write is just buffered in memory, and the server also reports it success to the client before forcing it to disk; unfortunately, the server crashes before writing it to disk. The server quickly restarts and receives the third write request, which also succeeds.

Thus, to the client, all the requests succeeded, but we are surprised that the file contents look like this:

aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa

yyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy <--- oops

cccccccccccccccccccccccccccccccccccccccccccccccccccccccccccc

为了避免上述问题,NFS服务器必须保证,在 inform the client of success 之前写请求被成功写入 persistent storage; 这样做使得客户端可以检查写期间的server failure, thus retry until it finally succeeds.

不过这也导致NFS服务器的写性能成为了瓶颈所在之处。