Pyramid Lucas-Kanade Optical Flow Example

Steps

Finding corners

Code

```cpp
cv::goodFeaturesToTrack(
    imgA,           // Image to track
    cornersA,       // Vector of detected corners (output)
    MAX_CORNERS,    // Keep up to this many corners
    0.01,           // Quality level (fraction of the best corner's score)
    5,              // Min distance between corners
    cv::noArray(),  // Mask
    3,              // Block size
    false,          // true: Harris, false: Shi-Tomasi
    0.04            // Method-specific parameter (Harris k)
);
```

Function prototype

```cpp
void cv::goodFeaturesToTrack(               // Find strong corners (Shi-Tomasi or Harris)
    cv::InputArray  image,                  // Input, CV_8UC1 or CV_32FC1
    cv::OutputArray corners,                // Output vector of corners
    int             maxCorners,             // Keep at most this many corners
    double          qualityLevel,           // (fraction) relative to best
    double          minDistance,            // Discard corners this close
    cv::InputArray  mask = noArray(),       // Ignore corners where mask=0
    int             blockSize = 3,          // Neighborhood used
    bool            useHarrisDetector = false,  // false = Shi-Tomasi metric
    double          k = 0.04                // Used for Harris metric
);
```

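| Parameter | Description |
|---|---|
| image | Input single-channel image (8-bit or 32-bit floating point) |
| corners | Output corners: a `vector<Point2f>`, or a `cv::Mat` with one row per corner holding its x and y position |
| maxCorners | Maximum number of corners to return; if more are found, the strongest ones are kept |
| qualityLevel | Minimum accepted corner quality as a fraction of the best corner's quality; corners scoring below qualityLevel × best are rejected. Typically 0.01 to 0.1 |
| minDistance | Minimum Euclidean distance between returned corners |
| mask | Optional; must be the same size as the image; no corners are detected where mask = 0 |
| blockSize | Size of the neighborhood used to compute the corner score |
| useHarrisDetector | Use the Harris detector instead of the default Shi-Tomasi metric |
| k | Harris free parameter, used only when useHarrisDetector = true; best left at the default |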
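To make qualityLevel, useHarrisDetector and k concrete, here is a minimal sketch in plain C++ (no OpenCV) of the two corner scores. Both start from the 2×2 gradient covariance matrix M = [Sxx Sxy; Sxy Syy] accumulated over a blockSize neighborhood: Shi-Tomasi uses the smaller eigenvalue of M, Harris uses det(M) - k·trace(M)². The struct and function names are hypothetical, and the rejection rule (score < qualityLevel × best score) only mirrors the documented meaning of qualityLevel; OpenCV's real implementation also performs non-maximum suppression and minDistance filtering.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical helper: entries of M = [sxx sxy; sxy syy], i.e. sums of
// Ix*Ix, Ix*Iy and Iy*Iy over a blockSize x blockSize neighborhood.
struct Covariance {
    double sxx, sxy, syy;
};

// Shi-Tomasi score: the smaller eigenvalue of the symmetric matrix M.
double shiTomasiScore(const Covariance& m) {
    double halfTrace = 0.5 * (m.sxx + m.syy);
    double halfDiff = 0.5 * (m.sxx - m.syy);
    return halfTrace - std::sqrt(halfDiff * halfDiff + m.sxy * m.sxy);
}

// Harris score: det(M) - k * trace(M)^2 (0.04 is OpenCV's default k).
double harrisScore(const Covariance& m, double k = 0.04) {
    double det = m.sxx * m.syy - m.sxy * m.sxy;
    double trace = m.sxx + m.syy;
    return det - k * trace * trace;
}

// Indices of the scores that reach qualityLevel * (best score).
std::vector<int> passQualityLevel(const std::vector<double>& scores,
                                  double qualityLevel) {
    std::vector<int> kept;
    if (scores.empty()) return kept;
    double best = *std::max_element(scores.begin(), scores.end());
    for (int i = 0; i < static_cast<int>(scores.size()); ++i)
        if (scores[i] >= qualityLevel * best) kept.push_back(i);
    return kept;
}
```

An ideal straight edge (strong gradient in one direction only) gets a Shi-Tomasi score of 0 and a negative Harris score, while a corner with strong gradients in both directions scores high under both metrics; this is why either metric picks corners rather than edges.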

Refining corners to subpixel accuracy

Code

```cpp
cv::cornerSubPix(
    imgA,                          // Input image
    cornersA,                      // Vector of corners (input and output)
    cv::Size(win_size, win_size),  // Half side length of search window
    cv::Size(-1, -1),              // Half side length of dead zone (-1=none)
    cv::TermCriteria(
        cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,
        20,    // Maximum number of iterations
        0.03   // Minimum change per iteration
    )
);
```

Function prototype

```cpp
void cv::cornerSubPix(
    cv::InputArray       image,     // Input image
    cv::InputOutputArray corners,   // Guesses in, and results out
    cv::Size             winSize,   // Area is NxN; N = (winSize*2+1)
    cv::Size             zeroZone,  // Size(-1,-1) to ignore
    cv::TermCriteria     criteria   // When to stop refinement
);
```

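| Parameter | Description |
|---|---|
| image | Input image in which the corners were detected |
| corners | Initial corner positions (e.g. from cv::goodFeaturesToTrack); overwritten with the refined positions |
| winSize | Half the side length of the search window; Size(5,5) gives an 11×11 window |
| zeroZone | Half the size of a dead zone in the middle of the search window where samples are ignored; Size(-1,-1) means no dead zone |
| criteria | Termination criteria for the iterative refinement |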
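The criteria argument, here and in cv::calcOpticalFlowPyrLK, is a TermCriteria with MAX_ITER | EPS set. The OpenCV documentation describes cornerSubPix as stopping either after maxCount iterations or when the corner moves by less than epsilon in an iteration, whichever comes first. The sketch below mimics that stopping rule with a hypothetical StopRule struct and an arbitrary refinement step; it is not cv::TermCriteria itself.

```cpp
#include <cmath>
#include <functional>

// Hypothetical stand-in for TermCriteria(MAX_ITER | EPS, maxIter, epsilon).
struct StopRule {
    int maxIter;     // stop after this many iterations...
    double epsilon;  // ...or as soon as the point moves less than this
};

struct Pt {
    double x, y;
};

// Apply `step` until one of the two stop conditions holds.
// Returns the number of iterations actually performed; p holds the result.
int iterateUntilConverged(Pt& p, const std::function<Pt(const Pt&)>& step,
                          const StopRule& rule) {
    int iter = 0;
    while (iter < rule.maxIter) {
        Pt q = step(p);
        double moved = std::hypot(q.x - p.x, q.y - p.y);
        p = q;
        ++iter;
        if (moved < rule.epsilon) break;  // EPS condition
    }
    return iter;  // loop exit without break means MAX_ITER was reached
}
```

With a step that halves the distance to the true corner each time, (20, 0.03) stops after 6 iterations for a starting offset of (1, 1), because the sixth move is about 0.022 pixels; a smaller maxIter cuts refinement off earlier. This is also why a large epsilon such as 0.3 ends the search after only a few steps.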

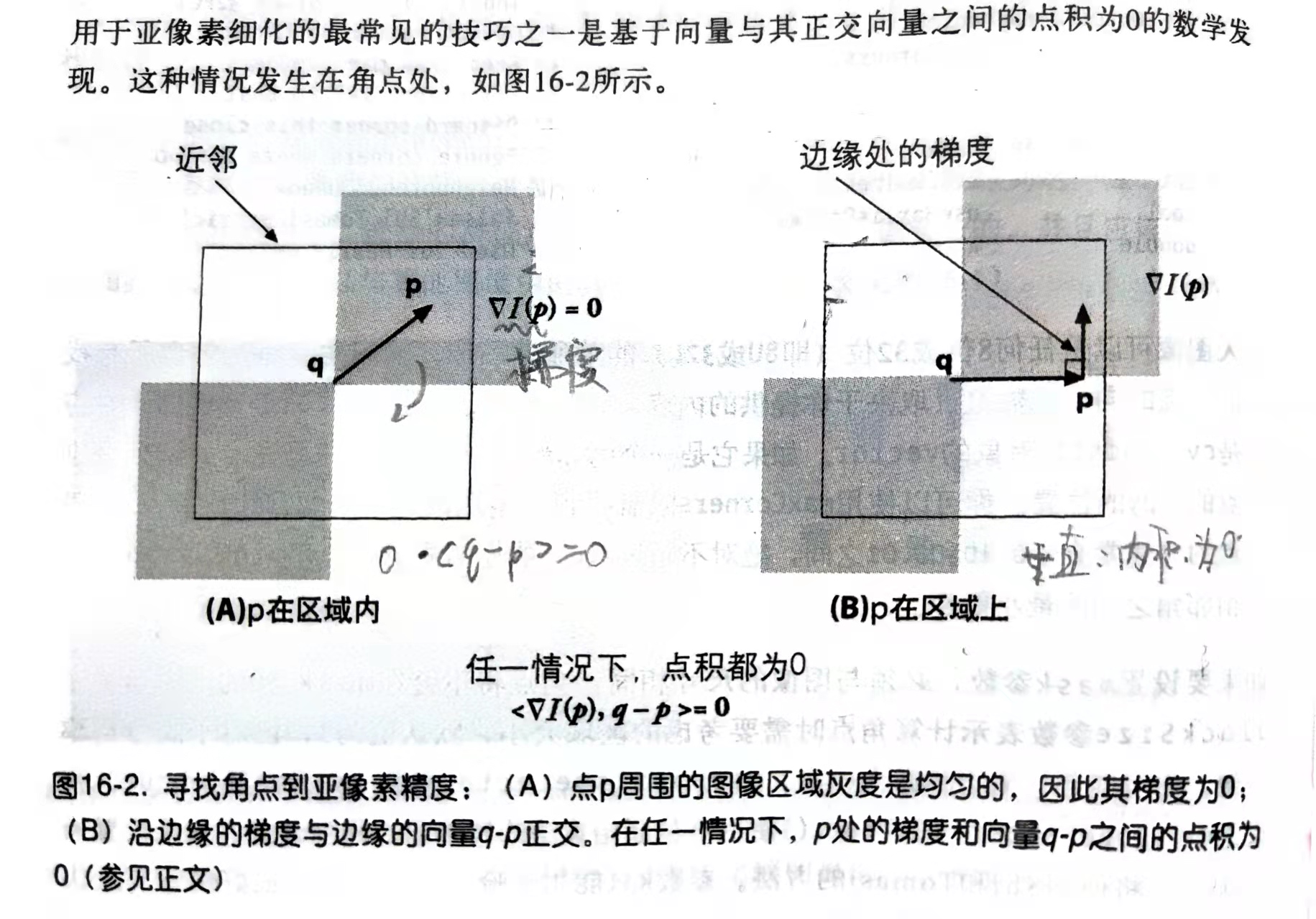

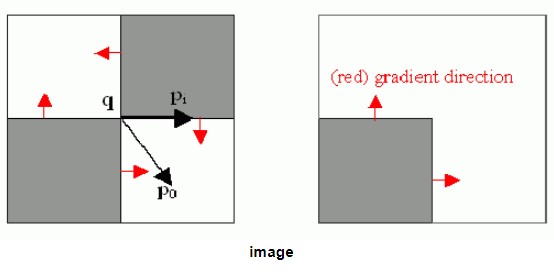

How subpixel refinement works

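p ranges over the neighborhood of q, so the vector from q to p sweeps all the way around q (360°); q is then corrected toward the true corner location.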

In Figure 16-2, we assume a starting corner location q that is near the actual subpixel corner location. We examine vectors starting at point q and ending at p. When p is in a nearby uniform or “flat” region, the gradient there is 0. On the other hand, if the vector q-p aligns with an edge, then the gradient at p on that edge is orthogonal to the vector q-p. In either case, the dot product between the gradient at p and the vector qp is 0. We can assemble many such pairs of the gradient at a nearby point p and the associated vector q-p, set their dot product to 0, and solve this assemblage as a system of equations; the solution will yield a more accurate subpixel location for q, the exact location of the corner.
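The paragraph above turns into a small linear system. For each sample p with gradient g_p, the condition g_p · (q - p) = 0 can be rewritten as (g_p g_pᵀ) q = (g_p g_pᵀ) p; summing over the window gives (Σ g_p g_pᵀ) q = Σ (g_p g_pᵀ) p, a 2×2 system in q. The sketch below solves one such system from given (position, gradient) samples. The Sample struct and refineCorner function are hypothetical; OpenCV's cornerSubPix additionally weights the samples, skips the zeroZone, and re-centers the window and repeats until criteria is met.

```cpp
#include <cmath>
#include <vector>

// Hypothetical sample: a point p near the corner and the image gradient at p.
struct Sample {
    double px, py;  // position of p
    double gx, gy;  // gradient at p
};

// Solve (sum g g^T) q = sum (g g^T) p for q by Cramer's rule.
// Returns false if the system is (nearly) singular, e.g. when every
// gradient points the same way (a straight edge has no unique corner).
bool refineCorner(const std::vector<Sample>& samples, double& qx, double& qy) {
    double a = 0, b = 0, c = 0;  // matrix [a b; b c]
    double rx = 0, ry = 0;       // right-hand side
    for (const Sample& s : samples) {
        double gxx = s.gx * s.gx, gxy = s.gx * s.gy, gyy = s.gy * s.gy;
        a += gxx;
        b += gxy;
        c += gyy;
        rx += gxx * s.px + gxy * s.py;
        ry += gxy * s.px + gyy * s.py;
    }
    double det = a * c - b * b;
    if (std::abs(det) < 1e-12) return false;
    qx = (c * rx - b * ry) / det;
    qy = (a * ry - b * rx) / det;
    return true;
}
```

Samples in a flat region (zero gradient) contribute nothing, exactly as the paragraph argues, while samples on the two edges meeting at the corner pin down one coordinate each.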

Calling the pyramid L-K algorithm

Code

```cpp
cv::calcOpticalFlowPyrLK(
    imgA,            // Previous image
    imgB,            // Next image
    cornersA,        // Previous set of corners (from imgA)
    cornersB,        // Next set of corners (from imgB)
    features_found,  // Output vector, each is 1 for tracked
    cv::noArray(),   // Output vector, lists errors (optional)
    cv::Size(win_size * 2 + 1, win_size * 2 + 1),  // Search window size
    5,               // Maximum pyramid level to construct
    cv::TermCriteria(
        cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,
        20,   // Maximum number of iterations
        0.3   // Minimum change per iteration
    )
);
```

Function prototype

```cpp
void cv::calcOpticalFlowPyrLK(
    cv::InputArray       prevImg,                 // Prior image (t-1), CV_8UC1
    cv::InputArray       nextImg,                 // Next image (t), CV_8UC1
    cv::InputArray       prevPts,                 // Vector of 2d start points (CV_32F)
    cv::InputOutputArray nextPts,                 // Results: 2d end points (CV_32F)
    cv::OutputArray      status,                  // For each point, found=1, else=0
    cv::OutputArray      err,                     // Error measure for found points
    cv::Size             winSize  = Size(21,21),  // Size of search window
    int                  maxLevel = 3,            // Pyramid layers to add
    cv::TermCriteria     criteria = TermCriteria( // How to end search
        cv::TermCriteria::COUNT | cv::TermCriteria::EPS,
        30,
        0.01
    ),
    int                  flags = 0,               // Use guesses, and/or eigenvalues
    double               minEigThreshold = 1e-4   // For spatial gradient matrix
);
```

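| Parameter | Description |
|---|---|
| prevImg, nextImg | Two consecutive frames |
| prevPts, nextPts | Feature points in the first frame (input) and their computed positions in the second frame (output) |
| status | Output status vector; status[i] = 1 if the flow for feature i was found, otherwise 0 |
| err | Output error measure for each feature; its meaning depends on flags |
| winSize | Size of the search window at each pyramid level |
| maxLevel | Maximal pyramid level (0-based); 0 means no pyramid, only the original images are used |
| criteria | Termination criteria of the iterative search |
| flags | Operation flags: cv::OPTFLOW_USE_INITIAL_FLOW and/or cv::OPTFLOW_LK_GET_MIN_EIGENVALS |
| minEigThreshold | A feature is filtered out if the minimum eigenvalue of its spatial gradient matrix, divided by the number of window pixels, is below this threshold; similar in role to qualityLevel in cv::goodFeaturesToTrack |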
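To see what winSize and minEigThreshold control, here is a minimal single-level, single-iteration Lucas-Kanade step in plain C++ (a sketch, not OpenCV's implementation). Every pixel in the window contributes the brightness-constancy equation Ix·u + Iy·v + It = 0; the least-squares solution solves G [u v]ᵀ = -[ΣIx·It, ΣIy·It]ᵀ with G = [ΣIx² ΣIxIy; ΣIxIy ΣIy²]. Following the OpenCV documentation, the smaller eigenvalue of G divided by the number of window pixels is compared with minEigThreshold, and the point is rejected (status 0) if it is too small. The function name and the per-pixel derivative arrays are hypothetical, and OpenCV scales its image derivatives internally, so threshold values are not directly comparable with this sketch.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// One Lucas-Kanade step over a window of n pixels. ix, iy, it hold the
// spatial and temporal derivatives (It = next - prev) of each window pixel.
// Returns false (like status[i] = 0) if the window is too poorly textured.
bool lucasKanadeStep(const std::vector<double>& ix,
                     const std::vector<double>& iy,
                     const std::vector<double>& it,
                     double minEigThreshold, double& u, double& v) {
    const std::size_t n = ix.size();
    if (n == 0 || iy.size() != n || it.size() != n) return false;
    double gxx = 0, gxy = 0, gyy = 0, bx = 0, by = 0;
    for (std::size_t k = 0; k < n; ++k) {
        gxx += ix[k] * ix[k];
        gxy += ix[k] * iy[k];
        gyy += iy[k] * iy[k];
        bx -= ix[k] * it[k];
        by -= iy[k] * it[k];
    }
    // Smaller eigenvalue of G, normalized by the window size.
    double half = 0.5 * (gxx + gyy);
    double d = 0.5 * (gxx - gyy);
    double minEig = (half - std::sqrt(d * d + gxy * gxy)) / static_cast<double>(n);
    // minEig > 0 also guarantees det(G) > 0, so the solve below is safe.
    if (minEig < minEigThreshold || minEig <= 0.0) return false;
    double det = gxx * gyy - gxy * gxy;
    u = (gyy * bx - gxy * by) / det;
    v = (gxx * by - gxy * bx) / det;
    return true;
}
```

A uniform window (all gradients zero) or a window on a straight edge has a near-zero minimum eigenvalue and is rejected: this is the aperture problem, and it is why good features are corners.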

The argument flags can have one or both of the following values:

cv::OPTFLOW_LK_GET_MIN_EIGENVALS

Set this flag for a somewhat more detailed error measure. The default error measure for the error output is the average per-pixel change in intensity between the window around the previous corner and the window around the new corner. With this flag set to true, that error is replaced with the minimum eigenvalue of the Harris matrix associated with the corner.

cv::OPTFLOW_USE_INITIAL_FLOW

Use when the array nextPts already contains an initial guess for the feature's coordinates when the routine is called. (If this flag is not set, prevPts is copied to nextPts and used as the initial estimate.)

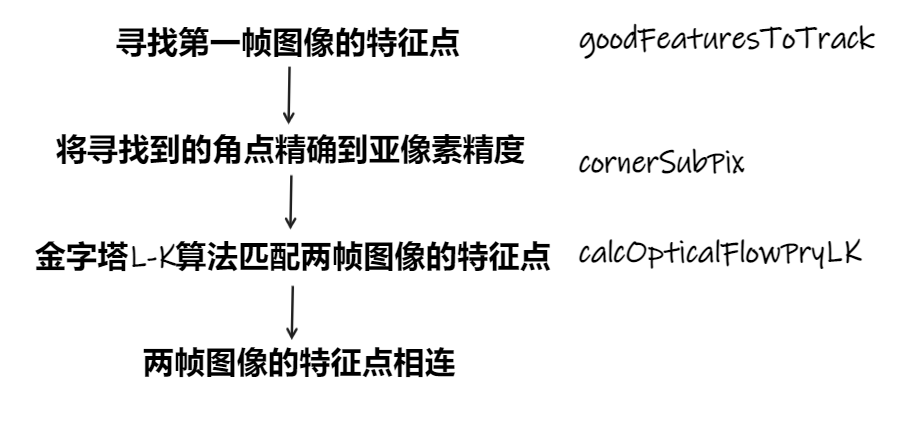
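maxLevel and OPTFLOW_USE_INITIAL_FLOW both decide where the search starts. In the coarse-to-fine scheme of Bouguet's pyramidal Lucas-Kanade tracker, which OpenCV's implementation follows, the displacement found at level L is doubled and handed down as the starting guess for level L-1, so a small per-level search can recover a large total motion. The sketch below does only that bookkeeping: given a hypothetical per-level residual displacement (what a single-level solver would return at each level), it accumulates the final flow; an optional initial guess in full-resolution pixels, as OPTFLOW_USE_INITIAL_FLOW supplies through nextPts, is first scaled down to the coarsest level. The names are hypothetical.

```cpp
#include <cmath>
#include <vector>

struct Flow {
    double u, v;
};

// residuals[L] is the displacement solved at pyramid level L
// (level 0 = full resolution, level maxLevel = coarsest).
// initialGuess is in full-resolution pixels ({0, 0} if there is none).
Flow accumulatePyramidFlow(const std::vector<Flow>& residuals, Flow initialGuess) {
    const int maxLevel = static_cast<int>(residuals.size()) - 1;
    if (maxLevel < 0) return initialGuess;
    // Scale the initial guess down to the coarsest level: divide by 2^maxLevel.
    const double scale = std::ldexp(1.0, -maxLevel);
    Flow g{initialGuess.u * scale, initialGuess.v * scale};
    for (int level = maxLevel; level > 0; --level) {
        // Add this level's residual, then double for the next finer level.
        g.u = 2.0 * (g.u + residuals[level].u);
        g.v = 2.0 * (g.v + residuals[level].v);
    }
    // At full resolution the last residual is added without scaling.
    return Flow{g.u + residuals[0].u, g.v + residuals[0].v};
}
```

With maxLevel = 3, a 1-pixel residual at the coarsest level already accounts for 8 pixels of motion at full resolution, which is how the example's 21×21 window at maxLevel 5 can track large displacements.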

Flowchart

Complete program

```cpp
// Example 16-1. Pyramid L-K optical flow
//
#include <iostream>
#include <vector>
#include <opencv2/opencv.hpp>

using std::cout;
using std::endl;
using std::vector;

void help(char** argv) {
  cout << "\nExample 16-1: Pyramid L-K optical flow example.\n" << endl;
  cout << "Call: " << argv[0] << " [image1] [image2]\n" << endl;
  cout << "\nExample:\n" << argv[0]
       << " ../example_16-01-imgA.png ../example_16-01-imgB.png\n" << endl;
  cout << "Demonstrates Pyramid Lucas-Kanade optical flow.\n" << endl;
}

int main(int argc, char** argv) {
  if (argc != 3) {
    help(argv);
    return -1;
  }

  // Initialize, load two images from the file system, and
  // allocate the images and other structures we will need for
  // results.
  //
  cv::Mat imgA = cv::imread(argv[1], cv::IMREAD_GRAYSCALE);
  cv::Mat imgB = cv::imread(argv[2], cv::IMREAD_GRAYSCALE);
  // Load the output canvas in color: on a single-channel image,
  // Scalar(0, 255, 0) would be drawn as black instead of green.
  cv::Mat imgC = cv::imread(argv[2], cv::IMREAD_COLOR);
  if (imgA.empty() || imgB.empty() || imgC.empty()) {
    cout << "Could not read the input images." << endl;
    return -1;
  }
  int win_size = 10;

  // The first thing we need to do is get the features
  // we want to track.
  //
  vector<cv::Point2f> cornersA, cornersB;
  const int MAX_CORNERS = 500;
  cv::goodFeaturesToTrack(
      imgA,           // Image to track
      cornersA,       // Vector of detected corners (output)
      MAX_CORNERS,    // Keep up to this many corners
      0.01,           // Quality level (fraction of the best corner's score)
      5,              // Min distance between corners
      cv::noArray(),  // Mask
      3,              // Block size
      false,          // true: Harris, false: Shi-Tomasi
      0.04            // Method-specific parameter
  );

  cv::cornerSubPix(
      imgA,                          // Input image
      cornersA,                      // Vector of corners (input and output)
      cv::Size(win_size, win_size),  // Half side length of search window
      cv::Size(-1, -1),              // Half side length of dead zone (-1=none)
      cv::TermCriteria(
          cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,
          20,    // Maximum number of iterations
          0.03   // Minimum change per iteration
      )
  );

  // Call the Lucas Kanade algorithm
  //
  vector<uchar> features_found;
  cv::calcOpticalFlowPyrLK(
      imgA,            // Previous image
      imgB,            // Next image
      cornersA,        // Previous set of corners (from imgA)
      cornersB,        // Next set of corners (from imgB)
      features_found,  // Output vector, each is 1 for tracked
      cv::noArray(),   // Output vector, lists errors (optional)
      cv::Size(win_size * 2 + 1, win_size * 2 + 1),  // Search window size
      5,               // Maximum pyramid level to construct
      cv::TermCriteria(
          cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,
          20,   // Maximum number of iterations
          0.3   // Minimum change per iteration
      )
  );

  // Now make some image of what we are looking at:
  // Note that if you want to track cornersB further, i.e.
  // pass them as input to the next calcOpticalFlowPyrLK,
  // you would need to "compress" the vector, i.e., exclude points for which
  // features_found[i] == false.
  for (int i = 0; i < static_cast<int>(cornersA.size()); ++i) {
    if (!features_found[i]) {
      continue;
    }
    cv::line(
        imgC,                   // Draw onto this image
        cornersA[i],            // Starting here
        cornersB[i],            // Ending here
        cv::Scalar(0, 255, 0),  // This color
        1,                      // This many pixels wide
        cv::LINE_AA             // Draw line in this style
    );
  }

  cv::imshow("ImageA", imgA);
  cv::imshow("ImageB", imgB);
  cv::imshow("LK Optical Flow Example", imgC);
  cv::waitKey(0);
  return 0;
}
```
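The comment before the drawing loop says cornersB must be "compressed" before it can be fed back into cv::calcOpticalFlowPyrLK for the next frame. A minimal sketch of that step, assuming the status vector is the `vector<uchar>` (i.e. `unsigned char`) filled by the call above; keepTrackedPoints is a hypothetical helper, not an OpenCV function, and it works with any point type such as cv::Point2f.

```cpp
#include <cstddef>
#include <vector>

// Drop every point pair whose status entry is 0, keeping prev and next
// index-aligned, so that `next` can serve as prevPts for the next frame.
template <typename Point>
void keepTrackedPoints(std::vector<Point>& prev, std::vector<Point>& next,
                       const std::vector<unsigned char>& status) {
    std::size_t out = 0;
    for (std::size_t i = 0; i < status.size() && i < prev.size() && i < next.size(); ++i) {
        if (!status[i]) continue;  // lost track of this feature
        prev[out] = prev[i];
        next[out] = next[i];
        ++out;
    }
    prev.erase(prev.begin() + static_cast<std::ptrdiff_t>(out), prev.end());
    next.erase(next.begin() + static_cast<std::ptrdiff_t>(out), next.end());
}
```

In a video loop one would call `keepTrackedPoints(cornersA, cornersB, features_found);`, then swap cornersB into cornersA and imgB into imgA before reading the next frame. Compacting in place avoids extra allocations and keeps the pairs aligned for drawing.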

Results

Reproduction

```cpp
#include <opencv2/highgui.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/video/tracking.hpp>  // calcOpticalFlowPyrLK
#include <iostream>
#include <vector>

using namespace std;
using namespace cv;

int main() {
    Mat imgA = imread("example_16-01-imgA.png", cv::IMREAD_GRAYSCALE);
    Mat imgB = imread("example_16-01-imgB.png", cv::IMREAD_GRAYSCALE);
    // Load in color so the green trajectories are visible
    Mat imgC = imread("example_16-01-imgB.png", cv::IMREAD_COLOR);
    if (imgA.empty() || imgB.empty() || imgC.empty()) {
        cerr << "Could not read the input images." << endl;
        return -1;
    }

    // Find feature points in the first frame
    vector<Point2f> ACorners, Bcorners;
    goodFeaturesToTrack(imgA, ACorners, 500, 0.01, 5);

    // Refine the detected corners to subpixel accuracy (epsilon 0.03, as above)
    TermCriteria criteria(TermCriteria::MAX_ITER | TermCriteria::EPS, 20, 0.03);
    cornerSubPix(imgA, ACorners, Size(10, 10), Size(-1, -1), criteria);

    // Match the feature points of the two frames with the pyramid L-K algorithm
    vector<uchar> isFeatureFound;
    calcOpticalFlowPyrLK(imgA, imgB, ACorners, Bcorners, isFeatureFound, noArray());

    // Draw the motion of every successfully tracked feature point
    for (int i{0}; i < (int)ACorners.size(); i++) {
        if (!isFeatureFound[i]) continue;
        line(imgC, ACorners[i], Bcorners[i], Scalar(0, 255, 0), 1, cv::LINE_AA);
    }

    imshow("imgA", imgA);
    imshow("imgB", imgB);
    imshow("imgC", imgC);
    waitKey(0);
    return 0;
}
```

Author: 榴红八色鸫

Link: https://www.cnblogs.com/hezexian/p/16144930.html

License: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 2.5 China Mainland License.
