kubeadm production cluster deployment (1): setting up an external etcd cluster

| IP | Hostname | Role |
| --- | --- | --- |
| 172.16.100.251 | nginx01 | Proxies the apiserver |
| 172.16.100.252 | nginx02 | Proxies the apiserver |
| 172.16.100.254 | apiserver01.xxx.com | VIP address, mainly used for nginx high availability so that the nginx proxy layer is not interrupted |
| 172.16.100.51 | k8s-etcd-01 | etcd cluster node; by default all etcd operations are run on this node |
| 172.16.100.52 | k8s-etcd-02 | etcd cluster node |
| 172.16.100.53 | k8s-etcd-03 | etcd cluster node |
| 172.16.100.31 | k8s-master-01 | Master cluster node; by default all k8s operations are run on this node |
| 172.16.100.32 | k8s-master-02 | Master cluster node |
| 172.16.100.33 | k8s-master-03 | Master cluster node |
| 172.16.100.34 | k8s-master-04 | Master cluster node |
| 172.16.100.35 | k8s-master-05 | Master cluster node |
| 172.16.100.36 | k8s-node-01 | Worker node |
| 172.16.100.37 | k8s-node-02 | Worker node |
| 172.16.100.38 | k8s-node-03 | Worker node |

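Introduction: kubeadm bundles everything needed to deploy Kubernetes. The point to stress is that kubeadm only installs and deploys the cluster components; it does not deploy other services. Some people assume, for example, that kubeadm can install nginx, but that is a workload Kubernetes itself runs and has nothing to do with kubeadm. In a real production environment, if we don't understand how each component works, tasks such as troubleshooting and system upgrades become very hard to carry out.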

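First, etcd can communicate over either http or https (the default in a kubeadm setup). Because etcd is part of the base infrastructure, production environments generally use https for security, with certificates providing encrypted communication, so this etcd deployment uses https as well.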
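To make the https choice concrete, here is a minimal sketch of how a client would address the three etcd members over TLS. The helper names `etcd_endpoints` and `print_health_cmd` are hypothetical; the certificate paths are the ones kubeadm writes under /etc/kubernetes/pki/etcd, and 2379 is etcd's default client port. The command is only printed, not executed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join member IPs into a comma-separated https endpoint list.
etcd_endpoints() {
  local out="" ip
  for ip in "$@"; do
    out="${out:+${out},}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

# Print (do not run) an etcdctl v3 health check that authenticates with client certificates.
print_health_cmd() {
  printf 'ETCDCTL_API=3 etcdctl --cacert %s --cert %s --key %s --endpoints %s endpoint health\n' \
    /etc/kubernetes/pki/etcd/ca.crt \
    /etc/kubernetes/pki/etcd/healthcheck-client.crt \
    /etc/kubernetes/pki/etcd/healthcheck-client.key \
    "$(etcd_endpoints "$@")"
}

print_health_cmd 172.16.100.51 172.16.100.52 172.16.100.53
```

Without the CA, certificate, and key options, a TLS-only etcd member would reject the client, which is the practical difference from a plain http setup.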

First, the certificates:

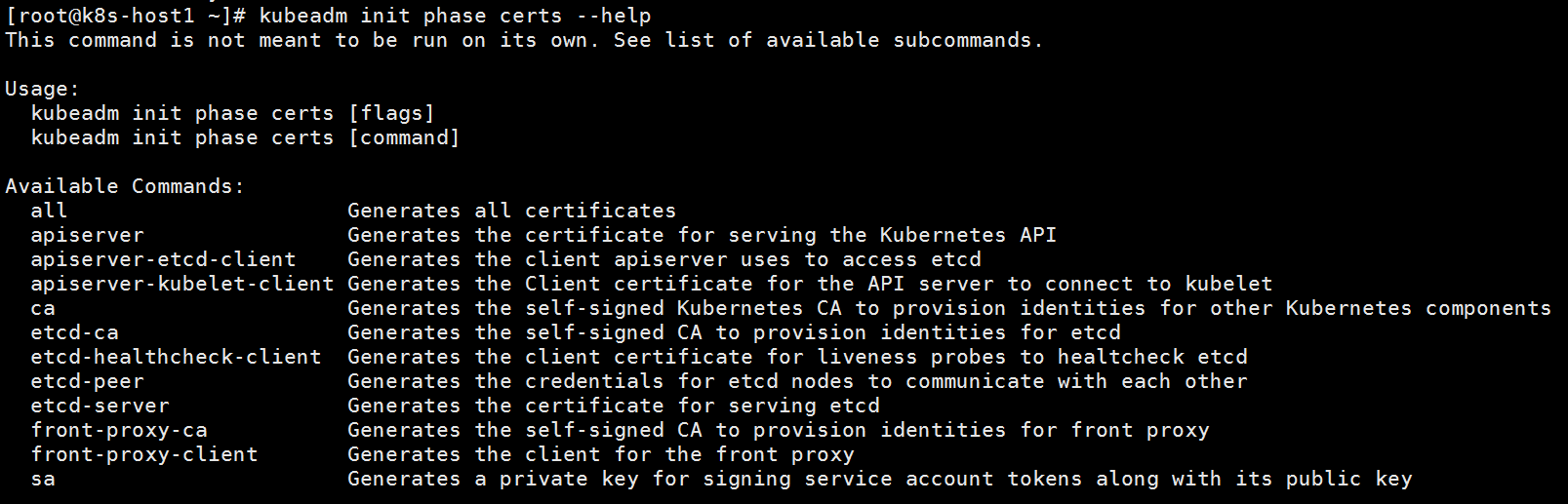

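kubeadm handles generation of all the certificates for etcd and Kubernetes. If you want certificates with a longer validity period, the usual approach is to modify the source, rebuild the kubeadm binary, and keep that binary in your own file store. Here is a recommended article by someone else on the topic.

Changing the certificate validity period: https://blog.51cto.com/lvsir666/2344986?source=dra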

The kubeadm command for creating certificates:

    kubeadm init phase certs --help
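As a rough sketch, the certificate phases that matter for an external etcd cluster can be listed and turned into commands like this. The phase names are the ones used by start.sh later in this post; the helper names `etcd_cert_phases` and `print_cert_commands` are hypothetical, and the commands are only printed so the sketch runs on a machine without kubeadm.

```shell
#!/usr/bin/env bash
# Certificate phases relevant to an external etcd cluster (kubeadm 1.13 naming).
etcd_cert_phases() {
  printf '%s\n' etcd-ca etcd-server etcd-peer etcd-healthcheck-client apiserver-etcd-client
}

# Hypothetical helper: print one kubeadm command per phase for a given config file.
# The CA phase takes no per-host config; the others need the host's SANs from the config.
print_cert_commands() {
  local cfg=$1 phase
  for phase in $(etcd_cert_phases); do
    if [ "$phase" = "etcd-ca" ]; then
      echo "kubeadm init phase certs ${phase}"
    else
      echo "kubeadm init phase certs ${phase} --config=${cfg}"
    fi
  done
}

print_cert_commands /tmp/172.16.100.51/kubeadmcfg.yaml
```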

vim system_initializer.sh

    #!/usr/bin/env bash

    systemctl stop firewalld
    systemctl disable firewalld

    swapoff -a
    sed -i 's/.*swap.*/#&/' /etc/fstab

    setenforce 0
    sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
    sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux
    sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
    sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux
    sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    fs.may_detach_mounts = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    vm.swappiness = 0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.netfilter.nf_conntrack_max=2310720
    EOF
    sysctl --system

    yum install ipvsadm ipset sysstat conntrack libseccomp wget -y

    # Load the IPVS kernel modules that exist on this kernel at boot
    :> /etc/modules-load.d/ipvs.conf
    module=(
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    nf_conntrack_ipv4
    )
    for kernel_module in ${module[@]};do
        /sbin/modinfo -F filename $kernel_module |& grep -qv ERROR && echo $kernel_module >> /etc/modules-load.d/ipvs.conf || :
    done
    systemctl enable --now systemd-modules-load.service

    mkdir -p /etc/yum.repos.d/bak
    mv /etc/yum.repos.d/CentOS* /etc/yum.repos.d/bak
    wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/Centos-7.repo
    wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/epel-7.repo
    wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum clean all

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

    echo "* soft nofile 65536" >> /etc/security/limits.conf
    echo "* hard nofile 65536" >> /etc/security/limits.conf
    echo "* soft nproc 65536" >> /etc/security/limits.conf
    echo "* hard nproc 65536" >> /etc/security/limits.conf
    echo "* soft memlock unlimited" >> /etc/security/limits.conf
    echo "* hard memlock unlimited" >> /etc/security/limits.conf

    # Install the k8s components
    kubeadm reset
    iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
    ipvsadm --clear
    yum remove kubelet* -y
    yum remove kubectl* -y
    yum remove docker-ce*
    mkdir -p /data/kubelet
    ln -s /data/kubelet /var/lib/kubelet
    yum update -y && yum install -y kubeadm-1.13.5* kubelet-1.13.5* kubectl-1.13.5* kubernetes-cni-0.6* --disableexcludes=kubernetes

    # Replace kubeadm

    # Install tools
    yum install chrony vim net-tools -y

    ## Let the cluster support NFS mounts
    yum -y install nfs-utils && yum -y install rpcbind

    # Install ntp for time synchronization
    # NOTE: as written, this crontab line has no schedule fields in front of the command.
    yum install -y ntp
    echo "/usr/sbin/ntpdate cn.ntp.org.cn edu.ntp.org.cn &> /dev/null" >> /var/spool/cron/root

    # Install Docker; pick the Docker version recommended by the Kubernetes docs
    # https://kubernetes.io/docs/setup/cri/
    yum install yum-utils -y
    yum install device-mapper-persistent-data lvm2 -y
    yum-config-manager \
        --add-repo \
        http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum install docker-ce-18.06.3.ce -y

    mkdir /etc/docker
    cat >/etc/docker/daemon.json<<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": ["https://fz5yth0r.mirror.aliyuncs.com"],
      "storage-driver": "overlay2",
      "storage-opts": [
        "overlay2.override_kernel_check=true"
      ],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "1000m",
        "max-file": "50"
      }
    }
    EOF

    # Docker bash completion
    yum install -y epel-release && cp /usr/share/bash-completion/completions/docker /etc/bash_completion.d/
    yum install -y bash-completion

    systemctl enable --now docker.service
    systemctl enable --now kubelet.service
    systemctl start kubelet
    systemctl enable chronyd.service
    systemctl start chronyd.service

    source /usr/share/bash-completion/bash_completion
    source <(kubectl completion bash)
    echo "source <(kubectl completion bash)" >> ~/.bashrc

    # kubectl taint node k8s-host1 node-role.kubernetes.io/master=:NoSchedule

vim base_env_etcd_cluster_init.sh

    #!/usr/bin/env bash

    export HOST0=172.16.100.51
    export HOST1=172.16.100.52
    export HOST2=172.16.100.53

    ETCDHOSTS=(${HOST0} ${HOST1} ${HOST2})
    NAMES=("k8s-etcd-01" "k8s-etcd-02" "k8s-etcd-03")

    sed -i '$a\'$HOST0' k8s-etcd-01' /etc/hosts
    sed -i '$a\'$HOST1' k8s-etcd-02' /etc/hosts
    sed -i '$a\'$HOST2' k8s-etcd-03' /etc/hosts

    # Run kubelet as a service manager for the etcd static pod
    mkdir -p /etc/systemd/system/kubelet.service.d/
    cat << EOF > /etc/systemd/system/kubelet.service.d/20-etcd-service-manager.conf
    [Service]
    ExecStart=
    ExecStart=/usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true --cgroup-driver=systemd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.1
    Restart=always
    EOF

    # hostnamectl set-hostname

    systemctl stop firewalld
    systemctl disable firewalld

    swapoff -a
    sed -i 's/.*swap.*/#&/' /etc/fstab

    setenforce 0
    sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux
    sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
    sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux
    sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    fs.may_detach_mounts = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    vm.swappiness = 0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.netfilter.nf_conntrack_max=2310720
    EOF
    sysctl -p /etc/sysctl.d/k8s.conf

    wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/epel-7.repo
    wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

    echo "* soft nofile 65536" >> /etc/security/limits.conf
    echo "* hard nofile 65536" >> /etc/security/limits.conf
    echo "* soft nproc 65536" >> /etc/security/limits.conf
    echo "* hard nproc 65536" >> /etc/security/limits.conf
    echo "* soft memlock unlimited" >> /etc/security/limits.conf
    echo "* hard memlock unlimited" >> /etc/security/limits.conf

    yum install ipvsadm ipset sysstat conntrack libseccomp wget -y
    yum update -y
    yum install -y kubeadm-1.13.5* kubelet-1.13.5* kubectl-1.13.5* kubernetes-cni-0.6* --disableexcludes=kubernetes

    yum-config-manager \
        --add-repo \
        http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum install docker-ce-18.06.2.ce -y

    mkdir /etc/docker
    cat >/etc/docker/daemon.json<<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "storage-driver": "overlay2",
      "storage-opts": [
        "overlay2.override_kernel_check=true"
      ],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "1000m",
        "max-file": "50"
      }
    }
    EOF

    # Keep Docker's data under /data/docker
    mkdir -p /data/docker
    sed -i 's/ExecStart=\/usr\/bin\/dockerd/ExecStart=\/usr\/bin\/dockerd --graph=\/data\/docker/g' /usr/lib/systemd/system/docker.service

    # Docker bash completion
    systemctl start docker
    yum install -y epel-release && cp /usr/share/bash-completion/completions/docker /etc/bash_completion.d/
    yum install -y bash-completion
    source /usr/share/bash-completion/bash_completion

    docker pull registry.aliyuncs.com/google_containers/etcd:3.2.24

    systemctl enable --now docker
    systemctl enable --now kubelet

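At a glance, you now basically know which certificates etcd needs, so next we create them. Before creating the certificates we need to generate the etcd initialization files, which we get by running base_env_etcd_cluster_init.sh.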
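The listing of base_env_etcd_cluster_init.sh in this post does not show the step that writes the per-host `kubeadmcfg.yaml` files that start.sh reads from /tmp/<ip>/. The following is a minimal sketch of that step, adapted from the official "Set up a High Availability etcd cluster with kubeadm" guide for the v1beta1 config API of kubeadm 1.13. The helper name `write_kubeadmcfg` and the scratch output directory are assumptions, and the author's actual file may contain extra settings (for example an image repository or data directory).

```shell
#!/usr/bin/env bash
# Sketch adapted from the official HA etcd guide (kubeadm.k8s.io/v1beta1, kubeadm 1.13).
ETCDHOSTS=(172.16.100.51 172.16.100.52 172.16.100.53)
NAMES=("k8s-etcd-01" "k8s-etcd-02" "k8s-etcd-03")

# write_kubeadmcfg OUT_DIR: hypothetical helper that writes OUT_DIR/<ip>/kubeadmcfg.yaml
# for every etcd member.
write_kubeadmcfg() {
  local out_dir=$1 i host name cluster=""
  # Build the initial-cluster value: name=https://ip:2380 for every member.
  for i in "${!ETCDHOSTS[@]}"; do
    cluster="${cluster:+${cluster},}${NAMES[$i]}=https://${ETCDHOSTS[$i]}:2380"
  done
  for i in "${!ETCDHOSTS[@]}"; do
    host=${ETCDHOSTS[$i]}
    name=${NAMES[$i]}
    mkdir -p "${out_dir}/${host}"
    cat > "${out_dir}/${host}/kubeadmcfg.yaml" <<EOF
apiVersion: "kubeadm.k8s.io/v1beta1"
kind: ClusterConfiguration
etcd:
    local:
        serverCertSANs:
        - "${host}"
        peerCertSANs:
        - "${host}"
        extraArgs:
            initial-cluster: ${cluster}
            initial-cluster-state: new
            name: ${name}
            listen-peer-urls: https://${host}:2380
            listen-client-urls: https://${host}:2379
            advertise-client-urls: https://${host}:2379
            initial-advertise-peer-urls: https://${host}:2380
EOF
  done
}

# Written to a scratch directory here; start.sh in this post expects the files under /tmp.
OUT_DIR=$(mktemp -d)
write_kubeadmcfg "$OUT_DIR"
cat "${OUT_DIR}/172.16.100.51/kubeadmcfg.yaml"
```

Each member gets its own file because the certificate SANs, the member name, and the listen and advertise URLs differ per host, while `initial-cluster` is identical everywhere.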

vim start.sh (change the IPs to match your environment)

    #!/usr/bin/env bash
    # ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.0.104

    ## Reference links
    # https://kubernetes.io/docs/setup/independent/setup-ha-etcd-with-kubeadm/
    # Masters using an external etcd cluster
    # https://kubernetes.io/docs/setup/independent/high-availability/

    export HOST0=172.16.100.51
    export HOST1=172.16.100.52
    export HOST2=172.16.100.53

    yum install -y wget

    # Initialize the kubeadm config
    mkdir -p /data/etcd
    curl -s https://gitee.com/hewei8520/File/raw/master/1.13.5/initializer_etcd_cluster/system_initializer.sh | bash
    curl -s https://gitee.com/hewei8520/File/raw/master/1.13.5/initializer_etcd_cluster/base_env_etcd_cluster_init.sh | bash

    wget https://github.com/qq676596084/QuickDeploy/raw/master/1.13.5/bin/kubeadm && chmod +x kubeadm

    # Generate the etcd certificates on the first etcd node
    ./kubeadm init phase certs etcd-ca
    ./kubeadm init phase certs etcd-server --config=/tmp/${HOST0}/kubeadmcfg.yaml
    ./kubeadm init phase certs etcd-peer --config=/tmp/${HOST0}/kubeadmcfg.yaml
    ./kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST0}/kubeadmcfg.yaml
    ./kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST0}/kubeadmcfg.yaml

    systemctl restart kubelet
    sleep 3
    kubeadm init phase etcd local --config=/tmp/${HOST0}/kubeadmcfg.yaml

    USER=root
    for HOST in ${HOST1} ${HOST2}
    do
        scp -r /tmp/${HOST}/* ${USER}@${HOST}:
        ssh ${USER}@${HOST} 'yum install -y wget'
        ssh ${USER}@${HOST} 'mkdir -p /etc/kubernetes/'
        scp -r /etc/kubernetes/pki ${USER}@${HOST}:/etc/kubernetes/
        # Initialize the system: install dependencies and Docker
        ssh ${USER}@${HOST} 'curl -s https://gitee.com/hewei8520/File/raw/master/1.13.5/initializer_etcd_cluster/system_initializer.sh | bash'
        ssh ${USER}@${HOST} 'systemctl restart kubelet'
        sleep 3
        ssh ${USER}@${HOST} 'kubeadm init phase etcd local --config=/root/kubeadmcfg.yaml'
    done

    sleep 5
    docker run --rm -it \
        --net host \
        -v /etc/kubernetes:/etc/kubernetes registry.aliyuncs.com/google_containers/etcd:3.2.24 etcdctl \
        --cert-file /etc/kubernetes/pki/etcd/peer.crt \
        --key-file /etc/kubernetes/pki/etcd/peer.key \
        --ca-file /etc/kubernetes/pki/etcd/ca.crt \
        --endpoints https://${HOST0}:2379 cluster-health

Then just wait patiently for it to finish.
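Instead of relying on fixed `sleep` calls while the etcd pods come up, the health check can be polled. This is a minimal sketch with a hypothetical `retry` helper; the attempt count and delay are arbitrary, and `true` stands in for the real check so the sketch runs anywhere.

```shell
#!/usr/bin/env bash
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND...
# Hypothetical helper: re-run COMMAND until it succeeds or MAX_ATTEMPTS is reached.
retry() {
  local max=$1 delay=$2 attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "gave up after ${attempt} attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Stand-in check; on an etcd node you would pass the etcdctl cluster-health
# invocation from start.sh (without the -it flags) instead of `true`.
retry 5 1 true && echo "cluster check passed"
```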

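Note:

If you run kubeadm reset while initializing k8s, it is recommended that you reset your etcd cluster manually: delete the data directory and restart the kubelet service by hand. Once the service is available again, you are done.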
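The manual reset described in the note can be sketched as follows, to be run on each etcd node. The helper names `run` and `reset_etcd_member` are hypothetical, and the data directory is an assumption: /var/lib/etcd is kubeadm's default, while start.sh in this post also creates /data/etcd, so check which one your configuration uses. By default the sketch only prints what it would do; set `DRY_RUN=0` to execute.

```shell
#!/usr/bin/env bash
# run COMMAND...: print the command in dry-run mode (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

# reset_etcd_member DATA_DIR: hypothetical helper that wipes the etcd data
# directory and restarts kubelet so the etcd static pod starts from scratch.
reset_etcd_member() {
  local data_dir=$1
  # Refuse obviously dangerous targets before building an rm command.
  case "$data_dir" in
    ""|"/") echo "refusing to wipe '${data_dir}'" >&2; return 1 ;;
  esac
  run rm -rf -- "$data_dir"
  run systemctl restart kubelet
}

# Assumed data directory; adjust to your configuration.
reset_etcd_member /var/lib/etcd
```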

References:

https://kubernetes.io/docs/setup/independent/setup-ha-etcd-with-kubeadm/

https://kubernetes.io/docs/setup/independent/high-availability/