Kubernetes Cluster Resource Monitoring


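For Kubernetes, monitoring covers two main targets: the cluster itself and the Pods. Cluster monitoring is mainly about node resources. Knowing each node's resource utilization and workload tells you whether the cluster needs more or fewer nodes. For node counts, track how many nodes are available and how many are unavailable, which helps estimate the cluster's cost. The number of running Pods shows whether the available nodes are sufficient, and whether the remaining nodes can still carry the cluster's load when some nodes go down.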
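Counting available versus unavailable nodes is easy to script. The sketch below is a bash function (the name `node_status_summary` is hypothetical) that reads `kubectl get nodes --no-headers` output on stdin and assumes STATUS is the second column, as in the default kubectl output.

```bash
# Hypothetical helper: summarize node availability from
# `kubectl get nodes --no-headers` output read on stdin.
# Assumes column 2 is STATUS. "Ready" and "Ready,<something>"
# (e.g. Ready,SchedulingDisabled) count as ready; anything else
# (NotReady, Unknown, ...) counts as not ready.
node_status_summary() {
  awk '
    NF == 0 { next }                                  # ignore blank lines
    $2 == "Ready" || $2 ~ /^Ready,/ { ready++; next }
    { not_ready++ }
    END { printf "ready=%d not_ready=%d\n", ready, not_ready }
  '
}

# Usage (needs a working kubeconfig):
#   kubectl get nodes --no-headers | node_status_summary
```

A cordoned node (`Ready,SchedulingDisabled`) is counted as ready here because it is healthy, even though it accepts no new Pods.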

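Pod monitoring covers three aspects. First, Kubernetes metrics, mainly the number of running Pod instances compared with the expected (desired) number. Second, container metrics: the CPU, memory, and network usage of each Pod. Third, application metrics, which are specific to the business.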
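The first of these, actual versus desired instances, can be checked per Deployment. This is a sketch that assumes the workloads are Deployments; the helper name `replicas_behind` is hypothetical, and its input is produced by the kubectl jsonpath query in the usage comment.

```bash
# Hypothetical helper: list Deployments whose ready replicas are below
# the desired count. Input on stdin, one line per Deployment:
#   <namespace>/<name> <desired> <ready>
# kubectl leaves readyReplicas empty when it is 0, so a missing third
# field is treated as 0.
replicas_behind() {
  awk 'NF >= 2 {
    ready = (NF >= 3) ? $3 : 0
    if (ready + 0 < $2 + 0)
      printf "%s desired=%d ready=%d\n", $1, $2, ready
  }'
}

# Usage:
#   kubectl get deployments --all-namespaces \
#     -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name} {.spec.replicas} {.status.readyReplicas}{"\n"}{end}' \
#     | replicas_behind
```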

Kubernetes monitoring solutions

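| Solution | Alerting | Characteristics | Best suited for |
|---|---|---|---|
| Zabbix | Y | Requires a lot of custom work | Most internet companies |
| open-falcon | Y | Split into fine-grained functional modules, which makes it more complex | System and application monitoring |
| cAdvisor+Heapster+InfluxDB+Grafana | Y | Simple and easy to use | Container monitoring |
| cAdvisor/exporter+Prometheus+Grafana | Y | Highly extensible | Full-stack monitoring of containers, applications, and hosts |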

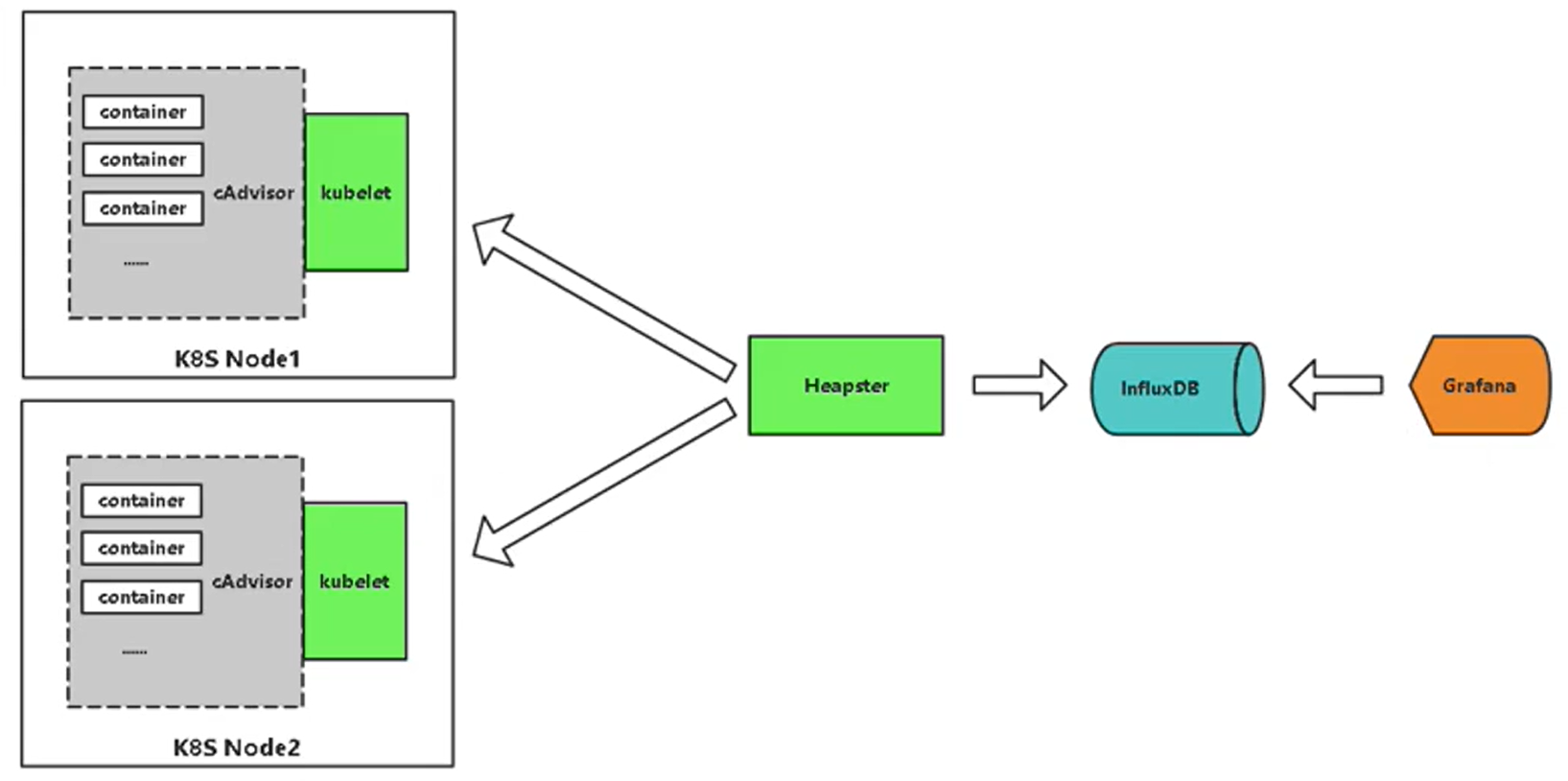

cAdvisor+Heapster+InfluxDB+Grafana

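cAdvisor is an open-source container monitoring tool from Google that collects metrics for both containers and their host. Heapster, also open-sourced by Google, aggregates the data collected by cAdvisor. cAdvisor has no storage of its own and only collects data in real time, so it must be paired with persistent storage: Heapster stores the data from each node's cAdvisor in InfluxDB. cAdvisor is built into the kubelet, so the data it collects can be accessed on any node whose kubelet has its monitoring port enabled.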

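The kubelet exposes a port that serves the metrics collected by cAdvisor. Heapster runs inside Kubernetes as a Pod; it collects the cAdvisor data from every node and stores it in InfluxDB. InfluxDB is a time-series database, well suited to queries filtered by time. Grafana then displays the data as dashboards.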
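To look at the raw cAdvisor data the kubelet serves, the kubelet can be reached through the apiserver's node proxy. This sketch assumes Kubernetes 1.7 or later, where the kubelet serves cAdvisor metrics in Prometheus text format at `/metrics/cadvisor`; `cadvisor_metric` is a hypothetical helper that filters one metric out of that text, and `<node-name>` is a placeholder.

```bash
# Hypothetical helper: print the samples of a single metric from
# Prometheus text-format input on stdin. "# HELP" / "# TYPE" comment
# lines are skipped. A sample matches if it is "<name>{labels} value"
# or "<name> value"; longer names sharing the prefix do not match.
cadvisor_metric() {
  local name="$1"
  awk -v m="$name" '
    /^#/ { next }
    index($0, m "{") == 1 || $1 == m { print }
  '
}

# Usage:
#   kubectl get --raw /api/v1/nodes/<node-name>/proxy/metrics/cadvisor \
#     | cadvisor_metric container_memory_working_set_bytes
```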

Deploying InfluxDB

InfluxDB is deployed as a Deployment in the kube-system namespace.

```yaml
# influxdb.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-influxdb
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: influxdb
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: influxdb
    spec:
      containers:
      - name: influxdb
        image: registry.cn-shenzhen.aliyuncs.com/cn-k8s/heapster-influxdb-amd64:v1.5.2
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      volumes:
      - name: influxdb-storage
        # emptyDir lives only as long as the Pod: data is lost if the Pod is deleted or rescheduled
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-influxdb
  name: monitoring-influxdb
  namespace: kube-system
spec:
  ports:
  - port: 8086        # InfluxDB HTTP API, used by Heapster and Grafana
    targetPort: 8086
  selector:
    k8s-app: influxdb
```

```
[root@k8s01 scripts]# kubectl create -f influxdb.yaml
deployment.apps/monitoring-influxdb created
service/monitoring-influxdb created
[root@k8s01 scripts]# kubectl get pods -n kube-system monitoring-influxdb-64f46fdcf-5jk8k
NAME                                  READY   STATUS    RESTARTS   AGE
monitoring-influxdb-64f46fdcf-5jk8k   1/1     Running   0          18s
[root@k8s01 scripts]#
```

Deploying Heapster

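Heapster first gets the list of all Nodes in the cluster from the apiserver, then fetches metrics from the kubelet on each Node; the kubelet in turn gets its data from cAdvisor. All collected data is pushed to the storage backend (sink) configured for Heapster, from where it can be visualized.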

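Because Heapster reads data from the apiserver, it needs to be authorized. Here its ServiceAccount is bound to cluster-admin, the built-in cluster administrator role.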

```yaml
# heapster.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
# rbac.authorization.k8s.io/v1 is GA since Kubernetes 1.8; v1beta1 was removed in 1.22
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: heapster
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: heapster
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: registry.cn-shenzhen.aliyuncs.com/cn-k8s/heapster-amd64:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        # discover Nodes through the apiserver, then scrape each Node's kubelet
        - --source=kubernetes:https://kubernetes.default
        # write metrics to the monitoring-influxdb Service
        - --sink=influxdb:http://monitoring-influxdb:8086
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80          # Service port
    targetPort: 8082  # Heapster's HTTP port in the container
  selector:
    k8s-app: heapster
```

```
[root@k8s01 scripts]# kubectl create -f heapster.yaml
serviceaccount/heapster created
clusterrolebinding.rbac.authorization.k8s.io/heapster created
deployment.apps/heapster created
service/heapster created
[root@k8s01 scripts]# kubectl get pods -n kube-system heapster-76d7cbbb56-lk27t
NAME                        READY   STATUS    RESTARTS   AGE
heapster-76d7cbbb56-lk27t   1/1     Running   0          54s
[root@k8s01 scripts]#
```
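A Running Heapster Pod does not by itself prove that metrics are reaching InfluxDB; querying InfluxDB directly does. This sketch assumes Heapster's InfluxDB sink layout (database `k8s` by default, one measurement per metric such as `cpu/usage_rate`, a `value` field, and `type` and `nodename` tags). These names are not shown in this article and should be checked against the Heapster version in use. `heapster_node_query` is a hypothetical helper that only builds the InfluxQL string.

```bash
# Hypothetical helper: build an InfluxQL query that averages one
# Heapster metric per node over a recent time window (default 5m).
# Assumed schema: measurement = metric name, field "value",
# tag "type" = 'node' for node-level series, tag "nodename".
heapster_node_query() {
  local measurement="$1" window="${2:-5m}"
  printf 'SELECT mean("value") FROM "%s" WHERE "type" = '\''node'\'' AND time > now() - %s GROUP BY "nodename"\n' \
    "$measurement" "$window"
}

# Usage (run the port-forward in another terminal; the Pod name comes
# from the InfluxDB output above):
#   kubectl -n kube-system port-forward monitoring-influxdb-64f46fdcf-5jk8k 8086:8086
#   curl -sG http://127.0.0.1:8086/query --data-urlencode "db=k8s" \
#     --data-urlencode "q=$(heapster_node_query cpu/usage_rate 5m)"
```

An empty result set usually means Heapster has not written anything yet, so its logs (`kubectl -n kube-system logs <heapster-pod>`) are the next place to look.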

Deploying Grafana

```yaml
# grafana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: grafana
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: registry.cn-shenzhen.aliyuncs.com/cn-k8s/heapster-grafana-amd64:v5.0.4
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        # anonymous users get the Admin role: convenient for testing, not for production
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          value: /
      volumes:
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # NodePort: the Service is reachable on a port opened on every node
  type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
```

```
[root@k8s01 scripts]# kubectl create -f grafana.yaml
deployment.apps/monitoring-grafana created
service/monitoring-grafana created
[root@k8s01 scripts]# kubectl get pods -n kube-system monitoring-grafana-8546b578df-fbckb
NAME                                  READY   STATUS    RESTARTS   AGE
monitoring-grafana-8546b578df-fbckb   1/1     Running   0          52s
[root@k8s01 scripts]#
```

Deployment complete

```
[root@k8s01 scripts]# kubectl get pods,svc -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
pod/coredns-6d8cfdd59d-8flfs              1/1     Running   2          47h
pod/heapster-76d7cbbb56-lk27t             1/1     Running   0          12m
pod/kube-flannel-ds-amd64-2pl7k           1/1     Running   10         7d23h
pod/kube-flannel-ds-amd64-8b2rz           1/1     Running   1          30h
pod/kube-flannel-ds-amd64-jtwwr           1/1     Running   5          8d
pod/monitoring-grafana-8546b578df-fbckb   1/1     Running   0          110s
pod/monitoring-influxdb-64f46fdcf-5jk8k   1/1     Running   0          18m

NAME                          TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
service/heapster              ClusterIP   10.0.0.190   <none>        80/TCP          12m
service/kube-dns              ClusterIP   10.0.0.2     <none>        53/UDP,53/TCP   8d
service/monitoring-grafana    NodePort    10.0.0.81    <none>        80:31920/TCP    110s
service/monitoring-influxdb   ClusterIP   10.0.0.148   <none>        8086/TCP        18m
[root@k8s01 scripts]#
```
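The check done by eye on the output above (every Pod `Running`, with all of its containers ready) can be scripted. A minimal sketch; `check_all_running` is a hypothetical name, and it assumes the default `kubectl get pods --no-headers` column order NAME READY STATUS.

```bash
# Hypothetical helper: read `kubectl get pods --no-headers` output on
# stdin, print every Pod that is not Running or whose READY column
# (ready/total containers, e.g. "1/1") is incomplete, and exit non-zero
# if any such Pod exists. Pods of finished Jobs ("Completed") are also
# reported.
check_all_running() {
  awk '
    NF == 0 { next }
    {
      split($2, r, "/")                 # READY column, e.g. "1/1"
      if ($3 != "Running" || r[1] != r[2]) {
        print "not ready: " $1
        bad = 1
      }
    }
    END { exit bad }
  '
}

# Usage:
#   kubectl get pods -n kube-system --no-headers | check_all_running && echo "all Pods are Running"
```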

Grafana is then reachable through NodePort 31920 on any node, e.g. http://<node-IP>:31920.
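The NodePort is the number after the colon in the PORT(S) column: `80:31920/TCP` maps Service port 80 to NodePort 31920. `nodeport_of` is a hypothetical helper that extracts it from that column; asking kubectl directly with `kubectl -n kube-system get svc monitoring-grafana -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number without any text parsing.

```bash
# Hypothetical helper: extract the (first) NodePort from a PORT(S) value
# as printed by `kubectl get svc`, format <port>:<nodePort>/<protocol>.
# Prints nothing for Services without a NodePort (e.g. "8086/TCP").
nodeport_of() {
  local ports="$1"
  case "$ports" in
    *:*/*)
      ports="${ports#*:}"              # drop "<port>:"
      printf '%s\n' "${ports%%/*}"     # drop "/<protocol>..." and anything after
      ;;
  esac
}

# Usage (<node-ip> is a placeholder for any node's address):
#   ports=$(kubectl -n kube-system get svc monitoring-grafana --no-headers | awk '{print $5}')
#   curl -I "http://<node-ip>:$(nodeport_of "$ports")/"
```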

Zhejiang Public Network Security Filing No. 33010602011771
