Python Multithreading and Multiprocessing

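Do not treasure your robe of golden thread; treasure instead the days of your youth.

When a flower is ready to be picked, pick it at once; do not wait until the flowers are gone and snap an empty branch.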

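Contents:

- The paramiko module
- Differences between processes and threads
- The Python GIL (Global Interpreter Lock)
- Multithreading
  - Syntax
  - join
  - Thread locks: Lock / RLock / Semaphore
  - Turning threads into daemon threads
  - Event
  - queue
  - The producer-consumer model
- Multiprocessing
  - Syntax
  - join
  - Process Queue
  - Process Pipe
  - Process Manager
  - Process synchronization
  - Process pools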

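1. The paramiko module

Installation: if pip is already installed, run `pip install paramiko`.

Using paramiko to run commands over SSH and return their output

Uploading and downloading files: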

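```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import paramiko

# Transport takes a (host, port) tuple; passing the port as a second
# positional argument would set default_window_size instead
transport = paramiko.Transport(("192.168.16.87", 22))
transport.connect(username="root", password="admin123")

sftp = paramiko.SFTPClient.from_transport(transport)
# put(local_path, remote_path): upload a file; the remote path must include the file name
sftp.put("rsa.txt", "/data/rsa.txt")
# get(remote_path, local_path): download a file from the server
# sftp.get("/root/.ssh/id_rsa", "id_rsa_1")

transport.close()
```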

Uploading and downloading files, key-based authentication version:

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import paramiko

private_key = paramiko.RSAKey.from_private_key_file("id_rsa_1")  # file that holds the private key

transport = paramiko.Transport(("192.168.16.87", 22))  # (host, port) tuple
transport.connect(username="root", pkey=private_key)

sftp = paramiko.SFTPClient.from_transport(transport)
# put(local_path, remote_path): upload a file; the remote path must include the file name
sftp.put("rsa.txt", "/data/python6term/rsa.txt")
# get(remote_path, local_path): download a file from the server
# sftp.get("/root/.ssh/id_rsa", "id_rsa_1")

transport.close()
```

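2. Threads and processes

2.1 What is a thread?

A thread is the smallest unit of execution that the operating system can schedule (the smallest unit of a program's execution flow). A thread lives inside a process and is the unit that actually does the work there. Each thread is a single sequential flow of control within its process. A process can run multiple threads concurrently, with each thread performing a different task.

A standard thread consists of a thread ID, a program counter (PC), a register set, and a stack. A thread is an entity within a process, and it is the basic unit that the system independently schedules and dispatches. A thread owns almost no system resources of its own, only the few it needs while running. It does, however, share all the resources owned by its process with the other threads in that process.

One thread can create and cancel another, and multiple threads in the same process can execute concurrently. Because threads constrain one another, a thread's execution proceeds intermittently rather than continuously. A thread has three basic states:

- ready: the thread has everything it needs and is logically runnable, but is waiting for a processor;
- running: the thread holds a processor and is executing;
- blocked: the thread is waiting for an event (such as a semaphore) and logically cannot execute.

Every program has at least one thread. If a program has only one thread, that thread is the program itself.

In short, a thread is a single sequential flow of control within a program. It is a relatively independent, schedulable unit of execution inside a process, and the basic unit to which the system assigns CPU time. Running several threads at once within a single program to do different work is called multithreading.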

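2.2 What is a process?

A process is one run of a program over a particular set of data. It is the basic unit of resource allocation and scheduling in the system, and the foundation of an operating system's structure.

In early, process-oriented computer designs, the process was the basic executing entity of a program. In modern, thread-oriented designs, the process is a container for threads. A program is a description of instructions, data, and how they are organized; a process is the running instance of that program.

A process covers access to various resources, memory management, use of network interfaces, and so on. In other words, the collection that manages all of these resources can be called a process.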

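2.3 Differences between threads and processes

(1) Threads share the same memory space; each process has its own independent memory.

(2) Threads of the same process can communicate directly; two processes that want to communicate must go through an intermediary.

(3) Creating a new thread is simple; creating a new process requires cloning its parent process.

(4) A thread can control and operate the other threads in the same process; a process can only operate on its own child processes.

(5) Changes to the main thread (such as its priority) may affect the other threads; changes to a parent process do not affect its child processes.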
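Point (1) can be seen directly with a small threading sketch. Every thread appends to the same module-level list, and afterwards the main thread sees all of the appends. The names `shared`, `append_lock` and `worker` are illustrative and do not come from the original text.

```python
import threading

shared = []                     # one list object, visible to every thread in this process
append_lock = threading.Lock()  # guards the shared list while threads write to it

def worker(n):
    # each thread writes into the same memory the main thread reads
    with append_lock:
        shared.append(n)

threads = [threading.Thread(target=worker, args=(i,)) for i in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(shared))  # [0, 1, 2, 3, 4]
```

A child process started with multiprocessing would instead work on its own copy of such a list. That is why the multiprocessing sections rely on Queue, Pipe and Manager to move or share data.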

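3. Python GIL (Global Interpreter Lock)

No matter how many threads you start or how many CPUs you have, Python calmly lets only one thread execute Python bytecode at any given moment. So what is the point of multithreading?

The first thing to be clear about is that the GIL is not a feature of the Python language. It is a concept introduced by one implementation of the Python interpreter, CPython.

An analogy helps. C++ is a language (syntax) standard, and the same code can be compiled into executables by different compilers, such as GCC, Intel C++ and Visual C++. Python works the same way: one piece of code can be executed by different Python implementations such as CPython, PyPy and Jython. Jython, for example, has no GIL.

However, CPython is the default implementation in most environments. As a result, many people treat CPython as if it were Python and blame the GIL on the Python language itself. So to be clear up front: the GIL is not a feature of Python, and Python could perfectly well do without it.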

more: http://www.dabeaz.com/python/UnderstandingGIL.pdf
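The GIL matters most for CPU-bound code. A blocking call such as `time.sleep`, or a wait on a socket or file, releases the GIL, so I/O-bound threads still overlap in time. The following is a minimal sketch assuming CPython. The 0.2 s delay and the count of five threads are arbitrary choices, and `io_task` is an illustrative name.

```python
import threading
import time

def io_task():
    # time.sleep releases the GIL, so other threads can run in the meantime
    time.sleep(0.2)

start = time.perf_counter()
threads = [threading.Thread(target=io_task) for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.perf_counter() - start

# roughly 0.2 s rather than 5 * 0.2 = 1.0 s, because the sleeps overlap
print("elapsed: %.2f s" % elapsed)
```

For CPU-bound work, the same pattern with threads gives little or no speedup in CPython. That is the case the multiprocessing module is meant for.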

4. The threading module

4.1 Starting threads, method 1 (passing a target function):

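```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import threading
import time

def func(name):
    print("hello", name)
    time.sleep(3)

t1 = threading.Thread(target=func, args=("alex",))  # args must be a tuple, hence the trailing comma
t2 = threading.Thread(target=func, args=("zingp",))

t1.start()  # runs concurrently
t2.start()  # runs concurrently

# Calling the function directly runs serially instead:
# func("alex")   # runs first
# func("zingp")  # runs after the first call returns
```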

Starting threads, method 2 (subclassing Thread):

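```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import threading
import time

# Thread subclass: override run() with the code the thread should execute
class MyThread(threading.Thread):
    def __init__(self, name):
        super().__init__()
        self.name = name  # Thread.name is a settable property; reused here for the greeting

    def run(self):
        print("hello", self.name)
        time.sleep(3)

t1 = MyThread("alex")
t2 = MyThread("zingp")

t1.start()  # runs concurrently; start() calls run() in the new thread
t2.start()  # runs concurrently
```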
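`Thread.start()` does not return the value produced by `run()`. With the subclass style, one common way to get a result back is to store it on the instance and read it after `join()`. In this sketch, the class `SquareThread` and its attribute `result` are hypothetical names chosen for illustration.

```python
import threading

class SquareThread(threading.Thread):
    def __init__(self, n):
        super().__init__()
        self.n = n
        self.result = None  # hypothetical attribute used to hand the result back

    def run(self):
        # run() executes in the new thread once start() is called
        self.result = self.n * self.n

workers = [SquareThread(i) for i in range(4)]
for w in workers:
    w.start()
for w in workers:
    w.join()  # after join() the thread has finished, so result is set

results = [w.result for w in workers]
print(results)  # [0, 1, 4, 9]
```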

4.2. join(): the main program (main thread) waits for the other threads to finish

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import threading
import time

def func(name):
    print("i am ", name)
    time.sleep(3)
    print("this thread done...")

start_time = time.time()

# The main thread and the 50 threads it starts all run concurrently and
# independently: effectively 51 threads at once
res = []
for i in range(50):
    t = threading.Thread(target=func, args=(i,))
    t.start()
    res.append(t)  # keep each started thread so it can be joined later

for j in res:  # iterate over the 50 started threads
    j.join()   # block until this thread has finished

print("all threads have finished...", threading.current_thread())
print("total time:", time.time() - start_time)
```
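`join()` also accepts a `timeout` in seconds. Because `join()` returns `None` whether or not the thread finished, `is_alive()` is how you find out. This is a minimal sketch; the durations and the name `slow` are made up for illustration.

```python
import threading
import time

def slow():
    time.sleep(0.5)

t = threading.Thread(target=slow)
t.start()

t.join(timeout=0.1)           # wait at most 0.1 s
still_running = t.is_alive()  # True: the thread needs about 0.5 s
t.join()                      # now wait until it really finishes
finished = not t.is_alive()

print(still_running, finished)  # True True
```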

4.3 Daemon: setting daemon threads. The program waits for non-daemon threads to finish before exiting, but it does not wait for daemon threads.

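```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import threading
import time

def func(name):
    print("i am ", name)
    time.sleep(1)
    print("this thread done...")

start_time = time.time()

# The main thread and the 50 threads it starts all run concurrently
for i in range(50):
    t = threading.Thread(target=func, args=(i,))
    # Mark the thread as a daemon (must be done before start()). The program exits
    # once all non-daemon threads end, without waiting for daemon threads.
    # t.daemon = True replaces setDaemon(True), which is deprecated since Python 3.10.
    t.daemon = True
    t.start()

# Only the main thread is non-daemon, so the program exits right after these prints
# and the daemon threads usually never reach "this thread done..."
print("main thread done...", threading.current_thread(), threading.active_count())
print("total time:", time.time() - start_time)
```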
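Since Python 3.10, `setDaemon()` has been deprecated in favour of the `daemon` attribute or the `daemon=` argument of `Thread`. A thread that does not set the flag explicitly inherits it from the thread that created it. This sketch shows the inheritance; the function names and `child_flags` are illustrative.

```python
import threading

child_flags = []

def child():
    pass

def parent():
    # a thread created inside a daemon thread is a daemon by default
    c = threading.Thread(target=child)
    child_flags.append(c.daemon)
    c.start()
    c.join()

p = threading.Thread(target=parent, daemon=True)  # same effect as p.daemon = True
p.start()
p.join()

print(p.daemon, child_flags)  # True [True]
```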

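4.4 Thread locks (mutex)

A process can start multiple threads, and those threads share the memory space of their parent process. This means every thread can access the same data. What happens if two threads try to modify the same piece of data at the same time?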

```python
import time
import threading

def addNum():
    global num  # every thread works on this same global variable
    print('--get num:', num)
    time.sleep(1)
    num -= 1  # decrement the shared variable (not atomic)

num = 100  # the shared variable
thread_list = []
for i in range(100):
    t = threading.Thread(target=addNum)
    t.start()
    thread_list.append(t)

for t in thread_list:  # wait for all threads to finish
    t.join()

print('final num:', num)
```

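Normally num should end up as 0. If you run this a few times on Python 2.7, however, you will find that the final value is not always 0. Why does the result differ from run to run?

Suppose two threads, A and B, both want to decrement num. Because they run concurrently, both may read the initial value num = 100 before either has written its result back. A computes 99, B also computes 99, and both assign 99 to num, so one decrement is lost.

The fix is simple. Whenever a thread is about to modify shared data, it takes a lock first. No other thread can then modify that data until the first thread has finished and released the lock.

*Note: on Python 3.x this demo usually prints 0, but not because 3.x adds a lock automatically. `num -= 1` is still a non-atomic read-modify-write; the 3.x interpreter just rarely switches threads at exactly that point. The lost update can still happen, so always protect shared data with a lock.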
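Whatever the interpreter version, `num -= 1` is not a single step. CPython compiles it into separate load, subtract and store bytecode instructions, and a thread switch can occur between them. The standard `dis` module makes this visible. Exact opcode names differ between versions: for example, the subtraction is `INPLACE_SUBTRACT` before 3.11 and `BINARY_OP` from 3.11 on. `sub_one` is an illustrative name.

```python
import dis

num = 100

def sub_one():
    global num
    num -= 1  # looks atomic, but is a read-modify-write sequence

dis.dis(sub_one)  # print the bytecode
opnames = [ins.opname for ins in dis.get_instructions(sub_one)]
print("LOAD_GLOBAL" in opnames, "STORE_GLOBAL" in opnames)  # True True
```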

Locked version:

```python
import time
import threading

def addNum():
    global num  # every thread works on this same global variable
    print('--get num:', num)
    time.sleep(1)
    lock.acquire()  # take the lock before modifying the data
    num -= 1        # decrement the shared variable
    lock.release()  # release the lock after the modification

num = 100  # the shared variable
thread_list = []
lock = threading.Lock()  # create a global lock
for i in range(100):
    t = threading.Thread(target=addNum)
    t.start()
    thread_list.append(t)

for t in thread_list:  # wait for all threads to finish
    t.join()

print('final num:', num)
```
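`Lock` also supports the `with` statement. The lock is acquired on entry and released on exit, even if the block raises an exception, so a forgotten `release()` cannot leave other threads blocked forever. This sketch applies the same counter idea with `with`; the iteration counts and the names `counter`, `counter_lock` and `add_many` are arbitrary.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def add_many(times):
    global counter
    for _ in range(times):
        with counter_lock:  # acquire(); ...; release(), even on exceptions
            counter += 1

threads = [threading.Thread(target=add_many, args=(1000,)) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 10000
```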

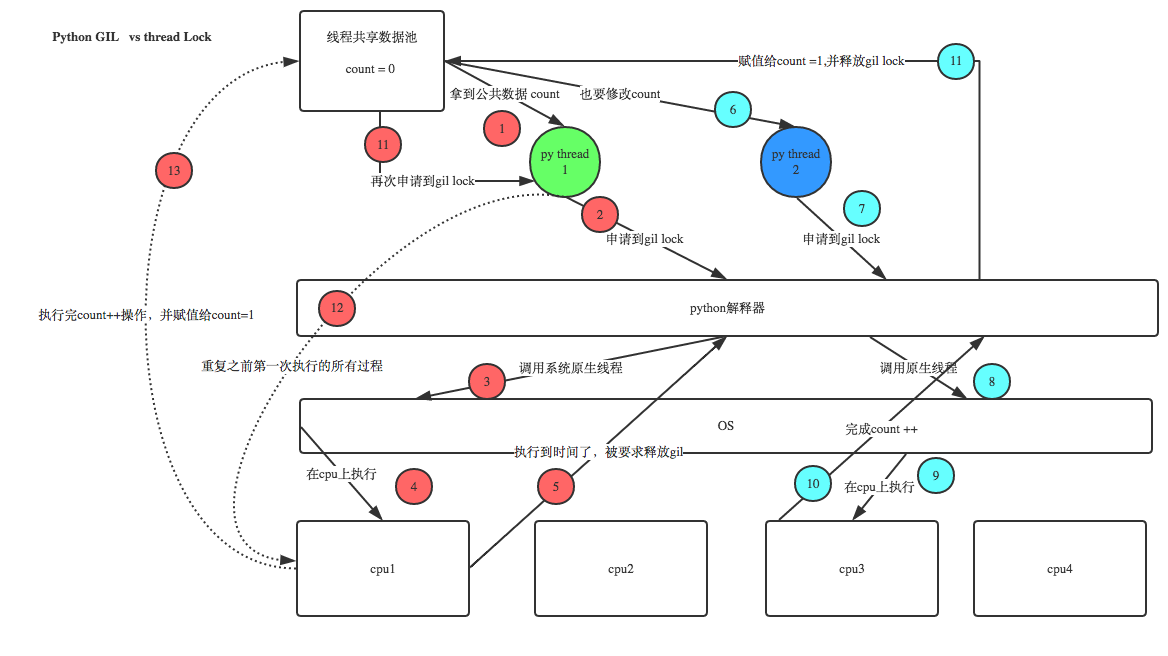

GIL VS Lock

Python已经有一个GIL来保证同一时间只能有一个线程来执行了,为什么这里还需要lock? 注意啦,这里的lock是用户级的lock,跟那个GIL没关系 ,具体看图:

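RLock (recursive lock)

Put simply, an RLock is used when a larger locked section contains smaller locked sections, so the same thread must be able to acquire a lock it already holds. In the code below, run3() holds the lock while calling run1() and run2(), and both of those acquire the same lock again. With a plain Lock, that nested acquire would deadlock.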

```python
import threading

def run1():
    global num
    print("grab the first part data")
    lock.acquire()
    num += 1
    lock.release()
    return num

def run2():
    global num2
    print("grab the second part data")
    lock.acquire()
    num2 += 1
    lock.release()
    return num2

def run3():
    lock.acquire()  # outer lock; run1() and run2() acquire it again
    res = run1()
    print('--------between run1 and run2-----')
    res2 = run2()
    lock.release()
    print(res, res2)

num, num2 = 0, 0
lock = threading.RLock()  # a plain threading.Lock() here would deadlock

threads = []
for i in range(1):
    t = threading.Thread(target=run3)
    t.start()
    threads.append(t)

# wait with join() instead of busy-polling threading.active_count()
for t in threads:
    t.join()

print('----all threads done---')
print(num, num2)
```
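The difference from a plain `Lock` appears when a thread acquires a lock it already holds. A `Lock` would block forever; the sketch uses `acquire(timeout=...)` so it cannot hang. An `RLock` instead counts the nested acquisitions and must be released the same number of times. The variable names are illustrative.

```python
import threading

plain = threading.Lock()
plain.acquire()
# a second acquire by the same thread can never succeed; the timeout avoids a deadlock
second_plain = plain.acquire(timeout=0.1)
plain.release()

reentrant = threading.RLock()
reentrant.acquire()
second_reentrant = reentrant.acquire(timeout=0.1)  # succeeds: same owner thread
reentrant.release()  # one release per acquire
reentrant.release()

print(second_plain, second_reentrant)  # False True
```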

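Semaphore

A mutex allows only one thread at a time to modify data, whereas a Semaphore allows a fixed number of threads at a time. Think of a restroom with 3 stalls: at most 3 people can use it at once, and everyone else has to wait until someone comes out.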

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import threading
import time

def func(n):
    semaphore.acquire()  # blocks while 5 threads already hold the semaphore
    time.sleep(1)
    print("this thread is %s\n" % n)
    semaphore.release()

semaphore = threading.BoundedSemaphore(5)  # at most 5 threads at a time

threads = []
for i in range(23):
    t = threading.Thread(target=func, args=(i,))
    t.start()
    threads.append(t)

# wait with join() instead of a busy loop on threading.active_count()
for t in threads:
    t.join()
print("all threads are done...")
```
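To check the "at most N at a time" behaviour, this sketch counts how many threads are inside the semaphore-protected section at once. `BoundedSemaphore` also raises `ValueError` if it is released more times than it was acquired, which catches unbalanced `release()` calls. The counters `inside` and `max_inside` are hypothetical helpers.

```python
import threading
import time

sem = threading.BoundedSemaphore(3)
state_lock = threading.Lock()
inside = 0      # threads currently inside the guarded section
max_inside = 0  # highest value of inside seen so far

def task():
    global inside, max_inside
    with sem:  # at most 3 threads get past this point at a time
        with state_lock:
            inside += 1
            max_inside = max(max_inside, inside)
        time.sleep(0.05)
        with state_lock:
            inside -= 1

threads = [threading.Thread(target=task) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(max_inside)  # never more than 3

try:
    sem.release()  # one release too many for a BoundedSemaphore
    over_release = "no error"
except ValueError:
    over_release = "ValueError"
print(over_release)
```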

4.5. Events

An event is a simple synchronization object. The event represents an internal flag, and threads can wait for the flag to be set, or set or clear the flag themselves.

```python
import threading

event = threading.Event()

# Fragment: in practice these calls are made from different threads
# a client thread can wait for the flag to be set
event.wait()

# a server thread can set or reset it
event.set()
event.clear()
```

If the flag is set, the wait method doesn’t do anything.

If the flag is cleared, wait will block until it becomes set again.

Any number of threads may wait for the same event.
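`wait()` also accepts a timeout and returns the state of the flag, so a thread can give up instead of blocking forever. In this minimal sketch, a worker thread waits for a signal from the main thread. The names `ready`, `log` and `waiter` are illustrative.

```python
import threading

ready = threading.Event()
log = []

def waiter():
    # blocks until the main thread calls ready.set()
    ready.wait()
    log.append("worker saw the flag")

t = threading.Thread(target=waiter)
t.start()

before = ready.wait(timeout=0.1)  # flag not set yet: returns False after 0.1 s
ready.set()                       # wakes up every thread blocked in wait()
t.join()
after = ready.wait(timeout=0.1)   # flag already set: returns True immediately

print(before, after, log)  # False True ['worker saw the flag']
```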

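An Event lets two or more threads coordinate. Below is a traffic light example. One thread acts as the traffic light, and car threads (just one in the code below) follow the rule "stop on red, go on green".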

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import threading
import time

def lighter():
    count = 0
    event.set()  # flag set = green light
    while True:
        if 5 < count < 10:  # counts 6-9: red light
            event.clear()
            print("This is RED....")
        else:
            if count >= 10:  # red phase over: turn green and restart the cycle
                event.set()
                count = 0
            print("This is GREEN...")
        time.sleep(1)
        count += 1

def car(name):
    while True:
        if event.is_set():
            print(" Green, The %s running...." % name)
            time.sleep(1)
        else:
            print("RED, the %s is waiting..." % name)
            event.wait()  # block until the light turns green
            print("green, %s start going..." % name)

event = threading.Event()

light = threading.Thread(target=lighter)
light.start()

car1 = threading.Thread(target=car, args=("Tesla",))
car1.start()
# Both threads loop forever: this demo runs until the process is stopped.
```

4.6. Queues

queue is especially useful in threaded programming when information must be exchanged safely between multiple threads.

- class `queue.Queue(maxsize=0)`: first in, first out (FIFO)
- class `queue.LifoQueue(maxsize=0)`: last in, first out (LIFO)
- class `queue.PriorityQueue(maxsize=0)`: a queue whose items are stored with a priority

  Constructor for a priority queue. maxsize is an integer that sets the upperbound limit on the number of items that can be placed in the queue. Insertion will block once this size has been reached, until queue items are consumed. If maxsize is less than or equal to zero, the queue size is infinite.

  The lowest valued entries are retrieved first (the lowest valued entry is the one returned by `sorted(list(entries))[0]`). A typical pattern for entries is a tuple in the form `(priority_number, data)`.

- exception `queue.Empty`: Exception raised when non-blocking `get()` (or `get_nowait()`) is called on a `Queue` object which is empty.
- exception `queue.Full`: Exception raised when non-blocking `put()` (or `put_nowait()`) is called on a `Queue` object which is full.

- `Queue.qsize()`: return the approximate size of the queue
- `Queue.empty()`: return True if the queue is empty
- `Queue.full()`: return True if the queue is full
- `Queue.put(item, block=True, timeout=None)`: Put item into the queue. If optional args block is true and timeout is None (the default), block if necessary until a free slot is available. If timeout is a positive number, it blocks at most timeout seconds and raises the `Full` exception if no free slot was available within that time. Otherwise (block is false), put an item on the queue if a free slot is immediately available, else raise the `Full` exception (timeout is ignored in that case).
- `Queue.put_nowait(item)`: Equivalent to `put(item, False)`.
- `Queue.get(block=True, timeout=None)`: Remove and return an item from the queue. If optional args block is true and timeout is None (the default), block if necessary until an item is available. If timeout is a positive number, it blocks at most timeout seconds and raises the `Empty` exception if no item was available within that time. Otherwise (block is false), return an item if one is immediately available, else raise the `Empty` exception (timeout is ignored in that case).
- `Queue.get_nowait()`: Equivalent to `get(False)`.

Two methods are offered to support tracking whether enqueued tasks have been fully processed by daemon consumer threads.

- `Queue.task_done()`: Indicate that a formerly enqueued task is complete. Used by queue consumer threads. For each `get()` used to fetch a task, a subsequent call to `task_done()` tells the queue that the processing on the task is complete. If a `join()` is currently blocking, it will resume when all items have been processed (meaning that a `task_done()` call was received for every item that had been `put()` into the queue). Raises a `ValueError` if called more times than there were items placed in the queue.
- `Queue.join()`: block until every item put into the queue has been retrieved and processed, that is, until `task_done()` has been called for each one.

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
# A normal queue is first in, first out
import queue

q = queue.Queue(maxsize=10)  # set the queue size; the default (0) means unlimited
q.put(1)
q.put(8)
q.put("alex")
q.put("zingp")
print(q.get())
print(q.get())
print(q.get())
print(q.get())
# 1
# 8
# alex
# zingp

# print(q.get(timeout=5))
# put() and get() both accept a timeout: when it expires they raise queue.Full /
# queue.Empty. Without a timeout they block until they can proceed.

q2 = queue.LifoQueue()  # last in, first out
q2.put(1)
q2.put(2)
q2.put("zingp")
print(q2.get())
print(q2.get())
print(q2.get())
# zingp
# 2
# 1

q3 = queue.PriorityQueue()  # items come out lowest priority number first
q3.put((-1, "chenronghua"))
q3.put((6, "hanyang"))
q3.put((10, "jesson"))
q3.put((4, "wangsen"))
print(q3.get())
print(q3.get())
print(q3.get())
print(q3.get())
# (-1, 'chenronghua')
# (4, 'wangsen')
# (6, 'hanyang')
# (10, 'jesson')
```
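The `task_done()` / `join()` pair described above lets the producing side wait until every queued item has actually been processed, not merely taken off the queue. This sketch uses a daemon consumer thread. The doubling step is only a placeholder for real work, and `results` and `consumer` are illustrative names.

```python
import queue
import threading

q = queue.Queue()
results = []

def consumer():
    while True:
        item = q.get()
        results.append(item * 2)  # placeholder for real processing
        q.task_done()             # tell the queue this item is fully handled

threading.Thread(target=consumer, daemon=True).start()

for i in range(5):
    q.put(i)

q.join()  # returns only after task_done() was called once per put()
print(sorted(results))  # [0, 2, 4, 6, 8]

try:
    q.get_nowait()  # the queue is empty now
except queue.Empty:
    print("queue is empty")
```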

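4.7. The producer-consumer model

In concurrent programming, the producer-consumer pattern solves a large class of concurrency problems. It improves overall throughput by balancing the work capacity of producer threads against that of consumer threads.

Why use the producer-consumer pattern?

In the world of threads, producers are the threads that produce data and consumers are the threads that consume it. If the producer is fast and the consumer is slow, the producer has to wait for the consumer to finish before it can produce more data. Likewise, if the consumer is faster than the producer, the consumer has to wait for the producer. The producer-consumer pattern was introduced to solve this problem.

What is the producer-consumer pattern?

The producer-consumer pattern uses a container to decouple producers from consumers. Producers and consumers do not communicate directly; they communicate through a blocking queue:

- After producing data, a producer does not wait for a consumer to process it. It simply puts the data into the blocking queue.
- A consumer does not ask a producer for data. It takes data directly from the blocking queue.

The blocking queue acts as a buffer that balances the processing capacity of producers and consumers.

Here is a basic producer-consumer example: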

```python
import threading
import time
import queue

q = queue.Queue(maxsize=10)  # bounded buffer between producer and consumers

def Producer(name):
    count = 1
    while True:
        q.put("bone %s" % count)  # blocks while the queue is full
        print("produced bone", count)
        count += 1
        time.sleep(0.1)

def Consumer(name):
    while True:
        print("[%s] took [%s] and ate it..." % (name, q.get()))  # get() blocks while empty
        time.sleep(1)

p = threading.Thread(target=Producer, args=("Alex",))
c = threading.Thread(target=Consumer, args=("ChengRonghua",))
c1 = threading.Thread(target=Consumer, args=("WangSen",))

p.start()
c.start()
c1.start()
```
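The example above runs forever. A common way to make it terminate is for the producer to put one sentinel value per consumer after the last real item, and for each consumer to exit when it receives one. This sketch uses `None` as the sentinel (an arbitrary choice), a fixed number of items, and illustrative names such as `eaten`.

```python
import queue
import threading

q = queue.Queue(maxsize=10)  # bounded buffer: put() blocks while it is full
SENTINEL = None
eaten = []
eaten_lock = threading.Lock()

def producer(count, n_consumers):
    for i in range(1, count + 1):
        q.put("bone %s" % i)
    for _ in range(n_consumers):
        q.put(SENTINEL)  # one stop signal per consumer

def consumer(name):
    while True:
        item = q.get()
        if item is SENTINEL:
            break
        with eaten_lock:
            eaten.append((name, item))

consumers = [threading.Thread(target=consumer, args=(n,)) for n in ("ChengRonghua", "WangSen")]
for c in consumers:
    c.start()
p = threading.Thread(target=producer, args=(20, len(consumers)))
p.start()

p.join()
for c in consumers:
    c.join()

print(len(eaten))  # 20
```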

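5. Multiprocessing

5.1 Syntax

Creating a process uses almost the same syntax as creating a thread. The following code starts ten processes, and each process starts one thread: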

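```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import multiprocessing
import threading
import time

def thread_id():
    """Print the ID of the current thread."""
    print(" thread..")
    print("thread_id:%s\n" % threading.get_ident())

def hello(name):
    time.sleep(2)
    print("hello %s..." % name)
    # start one thread inside this process
    t = threading.Thread(target=thread_id)
    t.start()

# Required on Windows (and with any "spawn" start method): child processes
# re-import this module, and without the guard they would try to start processes again
if __name__ == "__main__":
    for i in range(10):
        # starting a process looks almost the same as starting a thread
        p = multiprocessing.Process(target=hello, args=("process %s" % i,))
        p.start()
```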

5.2 Every process is created by a parent process

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
# Every process is created by its parent process
import multiprocessing
import os

def info(title):
    print(title)
    print("module name:", __name__)
    print("parent process:", os.getppid())
    print("process id:", os.getpid())
    print("\n")

def f(name):
    info("child process..")
    print("hello", name)

if __name__ == "__main__":
    info("\033[31;1m main process\033[0m ")  # ANSI escape codes print the title in red
    p = multiprocessing.Process(target=f, args=("jack",))
    p.start()
    p.join()  # wait for the child process to finish
```

5.3 Process Queue: communication (data passing) between processes

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
from multiprocessing import Process, Queue

def f(q):
    q.put([42, None, 'hello'])  # the object is pickled and sent to the other process

if __name__ == '__main__':
    q = Queue()
    p = Process(target=f, args=(q,))
    p.start()
    print(q.get())  # prints "[42, None, 'hello']"
    p.join()
```

5.4 Process Pipe: communication between processes through a pipe

The Pipe() function returns a pair of connection objects connected by a pipe which by default is duplex (two-way). For example:

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
# Processes can also communicate through a pipe
from multiprocessing import Process, Pipe

def f(conn):
    conn.send([42, None, "hello from child"])  # send data
    conn.send([42, None, "hello from child"])  # send again
    print("from parent:", conn.recv())  # receive data
    conn.close()

if __name__ == "__main__":
    parent_conn, child_conn = Pipe()  # create a pipe; each end is a connection object
    p = Process(target=f, args=(child_conn,))
    p.start()
    print(parent_conn.recv())  # receive data
    print(parent_conn.recv())  # each recv() returns one send()
    parent_conn.send("hello zingp......")
    p.join()
```

5.5 Process Manager: real data sharing between processes (not just data passing)

A manager object returned by Manager() controls a server process which holds Python objects and allows other processes to manipulate them using proxies.

A manager returned by Manager() will support types list, dict, Namespace, Lock, RLock, Semaphore, BoundedSemaphore, Condition, Event, Barrier, Queue, Value and Array. For example,

```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
# Data sharing between processes
from multiprocessing import Process, Manager
import os

def f(d, l):
    d["name"] = "alex"
    d["sex"] = "Man"
    d["age"] = 33
    l.append(os.getpid())
    print(l)

if __name__ == "__main__":
    with Manager() as manager:
        d = manager.dict()          # a dict proxy that can be shared and passed between processes
        l = manager.list(range(5))  # a list proxy that can be shared and passed between processes
        res = []
        for i in range(10):
            p = Process(target=f, args=(d, l))
            p.start()
            res.append(p)
        for j in res:  # wait for all processes to finish
            j.join()
        print(d)
        print(l)
```

5.6 Process synchronization

Without using the lock, output from the different processes is liable to get all mixed up.

```python
from multiprocessing import Process, Lock

def f(l, i):
    l.acquire()
    try:
        print('hello world', i)
    finally:
        l.release()  # always release, even if print() raises

if __name__ == '__main__':
    lock = Lock()
    for num in range(10):
        Process(target=f, args=(lock, num)).start()
```

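5.7 Process pool (Pool)

A process pool maintains a set of worker processes. When a task is submitted, a process is taken from the pool. If no process in the pool is free, the task waits until one becomes available.

Two of the pool's methods for submitting tasks are:

- apply: synchronous; blocks until the task's result is ready, so tasks run one after another
- apply_async: asynchronous; returns immediately, so tasks run in parallel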

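```python
#! /usr/bin/env python3
# -*- coding:utf-8 -*-
from multiprocessing import Pool
import os
import time

def Foo(i):
    time.sleep(2)
    print("in process", os.getpid())
    return i + 100

def Bar(arg):
    print("-->exec done:", arg, os.getpid())

if __name__ == "__main__":
    pool = Pool(processes=3)  # at most 3 worker processes run at the same time
    print("main process:", os.getpid())
    for i in range(10):
        # callback: Bar receives Foo's return value and runs in the main process.
        # In production, writing all results to a database can therefore be done
        # once in the callback instead of in every worker process.
        pool.apply_async(func=Foo, args=(i,), callback=Bar)
        # pool.apply(func=Foo, args=(i,))        # serial
        # pool.apply_async(func=Foo, args=(i,))  # parallel
    print("end")
    pool.close()
    # close() must come before join(); calling join() on a running pool raises ValueError.
    # Without join(), the main process exits without waiting for the tasks.
    pool.join()
```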
