Installation notes for containers, middleware, and related tools on Ubuntu 20.04

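1. Install Docker

Tutorial - https://www.runoob.com/docker/ubuntu-docker-install.html

For enterprise use, install manually, or review the install script to make sure it is safe before running it.

For personal use, the official install script is enough.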

curl -fsSL https://get.docker.com -o get-docker.sh

sh get-docker.sh --mirror Aliyun

- Offline installation of Docker

- Offline installation of docker-compose

2. Install MySQL

1) Standalone, using Docker

sudo docker container run -it -d --name mysql-master -p 3306:3306 -v mysql-data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=1qaz2wsx mysql:8.0.29

2) Master-slave replication

1. MySQL configuration file (master)

[mysqld]
log-bin=binlog
server-id=1

- Slave: set server-id; it must differ from the master's

[mysqld]
server-id=2

- Master: create the replication account and grant it REPLICATION SLAVE

grant replication slave on *.* to 'repl'@'%' identified by '1qaz2wsx'; ### before MySQL 8.0
create user 'repl'@'%' identified by '1qaz2wsx'; ### MySQL 8.0 and later
grant replication slave on *.* to 'repl'@'%';
flush privileges;

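If the master already holds data that needs to be synchronized, dump it first. Keep the session that holds the read lock open and run mysqldump from a second terminal, since the lock is released when that session ends.

flush tables with read lock; ### lock the master instance; writes are blocked
mysqldump --all-databases --master-data > dump.db -u root -p ### back up existing data (versions before MySQL 8.0.26)
mysqldump --all-databases --source-data > dump.db -u root -p ### back up existing data (MySQL 8.0.26 and later)
show master status; ### record the binlog position (recorded_log_file_name, recorded_log_position)
unlock tables; ### unlock the instance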

- Slave: import the dump

mysql < dump.db -u root -p

- Slave: configure the connection to the master

CHANGE MASTER TO
  MASTER_HOST='192.168.1.31',
  MASTER_USER='repl',
  MASTER_PASSWORD='1qaz2wsx',
  MASTER_PORT=3306,
  MASTER_LOG_FILE='binlog.000003',
  MASTER_LOG_POS=28924,
  MASTER_CONNECT_RETRY=10,
  GET_MASTER_PUBLIC_KEY=1;

- Slave: start replication and check its status

start slave;

SHOW SLAVE STATUS;
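In the `SHOW SLAVE STATUS` output, the two fields to look at first are `Slave_IO_Running` and `Slave_SQL_Running`; replication is working only when both read `Yes`. Below is a minimal sketch of a check, assuming the status is printed in vertical (`\G`) format and piped in on stdin. The function name `replica_healthy` is made up for illustration.

```shell
# Reads `SHOW SLAVE STATUS\G` output on stdin and succeeds only if both
# replication threads report "Yes".
replica_healthy() {
  awk -F': ' '
    /^[[:space:]]*Slave_IO_Running:/  { io  = $2 }
    /^[[:space:]]*Slave_SQL_Running:/ { sql = $2 }
    END { exit !(io == "Yes" && sql == "Yes") }
  '
}

# Usage on the slave (hypothetical invocation):
#   mysql -u root -p -e 'SHOW SLAVE STATUS\G' | replica_healthy && echo "replication OK"
```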

If an error occurs along the way and replication has to be set up again:

stop slave;

reset slave;

3. Install Redis

1)Standalone

sudo docker run -d --name redis-master -p 6379:6379 -v /home/chris/config/redis:/usr/local/etc/redis -v redis-data:/data redis:6.2.7-alpine redis-server /usr/local/etc/redis/redis.conf

Configuration items you may need to change:

#bind 127.0.0.1 -::1
protected-mode yes
port 6379
daemonize no
logfile "/data/redis-6379.log"
dbfilename dump-6379.rdb
appendonly yes
appendfilename "appendonly-6379.aof"

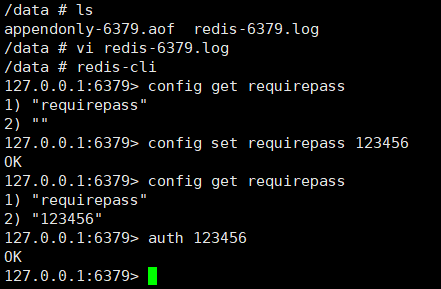

After startup, enter the Redis container and set requirepass
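One way to do this without an interactive shell is to run `redis-cli` through `docker exec`. Below is a minimal sketch. The function name `redis_set_password` and the `DOCKER` override are hypothetical helpers, not part of Docker or Redis. A password set with `CONFIG SET` lasts only until the next restart unless `requirepass` is also written to the mounted redis.conf.

```shell
# Sets requirepass on a running Redis container via redis-cli.
# DOCKER is a hypothetical override: DOCKER=echo prints the command
# instead of running it (dry run); the default is "sudo docker".
redis_set_password() {
  container=$1
  password=$2
  ${DOCKER:-sudo docker} exec "$container" redis-cli CONFIG SET requirepass "$password"
}

# Usage (the password is a placeholder):
#   redis_set_password redis-master 'change-me'
```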

4. Install RabbitMQ

1) Standalone, installed with Docker

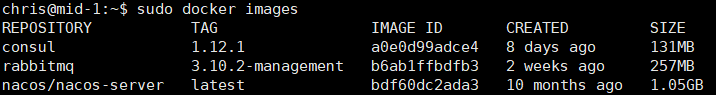

sudo docker run -d --name rabbitmq-master \
  --hostname rabbitmq-master \
  -p 5672:5672 -p 15672:15672 \
  -v rabbitmq-data:/var/lib/rabbitmq \
  -e RABBITMQ_DEFAULT_USER=admin \
  -e RABBITMQ_DEFAULT_PASS=1qaz2wsx \
  -e RABBITMQ_DEFAULT_VHOST=master_vhost \
  rabbitmq:3.10.2-management

Verify by opening the management UI on port 15672 in a browser

5. Install Consul

1) Development mode, installed with Docker

consul server

sudo docker run \
  -d \
  --name=consul-server-1 \
  -p 8500:8500 \
  -p 8600:8600/udp \
  consul:1.12.1 agent -server -ui -node=server-1 -bootstrap-expect=1 -client=0.0.0.0

sudo docker exec consul-server-1 consul members ### get the consul server IP

consul client

sudo docker run \
  -d \
  --name=consul-client-1 consul:1.12.1 agent -node=client-1 -join=172.17.0.3

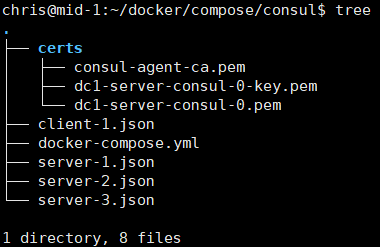

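2) Installation with docker compose

For secure communication, create a CA and certificates (the consul CLI can be used)

### generate the encryption key for inter-node communication
sudo docker exec consul-server-1 consul keygen
### create the CA
sudo docker exec consul-server-1 consul tls ca create
### create the certificate
sudo docker exec consul-server-1 consul tls cert create -server -dc dc1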
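The key printed by `consul keygen` is 32 random bytes encoded as base64, and it goes into the `encrypt` field of every agent's configuration. A copy-paste slip there is easy to miss, so here is a small sanity check to run before distributing the configs. It assumes GNU coreutils `base64` (as on Ubuntu). The name `gossip_key_ok` is made up for this sketch.

```shell
# Succeeds if the given string base64-decodes to exactly 32 bytes.
gossip_key_ok() {
  n=$(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c)
  [ "$n" -eq 32 ]
}

# Usage (KEY holds the value destined for the "encrypt" field):
#   gossip_key_ok "$KEY" && echo "key length OK"
```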

Configuration file server-*.json

{
  "node_name": "consul-server-1",
  "server": true,
  "ui_config": {
    "enabled": true
  },
  "data_dir": "/consul/data",
  "addresses": {
    "http": "0.0.0.0"
  },
  "retry_join": ["consul-server-2", "consul-server-3"],
  "encrypt": "VLNUtEjVF1+Pl+DN4OhRCpAm90m1xR1cePHSGvBjqBc=",
  "verify_incoming": true,
  "verify_outgoing": true,
  "verify_server_hostname": true,
  "ca_file": "/consul/config/certs/consul-agent-ca.pem",
  "cert_file": "/consul/config/certs/dc1-server-consul-0.pem",
  "key_file": "/consul/config/certs/dc1-server-consul-0-key.pem"
}

Configuration file client-*.json

{
  "node_name": "consul-client-1",
  "data_dir": "/consul/data",
  "retry_join": ["consul-server-1", "consul-server-2", "consul-server-3"],
  "encrypt": "VLNUtEjVF1+Pl+DN4OhRCpAm90m1xR1cePHSGvBjqBc=",
  "verify_incoming": true,
  "verify_outgoing": true,
  "verify_server_hostname": true,
  "ca_file": "/consul/config/certs/consul-agent-ca.pem",
  "cert_file": "/consul/config/certs/dc1-server-consul-0.pem",
  "key_file": "/consul/config/certs/dc1-server-consul-0-key.pem"
}

docker-compose.yml

version: "3.7"
services:
  consul-server-1:
    image: consul:1.12.1
    container_name: consul-server-1
    restart: always
    ports:
      - "8500:8500"
      - "8600:8600/tcp"
      - "8600:8600/udp"
    volumes:
      - ./server-1.json:/consul/config/server-1.json:ro
      - ./certs/:/consul/config/certs/:ro
    networks:
      - consul
    command: "agent -bootstrap-expect=3"
  consul-server-2:
    image: consul:1.12.1
    container_name: consul-server-2
    restart: always
    volumes:
      - ./server-2.json:/consul/config/server-2.json:ro
      - ./certs/:/consul/config/certs/:ro
    networks:
      - consul
    command: "agent -bootstrap-expect=3"
  consul-server-3:
    image: consul:1.12.1
    container_name: consul-server-3
    restart: always
    volumes:
      - ./server-3.json:/consul/config/server-3.json:ro
      - ./certs/:/consul/config/certs/:ro
    networks:
      - consul
    command: "agent -bootstrap-expect=3"
  consul-client-1:
    image: consul:1.12.1
    container_name: consul-client-1
    restart: always
    volumes:
      - ./client-1.json:/consul/config/client-1.json:ro
      - ./certs/:/consul/config/certs/:ro
    networks:
      - consul
    command: "agent"
networks:
  consul:
    driver: bridge

Start

sudo docker compose -f docker-compose.yml up

6. Install Nacos

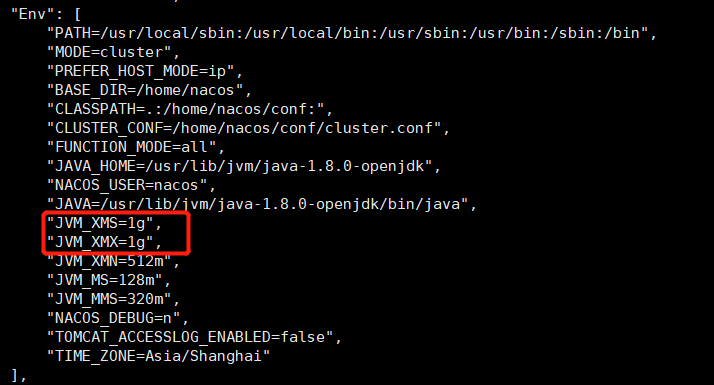

***Consider using Consul instead of Nacos.

sudo docker run -d -e MODE=standalone -p 8848:8848 --name nacos-server nacos/nacos-server:latest

7. Install EFK (Elasticsearch, Filebeat, Kibana)

1)Standalone

Install with docker compose; the host needs more than 4 GB of memory

docker-compose.yml

version: '2.2'
services:
  es01:
    image: elasticsearch:7.17.4
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data01:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - elastic
  es02:
    image: elasticsearch:7.17.4
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data02:/usr/share/elasticsearch/data
    networks:
      - elastic
  es03:
    image: elasticsearch:7.17.4
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=es-docker-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data03:/usr/share/elasticsearch/data
    networks:
      - elastic
  kibana:
    image: kibana:7.17.4
    container_name: kibana
    environment:
      ELASTICSEARCH_URL: http://es01:9200
    ports:
      - "5601:5601"
    networks:
      - elastic
    volumes:
      - ./kibana.yml:/usr/share/kibana/config/kibana.yml
  filebeat:
    image: docker.elastic.co/beats/filebeat:7.17.4
    container_name: filebeat
    user: root
    volumes:
      - ./filebeat.yml:/usr/share/filebeat/filebeat.yml
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/containers:/var/lib/docker/containers:ro
    networks:
      - elastic
volumes:
  data01:
    driver: local
  data02:
    driver: local
  data03:
    driver: local
networks:
  elastic:
    driver: bridge

filebeat.yml

Collecting Docker container logs

filebeat.inputs:
  - type: container
    paths:
      - /var/lib/docker/containers/*/*.log
    json.message_key: log
    json.keys_under_root: true
processors:
  - add_docker_metadata: ~
output.elasticsearch:
  hosts: ["es01:9200"]

- Example: collecting application logs

filebeat.inputs:
  - type: filestream
    enabled: true
    id: tp-vehicle
    paths:
      - /var/logs/vehicle-archive/catalina.out
    fields:
      source: vehicle
    parsers:
      - multiline:
          type: pattern
          pattern: '^\[\d{2}:\d{2}:\d{2}:\d{3}] '
          negate: true
          match: after
  - type: filestream
    enabled: true
    id: tp-driver
    paths:
      - /var/logs/driver-archive/catalina.out
    fields:
      source: driver
    parsers:
      - multiline:
          type: pattern
          pattern: '^\[\d{2}:\d{2}:\d{2}:\d{3}] '
          negate: true
          match: after
  - type: filestream
    enabled: true
    id: tp-transpcorp
    paths:
      - /var/logs/transpcorp-archive/catalina.out
    fields:
      source: transpcorp
    parsers:
      - multiline:
          type: pattern
          pattern: '^\[\d{2}:\d{2}:\d{2}:\d{3}] '
          negate: true
          match: after
  - type: filestream
    enabled: true
    id: tp-event
    paths:
      - /var/logs/event-archive/catalina.out
    fields:
      source: event
    parsers:
      - multiline:
          type: pattern
          pattern: '^\[\d{2}:\d{2}:\d{2}:\d{3}] '
          negate: true
          match: after
setup.ilm.enabled: false
setup.template.name: "themeportrait-log"
setup.template.pattern: "themeportrait-*"
setup.template.overwrite: true
setup.template.enabled: true
output.elasticsearch:
  hosts: ["es01:9200"]
  index: "themeportrait-%{[fields.source]}"
  indices:
    - index: 'themeportrait-vehicle'
      when.equals:
        fields.source: 'vehicle'
    - index: 'themeportrait-driver'
      when.equals:
        fields.source: 'driver'
    - index: 'themeportrait-transpcorp'
      when.equals:
        fields.source: 'transpcorp'
    - index: 'themeportrait-event'
      when.equals:
        fields.source: 'event'
#processors:
#  - add_host_metadata: ~
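With `negate: true` and `match: after`, every line that does not match the multiline pattern is appended to the preceding line that does, so each line beginning with a `[12:34:56:789] `-style prefix starts a new event. A quick way to try the pattern against a real log before deploying is to count those starting lines with `grep`. This is only a sketch: `count_event_starts` is a hypothetical helper, and `\d` is written as `[0-9]` because `grep -E` does not support `\d`.

```shell
# Counts the lines that would start a new event under the multiline
# pattern used in the application-log example. Reads the named files,
# or stdin when no file is given.
count_event_starts() {
  grep -cE '^\[[0-9]{2}:[0-9]{2}:[0-9]{2}:[0-9]{3}] ' "$@"
}

# Usage:
#   count_event_starts /var/logs/vehicle-archive/catalina.out
```

If the count is zero on a log that clearly contains entries, the pattern does not fit the log's line prefix and Filebeat would merge unrelated lines into a single event.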

kibana.yml

server.port: 5601
server.host: "0"
elasticsearch.hosts: ["http://es01:9200"]
i18n.locale: "zh-CN"

Place the files above in the same folder and run

docker-compose up -d ### with the newer Compose plugin: docker compose up -d

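Work when clear-headed, read when muddled, sleep when furious, think when alone; be a happy person: read, travel, work hard, look after your body and your mood, and become the best version of yourself

-- Let us encourage one another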