Deploying Prometheus and Visualizing with Grafana

Author's environment: deployed with Docker

Download link for the images and the JSON resource bundle

Link: https://pan.baidu.com/s/1jOIxJF9C_S_7XTeaurWXHw?pwd=eaq4

Extraction code: eaq4

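Deploying the node-exporter component (collects system metrics from virtual machines, cloud hosts, and physical hosts)

1. Install node-exporter

1-1: Create the namespace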

[root@master ~]# kubectl create ns monitor-sa

namespace/monitor-sa created

1-2: Upload the image archive to every node and load it (after copying, run the same docker load on node1 and node2)

[root@master ~]# docker load -i node-exporter.tar.gz

ad68498f8d86: Loading layer [==================================================>] 4.628MB/4.628MB

ad8512dce2a7: Loading layer [==================================================>] 2.781MB/2.781MB

cc1adb06ef21: Loading layer [==================================================>] 16.9MB/16.9MB

Loaded image: prom/node-exporter:v0.16.0

[root@master ~]# scp node-exporter.tar.gz node1:/root/

node-exporter.tar.gz 100% 23MB 87.6MB/s 00:00

[root@master ~]# scp node-exporter.tar.gz node2:/root/

node-exporter.tar.gz 100% 23MB 110.7MB/s 00:00
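
The copy-then-load step has to be repeated for every image archive in this guide, so it can be wrapped in a small helper. This is only a sketch: the function name load_image_on_nodes and the NODES variable are illustrative, and it assumes passwordless SSH as root to node1 and node2.

```shell
# Hosts that should receive the image (assumption: reachable over SSH as root).
NODES="node1 node2"

# Copy an image archive to each node and load it into Docker there.
load_image_on_nodes() {
  archive="$1"
  for node in $NODES; do
    scp "$archive" "${node}:/root/" || return 1
    ssh "$node" "docker load -i /root/$(basename "$archive")" || return 1
  done
}

# Usage: load_image_on_nodes node-exporter.tar.gz
```

The same call works later for prometheus-2-2-1.tar.gz, heapster-grafana-amd64_v5_0_4.tar.gz, and kube-state-metrics_1_9_0.tar.gz.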

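1-3: Create a DaemonSet manifest named node-export.yaml. A DaemonSet runs one identical pod on every node, control-plane and worker alike, provided the pod tolerates each node's taints.

Check the taints: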

[root@master prometheus]# kubectl describe nodes master | grep -i Taints:

Taints: node-role.kubernetes.io/master:NoSchedule

[root@master prometheus]# kubectl describe nodes node1 | grep -i Taints:

Taints: <none>

[root@master prometheus]# kubectl describe nodes node2 | grep -i Taints:

Taints: <none>
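
Instead of describing each node in turn, the taint keys of all nodes can be listed in one table. A minimal sketch using kubectl's custom-columns output; the function name list_node_taints is illustrative.

```shell
# Print one row per node with the keys of its taints (<none> if it has no taints).
list_node_taints() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,TAINTS:.spec.taints[*].key'
}

# Usage: list_node_taints
```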

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter                 # name
  namespace: monitor-sa               # namespace
  labels:
    name: node-exporter               # label
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter           # label applied to the created pods
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true               # the pod uses the IP of the node it is scheduled on
      containers:
      - name: node-exporter
        image: prom/node-exporter:v0.16.0
        imagePullPolicy: IfNotPresent # image pull policy
        ports:
        - containerPort: 9100         # port
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true            # run the container in privileged mode
        args:                         # startup arguments
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        # args are not passed through a shell, so the regex must not carry inner double quotes
        - '^/(sys|proc|dev|host|etc)($|/)'
        volumeMounts:                 # host directories mounted into the container
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      tolerations:                    # taint tolerations
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"          # run `kubectl describe nodes master | grep -i Taints:` to see the taint to tolerate
      volumes:                        # directories on the host
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

1-4: Apply the manifest and verify

[root@master prometheus]# kubectl apply -f node-export.yaml

daemonset.apps/node-exporter created

[root@master prometheus]# kubectl get pods -n monitor-sa -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

node-exporter-l6vdw 1/1 Running 0 72s 192.168.56.141 master <none> <none>

node-exporter-xl7vb 1/1 Running 0 72s 192.168.56.151 node1 <none> <none>

node-exporter-xqx8w 1/1 Running 0 72s 192.168.56.152 node2 <none> <none>

[root@master prometheus]# ss -antulp | grep :9100

tcp LISTEN 0 128 [::]:9100 [::]:* users:(("node_exporter",pid=12836,fd=3))

1-5: Open a node's metrics endpoint to view its resource information

http://<your-node-IP>:9100/metrics
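
The endpoint returns several hundred lines, so it helps to filter for one metric family. The helper below is a sketch (the name metric_samples is illustrative): it keeps only the sample lines of the given metric and drops the # HELP and # TYPE comment lines.

```shell
# Read node-exporter text output on stdin and print the samples of one metric.
# $1: exact metric name, for example node_load1
metric_samples() {
  grep -E "^$1(\{| )"
}

# Usage: curl -s "http://<your-node-IP>:9100/metrics" | metric_samples node_load1
```

Matching on the character after the name (an opening brace or a space) keeps node_load1 from also matching node_load15.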

2. Deploy Prometheus

2-1: Create a ServiceAccount and grant it RBAC permissions

Create a ServiceAccount named monitor:

[root@master prometheus]# kubectl create serviceaccount monitor -n monitor-sa

serviceaccount/monitor created

Bind the ServiceAccount to a ClusterRole with a ClusterRoleBinding:

[root@master prometheus]# kubectl create clusterrolebinding monitor-clusterrolebinding -n monitor-sa --clusterrole=cluster-admin --serviceaccount=monitor-sa:monitor

clusterrolebinding.rbac.authorization.k8s.io/monitor-clusterrolebinding created

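Grant cluster-admin to the same account by user name as well (a ServiceAccount's user name has the form system:serviceaccount:<namespace>:<name>):

[root@master prometheus]# kubectl create clusterrolebinding monitor-clusterrolebinding-1 -n monitor-sa --clusterrole=cluster-admin --user=system:serviceaccount:monitor-sa:monitor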

clusterrolebinding.rbac.authorization.k8s.io/monitor-clusterrolebinding-1 created
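
To confirm that the bindings took effect, kubectl can impersonate the ServiceAccount and ask whether an action is allowed. A minimal sketch; the function name sa_can is illustrative, and the user name follows the system:serviceaccount:<namespace>:<name> form for the monitor account in monitor-sa.

```shell
# Ask the API server whether the monitor ServiceAccount may perform an action.
# $1: verb (for example list), $2: resource (for example nodes)
sa_can() {
  kubectl auth can-i "$1" "$2" \
    --as="system:serviceaccount:monitor-sa:monitor"
}

# Usage: sa_can list nodes    # prints yes or no
```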

2-2: Create the data directory on the worker nodes (Prometheus reads and writes its data here)

[root@node1 ~]# mkdir /data

[root@node1 ~]# chmod 777 /data

[root@node2 ~]# mkdir /data

[root@node2 ~]# chmod 777 /data

3. Deploy the Prometheus server

3-1: Create a ConfigMap volume to hold the Prometheus configuration

Create the resource:

[root@master prometheus]# kubectl apply -f prometheus-cfg.yaml

configmap/prometheus-config created

Verify:

[root@master prometheus]# kubectl get cm -n monitor-sa

NAME DATA AGE

kube-root-ca.crt 1 111m

prometheus-config 1 56s

---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    app: prometheus                   # label
  name: prometheus-config             # name
  namespace: monitor-sa               # namespace
data:
  prometheus.yml: |                   # file content follows; the options are documented on the official Prometheus site
    global:
      scrape_interval: 15s            # interval between scrapes of each target
      scrape_timeout: 10s             # timeout for a single scrape
      evaluation_interval: 1m         # how often alerting rules are evaluated
    scrape_configs:                   # scrape targets
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:          # Kubernetes service discovery (supports several roles)
      - role: node                    # discovery role
      relabel_configs:                # relabeling with regular expressions
      - source_labels: [__address__]
        regex: '(.*):10250'           # rewrite the kubelet port to the node-exporter port below
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-node-cadvisor'   # also uses relabeling, as above
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443   # API server address
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-apiserver'       # keep only the API server endpoint
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'   # scrape Services annotated for monitoring
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
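
The two address rewrites in this configuration are easier to follow with concrete values. The sketch below imitates them with sed; it is not how Prometheus evaluates relabeling internally, the function names are illustrative, and the anchors ^ and $ stand in for the full-string matching that Prometheus applies to relabel regexes. For the second rule, Prometheus joins the source labels with a semicolon before matching.

```shell
# kubernetes-node job: kubelet address (port 10250) becomes node-exporter address (port 9100).
rewrite_node_address() {
  sed -E 's/^(.*):10250$/\1:9100/'
}

# kubernetes-service-endpoints job: "<address>;<prometheus.io/port annotation>"
# becomes "<host>:<annotated port>".
rewrite_service_address() {
  sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
}

echo "192.168.56.151:10250" | rewrite_node_address          # prints 192.168.56.151:9100
echo "10.244.166.131:8080;9090" | rewrite_service_address   # prints 10.244.166.131:9090
```

An address that does not match the pattern passes through unchanged, which mirrors the replace action leaving the label as it was.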

4. Create the Prometheus server and its web UI with a Deployment

4-1: Upload the image archive to the worker nodes and load it

[root@node1 ~]# docker load -i prometheus-2-2-1.tar.gz

6a749002dd6a: Loading layer [==================================================>] 1.338MB/1.338MB

5f70bf18a086: Loading layer [==================================================>] 1.024kB/1.024kB

1692ded805c8: Loading layer [==================================================>] 2.629MB/2.629MB

035489d93827: Loading layer [==================================================>] 66.18MB/66.18MB

8b6ef3a2ab2c: Loading layer [==================================================>] 44.5MB/44.5MB

ff98586f6325: Loading layer [==================================================>] 3.584kB/3.584kB

017a13aba9f4: Loading layer [==================================================>] 12.8kB/12.8kB

4d04d79bb1a5: Loading layer [==================================================>] 27.65kB/27.65kB

75f6c078fa6b: Loading layer [==================================================>] 10.75kB/10.75kB

5e8313e8e2ba: Loading layer [==================================================>] 6.144kB/6.144kB

Loaded image: prom/prometheus:v2.2.1

[root@node2 ~]# docker load -i prometheus-2-2-1.tar.gz

6a749002dd6a: Loading layer [==================================================>] 1.338MB/1.338MB

5f70bf18a086: Loading layer [==================================================>] 1.024kB/1.024kB

1692ded805c8: Loading layer [==================================================>] 2.629MB/2.629MB

035489d93827: Loading layer [==================================================>] 66.18MB/66.18MB

8b6ef3a2ab2c: Loading layer [==================================================>] 44.5MB/44.5MB

ff98586f6325: Loading layer [==================================================>] 3.584kB/3.584kB

017a13aba9f4: Loading layer [==================================================>] 12.8kB/12.8kB

4d04d79bb1a5: Loading layer [==================================================>] 27.65kB/27.65kB

75f6c078fa6b: Loading layer [==================================================>] 10.75kB/10.75kB

5e8313e8e2ba: Loading layer [==================================================>] 6.144kB/6.144kB

Loaded image: prom/prometheus:v2.2.1

4-2: Create a file named prometheus-deploy.yaml, apply it, and verify

[root@master prometheus]# kubectl apply -f prometheus-deploy.yaml

deployment.apps/prometheus-server created

[root@master prometheus]# kubectl get pods -n monitor-sa

NAME READY STATUS RESTARTS AGE

node-exporter-l6vdw 1/1 Running 0 92m

node-exporter-xl7vb 1/1 Running 0 92m

node-exporter-xqx8w 1/1 Running 0 92m

prometheus-server-5cdf9fd9cd-fxfr2 1/1 Running 0 7s

[root@master prometheus]# kubectl exec -it prometheus-server-5cdf9fd9cd-fxfr2 -n monitor-sa -- /bin/sh

/prometheus $ ls

lock wal

/prometheus $ cd /etc

/etc $ ls

group hosts mtab prometheus services ssl

hostname localtime passwd resolv.conf shadow

/etc $ exit

---
apiVersion: apps/v1
kind: Deployment                      # controller type
metadata:
  name: prometheus-server             # Deployment name (pod names are derived from it)
  namespace: monitor-sa               # namespace
  labels:
    app: prometheus                   # label
spec:
  replicas: 1                         # number of pod replicas
  selector:
    matchLabels:
      app: prometheus
      component: server
    #matchExpressions:
    #- {key: app, operator: In, values: [prometheus]}
    #- {key: component, operator: In, values: [server]}
  template:
    metadata:
      labels:
        app: prometheus               # every pod created from this template carries these labels
        component: server
      annotations:
        prometheus.io/scrape: 'false'
    spec:
      nodeName: node1                 # hostname of the worker node to run on
      serviceAccountName: monitor     # ServiceAccount created in step 2-1
      containers:
      - name: prometheus
        image: prom/prometheus:v2.2.1 # image
        imagePullPolicy: IfNotPresent # image pull policy
        command:
        - prometheus
        - --config.file=/etc/prometheus/prometheus.yml   # provided by the ConfigMap in prometheus-cfg.yaml
        - --storage.tsdb.path=/prometheus                # data directory
        - --storage.tsdb.retention=720h                  # data older than this is deleted
        - --web.enable-lifecycle                         # enables hot reload without recreating the pod
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:                 # where the volumes below are mounted
        - mountPath: /etc/prometheus
          name: prometheus-config
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:                        # volumes
      - name: prometheus-config
        configMap:
          name: prometheus-config
      - name: prometheus-storage-volume
        hostPath:
          path: /data
          type: Directory

4-3: Create a file named prometheus-svc.yaml and apply it

[root@master prometheus]# kubectl apply -f prometheus-svc.yaml

service/prometheus created

[root@master prometheus]# kubectl get svc -n monitor-sa

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus NodePort 10.99.102.58 <none> 9090:32550/TCP 50s

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitor-sa
  labels:
    app: prometheus
spec:
  type: NodePort                      # expose the service on a port of every node
  ports:
  - port: 9090
    targetPort: 9090
    protocol: TCP
  selector:
    app: prometheus
    component: server

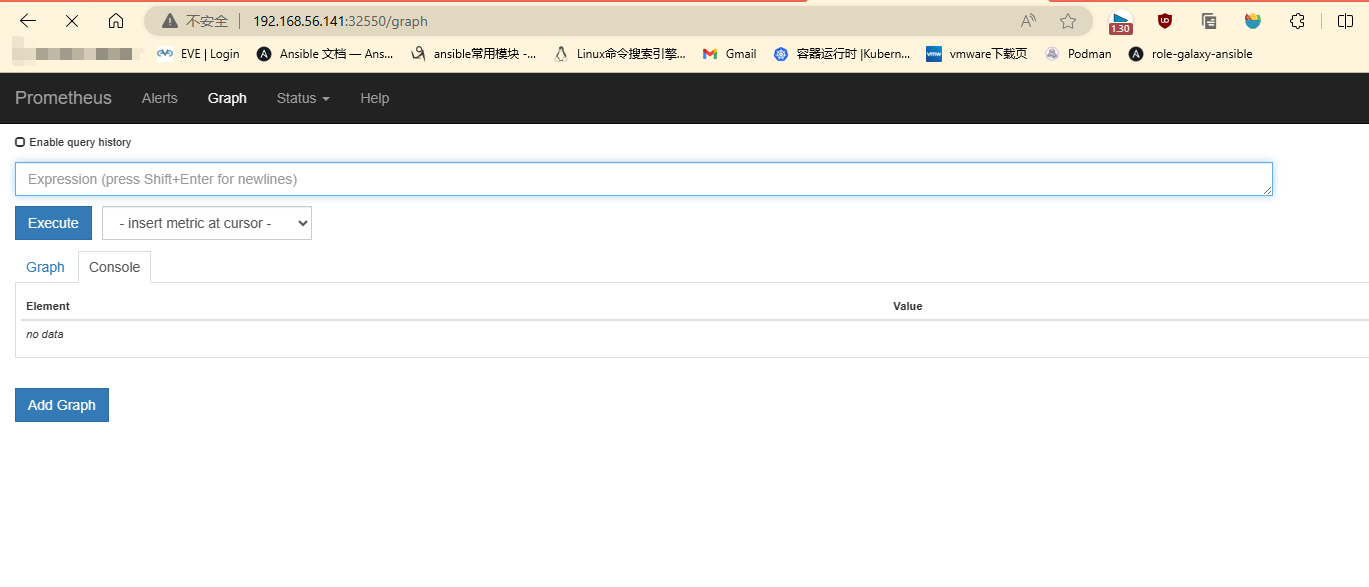

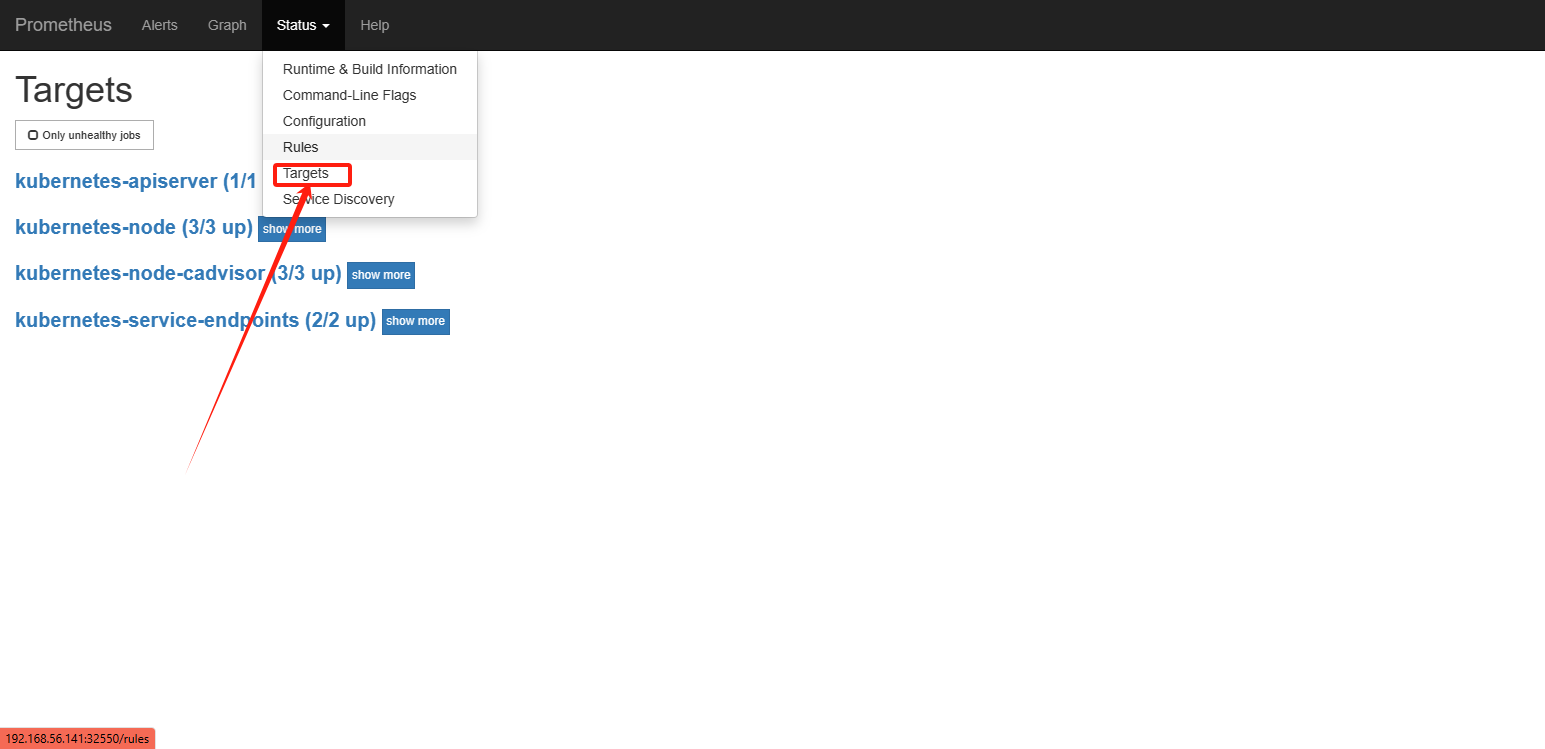

4-4: Open the UI at a node's IP address plus the mapped NodePort

View the collected data.
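
The NodePort changes whenever the Service is recreated, so it can be convenient to look it up and query Prometheus from the command line. This is a sketch under the assumptions of this guide (Service prometheus in namespace monitor-sa); the function names are illustrative, and the query uses Prometheus's HTTP API endpoint /api/v1/query.

```shell
# Print the NodePort currently assigned to the prometheus Service.
prometheus_node_port() {
  kubectl get svc prometheus -n monitor-sa \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Run an instant PromQL query and print the JSON response.
# $1: base URL such as http://192.168.56.141:32550, $2: PromQL expression
prometheus_query() {
  curl -fsS -G "$1/api/v1/query" --data-urlencode "query=$2"
}

# Usage:
#   port=$(prometheus_node_port)
#   prometheus_query "http://192.168.56.141:${port}" up
```

The up metric is 1 for every target that was scraped successfully, which makes it a quick check that the jobs from the ConfigMap are being collected.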

4-5: Update prometheus-svc.yaml so that Prometheus's own Service shows up as a scrape target

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitor-sa
  labels:
    app: prometheus
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "9090"
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    protocol: TCP
  selector:
    app: prometheus
    component: server

Delete and recreate the resources:

[root@master prometheus]# kubectl delete -f prometheus-svc.yaml

service "prometheus" deleted

[root@master prometheus]# kubectl delete -f prometheus-deploy.yaml

deployment.apps "prometheus-server" deleted

[root@master prometheus]# kubectl apply -f prometheus-svc.yaml

service/prometheus created

[root@master prometheus]# kubectl apply -f prometheus-deploy.yaml

deployment.apps/prometheus-server created

[root@master prometheus]# kubectl get svc -n monitor-sa

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus NodePort 10.99.5.72 <none> 9090:30844/TCP 28s

5. Hot-reloading Prometheus

[root@master prometheus]# kubectl get pods -n monitor-sa -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

node-exporter-l6vdw 1/1 Running 1 (134m ago) 6h13m 192.168.56.141 master

node-exporter-xl7vb 1/1 Running 1 (134m ago) 6h13m 192.168.56.151 node1

node-exporter-xqx8w 1/1 Running 1 (134m ago) 6h13m 192.168.56.152 node2

prometheus-server-5cdf9fd9cd-jdbzl 1/1 Running 0 18m 10.244.166.131 node1

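[root@master prometheus]# curl -X POST http://10.244.166.131:9090/-/reload

The reload does not interrupt the running service. The endpoint is available because the Deployment starts Prometheus with --web.enable-lifecycle.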
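
A typical configuration change then consists of applying the edited ConfigMap, waiting for the mounted file to be refreshed, and calling the reload endpoint. The sketch below strings these together. The function names and the RELOAD_DELAY variable are illustrative, and the 60-second default is an assumption: the kubelet updates ConfigMap volumes with some delay, so adjust it to your cluster.

```shell
# Ask a running Prometheus to re-read its configuration.
# $1: pod IP, $2: port (defaults to 9090)
prometheus_reload() {
  curl -fsS -X POST "http://$1:${2:-9090}/-/reload"
}

# Apply the ConfigMap, wait for the volume to be refreshed, then reload.
apply_and_reload() {
  kubectl apply -f prometheus-cfg.yaml || return 1
  sleep "${RELOAD_DELAY:-60}"
  pod_ip=$(kubectl get pods -n monitor-sa -l app=prometheus,component=server \
    -o jsonpath='{.items[0].status.podIP}')
  prometheus_reload "$pod_ip"
}

# Usage: apply_and_reload
```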

6. Visualizing Prometheus data with Grafana

6-1: Install Grafana

Upload the image archive to the worker nodes and load it:

[root@node1 ~]# docker load -i heapster-grafana-amd64_v5_0_4.tar.gz

6816d98be637: Loading layer [==================================================>] 4.642MB/4.642MB

523feee8e0d3: Loading layer [==================================================>] 161.5MB/161.5MB

43d2638621da: Loading layer [==================================================>] 230.4kB/230.4kB

f24c0fa82e54: Loading layer [==================================================>] 2.56kB/2.56kB

334547094992: Loading layer [==================================================>] 5.826MB/5.826MB

Loaded image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4

[root@node2 ~]# docker load -i heapster-grafana-amd64_v5_0_4.tar.gz

6816d98be637: Loading layer [==================================================>] 4.642MB/4.642MB

523feee8e0d3: Loading layer [==================================================>] 161.5MB/161.5MB

43d2638621da: Loading layer [==================================================>] 230.4kB/230.4kB

f24c0fa82e54: Loading layer [==================================================>] 2.56kB/2.56kB

334547094992: Loading layer [==================================================>] 5.826MB/5.826MB

Loaded image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4

Create the manifest and apply it:

[root@master prometheus]# kubectl apply -f grafana.yaml

deployment.apps/monitoring-grafana created

service/monitoring-grafana created

The resources are created in the kube-system namespace. The manifest:

apiVersion: apps/v1
kind: Deployment                      # resource type
metadata:
  name: monitoring-grafana
  namespace: kube-system              # namespace the Deployment is placed in
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    metadata:
      labels:                         # labels
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana                 # container and the image it runs
        image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  # type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
  type: NodePort

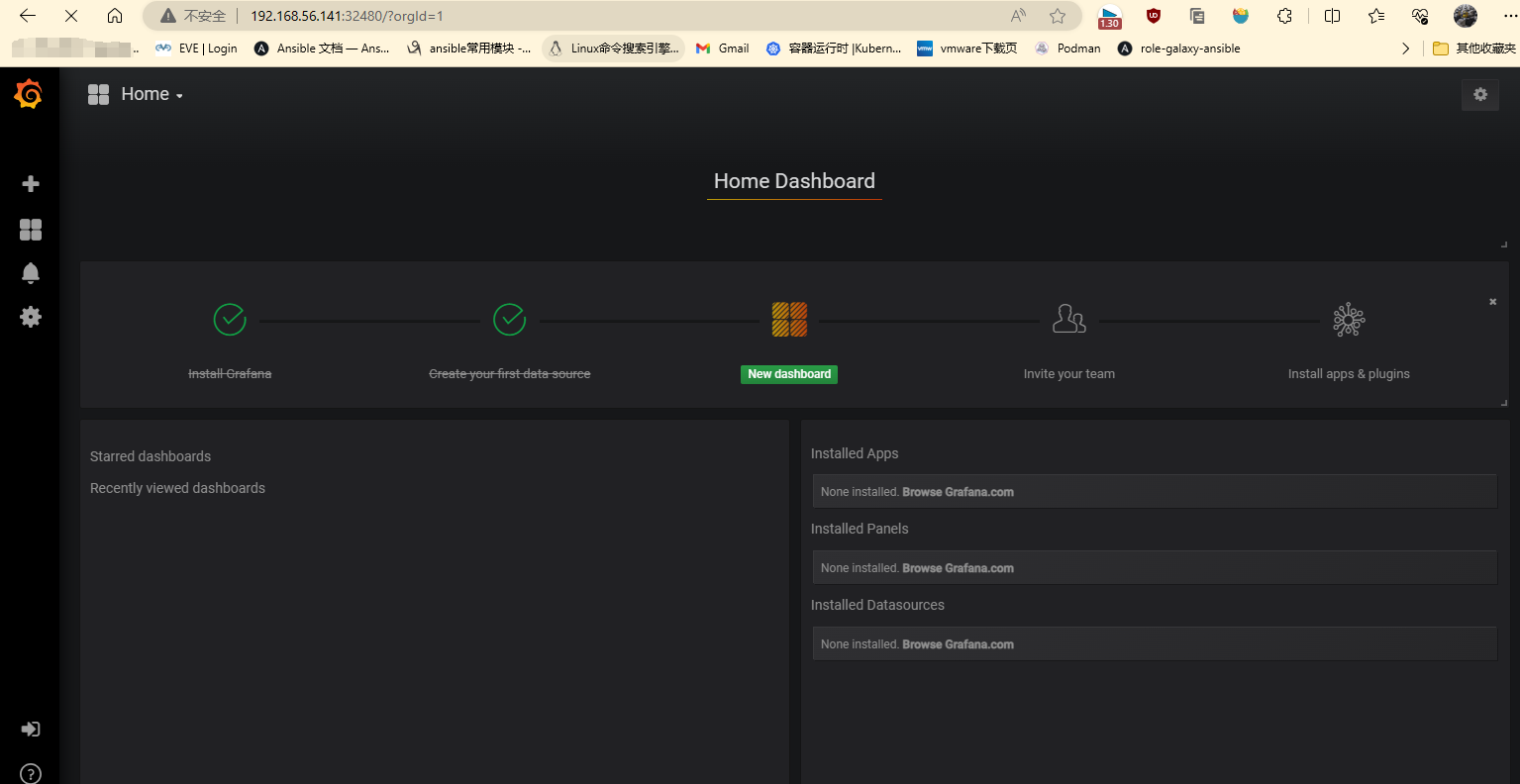

6-2: Check the exposed port

[root@master prometheus]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 9d

monitoring-grafana NodePort 10.102.202.134 <none> 80:32480/TCP 2m54s

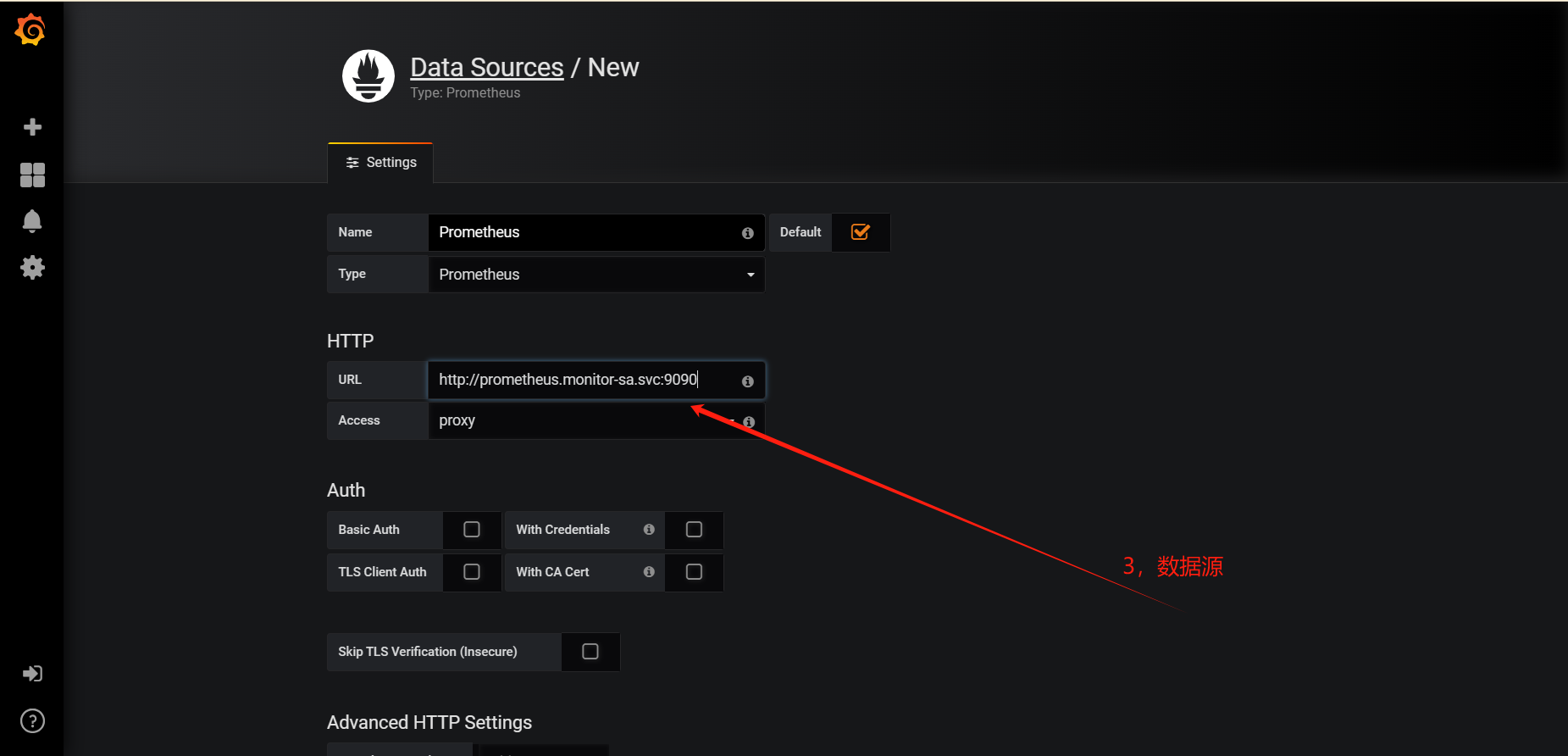

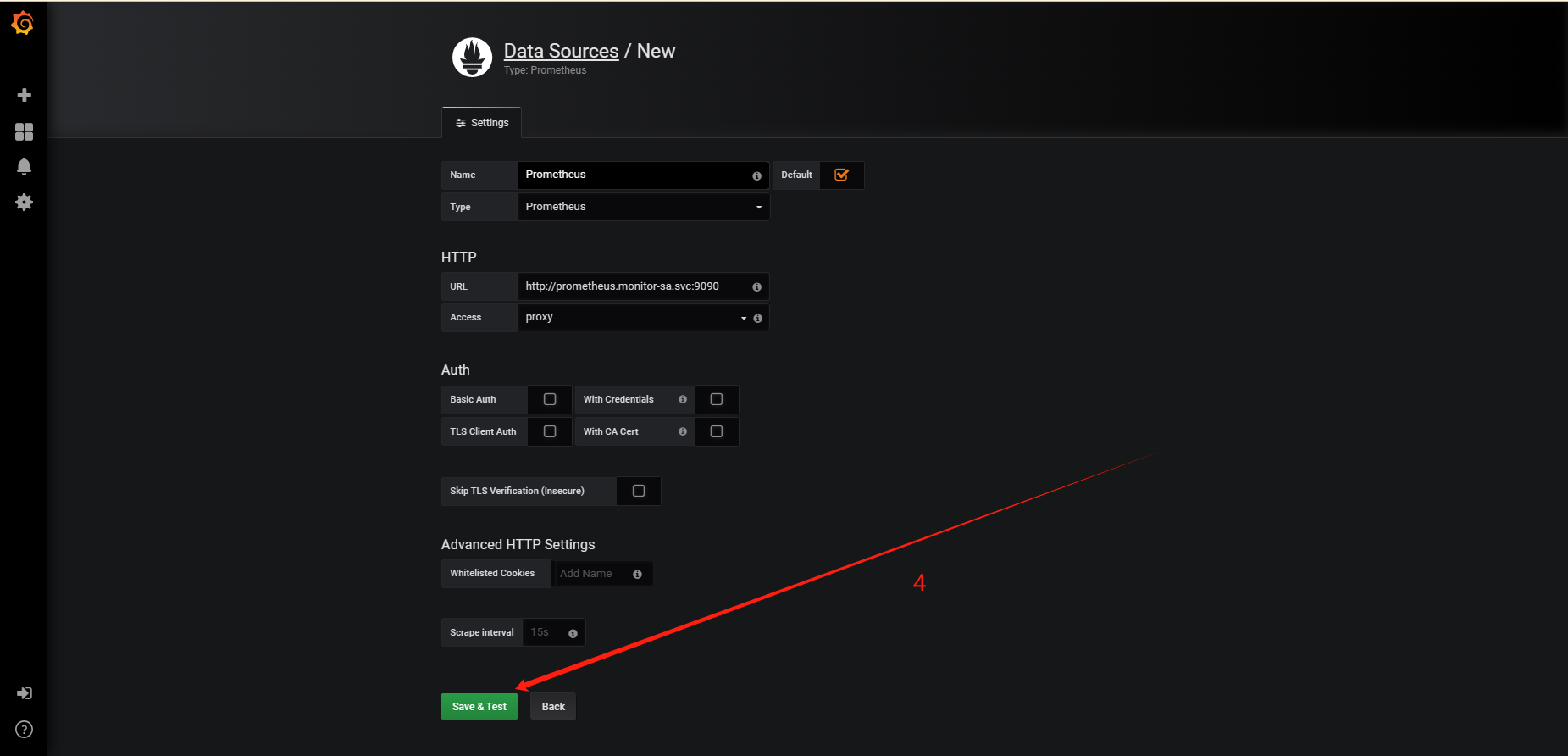

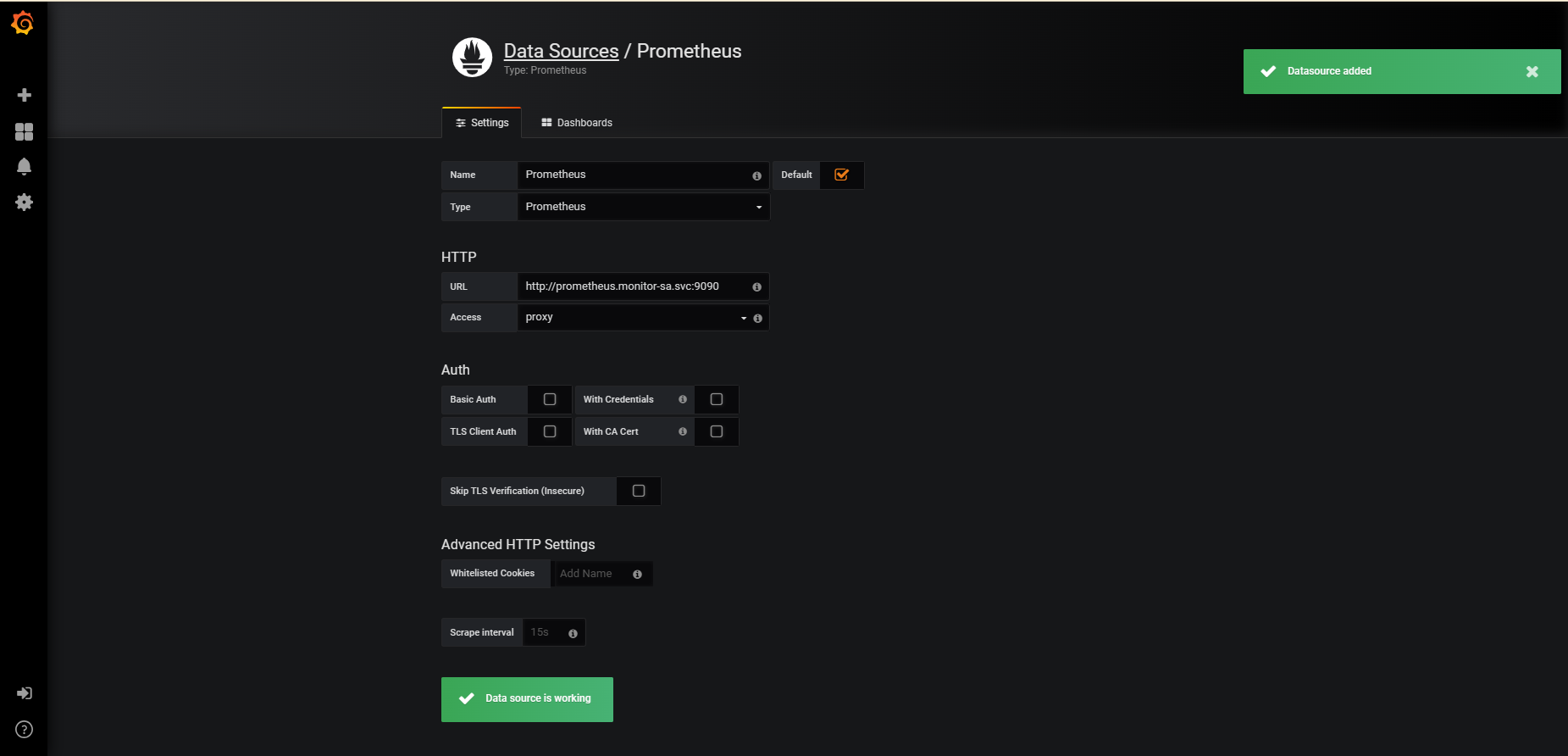

6-3: Add Prometheus as a data source

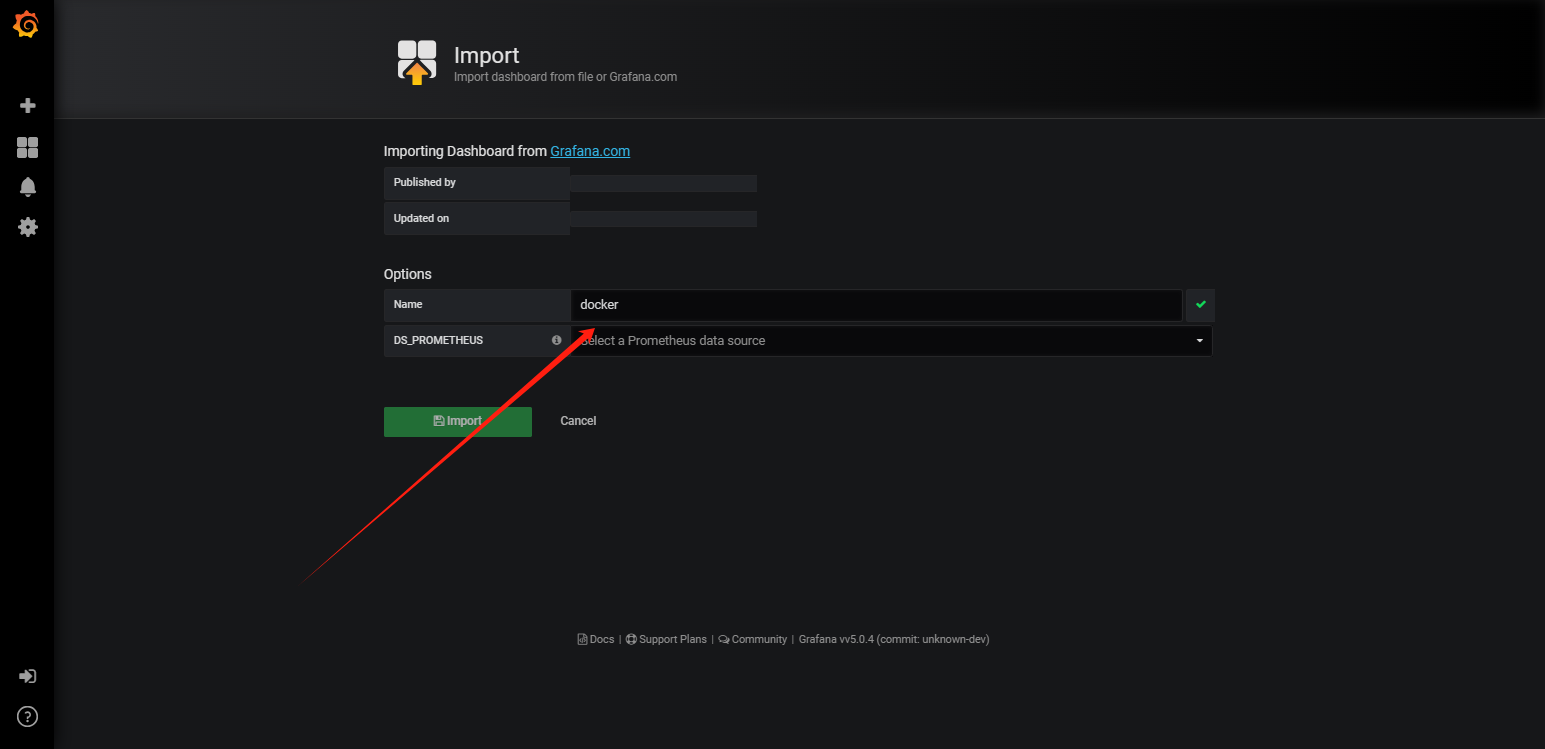

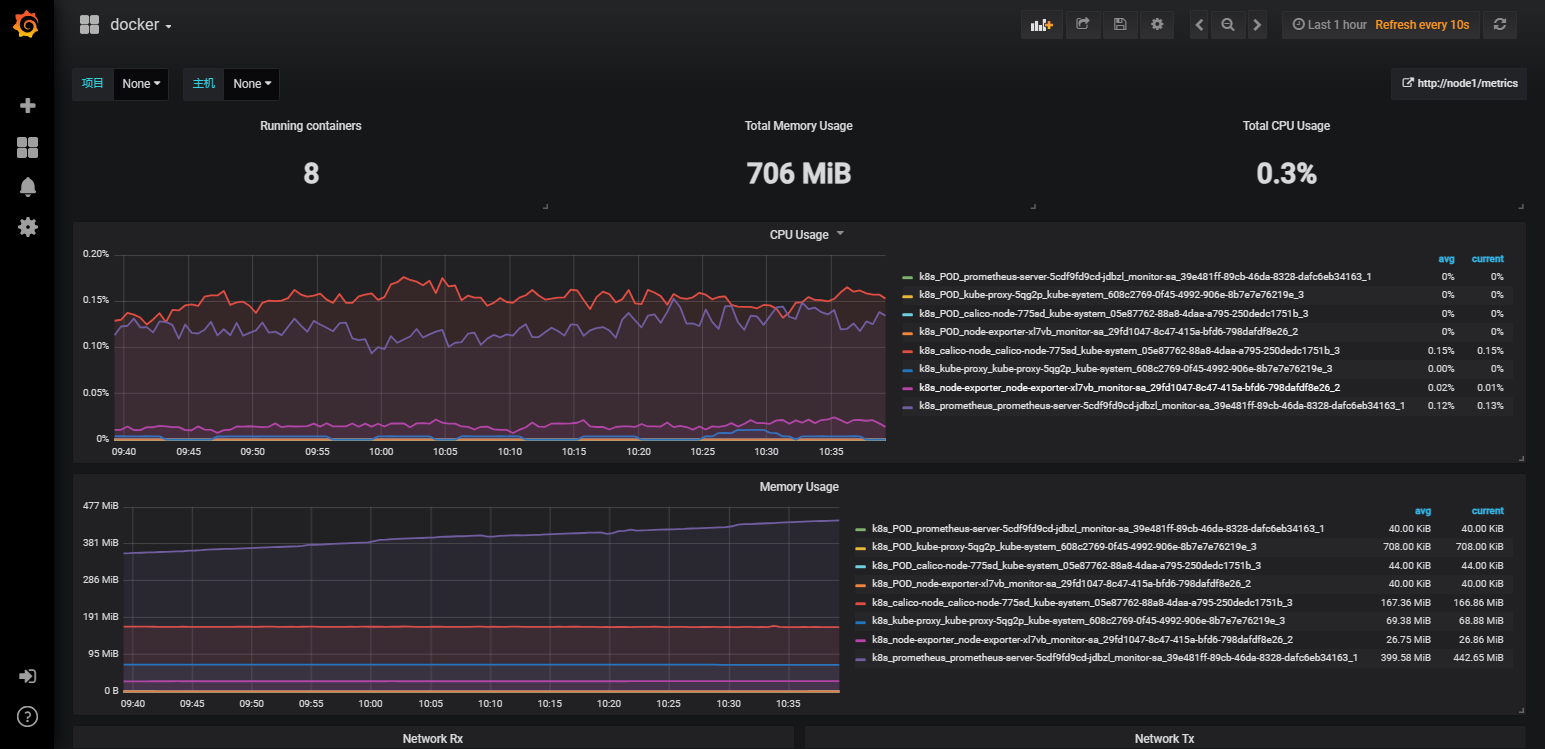
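
The data source can also be created without the web form, through Grafana's HTTP API. The following is a sketch and not the procedure shown in the screenshots: the function name is illustrative, the data source name "Prometheus" is an arbitrary choice, and the URL assumes the in-cluster DNS name of the prometheus Service in monitor-sa. No credentials are passed because the Deployment above enables anonymous access with the Admin role.

```shell
# Register Prometheus as a Grafana data source via POST /api/datasources.
# $1: Grafana base URL, for example http://192.168.56.141:32480
add_prometheus_datasource() {
  curl -fsS -X POST "$1/api/datasources" \
    -H 'Content-Type: application/json' \
    -d '{"name":"Prometheus","type":"prometheus","url":"http://prometheus.monitor-sa.svc:9090","access":"proxy"}'
}

# Usage: add_prometheus_datasource http://192.168.56.141:32480
```

With access set to proxy, the Grafana server itself contacts Prometheus, so the in-cluster Service name is sufficient and the NodePort is not needed.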

6-4: Import the dashboard file docker_rev1.json and connect it to the data source to view container metrics

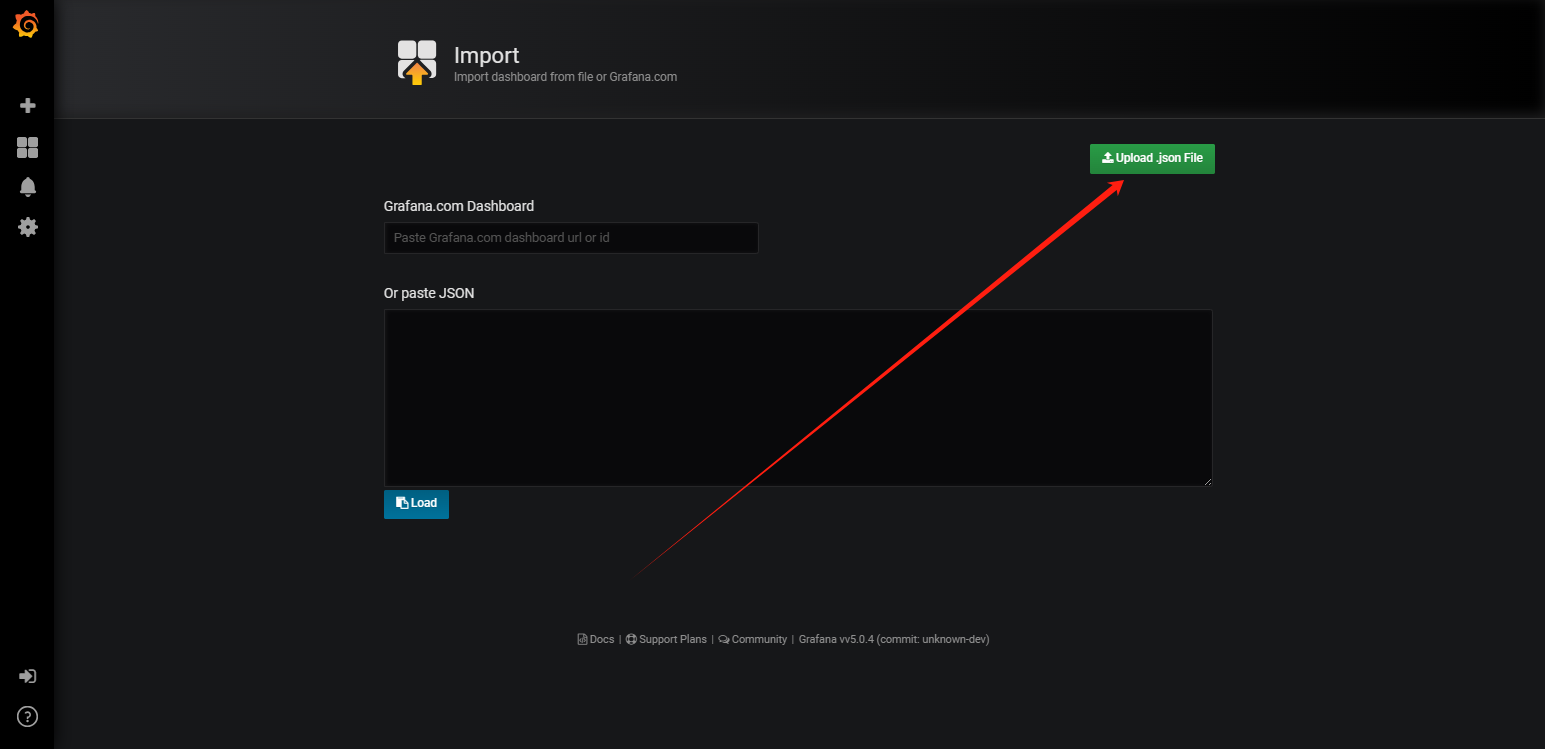

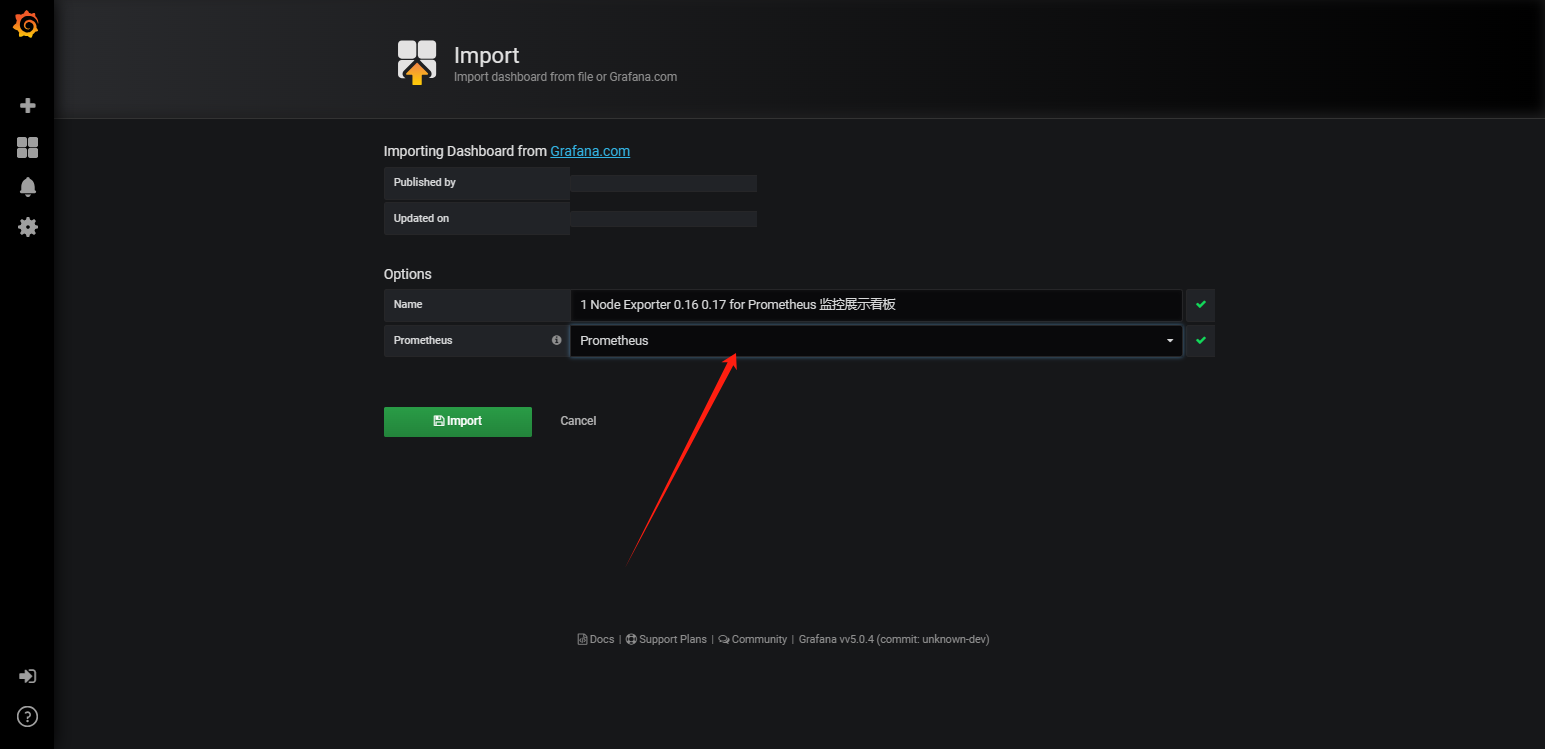

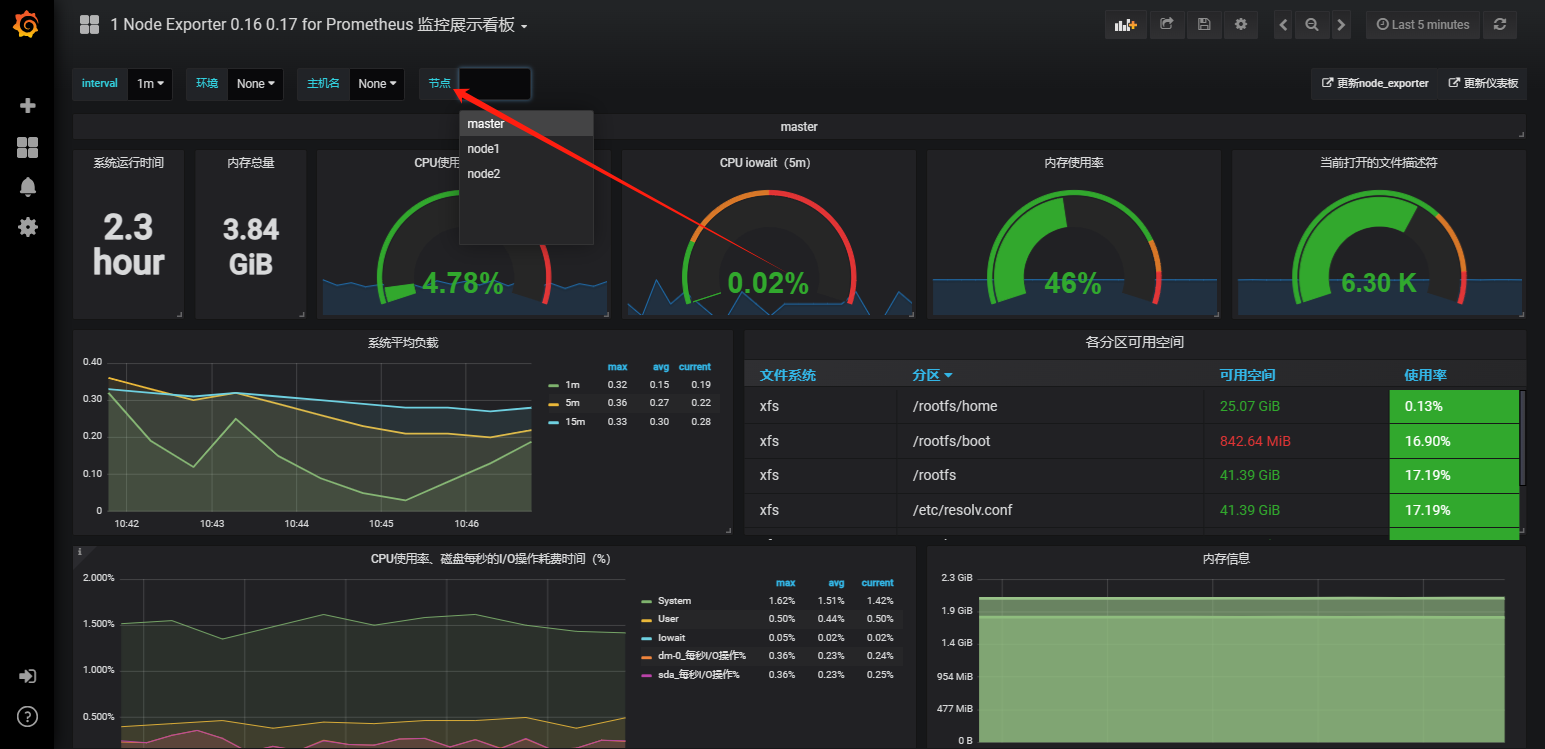

6-5: Import node-export.json and connect it to the data source to view node metrics

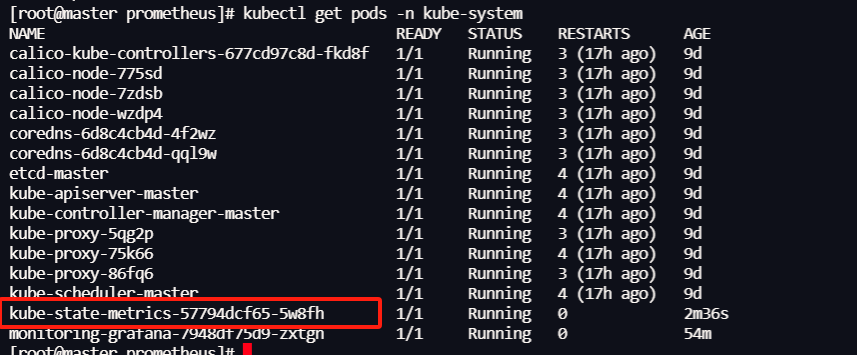

6-6: Install the kube-state-metrics component so that pod status can also be viewed

[root@master prometheus]# kubectl apply -f kube-state-metrics-rbac.yaml

serviceaccount/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
rules:
- apiGroups: [""]
  resources: ["nodes", "pods", "services", "resourcequotas", "replicationcontrollers", "limitranges", "persistentvolumeclaims", "persistentvolumes", "namespaces", "endpoints"]
  verbs: ["list", "watch"]
- apiGroups: ["extensions"]
  resources: ["daemonsets", "deployments", "replicasets"]
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  # on current clusters DaemonSets, Deployments, and ReplicaSets are served by the apps API group
  resources: ["statefulsets", "daemonsets", "deployments", "replicasets"]
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources: ["cronjobs", "jobs"]
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources: ["horizontalpodautoscalers"]
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system

6-7: Upload the image archive to the worker nodes and load it

[root@node1 ~]# docker load -i kube-state-metrics_1_9_0.tar.gz

932da5156413: Loading layer [==================================================>] 3.062MB/3.062MB

bd8df7c22fdb: Loading layer [==================================================>] 31MB/31MB

Loaded image: quay.io/coreos/kube-state-metrics:v1.9.0

[root@node2 ~]# docker load -i kube-state-metrics_1_9_0.tar.gz

932da5156413: Loading layer [==================================================>] 3.062MB/3.062MB

bd8df7c22fdb: Loading layer [==================================================>] 31MB/31MB

Loaded image: quay.io/coreos/kube-state-metrics:v1.9.0

6-8: Create the kube-state-metrics Deployment manifest and apply it

[root@master prometheus]# kubectl apply -f kube-state-metrics-deploy.yaml

deployment.apps/kube-state-metrics created

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-state-metrics
  template:
    metadata:
      labels:
        app: kube-state-metrics
    spec:
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: quay.io/coreos/kube-state-metrics:v1.9.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080

6-9: Create the kube-state-metrics Service manifest and apply it

[root@master prometheus]# kubectl apply -f kube-state-metrics-svc.yaml

service/kube-state-metrics created

apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
  name: kube-state-metrics
  namespace: kube-system
  labels:
    app: kube-state-metrics
spec:
  ports:
  - name: kube-state-metrics
    port: 8080
    protocol: TCP
  selector:
    app: kube-state-metrics

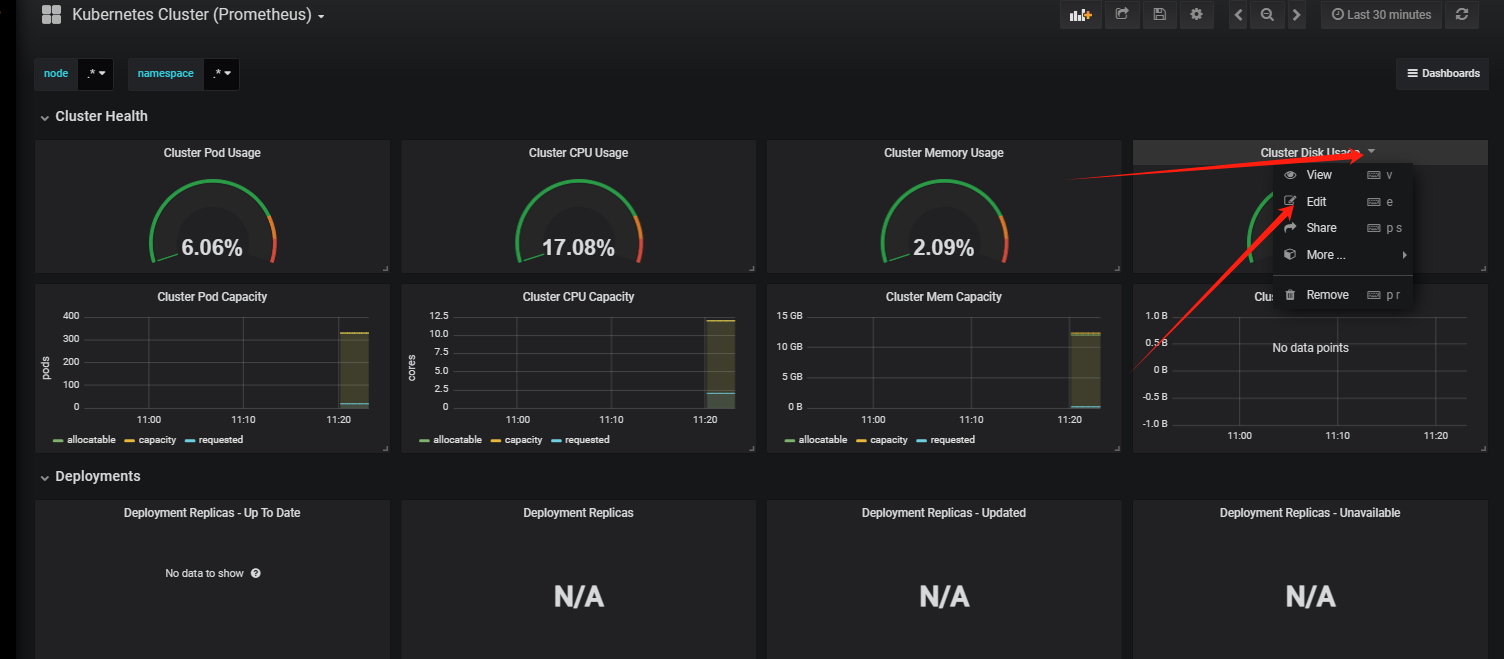
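
Because the Service carries the prometheus.io/scrape annotation, the kubernetes-service-endpoints job picks it up, and the pod state becomes queryable in Prometheus. As a quick check, the sketch below counts pods per phase through the query API. The function name is illustrative; kube_pod_status_phase is one of the metrics kube-state-metrics exposes.

```shell
# Count pods per phase (Pending, Running, Succeeded, Failed, Unknown).
# $1: Prometheus base URL, for example http://192.168.56.141:30844
pods_by_phase() {
  curl -fsS -G "$1/api/v1/query" \
    --data-urlencode 'query=sum by (phase) (kube_pod_status_phase)'
}

# Usage: pods_by_phase http://192.168.56.141:30844
```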

6-10: Import the Prometheus dashboard JSON file to monitor pods

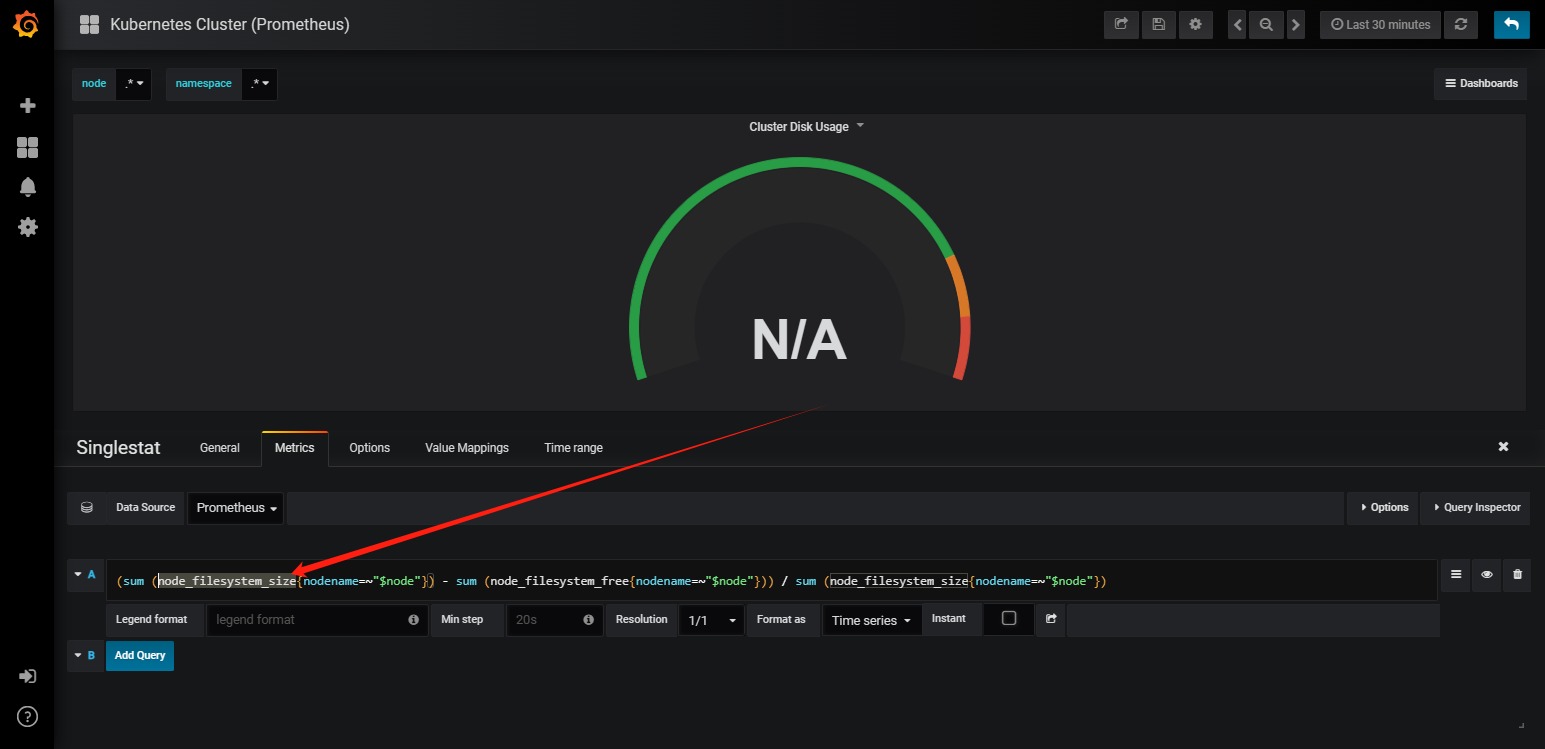

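6-11: The disk panel above shows no data; fix it as follows

6-11-1: Copy the panel's query expression and search for it on the Prometheus page

6-11-2: Delete characters from the end of the metric name to check whether a similarly named metric exists (the name may differ). Here it turns out that the name used in Grafana is missing the "_bytes" suffix

6-11-3: Replace the metric name in the Grafana panel with the name found in Prometheus, and the data is displayed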
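
The name lookup in step 6-11-2 can also be done from the command line by listing every metric name Prometheus knows and filtering by prefix. This is a sketch with an illustrative function name; it reads the JSON returned by the /api/v1/label/__name__/values endpoint and uses plain grep instead of a JSON parser. The mismatch is probably explained by the node-exporter version used here: release 0.16.0 renamed a number of metrics to include their unit, so dashboards written for older versions lack suffixes such as _bytes.

```shell
# Read the JSON list of metric names on stdin and print those starting with a prefix.
# $1: metric name prefix, for example node_filesystem
metric_names() {
  grep -o '"[^"]*"' | tr -d '"' | grep "^$1"
}

# Usage:
#   curl -fsS "http://<node-ip>:<node-port>/api/v1/label/__name__/values" | metric_names node_filesystem
```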
