ETL Components: Data Processing with Flume and Kafka

Flume and Kafka

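1. In Flume's conf directory, create syslog_mem_kafka.conf and add the content below

Opening the file in an editor creates it automatically. Because it is created with sudo, it is owned by root, so change its ownership to the current user afterwards.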

sudo vim syslog_mem_kafka.conf

Add the following content:

agent1.sources = src

agent1.channels = ch1

agent1.sinks = des1

# Set the source type, port, and host name

agent1.sources.src.type = syslogtcp

agent1.sources.src.port = 6868

agent1.sources.src.host = master

# Set the channel type

agent1.channels.ch1.type = memory

# Set the sink type and the Kafka broker list

agent1.sinks.des1.type = org.apache.flume.sink.kafka.KafkaSink

agent1.sinks.des1.brokerList = master:9092,slave1:9092,slave2:9092

# Specify the Kafka topic name

agent1.sinks.des1.topic = flumekafka

# Bind the source to the channel

agent1.sources.src.channels = ch1

# Bind the sink to the channel

agent1.sinks.des1.channel = ch1

Change ownership: sudo chown -R cwl02:cwl02 /opt/
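Flume reads the text before the first dot of each key as the agent name, so a mistyped prefix (say `agnet1` for `agent1`) is not a syntax error; the property just ends up under a different agent. A quick check before starting the agent can catch this. The sketch below is only an illustration: `check_agent_prefix` is a hypothetical helper name, not part of Flume.

```shell
#!/bin/sh
# Print every property line whose key does not start with "<agent>.".
# Comment lines (#) and blank lines are skipped.
# Returns 0 when all keys match, 1 when at least one does not.
# Usage: check_agent_prefix <conf-file> <agent-name>
check_agent_prefix() {
    # First grep drops comments and blank lines,
    # second grep drops lines that already carry the expected prefix.
    bad=$(grep -Ev '^[[:space:]]*(#|$)' "$1" | grep -Ev "^[[:space:]]*$2\.")
    if [ -n "$bad" ]; then
        printf '%s\n' "$bad"
        return 1
    fi
    return 0
}
```

For example, `check_agent_prefix /opt/flume/conf/syslog_mem_kafka.conf agent1` prints nothing and returns 0 when every key starts with `agent1.`; otherwise it lists the offending lines.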

2. Start Kafka on all three nodes

/opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

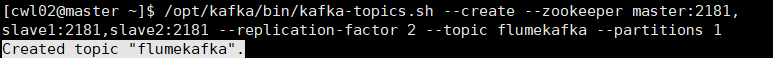
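Started this way, each broker stays in the foreground and holds its terminal. kafka-server-start.sh also accepts a -daemon flag that runs the broker in the background. The sketch below only prints one ssh command per node and runs nothing by itself. It assumes passwordless SSH from master to every node, which the steps here do not cover, and `kafka_start_cmds` is a made-up helper name.

```shell
#!/bin/sh
# Print (not run) one start command per node, using Kafka's -daemon flag
# so the broker runs in the background instead of holding the terminal.
# Assumption: Kafka is installed under /opt/kafka on every node.
# Usage: kafka_start_cmds <host>...
kafka_start_cmds() {
    for host in "$@"; do
        printf "ssh %s '%s -daemon %s'\n" "$host" \
            /opt/kafka/bin/kafka-server-start.sh \
            /opt/kafka/config/server.properties
    done
}
```

Running `kafka_start_cmds master slave1 slave2` lets you review the commands first; piping the output into `sh` would then execute them.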

3. Create a topic named flumekafka

Open a new terminal on master

/opt/kafka/bin/kafka-topics.sh --create --zookeeper master:2181,slave1:2181,slave2:2181 --replication-factor 2 --topic flumekafka --partitions 1

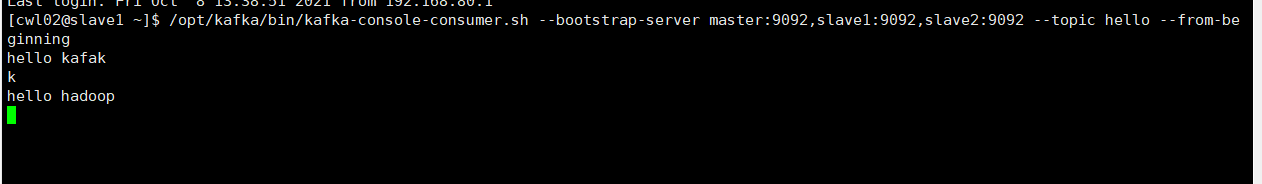
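To confirm that the topic exists with the expected partition count and replication factor, kafka-topics.sh can describe it using the same --zookeeper connection string. The sketch below is a rough helper under that assumption; `join_hosts` and `describe_topic` are hypothetical names, and `describe_topic` is only defined here, not called, since it needs a running cluster.

```shell
#!/bin/sh
# Join host names into "host:port,host:port,..." as expected by
# --zookeeper or --bootstrap-server.
# Usage: join_hosts <port> <host>...
join_hosts() {
    port=$1
    shift
    result=""
    for host in "$@"; do
        # Add a comma only when result already holds an entry.
        result="${result:+$result,}${host}:${port}"
    done
    printf '%s\n' "$result"
}

# Show partitions, replication factor, leader, and in-sync replicas of a topic.
# Usage: describe_topic <topic>
describe_topic() {
    /opt/kafka/bin/kafka-topics.sh --describe \
        --zookeeper "$(join_hosts 2181 master slave1 slave2)" \
        --topic "$1"
}
```

With the cluster up, `describe_topic flumekafka` should list one partition with two replicas if the create command above succeeded.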

4. Create a consumer

Open a new terminal on slave1

/opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server master:9092,slave1:9092,slave2:9092 --topic flumekafka --from-beginning

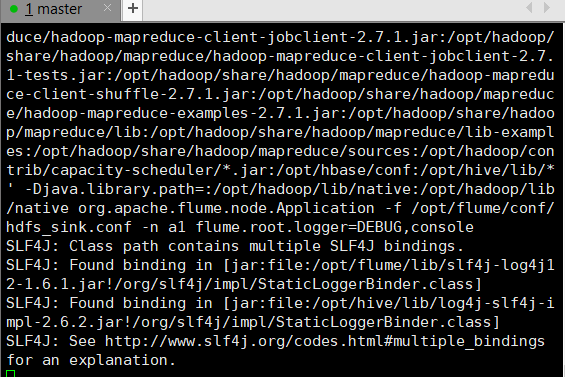

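5. Start Flume in a new master terminal

flume-ng agent -c /opt/flume/conf/ -f /opt/flume/conf/syslog_mem_kafka.conf -n agent1 -Dflume.root.logger=DEBUG,console

The value of -n must match the agent name used in the configuration file (agent1), and -Dflume.root.logger is written without a space after -D.

Then open another master terminal, send 'hello kafkaflume' to the syslog source, and check the output in the consumer terminal on slave1.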
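One way to send the 'hello kafkaflume' test message is to write a syslog-style line to the TCP port the source listens on (master:6868 in the configuration above). The sketch below assumes `nc` (netcat) is installed, which this walkthrough does not state; `syslog_line` and `send_to_flume` are hypothetical helper names. A syslog line starts with a priority in angle brackets, computed as facility * 8 + severity.

```shell
#!/bin/sh
# Format one syslog line: "<PRI>message", where PRI = facility * 8 + severity.
# Usage: syslog_line <facility> <severity> <message>
syslog_line() {
    printf '<%d>%s\n' "$(( $1 * 8 + $2 ))" "$3"
}

# Send one message to the Flume syslogtcp source.
# Facility 1 (user) and severity 5 (notice) are arbitrary choices for a test.
# Needs nc and a running agent, so it is only defined here, not called.
# Usage: send_to_flume <message>
send_to_flume() {
    syslog_line 1 5 "$1" | nc master 6868
}
```

Calling `send_to_flume 'hello kafkaflume'` from the second master terminal should make the message show up in the console consumer on slave1. Depending on the netcat variant, `nc` may need Ctrl-C to exit after sending.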

