[TensorFlow] Study Notes

Code from the textbook Learning TensorFlow

https://github.com/Hezi-Resheff/Oreilly-Learning-TensorFlow

GloVe resources:

https://apache-mxnet.s3.cn-north-1.amazonaws.com.cn/gluon/embeddings/glove/glove.6B.zip

https://apache-mxnet.s3.cn-north-1.amazonaws.com.cn/gluon/embeddings/glove/glove.42B.300d.zip

https://apache-mxnet.s3.cn-north-1.amazonaws.com.cn/gluon/embeddings/glove/glove.840B.300d.zip

https://apache-mxnet.s3.cn-north-1.amazonaws.com.cn/gluon/embeddings/glove/glove.twitter.27B.zip
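GloVe archives normally unpack to plain-text files in which each line holds a token followed by its vector components, separated by spaces. Below is a minimal sketch of a loader, assuming that layout. The function name `load_glove` and the two sample lines are made up for illustration.

```python
import io


def load_glove(lines):
    """Parse GloVe-style text lines into a {token: [float, ...]} dict."""
    vectors = {}
    for line in lines:
        parts = line.rstrip("\n").split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        token, values = parts[0], parts[1:]
        vectors[token] = [float(v) for v in values]
    return vectors


# Two made-up 3-dimensional entries standing in for a real GloVe text file.
sample = io.StringIO("the 0.1 0.2 0.3\ncat -0.5 0.0 0.25\n")
embeddings = load_glove(sample)
print(len(embeddings), embeddings["cat"])
```

For a real file, an open file handle can be passed instead of the `io.StringIO` object, since both yield one line at a time.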

Understanding the tf.nn.conv2d() function

https://blog.csdn.net/ifreewolf_csdn/article/details/88934681

tf.nn.conv2d() and its padding modes

https://blog.csdn.net/sinat_34328764/article/details/84303919
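The two padding modes differ mainly in how the output size and the amount of zero padding are derived. Below is a minimal sketch of the rule for one spatial dimension, following the formulas in the TensorFlow documentation. The helper name `conv_output_size` is made up for these notes.

```python
import math


def conv_output_size(in_size, filter_size, stride, padding):
    """Return (output_size, pad_before, pad_after) along one spatial dimension."""
    if padding == "VALID":
        # No padding: only windows that fit entirely inside the input are used.
        out = math.ceil((in_size - filter_size + 1) / stride)
        return out, 0, 0
    if padding == "SAME":
        # Pad with zeros so that the output size depends only on the stride.
        out = math.ceil(in_size / stride)
        total = max((out - 1) * stride + filter_size - in_size, 0)
        before = total // 2          # any odd extra zero goes at the end
        return out, before, total - before
    raise ValueError("padding must be 'SAME' or 'VALID'")


print(conv_output_size(5, 2, 2, "VALID"))  # (2, 0, 0)
print(conv_output_size(5, 2, 2, "SAME"))   # (3, 0, 1)
```

With a 5x5 input, a 2x2 filter and stride 2, VALID gives a 2x2 output, while SAME adds one row and one column of zeros at the bottom and right to give 3x3.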

Adding matrices with different numbers of rows and columns (broadcasting):

https://www.zhihu.com/question/61919551
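TensorFlow follows NumPy-style broadcasting, so the behaviour can be tried out in plain NumPy. The short sketch below uses made-up arrays to show a vector being stretched along the missing dimension before the addition.

```python
import numpy as np

a = np.array([[1, 2, 3],
              [4, 5, 6]])        # shape (2, 3)
row = np.array([10, 20, 30])     # shape (3,), treated as (1, 3)
col = np.array([[100], [200]])   # shape (2, 1)

print(a + row)    # row is repeated for each of the 2 rows
print(a + col)    # col is repeated for each of the 3 columns
print(row + col)  # (3,) and (2, 1) broadcast to (2, 3)
```

Shapes are compared from the last dimension backwards. Two dimensions are compatible when they are equal or when one of them is 1.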

Original text of Section 2.1 of the Chinese edition of Deep Learning:

When the convolution kernel has more than one channel (two output channels in the example below):

    import tensorflow as tf

    # Use TF1-style graph mode so that Session.run() works under TensorFlow 2.x.
    tf.compat.v1.disable_eager_execution()

    # One 5x5 single-channel image: shape [batch, height, width, channels] = [1, 5, 5, 1].
    input = tf.Variable([[
        [[1.], [2.], [3.], [4.], [5.]],
        [[6.], [7.], [8.], [9.], [10.]],
        [[11.], [12.], [13.], [14.], [15.]],
        [[16.], [17.], [18.], [19.], [20.]],
        [[21.], [22.], [23.], [24.], [25.]]
    ]])

    # Filter shape is [height, width, in_channels, out_channels] = [2, 2, 1, 2].
    filter1 = tf.Variable(tf.constant([[1., 0., 0., 1.], [2., 0., 0., 2.]], shape=[2, 2, 1, 2]))
    filter2 = tf.Variable(tf.constant([[1., 2.], [0., 0.], [0., 0.], [1., 2.]], shape=[2, 2, 1, 2]))

    # Stride 2 in both spatial dimensions, once per padding mode.
    op_same1 = tf.nn.conv2d(input, filter1, strides=[1, 2, 2, 1], padding='SAME')
    op_same2 = tf.nn.conv2d(input, filter2, strides=[1, 2, 2, 1], padding='SAME')
    op_valid1 = tf.nn.conv2d(input, filter1, strides=[1, 2, 2, 1], padding='VALID')
    op_valid2 = tf.nn.conv2d(input, filter2, strides=[1, 2, 2, 1], padding='VALID')

    init = tf.compat.v1.global_variables_initializer()
    with tf.compat.v1.Session() as sess:
        sess.run(init)
        # print("input:\n", sess.run(input))
        print("\n\nfilter1:\n", sess.run(filter1))
        print("\n\nfilter2:\n", sess.run(filter2))
        print("\n\nop_same1:\n", sess.run(op_same1))
        print("\n\nop_same2:\n", sess.run(op_same2))
        print("\n\nop_valid1:\n", sess.run(op_valid1))
        print("\n\nop_valid2:\n", sess.run(op_valid2))

    '''
    filter1:
    [[[[1. 0.]]
      [[0. 1.]]]
     [[[2. 0.]]
      [[0. 2.]]]]

    filter2:
    [[[[1. 2.]]
      [[0. 0.]]]
     [[[0. 0.]]
      [[1. 2.]]]]

    op_same1:
    [[[[13. 16.]    # 13 = 1*1 + 2*0 + 6*2 + 7*0
       [19. 22.]
       [25.  0.]]
      [[43. 46.]
       [49. 52.]
       [55.  0.]]
      [[21. 22.]
       [23. 24.]
       [25.  0.]]]]

    op_same2:
    [[[[ 8. 16.]
       [12. 24.]
       [ 5. 10.]]
      [[28. 56.]
       [32. 64.]
       [15. 30.]]
      [[21. 42.]
       [23. 46.]
       [25. 50.]]]]

    op_valid1:
    [[[[13. 16.]
       [19. 22.]]
      [[43. 46.]
       [49. 52.]]]]

    op_valid2:
    [[[[ 8. 16.]    # 8 = 1*1 + 2*0 + 6*0 + 7*1
       [12. 24.]]
      [[28. 56.]
       [32. 64.]]]]
    '''
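The hand calculations in the comments above (13 and 8 for the first output position) can be checked without TensorFlow. Below is a minimal NumPy sketch, assuming VALID padding and a single image. `conv2d_valid` is a made-up helper, not a TensorFlow or NumPy API. Like tf.nn.conv2d, it does not flip the kernel.

```python
import numpy as np


def conv2d_valid(image, kernel, stride):
    """Naive convolution of one image with VALID padding.

    image:  (height, width, in_channels)
    kernel: (k_height, k_width, in_channels, out_channels)
    """
    kh, kw, _, out_ch = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w, out_ch))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh,
                          j * stride:j * stride + kw, :]
            # Sum over height, width and input channels for every output channel.
            out[i, j, :] = np.tensordot(patch, kernel, axes=([0, 1, 2], [0, 1, 2]))
    return out


# Top-left 2x2 window of the 5x5 input, with a single input channel.
patch = np.array([[1., 2.], [6., 7.]]).reshape(2, 2, 1)
filter1 = np.array([1., 0., 0., 1., 2., 0., 0., 2.]).reshape(2, 2, 1, 2)
filter2 = np.array([1., 2., 0., 0., 0., 0., 1., 2.]).reshape(2, 2, 1, 2)

print(conv2d_valid(patch, filter1, stride=2))  # first output position for filter1
print(conv2d_valid(patch, filter2, stride=2))  # first output position for filter2
```

Each output channel is produced by its own 2x2 slice of the filter, `kernel[:, :, 0, c]`, which is why every output position carries two numbers.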

[TensorFlow] Pooling with tf.nn.max_pool

https://blog.csdn.net/mao_xiao_feng/article/details/53453926
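tf.nn.max_pool works on 4-D tensors laid out as [batch, height, width, channels]. The idea is easier to see on a single 2-D channel. Below is a minimal NumPy sketch, assuming VALID padding and a square window. `max_pool_valid` is a made-up helper name.

```python
import numpy as np


def max_pool_valid(image, ksize, stride):
    """Max pooling over a (height, width) array with VALID padding."""
    out_h = (image.shape[0] - ksize) // stride + 1
    out_w = (image.shape[1] - ksize) // stride + 1
    out = np.empty((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            window = image[i * stride:i * stride + ksize,
                           j * stride:j * stride + ksize]
            out[i, j] = window.max()   # keep only the largest value in the window
    return out


image = np.arange(1, 17).reshape(4, 4)   # values 1..16, row by row
pooled = max_pool_valid(image, ksize=2, stride=2)
print(pooled)   # [[ 6  8] [14 16]]
```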

How tf.slice() actually slices (the linked post's title adds: "strangle me if you still don't get it")

https://www.jianshu.com/p/71e6ef6c121b
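tf.slice(input, begin, size) takes a start index and a length per axis, which differs from Python's start:stop notation. Below is a minimal NumPy sketch of the same semantics. `slice_like_tf` is a made-up helper, and the sample tensor follows the example commonly used in the TensorFlow documentation.

```python
import numpy as np


def slice_like_tf(array, begin, size):
    """Mimic tf.slice(array, begin, size) with NumPy indexing."""
    index = []
    for dim, (start, length) in enumerate(zip(begin, size)):
        # A size of -1 means "everything from start to the end of this axis".
        stop = array.shape[dim] if length == -1 else start + length
        index.append(slice(start, stop))
    return array[tuple(index)]


t = np.array([[[1, 1, 1], [2, 2, 2]],
              [[3, 3, 3], [4, 4, 4]],
              [[5, 5, 5], [6, 6, 6]]])   # shape (3, 2, 3)

print(slice_like_tf(t, [1, 0, 0], [1, 1, 3]))   # [[[3 3 3]]]
print(slice_like_tf(t, [1, 0, 0], [1, 2, 3]))   # [[[3 3 3] [4 4 4]]]
print(slice_like_tf(t, [1, 0, 0], [2, 1, 3]))   # [[[3 3 3]] [[5 5 5]]]
```

The shape of the result always equals `size`, with each -1 replaced by the remaining length of that axis.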

What feed_dict in TensorFlow really is (think of it as Python's format, haha)

https://blog.csdn.net/woai8339/article/details/82958649
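The format analogy can be made concrete. The snippet below is a loose sketch in plain Python, not TensorFlow code: the template string stands in for a graph with placeholders, and the dict stands in for feed_dict. All names are made up for illustration.

```python
# A template with named slots plays the role of a graph with placeholders.
template = "{x} * {w} + {b}"

# The dict plays the role of feed_dict: slot name -> concrete value.
feed = {"x": 3, "w": 2, "b": 1}
filled = template.format(**feed)
print(filled)   # 3 * 2 + 1

# The same template can be reused with other values, just as one graph
# can be run many times with different feed_dict contents.
print(template.format(x=10, w=2, b=1))
```

The analogy only covers the "fill in values at run time" part. A real session run also evaluates the graph, which string formatting does not.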

Python numpy.transpose explained in detail

https://blog.csdn.net/u012762410/article/details/78912667

TF 2.0: the difference between tf.reshape and tf.transpose

https://www.jianshu.com/p/da736223b697
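The difference shows up on a small array. The sketch below uses NumPy, whose reshape and transpose behave the same way as tf.reshape and tf.transpose for this purpose. Reshaping keeps the read order of the elements, while transposing reorders them.

```python
import numpy as np

a = np.arange(6).reshape(2, 3)   # [[0, 1, 2], [3, 4, 5]]

reshaped = a.reshape(3, 2)       # same read order, new row length
transposed = a.transpose(1, 0)   # axes swapped: element (i, j) moves to (j, i)

print(reshaped)     # [[0 1] [2 3] [4 5]]
print(transposed)   # [[0 3] [1 4] [2 5]]
```

Both results have shape (3, 2), but only the reshaped one still reads 0, 1, 2, 3, 4, 5 from start to end.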

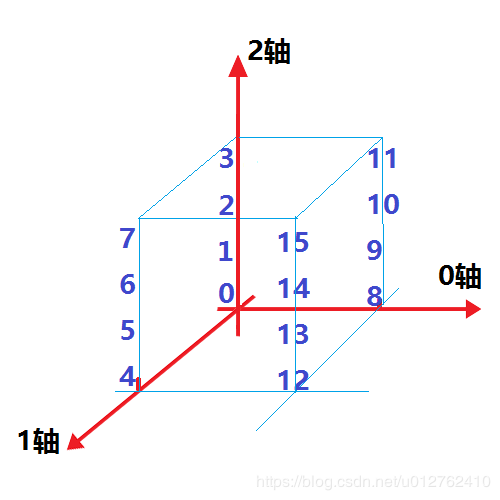

    A = array([[[ 0,  1,  2,  3],
                [ 4,  5,  6,  7]],
               [[ 8,  9, 10, 11],
                [12, 13, 14, 15]]])

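PS: Once the reading order is clear, blog posts about tf.transpose become much easier to follow. Start at the origin and read the numbers along axis 2. Then move one step along axis 1 and read along axis 2 again. Finally, return to the origin, move one step along axis 0, and repeat the same procedure. (Remember: axis 0 is the last axis to move!)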
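The reading order can be traced in NumPy with the same 2x2x4 array. This is a small sketch in which the loop nesting mirrors the rule: axis 2 is read first, then axis 1 advances, and axis 0 advances last.

```python
import numpy as np

A = np.arange(16).reshape(2, 2, 4)

# Axis 2 varies fastest, then axis 1, and axis 0 last.
for i in range(A.shape[0]):          # axis 0 moves last
    for j in range(A.shape[1]):      # then axis 1
        print((i, j), A[i, j, :])    # read all of axis 2 in one go

# After swapping axes 0 and 1, the element at [i, j, k] is found at [j, i, k].
B = A.transpose(1, 0, 2)
print(B[0, 1, :])   # same numbers as A[1, 0, :], i.e. [ 8  9 10 11]
```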

Chrome on Windows cannot connect to TensorBoard

https://blog.csdn.net/gejiaming/article/details/79603396

tensorboard --logdir=[directory path] --host=127.0.0.1

Then open http://127.0.0.1:6006/ in the browser.

[Word2Vec] Understanding the Skip-Gram model

https://www.jianshu.com/p/da235893e4a5
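Skip-gram training data consists of (center word, context word) pairs taken from a sliding window. Below is a minimal sketch of that pair generation in plain Python. The function name `skipgram_pairs` and the sample sentence are made up for illustration.

```python
def skipgram_pairs(tokens, window):
    """Return (center, context) pairs for tokens within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:                      # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


sentence = "the quick brown fox".split()
pairs = skipgram_pairs(sentence, window=1)
print(pairs)
```

With a window of 1, each inner word contributes two pairs and each edge word contributes one, so the four-word sentence yields six pairs.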

Word2Vec (Part 1): NLP With Deep Learning with Tensorflow (Skip-gram)

How to use the tf.nn.embedding_lookup function

https://www.cnblogs.com/gaofighting/p/9625868.html
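For a single, unpartitioned embedding matrix, the lookup amounts to selecting rows by index. Below is a NumPy sketch of that behaviour with a made-up 5x3 matrix. It illustrates the idea and is not the TensorFlow implementation.

```python
import numpy as np

# A made-up embedding matrix: 5 vocabulary entries, 3 dimensions each.
embeddings = np.arange(15, dtype=np.float32).reshape(5, 3)
ids = np.array([3, 0, 3])

# Row selection: one embedding vector per id, in the order of the ids.
looked_up = embeddings[ids]
print(looked_up)

# Equivalent formulation: one-hot rows multiplied by the embedding matrix.
one_hot = np.eye(5, dtype=np.float32)[ids]
print(np.allclose(one_hot @ embeddings, looked_up))
```

The one-hot product gives the same result but builds a large, mostly zero matrix, which is why indexing is the preferred formulation.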

Source-code walkthrough of the nce_loss loss function (negative sampling) used by the word2vec skip-gram model

https://www.cnblogs.com/xiaojieshisilang/p/9284634.html

[AI in Practice] Step-by-step text recognition (recognition part: LSTM+CTC, CRNN, and chineseocr methods)

https://my.oschina.net/u/876354/blog/3070699

Python's yield explained in detail: the simplest, clearest explanation

https://blog.csdn.net/mieleizhi0522/article/details/82142856
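A short sketch of how yield pauses and resumes a function. The generator name `countdown` is made up for illustration.

```python
def countdown(n):
    """Yield n, n-1, ..., 1, pausing after each value."""
    while n > 0:
        print("about to yield", n)
        yield n          # execution stops here until the next value is requested
        n -= 1


gen = countdown(3)       # nothing runs yet; this only creates the generator
first = next(gen)        # runs the body up to the first yield
rest = list(gen)         # resumes after the yield and drains the generator
print(first, rest)       # 3 [2, 1]
```

Calling `countdown(3)` prints nothing by itself. The body only starts executing on the first `next()` call, which is the main difference from a function that returns a list.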

