【动手学深度学习pytorch】学习笔记 8.1 序列模型

8.1. 序列模型 — 动手学深度学习 2.0.0-beta0 documentation (d2l.ai)

51 序列模型【动手学深度学习v2】_哔哩哔哩_bilibili

书籍免费下载,有网页版,有Jupyter源代码版,有视频看,讲解清晰,有理论有实践。

感觉DL的东西,必须写代码跑跑看,读完书总感觉差点意思。

生成数据:

构建模型:

两个全连接层的多层感知机,ReLU激活函数和平方损失

net = nn.Sequential(nn.Linear(4, 10),

nn.ReLU(),

nn.Linear(10, 1))

net.apply(init_weights)

loss = nn.MSELoss(reduction='none')

训练模型:

def train(net, train_iter, loss, epochs, lr):

trainer = torch.optim.Adam(net.parameters(), lr)

for epoch in range(epochs):

for X, y in train_iter:

trainer.zero_grad()

l = loss(net(X), y)

l.sum().backward()

trainer.step()

print(f'epoch {epoch + 1}, '

f'loss: {d2l.evaluate_loss(net, train_iter, loss):f}')

net = get_net()

train(net, train_iter, loss, 5, 0.01)

预测:

单步预测 预测下一个时间步

多步预测 使用自己的预测(而不是原始数据)

k步预测 基于𝑘=1,4,16,64

源代码:

import torch

from torch import nn

from d2l import torch as d2l

import matplotlib.pyplot as plt

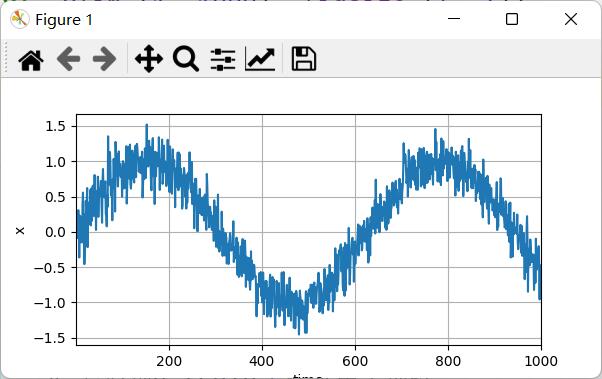

T = 1000 # 总共产生1000个点

time = torch.arange(1, T + 1, dtype=torch.float32)

x = torch.sin(0.01 * time) + torch.normal(0, 0.2, (T,))

d2l.plot(time, [x], 'time', 'x', xlim=[1, 1000], figsize=(6, 3))

plt.show()

tau = 4

features = torch.zeros((T - tau, tau))

for i in range(tau):

features[:, i] = x[i: T - tau + i]

labels = x[tau:].reshape((-1, 1))

batch_size, n_train = 16, 600 # 只有前n_train个样本用于训练

train_iter = d2l.load_array((features[:n_train], labels[:n_train]), batch_size, is_train=True)

# 初始化网络权重的函数

def init_weights(m):

if type(m) == nn.Linear:

nn.init.xavier_uniform_(m.weight)

# 一个简单的多层感知机

def get_net():

net = nn.Sequential(nn.Linear(4, 10),

nn.ReLU(),

nn.Linear(10, 1))

net.apply(init_weights)

return net

# 平方损失。注意:MSELoss计算平方误差时不带系数1/2

loss = nn.MSELoss(reduction='none')

def train(net, train_iter, loss, epochs, lr):

trainer = torch.optim.Adam(net.parameters(), lr)

for epoch in range(epochs):

for X, y in train_iter:

trainer.zero_grad()

l = loss(net(X), y)

l.sum().backward()

trainer.step()

print(f'epoch {epoch + 1}, '

f'loss: {d2l.evaluate_loss(net, train_iter, loss):f}')

net = get_net()

train(net, train_iter, loss, 5, 0.01)

onestep_preds = net(features)

d2l.plot([time, time[tau:]],

[x.detach().numpy(), onestep_preds.detach().numpy()], 'time',

'x', legend=['data', '1-step preds'], xlim=[1, 1000],

figsize=(6, 3))

plt.show()

multistep_preds = torch.zeros(T)

multistep_preds[: n_train + tau] = x[: n_train + tau]

for i in range(n_train + tau, T):

multistep_preds[i] = net(

multistep_preds[i - tau:i].reshape((1, -1)))

d2l.plot([time, time[tau:], time[n_train + tau:]],

[x.detach().numpy(), onestep_preds.detach().numpy(),

multistep_preds[n_train + tau:].detach().numpy()], 'time',

'x', legend=['data', '1-step preds', 'multistep preds'],

xlim=[1, 1000], figsize=(6, 3))

plt.show()

max_steps = 64

features = torch.zeros((T - tau - max_steps + 1, tau + max_steps))

# 列i(i<tau)是来自x的观测,其时间步从(i+1)到(i+T-tau-max_steps+1)

for i in range(tau):

features[:, i] = x[i: i + T - tau - max_steps + 1]

# 列i(i>=tau)是来自(i-tau+1)步的预测,其时间步从(i+1)到(i+T-tau-max_steps+1)

for i in range(tau, tau + max_steps):

features[:, i] = net(features[:, i - tau:i]).reshape(-1)

steps = (1, 4, 16, 64)

d2l.plot([time[tau + i - 1: T - max_steps + i] for i in steps],

[features[:, (tau + i - 1)].detach().numpy() for i in steps], 'time', 'x',

legend=[f'{i}-step preds' for i in steps], xlim=[5, 1000],

figsize=(6, 3))

plt.show()

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 震惊!C++程序真的从main开始吗?99%的程序员都答错了

· 别再用vector<bool>了!Google高级工程师:这可能是STL最大的设计失误

· 单元测试从入门到精通

· 【硬核科普】Trae如何「偷看」你的代码?零基础破解AI编程运行原理

· 上周热点回顾(3.3-3.9)

2017-06-08 【CCCC中国高校计算机大赛 - 网络技术挑战赛】 无线网络技术 样题解析