Neural Networks and Deep Learning (Qiu Xipeng), Programming Exercise 5: CNN, PyTorch Version, Explained

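Professor Qiu's exercise is a fill-in-the-blank task, and the blanks are fairly easy to complete.

Once you have finished, read through the whole source code.

No function and no line of code should leave you with an open question; only then will you be able to build your own CNN from this example later.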

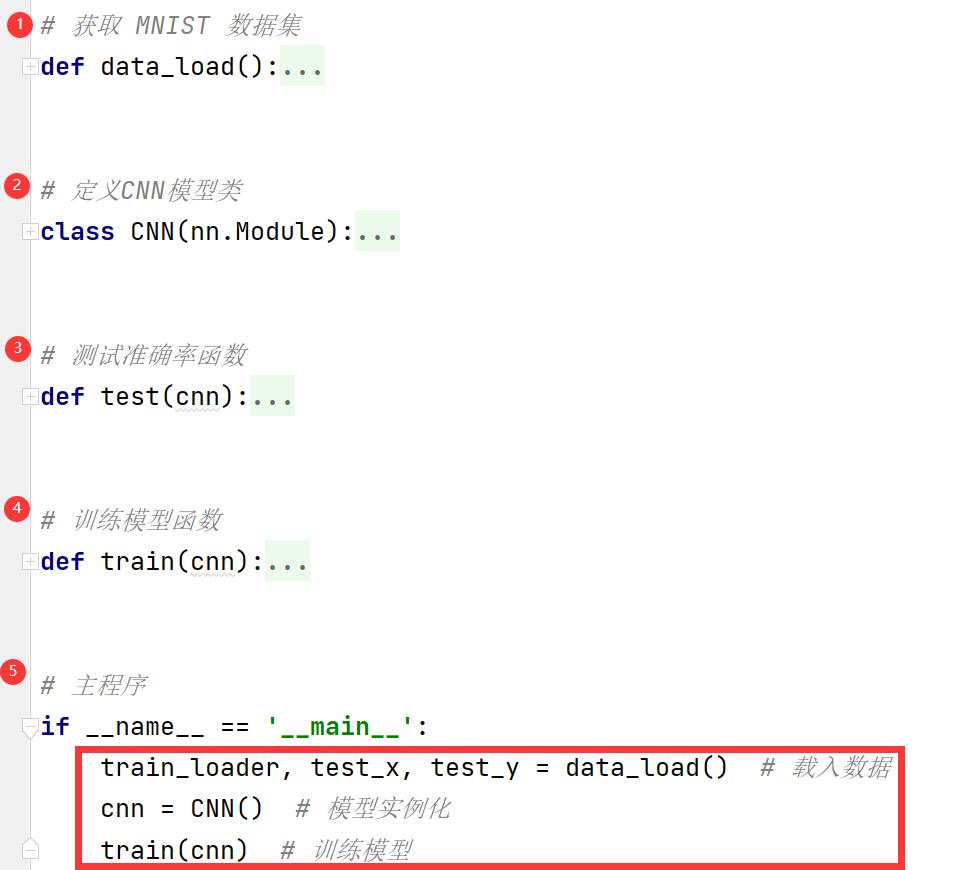

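Program structure: `data_load()` loads MNIST, the `CNN` class defines the model, `test()` measures accuracy on the first 500 test images, `train()` runs the optimization loop and calls `test()` every 100 steps, and the `__main__` block ties these together.

The complete source code with full comments: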

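```python
# https://www.cnblogs.com/douzujun/p/13454960.html

import os

import torch
import torch.nn as nn
from torch.autograd import Variable  # legacy API: since PyTorch 0.4, Variable(t) simply returns a Tensor
import torch.utils.data as Data
import torchvision
import torch.nn.functional as F
import numpy as np

learning_rate = 1e-4  # learning rate
keep_prob_rate = 0.7  # dropout KEEP probability; nn.Dropout(p) expects the DROP probability, i.e. 1 - keep_prob_rate
max_epoch = 1         # number of training epochs
BATCH_SIZE = 50       # mini-batch size
```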
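The name `keep_prob_rate` suggests a TensorFlow-style *keep* probability, while PyTorch's `nn.Dropout(p)` takes the probability of *zeroing* an element. The sketch below is a small check on a hypothetical all-ones input of 10,000 elements (not MNIST data); it shows what `nn.Dropout(1 - keep_prob_rate)` does in training mode and in evaluation mode:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
keep_prob_rate = 0.7                      # same value as keep_prob_rate in the program
drop = nn.Dropout(p=1 - keep_prob_rate)  # p = probability of ZEROING an element
x = torch.ones(10000)                     # hypothetical input, all ones

drop.train()                              # training mode: dropout is active
y_train = drop(x)
kept_fraction = (y_train != 0).float().mean().item()
print(kept_fraction)                      # roughly 0.7
print(y_train.max().item())               # survivors scaled by 1 / keep_prob_rate, about 1.4286

drop.eval()                               # evaluation mode: dropout does nothing
y_eval = drop(x)
print(torch.equal(y_eval, x))             # True
```

In training mode PyTorch already rescales the surviving values by 1 / (1 - p), so nothing needs rescaling at test time; the only requirement is that the model be switched to `eval()` before measuring accuracy.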

```python
# Load the MNIST dataset
def data_load():
    DOWNLOAD_MNIST = False
    if not os.path.exists('./mnist/') or not os.listdir('./mnist/'):  # no mnist dir, or the dir is empty
        DOWNLOAD_MNIST = True

    # Training set: ToTensor() turns each 28x28 image into a float tensor of shape (1, 28, 28) in [0, 1]
    train_data = torchvision.datasets.MNIST(root='./mnist/', train=True,
                                            transform=torchvision.transforms.ToTensor(),
                                            download=DOWNLOAD_MNIST)
    train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

    # Test set: test_x = images, test_y = labels; only the first 500 test samples are used.
    # (.data / .targets replace the deprecated .test_data / .test_labels attributes)
    test_data = torchvision.datasets.MNIST(root='./mnist/', train=False)
    # uint8 (10000, 28, 28) -> add channel dim (10000, 1, 28, 28) -> float in [0, 1], same scaling as ToTensor()
    test_x = torch.unsqueeze(test_data.data, dim=1).float()[:500] / 255.
    test_y = test_data.targets[:500].numpy()
    return train_loader, test_x, test_y
```

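```python
# CNN model class
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        # nn.Sequential is an ordered container: layers run strictly in sequence,
        # so each layer's output must match the next layer's expected input.
        self.conv1 = nn.Sequential(
            # convolution (input channels, output channels, kernel size, stride, padding)
            nn.Conv2d(in_channels=1, out_channels=32, kernel_size=7, stride=1, padding=0),  # (N,1,28,28) -> (N,32,22,22)
            nn.ReLU(),        # activation
            nn.MaxPool2d(2),  # pooling: (N,32,22,22) -> (N,32,11,11)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=0),  # convolution: -> (N,64,7,7)
            nn.ReLU(),        # activation
            nn.MaxPool2d(1),  # 1x1 pooling is an identity: shape stays (N,64,7,7)
        )
        self.out1 = nn.Linear(7 * 7 * 64, 1024, bias=True)  # fully connected layer
        # dropout against overfitting; nn.Dropout(p) takes the DROP probability
        self.dropout = nn.Dropout(1 - keep_prob_rate)
        self.out2 = nn.Linear(1024, 10, bias=True)  # fully connected layer

    def forward(self, x):  # forward pass
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(-1, 64 * 7 * 7)  # flatten the conv2 output to (batch_size, 64 * 7 * 7)
        out1 = self.out1(x)
        out1 = F.relu(out1)
        out1 = self.dropout(out1)
        out2 = self.out2(out1)
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax internally,
        # so an extra F.softmax here would apply softmax twice.
        return out2
```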
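The input size of `self.out1`, `7 * 7 * 64`, follows from the layer settings. For `Conv2d` and `MaxPool2d` with dilation 1, each side becomes floor((size + 2·padding − kernel) / stride) + 1, so 28 → 22 → 11 → 7 → 7. The sketch below recomputes this with a small helper, `conv_out`, which is hypothetical and not a PyTorch function, and then confirms it by pushing a dummy batch through freshly initialized layers configured like `conv1` and `conv2`:

```python
import torch
import torch.nn as nn


def conv_out(size, kernel, stride=1, padding=0):
    """Hypothetical helper: output side length of Conv2d / MaxPool2d with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1


s = conv_out(28, 7)            # 7x7 convolution: 28 -> 22
s = conv_out(s, 2, stride=2)   # MaxPool2d(2), stride defaults to the kernel size: 22 -> 11
s = conv_out(s, 5)             # 5x5 convolution: 11 -> 7
s = conv_out(s, 1)             # MaxPool2d(1) is an identity: 7 -> 7
print(s)                       # 7

# Layers configured like conv1 and conv2 in the program
conv1 = nn.Sequential(nn.Conv2d(1, 32, kernel_size=7), nn.ReLU(), nn.MaxPool2d(2))
conv2 = nn.Sequential(nn.Conv2d(32, 64, kernel_size=5), nn.ReLU(), nn.MaxPool2d(1))

feat = conv2(conv1(torch.zeros(2, 1, 28, 28)))  # dummy batch of two 28x28 images
flat = feat.view(-1, 64 * 7 * 7)
print(feat.shape)              # torch.Size([2, 64, 7, 7])
print(flat.shape)              # torch.Size([2, 3136])
```

If you change a kernel size or add padding, rerunning this kind of check tells you the new value for the first argument of `nn.Linear` before the full model crashes on a shape mismatch.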

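```python
# Accuracy on the first 500 test samples
def test(cnn):
    cnn.eval()                 # evaluation mode: turns dropout off
    with torch.no_grad():      # no gradients are needed for evaluation
        y_pre = cnn(test_x)    # predictions: one score per class for each image
    cnn.train()                # back to training mode
    _, pre_index = torch.max(y_pre, 1)  # index of the largest score in each row (an integer)
    pre_index = pre_index.view(-1)      # that index is the position of the most likely digit, i.e. the predicted digit
    prediction = pre_index.numpy()      # torch tensor -> numpy array
    correct = np.sum(prediction == test_y)  # number of predictions that equal the label
    return correct / len(test_y)            # correct predictions / test-set size (500) = accuracy
```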
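`test()` turns per-class scores into predicted digits with `torch.max(..., 1)` and then counts matches with NumPy. The toy example below, with four hypothetical samples and three classes, walks through the same steps; it also shows that taking the argmax before or after `F.softmax` gives the same predictions, because softmax preserves the order of the scores within each row:

```python
import numpy as np
import torch
import torch.nn.functional as F

logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.3, 3.0],
                       [1.0, 4.0, 0.0],
                       [0.2, 0.1, 0.0]])   # hypothetical scores: 4 samples, 3 classes
labels = np.array([0, 2, 1, 1])            # hypothetical ground-truth labels

_, pred = torch.max(logits, 1)                          # index of the largest score = predicted class
_, pred_soft = torch.max(F.softmax(logits, dim=1), 1)   # same argmax after softmax
print(torch.equal(pred, pred_soft))                     # True

prediction = pred.numpy()                               # [0 2 1 0]
accuracy = np.sum(prediction == labels) / len(labels)   # 3 correct out of 4
print(accuracy)                                         # 0.75
```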

```python
# Training function
def train(cnn):
    optimizer = torch.optim.Adam(cnn.parameters(), lr=learning_rate)  # Adam optimizer
    loss_func = nn.CrossEntropyLoss()  # cross-entropy loss (expects raw logits)
    for epoch in range(max_epoch):
        for step, (x, y) in enumerate(train_loader):  # x: input images; y: labels
            output = cnn(x)              # output: predictions
            loss = loss_func(output, y)  # compute the cross-entropy loss
            optimizer.zero_grad()        # clear gradients from the previous step
            loss.backward()              # backpropagation
            optimizer.step()             # update the parameters
            if step != 0 and step % 100 == 0:
                print("=" * 10, step, "=" * 5, "=" * 5, "test accuracy is ", test(cnn), "=" * 10)
    print("train finished !")
```
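`nn.CrossEntropyLoss` combines log-softmax and negative log-likelihood in one call, so it expects the raw outputs (logits) of the last linear layer. The sketch below, using random hypothetical logits and labels, checks that equivalence and shows what happens when the outputs have already been passed through `F.softmax`, as in a `forward()` that ends with `output = F.softmax(out2)`:

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # hypothetical raw scores: 4 samples, 10 classes
target = torch.tensor([3, 7, 0, 9])   # hypothetical labels

loss_func = nn.CrossEntropyLoss()
ce = loss_func(logits, target)
manual = F.nll_loss(F.log_softmax(logits, dim=1), target)  # log-softmax followed by NLL
print(torch.allclose(ce, manual))     # True

# Softmax applied twice: probabilities are fed to the loss as if they were logits
double = loss_func(F.softmax(logits, dim=1), target)

# Even a perfect one-hot "probability" vector cannot push this loss to 0
one_hot = F.one_hot(target, num_classes=10).float()
floor = loss_func(one_hot, target)
print(floor.item(), math.log(9 + math.e) - 1)  # both about 1.461
```

Because the double-softmax loss can never approach 0 (for 10 classes it stays at or above ln(9 + e) − 1), it is preferable for `forward()` to return raw logits and leave the softmax to `nn.CrossEntropyLoss`; for prediction, `torch.max` on the logits picks the same class as on the softmax output.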

```python
# Main program
if __name__ == '__main__':
    train_loader, test_x, test_y = data_load()  # load the data
    cnn = CNN()                                 # instantiate the model
    train(cnn)                                  # train the model
```
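Before downloading MNIST, it can be handy to check that a training loop of this shape runs at all. The sketch below is a hypothetical smoke test, not part of the original program: it feeds 200 random 28×28 images with random labels through a `DataLoader` with the same batch size of 50 and applies the same `zero_grad()`, `backward()`, `step()` sequence as `train()`. To keep the block self-contained it uses a deliberately tiny linear model instead of the `CNN` class:

```python
import torch
import torch.nn as nn
import torch.utils.data as Data

torch.manual_seed(0)
# Hypothetical stand-in for MNIST: random images in [0, 1] and random labels 0-9
fake_x = torch.rand(200, 1, 28, 28)
fake_y = torch.randint(0, 10, (200,))
loader = Data.DataLoader(Data.TensorDataset(fake_x, fake_y), batch_size=50, shuffle=True)

# Deliberately tiny model (NOT the CNN from this post), only used to exercise the loop
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)
loss_func = nn.CrossEntropyLoss()

losses = []
for step, (x, y) in enumerate(loader):
    output = model(x)              # raw logits, shape (50, 10)
    loss = loss_func(output, y)
    optimizer.zero_grad()          # clear gradients from the previous step
    loss.backward()                # backpropagation
    optimizer.step()               # parameter update
    losses.append(loss.item())

print(len(losses))                 # 4 steps: 200 samples / batch size 50
```

Swapping `model` for an instance of the post's `CNN` class (with its definition copied in) turns this into a quick shape-and-loop check for the real network.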