Neural Networks and Deep Learning (Qiu Xipeng), Programming Exercise 4: FNN Forward Propagation with numpy

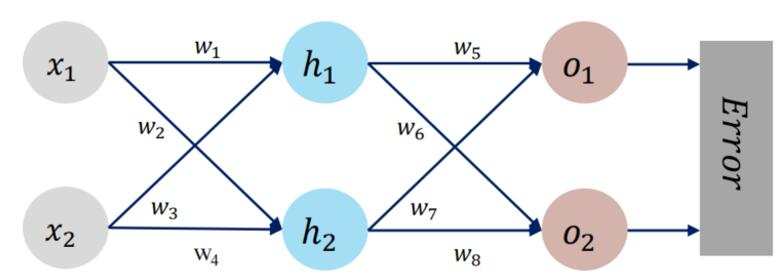

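NN model:

ref: 【人工智能导论:模型与算法】MOOC 8.3 误差后向传播(BP) 例题 【第三版】 (Introduction to Artificial Intelligence: Models and Algorithms, MOOC 8.3, Error Backpropagation (BP) worked example, 3rd edition) - HBU_DAVID - 博客园 (cnblogs.com)

Objective:

Understand the forward-propagation process and get familiar with numpy programming.

Initial values: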

w1, w2, w3, w4, w5, w6, w7, w8 = 0.2, -0.4, 0.5, 0.6, 0.1, -0.5, -0.3, 0.8

x1, x2 = 0.5, 0.3

y1, y2 = 0.23, -0.07
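As a hand check on these values, the forward pass can be worked out on paper. The sketch below assumes the wiring used in source code 1 (w1 and w3 feed h1, w2 and w4 feed h2, w5 and w7 feed o1, w6 and w8 feed o2), with the sigmoid $\sigma(z) = 1/(1+e^{-z})$ as the activation; intermediate values are rounded to four decimals.

$$
\begin{aligned}
\mathrm{in}_{h1} &= w_1 x_1 + w_3 x_2 = 0.2 \times 0.5 + 0.5 \times 0.3 = 0.25, & \mathrm{out}_{h1} &= \sigma(0.25) \approx 0.5622 \\
\mathrm{in}_{h2} &= w_2 x_1 + w_4 x_2 = -0.4 \times 0.5 + 0.6 \times 0.3 = -0.02, & \mathrm{out}_{h2} &= \sigma(-0.02) \approx 0.4950 \\
\mathrm{in}_{o1} &= w_5\,\mathrm{out}_{h1} + w_7\,\mathrm{out}_{h2} \approx 0.1 \times 0.5622 - 0.3 \times 0.4950 \approx -0.0923, & \mathrm{out}_{o1} &= \sigma(-0.0923) \approx 0.4769 \\
\mathrm{in}_{o2} &= w_6\,\mathrm{out}_{h1} + w_8\,\mathrm{out}_{h2} \approx -0.5 \times 0.5622 + 0.8 \times 0.4950 \approx 0.1149, & \mathrm{out}_{o2} &= \sigma(0.1149) \approx 0.5287
\end{aligned}
$$

Rounded to two decimals, the network outputs are about 0.48 and 0.53. The targets y1 and y2 play no part in the forward pass.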

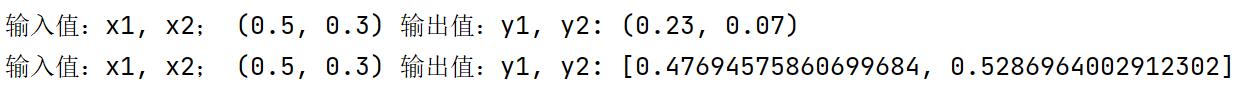

Output:

Source code 1: (easier to read; complete code 1 first)

    import numpy as np


    def sigmoid(z):
        """Logistic sigmoid activation."""
        a = 1 / (1 + np.exp(-z))
        return a


    def forward_propagate(x1, x2, w1, w2, w3, w4, w5, w6, w7, w8):  # forward propagation
        # Hidden layer: weighted sum, then sigmoid
        in_h1 = w1 * x1 + w3 * x2
        print(in_h1)
        out_h1 = sigmoid(in_h1)
        in_h2 = w2 * x1 + w4 * x2
        print(in_h2)
        out_h2 = sigmoid(in_h2)

        # Output layer: weighted sum of the hidden outputs, then sigmoid
        in_o1 = w5 * out_h1 + w7 * out_h2
        out_o1 = sigmoid(in_o1)
        in_o2 = w6 * out_h1 + w8 * out_h2
        out_o2 = sigmoid(in_o2)

        return out_o1, out_o2


    if __name__ == "__main__":
        # Random values would also work; fixed values are used to match the PPT.
        w1, w2, w3, w4, w5, w6, w7, w8 = 0.2, -0.4, 0.5, 0.6, 0.1, -0.5, -0.3, 0.8
        x1, x2 = 0.5, 0.3  # input values
        y1, y2 = 0.23, -0.07  # target values (not used by the forward pass)

        print("Inputs x1, x2:", x1, x2, "Targets y1, y2:", y1, y2)
        out_o1, out_o2 = forward_propagate(x1, x2, w1, w2, w3, w4, w5, w6, w7, w8)
        print("Inputs x1, x2:", x1, x2, "Outputs out_o1, out_o2:", round(out_o1, 2), round(out_o2, 2))

Source code 2: (a simple improvement on code 1)
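Source code 2 groups the scalar weights by the neuron they feed and replaces each weighted sum with `np.dot`. The following minimal sketch checks that this grouping reproduces the scalar sums from source code 1 for the hidden layer. The names `w_h1` and `w_h2` are hypothetical and used only for illustration; source code 2 calls these pairs `w1` and `w2`.

```python
import numpy as np

# Scalar weights and inputs, copied from the initial values.
w1, w2, w3, w4 = 0.2, -0.4, 0.5, 0.6
x1, x2 = 0.5, 0.3

# Hypothetical names: one weight pair per hidden neuron.
x = (x1, x2)
w_h1 = (w1, w3)  # weights feeding h1
w_h2 = (w2, w4)  # weights feeding h2

# Scalar form, as in source code 1.
in_h1_scalar = w1 * x1 + w3 * x2
in_h2_scalar = w2 * x1 + w4 * x2

# Dot-product form, as in source code 2.
in_h1_dot = np.dot(w_h1, x)
in_h2_dot = np.dot(w_h2, x)

print(np.isclose(in_h1_scalar, in_h1_dot), np.isclose(in_h2_scalar, in_h2_dot))
```

Both comparisons should hold, since `np.dot` on two length-2 sequences is exactly the sum of the element-wise products.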

    import numpy as np


    def sigmoid(z):
        """Logistic sigmoid activation."""
        a = 1 / (1 + np.exp(-z))
        return a


    def forward_propagate(x, w1, w2, w3, w4):  # forward propagation
        # Here w1..w4 are weight pairs, one pair per neuron (h1, h2, o1, o2).
        in_h = [0.0, 0.0]
        out_h = [0.0, 0.0]
        in_o = [0.0, 0.0]
        out_o = [0.0, 0.0]

        # in_h1 = w1 * x1 + w3 * x2
        in_h[0] = np.dot(w1, x)
        out_h[0] = sigmoid(in_h[0])
        # in_h2 = w2 * x1 + w4 * x2
        in_h[1] = np.dot(w2, x)
        out_h[1] = sigmoid(in_h[1])

        # in_o1 = w5 * out_h1 + w7 * out_h2
        in_o[0] = np.dot(w3, out_h)
        out_o[0] = sigmoid(in_o[0])
        # in_o2 = w6 * out_h1 + w8 * out_h2
        in_o[1] = np.dot(w4, out_h)
        out_o[1] = sigmoid(in_o[1])

        return out_o


    if __name__ == "__main__":
        w1 = 0.2, 0.5    # weights into h1
        w2 = -0.4, 0.6   # weights into h2
        w3 = 0.1, -0.3   # weights into o1
        w4 = -0.5, 0.8   # weights into o2
        x = 0.5, 0.3     # input values
        y = 0.23, -0.07  # target values (not used by the forward pass)

        print("Inputs x1, x2:", x, "Targets y1, y2:", y)
        out_o = forward_propagate(x, w1, w2, w3, w4)
        print("Inputs x1, x2:", x, "Outputs out_o1, out_o2:", out_o)
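A possible next step, not part of the original exercise, is to stack the per-neuron weight pairs into one matrix per layer so that each layer becomes a single matrix-vector product. The sketch below assumes the same weights and inputs as above; `forward_propagate_matrix`, `W_hidden`, and `W_output` are hypothetical names introduced for illustration.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid, applied element-wise to arrays."""
    return 1.0 / (1.0 + np.exp(-z))


def forward_propagate_matrix(x, W_hidden, W_output):
    """Forward pass of a 2-2-2 network without bias terms.

    Each row of a weight matrix holds the weights feeding one neuron.
    """
    out_h = sigmoid(W_hidden @ x)      # hidden-layer activations
    out_o = sigmoid(W_output @ out_h)  # output-layer activations
    return out_o


# Rows correspond to (w1, w3), (w2, w4) and (w5, w7), (w6, w8).
W_hidden = np.array([[0.2, 0.5],
                     [-0.4, 0.6]])
W_output = np.array([[0.1, -0.3],
                     [-0.5, 0.8]])
x = np.array([0.5, 0.3])

out_o = forward_propagate_matrix(x, W_hidden, W_output)
print(np.round(out_o, 2))
```

With these values the rounded outputs should match source code 1 (about 0.48 and 0.53). Adding more neurons to a layer then only means adding rows to the corresponding matrix, with no change to the function body.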