Performance Comparison of Kubernetes Network Solutions

Network Solution Performance Verification

1 Test Notes

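Network performance depends on many variables, such as NIC MTU and packet size, TCP window size and the number of threads. Since this is a side-by-side comparison of network solutions under identical test scenarios, these settings are kept at one uniform configuration for now.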

1.1 Virtual Machine Configuration

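Two CentOS 7 virtual machines, each with 4 vCPUs and 8 GB of memory, run on the same physical host and are connected in bridged mode. One acts as the server and the other as the client.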

1.2 Test Procedure

The traffic is generated with iperf, measuring the average TCP bandwidth over 60 s. The client and server commands are, respectively:

ip netns exec ns0 iperf -c $server_ip -i 1 -t 60

ip netns exec ns0 iperf -s

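Note: the hostnetwork scenario does not use a network namespace, so there the iperf commands run directly on the host network stack. In the other scenarios, ns0 stands for the namespace created for that scenario in Section 4 (for example flannel_vxlan, canal, calico or ovs).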

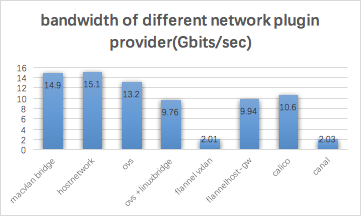

1.3 Results

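The figure above shows the container-to-container bandwidth measured for 8 different network solutions under the same test scenario. Results are in Gbit/sec.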
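To turn the iperf client output into a single Gbit/sec figure, the summary line can be parsed. The following is a minimal sketch, assuming iperf2's default adaptive output format, in which the last line containing a "bits/sec" field is the whole-run summary (with -i 1 the per-second lines come first), and assuming decimal bit units. iperf_avg_gbits is a hypothetical helper name, not part of iperf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read iperf2 client output on stdin and print the
# bandwidth of the last line that carries a "*bits/sec" field, in Gbit/sec.
# Assumes that line is the whole-run summary and that bit units are decimal
# (K = 1e3, M = 1e6, G = 1e9).
iperf_avg_gbits() {
  awk '
    {
      # Remember the value/unit pair of the last "*bits/sec" field seen.
      for (i = 2; i <= NF; i++) {
        if ($i ~ /bits\/sec$/) {
          val = $(i - 1)
          unit = $i
        }
      }
    }
    END {
      if (unit == "") exit 1          # no bandwidth line found
      scale = 1e-9                    # plain "bits/sec"
      if (unit ~ /^Kbits/) scale = 1e-6
      else if (unit ~ /^Mbits/) scale = 1e-3
      else if (unit ~ /^Gbits/) scale = 1
      printf "%.2f\n", val * scale
    }'
}
```

Usage, with the function defined in the current shell: ip netns exec ns0 iperf -c "$server_ip" -i 1 -t 60 | iperf_avg_gbits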

2 Analysis of Results

2.1 hostnetwork performs best

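The results show that cross-node container bandwidth is highest in the hostnetwork scenario, because communication uses the host network stack directly. In all other scenarios the container is isolated in its own network namespace, so besides the container network stack, packets also traverse the host NIC or the host network stack (ovs and macvlan skip the host network stack), which costs some performance.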

2.2 macvlan bridge comes close to hostnetwork

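In the macvlan scenario, packets bypass the host network stack and are sent out directly through the host NIC, so performance is higher than in the other non-hostnetwork scenarios.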

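On the other hand, because traffic bypasses the host network stack and has no control logic in its path, security policies are hard to enforce.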

2.3 ovs is second only to macvlan

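In the ovs scenario, a veth pair connects the container network namespace directly to the ovs bridge, and the ovs datapath matches packets in the kernel and sends them to the physical NIC, so traffic does not pass through the host namespace either. However, when the first packet of a flow finds no match in the kernel datapath, it has to be sent up to user space for processing, and forwarding is governed by ovs flow rules. This extra packet control makes ovs somewhat slower than the simple, direct macvlan path.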
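The first-packet upcall described above can be observed by comparing the OpenFlow table configured on the bridge with the flows that ovs-vswitchd has installed in the kernel datapath cache. A small sketch, assuming the br-int bridge from Section 4.5; ovs_show_flows is a hypothetical wrapper around the standard ovs-ofctl dump-flows and ovs-appctl dpctl/dump-flows commands.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: show the OpenFlow rules (consulted in user space)
# next to the kernel datapath flow cache (fast path).
ovs_show_flows() {
  local bridge="${1:-br-int}"
  echo "== OpenFlow table on ${bridge} =="
  ovs-ofctl dump-flows "$bridge"
  echo "== kernel datapath flows =="
  # Entries appear here only after a packet missed in the kernel and was
  # handled by ovs-vswitchd in user space (the upcall).
  ovs-appctl dpctl/dump-flows
}
```

Running it once while idle and once during an iperf run should show datapath entries appearing only once traffic flows; idle datapath flows are expired again after a short time.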

Compared with plain ovs + veth, ovs + Linux bridge reduces performance by 26%. The ovs + Linux bridge approach proposed

2.4 With extra packet processing in the container network namespace, Calico is 30% slower than hostnetwork

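Calico uses the underlay network for cross-host container communication. Compared with hostnetwork, it only adds processing in the container network stack, yet performance drops by nearly 30%. Compared with macvlan and ovs, which also use container network namespaces, it is 29% and 20% slower, respectively.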
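The percentages in this section are relative drops against a baseline, that is (baseline - measured) / baseline × 100. A minimal sketch of that arithmetic, assuming both bandwidths are given in the same unit; pct_drop is a hypothetical helper, and the numbers in the usage line are placeholders, not measured results.

```shell
#!/usr/bin/env bash
# Hypothetical helper: relative drop of $2 against baseline $1, in percent.
# Both arguments must use the same unit (e.g. Gbit/sec).
pct_drop() {
  awk -v ref="$1" -v x="$2" 'BEGIN {
    if (ref + 0 <= 0) exit 1        # baseline must be positive
    printf "%.1f\n", (ref - x) / ref * 100
  }'
}

# Placeholder values only: a baseline of 10 and a measurement of 7.
pct_drop 10 7   # prints 30.0
```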

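flannel host-gw differs from calico in that flannel host-gw uses a Linux bridge, while calico uses veth, to connect the host and container network stacks; the performance gap between them is close to 1%.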

2.5 flannel vxlan and canal have the worst network performance

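Because flannel vxlan and canal use VXLAN tunnels, cross-host traffic must be encapsulated and decapsulated, and the performance loss is substantial: bandwidth is 86% lower than with hostnetwork. The difference between flannel and canal is that flannel uses a Linux bridge to connect the container network namespace to the host and to other containers on the same node, whereas canal uses veth; again, plain veth performs slightly better than a Linux bridge, by about 1%.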
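Part of the VXLAN cost is per-packet header overhead. Over an IPv4 underlay, each encapsulated packet carries an outer IPv4 header (20 bytes), a UDP header (8), a VXLAN header (8) and the inner Ethernet header (14), i.e. 50 bytes, which is why flannel's VXLAN device typically gets an MTU of 1450 on a 1500-byte network. A small sketch of that arithmetic; vxlan_inner_mtu is a hypothetical helper that assumes no IP options and an untagged inner frame.

```shell
#!/usr/bin/env bash
# Hypothetical helper: largest inner IP packet that fits in one VXLAN packet
# over an IPv4 underlay with the given MTU.
# Overhead: outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14) = 50.
vxlan_inner_mtu() {
  local underlay_mtu="$1"
  local overhead=$((20 + 8 + 8 + 14))
  echo $((underlay_mtu - overhead))
}

vxlan_inner_mtu 1500   # prints 1450
```

The header bytes alone are only a few percent of a full-size packet, so most of the measured gap presumably comes from the extra encapsulation and decapsulation processing rather than from the headers themselves.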

3 Summary

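The sections above compared the cross-node container communication performance of different container network solutions under the same test scenario. hostnetwork performs best.

Among the container solutions, macvlan has very low network overhead, but because it bypasses the host network stack, network access control cannot be implemented through that stack. It therefore suits scenarios that prioritize network performance and have modest security requirements.

ovs, thanks to its efficiency, ease of use and powerful SDN capabilities, holds a considerable share of OpenStack-based IaaS platforms and of PaaS platforms led by OpenShift, Contiv and others. It also offers stronger packet control than macvlan, so it suits more complex business scenarios.

flannel and canal use VXLAN tunnels to encapsulate cross-host container traffic and lose a lot of performance, but because VXLAN isolates the container network from the underlying physical network, this approach makes it easy to integrate a PaaS platform with existing business platforms.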

4 Commands Used

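For the flannel host-gw scenario, the host routing rules of the flannel vxlan scenario are changed so that traffic goes out through the physical NIC.

The hostnetwork scenario needs no setup: iperf runs directly on the host network stack.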

4.1 macvlan

4.1.1 hosta

ip netns add ns0

ip netns add ns1

ip link add link eth0 macvlan0 type macvlan mode bridge

ip link set macvlan0 netns ns0

ip netns exec ns0 ip link set macvlan0 up

ip netns exec ns0 ip link set lo up

ip netns exec ns0 ip addr add 192.168.2.3/24 dev macvlan0

ip link set eth0 promisc on

4.1.2 hostb

ip netns add ns0

ip netns add ns1

ip link add link eth0 macvlan0 type macvlan mode bridge

ip link set macvlan0 netns ns0

ip netns exec ns0 ip link set macvlan0 up

ip netns exec ns0 ip link set lo up

ip netns exec ns0 ip addr add 192.168.2.5/24 dev macvlan0

ip link set eth0 promisc on
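The two macvlan blocks above differ only in the container address. A parameterized sketch that prints the same commands for one host, so they can be reviewed and then piped to a root shell; macvlan_cmds and its argument order are hypothetical, and the unused ns1 namespace is left out.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print the macvlan setup from Section 4.1 for one host.
# $1 = parent NIC (e.g. eth0), $2 = namespace, $3 = address with prefix length.
macvlan_cmds() {
  local parent="$1" ns="$2" addr="$3"
  cat <<EOF
ip netns add ${ns}
ip link add link ${parent} macvlan0 type macvlan mode bridge
ip link set macvlan0 netns ${ns}
ip netns exec ${ns} ip link set macvlan0 up
ip netns exec ${ns} ip link set lo up
ip netns exec ${ns} ip addr add ${addr} dev macvlan0
ip link set ${parent} promisc on
EOF
}

# hosta: macvlan_cmds eth0 ns0 192.168.2.3/24 | sudo bash
# hostb: macvlan_cmds eth0 ns0 192.168.2.5/24 | sudo bash
```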

4.2 flannel vxlan

4.2.1 hosta

ip link add dev flannel_vxlan_c type veth peer name flannel_vxlan_h

brctl addif docker0 flannel_vxlan_h

ip link set flannel_vxlan_h up

ip addr add 10.5.58.1/24 dev docker0

ip netns add flannel_vxlan

ip netns exec flannel_vxlan ip link set lo up

ip link set flannel_vxlan_c netns flannel_vxlan

ip netns exec flannel_vxlan ip link set flannel_vxlan_c up

ip netns exec flannel_vxlan ip addr add 10.5.58.3/24 dev flannel_vxlan_c

ip netns exec flannel_vxlan ip route add default via 10.5.58.1

ip nei add 10.5.47.0 dev flannel.1 lladdr 8e:09:f3:4f:94:34

bridge fdb add 8e:09:f3:4f:94:34 dst 192.168.20.43 dev flannel.1

ip route add 10.5.47.0/24 via 10.5.47.0 dev flannel.1 onlink

4.2.2 hostb

ip link add dev flannel_vxlan_c type veth peer name flannel_vxlan_h

brctl addif docker0 flannel_vxlan_h

ip link set flannel_vxlan_h up

ip addr add 10.5.47.1/24 dev docker0

ip netns add flannel_vxlan

ip netns exec flannel_vxlan ip link set lo up

ip link set flannel_vxlan_c netns flannel_vxlan

ip netns exec flannel_vxlan ip link set flannel_vxlan_c up

ip netns exec flannel_vxlan ip addr add 10.5.47.3/24 dev flannel_vxlan_c

ip netns exec flannel_vxlan ip route add default via 10.5.47.1

ip nei add 10.5.58.0 dev flannel.1 lladdr 2a:33:36:9b:2f:28

bridge fdb add 2a:33:36:9b:2f:28 dst 192.168.20.42 dev flannel.1

ip route add 10.5.58.0/24 via 10.5.58.0 dev flannel.1 onlink
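Each host in the flannel vxlan (and canal) setup needs three entries pointing at the other host: a neighbor entry mapping the peer subnet's .0 address to the peer's flannel.1 MAC, an FDB entry mapping that MAC to the peer's underlay IP, and an on-link route for the peer subnet. A sketch that derives all three from the peer's parameters, which helps avoid mixing up the two hosts' MACs; vxlan_peer_cmds is a hypothetical helper, the peer subnet is assumed to be a /24 whose .0 address is the peer's VTEP address (as in the commands above), and flannel.1 is assumed to already exist (created by flanneld).

```shell
#!/usr/bin/env bash
# Hypothetical generator: print the static VXLAN peer entries for one peer.
# $1 = peer subnet network address (e.g. 10.5.47.0), assumed to be a /24
# $2 = peer flannel.1 MAC, $3 = peer underlay (host) IP
vxlan_peer_cmds() {
  local peer_net="$1" peer_mac="$2" peer_host="$3"
  cat <<EOF
ip nei add ${peer_net} dev flannel.1 lladdr ${peer_mac}
bridge fdb add ${peer_mac} dst ${peer_host} dev flannel.1
ip route add ${peer_net}/24 via ${peer_net} dev flannel.1 onlink
EOF
}

# hosta (peer is hostb): vxlan_peer_cmds 10.5.47.0 8e:09:f3:4f:94:34 192.168.20.43
# hostb (peer is hosta): vxlan_peer_cmds 10.5.58.0 2a:33:36:9b:2f:28 192.168.20.42
```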

4.3 canal

4.3.1 hosta

ip netns add canal

ip link add dev canal-c type veth peer name canal-h

ip link set canal-c netns canal

ip netns exec canal ip link set lo up

ip netns exec canal ip link set canal-c up

ip netns exec canal ip addr add 10.5.58.103/24 dev canal-c

ip netns exec canal ip route add 169.254.1.1 dev canal-c

ip netns exec canal ip route add default via 169.254.1.1

ip link set canal-h up

ip route add 10.5.58.103 dev canal-h

sysctl -w net.ipv4.conf.canal-h.proxy_arp=1

ip nei add 10.5.47.0 dev flannel.1 lladdr 8e:09:f3:4f:94:34

bridge fdb add 8e:09:f3:4f:94:34 dst 192.168.20.43 dev flannel.1

ip route add 10.5.47.0/24 via 10.5.47.0 dev flannel.1 onlink

4.3.2 hostb

ip netns add canal

ip link add dev canal-c type veth peer name canal-h

ip link set canal-c netns canal

ip netns exec canal ip link set lo up

ip netns exec canal ip link set canal-c up

ip netns exec canal ip addr add 10.5.47.103/24 dev canal-c

ip netns exec canal ip route add 169.254.1.1 dev canal-c

ip netns exec canal ip route add default via 169.254.1.1

ip link set canal-h up

ip route add 10.5.47.103 dev canal-h

sysctl -w net.ipv4.conf.canal-h.proxy_arp=1

ip nei add 10.5.58.0 dev flannel.1 lladdr 2a:33:36:9b:2f:28

bridge fdb add 2a:33:36:9b:2f:28 dst 192.168.20.42 dev flannel.1

ip route add 10.5.58.0/24 via 10.5.58.0 dev flannel.1 onlink

4.4 calico

4.4.1 hosta

ip netns add calico

ip link add dev calico-c type veth peer name calico-h

ip link set calico-c netns calico

ip netns exec calico ip link set lo up

ip netns exec calico ip link set calico-c up

ip netns exec calico ip addr add 172.28.0.3/24 dev calico-c

ip netns exec calico ip route add 169.254.1.1 dev calico-c

ip netns exec calico ip route add default via 169.254.1.1

ip link set calico-h up

ip route add 172.28.0.3 dev calico-h

sysctl -w net.ipv4.conf.calico-h.proxy_arp=1

ip route add 172.28.1.0/24 via 192.168.20.43

4.4.2 hostb

ip netns add calico

ip link add dev calico-c type veth peer name calico-h

ip link set calico-c netns calico

ip netns exec calico ip link set lo up

ip netns exec calico ip link set calico-c up

ip netns exec calico ip addr add 172.28.1.3/24 dev calico-c

ip netns exec calico ip route add 169.254.1.1 dev calico-c

ip netns exec calico ip route add default via 169.254.1.1

ip link set calico-h up

ip route add 172.28.1.3 dev calico-h

sysctl -w net.ipv4.conf.calico-h.proxy_arp=1

ip route add 172.28.0.0/24 via 192.168.20.42
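The calico commands mimic how Calico wires a pod: the container's default route points at 169.254.1.1 through a link-scope route, proxy ARP on the host-side veth answers for that next hop, the host has a /32 route to the container, and a plain route leads to the peer host's container subnet. A parameterized sketch printing them for one host, with link routes placed before the routes that depend on them; calico_cmds and its arguments are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print the calico-style setup of Section 4.4.
# $1 = container IP (no prefix), $2 = prefix length inside the container,
# $3 = peer container subnet (CIDR), $4 = peer host IP
calico_cmds() {
  local cip="$1" plen="$2" peer_net="$3" peer_host="$4"
  cat <<EOF
ip netns add calico
ip link add dev calico-c type veth peer name calico-h
ip link set calico-c netns calico
ip netns exec calico ip link set lo up
ip netns exec calico ip link set calico-c up
ip netns exec calico ip addr add ${cip}/${plen} dev calico-c
ip netns exec calico ip route add 169.254.1.1 dev calico-c
ip netns exec calico ip route add default via 169.254.1.1
ip link set calico-h up
ip route add ${cip} dev calico-h
sysctl -w net.ipv4.conf.calico-h.proxy_arp=1
ip route add ${peer_net} via ${peer_host}
EOF
}

# hosta: calico_cmds 172.28.0.3 24 172.28.1.0/24 192.168.20.43 | sudo bash
# hostb: calico_cmds 172.28.1.3 24 172.28.0.0/24 192.168.20.42 | sudo bash
```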

4.5 OVS

4.5.1 hosta

ip netns add ovs

ovs-vsctl add-br br-int -- set Bridge br-int fail_mode=secure

ip link add dev veth-c type veth peer name veth-ovs

ip link set veth-c netns ovs

ip netns exec ovs ip link set lo up

ip netns exec ovs ip link set veth-c up

ip netns exec ovs ip addr add 172.28.200.3/24 dev veth-c

ip link set veth-ovs promisc on

ip link set veth-ovs up

ovs-vsctl --may-exist add-port br-int veth-ovs -- set Interface veth-ovs ofport_request=1

ovs-vsctl --may-exist add-port br-int eth0 -- set Interface eth0 ofport_request=2

ovs-ofctl add-flow br-int "table=0, priority=100, ip, nw_dst=172.28.200.3 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=100, arp,arp_tpa=172.28.200.3 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=99, actions=output:2"

4.5.2 hostb

ip netns add ovs

ovs-vsctl add-br br-int -- set Bridge br-int fail_mode=secure

ip link add dev veth-c type veth peer name veth-ovs

ip link set veth-c netns ovs

ip netns exec ovs ip link set lo up

ip netns exec ovs ip link set veth-c up

ip netns exec ovs ip addr add 172.28.200.4/24 dev veth-c

ip link set veth-ovs promisc on

ip link set veth-ovs up

ovs-vsctl --may-exist add-port br-int veth-ovs -- set Interface veth-ovs ofport_request=1

ovs-vsctl --may-exist add-port br-int eth0 -- set Interface eth0 ofport_request=2

ovs-ofctl add-flow br-int "table=0, priority=100, ip, nw_dst=172.28.200.4 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=100, arp,arp_tpa=172.28.200.4 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=99, actions=output:2"
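The three OpenFlow rules in Sections 4.5 and 4.6 implement a very small forwarding policy: IP and ARP traffic for the local container goes to port 1 (the container side), and everything else goes to port 2 (eth0). A sketch that prints them for a given container IP; ovs_flow_cmds is hypothetical, and the port numbers assume the ofport_request values used above.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print the br-int flows for one container address.
# Port 1 = container-side port, port 2 = eth0 (per ofport_request above).
ovs_flow_cmds() {
  local cip="$1" bridge="${2:-br-int}"
  # Traffic addressed to the local container (IP and ARP) -> port 1.
  echo "ovs-ofctl add-flow ${bridge} \"table=0, priority=100, ip, nw_dst=${cip} actions=output:1\""
  echo "ovs-ofctl add-flow ${bridge} \"table=0, priority=100, arp,arp_tpa=${cip} actions=output:1\""
  # Everything else, at lower priority -> port 2, the physical NIC.
  echo "ovs-ofctl add-flow ${bridge} \"table=0, priority=99, actions=output:2\""
}

# hostb: ovs_flow_cmds 172.28.200.4 | sudo bash
```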

4.6 ovs with linux bridge

4.6.1 hosta

ip netns add ovs

brctl addbr linux-br

ip link set linux-br up

ovs-vsctl add-br br-int -- set Bridge br-int fail_mode=secure

ip link add dev ovs-br type veth peer name br-ovs

ip link set br-ovs promisc on

ip link set ovs-br promisc on

ip link set br-ovs up

ip link set ovs-br up

ovs-vsctl --may-exist add-port br-int br-ovs -- set Interface br-ovs ofport_request=1

brctl addif linux-br ovs-br

ip link add dev veth-c type veth peer name veth-br

brctl addif linux-br veth-br

ip link set veth-br up

ip link set veth-c netns ovs

ip netns exec ovs ip link set lo up

ip netns exec ovs ip link set veth-c up

ip netns exec ovs ip addr add 172.28.100.3/24 dev veth-c

ovs-vsctl --may-exist add-port br-int eth0 -- set Interface eth0 ofport_request=2

ovs-ofctl add-flow br-int "table=0, priority=100, ip, nw_dst=172.28.100.3 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=100,arp,arp_tpa=172.28.100.3 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=99, actions=output:2"

4.6.2 hostb

ip netns add ovs

brctl addbr linux-br

ip link set linux-br up

ovs-vsctl add-br br-int -- set Bridge br-int fail_mode=secure

ip link add dev ovs-br type veth peer name br-ovs

ip link set br-ovs promisc on

ip link set ovs-br promisc on

ip link set br-ovs up

ip link set ovs-br up

ovs-vsctl --may-exist add-port br-int br-ovs -- set Interface br-ovs ofport_request=1

brctl addif linux-br ovs-br

ip link add dev veth-c type veth peer name veth-br

brctl addif linux-br veth-br

ip link set veth-br up

ip link set veth-c netns ovs

ip netns exec ovs ip link set lo up

ip netns exec ovs ip link set veth-c up

ip netns exec ovs ip addr add 172.28.100.4/24 dev veth-c

ovs-vsctl --may-exist add-port br-int eth0 -- set Interface eth0 ofport_request=2

ovs-ofctl add-flow br-int "table=0, priority=100, ip, nw_dst=172.28.100.4 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=100,arp,arp_tpa=172.28.100.4 actions=output:1"

ovs-ofctl add-flow br-int "table=0, priority=99, actions=output:2"
