Kubernetes Service: NodePort

Environment

| | CLIENT | HOSTA | HOSTB |
|---|---|---|---|
| Address | 192.168.55.230 | eth0: 192.168.16.73 | eth0: 192.168.16.139 |
| flannel.1 | | 10.100.40.0/32 | 10.100.81.0/32 |
| Pod IP | | | 10.100.81.180 |

Kubernetes resources

[root@c2v73 ~]# kubectl get services httpserver -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

httpserver 10.254.112.35 <nodes> 3000:30000/TCP,8080:30080/TCP 304d caicloud-app=httpserver

[root@c2v73 ~]# kubectl get endpoints httpserver -o wide

NAME ENDPOINTS AGE

httpserver 10.100.81.180:8080,10.100.81.180:3000 304d

[root@c2v73 ~]# kubectl get pod httpserver-v2.0.0-rc.3-patch1-zvcbp -o wide

NAME READY STATUS RESTARTS AGE IP NODE

httpserver-v2.0.0-rc.3-patch1-zvcbp 1/1 Running 32 2d 10.100.81.180 kube-node-24

Request packet: 192.168.55.230:randomport -> 192.168.16.73:30000

- HOSTA (forwarding node)

[root@c2v73 ~]# iptables -vnL PREROUTING -t nat

Chain PREROUTING (policy ACCEPT 71 packets, 5113 bytes)

pkts bytes target prot opt in out source destination

79 5709 cali-PREROUTING all -- * * 0.0.0.0/0 0.0.0.0/0 /* cali:6gwbT8clXdHdC1b1 */

79 5709 KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */

44 4053 DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

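The KUBE-SERVICES chain first tries the rules that match a service VIP (cluster IP) as the destination IP. The last rule in the chain hands everything addressed to a local address to KUBE-NODEPORTS.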

Chain KUBE-SERVICES (2 references)

pkts bytes target prot opt in out source destination

0 0 KUBE-SVC-7LP2XUEI73XCWKBC tcp -- * * 0.0.0.0/0 10.254.87.134 /* default/hbase-master:http cluster IP */ tcp dpt:16010

0 0 KUBE-SVC-DJQF6FU6GLEBPD2P tcp -- * * 0.0.0.0/0 10.254.233.102 /* default/cauth-redis-slave: cluster IP */ tcp dpt:6379

……

375 30172 KUBE-NODEPORTS all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service nodeports; NOTE: this must be the last rule in this chain */ ADDRTYPE match dst-type LOCAL

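Rules in the KUBE-NODEPORTS chain match on the destination port. A matching packet is first tagged by KUBE-MARK-MASQ and then jumps to the chain of the NodePort service.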

[root@c2v73 ~]# iptables -vnL KUBE-NODEPORTS -t nat |grep 30000

7 448 KUBE-MARK-MASQ tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/httpserver:http */ tcp dpt:30000

7 448 KUBE-SVC-J5IAPMHBS2NR43EJ tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/httpserver:http */ tcp dpt:30000

iptables spreads traffic evenly across the service's endpoints. This example has a single endpoint, so every packet matches that endpoint's rule.

Chain KUBE-SVC-J5IAPMHBS2NR43EJ (2 references)

pkts bytes target prot opt in out source destination

7 448 KUBE-SEP-XZ7F6CLIJGJWI66P all -- * * 0.0.0.0/0 0.0.0.0/0 /* default/httpserver:http */
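With more than one endpoint, kube-proxy in iptables mode is generally described as giving each KUBE-SEP jump a `statistic` match in random mode, with a probability chosen so that the overall split is even. The following is a minimal sketch of that arithmetic only; the function names are hypothetical and nothing here reads real iptables state.

```python
from fractions import Fraction

def rule_probabilities(n):
    """Per-rule match probability for n endpoint rules evaluated in order.

    Rule i is only reached if rules 0..i-1 did not match, so it must
    match with probability 1/(n - i) for the overall split to be even.
    """
    return [Fraction(1, n - i) for i in range(n)]

def effective_share(probs):
    """Fraction of all traffic that ends up at each rule."""
    shares = []
    remaining = Fraction(1)  # traffic that has not matched yet
    for p in probs:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares

for n in (1, 3):
    probs = rule_probabilities(n)
    print(n, [str(p) for p in probs], [str(s) for s in effective_share(probs)])
```

With a single endpoint the only rule has probability 1, which matches the unconditional jump shown in the chain above.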

The endpoint rule performs DNAT, rewriting the destination IP and port to the pod IP and container port.

[root@c2v73 ~]# iptables -vnL KUBE-SEP-XZ7F6CLIJGJWI66P -t nat

Chain KUBE-SEP-XZ7F6CLIJGJWI66P (1 references)

pkts bytes target prot opt in out source destination

0 0 KUBE-MARK-MASQ all -- * * 10.100.81.180 0.0.0.0/0 /* default/httpserver:http */

7 448 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/httpserver:http */ tcp to:10.100.81.180:3000
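The effect of the DNAT rule on the request can be sketched as a rewrite of the destination half of the packet's address tuple. This is an illustration, not kernel code: the `Packet` type and `dnat` helper are hypothetical, and port 40000 stands in for the client's random port.

```python
from typing import NamedTuple

class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int

def dnat(pkt, to_ip, to_port):
    """Rewrite only the destination; the source is left untouched."""
    return pkt._replace(dst_ip=to_ip, dst_port=to_port)

# Request as it arrives on HOSTA (40000 is an assumed client port).
request = Packet("192.168.55.230", 40000, "192.168.16.73", 30000)

# Same target as the rule above: tcp to:10.100.81.180:3000
translated = dnat(request, "10.100.81.180", 3000)
print(translated)
```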

A route lookup then selects the egress interface:

[root@c2v73 ~]# ip route |grep 10.100.81

10.100.81.0/24 via 10.100.81.0 dev flannel.1 onlink

[root@c2v73 ~]# ip -d link show flannel.1

12: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT

link/ether 1a:cc:4c:8b:d4:6c brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 192.168.16.73 dev eth0 srcport 0 0 dstport 8472 nolearning ageing 300 addrgenmode none
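The `mtu 1450` reported for flannel.1 is consistent with the usual VXLAN overhead over IPv4. The sketch below works through that arithmetic; the 1500-byte underlay MTU is an assumption, since the MTU of eth0 is not shown here.

```python
# Bytes added in front of the inner IP packet by VXLAN over IPv4.
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14

def vxlan_mtu(underlay_mtu):
    """Largest inner IP packet that fits into one underlay packet."""
    overhead = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    return underlay_mtu - overhead

print(vxlan_mtu(1500))  # 1450, assuming a 1500-byte underlay MTU
```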

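When a packet is DNATed on one node to a pod on another node, the reply has to come back through the first node so that the translation can be undone. DNATed packets are therefore tagged with packet mark 0x4000, which later triggers SNAT.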

[root@c2v73 ~]# iptables -vnL KUBE-NODEPORTS -t nat |grep 30000

7 448 KUBE-MARK-MASQ tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/httpserver:http */ tcp dpt:30000

7 448 KUBE-SVC-J5IAPMHBS2NR43EJ tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/httpserver:http */ tcp dpt:30000

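Because the route lookup selected flannel.1 as the egress interface, MASQUERADE calls inet_select_addr to pick an address from flannel.1 and uses it as the SNAT source. The request becomes 10.100.40.0:randomport -> 10.100.81.180:3000.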

[root@c2v73 ~]# iptables -vnL KUBE-POSTROUTING -t nat

Chain KUBE-POSTROUTING (1 references)

pkts bytes target prot opt in out source destination

7 798 MASQUERADE all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service traffic requiring SNAT */ mark match 0x4000/0x4000
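The `mark match 0x4000/0x4000` condition is a value/mask test on the packet mark. Below is a small sketch of that test, assuming KUBE-MARK-MASQ sets only the 0x4000 bit and leaves other bits alone; the helper names are hypothetical.

```python
MASQ_BIT = 0x4000

def mark_masq(mark):
    """Set the masquerade bit, keeping any other bits in the mark."""
    return mark | MASQ_BIT

def mark_matches(mark, value=0x4000, mask=0x4000):
    """iptables 'mark match value/mask': compare only the masked bits."""
    return (mark & mask) == value

unmarked = 0x0
marked = mark_masq(unmarked)
print(hex(marked), mark_matches(marked), mark_matches(unmarked))
```

Only packets that passed through KUBE-MARK-MASQ satisfy the test, so ordinary traffic leaving the node is not masqueraded by this rule.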

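When the packet is transmitted on the VXLAN port (flannel.1), the driver calls vxlan_xmit. It looks up the destination MAC in vxlan_fdb to find the remote IP (the outer destination IP), here 192.168.16.139, and takes the local source IP 192.168.16.73 from vxlan_dev (&vxlan->cfg.saddr). It then calls iptunnel_xmit -> ip_local_out to send the packet out of the physical NIC. Because POSTROUTING contains the rules below, the VXLAN packet is not SNATed a second time.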

iptables -vnL POSTROUTING -t nat

713K 51M RETURN all -- * * 192.168.16.0/20 192.168.16.0/20

1350 81000 MASQUERADE all -- * * 192.168.64.0/20 !224.0.0.0/4
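The RETURN rule applies when both the outer source and the outer destination fall inside 192.168.16.0/20. A quick check with Python's `ipaddress` module, using the host addresses from the environment section (the helper name is hypothetical):

```python
import ipaddress

UNDERLAY = ipaddress.ip_network("192.168.16.0/20")

def hits_return_rule(src, dst):
    """True if both addresses are inside the RETURN rule's prefix."""
    return (ipaddress.ip_address(src) in UNDERLAY
            and ipaddress.ip_address(dst) in UNDERLAY)

# Outer VXLAN packet from HOSTA to HOSTB.
print(hits_return_rule("192.168.16.73", "192.168.16.139"))
# The client address is outside the prefix.
print(hits_return_rule("192.168.55.230", "192.168.16.73"))
```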

[root@c2v73 ~]# ip nei |grep 10.100.81.0

10.100.81.0 dev flannel.1 lladdr aa:a1:54:36:e0:a9 PERMANENT

[root@c2v73 ~]# bridge fdb |grep aa:a1:54:36:e0:a9

aa:a1:54:36:e0:a9 dev flannel.1 dst 192.168.16.139 self permanent
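Taken together, the route, neighbour and FDB entries form a three-step lookup from pod IP to outer destination IP. The sketch below replays that chain with the values printed above; the dictionaries and the function are illustrative stand-ins, not a kernel or flannel API.

```python
import ipaddress

# Values copied from the ip route / ip nei / bridge fdb output on HOSTA.
ROUTES = {"10.100.81.0/24": ("10.100.81.0", "flannel.1")}  # prefix -> (gateway, dev)
NEIGHBOURS = {"10.100.81.0": "aa:a1:54:36:e0:a9"}          # gateway -> MAC
FDB = {"aa:a1:54:36:e0:a9": "192.168.16.139"}              # MAC -> remote VTEP

def outer_destination(pod_ip):
    """Resolve the underlay IP that the VXLAN packet is sent to."""
    addr = ipaddress.ip_address(pod_ip)
    for prefix, (gateway, _dev) in ROUTES.items():
        if addr in ipaddress.ip_network(prefix):
            mac = NEIGHBOURS[gateway]  # inner destination MAC
            return FDB[mac]            # outer destination IP
    raise LookupError(f"no route to {pod_ip}")

print(outer_destination("10.100.81.180"))
```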

- HOSTB (receiving node)

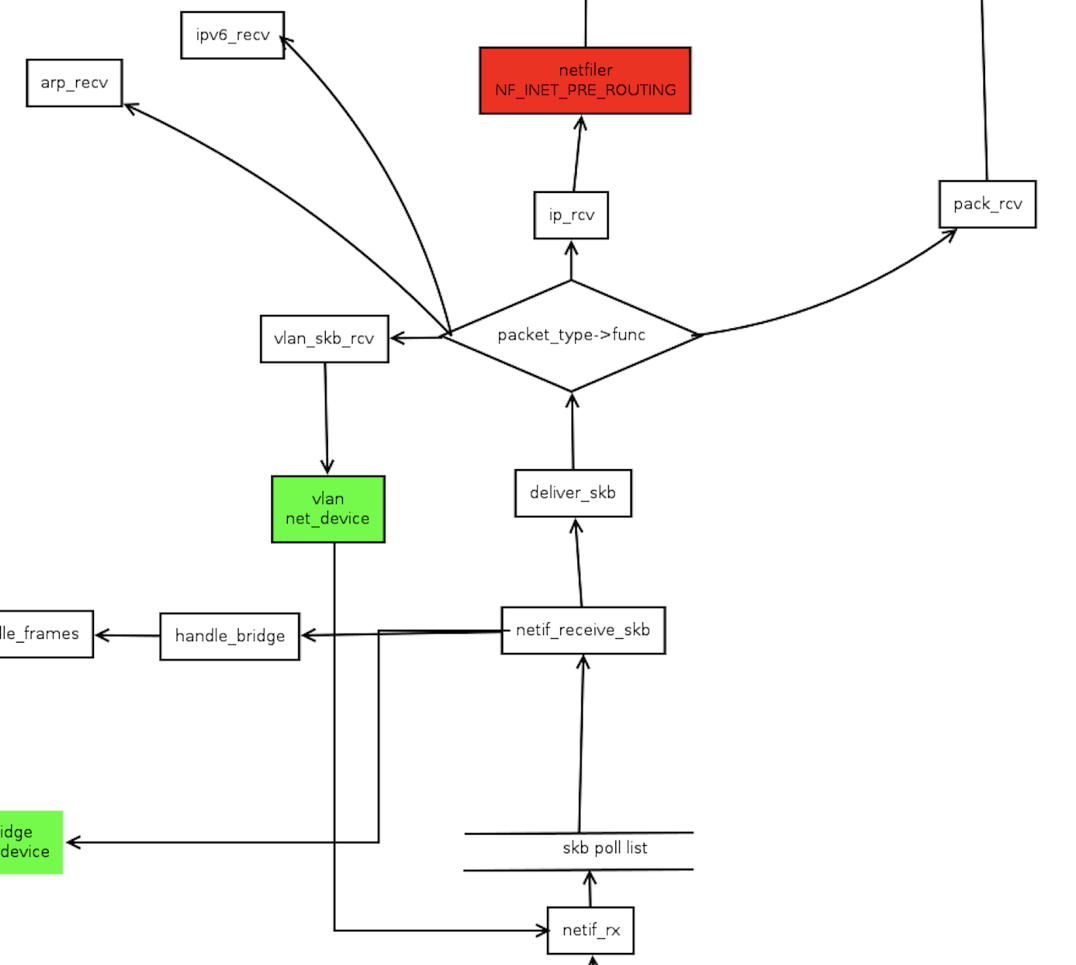

The VXLAN UDP packet is handled by vxlan_rcv (arriving on ens3) -> netif_rx (with the skb now attached to the VXLAN device).

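Inside vxlan_rcv, vxlan_vs_find_vni(vs, vxlan_vni(vxlan_hdr(skb)->vx_vni)) uses the VNI from the VXLAN header to find the matching vxlan_dev, so the VNI configured on the VXLAN interfaces of both hosts must be the same.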

[root@c4v139 ~]# ip -d link show flannel.1

8: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT

link/ether aa:a1:54:36:e0:a9 brd ff:ff:ff:ff:ff:ff promiscuity 0

vxlan id 1 local 192.168.16.139 dev ens3 srcport 0 0 dstport 8472 nolearning ageing 300 addrgenmode none
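To make the VNI lookup concrete, here is a minimal sketch of packing and parsing the 8-byte VXLAN header layout from RFC 7348 (flags byte, 24 reserved bits, 24-bit VNI, 8 reserved bits). It only illustrates where the VNI sits in the header and is not the kernel's implementation; the function names are hypothetical.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: VNI field is valid

def build_vxlan_header(vni):
    """Pack flags + reserved, then VNI + reserved, in network byte order."""
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)

def parse_vni(header):
    """Extract the 24-bit VNI from the second 32-bit word."""
    _flags_word, vni_word = struct.unpack("!II", header[:8])
    return vni_word >> 8

header = build_vxlan_header(1)  # "vxlan id 1" on both hosts
print(header.hex(), parse_vni(header))
```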

After leaving flannel.1, the packet goes through a route lookup and is forwarded to the veth interface calid9cdc8e8d37.

[root@c4v139 ~]# ip route |grep 10.100.81.180

10.100.81.180 dev calid9cdc8e8d37 scope link

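When the veth interface's driver transmit function is invoked, the call path is veth_xmit -> dev_forward_skb(peer, skb) -> enqueue_to_backlog.

dev_forward_skb forwards the packet locally to another interface, in this case the veth peer.

A softirq then picks the packet up from the receive queue.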

- Return packets

Inside the pod, the reply is 10.100.81.180:3000 -> 10.100.40.0:randomport. A route lookup selects the egress interface:

root@httpserver-v2:/app# ip route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

Calico configures 169.254.1.1 as the next hop and enables proxy ARP on the host-side veth peer, which is why the MAC address listed for 169.254.1.1 below is the MAC of the veth peer.

root@httpserver-v2:/app# ip nei

192.168.16.139 dev eth0 lladdr 8a:85:67:77:67:83 STALE

169.254.1.1 dev eth0 lladdr 8a:85:67:77:67:83 REACHABLE

[root@c4v139 ~]# ip addr show calid9cdc8e8d37

137: calid9cdc8e8d37@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP

link/ether 8a:85:67:77:67:83 brd ff:ff:ff:ff:ff:ff link-netnsid 4

inet6 fe80::8885:67ff:fe77:6783/64 scope link

valid_lft forever preferred_lft forever

[root@c4v139 ~]# sysctl -a |grep calid9cdc8e8d37 |grep proxy_arp

net.ipv4.conf.calid9cdc8e8d37.proxy_arp = 1

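calid9cdc8e8d37 answers ARP requests for 169.254.1.1 as a proxy. (If proxy_arp is enabled on an interface and the route lookup for the requested IP selects a different egress interface, the interface answers on behalf of that IP. The fg port in the fip namespace of OpenStack DVR mode is set up the same way.)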
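The proxy ARP condition described here can be sketched as a small predicate. This is a simplification of the kernel's checks, and every name in it is hypothetical; in particular, the assumption that the host would route 169.254.1.1 out of ens3 is only for illustration.

```python
def should_proxy_arp(in_dev, target_ip, proxy_arp, route_lookup):
    """Answer an ARP request on behalf of target_ip?

    in_dev:       interface the ARP request arrived on
    proxy_arp:    dict of interface name -> proxy_arp sysctl value
    route_lookup: callable returning the egress device for an IP, or None
    """
    if not proxy_arp.get(in_dev, 0):
        return False
    out_dev = route_lookup(target_ip)
    # Proxy only if the address is routable via some other interface.
    return out_dev is not None and out_dev != in_dev

proxy_arp = {"calid9cdc8e8d37": 1}

def host_route(ip):
    # Assumed host routing: the pod IP points back at its veth,
    # everything else leaves through ens3.
    return "calid9cdc8e8d37" if ip == "10.100.81.180" else "ens3"

print(should_proxy_arp("calid9cdc8e8d37", "169.254.1.1", proxy_arp, host_route))
print(should_proxy_arp("calid9cdc8e8d37", "10.100.81.180", proxy_arp, host_route))
```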

The host's route lookup sends the reply out through flannel.1:

[root@c4v139 ~]# ip route |grep 10.100.40.0

10.100.40.0/24 via 10.100.40.0 dev flannel.1 onlink

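From here the packet is VXLAN-encapsulated on the vxlan device and sent to HOSTA. The steps are the same as for the HOSTA-to-HOSTB direction described earlier, so they are not repeated.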

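Once the reply reaches HOSTA, the kernel's connection tracking reverses both translations: the destination is rewritten in PREROUTING and the source in POSTROUTING, so the packet leaves as 192.168.16.73:30000 -> 192.168.55.230:randomport.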
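The reverse translation can be pictured as a lookup against the connection's recorded tuples. The sketch below models one conntrack entry with the addresses from this walkthrough. The types and helper are hypothetical, port 40000 stands in for randomport, and it assumes MASQUERADE kept the client's source port.

```python
from typing import NamedTuple, Tuple

Endpoint = Tuple[str, int]

class Flow(NamedTuple):
    src: Endpoint
    dst: Endpoint

class ConntrackEntry(NamedTuple):
    original: Flow  # as first seen on HOSTA, before NAT
    reply: Flow     # what the reply looks like on the wire, after NAT

entry = ConntrackEntry(
    original=Flow(("192.168.55.230", 40000), ("192.168.16.73", 30000)),
    reply=Flow(("10.100.81.180", 3000), ("10.100.40.0", 40000)),
)

def reverse_nat(pkt, ct):
    """Map a reply packet back to the addresses the client expects."""
    if pkt != ct.reply:
        raise LookupError("packet does not belong to this connection")
    # The reply goes from the original destination to the original source.
    return Flow(src=ct.original.dst, dst=ct.original.src)

reply_from_pod = Flow(("10.100.81.180", 3000), ("10.100.40.0", 40000))
print(reverse_nat(reply_from_pod, entry))
```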
