Installing Hadoop with Kerberos Integration

Operating system: CentOS 7.9. Number of machines: 3

Hostname mapping

| IP address | Hostname | Services |
| --- | --- | --- |
| 192.168.0.124 | master | Kerberos server, Kerberos client |
| 192.168.0.125 | slave | Kerberos client |
| 192.168.0.126 | slave | Kerberos client |

1. Install Kerberos

Server installation

yum -y install krb5-libs krb5-workstation krb5-server

Client installation

yum -y install krb5-devel krb5-workstation

Configuring the Kerberos server mainly involves editing three configuration files:

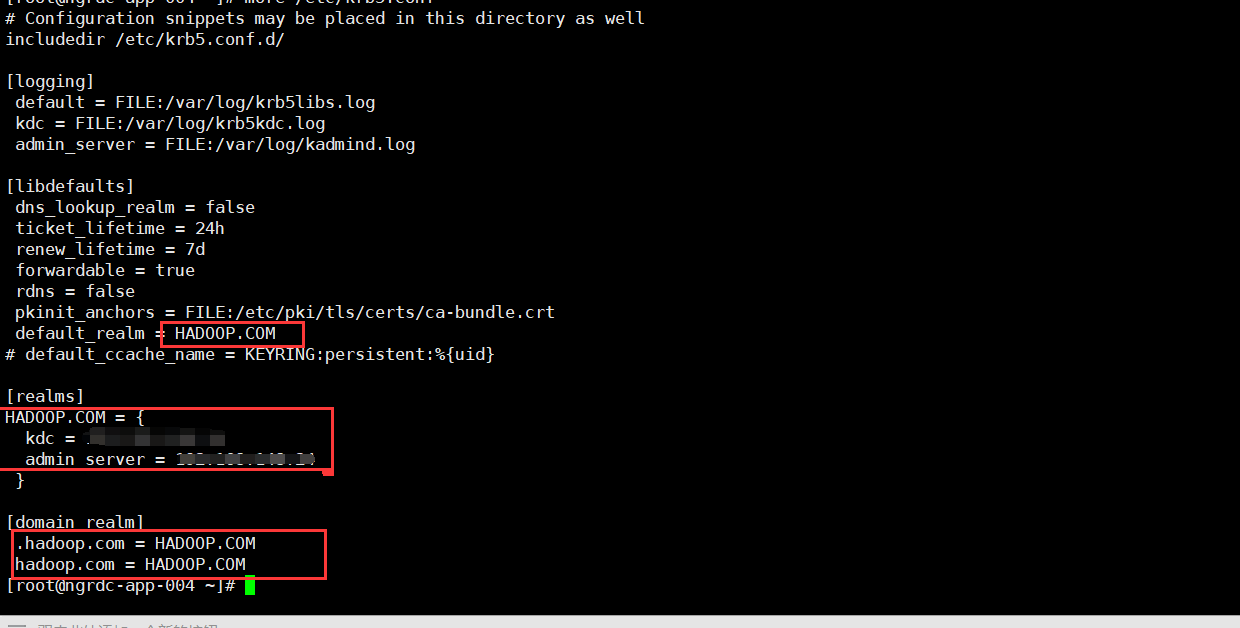

(1) /etc/krb5.conf

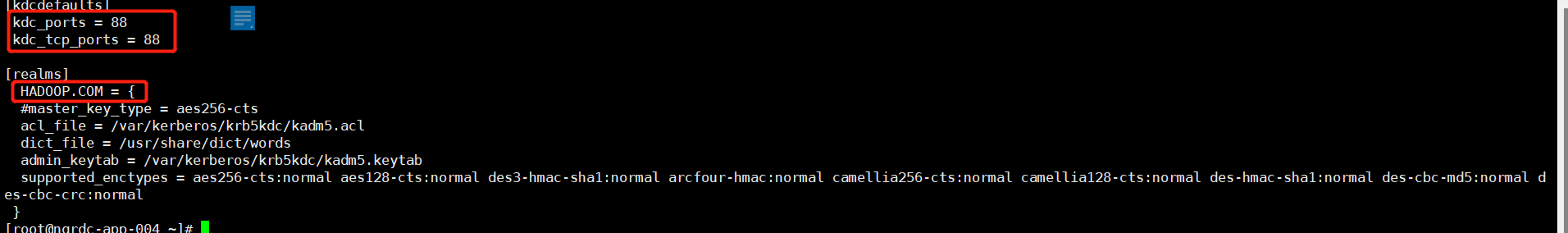

(2) /var/kerberos/krb5kdc/kdc.conf

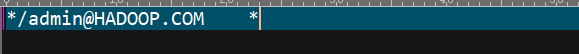

(3) /var/kerberos/krb5kdc/kadm5.acl

Edit /etc/krb5.conf

Edit /var/kerberos/krb5kdc/kdc.conf

Edit /var/kerberos/krb5kdc/kadm5.acl
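The three files appear above only as images, so here is a rough sketch of what a minimal set for the HADOOP.COM realm might look like. Everything in it is an assumption rather than the original configuration: the KDC and admin server are taken to run on the host `master`, the lifetimes and encryption types are illustrative, and `STAGE_DIR` is a hypothetical staging directory so that nothing under /etc is overwritten before the files have been reviewed.

```shell
#!/usr/bin/env bash
# Sketch only: stage minimal Kerberos configs for the HADOOP.COM realm.
# Assumptions (not from the original post): KDC/admin server on "master",
# illustrative lifetimes and enctypes, STAGE_DIR as a review directory.
STAGE_DIR="${STAGE_DIR:-./krb5-staging}"
REALM="HADOOP.COM"
KDC_HOST="master"
mkdir -p "$STAGE_DIR"

# Target: /etc/krb5.conf (needed on the server and on every client)
cat > "$STAGE_DIR/krb5.conf" <<EOF
[libdefaults]
 default_realm = $REALM
 dns_lookup_realm = false
 dns_lookup_kdc = false
 ticket_lifetime = 24h
 renew_lifetime = 7d
 forwardable = true

[realms]
 $REALM = {
  kdc = $KDC_HOST
  admin_server = $KDC_HOST
 }
EOF

# Target: /var/kerberos/krb5kdc/kdc.conf (server only)
cat > "$STAGE_DIR/kdc.conf" <<EOF
[kdcdefaults]
 kdc_ports = 88
 kdc_tcp_ports = 88

[realms]
 $REALM = {
  acl_file = /var/kerberos/krb5kdc/kadm5.acl
  admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
  supported_enctypes = aes256-cts:normal aes128-cts:normal
 }
EOF

# Target: /var/kerberos/krb5kdc/kadm5.acl (server only)
# Grants every */admin principal full administrative rights.
printf '*/admin@%s\t*\n' "$REALM" > "$STAGE_DIR/kadm5.acl"

echo "Staged files in $STAGE_DIR:"
ls "$STAGE_DIR"
```

Once reviewed, the staged files would be copied to the target paths named in the comments, with krb5.conf also distributed to every client node.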

Create the database

kdb5_util create -r HADOOP.COM -s

Start the KDC services

systemctl start krb5kdc

systemctl enable krb5kdc # start on boot

systemctl start kadmin

systemctl enable kadmin # start on boot

Create the administrator

Method 1

kadmin.local # enter the console

addprinc admin/admin # create the administrator

Method 2

echo -e "123456\n123456" | kadmin.local -q "addprinc admin/admin"

Method 3

kadmin.local -q "addprinc admin/admin"

Client test

kinit admin/admin

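Common commands

add_principal, addprinc, ank # create a principal from inside the kadmin console

delete_principal, delprinc # delete a principal; asks for confirmation before deleting

list_principals, listprincs, get_principals, getprincs # list the existing principals

kadmin: xst -norandkey -k hadoop.keytab admin/admin hadoop/hadoop # generate the hadoop.keytab file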

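2. Integrate HDFS and YARN with Kerberos (assumes Hadoop is already installed on all three machines)

Create the principals. Here "hadoop" is the server user.

kadmin.local -q "addprinc -randkey hdfs/hadoop"

kadmin.local -q "addprinc -randkey yarn/hadoop"

kadmin.local -q "addprinc -randkey mapred/hadoop"

kadmin.local -q "addprinc -randkey HTTP/hadoop"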

Create the keytab file

kadmin.local -q "xst -norandkey -k /etc/hadoop.keytab hdfs/hadoop yarn/hadoop mapred/hadoop HTTP/hadoop"
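The hdfs-site.xml and yarn-site.xml settings later in this post use principals of the form `service/_HOST@HADOOP.COM`, where `_HOST` is replaced by each node's hostname. Under that scheme the KDC would likely need one principal per service per host, not a single `service/hadoop` entry. The sketch below only prints the corresponding `kadmin.local` commands so they can be reviewed first. `gen_principal_cmds` is a hypothetical helper, and the hostname `master` is taken from the table at the top; the real hostnames of the other two nodes would have to be passed in as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the kadmin.local commands that create
# one principal per service per host, matching the _HOST-based settings.
REALM="HADOOP.COM"
SERVICES="hdfs yarn mapred HTTP"

gen_principal_cmds() {
  # Arguments: hostnames of the cluster nodes, as each node reports them.
  local host svc
  for host in "$@"; do
    for svc in $SERVICES; do
      printf 'kadmin.local -q "addprinc -randkey %s/%s@%s"\n' \
        "$svc" "$host" "$REALM"
    done
  done
}

# Example: print the commands for the master node only.
gen_principal_cmds master
```

Printing the commands instead of executing them keeps the helper safe to run anywhere; once the list looks right it can be piped to `sh` on the KDC host.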

Copy the keytab file: scp hadoop.keytab to every machine in the Hadoop cluster

scp /etc/hadoop.keytab hduser@hostname:${HADOOP_HOME}/etc/hadoop

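Set the keytab file permissions

Change the ownership and permissions of the hadoop.keytab file on the Hadoop cluster (this must be done on every node), for example:

chown hduser:hadoop ${HADOOP_HOME}/etc/hadoop/hadoop.keytab

chmod 400 ${HADOOP_HOME}/etc/hadoop/hadoop.keytab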
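After copying the keytab and tightening its permissions, it can be worth confirming on each node that the file is readable by the Hadoop user and actually yields a ticket. A minimal sketch follows, assuming it runs as `hduser`; `check_keytab` is a hypothetical helper name, while `klist -kt` and `kinit -kt` are the standard MIT Kerberos client options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: inspect a keytab and try a password-less login with it.
check_keytab() {
  local keytab="$1" principal="$2"
  # List the keytab entries with their timestamps.
  klist -kt "$keytab" || return 1
  # Obtain a ticket using the key stored in the keytab (no password prompt).
  kinit -kt "$keytab" "$principal" || return 1
  # Show the ticket cache that kinit just populated.
  klist
}

# Usage (run on a cluster node):
#   check_keytab "${HADOOP_HOME}/etc/hadoop/hadoop.keytab" hdfs/hadoop
```

A non-zero exit status from the function points at either an unreadable keytab or a principal that is missing from it.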

Configure Hadoop Kerberos authentication: edit core-site.xml

Add the following configuration:

<property> <name>hadoop.security.authentication</name> <value>kerberos</value> </property>

<property> <name>hadoop.security.authorization</name> <value>true</value> </property>

<property> <name>hadoop.security.auth_to_local</name> <value> RULE:[2:$1@$0](hdfs@HADOOP.COM)s/.*/hduser/ RULE:[2:$1@$0](yarn@HADOOP.COM)s/.*/hduser/ RULE:[2:$1@$0](mapred@HADOOP.COM)s/.*/hduser/ RULE:[2:$1@$0](hive@HADOOP.COM)s/.*/hduser/ RULE:[2:$1@$0](hbase@HADOOP.COM)s/.*/hduser/ DEFAULT </value> </property>

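Parameter notes:

hadoop.security.authorization: whether service-level authorization is enabled

hadoop.security.authentication: the authentication method to use (here, kerberos)

hadoop.security.auth_to_local: the rules that map an authenticated Kerberos principal to a local user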
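The auth_to_local rules can be tried out before any daemon is restarted, because Hadoop ships a small command-line entry point that applies the configured rules to a principal name. The sketch below wraps it in a hypothetical `check_mapping` helper; it assumes the `hadoop` launcher is on the PATH and picks up the edited core-site.xml. Newer Hadoop releases also expose the same check as `hadoop kerbname`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how Hadoop maps each given principal to a
# local user, using the auth_to_local rules from the active core-site.xml.
check_mapping() {
  local principal
  for principal in "$@"; do
    hadoop org.apache.hadoop.security.HadoopKerberosName "$principal"
  done
}

# Usage (the hostname "master" is taken from the table at the top):
#   check_mapping hdfs/master@HADOOP.COM yarn/master@HADOOP.COM
```

With the rules shown above, each two-component service principal is expected to resolve to hduser; anything else suggests a typo in a rule.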

Configure Hadoop Kerberos authentication: edit hdfs-site.xml

<!-- NameNode Kerberos Config--> <property> <name>dfs.block.access.token.enable</name> <value>true</value> </property>

<property> <name>dfs.namenode.kerberos.principal</name> <value>hdfs/_HOST@HADOOP.COM</value> </property>

<property> <name>dfs.namenode.keytab.file</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>

<property> <name>dfs.https.port</name> <value>50470</value> </property>

<property> <name>dfs.https.address</name> <value>0.0.0.0:50470</value> </property>

<property> <name>dfs.namenode.kerberos.https.principal</name> <value>HTTP/_HOST@HADOOP.COM</value> </property>

<property> <name>dfs.namenode.kerberos.internal.spnego.principal</name> <value>HTTP/_HOST@HADOOP.COM</value> </property>

<!-- JournalNode Kerberos Config -->

<property> <name>dfs.journalnode.kerberos.principal</name> <value>hdfs/_HOST@HADOOP.COM</value> </property>

<property> <name>dfs.journalnode.keytab.file</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>

<!-- DataNode Kerberos Config -->

<property> <name>dfs.datanode.data.dir.perm</name> <value>700</value> </property>

<property> <name>dfs.datanode.kerberos.principal</name> <value>hdfs/_HOST@HADOOP.COM</value> </property>

<property> <name>dfs.datanode.keytab.file</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>

<!-- DataNode SASL Config -->

<property> <name>dfs.datanode.address</name> <value>0.0.0.0:61004</value> </property>

<property> <name>dfs.datanode.http.address</name> <value>0.0.0.0:61006</value> </property>

<property> <name>dfs.http.policy</name> <value>HTTPS_ONLY</value> </property>

<property> <name>dfs.data.transfer.protection</name> <value>integrity</value> </property>

<property> <name>dfs.web.authentication.kerberos.principal</name> <value>HTTP/_HOST@HADOOP.COM</value> </property>

<property> <name>dfs.web.authentication.kerberos.keytab</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>

<!-- SecondaryNameNode Kerberos Config -->

<property> <name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name> <value>${dfs.web.authentication.kerberos.principal}</value> </property>

<property> <name>dfs.secondary.namenode.kerberos.principal</name> <value>hdfs/_HOST@HADOOP.COM</value> </property>

<property> <name>dfs.secondary.namenode.keytab.file</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>

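Parameter notes:

dfs.block.access.token.enable: enables access-token checks for DataNode access

dfs.namenode.kerberos.principal: the NameNode service principal; at runtime the _HOST variable is replaced with the hostname of the node

dfs.namenode.keytab.file: the keytab file

dfs.https.port: the HTTPS port used by the hsftp and swebhdfs file systems, i.e. the secure access port

dfs.namenode.kerberos.https.principal: (presumably the principal for the NameNode web service; this property could not be found in the official documentation)

dfs.namenode.kerberos.internal.spnego.principal: defaults to ${dfs.web.authentication.kerberos.principal}; the server principal used for web UI SPNEGO authentication

dfs.datanode.data.dir.perm: the permissions of the data directories; the default is already 700

dfs.datanode.address: the port used for data transfer. If a privileged port (below 1024) is configured, the DataNode must be started as root, and Hadoop environment variables and other settings must be configured as well, which is cumbersome

dfs.datanode.http.address: same as above. With non-privileged ports, data transfer can be protected with SASL instead (requires Hadoop 2.6 or later)

dfs.http.policy: the protocol policy. The default is HTTP_ONLY; the other values are HTTP_AND_HTTPS and HTTPS_ONLY (requires SSL support)

dfs.web.authentication.kerberos.principal: the principal used for web authentication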
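To confirm that a node really picked up these values, the effective configuration can be read back with `hdfs getconf -confKey`. The following is a minimal sketch; `show_security_conf` is a hypothetical helper, and the list of keys is simply a selection of the properties discussed above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the effective value of a few security-related
# HDFS properties as seen by the local Hadoop client configuration.
show_security_conf() {
  local key
  for key in \
    dfs.block.access.token.enable \
    dfs.http.policy \
    dfs.data.transfer.protection \
    dfs.datanode.address \
    dfs.datanode.http.address; do
    printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
  done
}

# Usage (run on any node after editing hdfs-site.xml):
#   show_security_conf
```

Running it on every node is a quick way to spot a node whose hdfs-site.xml was not updated.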

Configure SASL

If the ports in dfs.datanode.address and dfs.datanode.http.address are set to privileged ports (below 1024), the DataNode must later be started as root, jsvc must be compiled and installed, and HADOOP_SECURE_DN_USER in hadoop-env.sh must be set to the cluster user. This is cumbersome and not recommended.

A simpler approach is to change the ports in dfs.datanode.address and dfs.datanode.http.address to non-privileged ports and then run the following on the KDC server:

openssl req -new -x509 -keyout test_ca_key -out test_ca_cert -days 9999 -subj '/C=CN/ST=China/L=Beijing/O=hduser/OU=security/CN=hadoop.com'

This generates the root certificate files. Copy the generated files to the cluster:

scp * CLUSTER_NODE_IP:/data1/hadoop/keystore

Then run the following steps on every cluster node:

keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "CN=hadoop.com, OU=test, O=test, L=Beijing, ST=China, C=cn"

keytool -keystore truststore -alias CARoot -import -file test_ca_cert

keytool -certreq -alias localhost -keystore keystore -file cert

openssl x509 -req -CA test_ca_cert -CAkey test_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial -passin pass:123456

keytool -keystore keystore -alias CARoot -import -file test_ca_cert

keytool -keystore keystore -alias localhost -import -file cert_signed

Use the same password wherever these commands prompt for one. Since this is only a test setup, a simple password such as 123456 is used here.
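Before pointing Hadoop at the new stores, a short sanity check on each node can catch a failed import. The sketch below assumes the file names used in the commands above (`test_ca_cert`, `cert_signed`, `keystore`, `truststore`) all sit in one directory; `verify_tls_material` is a hypothetical helper, while `openssl verify -CAfile` and `keytool -list` are standard options of those tools.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that the signed certificate chains to the test CA
# and that both Java stores open with the given password.
verify_tls_material() {
  local dir="$1" storepass="$2"
  # The signed node certificate should validate against the CA certificate.
  openssl verify -CAfile "$dir/test_ca_cert" "$dir/cert_signed" || return 1
  # Listing the entries fails if the password is wrong or the store is damaged.
  keytool -list -keystore "$dir/keystore" -storepass "$storepass" || return 1
  keytool -list -keystore "$dir/truststore" -storepass "$storepass"
}

# Usage (path and password as used in this post):
#   verify_tls_material /data1/hadoop/keystore 123456
```

The keystore listing is expected to show both the CARoot and localhost aliases, and the truststore listing the CARoot alias.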

Edit the ssl-server.xml file

cd ${HADOOP_HOME}/etc/hadoop/

cp ssl-server.xml.example ssl-server.xml

Edit ssl-server.xml so that it reads as follows:

<configuration>

<property> <name>ssl.server.keystore.location</name> <value>/data1/hadoop/keystore/keystore</value> </property>

<property> <name>ssl.server.keystore.password</name> <value>123456</value> </property>

<property> <name>ssl.server.truststore.location</name> <value>/data1/hadoop/keystore/truststore</value> </property>

<property> <name>ssl.server.truststore.password</name> <value>123456</value> </property>

<property> <name>ssl.server.keystore.keypassword</name> <value>123456</value> </property>

<property> <name>ssl.server.exclude.cipher.list</name> <value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA, SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA, SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA, SSL_RSA_WITH_RC4_128_MD5</value> <description>Optional. The weak security cipher suites that you want excluded from SSL communication.</description> </property>

</configuration>

Edit the ssl-client.xml file

cd ${HADOOP_HOME}/etc/hadoop/

cp ssl-client.xml.example ssl-client.xml

Change it to the following values:

<configuration>

<property> <name>ssl.client.keystore.location</name> <value>/data1/hadoop/keystore/keystore</value> </property>

<property> <name>ssl.client.keystore.password</name> <value>123456</value> </property>

<property> <name>ssl.client.truststore.location</name> <value>/data1/hadoop/keystore/truststore</value> </property>

<property> <name>ssl.client.truststore.password</name> <value>123456</value> </property>

<property> <name>ssl.client.keystore.keypassword</name> <value>123456</value> </property>

</configuration>

Configure the yarn-site.xml file

Add the following configuration:

<!-- Kerberos configuration -->

<!-- ResourceManager principal -->

<property> <name>yarn.resourcemanager.principal</name> <value>rm/_HOST@HADOOP.COM</value> </property>

<!-- ResourceManager keytab -->

<property> <name>yarn.resourcemanager.keytab</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>

<!-- NodeManager principal -->

<property> <name>yarn.nodemanager.principal</name> <value>nm/_HOST@HADOOP.COM</value> </property>

<!-- NodeManager keytab -->

<property> <name>yarn.nodemanager.keytab</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>

<property> <name>yarn.resourcemanager.webapp.spnego-principal</name> <value>HTTP/_HOST@HADOOP.COM</value> </property>

<property> <name>yarn.resourcemanager.webapp.spnego-keytab-file</name> <value>/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab</value> </property>
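The yarn-site.xml settings name the principals rm/_HOST and nm/_HOST, while the keytab exported earlier contains yarn/hadoop, so it may be worth checking that every configured principal is actually present in the keytab before starting YARN. Here is a minimal sketch, assuming `klist -kt` output with one principal per line; `keytab_has_principal` is a hypothetical helper, and the hostname `master` comes from the table at the top.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the keytab listing contains the principal.
# This is a plain substring match on the output of "klist -kt".
keytab_has_principal() {
  local keytab="$1" principal="$2"
  klist -kt "$keytab" | grep -qF "$principal"
}

# Usage (run on the ResourceManager node):
#   keytab=/data1/hadoop/hadoop/etc/hadoop/hadoop.keytab
#   for p in rm/master@HADOOP.COM HTTP/master@HADOOP.COM; do
#     keytab_has_principal "$keytab" "$p" || echo "missing: $p"
#   done
```

Any principal reported as missing would need to be created and exported into the keytab, or the configured name changed to one that exists.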
