Scrapy Case Study 02: Scraping Tencent Job Postings


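1. Goal

Target: https://hr.tencent.com/position.php?&start=0#a

Scrape the following fields for every job posting:

- Position name

- Position URL

- Position type

- Number of openings

- Work location

- Publish date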

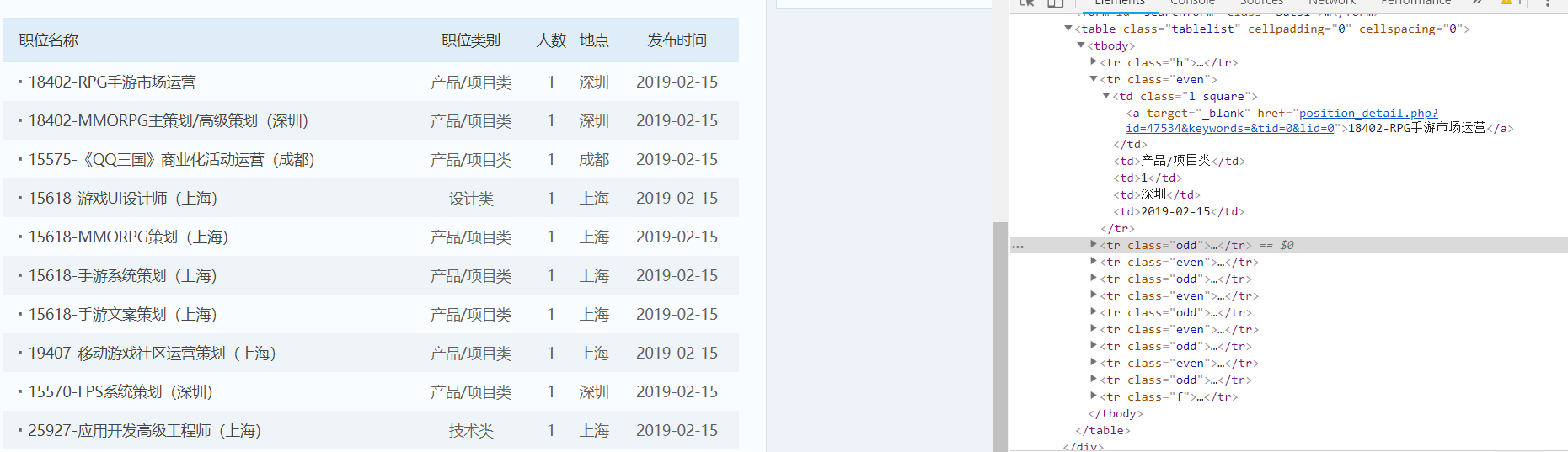

2. Site structure analysis
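As a rough sketch of the layout that the XPath expressions in section 3.2 rely on, the snippet below parses a simplified listing table with the standard library's ElementTree (a stand-in for Scrapy's selectors, not what the spider uses). The sample markup, the href values, and the `parse_rows` helper are all assumptions made for illustration; the real page may differ.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup inferred from the spider's XPath expressions.
SAMPLE = """
<table>
  <tr class="h"><td>Position</td><td>Type</td><td>Openings</td><td>Location</td><td>Published</td></tr>
  <tr class="even">
    <td><a href="position_detail.php?id=1">Backend Engineer</a></td>
    <td>Technology</td><td>2</td><td>Shenzhen</td><td>2019-01-10</td>
  </tr>
  <tr class="odd">
    <td><a href="position_detail.php?id=2">Product Planner</a></td>
    <td></td><td>1</td><td>Beijing</td><td>2019-01-09</td>
  </tr>
</table>
"""


def parse_rows(markup):
    """Collect one dict per data row (class 'even' or 'odd')."""
    root = ET.fromstring(markup.strip())
    rows = []
    for tr in root.iter("tr"):
        # Skip the header row; only 'even' and 'odd' rows hold postings.
        if tr.get("class") not in ("even", "odd"):
            continue
        cells = tr.findall("td")
        link = cells[0].find("a")
        rows.append({
            "positionname": link.text,
            "positionlink": link.get("href"),
            "positionType": cells[1].text,  # None when the cell is empty
            "peopleNum": cells[2].text,
            "workLocation": cells[3].text,
            "publishTime": cells[4].text,
        })
    return rows


rows = parse_rows(SAMPLE)
for row in rows:
    print(row["positionname"], row["positionType"], row["workLocation"])
```

The second sample row has an empty type cell on purpose: any extraction code has to cope with a cell that yields no text.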

3. Writing the spider

3.1. Define the item fields to scrape

    import scrapy


    class TecentjobItem(scrapy.Item):
        # One field per value collected for each job posting
        positionname = scrapy.Field()   # position name
        positionlink = scrapy.Field()   # position URL
        positionType = scrapy.Field()   # position type
        peopleNum = scrapy.Field()      # number of openings
        workLocation = scrapy.Field()   # work location
        publishTime = scrapy.Field()    # publish date

3.2. Write the spider file

    # -*- coding: utf-8 -*-
    import scrapy

    from tecentJob.items import TecentjobItem


    class TencentSpider(scrapy.Spider):
        name = 'tencent'
        allowed_domains = ['tencent.com']
        url = 'https://hr.tencent.com/position.php?&start='
        offset = 0
        start_urls = [url + str(offset)]

        def parse(self, response):
            # Each job posting is a table row with class "even" or "odd"
            for each in response.xpath("//tr[@class = 'even'] | //tr[@class = 'odd']"):
                # Create an item for this row
                item = TecentjobItem()
                # extract_first() returns None for an empty cell,
                # where extract()[0] would raise IndexError
                item['positionname'] = each.xpath("./td[1]/a/text()").extract_first()
                item['positionlink'] = each.xpath("./td[1]/a/@href").extract_first()
                item['positionType'] = each.xpath("./td[2]/text()").extract_first()
                item['peopleNum'] = each.xpath("./td[3]/text()").extract_first()
                item['workLocation'] = each.xpath("./td[4]/text()").extract_first()
                item['publishTime'] = each.xpath("./td[5]/text()").extract_first()
                yield item

            # Once the rows on this page are done, move on to the next page
            if self.offset < 100:
                self.offset += 10
                # Build the next page URL. The new request goes back to the
                # scheduler queue, is handed to the downloader, and its
                # response is processed by parse again.
                yield scrapy.Request(self.url + str(self.offset), callback=self.parse)
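The pagination rule in the spider (start at offset 0, add 10 per page, stop once the offset reaches 100) can be checked in isolation. The following is a minimal sketch using only the standard library; the `page_urls` helper and its parameter names are hypothetical and not part of the original project.

```python
BASE_URL = "https://hr.tencent.com/position.php?&start="


def page_urls(base=BASE_URL, step=10, last_offset=100):
    """Return the listing URLs for offsets 0, step, ..., last_offset."""
    return [base + str(offset) for offset in range(0, last_offset + step, step)]


urls = page_urls()
print(len(urls))   # number of listing pages the spider would visit
print(urls[0])
print(urls[-1])
```

One possible design choice, assuming the number of pages is known in advance: assigning such a list to `start_urls` lets Scrapy schedule all pages up front, whereas the chained `scrapy.Request` approach in the spider fetches one listing page after another.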

3.3. Write the pipeline that receives the yielded items

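    import json


    class TecentjobPipeline(object):
        def __init__(self):
            # Binary mode: each item is written as UTF-8 encoded bytes
            self.filename = open("tencent.json", 'wb')

        def process_item(self, item, spider):
            # One JSON object per line; ensure_ascii=False keeps Chinese text readable
            text = json.dumps(dict(item), ensure_ascii=False) + "\n"
            self.filename.write(text.encode('utf-8'))
            return item

        def close_spider(self, spider):
            # Called once when the spider finishes
            self.filename.close()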
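Because the pipeline writes one JSON object per line, the output is not a single JSON document and has to be read back line by line. The sketch below reproduces the same write pattern outside Scrapy and reads it back; the helper names and the sample record are made up for illustration.

```python
import json
import os
import tempfile


def write_jsonl(path, records):
    """Write one JSON object per line, UTF-8 encoded, as the pipeline does."""
    with open(path, "wb") as f:
        for record in records:
            line = json.dumps(record, ensure_ascii=False) + "\n"
            f.write(line.encode("utf-8"))


def read_jsonl(path):
    """Parse the file line by line; json.load on the whole file would fail."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Hypothetical sample record, not real scraped data.
records = [{"positionname": "后台开发工程师", "workLocation": "深圳"}]
path = os.path.join(tempfile.mkdtemp(), "tencent.json")
write_jsonl(path, records)
restored = read_jsonl(path)
print(restored == records)
```

With `ensure_ascii=False` the Chinese text is stored as readable characters rather than `\uXXXX` escapes, which is why the file has to be encoded and decoded as UTF-8 explicitly.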

3.4. Configure the request headers in settings.py

    DEFAULT_REQUEST_HEADERS = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
    }
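Scrapy only runs a pipeline that is registered in `ITEM_PIPELINES`. A sketch of that setting follows, assuming the pipeline class from section 3.3 lives in `tecentJob/pipelines.py`; that module path is inferred from the spider's import and is not stated in the original. The value 300 is just an example order number in Scrapy's conventional 0 to 1000 range.

```python
# settings.py (fragment)
ITEM_PIPELINES = {
    # Assumed path: <project package>.pipelines.<pipeline class>
    "tecentJob.pipelines.TecentjobPipeline": 300,
}
```

When several pipelines are registered, items pass through them in ascending order of these values.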

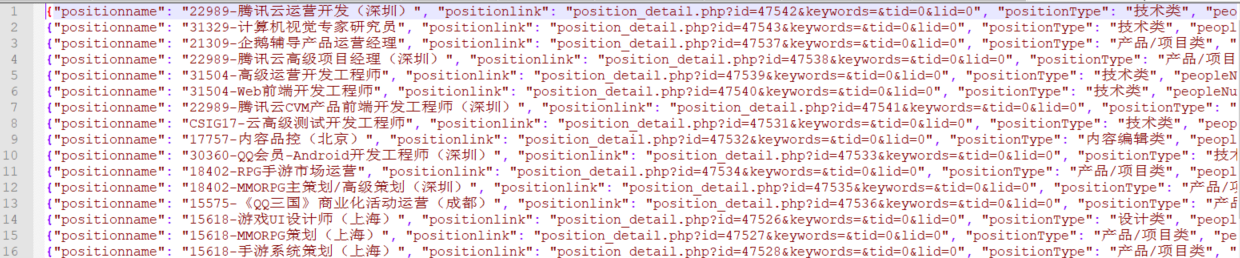

4. Final result