Kubernetes部署方式

官方提供Kubernetes部署3种方式

- minikube

Minikube.是一个工具,可以在本地快速运行一个单点的Kubernetes,尝试Kubernetes或日常开发的用户使用。不能用于生产环境。

官方文档: https://kubernetes.io/docs/setup/minikube/

- 二进制包

从官方下载发行版的二进制包,手动部署每个组件,组成Kubernetes集群。目前企业生产环境中主要使用该方式。

下载地址: https:/github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.11.md#v1113

- Kubeadm

Kubeadm是谷歌推出的一个专门用于快速部署kubernetes、集群的工具。在集群部署的过程中,可以通过kubeadm.init来初始化 master节点,然后使用kubeadm join将其他的节点加入到集群中。

Kubeadm通过简单配置可以快速将一个最小可用的集群运行起来。它在设计之初关注点是快速安装并将集群运行起来,而不是一步步关于各节点环境的准备工作。同样的,kubernetes集群在使用过程中的各种插件也不是kubeadm关注的重点,比如kubernetes集群 WEB Dashboard、prometheus监控集群业务等。kubeadm应用的日的是作为所有部署的基础,并通过kubeadm使得部署kubernetes集群更加容易。

Kubeadm的简单快捷的部署可以应用到如下三方面:

- l 新用户可以从kubeadm开始快速搭建Kubernete并了解。

- l 熟悉Kubernetes的用户可以使用kubeadm快速搭建集群并测试他们的应用。

- l 大型的项目可以将kubeadm配合其他的安装工具一起使用,形成一个比较复杂的系统。

官方文档:https://kubernetes.io/docs/setup/independent/install-kubeadm/

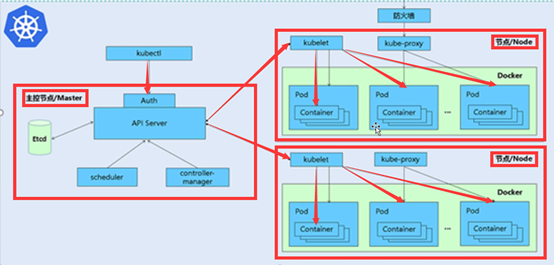

原理:

- 通过kubectl命令下发一些任务

kubectl:命令管理工具

- 下发任务需要通过验证(Auth)

- 然后到达API服务器,Scheduler推算任务下发后的分配工作,Controler manager控制稳定的应用台数

Etcd为存储器,存储的是控制信息

Scheduler完成分配工作

Controler manager是控制操作(比如想跑3台nginx,down掉一台,这时控制器会在增加一台)

- API server将任务下发给kubelet

被监控主机要安装kubelet,是接受API下发的任务,配合API工作

- Kubele接受到各自的分任务之后将分发给podZ执行

一个pod中有一个容器或者是多个容器

- 通过proxy代理将完后的任务通过防火墙发送到网络中

基于kubeadm 部署k8s集群

1、环境准备

|

主机ip |

主机名 |

组件 |

|

192.168.2.111 |

k8s-master或nfs |

kubeadm、kubelet、kubectl、docker-ce |

|

192.168.2.112 |

k8s-node-1 |

kebeadm、kubelet、kebectl、docker-ce |

|

192.168.2.113 |

k8s-node-2 |

kebeadm、kubelet、kubectl、docker-ce |

所有主机配置推荐CPU 2C+ Memory 2G+

2.主机初始化配置

- 所有主机配置禁用防火墙和selinux

-

12345678910

iptables -Fsystemctl stop firewalldsystemctl disable firewalldsetenforce 0sed -i'7c SELINUX=disabled'/etc/sysconfig/selinux<br>systemctl stop NetworkManager

- 配置主机名并绑定hosts

-

1234567891011121314151617181920212223242526272829

[root@node-1 ~]# hostname k8s-master[root@node-1 ~]# bash[root@master ~]# cat << EOF >> /etc/hosts192.168.2.111 k8s-master192.168.2.112 k8s-node-1192.168.2.113 k8s-node-2EOF[root@master ~]# scp /etc/hosts 192.168.2.112:/etc/[root@master ~]# scp /etc/hosts 192.168.2.113:/etc/[root@node-1 ~]# hostname k8s-node-1[root@node-1 ~]# bash[root@node-2 ~]# hostname k8s-node-2[root@node-2 ~]# bash - 安装基本软件包(三台都需要)

-

1

[root@k8s-master ~]# yum install -y vim wget net-tools lrzsz - 主配置初始化(三台都需要)

-

12345678910

[root@k8s-master ~]# swapoff -a[root@k8s-master ~]# sed -i'/swap/s/^/#/'/etc/fstab[root@k8s-master ~]# cat << EOF >> /etc/sysctl.conf> net.ipv4.ip_forward = 1> net.bridge.bridge-nf-call-ip6tables = 1> net.bridge.bridge-nf-call-iptables = 1> EOF如果sysctl -p 报错,需加载改模块[root@k8s-master ~]# modprobe br_netfilter -

三台主机分别部署docker环境,因为kubernetes读容器的编排需要Docker的支持(安装过程忽略)

- 配置阿里云的源(所有主机都需要)

-

12345678910111213

[root@k8s-master ~]# cat << EOF > /etc/yum.repos.d/kubernetes.repo[kubernetes]name=Kubernetesbaseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/enabled=1gpgcheck=1repo_gpgcheck=1gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpghttps://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpgEOF[root@k8s-master ~]# ls /etc/yum.repos.d/bak docker-ce.repo kubernetes.repoCentOS-Base.repo epel.repo local.repo -

安装三个工具包(所有主机都需要)且设置为开机自启动

-

1

[root@k8s-master ~]# yum -y install kubectl-1.17.0 kubeadm-1.17.0 kubelet-1.17.0<br>[root@k8s-master ~]# yum -y install --nogpgcheck kubectl-1.17.0 kubeadm-1.17.0 kubelet-1.17.0<br>[root@k8s-master ~]# systemctl enable kubelet<br> Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.kubelet刚安装完成后,迪过systemctl start kubelet,方式是无法启动的,需要加入节点或初始化为master后才可启动成功。

如果在命令执行过程中出现索引gpg检查失败的情况,请使用yum -y install --nogpgcheck kubelet kubeadm kubectl来安装。

- 在master端配置init-config.yaml文件

- Kubeadm提供了很多配置项,Kubeadm配置在Kubernetes集群中是存储在ConfigMapg,中的,也可将这些配置写入配置文件,方便管理复杂的配置项。Kubeadm配内容是通过kubeadm config命令写入配置文件的。

- 上传initt-config.yaml文件

-

1

<strong>init-config.yaml</strong>链接:https://pan.baidu.com/s/1BUuE4LkyYcxI_fyaEov8gg 提取码:m86t编辑文件:

1advertiseAddress:为自己的master的IP地址1kubernetesVersion: v1.17.0 -

1234567891011121314151617181920212223242526272829303132333435

[root@k8s-master ~]# vim init-config.yaml[root@k8s-master ~]# cat init-config.yamlapiVersion: kubeadm.k8s.io/v1beta2bootstrapTokens:- groups:- system:bootstrappers:kubeadm:default-node-tokentoken: abcdef.0123456789abcdefttl: 24h0m0susages:- signing- authenticationkind: InitConfigurationlocalAPIEndpoint:advertiseAddress: 192.168.2.111bindPort: 6443nodeRegistration:criSocket: /var/run/dockershim.sockname: k8s-mastertaints:- effect: NoSchedulekey: node-role.kubernetes.io/master---apiServer:timeoutForControlPlane: 4m0sapiVersion: kubeadm.k8s.io/v1beta2certificatesDir: /etc/kubernetes/pkiclusterName: kubernetescontrollerManager: {}dns:type: CoreDNSetcd:local:dataDir: /var/lib/etcdimageRepository: registry.aliyuncs.com/google_containerskind: ClusterConfigurationkubernetesVersion: v1.17.0networking: dnsDomain: cluster.local serviceSubnet: 10.96.0.0/12 podSubnet: 10.244.0.0/16 scheduler: {} - 安装master节点

-

[root@k8s-master ~]# kubeadm config images pull --config init-config.yaml

W1222 15:13:28.961040 17823 validation.go:28] Cannot validate kube-proxy config - no validator is available

W1222 15:13:28.961091 17823 validation.go:28] Cannot validate kubelet config - no validator is available

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.17.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.5

[root@k8s-master ~]# - 查看镜像

-

123456789101112

[root@k8s-master ~]# docker imagesREPOSITORY TAG IMAGE ID CREATED SIZEregistry.aliyuncs.com/google_containers/kube-proxy v1.20.0 10cc881966cf 13 days ago 118MBregistry.aliyuncs.com/google_containers/kube-apiserver v1.20.0 ca9843d3b545 13 days ago 122MBregistry.aliyuncs.com/google_containers/kube-scheduler v1.20.0 3138b6e3d471 13 days ago 46.4MBregistry.aliyuncs.com/google_containers/kube-controller-manager v1.20.0 b9fa1895dcaa 13 days ago 116MBcentos 7 8652b9f0cb4c 5 weeks ago 204MBregistry.aliyuncs.com/google_containers/coredns 1.6.5 70f311871ae1 13 months ago 41.6MBregistry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 14 months ago 288MBgoogle/cadvisor latest eb1210707573 2 years ago 69.6MBregistry.aliyuncs.com/google_containers/pause 3.1 da86e6ba6ca1 3 years ago 742kBtutum/influxdb latest c061e5808198 4 years ago 290MB - 初始化

-

1

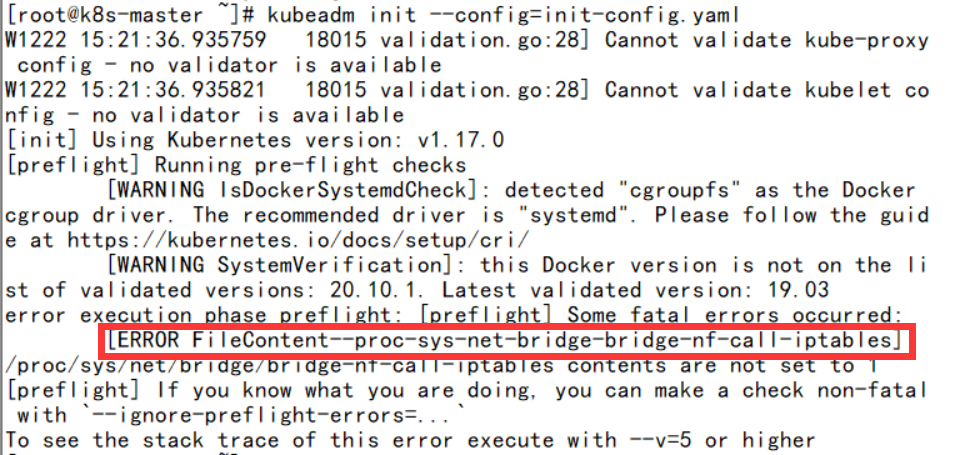

[root@k8s-master ~]# kubeadm init --config=init-config.yaml -

报错:

- 解决方法:

-

12

[root@k8s-master ~]# echo"1"> /proc/sys/net/bridge/bridge-nf-call-iptables[root@k8s-master ~]# kubeadm init --config=init-config.yaml -

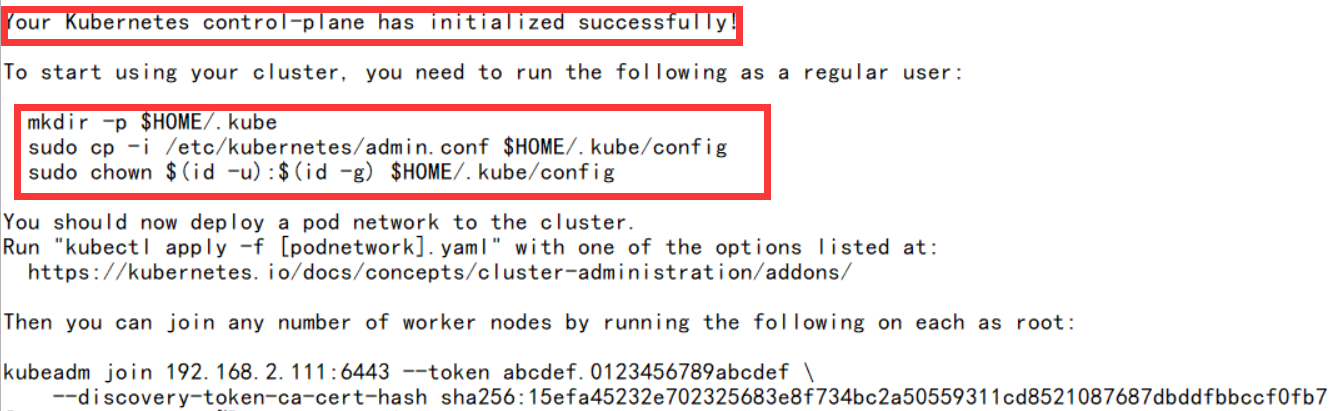

初始化成功

-

- 显示成功后会出现三个命令,直接将三条命令执行一下,初始化就成功了

-

1234

[root@k8s-master ~]# mkdir -p $HOME/.kube[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config[root@k8s-master ~]# - 初始化过程中做了什么?

kubeadm init主要执行了以下操作:

[init]:指定版本进行初始化操作

[preflight] :初始化前的检查和下载所需要的Docker镜像文件

[kubelet-start]:生成 kubelet的配置文件”./var/lib/kubelet/config.xam,没有这个文件

kubelet无法启动,所以初始化之前的 kubelet实际上启动失败。

[certificates]:生成Kubernetes使用的证书,存放在/etc/kubernetes/pki目录中。

[kubecanfig]:生成 Kubeconfig文件,存放在/et/kubernetes目录中,组件之间通信需要使用对应文件。

[control-plane]:使用/etc/kubernetes/manifest日录下的YAML_文件,安装Master组件。

[etcd]:使用/etc/kubernetes/manifest/etcd.yaml安装Etcd服务。

[wait-control-plane]:等待control-plan部署的Master组件启动。

[apiclient]:检查Master组件服务状态。

[uploadconfig]:更新配置

[kubelet]:使用configMap配置 kubelet。

[patchnode]:更新CNI信息到Node 上,通过注释的方式记录。

[mark-control-plane]:为当前节点打标签,打了角色Master,和不可调度标签,这样默认就不会使用Master节点来运行Pod。

[bootstrap-token]:生成token记录下来,后边使用kubeadm join往集群中添加节点时

3.添加节点

- 查看节点

-

1234

[root@k8s-master ~]# kubectlgetnodesNAME STATUS ROLES AGE VERSIONk8s-master NotReady master 29m v1.17.0[root@k8s-master ~]# - 添加nod节点(将初始化中的命令复制到节点服务器上,两台)

-

1

[root@k8s-node-1 ~]# kubeadmjoin192.168.2.111:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:15efa45232e702325683e8f734bc2a50559311cd8521087687dbddfbbccf0fb7 - 再次查看master(将会出现两个节点)

-

12345

[root@k8s-master ~]# kubectlgetnodesNAME STATUS ROLES AGE VERSIONk8s-master NotReady master 38m v1.17.0k8s-node-1 NotReady <none> 86s v1.17.0k8s-node-2 NotReady <none> 3s v1.17.0

4.安装flannel

Master节点NotReady的原因就是因为没有使用任何的网络插件,此时Node和Master的连接还不正常。目前最流行的Kubernetes网络插件有Flannel、Calico、Canal、Weave这里选择使用flannel。

安装包链接:https://pan.baidu.com/s/1qsZnAkCK7F08iltJVsKEgg

提取码:5lmo

- master上传kube-flannet.yml

- 所有主机上传flannel_v0.12.0-amd64.tar

- 所有节点主机的操作

-

1234567

[root@k8s-node-1 ~]# docker load < flannel_v0.12.0-amd64.tar256a7af3acb1: Loading layer [==================================================>] 5.844MB/5.844MBd572e5d9d39b: Loading layer [==================================================>] 10.37MB/10.37MB57c10be5852f: Loading layer [==================================================>] 2.249MB/2.249MB7412f8eefb77: Loading layer [==================================================>] 35.26MB/35.26MB05116c9ff7bf: Loading layer [==================================================>] 5.12kB/5.12kBLoaded image: quay.io/coreos/flannel:v0.12.0-amd64 - master的操作

-

1234567891011

[root@k8s-master ~]# kubectl apply -f kube-flannel.ymlpodsecuritypolicy.policy/psp.flannel.unprivileged createdclusterrole.rbac.authorization.k8s.io/flannel createdclusterrolebinding.rbac.authorization.k8s.io/flannel createdserviceaccount/flannel createdconfigmap/kube-flannel-cfg createddaemonset.apps/kube-flannel-ds-amd64 createddaemonset.apps/kube-flannel-ds-arm64 createddaemonset.apps/kube-flannel-ds-arm createddaemonset.apps/kube-flannel-ds-ppc64le createddaemonset.apps/kube-flannel-ds-s390x created - 查看是否搭建成功

-

12345

[root@k8s-master ~]# kubectlgetnodesNAME STATUS ROLES AGE VERSIONk8s-master Ready master 72m v1.17.0k8s-node-1 Ready <none> 34m v1.17.0k8s-node-2 Ready <none> 33m v1.17.0 -

12345678910111213141516171819202122232425262728

[root@k8s-master ~]# kubectlgetpods -n kube-systemNAME READY STATUS RESTARTS AGEcoredns-9d85f5447-d9vgg 1/1 Running 0 68mcoredns-9d85f5447-spptr 1/1 Running 0 68metcd-k8s-master 1/1 Running 0 69mkube-apiserver-k8s-master 1/1 Running 0 69mkube-controller-manager-k8s-master 1/1 Running 0 69mkube-flannel-ds-amd64-cgxk4 1/1 Running 0 21mkube-flannel-ds-amd64-cjd9z 1/1 Running 0 21mkube-flannel-ds-amd64-vfb6r 1/1 Running 0 21mkube-proxy-czpcx 1/1 Running 0 32mkube-proxy-vn5jp 1/1 Running 0 68mkube-proxy-w7g65 1/1 Running 0 30mkube-scheduler-k8s-master 1/1 Running 0 69m[root@k8s-master ~]# kubectlgetpods -A -n kube-systemNAMESPACE NAME READY STATUS RESTARTS AGEkube-system coredns-9d85f5447-d9vgg 1/1 Running 0 69mkube-system coredns-9d85f5447-spptr 1/1 Running 0 69mkube-system etcd-k8s-master 1/1 Running 0 69mkube-system kube-apiserver-k8s-master 1/1 Running 0 69mkube-system kube-controller-manager-k8s-master 1/1 Running 0 69mkube-system kube-flannel-ds-amd64-cgxk4 1/1 Running 0 21mkube-system kube-flannel-ds-amd64-cjd9z 1/1 Running 0 21mkube-system kube-flannel-ds-amd64-vfb6r 1/1 Running 0 21mkube-system kube-proxy-czpcx 1/1 Running 0 32mkube-system kube-proxy-vn5jp 1/1 Running 0 69mkube-system kube-proxy-w7g65 1/1 Running 0 30mkube-system kube-scheduler-k8s-master 1/1 Running 0 69m - 至此k8s集群环境搭建成功

基于二进制部署K8S

相关安装包

- 链接:https://pan.baidu.com/s/1Jmk4L1H2bLkFm-ryx7xzew

- 提取码:m3dg

1.docker安装完毕之后进行如下的配置

- 还是上面的三台虚拟机将三台虚拟机的名字分别改为以下三个

-

12345678910

hostname k8s-masterbashhostname k8s-node01bashhostname k8s-node02bash - 编写host文件,三台都需要,以master为例子,内容如下

-

12345

[root@k8s-master ~]# cat << EOF >> /etc/hosts192.168.2.111 k8s-master192.168.2.112 k8s-node01192.168.2.113 k8s-node02EOF

2.证书

k8s系统各个组件之间需要使用TLS证书进行通信,下列我们将使用CloudFlare的PKI工具集CFSSL来生成Certificate Authority 和其他证书。

-

master主机上安装证书生成工具

-

123456789

mkdir -p /root/software/sslcd /root/software/ssl/[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64[root@k8s-master ssl]# chmod +x *//下载完后设置执行权限[root@k8s-master ssl]# mv cfssl_linux-amd64 /usr/local/bin/cfssl[root@k8s-master ssl]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson[root@k8s-master ssl]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo - 查看是否成功

-

12345678910111213141516171819202122232425

cfssl --helpUsage:Available commands:signversionocspdumpocspservescanbundlegenkeygencrlocsprefreshselfsigninfoserverevokecertinfogencertocspsignprint-defaultsTop-level flags:-allow_verification_with_non_compliant_keysAllow a SignatureVerifier to use keys which are technically non-compliant with RFC6962.-loglevelintLog level (0 = DEBUG, 5 = FATAL) (default1) - master主机编写证书相关的json文件(脚本)用来生成证书(一共4个文件,生成八个证书)

-

12345678910111213141516171819202122232425262728293031323334353637383940414243444546474849505152535455565758596061626364656667686970717273747576777879808182838485868788

[root@k8s-master ~]# cat << EOF > ca-config.json{"signing": {"default": {"expiry":"87600h"},"profiles": {"kubernetes": {"expiry":"87600h","usages": ["signing","key encipherment","server auth","client auth"]}}}}EOF[root@k8s-master ~]# cat << EOF > ca-csr.json{"CN":"kubernetes","key": {"algo":"rsa","size": 2048},"names": [{"C":"CN","L":"Beijing","ST":"Beijing","O":"k8s","OU":"System"}]}EOF[root@k8s-master ~]# cat << EOF > server-csr.json{"CN":"kubernetes","hosts": ["127.0.0.1","192.168.2.111","192.168.2.112","192.168.2.113","10.10.10.1","kubernetes","kubernetes.default","kubernetes.default.svc","kubernetes.default.svc.cluster","kubernetes.default.svc.cluster.local"],"key": {"algo":"rsa","size": 2048},"names": [{"C":"CN","L":"BeiJing","ST":"BeiJing","O":"k8s","OU":"System"}]}EOF[root@k8s-master ~]# cat << EOF > admin-csr.json{"CN":"admin","hosts": [],"key": {"algo":"rsa","size": 2048},"names": [{"C":"CN","L":"BeiJing","ST":"BeiJing","O":"system:masters","OU":"System"}]}EOF - 将四个文件进行 pem 证书的生成

-

1234567

[root@k8s-master ~]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -[root@k8s-master ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server[root@k8s-master ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin[root@k8s-master ~]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy -

删除证书以外的 json 文件,只保留 pem 证书

-

12345678910111213

[root@k8s-master ssl]# ls | grep -v pem | xargs -i rm {}//删除证书以外的 json 文件,只保留 pem 证书[root@k8s-master ssl]# ls -l总用量 32-rw------- 1 root root 1675 11月 13 23:09 admin-key.pem-rw-r--r-- 1 root root 1399 11月 13 23:09 admin.pem-rw------- 1 root root 1679 11月 13 23:03 ca-key.pem-rw-r--r-- 1 root root 1359 11月 13 23:03 ca.pem-rw------- 1 root root 1675 11月 13 23:12 kube-proxy-key.pem-rw-r--r-- 1 root root 1403 11月 13 23:12 kube-proxy.pem-rw------- 1 root root 1679 11月 13 23:07 server-key.pem-rw-r--r-- 1 root root 1627 11月 13 23:07 server.pem

3.创建k8s目录,部署etcd

- 上传etcd包,解压包,并拷贝二进制bin文件将命令进行部署

-

123456

mkdir /opt/kubernetesmkdir /opt/kubernetes/{bin,cfg,ssl}tar xf etcd-v3.3.18-linux-amd64.tar.gzcd etcd-v3.3.18-linux-amd64/mv etcd /opt/kubernetes/bin/mv etcdctl /opt/kubernetes/bin/ -

在master主机创建/opt/kubernetes/cfg/etcd文件,这个文件是etcd的配置文件

123456789101112[root@k8s-master etcd-v3.3.18-linux-amd64]# cat /opt/kubernetes/cfg/etcd#[Member]ETCD_NAME="etcd01"ETCD_DATA_DIR="/var/lib/etcd/default.etcd"ETCD_LISTEN_PEER_URLS="https://192.168.2.111:2380"ETCD_LISTEN_CLIENT_URLS="https://192.168.2.111:2379"#[Clustering]ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.2.111:2380"ETCD_ADVERTISE_CLIENT_URLS="https://192.168.2.111:2379"ETCD_INITIAL_CLUSTER="etcd01=https://192.168.2.111:2380,etcd02=https://192.168.2.112:2380,etcd03=https://192.168.2.113:2380"ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"ETCD_INITIAL_CLUSTER_STATE="new" - 创建启动脚本

-

1234567891011121314151617181920212223242526272829

[root@k8s-master etcd-v3.3.18-linux-amd64]# cat /usr/lib/systemd/system/etcd.service[Unit]Description=Etcd ServerAfter=network.targetAfter=network-online.targetWants=network-online.target[Service]Type=notifyEnvironmentFile=-/opt/kubernetes/cfg/etcdExecStart=/opt/kubernetes/bin/etcd \--name=${ETCD_NAME} \--data-dir=${ETCD_DATA_DIR} \--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \--initial-cluster=${ETCD_INITIAL_CLUSTER} \--initial-cluster-token=${ETCD_INITIAL_CLUSTER} \--initial-cluster-state=new\--cert-file=/opt/kubernetes/ssl/server.pem \--key-file=/opt/kubernetes/ssl/server-key.pem \--peer-cert-file=/opt/kubernetes/ssl/server.pem \--peer-key-file=/opt/kubernetes/ssl/server-key.pem \--trusted-ca-file=/opt/kubernetes/ssl/ca.pem \--peer-trusted-ca-file=/opt/kubernetes/ssl/ca.pemRestart=on-failureLimitNOFILE=65536[Install]WantedBy=multi-user.target - 在master主机拷贝一份etcd脚本所依赖的证书

-

12

[root@k8s-master etcd-v3.3.18-linux-amd64]# cd /root/software/[root@k8s-master software]# cp ssl/server*pem ssl/ca*.pem /opt/kubernetes/ssl/ - 重新启动etcd(这个时候会卡死,但是无所谓,直接ctrl+c退出,卡的原因开始节点未连接)

-

12

[root@k8s-master software]# systemctl start etcd[root@k8s-master software]# systemctl enable etcd - 查看进程,只要进程在就可以

-

1

[root@k8s-master software]# ps aux | grep etcd - 在node1和node2也需要配置etcd文件,修改完的配置如下

-

12345678910111213141516171819202122232425262728

在node-01主机创建/opt/kubernetes/cfg/etcd文件,并写如下内容#[Member]ETCD_NAME="etcd02"ETCD_DATA_DIR="/var/lib/etcd/default.etcd"ETCD_LISTEN_PEER_URLS="https://192.168.2.112:2380"ETCD_LISTEN_CLIENT_URLS="https://192.168.2.112:2379"#[Clustering]ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.2.112:2380"ETCD_ADVERTISE_CLIENT_URLS="https://192.168.2.112:2379"ETCD_INITIAL_CLUSTER="etcd01=https://192.168.2.111:2380,etcd02=https://192.168.2.112:2380,etcd03=https://192.168.2.113:2380"ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"ETCD_INITIAL_CLUSTER_STATE="new"在node-02主机创建/opt/kubernetes/cfg/etcd文件,并写如下内容#[Member]ETCD_NAME="etcd03"ETCD_DATA_DIR="/var/lib/etcd/default.etcd"ETCD_LISTEN_PEER_URLS="https://192.168.2.113:2380"ETCD_LISTEN_CLIENT_URLS="https://192.168.2.113:2379"#[Clustering]ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.2.113:2380"ETCD_ADVERTISE_CLIENT_URLS="https://192.168.2.113:2379"ETCD_INITIAL_CLUSTER="etcd01=https://192.168.2.111:2380,etcd02=https://192.168.2.112:2380,etcd03=https://192.168.2.113:2380"ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"ETCD_INITIAL_CLUSTER_STATE="new" - master将启动脚本也传给节点主机

-

12

[root@k8s-master ~]# scp /usr/lib/systemd/system/etcd.service 192.168.2.112:/usr/lib/systemd/system/[root@k8s-master ~]# scp /usr/lib/systemd/system/etcd.service 192.168.2.113:/usr/lib/systemd/system/ - 节点主机分别重新启动etcd

-

123456

[root@k8s-node01 ~]# systemctl start etcdsystemctl enable etcdCreated symlinkfrom/etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.[root@k8s-node02 ~]# systemctl start etcd[root@k8s-node02 ~]# systemctl enable etcdCreated symlinkfrom/etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service. - 将etcd的命令添加在全局的环境变量中

-

123

[root@k8s-master ~]# vim /etc/profileexport PATH=$PATH:/opt/kubernetes/bin[root@k8s-master ~]# source /etc/profile - 查看etcd集群的部署,会有三个节点

-

123

[root@k8s-master ~]# cd /root/software/ssl/[root@k8s-master ssl]# etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.2.111:2379,https://192.168.2.112,https://192.168.2.113:2379"cluster-health

至此etcd成功部署。

4.部署Flannel网络

flannel是overlay网络中的一种,也是将原数据包封装在另一种网络包里进行路由转换和通信。

- 在主节点写入分配子网段到etcd,供flanneld使用

-

1234

[root@k8s-master ~]# cd /root/software/ssl/[root@k8s-master ssl]# etcdctl -ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.2.111:2379,https://192.168.2.112:2379,https://192.168.2.113:2379"set/coreos.com/network/config'{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"} }'输出结果:{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"} } - 上传flannel包,解压包并拷贝到node节点

-

123

[root@k8s-master ~]# tar xf flannel-v0.12.0-linux-amd64.tar.gz[root@k8s-master ~]# scp flanneld mk-docker-opts.sh 192.168.2.112:/opt/kubernetes/bin/[root@k8s-master ~]# scp flanneld mk-docker-opts.sh 192.168.2.113:/opt/kubernetes/bin/ - 在 k8s-node1 与 k8s-node2 主机上分别编辑 flanneld 配置文件。下面以 k8s-node1 为例进行操作演示。

-

1234567891011121314151617

[root@k8s-node01 ~]# vim /opt/kubernetes/cfg/flanneldFLANNEL_OPTIONS="--etcd-endpoints=https://192.168.2.111:2379,https://192.168.2.112:2379,https://192.168.2.113:2379 -etcd-cafile=/opt/kubernetes/ssl/ca.pem -etcd-certfile=/opt/kubernetes/ssl/server.pem -etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"[root@k8s-node1 ~]# cat <<EOF >/usr/lib/systemd/system/flanneld.service[Unit]Description=Flanneld overlay address etcd agentAfter=network-online.target network.targetBefore=docker.service[Service]Type=notifyEnvironmentFile=/opt/kubernetes/cfg/flanneldExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONSExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.envRestart=on-failure[Install]WantedBy=multi-user.targetEOF -

在 k8s-node1 与 k8s-node2 主机上配置 Docker 启动指定网段,修改 Docker 配置脚本文件。下面以 k8s-node1 为例进行操作演示。(将原有ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock)进行注释,添加下面的两行。

-

123

[root@k8s-node01 ~]# vim /usr/lib/systemd/system/docker.serviceEnvironmentFile=/run/flannel/subnet.envExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -

两台节点都需要重新启动flanneld

-

1234

systemctl start flanneldsystemctl enable flanneldsystemctl daemon-reloadsystemctl restart docker -

查看一下相应的网络,docker和flannel在同一网段

-

1234567891011121314151617

[root@k8s-node01 ~]# ifconfigdocker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500inet 172.17.96.1 netmask 255.255.255.0 broadcast 172.17.96.255ether 02:42:53:3a:56:7f txqueuelen 0 (Ethernet)RX packets 0 bytes 0 (0.0 B)RX errors 0 dropped 0 overruns 0 frame 0TX packets 0 bytes 0 (0.0 B)TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450inet 172.17.96.0 netmask 255.255.255.255 broadcast 0.0.0.0inet6 fe80::1430:4bff:fe88:a4a9 prefixlen 64 scopeid 0x20<link>ether 16:30:4b:88:a4:a9 txqueuelen 0 (Ethernet)RX packets 0 bytes 0 (0.0 B)RX errors 0 dropped 0 overruns 0 frame 0TX packets 0 bytes 0 (0.0 B)TX errors 0 dropped 68 overruns 0 carrier 0 collisions 0 -

在 k8s-node2 上测试到 node1 节点 docker0 网桥 IP 地址的连通性,出现如下结果说明Flanneld 安装成功。

-

123

[root@k8s-node02 ~]# ping 172.17.96.1PING 172.17.96.1 (172.17.96.1) 56(84) bytes of data.64 bytesfrom172.17.96.1: icmp_seq=1 ttl=64 time=0.543 ms至此 Node 节点的 Flannel 配置完成。

5.部署k8s-master组件

- 上传kubernetes-server-linux-amd64.tar.gz ,解压并添加在kubectl命令环境。

-

123

[root@k8s-master ~]# tar xf kubernetes-server-linux-amd64.tar.gz[root@k8s-master ~]# cd kubernetes/server/bin/[root@k8s-master bin]# cp kubectl /opt/kubernetes/bin/ -

创建TLS Booystrapping Token

-

123456

[root@k8s-master bin]# cd /opt/kubernetes/[root@k8s-master kubernetes]# export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d' ')[root@k8s-master kubernetes]# cat <<EOF > token.csv${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"EOF -

创建kubelet kubeconfig

-

123456789101112131415161718192021222324

[root@k8s-master kubernetes]# export KUBE_APISERVER="https://192.168.2.111:6443"设置集群参数[root@k8s-master kubernetes]# cd /root/software/ssl/[root@k8s-master ssl]# kubectl configset-cluster kubernetes \--certificate-authority=./ca.pem \--embed-certs=true\--server=${KUBE_APISERVER} \--kubeconfig=bootstrap.kubeconfig设置客户端认证参数[root@k8s-master ssl]# kubectl configset-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=bootstrap.kubeconfig设置上下文参数[root@k8s-master ssl]# kubectl configset-contextdefault\--cluster=kubernetes \--user=kubelet-bootstrap \--kubeconfig=bootstrap.kubeconfig设置默认上下文[root@k8s-master ssl]# kubectl config use-contextdefault--kubeconfig=bootstrap.kubeconfig

- 创建 kuby-proxy kubeconfig

-

12345678910111213141516171819

[root@k8s-master ssl]# kubectl configset-cluster kubernetes \--certificate-authority=./ca.pem \--embed-certs=true\--server=${KUBE_APISERVER} \--kubeconfig=kube-proxy.kubeconfig[root@k8s-master ssl]# kubectl configset-credentials kube-proxy \--client-certificate=./kube-proxy.pem \--client-key=./kube-proxy-key.pem \--embed-certs=true\--kubeconfig=kube-proxy.kubeconfig[root@k8s-master ssl]# kubectl configset-contextdefault\--cluster=kubernetes \--user=kube-proxy \--kubeconfig=kube-proxy.kubeconfig[root@k8s-master ssl]# kubectl config use-contextdefault\--kubeconfig=kube-proxy.kubeconfig

6.部署 Kube-apiserver

-

1234

[root@k8s-master ssl]# cd /root/kubernetes/server/bin/[root@k8s-master bin]# cp kube-controller-manager kube-scheduler kube-apiserver /opt/kubernetes/bin/[root@k8s-master bin]# cp /opt/kubernetes/token.csv /opt/kubernetes/cfg/[root@k8s-master bin]# cd /opt/kubernetes/bin -

上传master.zip到当前目录

-

123456789

[root@k8s-master bin]# unzip master.zipArchive: master.zipinflating: scheduler.shinflating: apiserver.shinflating: controller-manager.sh[root@k8s-master bin]# chmod +x *.sh[root@k8s-master bin]# ./apiserver.sh 192.168.2.111 https://192.168.2.111:2379,https://192.168.2.112:2379,https://192.168.2.113:2379Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

7.部署kube-controller-manager

-

12

[root@k8s-master bin]# sh controller-manager.sh 127.0.0.1Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

8.部署 kube-scheduler

-

12

[root@k8s-master bin]# sh scheduler.sh 127.0.0.1Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

9.测试组件是否运行正常

-

1234567

[root@k8s-master bin]# kubectlgetcsNAME STATUS MESSAGE ERRORscheduler Healthy oketcd-0 Healthy {"health":"true"}etcd-1 Healthy {"health":"true"}etcd-2 Healthy {"health":"true"}controller-manager Healthy ok

10.部署K8s-node 组件

- 准备环境

-

1234567891011121314151617181920212223242526272829303132333435

[root@k8s-master ~]# cd /root/software/ssl/[root@k8s-master ssl]# scp *kubeconfig 192.168.2.112:/opt/kubernetes/cfg/[root@k8s-master ssl]# scp *kubeconfig 192.168.2.113:/opt/kubernetes/cfg/[root@k8s-master ssl]# cd /root/kubernetes/server/bin[root@k8s-master bin]# scp kubelet kube-proxy 192.168.2.112:/opt/kubernetes/bin[root@k8s-master bin]# scp kubelet kube-proxy 192.168.2.113:/opt/kubernetes/bin[root@k8s-master bin]# kubectl create clusterrolebinding kubelet-bootstrap \--clusterrole=system:node-bootstrapper \--user=kubelet-bootstrapclusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created[root@k8s-master bin]# kubectl describe clusterrolebinding kubelet-bootstrapName: kubelet-bootstrapLabels: <none>Annotations: <none>Role:Kind: ClusterRoleName: system:node-bootstrapperSubjects:Kind Name Namespace---- ---- ---------User kubelet-bootstrap[root@k8s-node01 ~]# cd /opt/kubernetes/bin/上传node.zip[root@k8s-node01 bin]# unzip node.zipArchive: node.zipinflating: kubelet.shinflating: proxy.sh[root@k8s-node01 bin]# chmod +x *.sh[root@k8s-node01 bin]# sh kubelet.sh 192.168.2.112 192.168.2.254Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service. - 执行以下命令,两个节点主机都需要

-

123456789

[root@k8s-node02 ~]# cd /opt/kubernetes/bin/上传node.zip[root@k8s-node02 bin]# unzip node.zipArchive: node.zipinflating: kubelet.shinflating: proxy.sh[root@k8s-node02 bin]# chmod +x *.sh[root@k8s-node02 bin]# sh kubelet.sh 192.168.2.113 192.168.2.254Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

11.部署 kube-proxy

- 在两台node主机都需要执行

-

123456

[root@k8s-node01 bin]# sh proxy.sh 192.168.2.112Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.[root@k8s-node02 bin]# sh proxy.sh 192.168.2.113Created symlinkfrom/etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service. - 查看node节点组件是否安装成功

-

12

[root@k8s-node01 bin]# ps -ef | grep kube[root@k8s-node02 bin]# ps -ef | grep kube - 查看自动签发证书

-

12345678

[root@k8s-master bin]# kubectlgetcsrNAME AGE REQUESTOR CONDITIONnode-csr-7M_L1gX2uGXM3prE3ruXM3IJsafgqYlpOI07jBpSjnI 3m2s kubelet-bootstrap Pendingnode-csr-FK7fRGabCBuX0W-Gt_ofM4VK5F_ZgNaIFsdEn1eVOq0 108s kubelet-bootstrap Pending[root@k8s-master bin]# kubectl certificate approve node-csr-7M_L1gX2uGXM3prE3ruXM3IJsafgqYlpOI07jBpSjnIcertificatesigningrequest.certificates.k8s.io/node-csr-7M_L1gX2uGXM3prE3ruXM3IJsafgqYlpOI07jBpSjnI approved[root@k8s-master bin]# kubectl certificate approve node-csr-FK7fRGabCBuX0W-Gt_ofM4VK5F_ZgNaIFsdEn1eVOq0certificatesigningrequest.certificates.k8s.io/node-csr-FK7fRGabCBuX0W-Gt_ofM4VK5F_ZgNaIFsdEn1eVOq0 approved - 查看节点

-

1234

[root@k8s-master bin]# kubectlgetnodeNAME STATUS ROLES AGE VERSION192.168.2.112 Ready <none> 50s v1.17.3192.168.2.113 Ready <none> 23s v1.17.3

至此k8s集群部署成功

.jpg)

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· Manus的开源复刻OpenManus初探

· AI 智能体引爆开源社区「GitHub 热点速览」

· 三行代码完成国际化适配,妙~啊~

· .NET Core 中如何实现缓存的预热?