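Quickly Building a Log Platform with ELK

I. Introduction

1. Logs mainly fall into system logs, application logs, and security logs. Operations staff and developers use them to learn about a server's hardware and software, and to find configuration errors and their causes. Analyzing logs regularly reveals a server's load, performance, and security, so problems can be corrected promptly.

2. Logs are usually scattered across many devices. If you manage dozens or hundreds of servers and still read logs by logging in to each machine in turn, the work is tedious and inefficient. The first step is centralized log management, for example using the open-source syslog to collect the logs of all servers in one place.

3. Once logs are centralized, searching them and computing statistics over them becomes the next problem. Linux commands such as grep, awk, and wc handle basic searches and counts. They fall short for more demanding querying, sorting, and statistics, especially across a large number of machines.

4. The open-source real-time log analysis platform ELK addresses these problems. ELK consists of three open-source tools: Elasticsearch, Logstash, and Kibana. Official website: https://www.elastic.co/products

1. Elasticsearch is an open-source distributed search engine. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replicas, a RESTful interface, multiple data sources, and automatic search load balancing.

2. Logstash is a fully open-source tool that collects and parses your logs and stores them for later use (for example, searching).

3. Kibana is also a free, open-source tool. It provides a friendly web interface for analyzing the logs stored by Logstash and Elasticsearch, helping you aggregate, analyze, and search important log data.

10 - Installing ELK on a Mac

brew install elasticsearch (installs version 7.10.2)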

To have launchd start elasticsearch now and restart at login:

brew services start elasticsearch

Or, if you don't want/need a background service you can just run:

elasticsearch

==> Summary

🍺 /usr/local/Cellar/elasticsearch/7.10.2: 156 files, 113.5MB

==> Caveats

==> elasticsearch

Data: /usr/local/var/lib/elasticsearch/

Logs: /usr/local/var/log/elasticsearch/elasticsearch_yangjun.log

Plugins: /usr/local/var/elasticsearch/plugins/

Config: /usr/local/etc/elasticsearch/


yangjun@yangjun ~ %

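brew install logstash (the target is version 7.10.2)

Note: the Logstash version must match the Elasticsearch version. If Homebrew does not provide the matching version (the transcript below shows it installed 7.14.1), download the tar package yourself.

Download page: https://www.elastic.co/cn/downloads/past-releases/logstash-7-10-2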

yangjun@yangjun ~ % brew install logstash

==> Downloading https://ghcr.io/v2/homebrew/core/logstash/manifests/7.14.1

Already downloaded: /Users/yangjun/Library/Caches/Homebrew/downloads/bb7e451818765e37a15312cf7b96238736a51bef9ad2d895a32e73a34d1bbe4b--logstash-7.14.1.bottle_manifest.json

==> Downloading https://ghcr.io/v2/homebrew/core/logstash/blobs/sha256:02db87c4194fd1ff3f2dd864b7c1deb3350a0c9e434b4f182845e752f8e18c03

Already downloaded: /Users/yangjun/Library/Caches/Homebrew/downloads/45f170bdc70e11cbeb337c7ef6787baedbd3f2844a69d09df6a4a6d80b59c757--logstash--7.14.1.big_sur.bottle.tar.gz

==> Reinstalling logstash

==> Pouring logstash--7.14.1.big_sur.bottle.tar.gz

==> Caveats

Configuration files are located in /usr/local/etc/logstash/

To start logstash:

brew services start logstash

Or, if you don't want/need a background service you can just run:

/usr/local/opt/logstash/bin/logstash

==> Summary

🍺 /usr/local/Cellar/logstash/7.14.1: 12,576 files, 289.1MB

yangjun@yangjun ~ %

Add logstash.conf to the config directory:

input {

beats {

port => 5044

}

tcp {

host => "127.0.0.1"

port => 9250

mode => "server"

tags => ["tags"]

codec => json_lines

}

}

output {

elasticsearch {

hosts => ["http://localhost:9200"]

#index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"

}

stdout { codec => rubydebug }

}
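The tcp input above listens on 127.0.0.1:9250 with codec => json_lines. It expects one JSON object per line, with each object terminated by a newline. Below is a minimal Python sketch of an application shipping events to that input. The function names and sample event fields are hypothetical and not part of the original setup.

```python
import json
import socket


def to_json_line(event: dict) -> bytes:
    """Encode one event as the json_lines codec expects:
    a single JSON document terminated by a newline."""
    return (json.dumps(event, ensure_ascii=False) + "\n").encode("utf-8")


def send_events(events, host: str = "127.0.0.1", port: int = 9250,
                timeout: float = 5.0) -> None:
    """Send each event as its own line over one TCP connection
    to the Logstash tcp input (mode => "server")."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        for event in events:
            sock.sendall(to_json_line(event))
```

Start Logstash with this config, then call `send_events([{"level": "INFO", "message": "hello"}])`. The event should be printed by the rubydebug stdout output and indexed into Elasticsearch.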

Start Logstash: ./bin/logstash -f ./config/logstash.conf

brew install kibana (installs version 7.10.2)

yangjun@yangjun ~ % brew install kibana

Updating Homebrew...

==> Auto-updated Homebrew!

Updated 2 taps (homebrew/core and homebrew/cask).

==> New Formulae

jpdfbookmarks

==> Updated Formulae

Updated 7 formulae.

==> Updated Casks

Updated 12 casks.

Warning: node@10 has been deprecated because it is not supported upstream!

==> Downloading https://ghcr.io/v2/homebrew/core/node/10/manifests/10.24.1_1

######################################################################## 100.0%

==> Downloading https://ghcr.io/v2/homebrew/core/node/10/blobs/sha256:84095e53ee

==> Downloading from https://pkg-containers.githubusercontent.com/ghcr1/blobs/sh

######################################################################## 100.0%

==> Downloading https://ghcr.io/v2/homebrew/core/kibana/manifests/7.10.2

######################################################################## 100.0%

==> Downloading https://ghcr.io/v2/homebrew/core/kibana/blobs/sha256:c218ab10fca

==> Downloading from https://pkg-containers.githubusercontent.com/ghcr1/blobs/sh

######################################################################## 100.0%

Warning: kibana has been deprecated because it is switching to an incompatible license!

==> Installing dependencies for kibana: node@10

==> Installing kibana dependency: node@10

==> Pouring node@10--10.24.1_1.big_sur.bottle.tar.gz

🍺 /usr/local/Cellar/node@10/10.24.1_1: 4,308 files, 53MB

==> Installing kibana

==> Pouring kibana--7.10.2.big_sur.bottle.tar.gz

==> Caveats

Config: /usr/local/etc/kibana/

If you wish to preserve your plugins upon upgrade, make a copy of

/usr/local/opt/kibana/plugins before upgrading, and copy it into the

new keg location after upgrading.

To have launchd start kibana now and restart at login:

brew services start kibana

Or, if you don't want/need a background service you can just run:

kibana

==> Summary

🍺 /usr/local/Cellar/kibana/7.10.2: 29,153 files, 300.8MB


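Installing ELK on a Mac

Preparation

1. On a Mac, the most convenient way to install is with Homebrew, so install Homebrew first.

2. ELK needs a Java 8 environment; run java -version to check whether the current Java is Java 8. (Elasticsearch 7.x ships with its own bundled JDK.)

Install Elasticsearch

brew install elasticsearch && brew info elasticsearch

Start/stop/restart Elasticsearch:

brew services start elasticsearch

brew services stop elasticsearch

brew services restart elasticsearch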

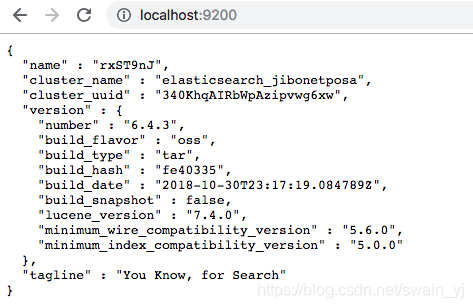

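Once it has started, use your browser to check that it is running on localhost on the default port: http://localhost:9200

The response should be a short JSON document with the node name, the cluster name, and version information.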
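You can also script this check. Below is a minimal sketch using only the Python standard library. It assumes Elasticsearch listens on http://localhost:9200 without authentication. `parse_es_info` and `fetch_es_info` are hypothetical helper names.

```python
import json
import urllib.request


def parse_es_info(body: str) -> dict:
    """Extract the cluster name and version from the JSON returned by GET /."""
    info = json.loads(body)
    return {
        "cluster_name": info.get("cluster_name"),
        "version": info.get("version", {}).get("number"),
    }


def fetch_es_info(url: str = "http://localhost:9200", timeout: float = 5.0) -> dict:
    """Call the Elasticsearch root endpoint and summarize the response."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_es_info(resp.read().decode("utf-8"))
```

With Elasticsearch running, `print(fetch_es_info())` should show the installed version, e.g. 7.10.2 for the Homebrew install above.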

安装Logstash:

brew install logstash

启动/关闭/重启logstash

brew services start logstash

brew services stop logstash

brew services restart logstash

由于我们尚未配置Logstash管道,因此启动Logstash不会产生任何有意义的结果。我们将在下面的另一个步骤中返回配置Logstash。

安装Kibana

brew install kibana

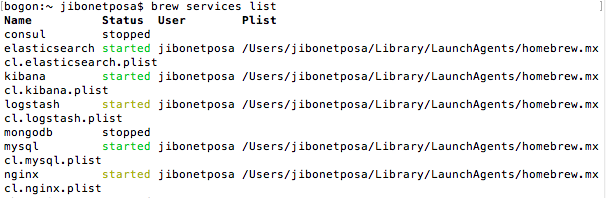

启动Kibana并检查所有ELK服务是否正在运行:

brew services start kibana

brew services list

Kibana需要进行一些配置更改才能正常工作,打开Kibana配置文件:kibana.yml

sudo vi /usr/local/etc/kibana/kibana.yml

取消注释用于定义Kibana端口和Elasticsearch实例的指令:

server.port: 5601

elasticsearch.url: "http://localhost:9200”

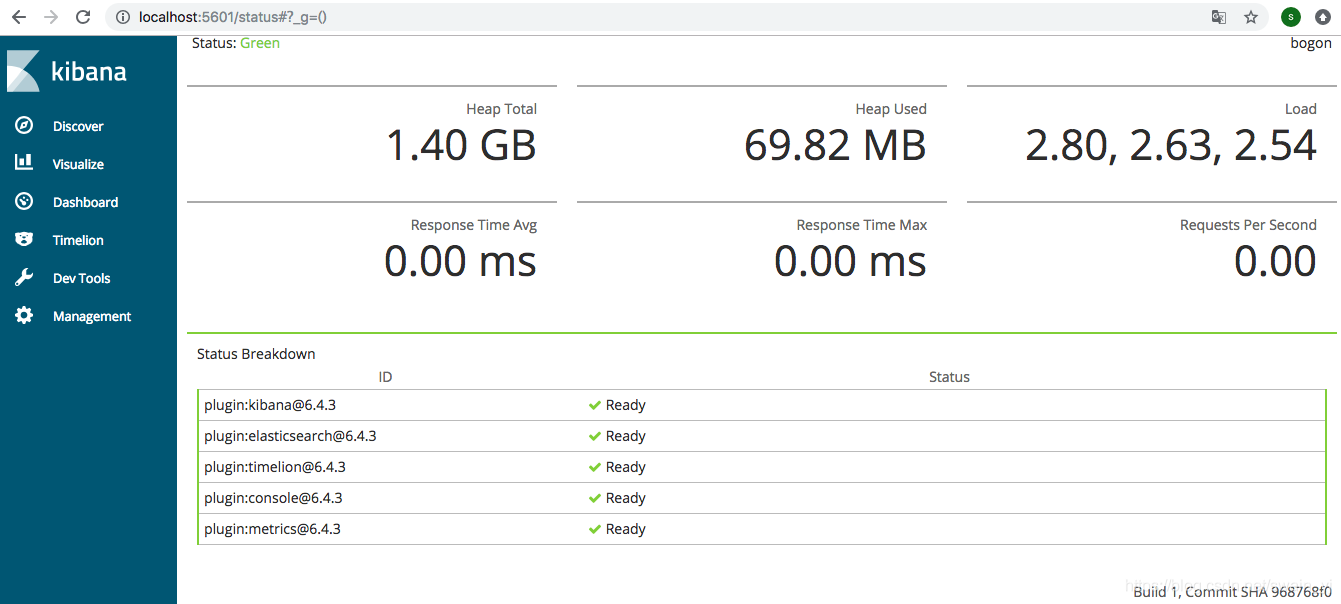

如果一切顺利,请在localhost:5601/status打开Kibana。你应该看到这样的东西:

恭喜,您已经在Mac上成功安装了ELK!

发送一些数据

您已准备好开始向Elasticsearch发送数据并享受堆栈提供的所有优点。下面是一个Logstash管道将syslog日志发送到堆栈的示例。 首先,您需要创建一个新的Logstash配置文件:

sudo vim /etc/logstash/conf.d/syslog.conf

输入以下配置:

input {

file {

path => [ "/var/log/*.log", "/var/log/messages", "/var/log/syslog" ]

type => "syslog"

}

}

filter {

if [type] == "syslog" {

grok {

match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }

add_field => [ "received_at", "%{@timestamp}" ]

add_field => [ "received_from", "%{host}" ]

}

syslog_pri { }

date {

match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]

}

}

}

output {

elasticsearch {

hosts => ["127.0.0.1:9200"]

index => "syslog-demo"

}

stdout { codec => rubydebug }

}
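Below is a rough Python approximation of the grok pattern above, to show what the filter extracts. The regular expression only approximates the SYSLOGTIMESTAMP, SYSLOGHOST, DATA, POSINT, and GREEDYDATA definitions from logstash-patterns-core. The sample lines are made up. Syslog timestamps carry no year, so the helper takes the year as a parameter; the date filter has to infer it as well.

```python
import re
from datetime import datetime

# Rough equivalent of:
# %{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname}
# %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}
SYSLOG_RE = re.compile(
    r"^(?P<syslog_timestamp>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<syslog_hostname>\S+) "
    r"(?P<syslog_program>.*?)(?:\[(?P<syslog_pid>\d+)\])?: "
    r"(?P<syslog_message>.*)$"
)


def parse_syslog_line(line: str):
    """Return the captured fields as a dict, or None if the line does not match
    (grok would tag such an event with _grokparsefailure)."""
    m = SYSLOG_RE.match(line)
    return m.groupdict() if m else None


def parse_syslog_time(ts: str, year: int) -> datetime:
    """Parse 'MMM d HH:mm:ss' / 'MMM dd HH:mm:ss', supplying the missing year."""
    return datetime.strptime(f"{year} {ts}", "%Y %b %d %H:%M:%S")
```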

然后重启logstash.

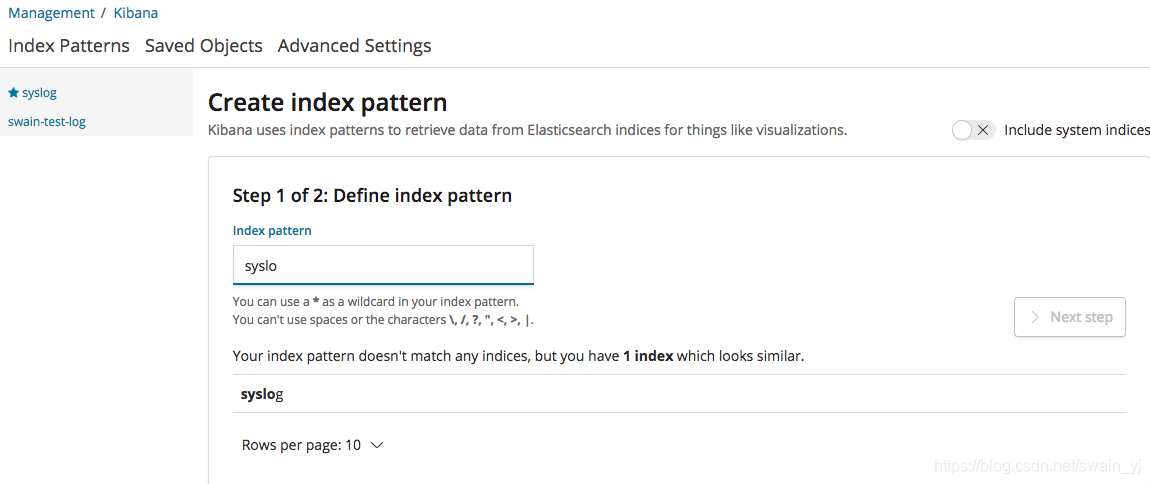

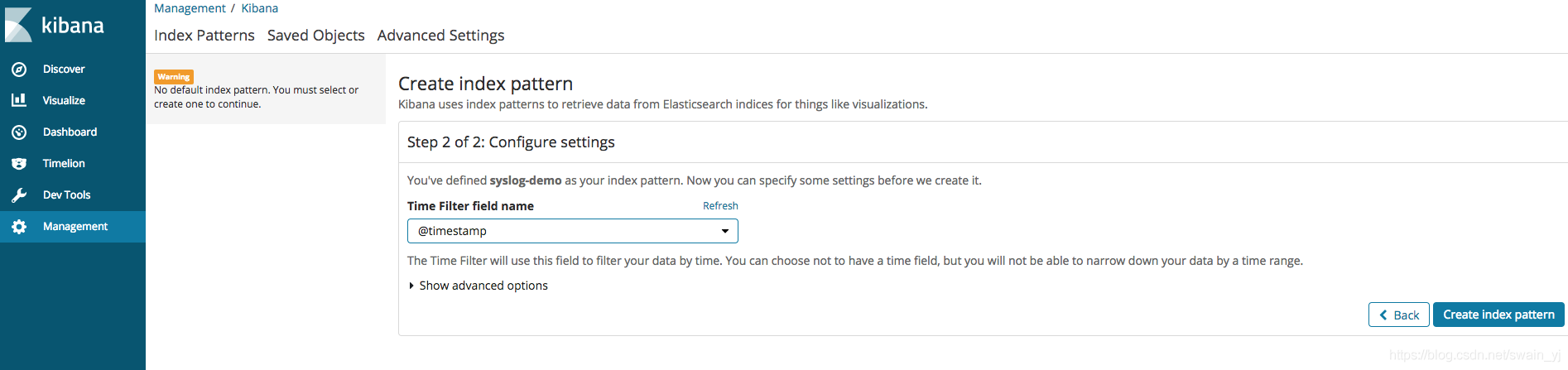

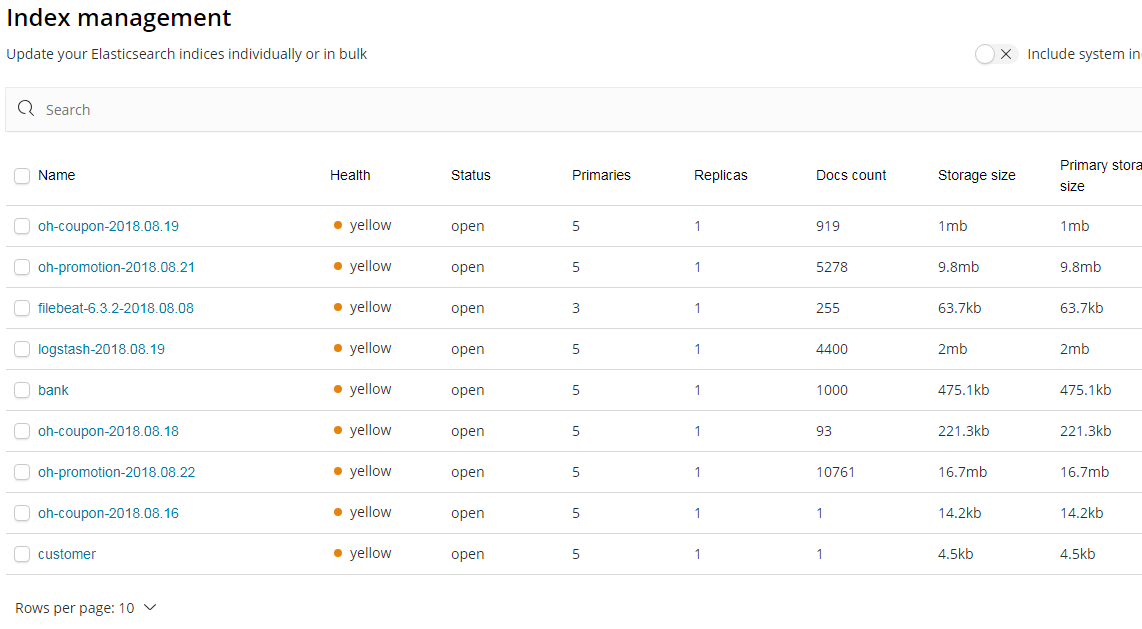

在Kibana的Management选项卡中,您应该看到由新的Logstash管道创建的新创建的“syslog-demo”索引。

将其作为index pattern输入,然后在下一步中选择@timestamp字段作为时间过滤器字段名称。

我们都准备好了!打开Discover页面,您将在Kibana中看到syslog数据。

mac下elk的安装-end

在需要收集日志的所有服务上部署logstash,作为logstash agent(logstash shipper)用于监控并过滤收集日志,

将过滤后的内容发送到logstash indexer,logstash indexer将日志收集在一起交给全文搜索服务ElasticSearch,

可以用ElasticSearch进行自定义搜索通过Kibana 来结合自定义搜索进行页面展示。

二、 安装ElasticSearch

1、 安装jdk

wget http://download.oracle.com/otn-pub/java/jdk/8u45-b14/jdk-8u45-linux-x64.tar.gz

mkdir /usr/local/java

tar -zxf jdk-8u45-linux-x64.tar.gz -C /usr/local/java/

export JAVA_HOME=/usr/local/java/jdk1.8.0_4

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$CLASSPATH

2. Install Elasticsearch

wget https://download.elasticsearch.org/elasticsearch/release/org/elasticsearch/distribution/tar/elasticsearch/2.2.0/elasticsearch-2.2.0.tar.gz

Extract: tar -zxf elasticsearch-2.2.0.tar.gz -C ./

Install the Elasticsearch head plugin:

cd /data/program/software/elasticsearch-2.2.0

./bin/plugin install mobz/elasticsearch-head

Output:

Install the Elasticsearch kopf plugin:

./bin/plugin install lmenezes/elasticsearch-kopf

Output:

Create the Elasticsearch data and logs directories:

mkdir data

mkdir logs

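Configure Elasticsearch:

cd config/

Back up the original file:

cp elasticsearch.yml elasticsearch.yml_back

Edit the configuration file:

vim elasticsearch.yml

Use the following settings:

cluster.name: dst98    # cluster name

node.name: node-1

path.data: /data/program/software/elasticsearch-2.2.0/data

path.logs: /data/program/software/elasticsearch-2.2.0/logs

network.host: 10.15.0.98    # IP address of this host

http.port: 9200    # HTTP port (the setting is http.port, not network.port)

Start Elasticsearch: ./bin/elasticsearch

It fails with the following error, which means Elasticsearch must not be started as root. Create a regular user and start it as that user: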

[root@dst98 elasticsearch-2.2.0]# ./bin/elasticsearch

Exception in thread "main" java.lang.RuntimeException: don't run elasticsearch as root.

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:93)

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:144)

at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:285)

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:35)

Refer to the log for complete error details.

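Add the user and group:

#groupadd search

#useradd -g search search

Change the owner and group of the Elasticsearch directory, including data and logs, to search:

#chown -R search:search /data/program/software/elasticsearch-2.2.0

Then switch to that user and start Elasticsearch:

su search

./bin/elasticsearch

To run it in the background: nohup ./bin/elasticsearch &

Once it has started, open http://10.15.0.98:9200 in a browser to check it.

With the head plugin installed you can view information about the cluster at http://10.15.0.98:9200/_plugin/head/.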

III. Installing Kibana

Download Kibana:

wget https://download.elastic.co/kibana/kibana/kibana-4.4.0-linux-x64.tar.gz

Extract:

tar -zxf kibana-4.4.0-linux-x64.tar.gz -C ./

Rename:

mv kibana-4.4.0-linux-x64 kibana-4.4.0

Back up the configuration file first:

cd /data/program/software/kibana-4.4.0/config

cp kibana.yml kibana.yml_back

Edit the configuration file:

server.port: 5601

server.host: "10.15.0.98"

elasticsearch.url: "http://10.15.0.98:9200"    # IP address of the Elasticsearch server

kibana.defaultAppId: "discover"

elasticsearch.requestTimeout: 300000

elasticsearch.shardTimeout: 0

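Start Kibana:

nohup ./bin/kibana &

IV. Configuring Logstash

Download Logstash onto every server whose logs are to be collected, as well as onto the ELK server.

wget https://download.elastic.co/logstash/logstash/logstash-2.2.0.tar.gz

Extract: tar -zxf logstash-2.2.0.tar.gz -C ./

Run the following command to test it: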

./bin/logstash -e 'input { stdin{} } output { stdout {} }'

Logstash startup completed

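Hello World!    # typed input

2015-07-15T03:28:56.938Z noc.vfast.com Hello World!    # resulting output

Note: the -e flag lets Logstash take its configuration directly from the command line. Press CTRL-C to stop the running Logstash.

1. Configure Logstash on the Elasticsearch host to read logs from Redis and write them to Elasticsearch

Go to the Logstash directory and create a new configuration file:

cd logstash-2.2.0

touch logstash-indexer.conf    # any file name works

Write the following configuration into the new file:

Add more input and output sections as needed, depending on the number of log servers.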

input {

redis {

data_type => "list"

key => "mid-dst-oms-155"

host => "10.15.0.96"

port => 6379

db => 0

threads => 10

}

}

output {

if [type] == "mid-dst-oms-155"{

elasticsearch {

hosts => "10.15.0.98"

index => "mid-dst-oms-155"

codec => "json"

}

}

}

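Start Logstash:

nohup ./bin/logstash -f logstash-indexer.conf -l logs/logstash.log &

2. Configure Logstash on the client to read logs and write them to Redis

Go to the Logstash directory and create a new configuration file:

cd logstash-2.2.0

touch logstash_agent.conf    # any file name works

Write the following configuration into the new file:

Add more input and output sections as needed, depending on the number of log servers.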

input {

file {

path => ["/data/program/logs/MID-DST-OMS/mid-dst-oms.txt"]

type => "mid-dst-oms-155"

}

}

output{

redis {

host => "125.35.5.98"

port => 6379

data_type => "list"

key => "mid-dst-oms-155"

}

}

Start Logstash:

nohup ./bin/logstash -f logstash_agent.conf -l logs/logstash.log &
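In this setup Redis is a buffer between the two Logstash instances. The agent appends events to the Redis list named by key, and the indexer takes them off the other end and routes them by type. Both sides must therefore point at the same Redis instance and use the same key. The toy model below is plain Python, with a deque standing in for the Redis list. It only illustrates the data flow and is not how the Logstash plugins are implemented.

```python
import json
from collections import deque


def ship(line: str, log_type: str, broker: deque) -> None:
    """Agent side: wrap a raw log line in an event and append it to the list."""
    broker.append(json.dumps({"message": line, "type": log_type}))


def index_all(broker: deque) -> dict:
    """Indexer side: take events from the head of the list (FIFO) and group
    them by target index, like the `if [type] == ...` block in the output."""
    indices = {}
    while broker:
        event = json.loads(broker.popleft())
        if event["type"] == "mid-dst-oms-155":
            indices.setdefault("mid-dst-oms-155", []).append(event["message"])
        # Events whose type matches no branch are not indexed anywhere.
    return indices
```

Because the indexer drains the list independently of the agents, short Elasticsearch outages only make the list grow instead of losing events (as long as Redis has enough memory).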

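Notes:

Settings of the Logstash file input:

1. start_position: set to beginning to read a file from its start (this only applies to files that have no recorded read position yet).

2. path: the path(s) of the files to read.

3. type: a custom type such as tradelog; any value can be used.

4. codec: how the input is decoded. For example, codec => plain { charset => "GB2312" } reads GB2312-encoded files; UTF-8 and other charsets work the same way.

5. discover_interval: how often to check the watched path for new files; the default is 15 seconds.

6. sincedb_path: the file that records how far each source file has been read; in Logstash 2.x the default is a hidden file matching $HOME/.sincedb*.

7. sincedb_write_interval: how often the read position is written to the sincedb; the default is every 15 seconds.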
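start_position and the sincedb work together. The sincedb remembers how far each file has been read, and start_position only decides where to begin for a file that has no entry yet. The toy Python function below illustrates the idea for a single file, storing one byte offset in a hypothetical sincedb file. The real file input also tracks file identity (inode), handles rotation, and writes its sincedb periodically.

```python
import os


def read_new_lines(path: str, sincedb: str, start_position: str = "beginning") -> list:
    """Return the complete lines appended to `path` since the last call.

    The byte offset already consumed is kept in the `sincedb` file.
    `start_position` is only consulted when no offset has been recorded yet,
    mirroring the file input's start_position semantics.
    """
    if os.path.exists(sincedb):
        with open(sincedb) as f:
            offset = int(f.read().strip() or 0)
    elif start_position == "end":
        offset = os.path.getsize(path)  # skip existing content, tail only
    else:
        offset = 0  # "beginning": read the whole file once

    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()

    # Consume only whole lines; a trailing partial line waits for the next call.
    complete, sep, _ = data.rpartition(b"\n")
    with open(sincedb, "w") as f:
        f.write(str(offset + len(complete) + len(sep)))
    if not sep:
        return []
    return complete.decode("utf-8").split("\n")
```

Deleting the sincedb file makes the function start over according to start_position, which is why clearing it is the usual way to force a full re-read.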

2. 前言

2.1. 现状

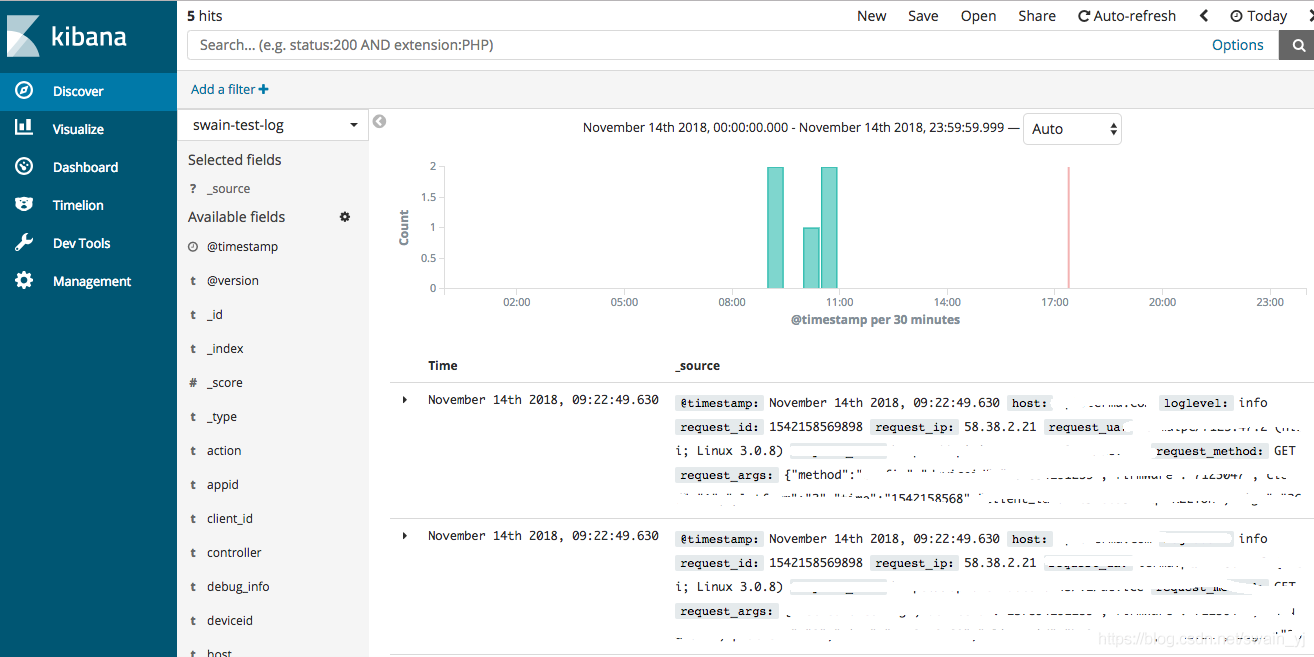

以前,查看日志都是通过SSH客户端登服务器去看,使用较多的命令就是 less 或者 tail。如果服务部署了好几台,就要分别登录到这几台机器上看,还要注意日志打印的时间(比如,有可能一个操作过来产生好的日志,这些日志还不是在同一台机器上,此时就需要根据时间的先后顺序推断用户的操作行为,就要在这些机器上来回切换)。而且,搜索的时候不方便,必须对vi,less这样的命令很熟悉,还容易看花了眼。为了简化日志检索的操作,可以将日志收集并索引,这样方便多了,用过Lucene的人应该知道,这种检索效率是很高的。基本上每个互联网公司都会有自己的日志管理平台和监控平台(比如,Zabbix),无论是自己搭建的,还是用的阿里云这样的云服务提供的,反正肯定有。下面,我们利用ELK搭建一个相对真实的日志管理平台。

2.2. 日志格式

我们的日志,现在是这样的:

每条日志的格式,类似于这样:

2018-08-22 00:34:51.952 [INFO ] [org.springframework.kafka.KafkaListenerEndpointContainer#0-1-C-1] [com.cjs.handler.MessageHandler][39] - 监听到注册事件消息:

2.3. logback.xml

2.4. 环境介绍

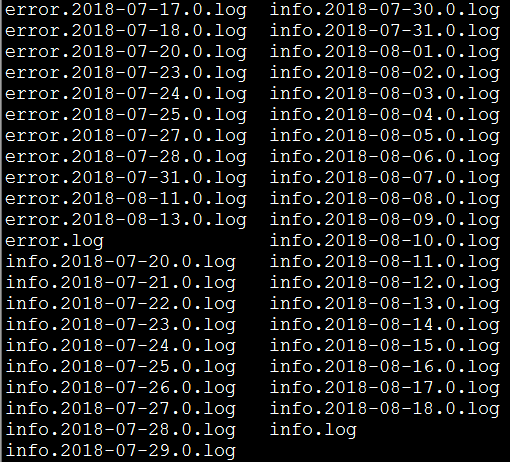

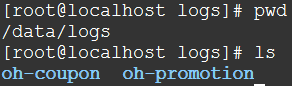

在本例中,各个系统的日志都在/data/logs/${projectName},比如:

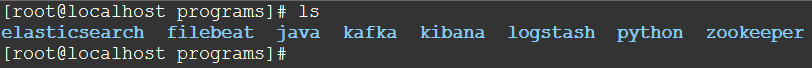

Filebeat,Logstash,Elasticsearch,Kibana都在一台虚拟机上,而且都是单实例,而且没有别的中间件

由于,日志每天都会归档,且实时日志都是输出在info.log或者error.log中,所以Filebeat采集的时候只需要监视这两个文件即可。

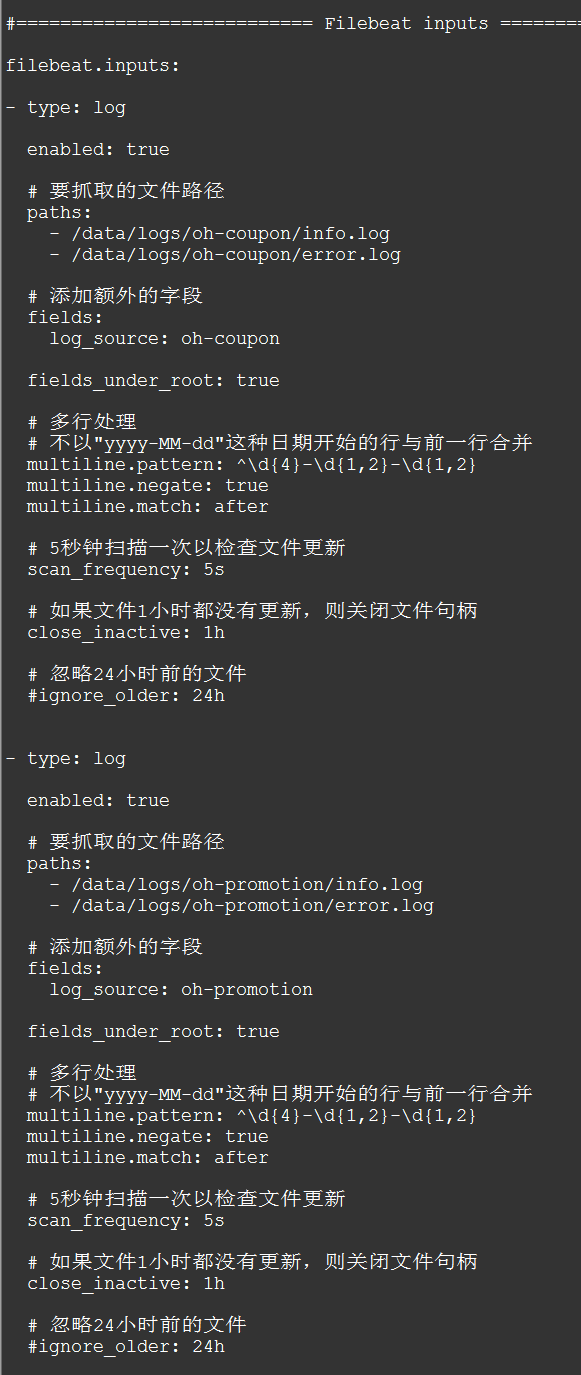

3. Filebeat配置

Filebeat的主要配置在于filebeat.yml配置文件中的 filebeat.inputs 和 output.logstash 区域:

#=========================== Filebeat inputs =============================

filebeat.inputs:

- type: log

enabled: true

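# Paths of the files to collect

paths:

- /data/logs/oh-coupon/info.log

- /data/logs/oh-coupon/error.log

# Add extra fields

fields:

log_source: oh-coupon

fields_under_root: true

# Multiline handling

# Lines that do not start with a date such as "yyyy-MM-dd" are appended to the previous line

multiline.pattern: ^\d{4}-\d{1,2}-\d{1,2}

multiline.negate: true

multiline.match: after

# Scan for file updates every 5 seconds

scan_frequency: 5s

# Close the file handle if the file has not been updated for 1 hour

close_inactive: 1h

# Ignore files older than 24 hours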

#ignore_older: 24h

- type: log

enabled: true

paths:

- /data/logs/oh-promotion/info.log

- /data/logs/oh-promotion/error.log

fields:

log_source: oh-promotion

fields_under_root: true

multiline.pattern: ^\d{4}-\d{1,2}-\d{1,2}

multiline.negate: true

multiline.match: after

scan_frequency: 5s

close_inactive: 1h

ignore_older: 24h

#================================ Outputs =====================================

#-------------------------- Elasticsearch output ------------------------------

#output.elasticsearch:

# Array of hosts to connect to.

# hosts: ["localhost:9200"]

# Optional protocol and basic auth credentials.

#protocol: "https"

#username: "elastic"

#password: "changeme"

#----------------------------- Logstash output --------------------------------

output.logstash:

# The Logstash hosts

hosts: ["localhost:5044"]

# Optional SSL. By default is off.

# List of root certificates for HTTPS server verifications

#ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

# Certificate for SSL client authentication

#ssl.certificate: "/etc/pki/client/cert.pem"

# Client Certificate Key

#ssl.key: "/etc/pki/client/cert.key"
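The three multiline settings work together. A line that does not match ^\d{4}-\d{1,2}-\d{1,2} (negate: true) is appended after the preceding line that does (match: after). A Java stack trace therefore stays inside the event of the log line that produced it. Below is a small Python sketch of that grouping rule. It ignores Filebeat's multiline.max_lines and multiline.timeout limits, and the sample lines are made up.

```python
import re

PATTERN = re.compile(r"^\d{4}-\d{1,2}-\d{1,2}")


def merge_multiline(lines):
    """Group raw lines into events like `multiline.negate: true` +
    `multiline.match: after`: a line that does NOT match the pattern
    is appended to the event started by the preceding matching line."""
    events = []
    for line in lines:
        if PATTERN.match(line) or not events:
            events.append(line)  # a matching line starts a new event
        else:
            events[-1] += "\n" + line  # continuation line
    return events
```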

4. Logstash配置

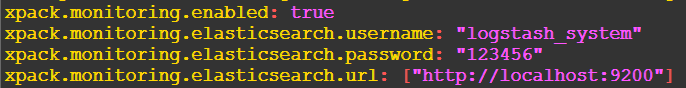

4.1. logstash.yml

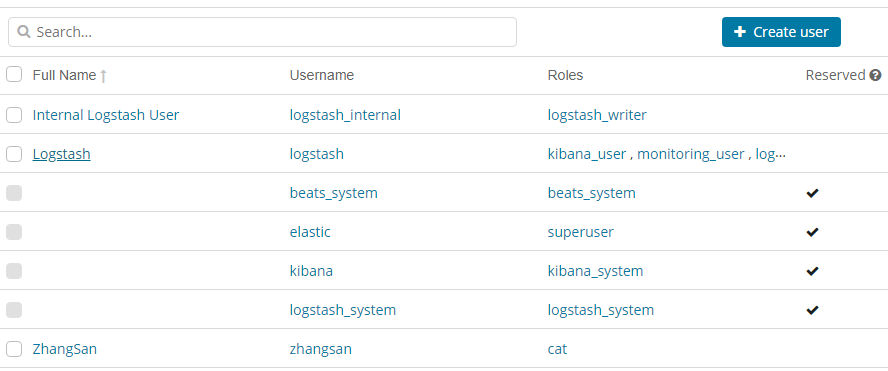

# X-Pack Monitoring # https://www.elastic.co/guide/en/logstash/current/monitoring-logstash.html xpack.monitoring.enabled: true xpack.monitoring.elasticsearch.username: "logstash_system" xpack.monitoring.elasticsearch.password: "123456" xpack.monitoring.elasticsearch.url: ["http://localhost:9200"]

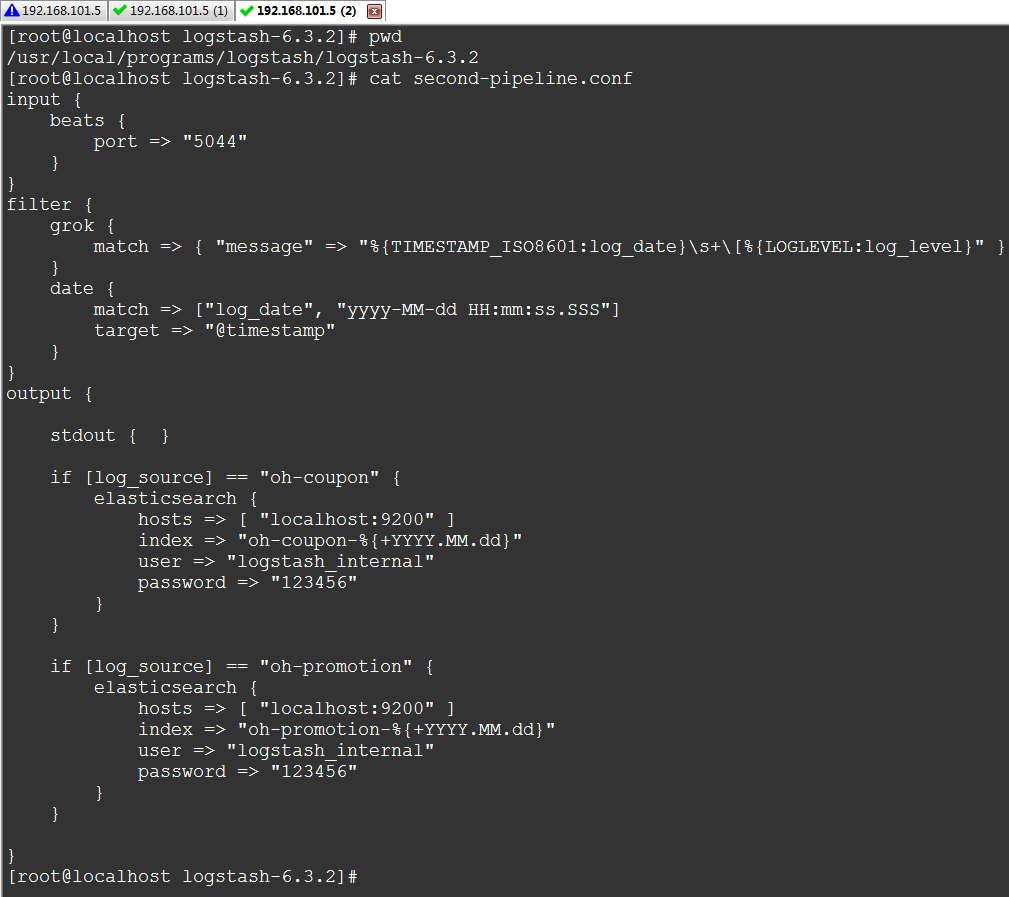

4.2. 管道配置

input {

beats {

port => "5044"

}

}

filter {

grok {

match => { "message" => "%{TIMESTAMP_ISO8601:log_date}\s+\[%{LOGLEVEL:log_level}" }

}

date {

match => ["log_date", "yyyy-MM-dd HH:mm:ss.SSS"]

target => "@timestamp"

}

}

output {

if [log_source] == "oh-coupon" {

elasticsearch {

hosts => [ "localhost:9200" ]

index => "oh-coupon-%{+YYYY.MM.dd}"

user => "logstash_internal"

password => "123456"

}

}

if [log_source] == "oh-promotion" {

elasticsearch {

hosts => [ "localhost:9200" ]

index => "oh-promotion-%{+YYYY.MM.dd}"

user => "logstash_internal"

password => "123456"

}

}

}
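The output section sends each event to a daily index chosen by log_source. The date in %{+YYYY.MM.dd} comes from @timestamp, which is always in UTC. With logs written in UTC+8, an entry logged shortly after midnight local time therefore lands in the previous day's index. Below is a minimal Python sketch of that routing; target_index is a hypothetical name.

```python
from datetime import datetime, timezone

ROUTED_SOURCES = ("oh-coupon", "oh-promotion")


def target_index(event: dict):
    """Return the index an event would be written to, or None when no
    output branch matches (the event is then not stored)."""
    source = event.get("log_source")
    if source not in ROUTED_SOURCES:
        return None
    ts = datetime.strptime(event["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)
    # %{+YYYY.MM.dd} formats @timestamp, which is in UTC.
    return f"{source}-{ts:%Y.%m.%d}"
```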

4.3. Plugins

Logstash has many plugins for input, filtering, and output.

Logstash plugins were not covered in my earlier articles. Since they are all configuration, I won't write a separate article about them, but they deserve a brief mention here. The following pages are particularly helpful:

https://www.elastic.co/guide/en/logstash/current/input-plugins.html

https://www.elastic.co/guide/en/logstash/current/plugins-inputs-beats.html

https://www.elastic.co/guide/en/logstash/current/plugins-inputs-kafka.html

https://www.elastic.co/guide/en/logstash/current/filebeat-modules.html

https://www.elastic.co/guide/en/logstash/current/output-plugins.html

https://www.elastic.co/guide/en/logstash/current/logstash-config-for-filebeat-modules.html

https://www.elastic.co/guide/en/logstash/current/filter-plugins.html

This example uses the input plugin beats, the filter plugins grok and date, and the output plugin elasticsearch.

The most important of these is grok, which extracts the fields we want from a message.
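For the log format shown in 2.2, the grok filter in 4.2 captures log_date and log_level. The date filter then parses log_date with yyyy-MM-dd HH:mm:ss.SSS and stores the result in @timestamp as UTC. The Python sketch below approximates those two steps. It assumes the logs are written in UTC+8; the date filter itself uses the Logstash host's time zone unless its timezone option is set.

```python
import re
from datetime import datetime, timedelta, timezone

# Approximates %{TIMESTAMP_ISO8601:log_date}\s+\[%{LOGLEVEL:log_level}
# for the exact "yyyy-MM-dd HH:mm:ss.SSS" layout used in these logs.
LINE_RE = re.compile(
    r"^(?P<log_date>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\s+"
    r"\[(?P<log_level>[A-Z]+)"
)

# Assumption: the application writes local time in UTC+8.
LOG_TZ = timezone(timedelta(hours=8))


def parse_event(line: str, tz: timezone = LOG_TZ) -> dict:
    m = LINE_RE.match(line)
    if m is None:
        # grok tags events it cannot match with _grokparsefailure.
        return {"message": line, "tags": ["_grokparsefailure"]}
    log_date = m.group("log_date")
    local = datetime.strptime(log_date, "%Y-%m-%d %H:%M:%S.%f").replace(tzinfo=tz)
    utc = local.astimezone(timezone.utc)
    return {
        "message": line,
        "log_date": log_date,
        "log_level": m.group("log_level"),
        # @timestamp is stored in UTC with millisecond precision.
        "@timestamp": utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z",
    }
```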

grok

https://www.elastic.co/guide/en/logstash/current/plugins-filters-grok.html

https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/grok-patterns

date

Field references

5. Elasticsearch configuration

5.1. elasticsearch.yml

xpack.security.enabled: true

All other settings are left at their defaults.
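With xpack.security.enabled: true, every request to Elasticsearch must carry credentials. That is why the Logstash output and kibana.yml specify a user and password. As a sketch, this is how a script might call the REST API with HTTP basic authentication using Python's standard library. The user and password values are placeholders.

```python
import base64
import urllib.request


def authed_request(url: str, username: str, password: str) -> urllib.request.Request:
    """Build a request carrying an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    req = urllib.request.Request(url)
    req.add_header("Authorization", f"Basic {token}")
    return req
```

For example, `urllib.request.urlopen(authed_request("http://localhost:9200/_cat/indices?v", "elastic", "<password>"))` should list the indices, including the daily oh-coupon-* and oh-promotion-* ones.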

6. Kibana configuration

6.1. kibana.yml

server.port: 5601

server.host: "192.168.101.5"

elasticsearch.url: "http://localhost:9200"

kibana.index: ".kibana"

elasticsearch.username: "kibana"

elasticsearch.password: "123456"

xpack.security.enabled: true

xpack.security.encryptionKey: "4297f44b13955235245b2497399d7a93"
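xpack.security.encryptionKey must be at least 32 characters long. Rather than reusing the value above, you can generate a random key with Python's secrets module; 16 random bytes give 32 hex characters:

```python
import secrets


def make_encryption_key(n_bytes: int = 16) -> str:
    """Return a random hex string; each byte becomes two hex characters."""
    return secrets.token_hex(n_bytes)
```

Paste the printed value of `make_encryption_key()` into kibana.yml and keep it the same across Kibana restarts.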

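7. Starting the services

7.1. Start Elasticsearch

[root@localhost ~]# su - cheng

[cheng@localhost ~]$ cd $ES_HOME

[cheng@localhost elasticsearch-6.3.2]$ bin/elasticsearch

7.2. Start Kibana

[cheng@localhost kibana-6.3.2-linux-x86_64]$ bin/kibana

7.3. Start Logstash

[root@localhost logstash-6.3.2]# bin/logstash -f second-pipeline.conf --config.test_and_exit

[root@localhost logstash-6.3.2]# bin/logstash -f second-pipeline.conf --config.reload.automatic

The first command only validates the pipeline configuration and exits. The second starts Logstash and reloads the pipeline automatically when the file changes.

7.4. Start Filebeat

[root@localhost filebeat-6.3.2-linux-x86_64]# rm -f data/registry

[root@localhost filebeat-6.3.2-linux-x86_64]# ./filebeat -e -c filebeat.yml -d "publish"

Deleting data/registry makes Filebeat forget the read positions it has recorded, so the log files are read again from the beginning. The -e flag sends Filebeat's own log output to stderr, and -d "publish" enables debug output for the publish selector.

8. Demo

9. References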

https://www.cnblogs.com/liuxinan/p/5336971.html