Scraping the main board's P/E ratio, P/B ratio and dividend yield

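Approach:

1. Analyze how the China Securities Index Co., Ltd. website (csindex.com.cn) stores its data.

2. Get the list of trading days, then build a page URL for each of them.

3. Visit the URLs one by one and extract the data.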

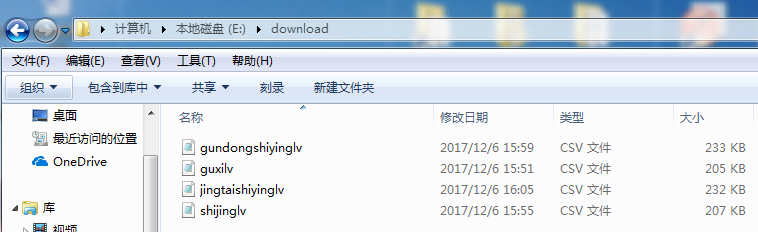

4. Save the data as CSV files.
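Step 2 can be sketched with the standard library's `urllib.parse.urlencode`. This assumes the trading days are already available as 'YYYY-MM-DD' strings; the script in this post gets them from tushare. The pairing of type codes with output names (zy4 dividend yield, zy3 P/B, zy2 rolling P/E, zy1 static P/E) is read off the script's `main()`. `build_urls`, `BASE` and `TYPE_CODES` are hypothetical names, and this sketch does not check whether csindex.com.cn still serves these pages.

```python
from urllib.parse import urlencode

# Page address taken from the script in this post (hypothetical constant name).
BASE = 'http://www.csindex.com.cn/zh-CN/downloads/industry-price-earnings-ratio'

# Output name -> "type" query value, as paired in the script's main().
TYPE_CODES = {
    'guxilv': 'zy4',            # dividend yield
    'shijinglv': 'zy3',         # P/B
    'gundongshiyinglv': 'zy2',  # rolling P/E
    'jingtaishiyinglv': 'zy1',  # static P/E
}


def build_urls(trading_days):
    """Map each output name to one URL per trading day ('YYYY-MM-DD' strings)."""
    return {
        name: [BASE + '?' + urlencode({'type': code, 'date': day}) for day in trading_days]
        for name, code in TYPE_CODES.items()
    }


if __name__ == '__main__':
    urls = build_urls(['2018-01-02', '2018-01-03'])
    print(urls['guxilv'][0])
```

`urlencode` keeps the parameter order of the dict, so the result matches the string concatenation used in the script while also escaping any unusual characters.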

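Techniques used:

1. HTML parsing with BeautifulSoup: finding tags and reading their contents.

2. Regular expressions, to extract the date from a URL.

3. Data storage: writing rows to CSV files.

4. Handling unexpected situations (failed requests, incomplete pages).

5. Timing the run.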
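Items 2 to 5 can be illustrated with the standard library alone. The BeautifulSoup part appears in the script itself. This is a minimal sketch, and the helper names are hypothetical:

- `extract_date` uses `re.search` rather than the script's `re.findall(...)[0]`, so a URL without a date returns None instead of raising IndexError.
- `safe_float` shows exception handling for cells that are empty or non-numeric. What such cells contain on the real site is an assumption.
- `rows_to_csv` writes to an in-memory buffer instead of a file.
- `time.perf_counter` times the run.

The cell values are made up.

```python
import csv
import io
import re
import time

DATE_RE = re.compile(r'\d{4}-\d{2}-\d{2}')


def extract_date(url):
    """Return the first YYYY-MM-DD date found in the URL, or None if there is none."""
    match = DATE_RE.search(url)
    return match.group(0) if match else None


def safe_float(text):
    """Convert a cell's text to float; return None for missing or non-numeric cells."""
    try:
        return float(text)
    except (TypeError, ValueError):  # TypeError for None, ValueError for text such as '-'
        return None


def rows_to_csv(rows):
    """Write rows with csv.writer into an in-memory buffer and return the CSV text."""
    buffer = io.StringIO(newline='')
    csv.writer(buffer).writerows(rows)
    return buffer.getvalue()


if __name__ == '__main__':
    start = time.perf_counter()
    url = 'http://www.csindex.com.cn/zh-CN/downloads/industry-price-earnings-ratio?type=zy4&date=2018-01-02'
    cells = ['2.15', '-', None]  # made-up sample cell texts, not real data
    row = [extract_date(url)] + [safe_float(c) for c in cells]
    print(rows_to_csv([row]), end='')  # 2018-01-02,2.15,,
    print('elapsed: {:.4f} s'.format(time.perf_counter() - start))
```

csv.writer writes None as an empty field, so unparseable cells become blank columns rather than breaking the row.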

Code:

```python
# hanbb
# come on!!!
import csv
import datetime
import re

import requests
import tushare as ts
from bs4 import BeautifulSoup

# Get the trading days: the daily history of stock 000012 serves as the
# trading calendar (days on which it was suspended will be missing)
end = datetime.date.today()
data = ts.get_hist_data('000012', start='2015-01-01', end=end.strftime('%Y-%m-%d'))  # change the start date here
date = list(data.index)

# Build the page URLs, one per trading day for each metric type
BASE_URL = 'http://www.csindex.com.cn/zh-CN/downloads/industry-price-earnings-ratio?type={}&date={}'
urls = [BASE_URL.format('zy4', date_time) for date_time in date]    # dividend yield
urls_3 = [BASE_URL.format('zy3', date_time) for date_time in date]  # P/B
urls_2 = [BASE_URL.format('zy2', date_time) for date_time in date]  # rolling P/E
urls_1 = [BASE_URL.format('zy1', date_time) for date_time in date]  # static P/E


# Fetch a page; return an empty string if the request fails
def get_html_text(url):
    try:
        r = requests.get(url, timeout=30)  # timeout so a stalled request cannot hang the run
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        return r.text
    except requests.RequestException:
        return ""


# Parse a page and extract the contents of its table cells
def getinfo(url):
    html = get_html_text(url)
    soup = BeautifulSoup(html, "html.parser")
    td = soup.find_all("td")

    # The date in the URL becomes the first column of the row
    infodate = re.findall(r'\d{4}-\d{2}-\d{2}', url)[0]

    if len(td) == 48:
        # A complete page: keep the text of all 48 cells
        info = [infodate] + [cell.string for cell in td]
    else:
        # Page without exactly 48 cells (e.g. a failed request):
        # write placeholder values 0.0-7.0, repeated six times
        info = [infodate] + [float(i) for i in range(8)] * 6
    return info


# Append one row to E:\download\<filename>.csv
def save(filename, info):
    # Append mode; without newline='' the csv module leaves blank lines between rows on Windows
    with open('E:\\download\\{}.csv'.format(filename), 'a', newline='') as file:
        csv.writer(file).writerow(info)


def main():
    print("Collecting data, please wait...")
    start_time = datetime.datetime.now()

    # (label, output file name, URL list)
    tasks = [
        ("dividend yield", "guxilv", urls),
        ("P/B", "shijinglv", urls_3),
        ("rolling P/E", "gundongshiyinglv", urls_2),
        ("static P/E", "jingtaishiyinglv", urls_1),
    ]
    for label, filename, url_list in tasks:
        count = 0
        for url in url_list:
            save(filename, getinfo(url))
            count += 1
        print("Finished collecting {}: {} values written to {}.csv".format(label, count * 48, filename))
        # Seconds elapsed since the start of the run
        print((datetime.datetime.now() - start_time).total_seconds())


if __name__ == '__main__':
    main()
```
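When a page does not yield exactly 48 cells, the script writes a placeholder row instead of raising an error. Those rows therefore look like ordinary data in the CSV files. The following minimal sketch separates them when the files are read back. It assumes the files were written by the script above. `split_rows` and `PLACEHOLDER` are hypothetical names, and the sample rows are made up.

```python
import csv
import io

# What getinfo() writes for a page without 48 cells: the floats 0.0-7.0
# repeated six times, which csv.writer stores as the text '0.0' ... '7.0'.
PLACEHOLDER = [repr(float(i)) for i in range(8)] * 6


def split_rows(lines):
    """Split CSV rows into (complete rows, dates whose page yielded the placeholder)."""
    complete, missing = [], []
    for row in csv.reader(lines):
        if not row:
            continue  # skip blank lines
        if row[1:] == PLACEHOLDER:
            missing.append(row[0])
        else:
            complete.append(row)
    return complete, missing


if __name__ == '__main__':
    # Made-up sample rows; for a real file use
    # open('E:\\download\\guxilv.csv', newline='') instead of StringIO.
    sample = io.StringIO('\r\n'.join([
        '2018-01-02,' + ','.join(['1.0'] * 48),
        '2018-01-03,' + ','.join(PLACEHOLDER),
    ]) + '\r\n', newline='')
    complete, missing = split_rows(sample)
    print(len(complete), missing)  # 1 ['2018-01-03']
```

The list of missing dates can then be turned back into URLs and fetched again, rather than leaving the placeholder values mixed in with real figures.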

浙公网安备 33010602011771号

浙公网安备 33010602011771号