RDD

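RDD (Resilient Distributed Dataset): the basic unit of computation in Spark.

1. Creating an RDD

By loading a file

val rdd = sc.textFile(path) // path: a local file, an HDFS path, or another Hadoop-supported URI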

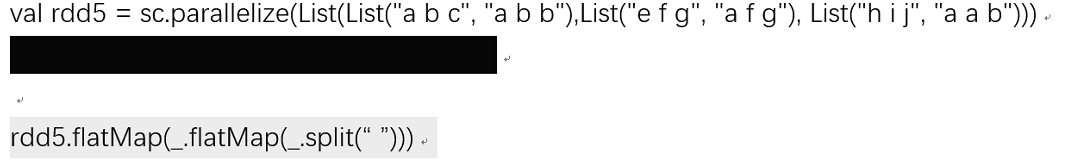
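As a rough sketch of loading a file, the snippet below writes a small temporary file and reads it back with `sc.textFile`. The file contents, the `rdd-demo` prefix, and the app name are made up for illustration, and it assumes Spark is on the classpath (in spark-shell, `getOrCreate` simply reuses the existing context).

```scala
import java.nio.file.Files
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-textfile-sketch"))

// Hypothetical input: a temporary file with three lines.
val path = Files.createTempFile("rdd-demo", ".txt")
Files.write(path, "hello spark\nhello rdd\nbye".getBytes("UTF-8"))

// Each element of the resulting RDD is one line of the file.
// A file:// URI is used so the path is read from the local file system.
val lines = sc.textFile(path.toUri.toString)
val loaded = lines.collect().toList
```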

By parallelizing a collection

val rdd1 = sc.parallelize(seq) // seq: a local Scala collection
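A minimal sketch of the parallelize route, assuming Spark is on the classpath; the sample numbers and the app name are illustrative only.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-parallelize-sketch"))

// Distribute a local collection across the cluster (here: local threads).
val rdd1 = sc.parallelize(Seq(1, 2, 3, 4, 5))

// collect() brings the elements back to the driver in their original order.
val back = rdd1.collect().toList
```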

2. Commonly used RDD methods

sc.parallelize(new ..., num) // num is the number of partitions

rdd.partitions.length // check the number of partitions
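To illustrate the partition count, here is a small sketch that asks for three partitions and then inspects them. The range `1 to 10` and the app name are arbitrary choices for the example.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-partitions-sketch"))

// The second argument is the number of partitions to create.
val rdd = sc.parallelize(1 to 10, 3)
val numParts = rdd.partitions.length

// glom() turns each partition into an array, which makes the split visible.
val byPartition = rdd.glom().collect().map(_.toList).toList
```

`rdd.getNumPartitions` returns the same number as `rdd.partitions.length`.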

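rdd.sortBy(x => x, true) // true: ascending (the default); false: descending

rdd.collect // return all elements to the driver as an array

rdd.flatMap(_.split(" ")) // split each line into words and flatten the result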
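The three calls above can be combined in a short sketch. It assumes words are separated by single spaces; the sample lines and the app name are invented for the example.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-flatmap-sortby-sketch"))

val lines = sc.parallelize(Seq("b a", "d c"))

// flatMap produces zero or more output elements per input element,
// so two lines become four words.
val words = lines.flatMap(_.split(" "))

// sortBy sorts ascending unless ascending = false is passed.
val asc = words.sortBy(x => x).collect().toList
val desc = words.sortBy(x => x, ascending = false).collect().toList
```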

rdd.union(rdd1) // union (duplicates are kept)

rdd union rdd1 // the same union, written in infix notation

rdd.distinct // remove duplicates

rdd.intersection(rdd1) // intersection
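A small sketch of the set-like operations, with made-up integer data. Results are sorted on the driver where the output order is not guaranteed.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-setops-sketch"))

val rdd = sc.parallelize(Seq(1, 2, 3, 3))
val rdd1 = sc.parallelize(Seq(3, 4))

// union keeps duplicates, so the result has 4 + 2 = 6 elements.
val unioned = rdd.union(rdd1).collect().toList

// distinct removes the repeated 3s.
val deduped = rdd.union(rdd1).distinct().collect().toList.sorted

// intersection returns elements present in both RDDs, without duplicates.
val common = rdd.intersection(rdd1).collect().toList
```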

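rdd.join(rdd1) // inner join: pairs up the values that share a key; both RDDs must contain key-value pairs (tuples)

rdd.leftOuterJoin(rdd1) // left outer join

rdd.rightOuterJoin(rdd1) // right outer join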
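To make the difference between the three joins concrete, here is a sketch on two tiny pair RDDs. The keys and values are invented; note that the outer joins wrap the possibly missing side in `Option`.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-join-sketch"))

val left = sc.parallelize(Seq(("a", 1), ("b", 2)))
val right = sc.parallelize(Seq(("a", "x"), ("c", "y")))

// Inner join: only keys present on both sides survive.
val inner = left.join(right).collect().toList

// Left outer join: every left key is kept; a missing right value is None.
val leftOuter = left.leftOuterJoin(right).collect().toList.sortBy(_._1)

// Right outer join: every right key is kept; a missing left value is None.
val rightOuter = left.rightOuterJoin(right).collect().toList.sortBy(_._1)
```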

rdd.groupByKey() // group the values by key
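A brief sketch of `groupByKey` on invented pairs. The values of each group are sorted here because Spark does not guarantee their order within a group.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-groupbykey-sketch"))

val pairs = sc.parallelize(Seq(("a", 1), ("b", 2), ("a", 3)))

// groupByKey yields one (key, Iterable[value]) entry per distinct key.
val grouped = pairs.groupByKey()
  .mapValues(_.toList.sorted)
  .collect()
  .toMap
```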

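rdd.top(n) // the n largest elements, in descending order

rdd.take(n) // the first n elements

rdd.takeOrdered(n) // the n smallest elements, in ascending order

rdd.first // the first element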
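The four methods above differ mainly in whether they sort. A short sketch on an unsorted sample (the numbers are arbitrary):

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-top-take-sketch"))

val rdd = sc.parallelize(Seq(5, 1, 4, 2, 3))

val largest = rdd.top(2).toList           // two largest, descending
val firstTwo = rdd.take(2).toList         // first two in RDD order, no sorting
val smallest = rdd.takeOrdered(2).toList  // two smallest, ascending
val head = rdd.first()                    // same element as take(1)
```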

3. Transformations

map(func)

filter(func)

flatMap(func)

mapPartitions(func)

mapPartitionsWithIndex(func)

sample(withReplacement, fraction, seed)

union(otherDataset)

intersection(otherDataset)

distinct([numPartitions])

groupByKey([numPartitions])

reduceByKey(func, [numPartitions])

aggregateByKey(zeroValue)(seqOp, combOp, [numPartitions])

sortByKey([ascending], [numPartitions])

join(otherDataset, [numPartitions])

cogroup(otherDataset, [numPartitions])

cartesian(otherDataset)

pipe(command, [envVars])

coalesce(numPartitions)

repartition(numPartitions)

repartitionAndSortWithinPartitions(partitioner)
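Transformations are lazy: they only describe a new RDD, and nothing runs until an action such as `collect` is called. As a rough illustration of a few entries from the list, the sketch below counts words with `reduceByKey` and computes a per-key (sum, count) pair with `aggregateByKey`. All data and names are invented for the example.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-transformations-sketch"))

// map + reduceByKey + sortByKey: a classic word count, sorted by word.
val words = sc.parallelize(Seq("a", "b", "a", "c", "b", "a"))
val counts = words
  .map(w => (w, 1))
  .reduceByKey(_ + _)
  .sortByKey()
  .collect()
  .toList

// aggregateByKey: the zero value (0, 0) holds (sum, count) for each key.
// The first function folds one value into a partition-local accumulator;
// the second merges accumulators coming from different partitions.
val scores = sc.parallelize(Seq(("x", 1), ("x", 3), ("y", 10)))
val sumAndCount = scores.aggregateByKey((0, 0))(
  (acc, v) => (acc._1 + v, acc._2 + 1),
  (l, r) => (l._1 + r._1, l._2 + r._2)
).collect().toMap
```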

4. Actions

reduce(func)

collect()

count()

first()

take(n)

takeSample(withReplacement, num, [seed])

takeOrdered(n, [ordering])

saveAsTextFile(path)

saveAsSequenceFile(path)

saveAsObjectFile(path)

countByKey()

foreach(func)
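Unlike transformations, actions trigger the computation and return a value to the driver (or write output). A minimal sketch of a few of them, on invented data:

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Reuse the spark-shell context if one exists; otherwise start a local one.
val sc = SparkContext.getOrCreate(
  new SparkConf().setMaster("local[2]").setAppName("rdd-actions-sketch"))

val nums = sc.parallelize(1 to 5)

val total = nums.reduce(_ + _)  // combines all elements with the function
val n = nums.count()            // number of elements, as a Long

// takeSample returns a random sample of fixed size; the seed is arbitrary.
val sample = nums.takeSample(withReplacement = false, num = 2, seed = 42L).toList

// countByKey counts how many elements exist for each key of a pair RDD.
val perKey = sc.parallelize(Seq(("a", 1), ("a", 2), ("b", 3))).countByKey()
```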

This article is from the cnblogs blog 踩坑狭, by 韩若明瞳. When reposting, please cite the original link: https://www.cnblogs.com/han-guang-xue/p/10030980.html
