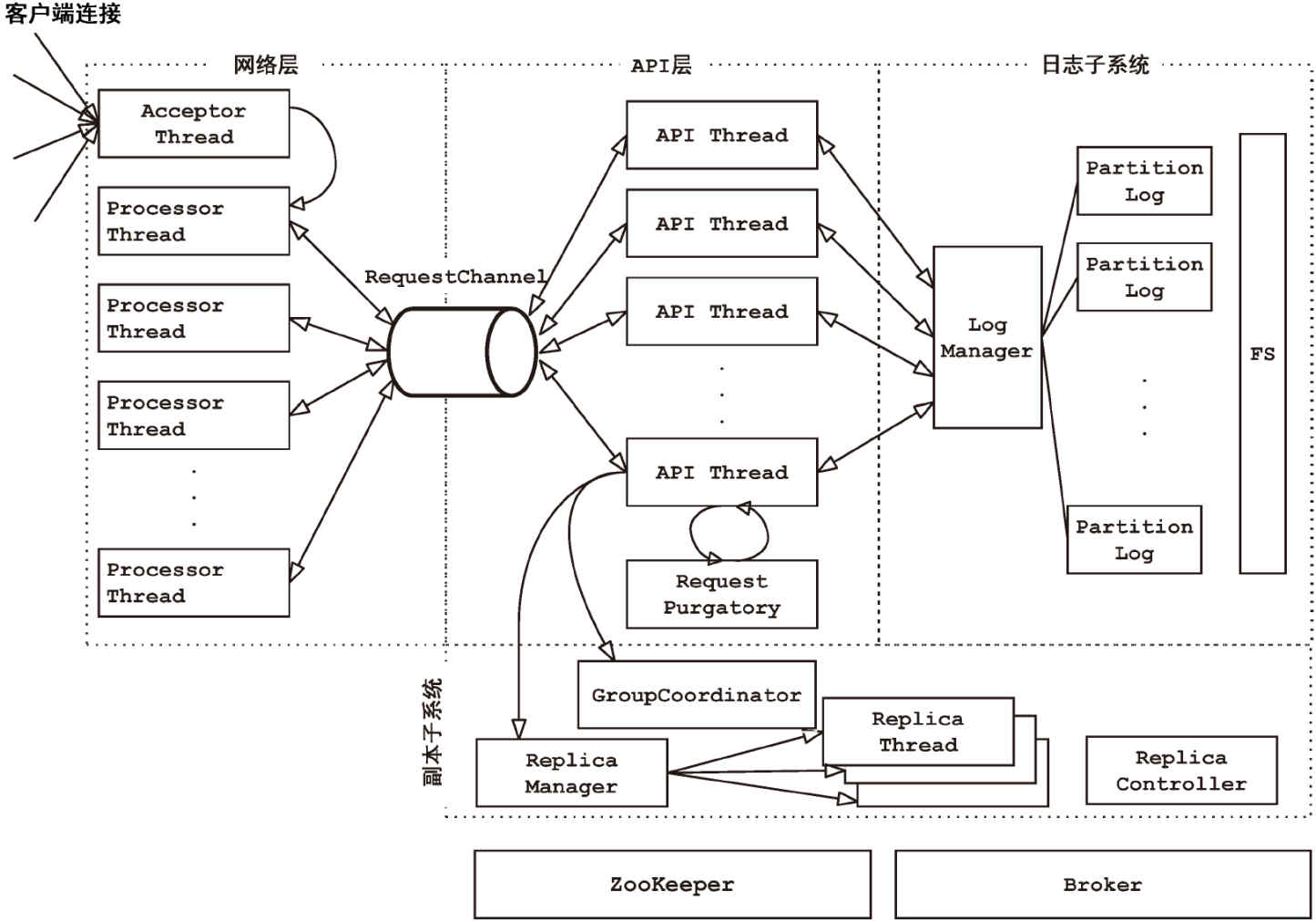

Kafka broker network model

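In production, after Kafka received messages we saw delays both in flushing them to disk and in consuming them, so I looked into Kafka's network model and read some of the source code.

Source versions: 0.8 and 1.1.0.

Figures are taken from the book 《kafka源码剖析》 (Kafka Source Code Analysis).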

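Network layer

SocketServer

Version 0.8:

First, its startup method.

startup creates several processors, which read requests and send responses; their number is set by num.network.threads. It then creates a single acceptor that listens for new connections.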

```scala
/**
 * Start the socket server
 */
def startup() {
  val quotas = new ConnectionQuotas(maxConnectionsPerIp, maxConnectionsPerIpOverrides)
  for (i <- 0 until numProcessorThreads) {
    processors(i) = new Processor(i,
                                  time,
                                  maxRequestSize,
                                  aggregateIdleMeter,
                                  newMeter("IdlePercent", "percent", TimeUnit.NANOSECONDS, Map("networkProcessor" -> i.toString)),
                                  numProcessorThreads,
                                  requestChannel,
                                  quotas,
                                  connectionsMaxIdleMs)
    // start the processor thread
    Utils.newThread("kafka-network-thread-%d-%d".format(port, i), processors(i), false).start()
  }

  newGauge("ResponsesBeingSent", new Gauge[Int] {
    def value = processors.foldLeft(0) { (total, p) => total + p.countInterestOps(SelectionKey.OP_WRITE) }
  })

  // register the processor threads for notification of responses:
  // when a response is queued for processor `id`, wake up that processor's selector
  requestChannel.addResponseListener((id: Int) => processors(id).wakeup())

  // start accepting connections: a single acceptor thread listens for new connections
  this.acceptor = new Acceptor(host, port, processors, sendBufferSize, recvBufferSize, quotas)
  Utils.newThread("kafka-socket-acceptor", acceptor, false).start()
  acceptor.awaitStartup
  info("Started")
}
```
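The addResponseListener line ties response delivery to processor wakeups: a handler enqueues a response for processor id, and the listener wakes exactly that processor. Below is a minimal sketch of that wiring. ListenerRequestChannel, WakeableProcessor and ListenerDemo are hypothetical stand-ins, not Kafka classes, and wakeup() only counts calls instead of interrupting a real selector.

```scala
import java.util.concurrent.ConcurrentLinkedQueue
import scala.collection.mutable.ArrayBuffer

// Hypothetical stand-in for a Processor: wakeup() only counts calls here;
// in Kafka it interrupts the processor's blocking selector.select().
final class WakeableProcessor(val id: Int) {
  var wakeups = 0
  def wakeup(): Unit = wakeups += 1
}

// Hypothetical, simplified RequestChannel: one response queue per processor plus
// a list of response listeners that are called with the target processor id.
final class ListenerRequestChannel(numProcessors: Int) {
  val responseQueues: Array[ConcurrentLinkedQueue[String]] =
    Array.fill(numProcessors)(new ConcurrentLinkedQueue[String]())
  private val responseListeners = ArrayBuffer.empty[Int => Unit]

  def addResponseListener(onResponse: Int => Unit): Unit = responseListeners += onResponse

  def sendResponse(processorId: Int, response: String): Unit = {
    responseQueues(processorId).add(response)
    // Notify listeners so the owning processor can pick the response up from its queue.
    responseListeners.foreach(listener => listener(processorId))
  }
}

object ListenerDemo {
  val processors: Array[WakeableProcessor] = Array.tabulate(3)(i => new WakeableProcessor(i))
  val channel = new ListenerRequestChannel(processors.length)
  // Same wiring as startup(): a response for processor `id` wakes up processors(id).
  channel.addResponseListener(id => processors(id).wakeup())
  channel.sendResponse(1, "produce-response")
}
```

Only processor 1 is woken in the demo, because the response belongs to a connection owned by that processor.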

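Version 1.1.0:

```scala
this.synchronized {
  // per-IP connection limits (max.connections.per.ip and its overrides)
  connectionQuotas = new ConnectionQuotas(maxConnectionsPerIp, maxConnectionsPerIpOverrides)
  // create one acceptor per listener, each with config.numNetworkThreads processors (num.network.threads, default 3)
  createAcceptorAndProcessors(config.numNetworkThreads, config.listeners)
}
```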

Starting the acceptors:

```scala
endpoints.foreach { endpoint =>
  val listenerName = endpoint.listenerName
  val securityProtocol = endpoint.securityProtocol
  // for each endpoint (listener), create an acceptor and start it on a KafkaThread
  val acceptor = new Acceptor(endpoint, sendBufferSize, recvBufferSize, brokerId, connectionQuotas)
  KafkaThread.nonDaemon(s"kafka-socket-acceptor-$listenerName-$securityProtocol-${endpoint.port}", acceptor).start()
  acceptor.awaitStartup()
  acceptors.put(endpoint, acceptor)
  addProcessors(acceptor, endpoint, processorsPerListener)
}
```

Starting the processors:

```scala
// in SocketServer: hand this listener's new processors to its acceptor, which starts them
acceptor.addProcessors(listenerProcessors)

// Acceptor.addProcessors: start one thread per processor
private[network] def addProcessors(newProcessors: Buffer[Processor]): Unit = synchronized {
  newProcessors.foreach { processor =>
    KafkaThread.nonDaemon(s"kafka-network-thread-$brokerId-${endPoint.listenerName}-${endPoint.securityProtocol}-${processor.id}",
      processor).start()
  }
  processors ++= newProcessors
}
```
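Because 1.1.0 creates one acceptor per listener and num.network.threads processors per listener, the number of network threads grows with the number of listeners. The sketch below simply enumerates thread names following the patterns in the excerpt. SketchListener and ThreadLayout are hypothetical, the names are simplified (plain strings instead of Kafka's ListenerName objects), and numbering processor ids globally across listeners is an assumption of this sketch.

```scala
// Hypothetical listener description (a stand-in for Kafka's EndPoint).
final case class SketchListener(name: String, securityProtocol: String, port: Int)

object ThreadLayout {
  // One acceptor thread per listener plus processorsPerListener processor threads per listener.
  // Processor ids run globally across listeners here; that numbering is an assumption.
  def threadNames(brokerId: Int, listeners: Seq[SketchListener], processorsPerListener: Int): Seq[String] =
    listeners.zipWithIndex.flatMap { case (l, listenerIndex) =>
      val acceptor = s"kafka-socket-acceptor-${l.name}-${l.securityProtocol}-${l.port}"
      val processors = (0 until processorsPerListener).map { i =>
        val processorId = listenerIndex * processorsPerListener + i
        s"kafka-network-thread-$brokerId-${l.name}-${l.securityProtocol}-$processorId"
      }
      acceptor +: processors
    }
}
```

For example, two listeners with num.network.threads=3 give 2 acceptor threads plus 6 processor threads, 8 network threads in total.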

* **Acceptor**: essentially unchanged between 0.8 and 1.1.0 (the code below is the 0.8 version)

```scala
/**
 * Accept loop that checks for new connection attempts
 */
def run() {
  // the acceptor registers the ServerSocketChannel with its own selector, interested in new connections (OP_ACCEPT)
  serverChannel.register(selector, SelectionKey.OP_ACCEPT)
  startupComplete()
  var currentProcessor = 0
  while (isRunning) {
    val ready = selector.select(500)
    if (ready > 0) {
      val keys = selector.selectedKeys()
      val iter = keys.iterator()
      while (iter.hasNext && isRunning) {
        var key: SelectionKey = null
        try {
          key = iter.next
          iter.remove()
          // accept the connection and hand the new SocketChannel to the current processor
          if (key.isAcceptable)
            accept(key, processors(currentProcessor))
          else
            throw new IllegalStateException("Unrecognized key state for acceptor thread.")
          // round robin to the next processor thread: the next accepted connection goes to the next processor
          currentProcessor = (currentProcessor + 1) % processors.length
        } catch {
          case e: Throwable => error("Error while accepting connection", e)
        }
      }
    }
  }
  debug("Closing server socket and selector.")
  swallowError(serverChannel.close())
  swallowError(selector.close())
  shutdownComplete()
}
```
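The round-robin step is easy to isolate. This is a simplified model of the index arithmetic only; RoundRobinPicker is a hypothetical class, not part of Kafka.

```scala
// Simplified model of the acceptor's round-robin choice (not Kafka code): the
// connection goes to processors(currentProcessor), then the index advances modulo the count.
final class RoundRobinPicker(numProcessors: Int) {
  require(numProcessors > 0, "need at least one processor")
  private var currentProcessor = 0

  // Returns the processor index for the next accepted connection.
  def next(): Int = {
    val chosen = currentProcessor
    currentProcessor = (currentProcessor + 1) % numProcessors
    chosen
  }
}
```

With 3 processors, seven accepted connections are assigned to 0, 1, 2, 0, 1, 2, 0. Note that this balances the number of connections per processor, not their traffic: once assigned, every request on a connection goes through the same processor, so a few heavy clients can end up on the same network thread.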

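* **Processor**

accept puts the new connection into newConnections, which is a ConcurrentLinkedQueue, and wakes up the processor's selector:

```scala
/**
 * Queue up a new connection for reading
 */
def accept(socketChannel: SocketChannel) {
  newConnections.add(socketChannel)
  // wake the processor's selector so the queued connection gets registered promptly
  wakeup()
}
```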

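The processor's run method:

```scala
override def run() {
  startupComplete()
  try {
    while (isRunning) {
      try {
        // setup any new connections that have been queued up
        // (register OP_READ for connections handed over by the acceptor)
        configureNewConnections()
        // register any new responses for writing
        // (take responses produced by the request handlers from this processor's response queue)
        processNewResponses()
        // poll the selector for read/write events
        poll()
        // turn fully received packets (selector.completedReceives, filled by poll)
        // into requests and put them into the RequestChannel
        processCompletedReceives()
        // for responses that have been completely sent, unmute the channel so it is read again
        processCompletedSends()
        // clean up connections that have been closed
        processDisconnected()
      }
      // ... catch block omitted in this excerpt
    }
  }
  // ... finally block omitted in this excerpt
}
```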

configureNewConnections takes each SocketChannel out of the queue and registers it with the processor's selector for read events. Every processor opens its own selector to monitor its connections.

```scala
/**
 * Register any new connections that have been queued up
 */
private def configureNewConnections() {
  while (newConnections.size() > 0) {
    val channel = newConnections.poll()
    debug("Processor " + id + " listening to new connection from " + channel.socket.getRemoteSocketAddress)
    channel.register(selector, SelectionKey.OP_READ)
  }
}
```

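In summary: when the acceptor's selector reports a new connection attempt, the acceptor accepts it, obtaining a new SocketChannel, and hands it to one processor through that processor's accept method. The processor stores the SocketChannel in its ConcurrentLinkedQueue, later drains the queue and registers every SocketChannel with its own selector for read events. From then on, every request sent on that connection is read by the same processor, and the response is written back to the same SocketChannel by that processor.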
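The acceptor-to-processor handoff is a producer/consumer pattern over a lock-free queue: the acceptor thread only enqueues, and only the processor thread touches its selector. A minimal model, assuming connections are plain strings and that "registering with the selector" just records the connection; HandoffProcessor is a hypothetical class, not Kafka's Processor.

```scala
import java.util.concurrent.ConcurrentLinkedQueue
import scala.collection.mutable.ArrayBuffer

// Hypothetical model of the acceptor -> processor handoff (not Kafka code).
final class HandoffProcessor(val id: Int) {
  private val newConnections = new ConcurrentLinkedQueue[String]()
  // Only touched by the processor thread, so no extra synchronization is needed.
  val registered = ArrayBuffer.empty[String]

  // Acceptor thread: enqueue only; the selector itself is never touched from here.
  def accept(connection: String): Unit = {
    newConnections.add(connection)
    // real code would call wakeup() here so select() returns promptly
  }

  // Processor thread: drain the queue at the top of every loop iteration.
  def configureNewConnections(): Unit = {
    var conn = newConnections.poll()
    while (conn != null) {
      registered += conn // real code: channel.register(selector, SelectionKey.OP_READ)
      conn = newConnections.poll()
    }
  }
}
```

The sketch drains with poll() until it returns null rather than checking size() first, because ConcurrentLinkedQueue.size() is not a constant-time operation.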

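RequestChannel

RequestChannel is the buffer between the network layer and the API layer: when a processor has received a complete request, it puts it into the RequestChannel. Kafka starts a group of KafkaRequestHandler threads, sized by num.io.threads, called the KafkaRequestHandlerPool. Each KafkaRequestHandler takes requests out of the RequestChannel and passes them to KafkaApis for processing.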

```scala
private def processCompletedReceives() {
  selector.completedReceives.asScala.foreach { receive =>
    try {
      openOrClosingChannel(receive.source) match {
        case Some(channel) =>
          val header = RequestHeader.parse(receive.payload)
          val context = new RequestContext(header, receive.source, channel.socketAddress,
            channel.principal, listenerName, securityProtocol)
          val req = new RequestChannel.Request(processor = id, context = context,
            startTimeNanos = time.nanoseconds, memoryPool, receive.payload, requestChannel.metrics)
          // the processor puts the request into the RequestChannel
          requestChannel.sendRequest(req)
          // stop reading from this connection until the response to this request has been sent
          selector.mute(receive.source)
        case None =>
          // This should never happen since completed receives are processed immediately after `poll()`
          throw new IllegalStateException(s"Channel ${receive.source} removed from selector before processing completed receive")
      }
    }
    // ... catch block omitted in this excerpt
  }
}
```
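selector.mute here, together with the unmute done in processCompletedSends, means a connection is not read again until the response to its current request has been sent, so requests on one connection are processed one at a time and in order. A minimal model of that rule; MuteTracker is a hypothetical class and connections are identified by strings.

```scala
import scala.collection.mutable

// Hypothetical model of mute/unmute (not Kafka code).
final class MuteTracker {
  private val muted = mutable.Set.empty[String]

  // A muted connection is skipped when reading.
  def canRead(connectionId: String): Boolean = !muted.contains(connectionId)

  // processCompletedReceives: the request is queued, stop reading this connection.
  def onRequestQueued(connectionId: String): Unit = muted += connectionId

  // processCompletedSends: the response is written, resume reading.
  def onResponseSent(connectionId: String): Unit = muted -= connectionId
}
```

In this model, a slow request blocks only further reads on its own connection; other connections on the same processor keep being read.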

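KafkaRequestHandler.scala

```scala
// KafkaRequestHandler.run() (excerpt): each handler thread repeatedly takes a request from the RequestChannel
def run() {
  while (!stopped) {
    val req = requestChannel.receiveRequest(300)
    val endTime = time.nanoseconds
    // ... idle-time metrics and the shutdown-request case omitted in this excerpt
    req match {
      case request: RequestChannel.Request =>
        try {
          request.requestDequeueTimeNanos = endTime
          trace(s"Kafka request handler $id on broker $brokerId handling request $request")
          // hand the request to the API layer (KafkaApis) for the actual processing
          apis.handle(request)
        } catch {
          case e: FatalExitError =>
            shutdownComplete.countDown()
            Exit.exit(e.statusCode)
          case e: Throwable => error("Exception when handling request", e)
        } finally {
          request.releaseBuffer()
        }
      case null => // no request within the timeout, loop again
    }
  }
}
```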
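Put together, RequestChannel and the handler pool form a bounded producer/consumer queue: processors put requests into one shared queue (in Kafka its capacity is queued.max.requests), num.io.threads handler threads take them out, and each response goes back to the queue of the processor that owns the connection. The sketch below models that shape. SketchRequest, SketchRequestChannel and SketchHandlerPool are hypothetical names, and the payload handling is a placeholder.

```scala
import java.util.concurrent.{ArrayBlockingQueue, ConcurrentLinkedQueue, TimeUnit}
import java.util.concurrent.atomic.AtomicBoolean

// Hypothetical request: which processor (network thread) it came from, plus a payload.
final case class SketchRequest(processorId: Int, payload: String)

// Hypothetical RequestChannel: one bounded queue shared by all processors, one response queue per processor.
final class SketchRequestChannel(numProcessors: Int, maxQueuedRequests: Int) {
  private val requestQueue = new ArrayBlockingQueue[SketchRequest](maxQueuedRequests)
  val responseQueues: Array[ConcurrentLinkedQueue[String]] =
    Array.fill(numProcessors)(new ConcurrentLinkedQueue[String]())

  // Processor side: put() blocks while the queue is full, stalling that network thread.
  def sendRequest(r: SketchRequest): Unit = requestQueue.put(r)
  // Handler side: wait at most timeoutMs; returns null if nothing arrived.
  def receiveRequest(timeoutMs: Long): SketchRequest = requestQueue.poll(timeoutMs, TimeUnit.MILLISECONDS)
  // The response goes back to the processor that owns the connection.
  def sendResponse(processorId: Int, response: String): Unit = responseQueues(processorId).add(response)
}

// Hypothetical handler pool: numThreads threads looping receiveRequest -> handle -> sendResponse.
final class SketchHandlerPool(channel: SketchRequestChannel, numThreads: Int, handle: SketchRequest => String) {
  private val running = new AtomicBoolean(true)
  private val threads: IndexedSeq[Thread] = (0 until numThreads).map { i =>
    val loop: Runnable = () => {
      while (running.get()) {
        val req = channel.receiveRequest(100)
        if (req != null) channel.sendResponse(req.processorId, handle(req))
      }
    }
    val t = new Thread(loop, s"sketch-request-handler-$i")
    t.start()
    t
  }

  def shutdown(): Unit = {
    running.set(false)
    threads.foreach(_.join())
  }
}
```

In this model, if the handlers are slower than the incoming traffic the shared queue fills up, and sendRequest blocks the network threads that are trying to enqueue, so handler latency shows up as network-side latency too.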

API layer

Version 0.8:

KafkaApis.scala

```scala
/**
 * Top-level method that handles all requests and multiplexes to the right api
 */
def handle(request: RequestChannel.Request) {
  try {
    trace("Handling request: " + request.requestObj + " from client: " + request.remoteAddress)
    request.requestId match {
      case RequestKeys.ProduceKey => handleProducerOrOffsetCommitRequest(request)
      case RequestKeys.FetchKey => handleFetchRequest(request)
      case RequestKeys.OffsetsKey => handleOffsetRequest(request)
      case RequestKeys.MetadataKey => handleTopicMetadataRequest(request)
      case RequestKeys.LeaderAndIsrKey => handleLeaderAndIsrRequest(request)
      case RequestKeys.StopReplicaKey => handleStopReplicaRequest(request)
      case RequestKeys.UpdateMetadataKey => handleUpdateMetadataRequest(request)
      case RequestKeys.ControlledShutdownKey => handleControlledShutdownRequest(request)
      case RequestKeys.OffsetCommitKey => handleOffsetCommitRequest(request)
      case RequestKeys.OffsetFetchKey => handleOffsetFetchRequest(request)
      case RequestKeys.ConsumerMetadataKey => handleConsumerMetadataRequest(request)
      case requestId => throw new KafkaException("Unknown api code " + requestId)
    }
  } catch {
    case e: Throwable =>
      request.requestObj.handleError(e, requestChannel, request)
      error("error when handling request %s".format(request.requestObj), e)
  } finally
    request.apiLocalCompleteTimeMs = SystemTime.milliseconds
}
```

Version 1.1.0:

```scala
def handle(request: RequestChannel.Request) {
  try {
    trace(s"Handling request:${request.requestDesc(true)} from connection ${request.context.connectionId};" +
      s"securityProtocol:${request.context.securityProtocol},principal:${request.context.principal}")
    request.header.apiKey match {
      case ApiKeys.PRODUCE => handleProduceRequest(request)
      // fetch requests come from consumers and from follower replicas pulling messages from the leader
      case ApiKeys.FETCH => handleFetchRequest(request)
      case ApiKeys.LIST_OFFSETS => handleListOffsetRequest(request)
      case ApiKeys.METADATA => handleTopicMetadataRequest(request)
      case ApiKeys.LEADER_AND_ISR => handleLeaderAndIsrRequest(request)
      case ApiKeys.STOP_REPLICA => handleStopReplicaRequest(request)
      case ApiKeys.UPDATE_METADATA => handleUpdateMetadataRequest(request)
      case ApiKeys.CONTROLLED_SHUTDOWN => handleControlledShutdownRequest(request)
      case ApiKeys.OFFSET_COMMIT => handleOffsetCommitRequest(request)
      case ApiKeys.OFFSET_FETCH => handleOffsetFetchRequest(request)
      // ... remaining API keys omitted in this excerpt
    }
  }
  // ... catch/finally blocks omitted in this excerpt
}
```
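Both versions do the same thing: read the api key from the request header and pattern-match to the handler method (RequestKeys constants in 0.8, the ApiKeys enum in 1.1.0). A cut-down sketch of that dispatch, with hypothetical names SketchApiKey and SketchApis; the numeric ids are the Kafka protocol api keys for these four request types, and the handler names are taken from the excerpt above.

```scala
// Hypothetical, cut-down version of the dispatch in KafkaApis.handle (not Kafka code).
sealed abstract class SketchApiKey(val id: Short)
object SketchApiKey {
  case object Produce extends SketchApiKey(0)
  case object Fetch extends SketchApiKey(1)
  case object ListOffsets extends SketchApiKey(2)
  case object Metadata extends SketchApiKey(3)
  val all: Seq[SketchApiKey] = Seq(Produce, Fetch, ListOffsets, Metadata)
  def forId(id: Short): Option[SketchApiKey] = all.find(_.id == id)
}

object SketchApis {
  // Returns the name of the handler that would run; unknown ids fail,
  // like the "Unknown api code" case in the 0.8 code.
  def handle(apiKeyId: Short): String = SketchApiKey.forId(apiKeyId) match {
    case Some(SketchApiKey.Produce)     => "handleProduceRequest"
    case Some(SketchApiKey.Fetch)       => "handleFetchRequest"
    case Some(SketchApiKey.ListOffsets) => "handleListOffsetRequest"
    case Some(SketchApiKey.Metadata)    => "handleTopicMetadataRequest"
    case None => throw new IllegalArgumentException(s"Unknown api code $apiKeyId")
  }
}
```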

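A note on the two socket channel types:

- SocketChannel: carries the data of an established connection
- ServerSocketChannel: listens for incoming connections

The acceptor watches the ServerSocketChannel for new connections, accepts each one as a SocketChannel, and hands it to a processor.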
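To make the two channel types concrete, here is a minimal single-threaded sketch using plain java.nio. This is not Kafka code; the object name NioSketch and the loopback setup are assumptions of this example. One selector plays the acceptor and watches a ServerSocketChannel for OP_ACCEPT. Each accepted SocketChannel is registered with a second selector, which plays the processor, for OP_READ.

```scala
import java.net.InetSocketAddress
import java.nio.ByteBuffer
import java.nio.channels.{SelectionKey, Selector, ServerSocketChannel, SocketChannel}
import java.nio.charset.StandardCharsets

object NioSketch {
  // Sends `message` from a local client and returns what the "processor" side read.
  def run(message: String): String = {
    val bytes = message.getBytes(StandardCharsets.UTF_8)
    val acceptorSelector = Selector.open()
    val processorSelector = Selector.open()
    val server = ServerSocketChannel.open()
    var client: SocketChannel = null
    var accepted: SocketChannel = null
    try {
      server.bind(new InetSocketAddress("127.0.0.1", 0)) // ephemeral port
      server.configureBlocking(false)
      server.register(acceptorSelector, SelectionKey.OP_ACCEPT)

      // Client side: blocking connect, then write the whole message.
      client = SocketChannel.open(server.getLocalAddress)
      client.write(ByteBuffer.wrap(bytes))

      val deadline = System.currentTimeMillis() + 5000
      // Acceptor step: wait for OP_ACCEPT on the ServerSocketChannel.
      while (accepted == null && System.currentTimeMillis() < deadline) {
        acceptorSelector.select(500)
        val keys = acceptorSelector.selectedKeys().iterator()
        while (keys.hasNext) {
          val key = keys.next()
          keys.remove()
          if (key.isAcceptable) {
            val ch = key.channel().asInstanceOf[ServerSocketChannel].accept()
            if (ch != null) {
              // Hand the new SocketChannel to the "processor" selector, interested in reads.
              ch.configureBlocking(false)
              ch.register(processorSelector, SelectionKey.OP_READ)
              accepted = ch
            }
          }
        }
      }

      // Processor step: wait for OP_READ and read until the whole message has arrived.
      val buf = ByteBuffer.allocate(bytes.length)
      var eof = false
      while (buf.hasRemaining && !eof && System.currentTimeMillis() < deadline) {
        processorSelector.select(500)
        val keys = processorSelector.selectedKeys().iterator()
        while (keys.hasNext) {
          val key = keys.next()
          keys.remove()
          if (key.isReadable && key.channel().asInstanceOf[SocketChannel].read(buf) < 0) eof = true
        }
      }
      buf.flip()
      StandardCharsets.UTF_8.decode(buf).toString
    } finally {
      if (accepted != null) accepted.close()
      if (client != null) client.close()
      server.close()
      acceptorSelector.close()
      processorSelector.close()
    }
  }
}
```

In Kafka the two selectors live on different threads, and the handoff goes through the processor's newConnections queue rather than a direct register call from the acceptor.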
