[Python Basics] Celery beat/task/flower Explained

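I. Introduction to Celery

Celery is a powerful distributed task queue. It lets tasks run completely decoupled from the main program, and they can even be dispatched to other hosts. It is typically used for asynchronous tasks (async task) and scheduled tasks (crontab). Asynchronous tasks are time-consuming operations such as sending email, uploading files, or processing images; scheduled tasks are tasks that must run at a specific time. Its architecture is shown in the figure below: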

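[The above is quoted from] Author: Shyllin. Source: CSDN. Original: https://blog.csdn.net/Shyllin/article/details/80940643?utm_source=copy. Copyright notice: this is an original article by the blogger; please include a link to the post when reposting.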

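Key points:

(1). Task module: contains asynchronous tasks and scheduled tasks. Asynchronous tasks are triggered in business logic and sent to the task queue; scheduled tasks are sent to the task queue periodically by celery beat.

(2). celery beat: the task scheduler. The beat process reads the configuration (set in CELERYBEAT_SCHEDULE) and periodically sends the tasks that are due to the task queue.

(3). Message broker: the task queue. It receives task messages from task producers and stores them in a queue. Celery does not provide a queue service itself; the official recommendation is RabbitMQ or Redis, and Redis is used here.

(4). Worker: the unit that executes tasks. It watches the message queue in real time, fetches the tasks scheduled in the queue, and executes them.

(5). Result backend: stores task results. Like the broker, it can be backed by RabbitMQ or Redis.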
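The flow described above (producer, broker, worker, result backend) can be sketched without Celery at all. The following is a toy model using only the Python standard library: a `queue.Queue` stands in for the Redis broker and a dict stands in for the result backend. The names `send_task`, `worker`, and `TASKS` are made up for this illustration and are not Celery APIs.

```python
import queue
import threading
import uuid

broker = queue.Queue()                   # stands in for the Redis broker queue
backend = {}                             # stands in for the result backend
TASKS = {"add": lambda x, y: x + y}      # registry of known task names


def send_task(name, args):
    """Producer side: put a task message on the broker and return its id."""
    task_id = str(uuid.uuid4())
    broker.put({"id": task_id, "task": name, "args": args})
    return task_id


def worker():
    """Worker side: take messages off the broker, run them, store results."""
    while True:
        message = broker.get()
        if message is None:              # sentinel used here to stop the toy worker
            break
        result = TASKS[message["task"]](*message["args"])
        backend[message["id"]] = result


thread = threading.Thread(target=worker)
thread.start()
task_id = send_task("add", (1, 2))
broker.put(None)                         # ask the worker to exit after the queued task
thread.join()
print(backend[task_id])                  # prints 3
```

The producer only ever talks to the broker and the backend, never to the worker directly, which is what allows real workers to run on other hosts.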

II. Code example:

(1). Directory structure

celery_learning

|----celery_config.py

|----celery_app.py

|----tasks.py

|----test.py

(2). Code:

celery_config.py

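    # -*- coding: utf-8 -*-
    from __future__ import absolute_import
    from datetime import timedelta

    # crontab is available for cron-style schedules; it is not used below
    from celery.schedules import crontab

    # Message broker
    BROKER_URL = 'redis://localhost:6379/6'

    # Result backend
    CELERY_RESULT_BACKEND = 'redis://127.0.0.1:6379/5'

    # Default worker queue
    CELERY_DEFAULT_QUEUE = 'default'

    # Modules that contain the asynchronous tasks (trailing comma makes it a tuple)
    CELERY_IMPORTS = ("tasks",)

    # celery beat: send tasks.add(1, 12) to the queue every 10 seconds
    CELERYBEAT_SCHEDULE = {
        'add': {
            'task': 'tasks.add',
            'schedule': timedelta(seconds=10),
            'args': (1, 12),
        },
    }

[Note] The backend URL must be well formed. With a stray colon after redis://, as in 'redis://:127.0.0.1:6379/5', the URL parser reads 127.0.0.1 as the port and raises the error below. The order in which the broker and the backend are set does not appear to be the cause.

    ValueError: invalid literal for int() with base 10: '127.0.0.1'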
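A ValueError of this kind is what a URL parser raises when the port part of a URL is not numeric. As a rough sketch, a Redis URL can be checked before it goes into the configuration. This uses only the standard library's `urlsplit`, not Celery's own parser, and `parse_redis_url` is a hypothetical helper name introduced for this example.

```python
from urllib.parse import urlsplit


def parse_redis_url(url):
    """Return (host, port, db) for a redis:// URL, or raise ValueError."""
    parts = urlsplit(url)
    if parts.scheme != "redis":
        raise ValueError("expected a redis:// URL, got %r" % url)
    port = parts.port                    # raises ValueError if the port is not numeric
    if not parts.hostname:
        raise ValueError("missing host in %r" % url)
    db = int(parts.path.lstrip("/") or 0)
    return parts.hostname, port or 6379, db


print(parse_redis_url("redis://localhost:6379/6"))
try:
    parse_redis_url("redis://:127.0.0.1:6379/5")
except ValueError as exc:
    print("rejected:", exc)
```

A leading colon is only valid when it introduces a password followed by `@`, as in `redis://:secret@127.0.0.1:6379/5` (the password here is a placeholder).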

celery_app.py

    from __future__ import absolute_import
    from celery import Celery

    app = Celery('celery_app')
    app.config_from_object('celery_config')

tasks.py

    from celery_app import app


    # Both tasks are routed to the 'default' queue
    @app.task(queue='default')
    def add(x, y):
        return x + y


    @app.task(queue='default')
    def sub(x, y):
        return x - y

test.py

    import sys, os

    # sys.path.append(os.path.abspath('.'))
    sys.path.append(os.path.abspath('..'))

    from tasks import add


    def add_loop():
        # Send add(1, 2) to the 'default' queue; returns an AsyncResult
        ret = add.apply_async((1, 2), queue='default')
        print(type(ret))
        return ret


    if __name__ == '__main__':
        ret = add_loop()
        print(ret.get())      # blocks until the worker has stored the result
        print(ret.status)

III. Execution steps:

(1). Asynchronous task:

In a terminal, run: celery -A celery_app worker -Q default --loglevel=info

Then run test.py.

[Result]:

    python test.py

    <class 'celery.result.AsyncResult'>
    3
    SUCCESS

Worker log:

    [2018-10-16 15:17:15,173: INFO/MainProcess] Received task: tasks.add[79bfdfc8-d6eb-44b4-b094-4355961d18b3]
    [2018-10-16 15:17:15,193: INFO/ForkPoolWorker-2] Task tasks.add[79bfdfc8-d6eb-44b4-b094-4355961d18b3] succeeded in 0.0133806611411s: 3

(2). Scheduled task:

In terminal 1, run: celery -A celery_app worker -Q default --loglevel=info

In terminal 2, run: celery -A celery_app beat

    celery beat v4.2.0 (windowlicker) is starting.
    __ - ... __ - _
    LocalTime -> 2018-10-16 15:30:57
    Configuration ->
        . broker -> redis://localhost:6379/6
        . loader -> celery.loaders.app.AppLoader
        . scheduler -> celery.beat.PersistentScheduler
        . db -> celerybeat-schedule
        . logfile -> [stderr]@%WARNING
        . maxinterval -> 5.00 minutes (300s)

[Result]:

    [2018-10-16 15:31:27,078: INFO/MainProcess] Received task: tasks.add[80e5b7a9-f610-47b2-91ae-2de731ee58f2]
    [2018-10-16 15:31:27,089: INFO/ForkPoolWorker-2] Task tasks.add[80e5b7a9-f610-47b2-91ae-2de731ee58f2] succeeded in 0.00957242585719s: 13
    [2018-10-16 15:31:37,078: INFO/MainProcess] Received task: tasks.add[3b03d215-139b-4072-856e-b4941c332215]
    [2018-10-16 15:31:37,080: INFO/ForkPoolWorker-3] Task tasks.add[3b03d215-139b-4072-856e-b4941c332215] succeeded in 0.000707183033228s: 13
    [2018-10-16 15:31:47,077: INFO/MainProcess] Received task: tasks.add[2ecb1645-5dff-4647-8035-fd1fdf8a4249]
    [2018-10-16 15:31:47,079: INFO/ForkPoolWorker-2] Task tasks.add[2ecb1645-5dff-4647-8035-fd1fdf8a4249] succeeded in 0.000692856963724s: 13
    [2018-10-16 15:31:57,079: INFO/MainProcess] Received task: tasks.add[3abac1ce-df1d-4c2f-b08f-3248c054f893]
    [2018-10-16 15:31:57,082: INFO/ForkPoolWorker-3] Task tasks.add[3abac1ce-df1d-4c2f-b08f-3248c054f893] succeeded in 0.00103094079532s: 13
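The 10-second spacing between the received tasks follows from the `timedelta(seconds=10)` entry in the schedule. The toy function below illustrates the idea of interval scheduling with the standard library only. It is not how celery beat is implemented, `due_messages` is a hypothetical name, and the start time is chosen arbitrarily so that the computed runs line up with the log above.

```python
from datetime import datetime, timedelta

schedule = {
    'add': {'task': 'tasks.add', 'schedule': timedelta(seconds=10), 'args': (1, 12)},
}


def due_messages(schedule, start, end):
    """Return (time, task, args) for every run that falls in (start, end]."""
    messages = []
    for entry in schedule.values():
        run_at = start + entry['schedule']
        while run_at <= end:
            messages.append((run_at, entry['task'], entry['args']))
            run_at += entry['schedule']
    return sorted(messages)


start = datetime(2018, 10, 16, 15, 31, 17)   # arbitrary start chosen for the example
for run_at, task, args in due_messages(schedule, start, start + timedelta(seconds=40)):
    print(run_at.time(), task, args)
```

Over a 40-second window this yields four runs, at 15:31:27, 15:31:37, 15:31:47 and 15:31:57, each of which a worker would execute as `add(1, 12)`.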

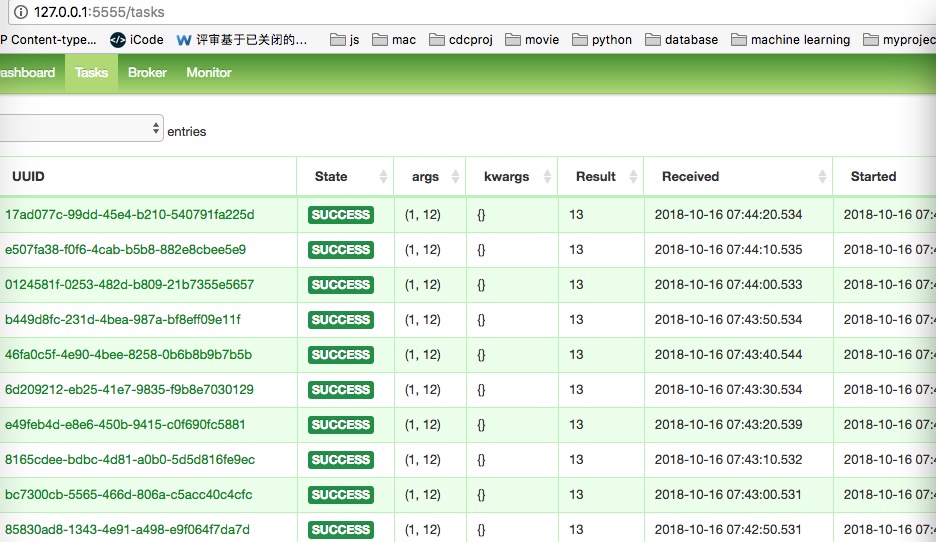
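The schedule used in this example is a fixed interval. For tasks that must run at a specific wall-clock time, the `crontab` class imported in celery_config.py can be used instead. The fragment below is a sketch of such an entry; the entry name `add-every-morning` and the 08:30 time are made up for illustration.

```python
from celery.schedules import crontab

CELERYBEAT_SCHEDULE = {
    # Hypothetical entry: run tasks.add(1, 12) every day at 08:30
    'add-every-morning': {
        'task': 'tasks.add',
        'schedule': crontab(hour=8, minute=30),
        'args': (1, 12),
    },
}
```

The time is interpreted in the timezone Celery is configured with, which is UTC unless configured otherwise.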

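IV. Celery Flower

Flower is a web-based monitoring tool for Celery. It can:

(1). Show task history, task arguments, start times, and other details.

(2). Provide charts and statistics.

(3). Offer full remote control, including but not limited to revoking/terminating tasks, shutting down and restarting workers, and viewing running tasks.

(4). Provide an HTTP API for easy integration.

In a terminal, run: celery flower --broker=redis://localhost:6379/6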

    [I 181016 15:41:13 command:139] Visit me at http://localhost:5555
    [I 181016 15:41:13 command:144] Broker: redis://localhost:6379/6
    [I 181016 15:41:13 command:147] Registered tasks: [u'celery.accumulate', u'celery.backend_cleanup', u'celery.chain', u'celery.chord', u'celery.chord_unlock', u'celery.chunks', u'celery.group', u'celery.map', u'celery.starmap']
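As a rough illustration of the HTTP API, the sketch below reads the task list from Flower's `/api/tasks` endpoint and counts tasks by state. It assumes Flower is reachable at the address printed above and that the response is a JSON object keyed by task id with a `state` field; depending on the Flower version, the API may also require authentication to be configured. `fetch_tasks`, `count_states`, and the sample payload are made up for this example.

```python
import json
from collections import Counter
from urllib.request import urlopen


def fetch_tasks(base_url="http://localhost:5555"):
    """Fetch the task list from a running Flower instance."""
    with urlopen(base_url + "/api/tasks") as response:
        return json.loads(response.read().decode("utf-8"))


def count_states(tasks):
    """Count tasks by their reported state."""
    return Counter(info.get("state") for info in tasks.values())


# Hand-written sample in the assumed response shape (not real Flower output)
sample = {
    "id-1": {"name": "tasks.add", "state": "SUCCESS"},
    "id-2": {"name": "tasks.add", "state": "SUCCESS"},
    "id-3": {"name": "tasks.sub", "state": "FAILURE"},
}
print(count_states(sample))
```

With Flower running, `count_states(fetch_tasks())` would give the same kind of summary for live tasks.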