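CKA 2024 Practice Questions

Start by watching this course on Bilibili:

https://www.bilibili.com/video/BV1pw411F7cz/

The questions below were compiled from the video.

!!! If you spot any mistakes, corrections are welcome !!!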

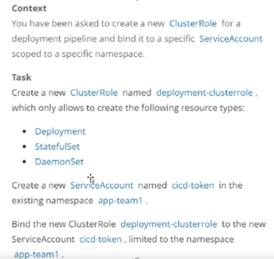

1. Role-Based Access Control: RBAC (4/25%)

Reference:

https://kubernetes.io/zh-cn/docs/reference/access-authn-authz/rbac/

kubectl create clusterrole deployment-clusterrole --verb=create --resource=deployments,statefulsets,daemonsets

kubectl create serviceaccount cicd-token -n app-team1

kubectl create rolebinding cicd-token-binding --clusterrole=deployment-clusterrole --serviceaccount=app-team1:cicd-token -n app-team1

Verify:

$ kubectl describe clusterrole deployment-clusterrole

$ kubectl describe rolebinding cicd-token-binding -n app-team1

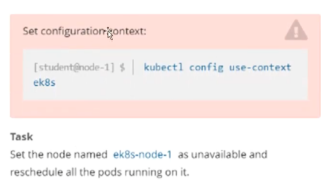
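Another way to check the binding is `kubectl auth can-i` with impersonation. The sketch below is an illustration and not part of the original notes: the helper names `sa_user` and `can_sa` are hypothetical, and it assumes the ServiceAccount is `cicd-token` in namespace `app-team1`, as in the commands above.

```shell
#!/bin/sh
# Hypothetical helper: build the user name Kubernetes assigns to a
# ServiceAccount, in the form system:serviceaccount:<namespace>:<name>.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical helper: ask the API server whether the ServiceAccount may
# perform a verb on a resource inside its own namespace.
# Usage: can_sa <namespace> <serviceaccount> <verb> <resource>
can_sa() {
  kubectl auth can-i "$3" "$4" --as="$(sa_user "$1" "$2")" -n "$1"
}

# Print the impersonation string used for the check (no cluster needed).
sa_user app-team1 cicd-token
```

Against the exam cluster, `can_sa app-team1 cicd-token create deployments` should print `yes`, and `can_sa app-team1 cicd-token delete deployments` should print `no`, provided no other role grants that ServiceAccount extra permissions.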

2. Node Maintenance: Make a Node Unavailable (4/25%)

Reference:

https://kubernetes.io/zh-cn/docs/concepts/architecture/nodes/

https://kubernetes.io/zh-cn/docs/tasks/administer-cluster/safely-drain-node/

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands#drain

#Switch cluster

kubectl config use-context ek8s

#Mark the node as unschedulable

kubectl cordon ek8s-node-1

#Drain the node (evict all pods)

kubectl drain ek8s-node-1 --delete-emptydir-data --ignore-daemonsets --force

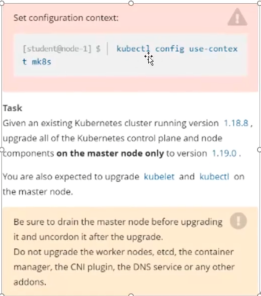

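3. Kubernetes Version Upgrade (7/25%)

Reference:

https://kubernetes.io/zh-cn/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

Upgrade only the master node, from 1.18.8 to 1.19.0. kubelet and kubectl on the master must be upgraded as well. Drain the master node before upgrading. Do not upgrade the worker nodes, etcd, the container manager, CNI, DNS, and so on.

#Switch cluster

kubectl config use-context mk8s

#List the nodes to find the control-plane node

kubectl get nodes

#Mark the node as unschedulable

kubectl cordon k8s-master

#Drain the node (evict all pods)

kubectl drain k8s-master --delete-emptydir-data --ignore-daemonsets --force

#Log in to the master node

ssh k8s-master

apt update

apt-cache madison kubeadm

# Replace x in 1.29.x-* with the latest patch version (these commands follow the 1.29 documentation; use the version the task asks for)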

sudo apt-mark unhold kubeadm && \

sudo apt-get update && sudo apt-get install -y kubeadm='1.29.x-*' && \

sudo apt-mark hold kubeadm

kubeadm version

#Verify the upgrade plan

sudo kubeadm upgrade plan

#Apply the upgrade to the target version without upgrading etcd

sudo kubeadm upgrade apply v1.29.x --etcd-upgrade=false

# Upgrade kubelet and kubectl

# Replace x in 1.29.x-* with the latest patch version

sudo apt-mark unhold kubelet kubectl && \

sudo apt-get update && sudo apt-get install -y kubelet='1.29.x-*' kubectl='1.29.x-*' && \

sudo apt-mark hold kubelet kubectl

systemctl daemon-reload

systemctl restart kubelet

kubectl uncordon k8s-master

#Check whether the node was upgraded successfully

kubectl get nodes

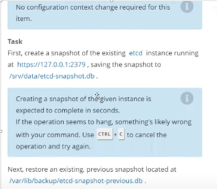

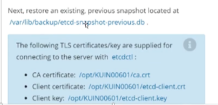

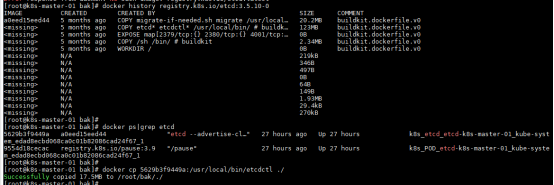
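To confirm that only the master changed version, the VERSION column of `kubectl get nodes` can be read per node. This is a minimal sketch, assuming the default column layout (NAME STATUS ROLES AGE VERSION). The helper name `node_version`, the worker node name, and the sample output are made up for illustration; the versions are the 1.18.8 and 1.19.0 mentioned in the task description.

```shell
#!/bin/sh
# Hypothetical helper: print the VERSION column for one node.
# Input: output of `kubectl get nodes` on stdin.
node_version() {
  awk -v node="$1" 'NR > 1 && $1 == node { print $5 }'
}

# Made-up sample output, for illustration only.
sample='NAME         STATUS   ROLES    AGE   VERSION
k8s-master   Ready    master   90d   v1.19.0
k8s-node-0   Ready    <none>   90d   v1.18.8'

printf '%s\n' "$sample" | node_version k8s-master
printf '%s\n' "$sample" | node_version k8s-node-0
```

On a real cluster the same helper would be used as `kubectl get nodes | node_version k8s-master`.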

4. etcd Backup and Restore (7/25%)

Reference: https://kubernetes.io/zh-cn/docs/tasks/administer-cluster/configure-upgrade-etcd/

Backup:

Preparation:

vim .bashrc

alias etcdctl='etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key'

source .bashrc

export ETCDCTL_API=3

etcdctl snapshot save /root/bak/etcd-snapshot.db

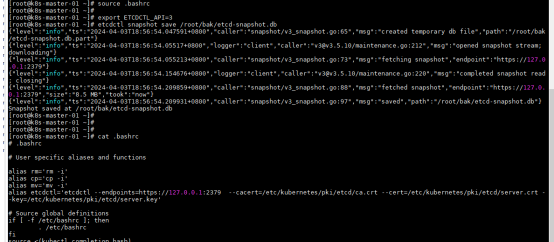

Restore:

#Stop kube-apiserver and etcd by moving the static pod manifests away

$ mv /etc/kubernetes/manifests /etc/kubernetes/manifests.bak

#Back up the existing etcd data directory

$ mv /var/lib/etcd /var/lib/etcd.bak

#Restore the snapshot:

export ETCDCTL_API=3

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=<trusted-ca-file> --cert=<cert-file> --key=<key-file> --data-dir /var/lib/etcd snapshot restore /var/lib/backup/etcd-snapshot-previous.db

#Start kube-apiserver and etcd again

$ mv /etc/kubernetes/manifests.bak /etc/kubernetes/manifests

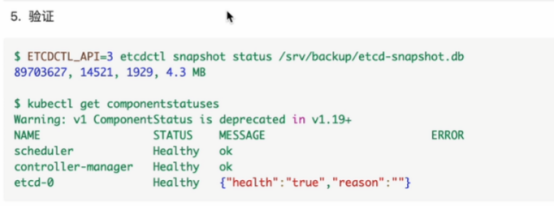

#Verify:

$ ETCDCTL_API=3 etcdctl snapshot status /var/lib/backup/etcd-snapshot-previous.db

$ kubectl get componentstatuses

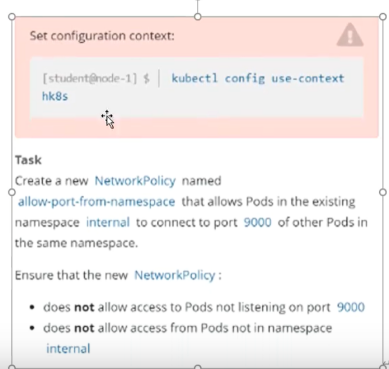

5. Network Policy: NetworkPolicy (7/20%)

Reference:

https://kubernetes.io/zh-cn/docs/concepts/services-networking/network-policies/

1. The network policy is named all-port-from-namespace.

2. Allow pods in the internal namespace to access port 9000.

3. Do not allow access to pods on ports other than 9000.

4. Do not allow access from pods outside the internal namespace.

kubectl config use-context hk8s

$ kubectl get namespaces internal --show-labels

'kubernetes.io/metadata.name=internal'

vim xxx.yaml

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: all-port-from-namespace
      namespace: internal
    spec:
      podSelector: {}
      policyTypes:
      - Ingress
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: internal
        ports:
        - protocol: TCP
          port: 9000

kubectl apply -f xxx.yaml

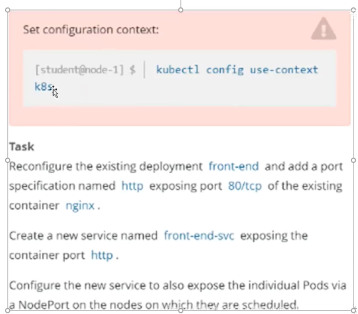

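6. Layer 4 Load Balancing: Service (7/20%)

Reference:

https://kubernetes.io/zh-cn/docs/concepts/services-networking/service/

#--target-port=http refers to a container port named http, so the front-end Deployment must declare a port with that name

kubectl expose deploy front-end --name=front-end-svc --port=80 --target-port=http --type=NodePort

Verify:

kubectl get services front-end-svc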

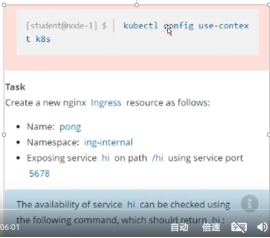

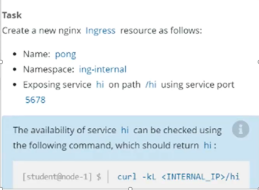

7. Layer 7 Load Balancing: Ingress (7/20%)

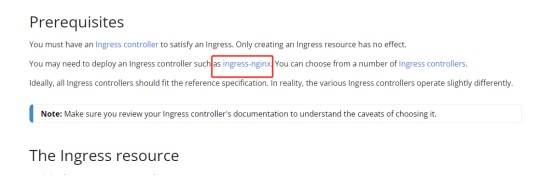

Reference:

https://kubernetes.io/zh-cn/docs/concepts/services-networking/ingress/

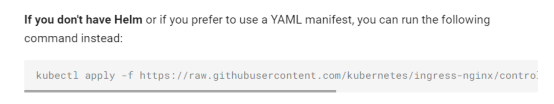

Extra: installing the ingress controller

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.10.0/deploy/static/provider/cloud/deploy.yaml

This does not seem to be required during the exam.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: pong
      namespace: ing-internal
    spec:
      rules:
      - http:
          paths:
          - pathType: Prefix
            path: "/hi"
            backend:
              service:
                name: hi
                port:
                  number: 5678

kubectl apply -f xxx.yaml

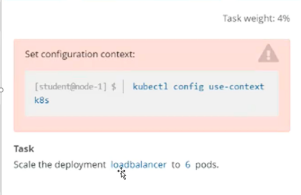

8. Scaling Pods with a Deployment (4/15%)

Method 1:

kubectl edit deployment loadbalancer

replicas: 6

Method 2:

kubectl scale --replicas=6 deployment loadbalancer

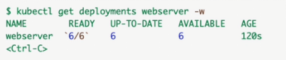

Verify:

$ kubectl get deployment loadbalancer -w

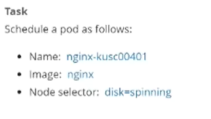

9. Schedule a Pod onto a Specific Node (4/15%)

Reference:

https://kubernetes.io/zh-cn/docs/tasks/configure-pod-container/assign-pods-nodes/

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-kusc00401
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        disk: spinning

kubectl apply -f xxx.yaml

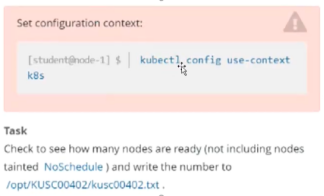

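10. Check Node Health (4/15%)

kubectl config use-context k8s

A=$(kubectl get nodes | grep -cw Ready) #Count the nodes in the Ready state (-w keeps NotReady from matching)

B=$(kubectl describe nodes | grep Taint | grep -c NoSchedule) #Count the nodes that carry a NoSchedule taint

x=$((A - B))

$ echo "$x" >> /opt/KUSC00402/kusc00402.txt #Write the resulting number to the file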

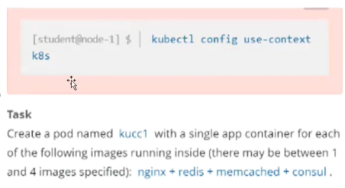
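The A minus B count can be tried offline on sample text before running it in the exam. This is a minimal sketch, not the notes' original commands: the helper names `count_ready` and `count_noschedule` and the sample output are made up, and it assumes every node is Ready and that a NoSchedule taint appears on the first `Taints:` line of `kubectl describe nodes`.

```shell
#!/bin/sh
# Count nodes whose STATUS column is exactly "Ready".
# Input: output of `kubectl get nodes` on stdin.
count_ready() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Count nodes whose Taints line mentions NoSchedule.
# Input: output of `kubectl describe nodes` on stdin.
count_noschedule() {
  grep '^Taints:' | grep -c 'NoSchedule'
}

# Made-up sample output, for illustration only.
nodes='NAME         STATUS   ROLES           AGE   VERSION
k8s-master   Ready    control-plane   30d   v1.29.0
k8s-node-0   Ready    <none>          30d   v1.29.0
k8s-node-1   Ready    <none>          30d   v1.29.0'

taints='Taints:             node-role.kubernetes.io/control-plane:NoSchedule
Taints:             <none>
Taints:             <none>'

a=$(printf '%s\n' "$nodes" | count_ready)
b=$(printf '%s\n' "$taints" | count_noschedule)
echo $((a - b))
```

With the sample data this prints 2. Note that the subtraction is only valid when the tainted nodes are themselves Ready; a NotReady node that also carries a NoSchedule taint would be subtracted without ever having been counted.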

11. Multiple Containers in One Pod (4/15%)

Reference:

https://kubernetes.io/zh-cn/docs/tasks/configure-pod-container/assign-pods-nodes/

kubectl config use-context

    apiVersion: v1
    kind: Pod
    metadata:
      name: kuccl
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      - name: redis
        image: redis
        imagePullPolicy: IfNotPresent
      - name: memcached
        image: memcached
        imagePullPolicy: IfNotPresent
      - name: consul
        image: consul
        imagePullPolicy: IfNotPresent

kubectl apply -f xxx.yaml

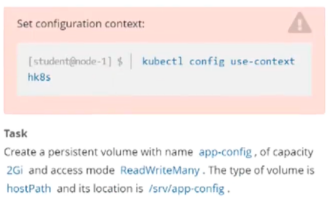

12. Persistent Storage: PersistentVolume (4/10%)

Reference:

https://kubernetes.io/zh-cn/docs/tasks/configure-pod-container/configure-persistent-volume-storage/

kubectl config use-context hk8s

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: app-config
    spec:
      storageClassName: manual
      capacity:
        storage: 2Gi
      accessModes:
      - ReadWriteMany
      hostPath:
        path: "/srv/app-config"

kubectl apply -f xxx.yaml

kubectl get pv

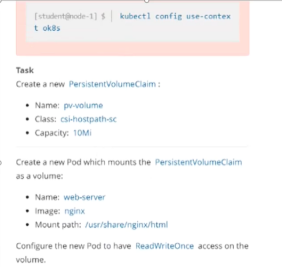

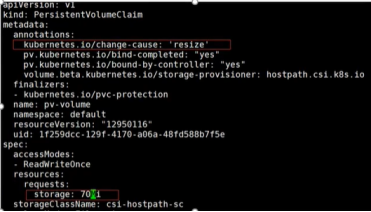

13. PersistentVolumeClaims (7/10%)

Reference:

https://kubernetes.io/zh-cn/docs/tasks/configure-pod-container/configure-persistent-volume-storage/

kubectl config use-context ok8s

vim pv-claim.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pv-volume
    spec:
      storageClassName: csi-hostpath-sc
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 10Mi

kubectl apply -f pv-claim.yaml

Verify:

kubectl get pvc pv-volume

vim pvc-pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: web-server
    spec:
      volumes:
      - name: pv-volume-storage
        persistentVolumeClaim:
          claimName: pv-volume
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: pv-volume-storage

kubectl apply -f pvc-pod.yaml

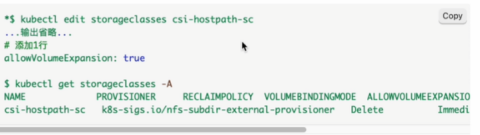

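Allow expansion:

https://kubernetes.io/docs/concepts/storage/storage-classes/

#Make sure the StorageClass has allowVolumeExpansion: true

kubectl edit storageclasses csi-hostpath-sc

kubectl get storageclasses -A

Method 1:

kubectl edit pvc pv-volume --record

Change the storage values highlighted by the two red boxes in the screenshot.

Method 2 (preferred):

kubectl patch pvc pv-volume -p '{"spec":{"resources":{"requests":{"storage":"70Mi"}}}}' --record

Verify:

kubectl get pvc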

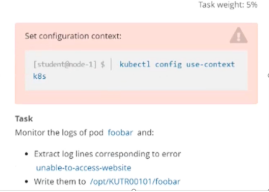

14. Collect Pod Logs (5/30%)

kubectl config use-context k8s

kubectl logs foobar | grep unable-to-access-website > /opt/KUTR00101/foobar

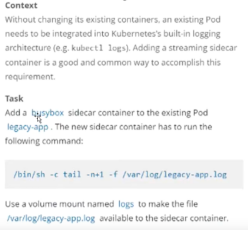
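The filter step can be checked on sample text before its output is redirected into the answer file. This is a minimal sketch with made-up log lines standing in for the output of `kubectl logs foobar`; only the search string comes from the task.

```shell
#!/bin/sh
# Made-up log lines standing in for `kubectl logs foobar`.
sample_logs='2024-01-01 info starting
2024-01-01 error unable-to-access-website
2024-01-01 info retrying
2024-01-02 error unable-to-access-website'

# Keep only the matching lines, as the task requires.
matches=$(printf '%s\n' "$sample_logs" | grep 'unable-to-access-website')
printf '%s\n' "$matches"

# grep -c prints the number of matching lines instead of the lines.
count=$(printf '%s\n' "$sample_logs" | grep -c 'unable-to-access-website')
echo "$count"
```

After the real command, comparing `wc -l /opt/KUTR00101/foobar` with `kubectl logs foobar | grep -c unable-to-access-website` is a quick way to confirm the file holds every matching line.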

15. Sidecar Proxy (7/30%)

Reference:

https://kubernetes.io/zh-cn/docs/concepts/cluster-administration/logging/

kubectl get pods legacy-app -o yaml > legacy-app-sidecar.yaml

Add a container without modifying the existing one:

      - name: busybox-add
        image: busybox
        args: [/bin/sh, -c, 'tail -n+1 -F /var/log/legacy-app.log']
        volumeMounts:
        - name: logs
          mountPath: /var/log
      volumes:
      - name: logs
        emptyDir: {}

#Containers cannot be added to a running pod, so delete it before applying the edited manifest

kubectl delete pod legacy-app

kubectl apply -f legacy-app-sidecar.yaml

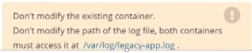

16. Monitor Pod Metrics (5/30%)

kubectl config use-context k8s

kubectl top pod -A -l name=cpu-user

Compare the pods, find the one with the highest CPU usage, and write its NAME to the required file.

echo "xxxxxx" > /opt/KUTR00401/KUTR00401.txt

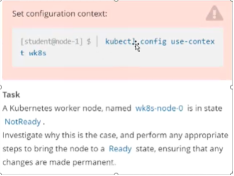
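Picking the pod with the highest CPU value can also be scripted instead of compared by eye. This is a minimal sketch: the helper name `top_cpu_pod` and the sample output are made up, and it assumes the `-A` column layout (NAMESPACE NAME CPU(cores) MEMORY(bytes)) with all CPU values given in millicores such as `45m`.

```shell
#!/bin/sh
# Hypothetical helper: print the NAME of the pod with the highest CPU value.
# Input: output of `kubectl top pod -A ...` on stdin.
top_cpu_pod() {
  awk 'NR > 1 {
         cpu = $3
         sub(/m$/, "", cpu)    # strip the millicore suffix
         if (cpu + 0 > max) { max = cpu + 0; name = $2 }
       }
       END { print name }'
}

# Made-up sample output, for illustration only.
sample='NAMESPACE   NAME         CPU(cores)   MEMORY(bytes)
default     cpu-user-a   12m          20Mi
default     cpu-user-b   45m          18Mi
kube-apps   cpu-user-c   7m           25Mi'

printf '%s\n' "$sample" | top_cpu_pod
```

With the sample data this prints `cpu-user-b`. On a real cluster the pipeline would be `kubectl top pod -A -l name=cpu-user | top_cpu_pod`; `kubectl top pod` also accepts `--sort-by=cpu`, which puts the highest consumer on the first row.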

17. Cluster Troubleshooting: kubelet Failure (13/30%)

$ kubectl config use-context wk8s

$ kubectl get nodes -o wide #Find the IP address of wk8s-node-0

$ ssh {node IP}

$ sudo -i

$ systemctl status kubelet

$ systemctl restart kubelet

$ systemctl enable kubelet
