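1. Scraping photos of women from the site's "Daily Updates" section with Python 3

This code is an optimized version of code that other people have posted online.

Environment setup: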

pip install lxml

pip install bs4

pip install requests

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-

    import os
    import random

    import requests
    from bs4 import BeautifulSoup


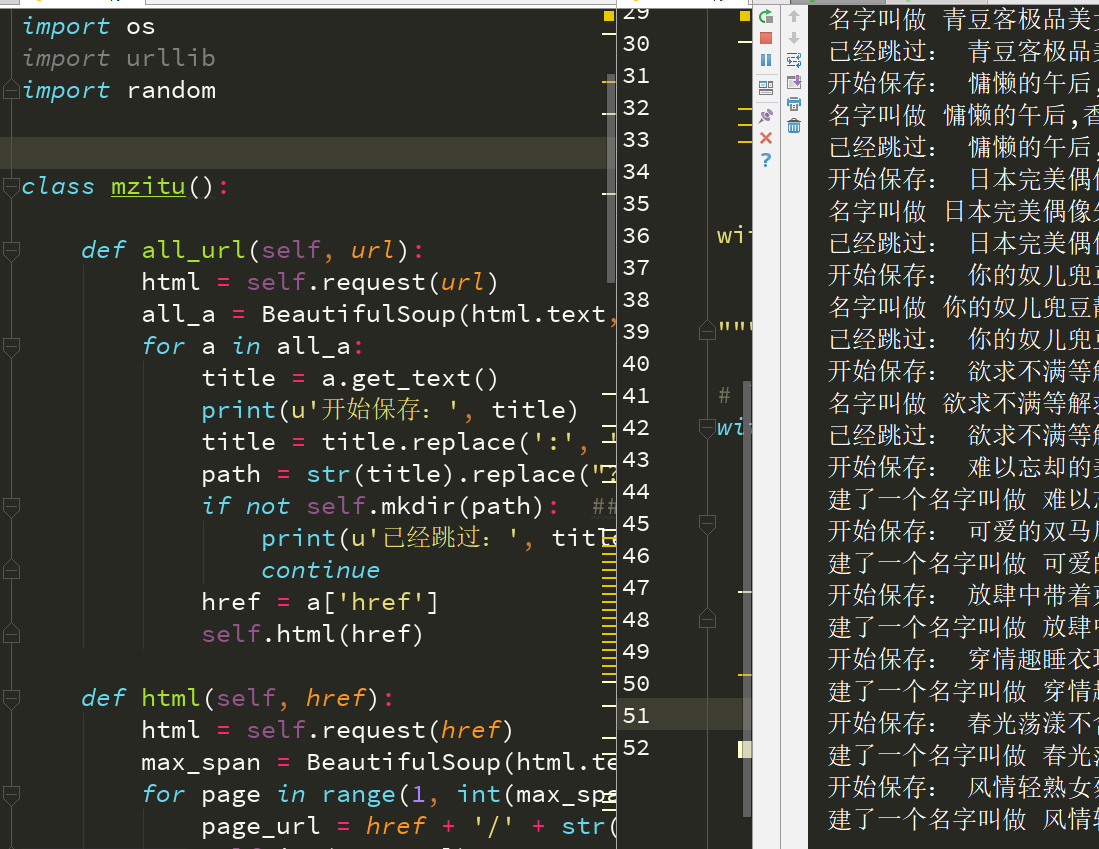

    class mzitu():

        def all_url(self, url):
            # Fetch the index page and walk through every gallery link on it
            html = self.request(url)
            all_a = BeautifulSoup(html.text, 'lxml').find('div', class_='all').find_all('a')
            for a in all_a:
                title = a.get_text()
                print('Saving:', title)
                title = title.replace(':', '')
                path = str(title).replace("?", '_')
                if not self.mkdir(path):  # skip folders that already exist
                    print('Skipped:', title)
                    continue
                href = a['href']
                self.html(href)

        def html(self, href):
            # Read the page count from the pagination bar, then visit each page
            html = self.request(href)
            max_span = BeautifulSoup(html.text, 'lxml').find('div', class_='pagenavi').find_all('span')[-2].get_text()
            for page in range(1, int(max_span) + 1):
                page_url = href + '/' + str(page)
                self.img(page_url)

        def img(self, page_url):
            # Extract the image URL from a single gallery page
            img_html = self.request(page_url)
            img_url = BeautifulSoup(img_html.text, 'lxml').find('div', class_='main-image').find('img')['src']
            self.save(img_url, page_url)

        def save(self, img_url, page_url):
            # Use the five characters before the extension as the file name
            name = img_url[-9:-4]
            try:
                img = self.requestpic(img_url, page_url)
                with open(name + '.jpg', 'ab') as f:
                    f.write(img.content)
            except FileNotFoundError:  # catch the exception and carry on
                print('Image not found, skipped:', img_url)
                return False

        def mkdir(self, path):  # create the folder for one gallery
            path = path.strip().replace(':', '')
            full_path = os.path.join(r"D:\mzitu", path)
            if not os.path.exists(full_path):
                print('Created a folder named', path)
                os.makedirs(full_path)
                os.chdir(full_path)  # switch into the new folder
                return True
            else:
                print('A folder named', path, 'already exists')
                return False


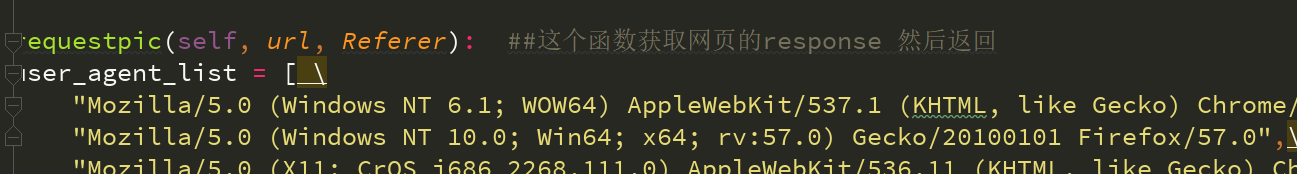

        def requestpic(self, url, Referer):  # fetch an image and return the response
            user_agent_list = [
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
                "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:57.0) Gecko/20100101 Firefox/57.0",
                "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
                "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
                "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
                "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
                "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
                "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
            ]
            ua = random.choice(user_agent_list)
            # Compared with earlier versions, the Referer is the key parameter for fetching images
            headers = {'User-Agent': ua, "Referer": Referer}
            content = requests.get(url, headers=headers)
            return content

        def request(self, url):  # fetch a page and return the response
            headers = {
                'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1"}
            content = requests.get(url, headers=headers)
            return content


    Mzitu = mzitu()  # instantiate the crawler
    Mzitu.all_url('http://www.mzitu.com/all/')  # entry point: pass the start URL to all_url to launch the crawler
    print('Download complete!')
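The script strips `:` and replaces `?` in a gallery title before using it as a folder name. Windows rejects several other characters in file names as well, so a more general helper might look like the sketch below. The name `safe_folder_name` is hypothetical and is not part of the original script.

```python
import re

# Characters that Windows does not allow in file or folder names.
_INVALID_CHARS = re.compile(r'[\\/:*?"<>|]')


def safe_folder_name(title, replacement='_'):
    """Return `title` with characters that are invalid on Windows replaced."""
    cleaned = _INVALID_CHARS.sub(replacement, title).strip()
    # Windows also rejects names that end in a dot or a space.
    return cleaned.rstrip('. ')


print(safe_folder_name('What? A: title'))
```

In `all_url`, the two `replace` calls could then be swapped for a single `path = safe_folder_name(title)`.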

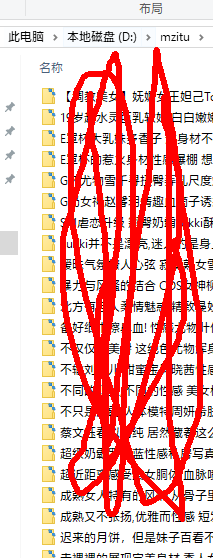

Running the script:

If you run the code again, galleries that have already been saved are not downloaded a second time.
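The script decides what to skip at the folder level: `mkdir` returns `False` when a gallery folder already exists, so an interrupted gallery is never completed on a later run. A finer-grained variant, sketched here as an assumption rather than as what the original code does, checks each image file instead. `save_if_missing` and its `fetch` parameter are hypothetical names.

```python
import os


def save_if_missing(path, fetch):
    """Write the bytes returned by fetch() to `path` unless the file exists.

    Returns True if the file was written and False if it was skipped.
    """
    if os.path.exists(path) and os.path.getsize(path) > 0:
        return False
    data = fetch()  # only called when a download is actually needed
    with open(path, 'wb') as f:
        f.write(data)
    return True
```

In the script, `fetch` could be something like `lambda: self.requestpic(img_url, page_url).content`.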

Result:

Errors encountered and how to fix them:

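1. Error message: requests.exceptions.ChunkedEncodingError: ("Connection broken: ConnectionResetError(10054, '远程主机强迫关闭了一个现有的连接。', None, 10054, None)", ConnectionResetError(10054, '远程主机强迫关闭了一个现有的连接。', None, 10054, None)) (the Windows message reads "An existing connection was forcibly closed by the remote host")

Cause: too many requests were sent in a short burst, and the site's anti-scraping mechanism blocked them.

Fix: wait a while and run the script again.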
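Waiting and retrying can also be automated. The sketch below retries a call with an increasing delay between attempts. It is an illustration under assumptions: the helper name `retry` and its parameters are hypothetical, and the delays are arbitrary starting values, not figures tuned for this site.

```python
import time


def retry(func, attempts=3, base_delay=2.0, exceptions=(Exception,), sleep=time.sleep):
    """Call func() until it succeeds, waiting longer after each failure."""
    for attempt in range(attempts):
        try:
            return func()
        except exceptions:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the error
            # Delay doubles each time: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * (2 ** attempt))
```

With the script above, a request could be wrapped as `retry(lambda: requests.get(url, headers=headers), exceptions=(requests.exceptions.RequestException,))`; `ChunkedEncodingError` is a subclass of `RequestException`.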

2. Error message: requests.exceptions.ChunkedEncodingError: ("Connection broken: ConnectionResetError(10054, '远程主机强迫关闭了一个现有的连接。', None, 10054, None)", ConnectionResetError(10054, '远程主机强迫关闭了一个现有的连接。', None, 10054, None))

Cause: the server may have anti-scraping measures in place.

Fix: add request headers manually when calling requests.
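As a minimal sketch of that fix, the helper below builds a headers dictionary with a randomly chosen `User-Agent` and an optional `Referer`, mirroring what `requestpic` does in the script. The name `build_headers` and the shortened `USER_AGENTS` list are assumptions made for illustration.

```python
import random

# Two example User-Agent strings copied from the script; any browser string would do.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:57.0) Gecko/20100101 Firefox/57.0",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
]


def build_headers(referer=None, user_agents=USER_AGENTS):
    """Return request headers with a random User-Agent and an optional Referer."""
    headers = {'User-Agent': random.choice(user_agents)}
    if referer:
        # Some sites refuse image requests that lack a matching Referer.
        headers['Referer'] = referer
    return headers
```

The result is passed to requests through the `headers` argument, for example `requests.get(img_url, headers=build_headers(page_url))`.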
