Principles of Distributed Systems

Distributed Systems

A distributed system is a computer system with multiple sequential processes that communicate and interact with each other, and that appears to users as a single coherent system.

Characteristics

-No shared memory

-Physically separate

-Network connected

-Independent clocks (Synchronous / Asynchronous)

-Message passing

-Different architectures

-Heterogeneous

Why Distribute?

• Resource sharing

– Storage

– Computational power

– System objects and services

• Scalability

– More resources can be easily and transparently added to the system

– Geographically

• Accessibility

– User access from anywhere on the network

– System components and services can be discovered and accessed

• Fault tolerance

– Able to progress despite component failures

– Recover when failures are repaired

Issues

• Difficult to debug

– Event ordering (no global clock)

– Hard to obtain global picture of execution

• Communication problems

– Uncertain delays

• No central administration

– Detection of failures

• Security

Service Oriented Architecture

• Services are well-defined and self-contained functions that do not depend on the state of other services

• SOA is a collection of services that communicate with each other through some well-defined protocols

• They are most commonly connected by web service

• Components of this type of distributed system are said to follow a loosely coupled interaction model

Examples of Distributed Systems

• Cloud computing

– Infrastructure as a service (IaaS)

– Platform as a service (PaaS)

– Software as a service (SaaS)

• Distributed information systems

– Transaction processing

• Ubiquitous computing

– Mobile ad hoc networks (MANETs)

– Wireless sensor networks (WSNs)

• Hadoop

– Map-Reduce

• Kafka

– Stream processing of big data

A distributed system is a computer system with multiple sequential processes that communicate and interact with each other to achieve a collective computational goal. The only form of communication in a distributed system is presumed to be by passing messages. Messages can be affected by delays, failures, and security attacks, so programming distributed systems requires more sophisticated tools than for traditional sequential software.

For instance:

• there is no global clock, as each processor’s clock cannot be exactly synchronized with the other clocks in the system,

• distributed systems are highly concurrent, with many different tasks running concurrently on the system while sharing system resources,

• distributed systems are often expected to continue to function even when part of the system fails.
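One well-known way to order events without a global clock is a logical clock in the style of Lamport. The notes do not describe this technique, so the sketch below is an illustrative assumption: the class name `LamportClock` and its methods are hypothetical, and each process simply keeps a counter that it advances on every event and pushes past any timestamp it receives.

```python
class LamportClock:
    """Logical clock: a counter that orders events without a global clock."""

    def __init__(self):
        self.time = 0

    def local_event(self):
        # Any internal event advances the local counter.
        self.time += 1
        return self.time

    def send(self):
        # Sending is an event; the returned timestamp travels with the message.
        self.time += 1
        return self.time

    def receive(self, message_time):
        # Jump past the sender's timestamp so the receive is ordered after the send.
        self.time = max(self.time, message_time) + 1
        return self.time


a, b = LamportClock(), LamportClock()
a.local_event()               # a is now at 1
stamp = a.send()              # a is now at 2; the message carries 2
b.local_event()               # b is now at 1
received = b.receive(stamp)   # b becomes max(1, 2) + 1 = 3
print(stamp, received)
```

The receive timestamp is always larger than the send timestamp, which gives an ordering consistent with message causality even though the two physical clocks are never compared.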

The Internet is a vast distributed system where over 200 million computers communicate using standardized internet protocols to pass messages. An organization’s own internal network (an intranet) is a distributed system which might allow particular resources to be shared within the organization, use its own custom protocols for communication, and provide access to the Internet via a firewall which filters the messages passing to and from the intranet.

Some distributed systems include embedded devices, requiring communication between processors with vastly different capabilities (termed ubiquitous or pervasive computing). Also, a distributed system might allow portable devices, such as laptop computers, tablets, cellular phones, and digital cameras to dynamically join the system to utilize and share resources such as internet access, databases or printers (termed mobile or nomadic computing).

A special case of a distributed system is a parallel computer, a computer in which multiple processors can utilize shared memory. Parallel computers are designed for high computational performance, whereas most other types of distributed systems are designed for sharing system resources, are typically more flexible and scalable, and the processors are often geographically separated with their own private memory.

There are many challenges posed by the construction of distributed systems:

• distributed systems are often created from heterogeneous components, with a variety of different computer and network hardware, operating systems, middleware and programming languages,

• the components from many vendors that comprise distributed systems often need to be made to conform to open standards with published key interfaces, so that the system can be extended and re-implemented in different ways,

• systems must maintain security, ensuring confidentiality of the messages, correct identification of users connected to the system, the integrity of the system under security attacks such as denial of service, and suitable handling of mobile code,

• they should be scalable, remaining effective as the system grows without bottlenecks developing that hinder performance,

• parts of systems might have wide variations in workload, such as those involved in multimedia applications, or parts could suffer from slow or intermittent connectivity,

• failures must be detected, reduced in severity, and handled appropriately to keep systems functioning when possible,

• many distributed systems are highly asynchronous: processes might take arbitrarily long to complete, it might be difficult to distinguish between a delay in message transmission and a dropped message, and there might be arbitrary drift rates in clocks, making ordering of events in the system difficult,

• system resources must be managed to allow concurrent use by multiple clients, handling conflicting updates,

• systems should be configured to appear transparent to application programs, enabling local and remote resources to be accessed in identical ways without knowledge of their actual physical locations.

The client-server architecture model considers a distributed system as consisting of client processes and server processes where clients send request messages to servers which then communicate back response messages.
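The request and response exchange can be sketched with a TCP socket on the local machine. This is a minimal illustration under stated assumptions: the helper names `serve_once` and `request` are hypothetical, the server handles a single client, and a real protocol would need message framing rather than a single `recv` call.

```python
import socket
import threading


def serve_once(server_sock):
    # Server process: accept one client, read its request, send back a response.
    conn, _ = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(b"response to " + data)


def request(port, payload):
    # Client process: connect, send a request message, wait for the response.
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.sendall(payload)
        return sock.recv(1024)


server_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server_sock.bind(("127.0.0.1", 0))   # port 0 lets the OS pick a free port
server_sock.listen(1)
port = server_sock.getsockname()[1]

worker = threading.Thread(target=serve_once, args=(server_sock,))
worker.start()
reply = request(port, b"hello")
worker.join()
server_sock.close()
print(reply)
```

Here the server runs in a thread only to keep the example in one file; in a distributed system the client and server would be separate processes, usually on different machines.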

For example, in the portion of the Internet known as the Web, a browser is considered to be a client that makes requests to web servers using the HTTP protocol.

An alternative architecture model is the peer-to-peer model, which decentralizes data and resources by having processes interact cooperatively to provide a uniform service. The nodes in a peer-to-peer system might differ in the resources that they contribute to the system, but they all have the same capabilities. The Napster file sharing system was an example of a peer-to-peer system that provided a means for users to exchange music files. Some variations on these two models include:

• partitioning or replicating data across several servers in a network to reduce the load on a single server or to improve fault tolerance in the case that a server fails,

• having proxy servers and clients which cache data for repeated operations to reduce network load,

• using mobile code such as applets to move some of the computational burden from the server to the client, or mobile agents such as worms that travel around the system to perform some task,

• including low cost thin client devices which are controlled by a more powerful compute server,

• networking fat client computers with a remote file server so that applications are downloaded and run locally on each computer but files are managed by the central file server,

• allowing spontaneous interoperation where mobile devices can conveniently discover services that are available in their immediate environment,

• using index or coordinating servers to help organize activity in a peer-to-peer network.
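The first variation above, partitioning and replicating data across servers, can be sketched with a simple hash-based placement. The scheme is an assumption chosen for illustration (the notes do not prescribe one), and the function name `servers_for` and the server names are hypothetical.

```python
import hashlib


def servers_for(key, servers, replicas=2):
    """Return the servers that hold `key`: a primary plus the following ones."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Partitioning: the hash of the key selects the primary server.
    start = int.from_bytes(digest[:8], "big") % len(servers)
    # Replication: copies also go to the next servers in the list.
    count = min(replicas, len(servers))
    return [servers[(start + i) % len(servers)] for i in range(count)]


servers = ["server-a", "server-b", "server-c"]
for key in ["alice", "bob", "carol"]:
    print(key, servers_for(key, servers))
```

Spreading keys by hash reduces the load on any single server, and keeping each key on more than one server means a read can still be answered if one of them fails.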

Network infrastructure such as transmission media (twisted copper wires, coaxial cables, fibre optic cables, wireless channels), hardware (such as routers, switches, bridges), and software (such as drivers and routing algorithms) has a great effect on a distributed system. It helps determine the performance of a distributed system, both in terms of the latency, which is the delay between when a message is first sent and when it starts to arrive at the destination, and the data transfer rate, which is the speed at which data is transmitted, measured in millions of bits per second (Mbps). The infrastructure also affects the scalability, reliability, and security of the system.
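As a rough sketch of how these two measures combine, the time to deliver a message can be estimated as the latency plus the message length divided by the data transfer rate. The function name `transfer_time` and the numbers used are illustrative assumptions, and the estimate ignores headers, congestion and retransmission.

```python
def transfer_time(message_bytes, latency_s, bandwidth_bps):
    """Estimate the seconds needed to deliver one message: latency + bits / rate."""
    return latency_s + (message_bytes * 8) / bandwidth_bps


# Illustrative numbers: a 1,000,000-byte message, 20 ms latency, 10 Mbps link.
t = transfer_time(1_000_000, 0.020, 10_000_000)
print(f"{t:.3f} s")   # 0.020 + 8,000,000 / 10,000,000 = 0.820 s
```

For small messages the latency term dominates, while for large messages the data transfer rate dominates.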

Networks are often categorized into one of several types depending on their range and infrastructure:

• personal area network which typically uses a wireless technology such as Bluetooth (IEEE 802.15.1) to connect together personal devices such as cellular phones, PDAs, and headsets with a range of about 10 m and bandwidth between 0.5-2 Mbps,

• local area network which uses a technology such as Ethernet (IEEE 802.3) to connect together computers via a single high speed communication medium such as wire with a range of about 2 km and bandwidth 10-100 Mbps, or instead a wireless technology such as WiFi (IEEE 802.11) with a range of 150 m-1.5 km and bandwidth 2-54 Mbps,

• metropolitan area network which uses high-bandwidth cables and one of several alternative technologies, such as ATM (Asynchronous Transfer Mode) having a range up to 50 km and bandwidth up to 150 Mbps,

• wide area network which carries messages at lower speeds but over large distances (worldwide) using routers that can cause latency of up to 500 ms, either using a technology such as IP routing with bandwidth 0.01-600 Mbps, or a wireless technology such as GSM with bandwidth 0.01-2 Mbps,

• internetwork such as the Internet which interconnects multiple networks to provide common data communication facilities.

When a message is passed along a network it is subdivided into packets of restricted length (the maximum transmission unit, or MTU), and header information is added to identify the source, destination, and sequence number of the packet. The length of packets being transmitted is restricted so that each computer can be ensured to have adequate buffer space available for incoming packets and so that long messages do not tie up communication channels. A sequence number is included with each packet so that the message can be reassembled in the correct order at the destination, and missing packets can be identified.
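The subdivision and reassembly described above can be sketched as follows. The packet layout (a dictionary with `src`, `dst`, `seq` and `payload` fields) and the function names are assumptions made for illustration, not a real protocol format.

```python
import random


def packetize(message, source, destination, mtu):
    """Split a message into packets carrying at most `mtu` payload bytes."""
    return [
        {"src": source, "dst": destination, "seq": seq, "payload": message[i:i + mtu]}
        for seq, i in enumerate(range(0, len(message), mtu))
    ]


def reassemble(packets, expected_count):
    """Rebuild the message; return (message, missing sequence numbers)."""
    by_seq = {p["seq"]: p["payload"] for p in packets}
    missing = [seq for seq in range(expected_count) if seq not in by_seq]
    if missing:
        return None, missing
    return b"".join(by_seq[seq] for seq in range(expected_count)), []


message = b"x" * 2500
packets = packetize(message, "A", "B", mtu=1000)   # 3 packets: 1000, 1000, 500 bytes
random.shuffle(packets)                            # packets may arrive out of order
rebuilt, missing = reassemble(packets, expected_count=3)
print(rebuilt == message, missing)
```

If a packet is lost, `reassemble` reports its sequence number instead of returning a message, which is the information a sender would need in order to retransmit it.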

In networks that do not have direct connections between every pair of processors, the packets get routed through the network from the source to the destination using algorithms such as the distance vector algorithm or the link-state algorithm, and the routing might take congestion points into consideration, avoiding routing packets via heavily loaded nodes (which could drop packets if incoming packets overflow their available buffers).
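A minimal sketch of the distance vector idea: each node repeatedly learns its neighbours' current cost estimates and keeps the cheapest route it has seen, recording the next hop. The topology, link costs and the function name `distance_vector` are illustrative assumptions, and the loop simulates the exchange centrally rather than sending real messages.

```python
def distance_vector(links):
    """links: {node: {neighbour: cost}}; returns {node: {dest: (cost, next_hop)}}."""
    nodes = list(links)
    # Each node starts out knowing only itself and its direct neighbours.
    table = {n: {n: (0, n)} for n in nodes}
    for n in nodes:
        for nb, cost in links[n].items():
            table[n][nb] = (cost, nb)
    changed = True
    while changed:
        changed = False
        for n in nodes:
            for nb, link_cost in links[n].items():
                # n reads nb's vector and keeps any cheaper route that goes via nb.
                for dest, (nb_cost, _) in list(table[nb].items()):
                    candidate = link_cost + nb_cost
                    if dest not in table[n] or candidate < table[n][dest][0]:
                        table[n][dest] = (candidate, nb)
                        changed = True
    return table


links = {
    "A": {"B": 1, "C": 5},
    "B": {"A": 1, "C": 2},
    "C": {"A": 5, "B": 2, "D": 1},
    "D": {"C": 1},
}
routes = distance_vector(links)
print(routes["A"]["D"])   # (4, 'B'): cost 1 + 2 + 1, first hop is B
```

Node A never needs to know the whole topology: it only stores, for each destination, the cost and the neighbour to forward to.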

Network software that handles the transmission of messages is arranged into layers, where each layer extends the properties of the layer below it. At the source each layer (except the topmost) accepts the data to be transmitted from the layer above it and translates the data into a format acceptable for the layer below, until it is in a form suitable for the particular transmission medium. At the destination the translation is reversed to reassemble the message that was sent from the source. Although there is a significant performance cost in using protocol layers, they provide substantial benefits: because each layer is simple and flexible, it is much simpler to develop new protocols layered on top of standardized protocols at lower levels without having to worry about the particular network infrastructure.

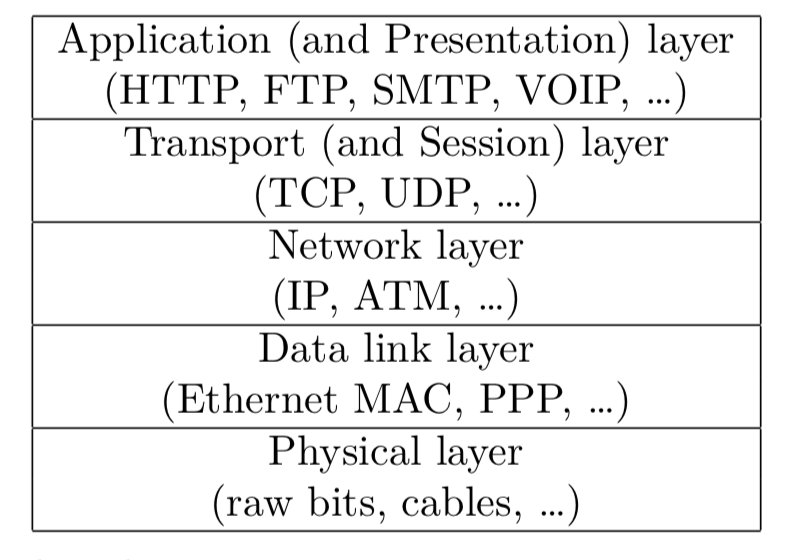
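The layering described above can be sketched as successive wrapping and unwrapping of a message. The four layer names and the bracketed text headers are simplifying assumptions for illustration; real protocols use binary headers and do more than add a label.

```python
LAYERS = ["application", "transport", "network", "data link"]


def send_down(data):
    """At the source, each layer wraps the data handed down from the layer above."""
    for layer in LAYERS:
        data = f"[{layer}]".encode() + data
    return data


def pass_up(frame):
    """At the destination, each layer strips its own header, lowest layer first."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]".encode()
        if not frame.startswith(header):
            raise ValueError(f"missing {layer} header")
        frame = frame[len(header):]
    return frame


frame = send_down(b"hello")
print(frame)            # b'[data link][network][transport][application]hello'
print(pass_up(frame))   # b'hello'
```

Each layer only inspects its own header, which is why a new protocol can be added at one level without changing the layers beneath it.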

The Open Systems Interconnection (OSI) reference model describes network communication in terms of seven layers:

-application which is chosen to meet the particular communication service requirements for an application program;

-presentation which handles the encryption and translation of the data into a format independent of the platform;

-session which handles failures such as dropped information and automatic recovery;

-transport which handles the transmission of messages between ports and subdivides them into packets;

-network which handles the routing of packets through a network;

-data link which handles the transmission of packets between nodes that are directly connected; and

-physical which consists of the circuits and hardware that actually transmit signals.

Many networks, in particular the Internet, combine the presentation layer as part of the application layer and the session layer as part of the transport layer. For example, HTTPS handles the application layer requirements for communication with web servers as well as encryption, and TCP handles the transport layer requirements for handling a connection between ports as well as the retransmission of dropped packets.

Exercise 1.1 (Transmission times) Suppose a client sends a 200-byte TCP request message across an Ethernet network to a server, which produces a response containing 5000 bytes after 2 ms of processing. The network has a latency per packet of 5 ms, a TCP connection set-up time of 5 ms, a bandwidth of 100 Mbps, and an MTU of 1000 bytes (ignoring headers). Assuming that the network is lightly loaded (so that few packets are dropped), estimate the total time to complete the communication.

Repeat the calculation for a Bluetooth connection that has latency 10ms and bandwidth 2Mbps.