1 Introduction

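We had a standalone Redis 5.x instance running in production. To get high availability and room to scale out later, we ruled out Redis master-replica replication and Redis Sentinel and decided to migrate the standalone instance to Redis Cluster.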

This procedure applies to Redis 5.x and 6.x.

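Migration options:

- Migrating with RDB/AOF files:
  - more steps, relatively complex;
  - low network requirements between the standalone instance and the Redis Cluster;
  - long downtime.
- Migrating with redis-shake:
  - relatively simple;
  - the standalone instance and the Redis Cluster must be able to reach each other over the network;
  - short downtime.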

2 Migrating with RDB/AOF

AOF is disabled by default in Redis. Enable it if you need it (AOF has some performance cost).
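If you decide to enable AOF on the standalone instance, it can be switched on at runtime without a restart. A minimal sketch, assuming redis-cli is on PATH and the password is exported in REDISCLI_AUTH: the hypothetical helper enable_aof runs CONFIG SET appendonly yes, persists the setting with CONFIG REWRITE, and polls INFO persistence until the initial AOF rewrite has finished; aof_ready only parses that INFO text.

```shell
#!/usr/bin/env bash
# Sketch: switch AOF on at runtime and wait until the first AOF file has been written.
# Assumes redis-cli is on PATH and REDISCLI_AUTH holds the password (hypothetical setup).

# aof_ready: read "INFO persistence" on stdin; succeed once AOF is on and no rewrite is pending.
aof_ready() {
  tr -d '\r' | awk -F: '
    $1 == "aof_enabled"             { enabled = $2 }
    $1 == "aof_rewrite_in_progress" { running = $2 }
    $1 == "aof_rewrite_scheduled"   { scheduled = $2 }
    END { exit !(enabled == 1 && running == 0 && scheduled == 0) }'
}

# enable_aof HOST PORT: CONFIG SET starts a background AOF rewrite; poll until it is done.
enable_aof() {
  local host=$1 port=$2
  redis-cli -h "$host" -p "$port" CONFIG SET appendonly yes >/dev/null || return 1
  # Persist the setting in redis.conf so a restart keeps AOF enabled.
  redis-cli -h "$host" -p "$port" CONFIG REWRITE >/dev/null ||
    echo "warning: CONFIG REWRITE failed, set appendonly yes in redis.conf by hand" >&2
  until redis-cli -h "$host" -p "$port" INFO persistence | aof_ready; do
    sleep 1
  done
}
```

Usage would be `enable_aof 127.0.0.1 6379` on the standalone host.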

2.1 Moving the hash slots

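Redis Cluster has 16384 hash slots in total, spread evenly over the master nodes. My Redis Cluster has 6 nodes: 3 masters and 3 replicas, each replica replicating one master. In this method all slots are first moved to one master, that master loads the standalone data, and the slots are then spread out again.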
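Moving every slot onto one master works because a key's slot depends only on its name: Redis Cluster computes HASH_SLOT = CRC16(key) mod 16384 using the CRC16-XMODEM variant, and if the key contains a non-empty {...} hash tag, only the tag is hashed. A master that owns all 16384 slots can therefore hold every key of the standalone instance. Below is a bash sketch of that mapping, limited to ASCII keys (an assumption of this sketch). On a live node, CLUSTER KEYSLOT <key> should return the same number.

```shell
#!/usr/bin/env bash
# Sketch: compute the Redis Cluster hash slot of a key (ASCII keys only).

# crc16_xmodem STRING: CRC16-CCITT, XMODEM variant (poly 0x1021, init 0x0000).
crc16_xmodem() {
  local s=$1 crc=0 i j byte
  for ((i = 0; i < ${#s}; i++)); do
    printf -v byte '%d' "'${s:i:1}"        # character -> byte value
    crc=$(( crc ^ (byte << 8) ))
    for ((j = 0; j < 8; j++)); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# key_hash_slot KEY: hash only the tag if the first '{' has a later '}' with something in between.
key_hash_slot() {
  local key=$1 rest tag
  if [[ $key == *'{'* ]]; then
    rest=${key#*\{}                      # text after the first '{'
    if [[ $rest == *'}'* ]]; then
      tag=${rest%%\}*}                   # text before the next '}'
      [[ -n $tag ]] && key=$tag
    fi
  fi
  echo $(( $(crc16_xmodem "$key") % 16384 ))
}
```

For example, `key_hash_slot foo` prints 12182, and `{user1000}.following` lands in the same slot as `user1000`.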

Check the Redis Cluster nodes and the hash slot distribution:

[redis]# redis-cli -p 6381 -a Passwd@123 --cluster check 127.0.0.1:6381

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6381 (0939488f...) -> 0 keys | 5461 slots | 1 slaves.

10.150.57.13:6383 (cab3f65e...) -> 0 keys | 5461 slots | 1 slaves.

10.150.57.13:6382 (3b02d1d4...) -> 0 keys | 5462 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:6381)

M: 0939488f471b96bca4feffff01a9f179e10041a5 127.0.0.1:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: cab3f65e28f8f42dd78e1bb2ea6bb417960db600 10.150.57.13:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 6c18fdfd69e67df4340437169738e8e00e67e4dd 10.150.57.13:6384

slots: (0 slots) slave

replicates 3b02d1d43f219759e76df2804d4ed160f602a7ea

S: bf2fe8ffbbb22772c600af19ce7ce15a89d26ba5 10.150.57.13:6385

slots: (0 slots) slave

replicates cab3f65e28f8f42dd78e1bb2ea6bb417960db600

S: d83e5f8dc57cb6c8fbc4ed2dd667835cbf6b7542 10.150.57.13:6386

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 3b02d1d43f219759e76df2804d4ed160f602a7ea 10.150.57.13:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
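The 5461/5462/5461 split above comes from dividing 16384 by 3 and rounding each range boundary. The sketch below reproduces that layout. Whether it matches redis-cli's rounding rule for every master count is an assumption, and even_slot_ranges is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: split the 16384 slots over N masters, rounding each range end to the nearest slot.

# even_slot_ranges N: print one "first-last count" line per master.
even_slot_ranges() {
  local n=$1 total=16384 i first=0 last
  for ((i = 0; i < n; i++)); do
    # last = round((i+1) * total / n - 1), in integer form: floor((2*(i+1)*total - n) / (2*n))
    last=$(( (2 * (i + 1) * total - n) / (2 * n) ))
    (( i == n - 1 )) && last=$(( total - 1 ))   # the final master always ends at slot 16383
    echo "$first-$last $(( last - first + 1 ))"
    first=$(( last + 1 ))
  done
}
```

For 3 masters it prints 0-5460 5461, 5461-10922 5462 and 10923-16383 5461, matching the ranges above. The rebalance in 2.3 ends with the same per-node slot counts, although the ranges land on different nodes.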

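Move the hash slots on 10.150.57.13:6382 (5462 slots) to 10.150.57.13:6381. Enter the source node's full slot count so that no slot is left behind: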

[redis]# redis-cli -p 6381 -a Passwd@123 --cluster reshard 10.150.57.13:6382

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing Cluster Check (using node 10.150.57.13:6383)

M: cab3f65e28f8f42dd78e1bb2ea6bb417960db600 10.150.57.13:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: d83e5f8dc57cb6c8fbc4ed2dd667835cbf6b7542 10.150.57.13:6386

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 3b02d1d43f219759e76df2804d4ed160f602a7ea 10.150.57.13:6382

slots: (0 slots) master

S: bf2fe8ffbbb22772c600af19ce7ce15a89d26ba5 10.150.57.13:6385

slots: (0 slots) slave

replicates cab3f65e28f8f42dd78e1bb2ea6bb417960db600

S: 6c18fdfd69e67df4340437169738e8e00e67e4dd 10.150.57.13:6384

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 0939488f471b96bca4feffff01a9f179e10041a5 10.150.57.13:6381

slots:[0-10922] (10923 slots) master

2 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 5462 # number of slots to move: all slots of 10.150.57.13:6382

What is the receiving node ID? 0939488f471b96bca4feffff01a9f179e10041a5 # ID of the receiving node

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 3b02d1d43f219759e76df2804d4ed160f602a7ea # ID of the source node

Source node #2: done

(Moving output omitted)

Do you want to proceed with the proposed reshard plan (yes/no)? yes # type "yes" to start the reshard

(Moving output omitted)
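The interactive session can also be run as a single command. A minimal sketch, assuming redis-cli 5.0 or later, whose --cluster reshard accepts --cluster-from, --cluster-to, --cluster-slots and --cluster-yes. It also assumes the password is exported in REDISCLI_AUTH, which recent redis-cli versions read and which avoids the "-a" warning seen in every transcript here. move_slots is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: a non-interactive equivalent of the interactive reshard session above.
# Assumes redis-cli 5.0+ and the cluster password exported as REDISCLI_AUTH.

# move_slots ENTRY_NODE FROM_ID TO_ID COUNT: move COUNT slots from master FROM_ID to master TO_ID.
move_slots() {
  local entry=$1 from=$2 to=$3 count=$4
  redis-cli --cluster reshard "$entry" \
    --cluster-from "$from" \
    --cluster-to "$to" \
    --cluster-slots "$count" \
    --cluster-yes            # accept the proposed reshard plan without prompting
}
```

For the move above, this would be `move_slots 10.150.57.13:6382 3b02d1d43f219759e76df2804d4ed160f602a7ea 0939488f471b96bca4feffff01a9f179e10041a5 5462`.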

Move the hash slots on 10.150.57.13:6383 (5461 slots) to 10.150.57.13:6381:

[redis]# redis-cli -p 6381 -a Passwd@123 --cluster reshard 10.150.57.13:6383

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing Cluster Check (using node 10.150.57.13:6383)

M: cab3f65e28f8f42dd78e1bb2ea6bb417960db600 10.150.57.13:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: d83e5f8dc57cb6c8fbc4ed2dd667835cbf6b7542 10.150.57.13:6386

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 3b02d1d43f219759e76df2804d4ed160f602a7ea 10.150.57.13:6382

slots: (0 slots) master

S: bf2fe8ffbbb22772c600af19ce7ce15a89d26ba5 10.150.57.13:6385

slots: (0 slots) slave

replicates cab3f65e28f8f42dd78e1bb2ea6bb417960db600

S: 6c18fdfd69e67df4340437169738e8e00e67e4dd 10.150.57.13:6384

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 0939488f471b96bca4feffff01a9f179e10041a5 10.150.57.13:6381

slots:[0-10922] (10923 slots) master

2 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 5461 # number of slots to move

What is the receiving node ID? 0939488f471b96bca4feffff01a9f179e10041a5 # ID of the receiving node

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: cab3f65e28f8f42dd78e1bb2ea6bb417960db600 # ID of the source node

Source node #2: done

(Moving output omitted)

Do you want to proceed with the proposed reshard plan (yes/no)? yes # type "yes" to start the reshard

(Moving output omitted)

Check the hash slot distribution:

[redis]# redis-cli -p 6381 -a Passwd@123 --cluster check 127.0.0.1:6383

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6383 (cab3f65e...) -> 0 keys | 0 slots | 0 slaves.

10.150.57.13:6382 (3b02d1d4...) -> 0 keys | 0 slots | 0 slaves.

10.150.57.13:6381 (0939488f...) -> 0 keys | 16384 slots | 3 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:6383)

M: cab3f65e28f8f42dd78e1bb2ea6bb417960db600 127.0.0.1:6383

slots: (0 slots) master

S: d83e5f8dc57cb6c8fbc4ed2dd667835cbf6b7542 10.150.57.13:6386

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 3b02d1d43f219759e76df2804d4ed160f602a7ea 10.150.57.13:6382

slots: (0 slots) master

S: bf2fe8ffbbb22772c600af19ce7ce15a89d26ba5 10.150.57.13:6385

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

S: 6c18fdfd69e67df4340437169738e8e00e67e4dd 10.150.57.13:6384

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 0939488f471b96bca4feffff01a9f179e10041a5 10.150.57.13:6381

slots:[0-16383] (16384 slots) master

3 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

All 16384 hash slots are now on node 10.150.57.13:6381.
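To confirm from a script that one master now owns every slot, you can count the slots per master in CLUSTER NODES output. A sketch: slots_per_master is a hypothetical helper. It skips bracketed [slot->-id] / [slot-<-id] entries, which mark slots in the middle of a migration.

```shell
#!/usr/bin/env bash
# Sketch: count how many slots each master owns, from `redis-cli cluster nodes` output on stdin.
# Fields: id ip:port@cport flags master ping pong epoch link-state slot... (slots start at field 9).

slots_per_master() {
  awk '$3 ~ /master/ {
    split($2, addr, "@")                 # "ip:port@cport" -> "ip:port"
    count = 0
    for (i = 9; i <= NF; i++) {
      if ($i ~ /^[0-9]+-[0-9]+$/) { split($i, r, "-"); count += r[2] - r[1] + 1 }
      else if ($i ~ /^[0-9]+$/)   { count += 1 }
      # bracketed migrating/importing markers are ignored
    }
    print addr[1], count
  }'
}
```

Usage: `redis-cli -h 10.150.57.13 -p 6381 cluster nodes | slots_per_master`. At this point it would be expected to print 16384 for 10.150.57.13:6381 and 0 for the other two masters.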

2.2 Transferring the RDB/AOF files

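Stop writes to the standalone instance, stop the Redis Cluster nodes, and copy the standalone instance's RDB snapshot and AOF file into the RDB/AOF directory (the dir setting) of node 10.150.57.13:6381, using the file names that node is configured with (dbfilename, appendfilename). Copy only after the nodes are down: a node that shuts down with save points configured writes its own RDB on exit and would overwrite the copied file.

Start the Redis Cluster again. Node 10.150.57.13:6381 loads the standalone instance's RDB/AOF at startup. If appendonly is yes on that node, Redis loads the AOF and ignores the RDB, so in that case the AOF must be copied.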
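Before copying, the standalone instance's data must be on disk and final. A sketch under these assumptions: writes to the source have already stopped, redis-cli is on PATH, and REDISCLI_AUTH holds the source password. The hypothetical snapshot_source triggers BGSAVE and waits for LASTSAVE to change, which is the completion check the BGSAVE documentation suggests. The hypothetical rdb_path reads dir and dbfilename to locate the file.

```shell
#!/usr/bin/env bash
# Sketch: take a final RDB snapshot on the standalone source and find the file to copy.
# Assumes writes to the source are stopped, redis-cli is on PATH, REDISCLI_AUTH is set.

# config_value HOST PORT PARAM: when piped, CONFIG GET prints the name and the value on two lines.
config_value() {
  redis-cli -h "$1" -p "$2" CONFIG GET "$3" | sed -n 2p
}

# rdb_path HOST PORT: <dir>/<dbfilename>, i.e. where the server writes its RDB file.
rdb_path() {
  printf '%s/%s\n' "$(config_value "$1" "$2" dir)" "$(config_value "$1" "$2" dbfilename)"
}

# snapshot_source HOST PORT: run BGSAVE and wait until LASTSAVE moves past its old value.
# LASTSAVE has one-second resolution, so avoid calling this twice within the same second.
snapshot_source() {
  local host=$1 port=$2 before reply
  before=$(redis-cli -h "$host" -p "$port" LASTSAVE)
  reply=$(redis-cli -h "$host" -p "$port" BGSAVE)
  [[ $reply == *"Background saving"* ]] || { echo "BGSAVE refused: $reply" >&2; return 1; }
  while [[ $(redis-cli -h "$host" -p "$port" LASTSAVE) == "$before" ]]; do
    sleep 1
  done
}
```

Typical use: run `snapshot_source 127.0.0.1 6379`, then copy the file printed by `rdb_path 127.0.0.1 6379` into 6381's directory while the cluster is stopped.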

2.3 Redistributing the hash slots

Check the hash slot distribution:

[redis]# redis-cli -p 6381 -a Passwd@123 --cluster check 127.0.0.1:6383

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6383 (cab3f65e...) -> 0 keys | 0 slots | 0 slaves.

10.150.57.13:6381 (0939488f...) -> 0 keys | 16384 slots | 3 slaves.

10.150.57.13:6382 (3b02d1d4...) -> 0 keys | 0 slots | 0 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:6383)

M: cab3f65e28f8f42dd78e1bb2ea6bb417960db600 127.0.0.1:6383

slots: (0 slots) master

S: d83e5f8dc57cb6c8fbc4ed2dd667835cbf6b7542 10.150.57.13:6386

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

M: 0939488f471b96bca4feffff01a9f179e10041a5 10.150.57.13:6381

slots:[0-16383] (16384 slots) master

3 additional replica(s)

M: 3b02d1d43f219759e76df2804d4ed160f602a7ea 10.150.57.13:6382

slots: (0 slots) master

S: bf2fe8ffbbb22772c600af19ce7ce15a89d26ba5 10.150.57.13:6385

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

S: 6c18fdfd69e67df4340437169738e8e00e67e4dd 10.150.57.13:6384

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

All 16384 hash slots are still on node 10.150.57.13:6381 at this point.

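Spread the 16384 hash slots evenly over the 3 masters. Without --cluster-use-empty-masters, rebalance would not assign slots to masters that currently own none: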

[redis]# redis-cli -p 6381 -a Passwd@123 --cluster rebalance --cluster-use-empty-masters 10.150.57.13:6381

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing Cluster Check (using node 10.150.57.13:6381)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Rebalancing across 3 nodes. Total weight = 3.00

Moving 5462 slots from 10.150.57.13:6381 to 10.150.57.13:6382

#############################################################################################

Moving 5461 slots from 10.150.57.13:6381 to 10.150.57.13:6383

#############################################################################################

Check the hash slot distribution again:

[redis]# redis-cli -p 6381 -a Passwd@123 --cluster check 127.0.0.1:6381

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6381 (0939488f...) -> 0 keys | 5461 slots | 1 slaves.

10.150.57.13:6382 (3b02d1d4...) -> 0 keys | 5462 slots | 1 slaves.

10.150.57.13:6383 (cab3f65e...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:6381)

M: 0939488f471b96bca4feffff01a9f179e10041a5 127.0.0.1:6381

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 3b02d1d43f219759e76df2804d4ed160f602a7ea 10.150.57.13:6382

slots:[0-5461] (5462 slots) master

1 additional replica(s)

S: bf2fe8ffbbb22772c600af19ce7ce15a89d26ba5 10.150.57.13:6385

slots: (0 slots) slave

replicates cab3f65e28f8f42dd78e1bb2ea6bb417960db600

M: cab3f65e28f8f42dd78e1bb2ea6bb417960db600 10.150.57.13:6383

slots:[5462-10922] (5461 slots) master

1 additional replica(s)

S: d83e5f8dc57cb6c8fbc4ed2dd667835cbf6b7542 10.150.57.13:6386

slots: (0 slots) slave

replicates 0939488f471b96bca4feffff01a9f179e10041a5

S: 6c18fdfd69e67df4340437169738e8e00e67e4dd 10.150.57.13:6384

slots: (0 slots) slave

replicates 3b02d1d43f219759e76df2804d4ed160f602a7ea

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

This completes the migration of the standalone Redis instance to Redis Cluster.

3 Migrating with redis-shake

3.1 Introduction

3.1.1 Overview

GitHub: https://github.com/alibaba/RedisShake

redis-shake is an open-source Redis data synchronization tool from the Alibaba Cloud Redis & MongoDB team.

redis-shake is a tool for synchronizing data between two Redis databases. It covers a wide range of synchronization and migration needs.

3.1.2 Features

redis-shake is Alibaba's improved version of redis-port. It supports the modes listed below; the rest of this article uses sync.

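- restore: restore an RDB file into the target Redis.

- dump: back up the full data of the source Redis to an RDB file.

- decode: read an RDB file and parse it into JSON.

- sync: synchronize data from the source Redis to the target Redis, covering both full and incremental migration. It supports syncing from on-premises to Alibaba Cloud and between different on-premises environments, in any combination of standalone, master-replica, and cluster deployments. If the source is a cluster, a single RedisShake process can pull from its different db nodes, but slot migration (move slot) must not run on the source. If the target is a cluster, writes can go to one or more of its db nodes.

- rump: synchronize data from the source Redis to the target Redis, full data only. It migrates with the scan and restore commands and works across cloud vendors and Redis versions.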

3.1.3 How it works

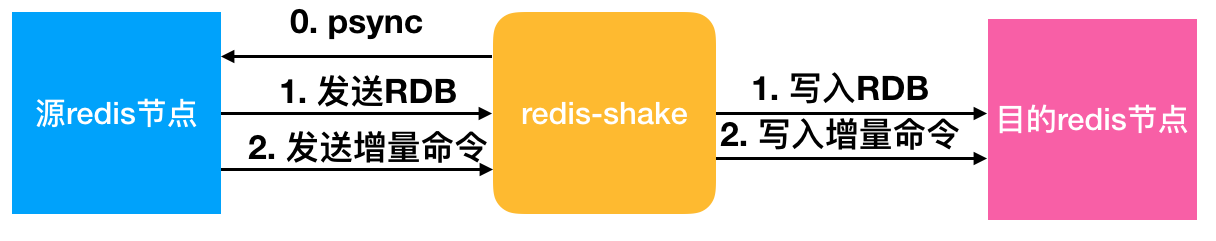

redis-shake的基本原理就是模拟一个从节点加入源redis集群,首先进行全量拉取并回放,然后进行增量的拉取(通过psync命令)。如下图所示:

如果源端是集群模式,只需要启动一个redis-shake进行拉取,同时不能开启源端的move slot操作。如果目的端是集群模式,可以写入到一个结点,然后再进行slot的迁移,当然也可以多对多写入。

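Currently redis-shake writes to the target over a single connection. Normally this is not a bottleneck, but under extreme load with very high QPS it can become one; the RedisShake authors say they may optimize this later. Writes to the target are also asynchronous: reading and writing run in two separate threads, which reduces the slowdown caused by network latency.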
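Because redis-shake attaches to the source as a replica, its progress can be watched from the source side before cutting over. A sketch under stated assumptions. The hypothetical replication_gap reads the source's INFO replication and prints master_repl_offset minus the offset of the replica that announced a given port. In this article's run that port is 9320, per the "psync send listening port[9320]" line in the log in 3.2.3. The helper assumes IPv4 addresses and assumes redis-shake acknowledges offsets the way a normal replica does. The hypothetical wait_for_full_sync waits for the "sync rdb done" line that redis-shake logs when the full phase ends.

```shell
#!/usr/bin/env bash
# Sketch: helpers for deciding when redis-shake has caught up with the source.

# replication_gap PORT: read "INFO replication" of the source on stdin and print
# master_repl_offset minus the offset acknowledged by the replica that announced PORT.
# Assumes IPv4 replica addresses and that redis-shake acknowledges offsets like a normal replica.
replication_gap() {
  tr -d '\r' | awk -F: -v want="$1" '
    $1 == "master_repl_offset" { master = $2 }
    $1 ~ /^slave[0-9]+$/ {
      port = ""; off = ""
      n = split($2, kv, ",")             # ip=...,port=...,state=...,offset=...,lag=...
      for (i = 1; i <= n; i++) {
        split(kv[i], pair, "=")
        if (pair[1] == "port")   port = pair[2]
        if (pair[1] == "offset") off = pair[2]
      }
      if (port == want) replica = off
    }
    END {
      if (replica == "" || master == "") exit 1   # that replica is not attached
      printf "%d\n", master - replica
    }'
}

# wait_for_full_sync LOGFILE [TIMEOUT_SECONDS]: wait until redis-shake logs "sync rdb done".
wait_for_full_sync() {
  local log=$1 timeout=${2:-3600} waited=0
  until grep -q 'sync rdb done' "$log" 2>/dev/null; do
    (( waited >= timeout )) && return 1
    sleep 1
    waited=$(( waited + 1 ))
  done
}
```

Usage: `redis-cli -h 127.0.0.1 -p 6379 INFO replication | replication_gap 9320`. A gap that stays at 0 after writes to the source have stopped suggests that the target has caught up.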

3.2 Migration

3.2.1 Installing redis-shake

[root]# wget https://github.com/alibaba/RedisShake/releases/download/release-v2.1.1-20210903/release-v2.1.1-20210903.tar.gz

[root]# tar -zxvf release-v2.1.1-20210903.tar.gz

3.2.2 Configuring the parameter file

Edit redis-shake.conf (only the changed parameters are shown):

[root]# cd release-v2.1.1-20210903

[root]# vi redis-shake.conf

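# source deployment type

source.type = standalone

# source ip:port

source.address = 127.0.0.1:6379

# source password (leave blank if the source has no password)

source.password_raw = Passwd@123

# target deployment type

target.type = cluster

# target ip:port list, separated by semicolons (masters or replicas of the Redis Cluster)

target.address = 10.150.57.13:6381;10.150.57.13:6382;10.150.57.13:6383

# target password

target.password_raw = Passwd@123

# if the target already has the same key, overwrite it

key_exists = rewrite

[root]# cat redis-shake.conf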


# This file is the configuration of redis-shake.

# If you have any problem, please visit: https://github.com/alibaba/RedisShake/wiki/FAQ

# current configuration version, do not modify.

conf.version = 1

# id

id = redis-shake

# The log file name, if left blank, it will be printed to stdout,

# otherwise it will be printed to the specified file.

# for example:

# log.file =

# log.file = /var/log/redis-shake.log

log.file = /var/log/redis-shake.log

# log level: "none", "error", "warn", "info", "debug".

# default is "info".

log.level = info

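# directory for the pid file; if left blank, it is written to the current directory.

# note that this is a directory: the actual pid file is {pid_path}/{id}.pid

# for example: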

# pid_path = ./

# pid_path = /var/run/

pid_path =

# pprof port.

system_profile = 9310

# restful port, set -1 means disable, in `restore` mode RedisShake will exit once finish restoring RDB only if this value

# is -1, otherwise, it'll wait forever.

# http://127.0.0.1:9320/conf shows the configuration redis-shake is using

# http://127.0.0.1:9320/metric shows redis-shake's sync status

http_profile = 9320

# parallel routines number used in RDB file syncing. default is 64.

parallel = 32

# source redis configuration.

# used in `dump`, `sync` and `rump`.

# source redis type, e.g. "standalone" (default), "sentinel" or "cluster".

# 1. "standalone": standalone db mode.

# 2. "sentinel": the redis address is read from sentinel.

# 3. "cluster": the source redis has several db.

# 4. "proxy": the proxy address, currently, only used in "rump" mode.

# used in `dump`, `sync` and `rump`.

source.type = standalone

# ip:port

# the source address can be the following:

# 1. single db address. for "standalone" type.

# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.

# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type. e.g., 10.1.1.1:20331;10.1.1.2:20441.

# 4. proxy address(used in "rump" mode only). for "proxy" type.

# source redis address. For sentinel or open-source cluster mode, the format is "master name:pull role (master or slave)@sentinel address"; other cluster

# architectures, such as codis, twemproxy, and aliyun proxy, need the addresses of all master or slave dbs.

# source redis address:

# 1. standalone mode: ip:port, e.g., 10.1.1.1:20331

# 2. cluster mode: the ip:port of all nodes, e.g., source.address = 10.1.1.1:20331;10.1.1.2:20441

source.address = 127.0.0.1:6379

# source password, left blank means no password.

source.password_raw =

# auth type, don't modify it

source.auth_type = auth

# tls enable, true or false. Currently, only support standalone.

# open source redis does NOT support tls so far, but some cloud versions do.

source.tls_enable = false

# input RDB file.

# used in `decode` and `restore`.

# if the input is list split by semicolon(;), redis-shake will restore the list one by one.

# for example: rdb.0;rdb.1;rdb.2

source.rdb.input =

# the concurrence of RDB syncing, default is len(source.address) or len(source.rdb.input).

# used in `dump`, `sync` and `restore`. 0 means default.

# This is useless when source.type isn't cluster or only input is only one RDB.

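# pull concurrency: for `dump` or `sync` the default is the number of dbs in source.address; for `restore` it is len(source.rdb.input).

# For example, with 5 db nodes or input rdb files and rdb.parallel=3, full data is pulled from only

# 3 dbs at a time; the 4th db's rdb is pulled only after one of them finishes its rdb and enters the incremental phase,

# and so on, until len(source.address) or len(rdb.input) incremental threads are running at the same time.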

source.rdb.parallel = 0

# for special cloud vendor: ucloud

# used in `decode` and `restore`.

# ucloud cluster rdb files carry a slot prefix, which is stripped when this is set to: ucloud_cluster.

source.rdb.special_cloud =

# target redis configuration. used in `restore`, `sync` and `rump`.

# the type of target redis can be "standalone", "proxy" or "cluster".

# 1. "standalone": standalone db mode.

# 2. "sentinel": the redis address is read from sentinel.

# 3. "cluster": open source cluster (not supported currently).

# 4. "proxy": proxy layer ahead redis. Data will be inserted in a round-robin way if more than 1 proxy given.

target.type = cluster

# ip:port

# the target address can be the following:

# 1. single db address. for "standalone" type.

# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.

# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type.

# 4. proxy address. for "proxy" type.

target.address = 10.150.57.13:6381;10.150.57.13:6382;10.150.57.13:6383

# target password, left blank means no password.

target.password_raw = Passwd@123

# auth type, don't modify it

target.auth_type = auth

# all the data will be written into this db. < 0 means disable.

target.db = 0

# Format: 0-5;1-3 ,Indicates that the data of the source db0 is written to the target db5, and

# the data of the source db1 is all written to the target db3.

# Note: When target.db is specified, target.dbmap will not take effect.

target.dbmap =

# tls enable, true or false. Currently, only support standalone.

# open source redis does NOT support tls so far, but some cloud versions do.

target.tls_enable = false

# output RDB file prefix.

# used in `decode` and `dump`.

# for decode or dump, this is the output rdb prefix; e.g., with 3 input dbs the dumps are:

# ${output_rdb}.0, ${output_rdb}.1, ${output_rdb}.2

target.rdb.output = local_dump

# some redis proxy like twemproxy doesn't support to fetch version, so please set it here.

# e.g., target.version = 4.0

target.version =

# use for expire key, set the time gap when source and target timestamp are not the same.

fake_time =

# how to solve when destination restore has the same key.

# rewrite: overwrite.

# none: panic directly.

# ignore: skip this key. not used in rump mode.

# used in `restore`, `sync` and `rump`.

key_exists = rewrite

# filter db, key, slot, lua.

# filter db.

# used in `restore`, `sync` and `rump`.

# e.g., "0;5;10" means match db0, db5 and db10.

# at most one of `filter.db.whitelist` and `filter.db.blacklist` parameters can be given.

# if the filter.db.whitelist is not empty, the given db list will be passed while others filtered.

# if the filter.db.blacklist is not empty, the given db list will be filtered while others passed.

# all dbs will be passed if no condition given.

filter.db.whitelist =

filter.db.blacklist =

# filter key with prefix string. multiple keys are separated by ';'.

# e.g., "abc;bzz" match let "abc", "abc1", "abcxxx", "bzz" and "bzzwww".

# used in `restore`, `sync` and `rump`.

# at most one of `filter.key.whitelist` and `filter.key.blacklist` parameters can be given.

# if the filter.key.whitelist is not empty, the given keys will be passed while others filtered.

# if the filter.key.blacklist is not empty, the given keys will be filtered while others passed.

# all the namespace will be passed if no condition given.

filter.key.whitelist =

filter.key.blacklist =

# filter given slot, multiple slots are separated by ';'.

# e.g., 1;2;3

# used in `sync`.

# only the given slots are passed

filter.slot =

# filter lua script. true means not pass. However, in redis 5.0, the lua

# converts to transaction(multi+{commands}+exec) which will be passed.

filter.lua = false

# big key threshold, the default is 500 * 1024 * 1024 bytes. If the value is bigger than

# this given value, all the field will be spilt and write into the target in order. If

# the target Redis type is Codis, this should be set to 1, please checkout FAQ to find

# the reason.

# if the target's major version is lower than the source's, setting this to 1 is also recommended.

big_key_threshold = 524288000

# enable metric

# used in `sync`.

metric = true

# print in log

metric.print_log = false

# sender information.

# sender flush buffer size of byte.

# used in `sync`.

sender.size = 104857600

# sender flush buffer size of oplog number.

# used in `sync`. flush sender buffer when bigger than this threshold.

# for a cluster target, increasing this value takes up more memory.

sender.count = 4095

# delay channel size. once one oplog is sent to target redis, the oplog id and timestamp will also

# stored in this delay queue. this timestamp will be used to calculate the time delay when receiving

# ack from target redis.

# used in `sync`.

sender.delay_channel_size = 65535

# enable keep_alive option in TCP when connecting redis.

# the unit is second.

# 0 means disable.

keep_alive = 0

# used in `rump`.

# number of keys captured each time. default is 100.

scan.key_number = 50

# used in `rump`.

# we support some special redis types that don't use default `scan` command like alibaba cloud and tencent cloud.

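# some cloud versions use a scan format that differs from the standard scan command and are specially supported: Tencent Cloud cluster "tencent_cluster"

# and Alibaba Cloud cluster "aliyun_cluster". Master-replica versions need no setting; this applies to cluster versions only.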

scan.special_cloud =

# used in `rump`.

# we support to fetching data from given file which marks the key list.

# some cloud versions support neither sync/psync nor scan; the key list can then be read from a file, one key per line.

scan.key_file =

# limit the rate of transmission. Only used in `rump` currently.

# e.g., qps = 1000 means pass 1000 keys per second. default is 500,000(0 means default)

qps = 200000

# enable resume from break point, please visit xxx to see more details.

resume_from_break_point = false

# ----------------splitter----------------

# below variables are useless for current open source version so don't set.

# replace hash tag.

# used in `sync`.

replace_hash_tag = false

3.2.3 Migration

Start redis-shake:

[root]# ./redis-shake.linux -type=sync -conf=redis-shake.conf

Log output:


2021/12/02 23:53:58 [WARN] source.auth_type[auth] != auth

2021/12/02 23:53:58 [WARN] target.auth_type[auth] != auth

2021/12/02 23:53:58 [INFO] the target redis type is cluster, all db syncing to db0

2021/12/02 23:53:58 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2021/12/02 23:53:58 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2021/12/02 23:53:58 [INFO] source rdb[127.0.0.1:6379] checksum[yes]

2021/12/02 23:53:58 [WARN]

______________________________

\ \ _ ______ |

\ \ / \___-=O'/|O'/__|

\ RedisShake, here we go !! \_______\ / | / )

/ / '/-==__ _/__|/__=-| -GM

/ Alibaba Cloud / * \ | |

/ / (o)

------------------------------

if you have any problem, please visit https://github.com/alibaba/RedisShake/wiki/FAQ

2021/12/02 23:53:58 [INFO] redis-shake configuration: {"ConfVersion":1,"Id":"redis-shake","LogFile":"","LogLevel":"info","SystemProfile":9310,"HttpProfile":9320,"Parallel":32,"SourceType":"standalone","SourceAddress":"127.0.0.1:6379","SourcePasswordRaw":"***","SourcePasswordEncoding":"***","SourceAuthType":"auth","SourceTLSEnable":false,"SourceRdbInput":[],"SourceRdbParallel":1,"SourceRdbSpecialCloud":"","TargetAddress":"10.150.57.13:6381;10.150.57.13:6382;10.150.57.13:6383","TargetPasswordRaw":"***","TargetPasswordEncoding":"***","TargetDBString":"0","TargetDBMapString":"","TargetAuthType":"auth","TargetType":"cluster","TargetTLSEnable":false,"TargetRdbOutput":"local_dump","TargetVersion":"6.2.1","FakeTime":"","KeyExists":"rewrite","FilterDBWhitelist":[],"FilterDBBlacklist":[],"FilterKeyWhitelist":[],"FilterKeyBlacklist":[],"FilterSlot":[],"FilterLua":false,"BigKeyThreshold":524288000,"Metric":true,"MetricPrintLog":false,"SenderSize":104857600,"SenderCount":4095,"SenderDelayChannelSize":65535,"KeepAlive":0,"PidPath":"","ScanKeyNumber":50,"ScanSpecialCloud":"","ScanKeyFile":"","Qps":200000,"ResumeFromBreakPoint":false,"Psync":true,"NCpu":0,"HeartbeatUrl":"","HeartbeatInterval":10,"HeartbeatExternal":"","HeartbeatNetworkInterface":"","ReplaceHashTag":false,"ExtraInfo":false,"SockFileName":"","SockFileSize":0,"FilterKey":null,"FilterDB":"","Rewrite":false,"SourceAddressList":["127.0.0.1:6379"],"TargetAddressList":["10.150.57.13:6381","10.150.57.13:6382","10.150.57.13:6383"],"SourceVersion":"5.0.12","HeartbeatIp":"127.0.0.1","ShiftTime":0,"TargetReplace":false,"TargetDB":0,"Version":"develop,cc226f841e2e244c48246ebfcfd5a50396b59710,go1.15.7,2021-09-03_10:06:55","Type":"sync","TargetDBMap":null}

2021/12/02 23:53:58 [INFO] DbSyncer[0] starts syncing data from 127.0.0.1:6379 to [10.150.57.13:6381 10.150.57.13:6382 10.150.57.13:6383] with http[9321], enableResumeFromBreakPoint[false], slot boundary[-1, -1]

2021/12/02 23:53:58 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2021/12/02 23:53:58 [INFO] DbSyncer[0] psync connect '127.0.0.1:6379' with auth type[auth] OK!

2021/12/02 23:53:58 [INFO] DbSyncer[0] psync send listening port[9320] OK!

2021/12/02 23:53:58 [INFO] DbSyncer[0] try to send 'psync' command: run-id[?], offset[-1]

2021/12/02 23:53:58 [INFO] Event:FullSyncStart Id:redis-shake

2021/12/02 23:53:58 [INFO] DbSyncer[0] psync runid = a579ea824ae5845c709d093d75467cfee86753e0, offset = 849589400, fullsync

2021/12/02 23:53:58 [INFO] DbSyncer[0] rdb file size = 276

2021/12/02 23:53:58 [INFO] Aux information key:redis-ver value:5.0.12

2021/12/02 23:53:58 [INFO] Aux information key:redis-bits value:64

2021/12/02 23:53:58 [INFO] Aux information key:ctime value:1638460438

2021/12/02 23:53:58 [INFO] Aux information key:used-mem value:1902536

2021/12/02 23:53:58 [INFO] Aux information key:repl-stream-db value:0

2021/12/02 23:53:58 [INFO] Aux information key:repl-id value:a579ea824ae5845c709d093d75467cfee86753e0

2021/12/02 23:53:58 [INFO] Aux information key:repl-offset value:849589400

2021/12/02 23:53:58 [INFO] Aux information key:aof-preamble value:0

2021/12/02 23:53:58 [INFO] db_size:8 expire_size:0

2021/12/02 23:53:58 [INFO] DbSyncer[0] total = 276B - 276B [100%] entry=8

2021/12/02 23:53:58 [INFO] DbSyncer[0] sync rdb done

2021/12/02 23:53:58 [INFO] DbSyncer[0] FlushEvent:IncrSyncStart Id:redis-shake

2021/12/02 23:53:58 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2021/12/02 23:53:59 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:00 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:46 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:47 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:48 [INFO] DbSyncer[0] sync: +forwardCommands=1 +filterCommands=0 +writeBytes=4

2021/12/02 23:54:49 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:50 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:51 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:52 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:53 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:54 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:55 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:56 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:57 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:54:58 [INFO] DbSyncer[0] sync: +forwardCommands=1 +filterCommands=0 +writeBytes=4

2021/12/02 23:54:59 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:55:00 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:55:01 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:55:02 [INFO] DbSyncer[0] sync: +forwardCommands=2 +filterCommands=0 +writeBytes=23

2021/12/02 23:55:03 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:55:04 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:55:05 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2021/12/02 23:55:06 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

4 The redis-full-check verification tool

4.1 Introduction

4.1.1 Overview

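redis-full-check is an open-source tool from the Alibaba Cloud Redis & MongoDB team that checks whether the data in two Redis instances is consistent. It is typically used to verify correctness after a Redis data migration (for example with redis-shake).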

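It supports homogeneous and heterogeneous comparisons between standalone, master-replica, cluster, and proxy-based cloud cluster (Alibaba Cloud) deployments, for Redis versions 2.x to 5.x.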

4.1.2 How it works

The figure below shows the basic comparison logic:

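redis-full-check verifies data by fully comparing the data in the source and target Redis over multiple rounds. Each round fetches data from the source and the target and compares it, and inconsistent entries are recorded for the next round. The rounds converge, filtering out inconsistencies that were only caused by incremental sync lag between source and target. Whatever remains in SQLite at the end is the final set of differences.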

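The comparison is one-directional: it fetches the data in source A and checks whether it exists in B, but not the reverse. In other words, it checks whether the source is a subset of the target. For a two-way comparison, run it twice: first with A as the source and B as the target, then with B as the source and A as the target.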
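Because the check is one-directional, a two-way check means two runs with the roles swapped. A sketch that uses only flags from the --help output in 4.2.1 (-s, -p, --sourcedbtype, -t, -a, --targetdbtype, --comparemode, --comparetimes, -d, --result). check_once, check_both, FULL_CHECK and the result file names are hypothetical. The sketch does not assume that the tool's exit code reflects differences, so read the result files.

```shell
#!/usr/bin/env bash
# Sketch: redis-full-check only checks "source is a subset of target", so a two-way check is two runs.
# FULL_CHECK, check_once, check_both and the result file names are hypothetical.

FULL_CHECK=${FULL_CHECK:-./redis-full-check}

# check_once SRC SRC_PASS SRC_TYPE DST DST_PASS DST_TYPE NAME
# (types follow --sourcedbtype/--targetdbtype: 0 = db, 1 = cluster)
check_once() {
  "$FULL_CHECK" -s "$1" -p "$2" --sourcedbtype="$3" \
    -t "$4" -a "$5" --targetdbtype="$6" \
    --comparemode=1 --comparetimes=3 \
    -d "$7.db" --result="$7.txt"
}

# check_both STANDALONE STANDALONE_PASS CLUSTER CLUSTER_PASS: standalone -> cluster, then the reverse.
check_both() {
  check_once "$1" "$2" 0 "$3" "$4" 1 standalone_to_cluster &&
    check_once "$3" "$4" 1 "$1" "$2" 0 cluster_to_standalone
}
```

With this article's addresses, that would be `check_both 127.0.0.1:6379 '' '10.150.57.13:6381;10.150.57.13:6382;10.150.57.13:6383' Passwd@123`.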

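The figure below shows the data flow. redis-full-check runs several comparison rounds (the yellow boxes). Each round first fetches the keys to compare: the first round fetches them from the source, later rounds from the sqlite3 db. It then fetches the fields and values of those keys, compares them, and stores the differences in the sqlite3 db for the next round.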
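The round structure can be illustrated for plain string keys. This is a sketch of the idea, not redis-full-check's implementation. It keeps pending keys in a shell array instead of sqlite3 and handles only strings. src_get, dst_get and the SRC_*/DST_* variables are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the multi-round idea for string keys only (not redis-full-check's code).
# SRC_HOST/SRC_PORT/DST_HOST/DST_PORT are hypothetical variables; passwords via REDISCLI_AUTH.

src_get() { redis-cli -h "$SRC_HOST" -p "$SRC_PORT" GET "$1"; }
dst_get() { redis-cli -c -h "$DST_HOST" -p "$DST_PORT" GET "$1"; }   # -c follows cluster redirects

# multi_round_compare ROUNDS INTERVAL KEY...: print the keys still different after ROUNDS rounds.
multi_round_compare() {
  local rounds=$1 interval=$2 round key
  shift 2
  local -a pending=("$@") next
  for ((round = 1; round <= rounds; round++)); do
    next=()
    for key in "${pending[@]}"; do
      # Round 1 checks every key; later rounds re-fetch only keys that differed last time.
      [[ $(src_get "$key") == "$(dst_get "$key")" ]] || next+=("$key")
    done
    pending=("${next[@]}")
    (( ${#pending[@]} == 0 )) && break
    (( round < rounds )) && sleep "$interval"   # give incremental sync time to converge
  done
  if (( ${#pending[@]} > 0 )); then printf '%s\n' "${pending[@]}"; fi
}
```

Keys that differ only because sync lagged drop out in later rounds, so what is printed at the end corresponds to the final differences.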

4.1.3 Inconsistency types

redis-full-check classifies inconsistencies into two kinds: key inconsistencies and value inconsistencies.

Key inconsistencies

Key inconsistencies fall into the following cases:

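- lack_target: the key exists in the source but not in the target.

- type: the key exists in both the source and the target, but the types differ.

- value: the key exists in both with the same type, but the values differ.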
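The key-level rules amount to a small decision function. A sketch, not the tool's code: it relies on the Redis TYPE command returning none for a missing key, compares string values only, and classify_key is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: classify one source key against the target (string values only; not the tool's code).
# Relies on Redis TYPE returning "none" for a missing key.

# classify_key SRC_TYPE SRC_VALUE DST_TYPE DST_VALUE -> lack_target | type | value | equal
classify_key() {
  local src_type=$1 src_val=$2 dst_type=$3 dst_val=$4
  if [[ $dst_type == none ]]; then
    echo lack_target            # in the source, missing from the target
  elif [[ $src_type != "$dst_type" ]]; then
    echo type                   # present on both sides with different types
  elif [[ $src_val != "$dst_val" ]]; then
    echo value                  # same type, different value
  else
    echo equal
  fi
}
```

The inputs would come from TYPE and GET on each side, for example `redis-cli -c -h 10.150.57.13 -p 6381 TYPE somekey` for the target.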

Value inconsistencies

Each data type has its own comparison criterion:

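- string: the values differ.

- hash: some field meets one of these 3 conditions:
  - the field exists in the source but not in the target;
  - the field exists in the target but not in the source;
  - the field exists in both, but the values differ.

- set/zset: similar to hash.

- list: similar to hash.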

Field conflict types (only for hash, set, zset, and list keys):

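- lack_source: the field exists in the target key but not in the source key.

- lack_target: the field exists in the source key but not in the target key.

- value: the field exists in both the source key and the target key, but its values differ.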

4.1.4 Comparison details

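There are several compare modes (comparemode). The number in parentheses is the -m/--comparemode value from the --help output in 4.2.1:

- KeyOutline (3): compare only whether the keys match.

- ValueOutline (2, the default): compare only whether the value lengths match.

- FullValue (1): compare the keys, the value lengths, and the values.

- 4: compare full values, but only value lengths for big keys.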

The comparison runs for comparetimes rounds (default comparetimes=3):

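- Round 1: find all keys in the source, then fetch them from both the source and the target and compare.

- From round 2 on, only keys and fields that were still inconsistent after the previous round are compared again:
  - key inconsistencies (lack_source, lack_target, and type): fetch the key and value again from the source and the target and compare;
  - string keys with inconsistent values: compare the whole key again, fetching key and value from the source and the target;
  - hash, set, and zset keys with inconsistent values: re-compare only the inconsistent fields; fields that already matched are not compared again. This keeps frequently updated big keys from never passing verification;
  - list keys with inconsistent values: compare the whole key again, fetching key and value from the source and the target.

- The tool pauses for a set time (Interval) between rounds.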

Big hash, set, zset, and list keys are handled as follows:

- len <= 5192: fetch all fields and values at once with hgetall, smembers, zrange 0 -1 withscores, or lrange 0 -1.

- len > 5192: fetch fields and values in batches with hscan, sscan, zscan, or lrange.
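The size rule above amounts to choosing a read command from the key's type and length. A sketch, not redis-full-check's code. The length would come from HLEN, SCARD, ZCARD or LLEN, and the 5192 threshold is the one stated above. The list batch size and the function name fetch_command are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: pick the read command for a hash/set/zset/list key from its type and length.
# Threshold from the rule above; the list batch size and function name are hypothetical.

BIG_KEY_LEN=5192

# fetch_command TYPE LEN KEY: print the command for reading the key (first batch for big keys).
fetch_command() {
  local type=$1 len=$2 key=$3
  if (( len <= BIG_KEY_LEN )); then
    case $type in
      hash) echo "HGETALL $key" ;;
      set)  echo "SMEMBERS $key" ;;
      zset) echo "ZRANGE $key 0 -1 WITHSCORES" ;;
      list) echo "LRANGE $key 0 -1" ;;
      *)    return 1 ;;
    esac
  else
    case $type in
      hash) echo "HSCAN $key 0" ;;                               # start cursor 0, iterate until 0
      set)  echo "SSCAN $key 0" ;;
      zset) echo "ZSCAN $key 0" ;;
      list) echo "LRANGE $key 0 $(( BIG_KEY_LEN - 1 ))" ;;       # first batch; later batches shift the range
      *)    return 1 ;;
    esac
  fi
}
```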

4.2 Verification

4.2.1 Installation

GitHub: https://github.com/alibaba/RedisFullCheck

Install redis-full-check:

[root]# wget https://github.com/alibaba/RedisFullCheck/releases/download/release-v1.4.8-20200212/redis-full-check-1.4.8.tar.gz

[root]# tar -zxvf redis-full-check-1.4.8.tar.gz

redis-full-check-1.4.8/

redis-full-check-1.4.8/redis-full-check

redis-full-check-1.4.8/ChangeLog

[root]# cd redis-full-check-1.4.8

[root]# ./redis-full-check --help

Usage:

redis-full-check [OPTIONS]

Application Options:

-s, --source=SOURCE Set host:port of source redis. If db type is cluster, split by semicolon(;'), e.g.,

10.1.1.1:1000;10.2.2.2:2000;10.3.3.3:3000. We also support auto-detection, so "master@10.1.1.1:1000" or

"slave@10.1.1.1:1000" means choose master or slave. Only need to give a role in the master or slave.

-p, --sourcepassword=Password Set source redis password

--sourceauthtype=AUTH-TYPE useless for opensource redis, valid value:auth/adminauth (default: auth)

--sourcedbtype= 0: db, 1: cluster 2: aliyun proxy, 3: tencent proxy (default: 0)

--sourcedbfilterlist= db white list that need to be compared, -1 means fetch all, "0;5;15" means fetch db 0, 5, and 15 (default: -1)

-t, --target=TARGET Set host:port of target redis. If db type is cluster, split by semicolon(;'), e.g.,

10.1.1.1:1000;10.2.2.2:2000;10.3.3.3:3000. We also support auto-detection, so "master@10.1.1.1:1000" or

"slave@10.1.1.1:1000" means choose master or slave. Only need to give a role in the master or slave.

-a, --targetpassword=Password Set target redis password

--targetauthtype=AUTH-TYPE useless for opensource redis, valid value:auth/adminauth (default: auth)

--targetdbtype= 0: db, 1: cluster 2: aliyun proxy 3: tencent proxy (default: 0)

--targetdbfilterlist= db white list that need to be compared, -1 means fetch all, "0;5;15" means fetch db 0, 5, and 15 (default: -1)

-d, --db=Sqlite3-DB-FILE sqlite3 db file for store result. If exist, it will be removed and a new file is created. (default: result.db)

--result=FILE store all diff result into the file, format is 'db diff-type key field'

--comparetimes=COUNT Total compare count, at least 1. In the first round, all keys will be compared. The subsequent rounds of the

comparison will be done on the previous results. (default: 3)

-m, --comparemode= compare mode, 1: compare full value, 2: only compare value length, 3: only compare keys outline, 4: compare full

value, but only compare value length when meets big key (default: 2)

--id= used in metric, run id, useless for open source (default: unknown)

--jobid= used in metric, job id, useless for open source (default: unknown)

--taskid= used in metric, task id, useless for open source (default: unknown)

-q, --qps= max batch qps limit: e.g., if qps is 10, full-check fetches 10 * $batch keys every second (default: 15000)

--interval=Second The time interval for each round of comparison(Second) (default: 5)

--batchcount=COUNT the count of key/field per batch compare, valid value [1, 10000] (default: 256)

--parallel=COUNT concurrent goroutine number for comparison, valid value [1, 100] (default: 5)

--log=FILE log file, if not specified, log is put to console

--loglevel=LEVEL log level: 'debug', 'info', 'warn', 'error', default is 'info'

--metric print metric in log

--bigkeythreshold=COUNT

-f, --filterlist=FILTER if the filter list isn't empty, all elements in list will be synced. The input should be split by '|'. The end of

the string is followed by a * to indicate a prefix match, otherwise it is a full match. e.g.: 'abc*|efg|m*'

matches 'abc', 'abc1', 'efg', 'm', 'mxyz', but 'efgh', 'p' aren't'

--systemprofile=SYSTEM-PROFILE port that used to print golang inner head and stack message (default: 20445)

-v, --version

Help Options:

-h, --help Show this help message
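The `-f, --filterlist` rule in the help text above (entries split by '|', a trailing `*` means prefix match, otherwise exact match) can be checked with a few lines of Python. The function names are hypothetical, and treating an empty filter list as "compare every key" is an assumption read from the help wording rather than something verified against the tool.

```python
def parse_filter_list(spec: str) -> list:
    """Split a --filterlist value such as 'abc*|efg|m*' into patterns."""
    return [p for p in spec.split("|") if p]


def key_selected(key: str, patterns: list) -> bool:
    """Trailing '*' means prefix match; anything else must match exactly."""
    if not patterns:
        return True  # assumption: no filter list means every key is compared
    for pattern in patterns:
        if pattern.endswith("*"):
            if key.startswith(pattern[:-1]):
                return True
        elif key == pattern:
            return True
    return False


if __name__ == "__main__":
    pats = parse_filter_list("abc*|efg|m*")
    for k in ["abc", "abc1", "efg", "m", "mxyz", "efgh", "p"]:
        print(k, key_selected(k, pats))
```

Running it reproduces the help text's own example: 'abc', 'abc1', 'efg', 'm', and 'mxyz' are selected, while 'efgh' and 'p' are not, because `efg` has no trailing `*`.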

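Parameter descriptions:

-s, --source=SOURCE Address of the source Redis (ip:port). For a cluster, separate the nodes with semicolons (;); only the master or the replica of each shard needs to be given. For example: 10.1.1.1:1000;10.2.2.2:2000;10.3.3.3:3000.

-p, --sourcepassword=Password Password of the source Redis.

--sourceauthtype=AUTH-TYPE Admin auth type of the source; has no effect with open-source Redis.

--sourcedbtype= Type of the source: 0: db (standalone or master-replica), 1: cluster, 2: Alibaba Cloud proxy, 3: Tencent Cloud proxy.

--sourcedbfilterlist= Whitelist of logical dbs to fetch from the source, separated by semicolons (;). For example, 0;5;15 fetches db0, db5, and db15; the default -1 fetches all dbs.

-t, --target=TARGET Address of the target Redis (ip:port), in the same format as --source.

-a, --targetpassword=Password Password of the target Redis.

--targetauthtype=AUTH-TYPE Admin auth type of the target; has no effect with open-source Redis.

--targetdbtype= See --sourcedbtype.

--targetdbfilterlist= See --sourcedbfilterlist.

-d, --db=Sqlite3-DB-FILE Location of the sqlite3 db that stores the inconsistent keys, result.db by default. An existing file is removed and recreated.

--comparetimes=COUNT Number of comparison rounds (at least 1, default 3).

-m, --comparemode= Compare mode: 1 compares full values, 2 compares only value lengths (the default), 3 only compares whether keys exist, 4 compares full values but only value lengths for big keys.

--id= Used for metrics.

--jobid= Used for metrics.

--taskid= Used for metrics.

-q, --qps= Rate limit in batches per second: with qps 10, full-check fetches 10 × batchcount keys per second (default 15000).

--interval=Second Interval between rounds, in seconds (default 5).

--batchcount=COUNT Number of keys/fields compared per batch, valid range [1, 10000] (default 256).

--parallel=COUNT Number of concurrent goroutines used for comparison (default 5).

--log=FILE Log file; if omitted, the log goes to the console.

--result=FILE Write the inconsistencies to this file, in the format 'db diff-type key field'.

--metric Print metrics in the log.

--bigkeythreshold=COUNT Threshold for splitting big keys, used with comparemode=4.

-f, --filterlist=FILTER Keys to compare, separated by '|'. A trailing * means a prefix match; otherwise the match is exact. For example, "abc*|efg|m*" compares 'abc', 'abc1', 'efg', 'm', and 'mxyz', but not 'efgh' or 'p'.

-v, --version Print the version.

4.2.2 Verification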

Verify the key-value pairs between the source and the target

[root]# ./redis-full-check --source=10.150.57.9:6379 --sourcepassword= --sourcedbtype=0 --target="10.150.57.13:6381;10.150.57.13:6382;10.150.57.13:6383" --targetpassword=Gaoyu@029 --targetdbtype=1 --comparemode=1 --qps=10 --batchcount=1000 --parallel=10


[INFO 2021-12-03-10:47:25 main.go:65]: init log success

[INFO 2021-12-03-10:47:25 main.go:168]: configuration: {10.150.57.9:6379 auth 0 -1 10.150.57.13:6381;10.150.57.13:6382;10.150.57.13:6383 Gaoyu@029 auth 1 -1 result.db 3 1 unknown unknown unknown 10 5 1000 10 false 16384 20445 false}

[INFO 2021-12-03-10:47:25 main.go:170]: ---------

[INFO 2021-12-03-10:47:25 full_check.go:238]: sourceDbType=0, p.sourcePhysicalDBList=[meaningless]

[INFO 2021-12-03-10:47:25 full_check.go:243]: db=0:keys=9

[INFO 2021-12-03-10:47:25 full_check.go:253]: ---------------- start 1th time compare

[INFO 2021-12-03-10:47:25 full_check.go:278]: start compare db 0

[INFO 2021-12-03-10:47:25 scan.go:20]: build connection[source redis addr: [10.150.57.9:6379]]

[INFO 2021-12-03-10:47:26 full_check.go:203]: stat:

times:1, db:0, dbkeys:9, finish:33%, finished:true

KeyScan:{9 9 0}

KeyEqualInProcess|string|equal|{9 9 0}

[INFO 2021-12-03-10:47:26 full_check.go:250]: wait 5 seconds before start

[INFO 2021-12-03-10:47:31 full_check.go:253]: ---------------- start 2th time compare

[INFO 2021-12-03-10:47:31 full_check.go:278]: start compare db 0

[INFO 2021-12-03-10:47:31 full_check.go:203]: stat:

times:2, db:0, finished:true

KeyScan:{0 0 0}

[INFO 2021-12-03-10:47:31 full_check.go:250]: wait 5 seconds before start

[INFO 2021-12-03-10:47:36 full_check.go:253]: ---------------- start 3th time compare

[INFO 2021-12-03-10:47:36 full_check.go:278]: start compare db 0

[INFO 2021-12-03-10:47:36 full_check.go:203]: stat:

times:3, db:0, finished:true

KeyScan:{0 0 0}

[INFO 2021-12-03-10:47:36 full_check.go:328]: --------------- finished! ----------------

all finish successfully, totally 0 key(s) and 0 field(s) conflict

The verification is complete, and no key conflicts were found.
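Because a single run only checks that the source is a subset of the target, a full check of this migration means running the command a second time with the roles swapped. The sketch below builds both command lines from the flags documented above; the function names, the `<cluster-password>` placeholder, and the per-direction `--db` file names are illustrative assumptions. It also computes the throughput ceiling implied by the help text for `--qps=10 --batchcount=1000`.

```python
import shlex


def full_check_argv(source_nodes, source_dbtype, target_nodes, target_dbtype,
                    source_password="", target_password="", result_db="result.db",
                    compare_mode=1, qps=10, batch_count=1000, parallel=10):
    """Build a redis-full-check command line from the flags documented above."""
    return [
        "./redis-full-check",
        "--source=" + ";".join(source_nodes),   # cluster nodes joined by ';'
        "--sourcepassword=" + source_password,
        f"--sourcedbtype={source_dbtype}",      # 0: db, 1: cluster
        "--target=" + ";".join(target_nodes),
        "--targetpassword=" + target_password,
        f"--targetdbtype={target_dbtype}",
        f"--db={result_db}",                    # separate file per direction
        f"--comparemode={compare_mode}",
        f"--qps={qps}",
        f"--batchcount={batch_count}",
        f"--parallel={parallel}",
    ]


def max_keys_per_second(qps, batch_count):
    """Per the help text: with qps N, full-check fetches N * batchcount keys/s."""
    return qps * batch_count


if __name__ == "__main__":
    standalone = ["10.150.57.9:6379"]
    cluster = ["10.150.57.13:6381", "10.150.57.13:6382", "10.150.57.13:6383"]
    forward = full_check_argv(standalone, 0, cluster, 1,
                              target_password="<cluster-password>",
                              result_db="standalone_to_cluster.db")
    backward = full_check_argv(cluster, 1, standalone, 0,
                               source_password="<cluster-password>",
                               result_db="cluster_to_standalone.db")
    for argv in (forward, backward):
        print(" ".join(shlex.quote(arg) for arg in argv))  # quotes the ';' lists
    print(max_keys_per_second(10, 1000))  # 10000
```

Giving each direction its own `--db` file matters because, per the help text, an existing result db is removed when a new run starts. `shlex.quote` adds the shell quoting that the semicolon-separated node list needs, just as the command above quotes its `--target` value.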

Migrate Redis key-value pairs with RDB, AOF, and redis-shake; verify the key-value pairs with redis-full-check.
