prometheus-k8s

I. Deploying Prometheus in k8s

1. Create the namespace

    root@slave001:/apps# kubectl create ns monitoring
    namespace/monitoring created

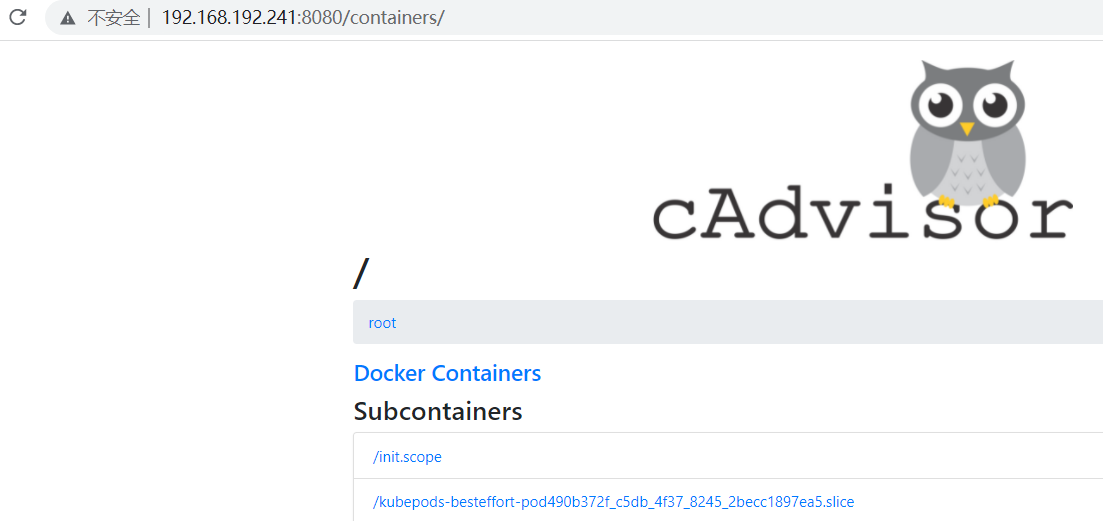

2. Deploy cAdvisor on every k8s node (port 8080)

    root@master001:~/prometheus# cat case1-daemonset-deploy-cadvisor.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: cadvisor
      namespace: monitoring
    spec:
      selector:
        matchLabels:
          app: cAdvisor
      template:
        metadata:
          labels:
            app: cAdvisor
        spec:
          tolerations:    # toleration: ignore the master's NoSchedule taint
          - effect: NoSchedule
            key: node-role.kubernetes.io/master
          hostNetwork: true
          restartPolicy: Always    # restart policy
          containers:
          - name: cadvisor
            image: gexuchuan123/cadvisor:v0.39.2
            imagePullPolicy: IfNotPresent    # image pull policy
            ports:
            - containerPort: 8080
            volumeMounts:
            - name: root
              mountPath: /rootfs
            - name: run
              mountPath: /var/run
            - name: sys
              mountPath: /sys
            - name: docker
              mountPath: /var/lib/docker
          volumes:
          - name: root
            hostPath:
              path: /
          - name: run
            hostPath:
              path: /var/run
          - name: sys
            hostPath:
              path: /sys
          - name: docker
            hostPath:
              path: /var/lib/docker

3. Deploy node-exporter on every k8s node (port 9100)

    root@master001:~/prometheus# cat case2-daemonset-deploy-node-exporter.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: monitoring
      labels:
        k8s-app: node-exporter
    spec:
      selector:
        matchLabels:
          k8s-app: node-exporter
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          tolerations:
          - effect: NoSchedule
            key: node-role.kubernetes.io/master
          containers:
          - image: prom/node-exporter:v1.3.1
            imagePullPolicy: IfNotPresent
            name: prometheus-node-exporter
            ports:
            - containerPort: 9100
              hostPort: 9100
              protocol: TCP
              name: metrics
            volumeMounts:
            - mountPath: /host/proc
              name: proc
            - mountPath: /host/sys
              name: sys
            - mountPath: /host
              name: rootfs
            args:
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host
          volumes:
          - name: proc
            hostPath:
              path: /proc
          - name: sys
            hostPath:
              path: /sys
          - name: rootfs
            hostPath:
              path: /
          hostNetwork: true
          hostPID: true
    ---
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/scrape: "true"
      labels:
        k8s-app: node-exporter
      name: node-exporter
      namespace: monitoring
    spec:
      type: NodePort
      ports:
      - name: http
        port: 9100
        nodePort: 39100
        protocol: TCP
      selector:
        k8s-app: node-exporter

4. Verify the pods

    root@slave001:/apps# kubectl get po -nmonitoring -owide
    NAME                  READY   STATUS    RESTARTS   AGE     IP                NODE              NOMINATED NODE   READINESS GATES
    cadvisor-f58s5        1/1     Running   0          20m     192.168.192.152   192.168.192.152   <none>           <none>
    cadvisor-np42s        1/1     Running   0          20m     192.168.192.153   192.168.192.153   <none>           <none>
    cadvisor-v4mwr        1/1     Running   0          20m     192.168.192.151   192.168.192.151   <none>           <none>
    node-exporter-897xp   1/1     Running   5          4m55s   192.168.192.151   192.168.192.151   <none>           <none>
    node-exporter-gvkm9   1/1     Running   5          4m55s   192.168.192.152   192.168.192.152   <none>           <none>
    node-exporter-xx5vg   1/1     Running   5          4m55s   192.168.192.153   192.168.192.153   <none>           <none>

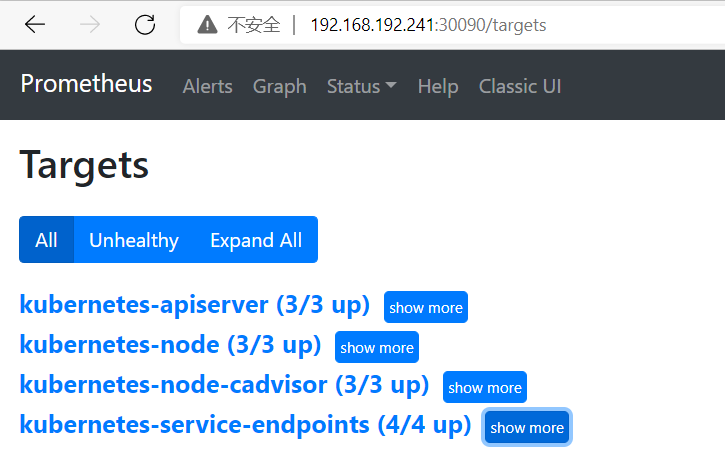

5. Deploy Prometheus in k8s to collect the monitoring data

    root@master001:~/prometheus# mkdir -p /data/prometheusdata    # create the directory to be mounted
    root@master001:~/prometheus# chmod 777 /data/prometheusdata/

    root@master001:~/prometheus# kubectl create serviceaccount monitor -nmonitoring    # create the monitoring service account

    root@master001:~/prometheus# kubectl create clusterrolebinding monitor-clusterrolebinding -nmonitoring --clusterrole=cluster-admin --serviceaccount=monitoring:monitor    # grant permissions to the monitor account
    clusterrolebinding.rbac.authorization.k8s.io/monitor-clusterrolebinding created

    root@master001:~/prometheus# cat case3-1-prometheus-cfg.yaml    # Prometheus configuration stored in a ConfigMap
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus-config
      namespace: monitoring
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          scrape_timeout: 10s
          evaluation_interval: 1m
        scrape_configs:
        - job_name: 'kubernetes-node'
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:9100'
            target_label: __address__
            action: replace
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
        - job_name: 'kubernetes-node-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        - job_name: 'kubernetes-apiserver'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
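The first relabel rule of the `kubernetes-node` job is easier to follow with concrete values. The Python sketch below is not part of the original setup; it only imitates the `replace` action, assuming that Prometheus anchors the regex at both ends and that `${1}` refers to the first capture group. The helper name `rewrite_node_address` is hypothetical.

```python
import re

# Values copied from the 'kubernetes-node' job in the ConfigMap.
REGEX = r"(.*):10250"
REPLACEMENT = r"\g<1>:9100"   # Python spelling of Prometheus's '${1}:9100'


def rewrite_node_address(address: str) -> str:
    """Return the address that would be scraped after the replace rule."""
    match = re.fullmatch(REGEX, address)   # relabel regexes are fully anchored
    if match is None:
        return address                     # no match: the label stays unchanged
    return match.expand(REPLACEMENT)


for discovered in ["192.168.192.151:10250", "192.168.192.152:10250"]:
    print(discovered, "->", rewrite_node_address(discovered))
```

In other words, `role: node` discovers the kubelet port 10250, and the rule swaps it for the node-exporter port 9100 on the same host.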

    root@master001:~/prometheus# kubectl apply -f case3-1-prometheus-cfg.yaml
    configmap/prometheus-config created
    root@master001:~/prometheus# kubectl get configmaps -nmonitoring
    NAME                DATA   AGE
    kube-root-ca.crt    1      91m
    prometheus-config   1      22s

    root@master001:~/prometheus# kubectl describe configmaps -nmonitoring prometheus-config
    Name:         prometheus-config
    Namespace:    monitoring
    Labels:       app=prometheus
    Annotations:  <none>

    Data
    ====
    prometheus.yml:
    ----
    (identical to the prometheus.yml content of case3-1-prometheus-cfg.yaml shown above)

    Events:  <none>

    root@master001:~/prometheus# kubectl apply -f case3-2-prometheus-deployment.yaml    # create the Deployment
    deployment.apps/prometheus-server created
    root@master001:~/prometheus# cat case3-2-prometheus-deployment.yaml
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: prometheus-server
      namespace: monitoring
      labels:
        app: prometheus
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
          component: server
        #matchExpressions:
        #- {key: app, operator: In, values: [prometheus]}
        #- {key: component, operator: In, values: [server]}
      template:
        metadata:
          labels:
            app: prometheus
            component: server
          annotations:
            prometheus.io/scrape: 'false'
        spec:
          nodeName: 192.168.192.151
          serviceAccountName: monitor
          containers:
          - name: prometheus
            image: prom/prometheus:v2.31.2
            imagePullPolicy: IfNotPresent
            command:
            - prometheus
            - --config.file=/etc/prometheus/prometheus.yml
            - --storage.tsdb.path=/prometheus
            - --storage.tsdb.retention=720h
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: /etc/prometheus/prometheus.yml
              name: prometheus-config
              subPath: prometheus.yml
            - mountPath: /prometheus/
              name: prometheus-storage-volume
          volumes:
          - name: prometheus-config
            configMap:
              name: prometheus-config
              items:
              - key: prometheus.yml
                path: prometheus.yml
                mode: 0644
          - name: prometheus-storage-volume
            hostPath:
              path: /data/prometheusdata
              type: Directory

    root@master001:~/prometheus# kubectl apply -f case3-3-prometheus-svc.yaml    # create the Service
    service/prometheus created
    root@master001:~/prometheus# cat case3-3-prometheus-svc.yaml
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: prometheus
      namespace: monitoring
      labels:
        app: prometheus
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30090
        protocol: TCP
      selector:
        app: prometheus
        component: server
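A detail worth checking before reusing these manifests: the node-exporter Service asks for `nodePort: 39100`, while the Prometheus Service uses `30090`. The default kube-apiserver `--service-node-port-range` is 30000-32767, so 39100 is only accepted on a cluster whose range has been widened (presumably the case here, which is an assumption, since the source does not show the apiserver flags). A minimal Python sketch of that check, with hypothetical names:

```python
# Default kube-apiserver --service-node-port-range is 30000-32767.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)

# nodePort values taken from the two Service manifests in this section.
node_ports = {"node-exporter": 39100, "prometheus": 30090}


def in_default_range(port: int) -> bool:
    """True if the port fits the default NodePort range."""
    return port in DEFAULT_NODE_PORT_RANGE


for name, port in node_ports.items():
    status = "ok" if in_default_range(port) else "outside the default range"
    print(f"{name}: nodePort {port} -> {status}")
```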

    root@master002:~# netstat -natpl|grep 10250
    tcp        0      0 192.168.192.242:10250   0.0.0.0:*               LISTEN      946/kubelet
    tcp        0      0 192.168.192.242:10250   192.168.192.242:48770   ESTABLISHED 946/kubelet
    tcp        0      0 192.168.192.242:48770   192.168.192.242:10250   ESTABLISHED 869/kube-apiserver
    tcp        0      0 192.168.192.242:59380   192.168.192.243:10250   ESTABLISHED 869/kube-apiserver
    tcp        0      0 192.168.192.242:53984   192.168.192.241:10250   ESTABLISHED 869/kube-apiserver

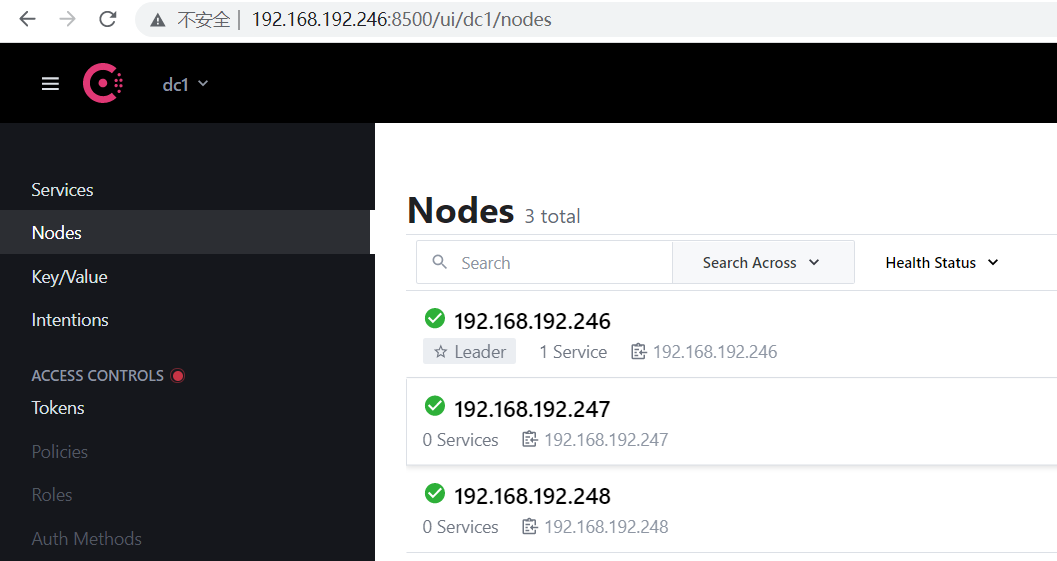

II. Deploying a Consul cluster

https://releases.hashicorp.com/consul/

Environment: 192.168.192.246-248 consul

192.168.192.241-243 k8s

192.168.192.182 prometheus

    root@ubuntu20:/apps# unzip consul_1.11.0_linux_amd64.zip
    Archive:  consul_1.11.0_linux_amd64.zip
      inflating: consul
    root@ubuntu20:/apps# cp consul /usr/local/bin/

    root@ubuntu20:/apps# consul -h    # verify the installation
    Usage: consul [--version] [--help] <command> [<args>]

    mkdir /data/consul -p

    root@ubuntu246:/apps# nohup consul agent -server -bootstrap -bind=192.168.192.246 -client=192.168.192.246 --data-dir=/data/consul -ui -node=192.168.192.246 &
    root@ubuntu247:/apps# nohup consul agent -bind=192.168.192.247 -client=192.168.192.247 --data-dir=/data/consul -node=192.168.192.247 -join=192.168.192.246 &
    nohup: ignoring input and appending output to 'nohup.out'
    root@ubuntu20:/apps# nohup consul agent -bind=192.168.192.248 -client=192.168.192.248 --data-dir=/data/consul -node=192.168.192.248 -join=192.168.192.246 &
    nohup: ignoring input and appending output to 'nohup.out'

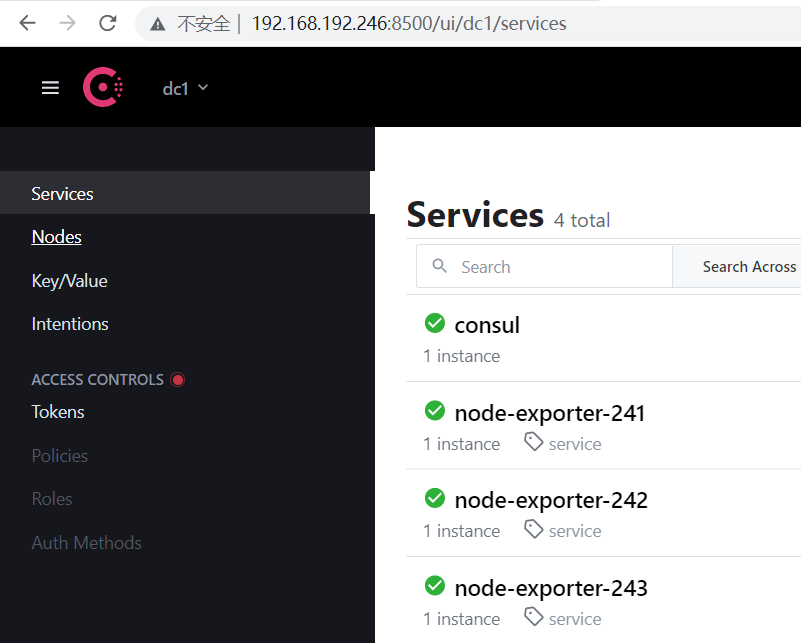

    root@jiajiedian:/apps# curl http://192.168.192.246:8500/v1/agent/members
    root@ubuntu20:/apps# curl -X PUT -d '{"id": "node-exporter-241","name": "node-exporter-241","address": "192.168.192.241","port": 9100,"tags": ["service"],"checks": [{"http": "http://192.168.192.241:9100/","interval": "5s"}]}' http://192.168.192.246:8500/v1/agent/service/register
    root@ubuntu20:/apps# curl -X PUT -d '{"id": "node-exporter-242","name": "node-exporter-242","address": "192.168.192.242","port": 9100,"tags": ["service"],"checks": [{"http": "http://192.168.192.242:9100/","interval": "5s"}]}' http://192.168.192.246:8500/v1/agent/service/register
    root@ubuntu20:/apps# curl -X PUT -d '{"id": "node-exporter-243","name": "node-exporter-243","address": "192.168.192.243","port": 9100,"tags": ["service"],"checks": [{"http": "http://192.168.192.243:9100/","interval": "5s"}]}' http://192.168.192.246:8500/v1/agent/service/register

    root@shanchu:/apps# curl --request PUT http://192.168.192.246:8500/v1/agent/service/deregister/node-exporter-242
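The three registration calls differ only in the node IP, so the request body can be generated instead of typed by hand. The following Python sketch is one possible way to do that, not the author's method; the helper `node_exporter_service` is hypothetical, and the commands are printed rather than sent so nothing is registered by running it.

```python
import json


def node_exporter_service(ip: str, port: int = 9100) -> dict:
    """Build the registration body used above for one node-exporter."""
    name = "node-exporter-" + ip.rsplit(".", 1)[-1]   # e.g. node-exporter-241
    return {
        "id": name,
        "name": name,
        "address": ip,
        "port": port,
        "tags": ["service"],
        "checks": [{"http": f"http://{ip}:{port}/", "interval": "5s"}],
    }


CONSUL = "192.168.192.246:8500"
for ip in ["192.168.192.241", "192.168.192.242", "192.168.192.243"]:
    body = json.dumps(node_exporter_service(ip))
    # Printed rather than sent, so the sketch has no side effects.
    print(f"curl -X PUT -d '{body}' http://{CONSUL}/v1/agent/service/register")
```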

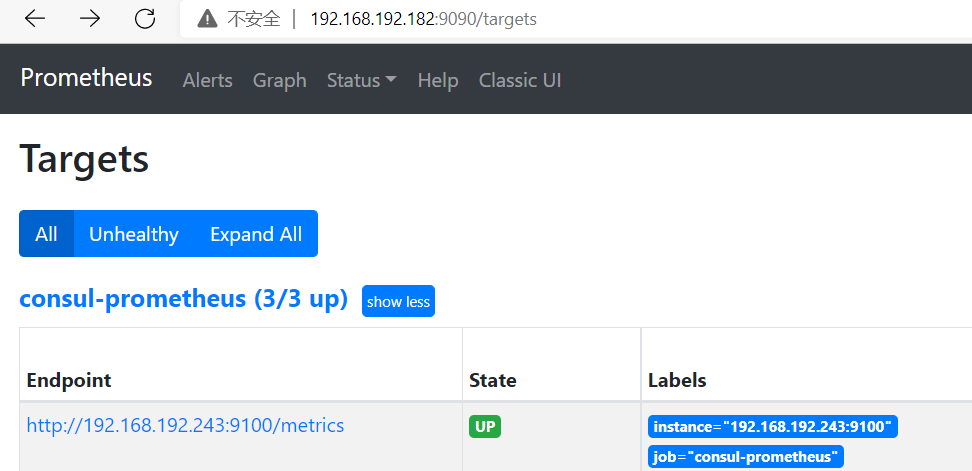

    - job_name: 'consul-prometheus'
      consul_sd_configs:
      - server: '192.168.192.246:8500'
        services: []
      relabel_configs:
      - source_labels: [__meta_consul_service]
        regex: .*consul*
        action: drop
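The `drop` rule is meant to keep Consul's own `consul` service out of the scrape targets. Because relabel regexes are fully anchored, `.*consul*` matches names that end in `consu` followed by any number of `l` characters, which is narrower than it may look. The Python sketch below illustrates this; `BROADER_REGEX` and the service name `consul-exporter` are hypothetical and only serve as a comparison.

```python
import re

DROP_REGEX = r".*consul*"        # as written in the job above
BROADER_REGEX = r".*consul.*"    # hypothetical alternative, not from the original


def is_dropped(service: str, regex: str = DROP_REGEX) -> bool:
    """True if a target with this __meta_consul_service value would be dropped."""
    return re.fullmatch(regex, service) is not None


for service in ["consul", "node-exporter-241", "consul-exporter"]:
    print(service, is_dropped(service), is_dropped(service, BROADER_REGEX))
```

For the services registered in this walkthrough both patterns behave the same: `consul` is dropped and the node-exporter entries are kept.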

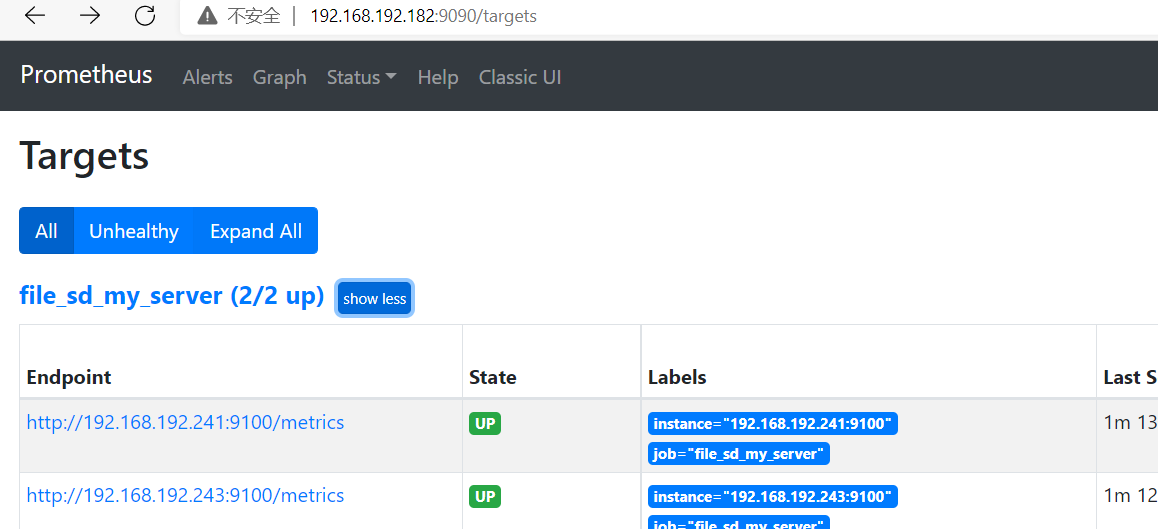

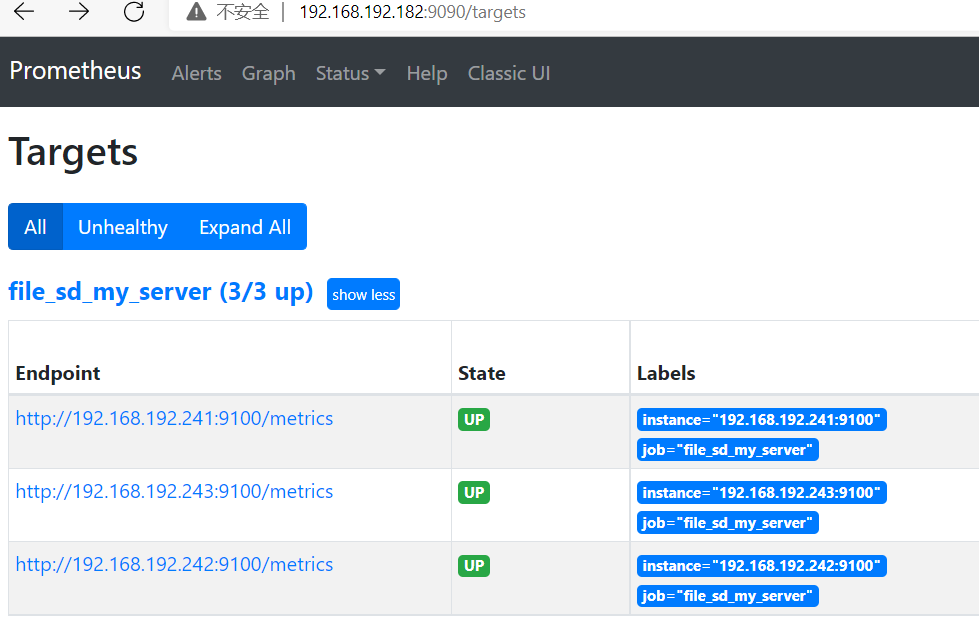

III. file_sd_config

    root@pro182:/apps/prometheus# cat prometheus.yml
    global:
      scrape_interval: 5s # Scrape targets every 5 seconds. Default is every 1 minute.
      evaluation_interval: 5s # Evaluate rules every 5 seconds. The default is every 1 minute.
    #alerting:
    #  alertmanagers:
    #    - static_configs:
    #        - targets:
    #          - 192.168.192.189:9093
    rule_files:
      - "/apps/prometheus/*.yaml"
    scrape_configs:
      - job_name: "prometheus-cadvisor"
        static_configs:
          - targets: ["192.168.192.243:8080","192.168.192.242:8080","192.168.192.241:8080"]
      - job_name: 'file_sd_my_server'
        file_sd_configs:
          - files:
            - /apps/prometheus/file_sd/sd_my_server.json
            refresh_interval: 10s

    root@pro182:/apps/prometheus# cat /apps/prometheus/file_sd/sd_my_server.json
    [
      {
        "targets": [ "192.168.192.241:9100","192.168.192.243:9100"]
      }
    ]
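Since Prometheus re-reads the file_sd file on its own, the target list is usually maintained by a script. Below is a minimal Python sketch of such a writer, under the assumption that replacing the file in one step (write to a temporary file, then rename) is preferable to editing it in place. The function name `write_file_sd` is hypothetical, and the demo writes to a temporary directory instead of `/apps/prometheus/file_sd/`.

```python
import json
import os
import tempfile


def write_file_sd(path, targets, labels=None):
    """Write one target group in file_sd JSON format, replacing the file atomically."""
    group = {"targets": list(targets)}
    if labels:
        group["labels"] = dict(labels)
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump([group], handle, indent=2)
    os.replace(tmp_path, path)   # rename over the old file in a single step


with tempfile.TemporaryDirectory() as demo_dir:
    target_file = os.path.join(demo_dir, "sd_my_server.json")
    write_file_sd(target_file, ["192.168.192.241:9100", "192.168.192.243:9100"])
    with open(target_file) as handle:
        written = json.load(handle)
    print(written)
```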

    root@pro182:/apps/prometheus# cat prometheus.yml
    global:
      scrape_interval: 5s # Scrape targets every 5 seconds. Default is every 1 minute.
      evaluation_interval: 5s # Evaluate rules every 5 seconds. The default is every 1 minute.
    #alerting:
    #  alertmanagers:
    #    - static_configs:
    #        - targets:
    #          - 192.168.192.189:9093
    rule_files:
      - "/apps/prometheus/*.yaml"
    scrape_configs:
      - job_name: "prometheus-cadvisor"
        static_configs:
          - targets: ["192.168.192.243:8080","192.168.192.242:8080","192.168.192.241:8080"]
      - job_name: 'file_sd_my_server'
        file_sd_configs:
          - files:
            - /apps/prometheus/file_sd/sd_my_server.json
            refresh_interval: 10s
      - job_name: 'kubernetes-nodes-monitor'
        scheme: http
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /apps/prometheus/k8s.token
        kubernetes_sd_configs:
        - role: node
          api_server: https://192.168.192.241:6443
          tls_config:
            insecure_skip_verify: true
          bearer_token_file: /apps/prometheus/k8s.token
        relabel_configs:
        - source_labels: [__address__]
          regex: '(.*):10250'
          replacement: '${1}:9100'
          target_label: __address__
          action: replace
        - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
          regex: '(.*)'
          replacement: '${1}'
          action: replace
          target_label: LOC
        - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
          regex: '(.*)'
          replacement: 'NODE'
          action: replace
          target_label: Type
        - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
          regex: '(.*)'
          replacement: 'K3S-test'
          action: replace
          target_label: Env
        - action: labelmap
          regex: __meta_kubernetes_node_label_(.+)
      - job_name: 'kubernetes-pods-monitor'
        kubernetes_sd_configs:
        - role: pod
          api_server: https://192.168.192.241:6443
          tls_config:
            insecure_skip_verify: true
          bearer_token_file: /apps/prometheus/k8s.token
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /apps/prometheus/k8s.token
        relabel_configs:
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
          action: keep
          regex: true
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
          action: replace
          target_label: __metrics_path__
          regex: (.+)
        - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
          action: replace
          regex: ([^:]+)(?::\d+)?;(\d+)
          replacement: $1:$2
          target_label: __address__
        - action: labelmap
          regex: __meta_kubernetes_pod_label_(.+)
        - source_labels: [__meta_kubernetes_namespace]
          action: replace
          target_label: kubernetes_namespace
        - source_labels: [__meta_kubernetes_pod_name]
          action: replace
          target_label: kubernetes_pod_name
        - source_labels: [__meta_kubernetes_pod_label_pod_template_hash]
          regex: '(.*)'
          replacement: 'K8S-test'
          action: replace
          target_label: Env
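The rule in `kubernetes-pods-monitor` that combines `__address__` with the `prometheus.io/port` annotation is the least obvious one in this file. The two source labels are joined with `;` and the regex then keeps the host part and swaps in the annotated port. A small Python sketch of that behaviour follows; the pod IP `10.200.1.7` and the function name are made up for illustration.

```python
import re

# Copied from the relabel rule; '$1:$2' becomes '\g<1>:\g<2>' in Python.
REGEX = r"([^:]+)(?::\d+)?;(\d+)"
REPLACEMENT = r"\g<1>:\g<2>"


def pod_scrape_address(address: str, port_annotation: str) -> str:
    """Return __address__ after the replace rule, given the annotation value."""
    joined = f"{address};{port_annotation}"   # source labels are joined with ';'
    match = re.fullmatch(REGEX, joined)
    return match.expand(REPLACEMENT) if match else address


print(pod_scrape_address("10.200.1.7:8080", "9102"))  # discovered port replaced
print(pod_scrape_address("10.200.1.7", "9102"))       # no discovered port
print(pod_scrape_address("10.200.1.7:8080", ""))      # no annotation: unchanged
```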

IV. Authorizing a Prometheus server that runs outside k8s

    root@master001:~/prometheus# cat case4-prom-rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: monitoring
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - services
      - endpoints
      - pods
      - nodes/proxy
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      - nodes/metrics
      verbs:
      - get
    - nonResourceURLs:
      - /metrics
      verbs:
      - get
    ---
    #apiVersion: rbac.authorization.k8s.io/v1beta1
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: monitoring

    root@master001:~/prometheus# kubectl get serviceaccount -nmonitoring
    NAME         SECRETS   AGE
    default      1         2d23h
    monitor      1         2d22h
    prometheus   1         72s

    root@master001:~/prometheus# kubectl get secret -nmonitoring
    NAME                     TYPE                                  DATA   AGE
    default-token-r9hd5      kubernetes.io/service-account-token   3      2d23h
    monitor-token-kbvpj      kubernetes.io/service-account-token   3      2d22h
    prometheus-token-srhnl   kubernetes.io/service-account-token   3      2m8s

    root@master001:~/prometheus# kubectl describe secret prometheus-token-srhnl -nmonitoring
    Name:         prometheus-token-srhnl
    Namespace:    monitoring
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: prometheus
                  kubernetes.io/service-account.uid: 2d9853d0-adfe-415c-963d-dd1da807c6cc

    Type:  kubernetes.io/service-account-token

    Data
    ====
    ca.crt:     1350 bytes
    namespace:  10 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6Ikc1YlhjN0w1SkJhcmRIWkdwRnBzMlM2NFh4dWxWZ0hkVXVwU0I3dWtFWEEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJtb25pdG9yaW5nIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InByb21ldGhldXMtdG9rZW4tc3JobmwiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoicHJvbWV0aGV1cyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjJkOTg1M2QwLWFkZmUtNDE1Yy05NjNkLWRkMWRhODA3YzZjYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDptb25pdG9yaW5nOnByb21ldGhldXMifQ.r7KzJqb1cDRiC_ioFuHeysQpzmOYTJr_47acfxQq4ngczhv3sn7O0_evOLRFWdfwcG-S80h93Us1rKPu-5tJYdYpo66rWYkQpF8EgjtWdd7UPVD2VG56tm249oL9BFNaRCayEMXLZycy_hX39hR4UcZnfhkHvsnxTiIjRI_S-sFEMFiy9VhD6Szq3VNwDqj197CflaiQqhTj0vVBSZiD16EuTsRE6T_sZDUIByXQIbKmuj2OvaqWGd5zidPeDLcXCbtTOr_TQx8gXFRwL1ZkQnjVp71nvRR8DuRv6f9CwCr4dVnQD1gkbXOISWaGuDA0vtEUdj8s1LW740XO8SraCg
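The token shown by `kubectl describe secret` is a JWT, and its middle segment records which service account it belongs to. Before copying a token into the file referenced by `bearer_token_file`, it can be handy to confirm that it is the `prometheus` account's token and not `monitor` or `default`. The Python sketch below decodes the payload without verifying the signature; it uses a synthetic token built inside the script, so every value in `sample_token` is illustrative and not taken from a real cluster.

```python
import base64
import json


def decode_jwt_payload(token: str) -> dict:
    """Decode the claims segment of a JWT (no signature verification)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)   # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def _encode(part: dict) -> str:
    raw = base64.urlsafe_b64encode(json.dumps(part).encode())
    return raw.rstrip(b"=").decode()


# Synthetic, unsigned token used only for this demonstration.
sample_token = ".".join([
    _encode({"alg": "RS256"}),
    _encode({
        "iss": "kubernetes/serviceaccount",
        "kubernetes.io/serviceaccount/namespace": "monitoring",
        "sub": "system:serviceaccount:monitoring:prometheus",
    }),
    "signature-placeholder",
])

claims = decode_jwt_payload(sample_token)
print(claims["sub"])
```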

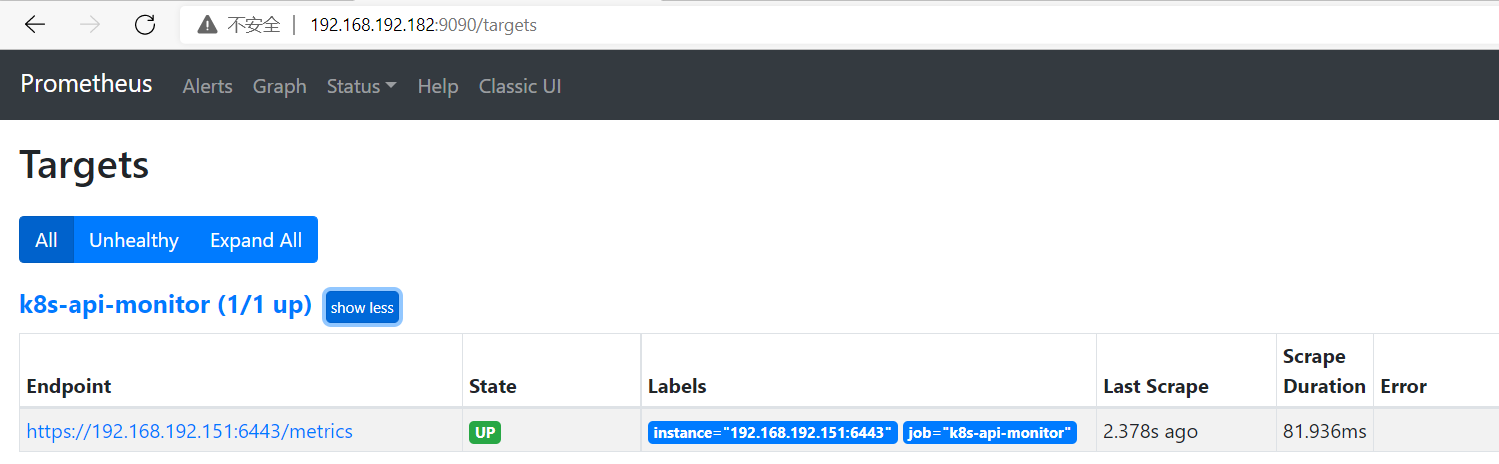

    root@pro182:/apps/prometheus# cat prometheus.yml
    # my global config
    global:
      scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
      evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
      # scrape_timeout is set to the global default (10s).

    # Alertmanager configuration
    alerting:
      alertmanagers:
        - static_configs:
            - targets:
              - 192.168.192.189:9093

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      - "/apps/prometheus/*.yaml"
      # - "first_rules.yml"
      # - "second_rules.yml"

    scrape_configs:
      - job_name: "prometheus"
        static_configs:
          - targets: ["localhost:9090"]
      - job_name: "prometheus-node_exporter"
        static_configs:
          - targets: ["192.168.192.151:39100","192.168.192.152:39100","192.168.192.153:39100"]
      # website monitoring
      - job_name: 'wangzhanjiankong-9115'
        metrics_path: /probe
        params:
          module: [http_2xx]
        static_configs:
          - targets: ['http://www.baidu.com', 'http://www.xiaomi.com']
            labels:
              instance: http_status
              group: web
        relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - source_labels: [__param_target]
            target_label: url
          - target_label: __address__
            replacement: 192.168.192.189:9115
      # port monitoring
      - job_name: 'port_6443'
        metrics_path: /probe
        params:
          module: [tcp_connect]
        static_configs:
          - targets: ['192.168.192.151:6443', '192.168.192.152:6443']
            labels:
              instance: port_status
              group: port
        relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - source_labels: [__param_target]
            target_label: url
          - target_label: __address__
            replacement: 192.168.192.189:9115
      - job_name: "prometheus-cadvisor"
        static_configs:
          - targets: ["192.168.192.151:8080","192.168.192.152:8080","192.168.192.153:8080"]
      # monitor k8s from the binary-installed Prometheus
      - job_name: "k8s-api-monitor"
        kubernetes_sd_configs:
        - role: endpoints
          api_server: https://192.168.192.151:6443
          tls_config:
            insecure_skip_verify: true
          bearer_token_file: /apps/prometheus/k8s.token
        scheme: https
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /apps/prometheus/k8s.token
        relabel_configs:
        - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
          action: keep
          regex: default;kubernetes;https
        - target_label: __address__
          replacement: 192.168.192.151:6443
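In the two `/probe` jobs, the relabel rules move the listed target into the `target` query parameter and then point `__address__` at 192.168.192.189:9115 (presumably a blackbox_exporter, judging by the `http_2xx` and `tcp_connect` module names; the source does not say so explicitly). The Python sketch below reconstructs the URL that would be requested for each target, which is also a convenient URL to try by hand when a probe fails. The function name `probe_url` is hypothetical.

```python
from urllib.parse import urlencode

# Exporter address taken from the relabel rule's replacement value.
EXPORTER = "192.168.192.189:9115"


def probe_url(module: str, target: str) -> str:
    """Build the probe request that results from the relabel rules above."""
    query = urlencode({"module": module, "target": target})
    return f"http://{EXPORTER}/probe?{query}"


print(probe_url("http_2xx", "http://www.baidu.com"))
print(probe_url("tcp_connect", "192.168.192.151:6443"))
```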

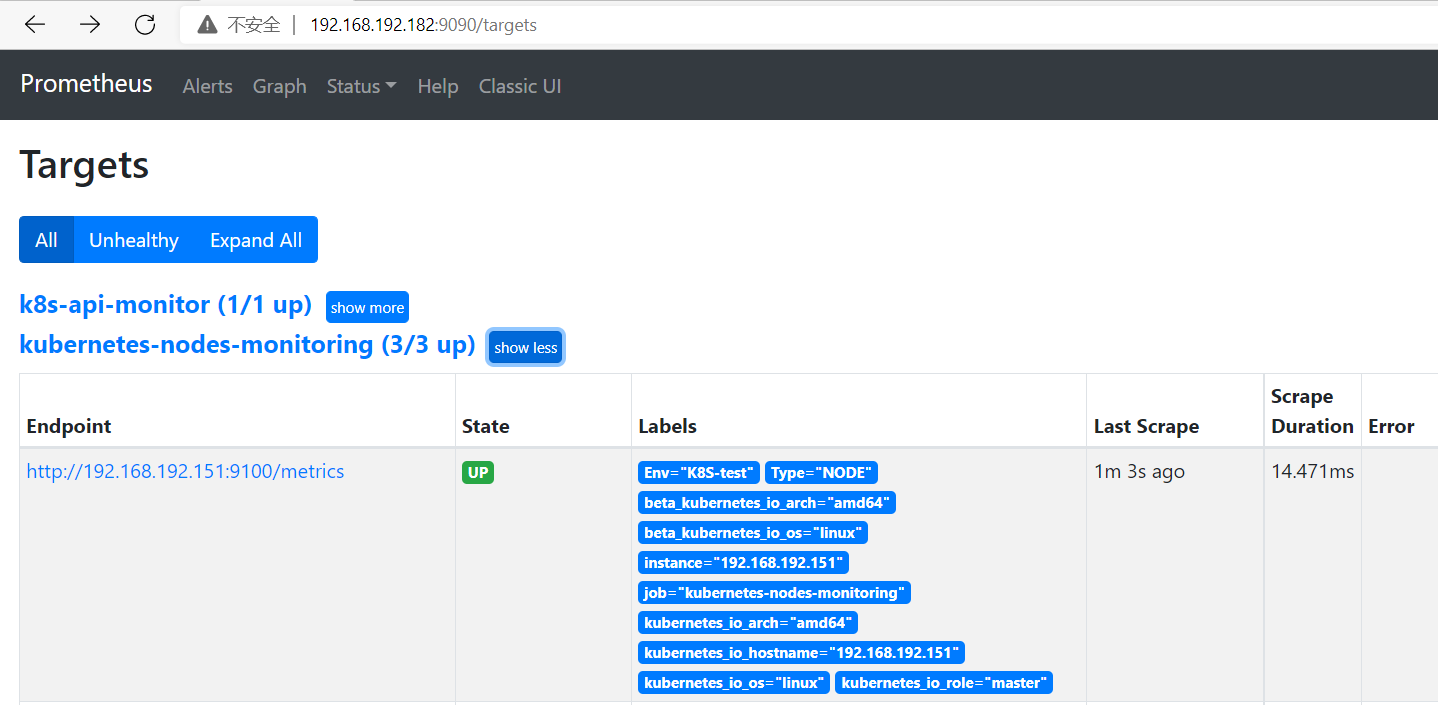

    - job_name: 'kubernetes-nodes-monitoring'
      scheme: http
      tls_config:
        insecure_skip_verify: true
      bearer_token_file: /apps/prometheus/k8s.token
      kubernetes_sd_configs:
      - role: node
        api_server: https://192.168.192.151:6443
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /apps/prometheus/k8s.token
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: '${1}'
        action: replace
        target_label: LOC
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: 'NODE'
        action: replace
        target_label: Type
      - source_labels: [__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region]
        regex: '(.*)'
        replacement: 'K8S-test'
        action: replace
        target_label: Env
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
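The `LOC`, `Type` and `Env` rules all read the same region label, which many on-premises nodes do not carry. Since `(.*)` also matches an empty string, the constant replacements `NODE` and `K8S-test` are still applied, while `${1}` expands to nothing and, as far as I understand Prometheus's relabeling, an empty result removes the target label instead of setting it. The Python sketch below imitates that behaviour for a node without a region label; `replace_label` is a hypothetical helper, not a Prometheus API.

```python
import re


def replace_label(labels, source, regex, replacement, target):
    """Imitate the `replace` action for a single source label."""
    value = labels.get(source, "")          # a missing label reads as ""
    match = re.fullmatch(regex, value)
    if match is None:
        return dict(labels)
    result = match.expand(replacement)
    updated = dict(labels)
    if result:
        updated[target] = result
    else:
        updated.pop(target, None)           # an empty result removes the label
    return updated


REGION = "__meta_kubernetes_node_label_failure_domain_beta_kubernetes_io_region"
labels = {"__address__": "192.168.192.151:9100"}   # node without a region label

labels = replace_label(labels, REGION, r"(.*)", r"\g<1>", "LOC")
labels = replace_label(labels, REGION, r"(.*)", "NODE", "Type")
labels = replace_label(labels, REGION, r"(.*)", "K8S-test", "Env")
print(labels)
```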
