istio-1.12.0: Deployment and Usage

I. Installing Istio on k8s

https://github.com/istio/istio/releases/tag/1.12.0

https://github.com/istio/istio/releases/download/1.12.0/istio-1.12.0-linux-amd64.tar.gz

root@master001:~/istio/istio-1.12.0/bin# cp -a istioctl /usr/bin/

    root@master001:~/istio-1.12.0# istioctl install --set profile=demo
    This will install the Istio 1.12.0 demo profile with ["Istio core" "Istiod" "Ingress gateways" "Egress gateways"] components into the cluster. Proceed? (y/N) y

root@slave001:~# docker images |grep istio

istio/proxyv2:1.12.0

istio/pilot:1.12.0

    root@slave001:~# kubectl get po -A
    NAMESPACE      NAME                                   READY   STATUS    RESTARTS   AGE
    istio-system   istio-egressgateway-7f4864f59c-nz69w   1/1     Running   0          9m48s
    istio-system   istio-ingressgateway-55d9fb9f-trmkq    1/1     Running   0          9m29s
    istio-system   istiod-555d47cb65-dlfs4                1/1     Running   0          24m

    root@master001:~/istio/istio-1.12.0/bin# kubectl get svc -n istio-system
    NAME                   TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                      AGE
    istio-egressgateway    ClusterIP      10.100.185.189   <none>        80/TCP,443/TCP                                                               28m
    istio-ingressgateway   LoadBalancer   10.100.94.111    <pending>     15021:60211/TCP,80:57328/TCP,443:61049/TCP,31400:2464/TCP,15443:59853/TCP   28m
    istiod                 ClusterIP      10.100.136.217   <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP                                        29m

II. Deploying the Bookinfo online bookstore with Istio

2.1 Overview of the Bookinfo application

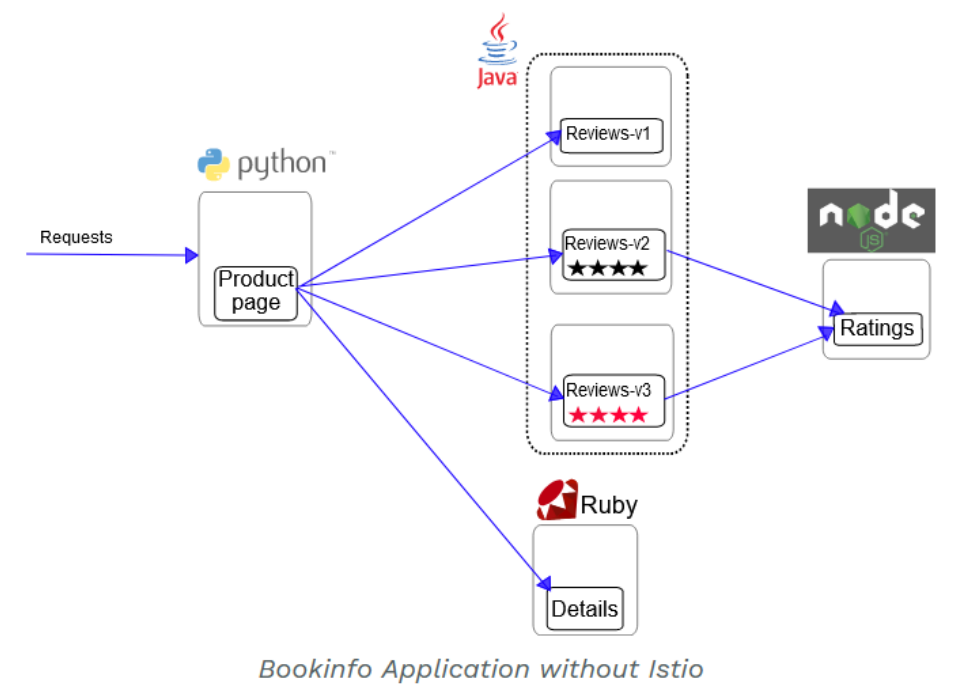

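Bookinfo is an online bookstore application built from four separate microservices. It mimics a single catalog entry in an online bookstore. The page shows a description of a book, the book's details (ISBN, number of pages, and so on), and some reviews of the book.

The Bookinfo application is split into four separate microservices: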

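1) productpage calls the details and reviews microservices to build the page;

2) details contains the book information;

3) reviews contains the book reviews and also calls the ratings microservice;

4) ratings contains the book rating information that accompanies each review.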

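The reviews microservice has three versions:

1) v1 does not call the ratings service;

2) v2 calls the ratings service and shows each rating as 1 to 5 black stars;

3) v3 calls the ratings service and shows each rating as 1 to 5 red stars.

The Bookinfo microservices are written in different languages. They do not depend on Istio, but together they form a representative service-mesh example: several services, several languages, and several versions of the reviews service.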

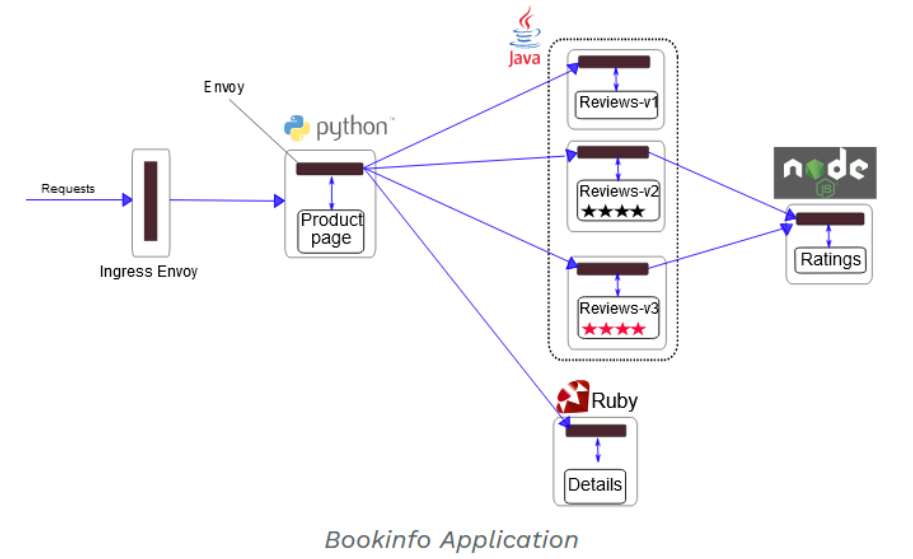

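Running this application under Istio requires no changes to the application itself. You configure and run the services in an Istio-enabled environment, which in practice means injecting an Envoy sidecar into each service. The figure shows the resulting deployment.

Every microservice is paired with an Envoy sidecar. The sidecar intercepts all of the service's inbound and outbound traffic, which provides the hooks needed for external control. The Istio control plane then uses those hooks to provide routing, telemetry collection, and policy enforcement for the application.

2.2 Deploying Bookinfo

1) Istio injects the sidecar automatically only into namespaces labeled istio-injection=enabled, so label the default namespace: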

root@master001:~# kubectl label namespace default istio-injection=enabled

    root@master001:~/istio-canary# kubectl describe ns default | grep istio-injection
    Labels:       istio-injection=enabled

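To turn automatic injection off again later, overwrite the label and check the result:

    root@master001:~/istio-canary# kubectl label namespace default istio-injection=disabled --overwrite
    namespace/default labeled
    root@master001:~/istio-canary# kubectl get namespace -L istio-injection

2) Deploy the Bookinfo application with kubectl: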

root@master001:~/istio/istio-1.12.0# kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml

Images used:

docker.io/istio/examples-bookinfo-details-v1:1.16.2

docker.io/istio/examples-bookinfo-productpage-v1:1.16.2

docker.io/istio/examples-bookinfo-ratings-v1:1.16.2

docker.io/istio/examples-bookinfo-reviews-v1:1.16.2

docker.io/istio/examples-bookinfo-reviews-v2:1.16.2

docker.io/istio/examples-bookinfo-reviews-v3:1.16.2

    root@slave001:~/bookinfo# kubectl get po
    NAME                              READY   STATUS    RESTARTS   AGE
    details-v1-79f774bdb9-zdmwn       2/2     Running   0          50m
    productpage-v1-6b746f74dc-g6tgw   2/2     Running   0          50m
    ratings-v1-b6994bb9-gtv7t         2/2     Running   0          50m
    reviews-v1-545db77b95-k4tfn       2/2     Running   0          12m
    reviews-v2-7bf8c9648f-p7mc6       2/2     Running   0          8m20s
    reviews-v3-84779c7bbc-fb5bq       2/2     Running   0          119s

READY 2/2 means each Pod runs the application container plus the injected istio-proxy sidecar.

    root@master001:~/istio/istio-1.12.0# kubectl get services
    NAME          TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    canary        ClusterIP   10.100.134.132   <none>        80/TCP     24d
    details       ClusterIP   10.100.52.34     <none>        9080/TCP   53s
    kubernetes    ClusterIP   10.100.0.1       <none>        443/TCP    59d
    productpage   ClusterIP   10.100.241.70    <none>        9080/TCP   53s
    ratings       ClusterIP   10.100.69.124    <none>        9080/TCP   53s
    reviews       ClusterIP   10.100.177.75    <none>        9080/TCP   53s

    root@master001:~/istio/istio-1.12.0# cat samples/bookinfo/platform/kube/bookinfo.yaml
    # Copyright Istio Authors
    #
    #   Licensed under the Apache License, Version 2.0 (the "License");
    #   you may not use this file except in compliance with the License.
    #   You may obtain a copy of the License at
    #
    #       http://www.apache.org/licenses/LICENSE-2.0
    #
    #   Unless required by applicable law or agreed to in writing, software
    #   distributed under the License is distributed on an "AS IS" BASIS,
    #   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    #   See the License for the specific language governing permissions and
    #   limitations under the License.
    ##################################################################################################
    # This file defines the services, service accounts, and deployments for the Bookinfo sample.
    #
    # To apply all 4 Bookinfo services, their corresponding service accounts, and deployments:
    #
    #   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml
    #
    # Alternatively, you can deploy any resource separately:
    #
    #   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l service=reviews # reviews Service
    #   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l account=reviews # reviews ServiceAccount
    #   kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml -l app=reviews,version=v3 # reviews-v3 Deployment
    ##################################################################################################
    ##################################################################################################
    # Details service
    ##################################################################################################
    apiVersion: v1
    kind: Service
    metadata:
      name: details
      labels:
        app: details
        service: details
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: details
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-details
      labels:
        account: details
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: details-v1
      labels:
        app: details
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: details
          version: v1
      template:
        metadata:
          labels:
            app: details
            version: v1
        spec:
          serviceAccountName: bookinfo-details
          containers:
          - name: details
            image: docker.io/istio/examples-bookinfo-details-v1:1.16.2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9080
            securityContext:
              runAsUser: 1000
    ---
    ##################################################################################################
    # Ratings service
    ##################################################################################################
    apiVersion: v1
    kind: Service
    metadata:
      name: ratings
      labels:
        app: ratings
        service: ratings
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: ratings
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-ratings
      labels:
        account: ratings
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ratings-v1
      labels:
        app: ratings
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: ratings
          version: v1
      template:
        metadata:
          labels:
            app: ratings
            version: v1
        spec:
          serviceAccountName: bookinfo-ratings
          containers:
          - name: ratings
            image: docker.io/istio/examples-bookinfo-ratings-v1:1.16.2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9080
            securityContext:
              runAsUser: 1000
    ---
    ##################################################################################################
    # Reviews service
    ##################################################################################################
    apiVersion: v1
    kind: Service
    metadata:
      name: reviews
      labels:
        app: reviews
        service: reviews
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: reviews
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-reviews
      labels:
        account: reviews
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: reviews-v1
      labels:
        app: reviews
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: reviews
          version: v1
      template:
        metadata:
          labels:
            app: reviews
            version: v1
        spec:
          serviceAccountName: bookinfo-reviews
          containers:
          - name: reviews
            image: docker.io/istio/examples-bookinfo-reviews-v1:1.16.2
            imagePullPolicy: IfNotPresent
            env:
            - name: LOG_DIR
              value: "/tmp/logs"
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            - name: wlp-output
              mountPath: /opt/ibm/wlp/output
            securityContext:
              runAsUser: 1000
          volumes:
          - name: wlp-output
            emptyDir: {}
          - name: tmp
            emptyDir: {}
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: reviews-v2
      labels:
        app: reviews
        version: v2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: reviews
          version: v2
      template:
        metadata:
          labels:
            app: reviews
            version: v2
        spec:
          serviceAccountName: bookinfo-reviews
          containers:
          - name: reviews
            image: docker.io/istio/examples-bookinfo-reviews-v2:1.16.2
            imagePullPolicy: IfNotPresent
            env:
            - name: LOG_DIR
              value: "/tmp/logs"
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            - name: wlp-output
              mountPath: /opt/ibm/wlp/output
            securityContext:
              runAsUser: 1000
          volumes:
          - name: wlp-output
            emptyDir: {}
          - name: tmp
            emptyDir: {}
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: reviews-v3
      labels:
        app: reviews
        version: v3
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: reviews
          version: v3
      template:
        metadata:
          labels:
            app: reviews
            version: v3
        spec:
          serviceAccountName: bookinfo-reviews
          containers:
          - name: reviews
            image: docker.io/istio/examples-bookinfo-reviews-v3:1.16.2
            imagePullPolicy: IfNotPresent
            env:
            - name: LOG_DIR
              value: "/tmp/logs"
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            - name: wlp-output
              mountPath: /opt/ibm/wlp/output
            securityContext:
              runAsUser: 1000
          volumes:
          - name: wlp-output
            emptyDir: {}
          - name: tmp
            emptyDir: {}
    ---
    ##################################################################################################
    # Productpage services
    ##################################################################################################
    apiVersion: v1
    kind: Service
    metadata:
      name: productpage
      labels:
        app: productpage
        service: productpage
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: productpage
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: bookinfo-productpage
      labels:
        account: productpage
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: productpage-v1
      labels:
        app: productpage
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: productpage
          version: v1
      template:
        metadata:
          labels:
            app: productpage
            version: v1
        spec:
          serviceAccountName: bookinfo-productpage
          containers:
          - name: productpage
            image: docker.io/istio/examples-bookinfo-productpage-v1:1.16.2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            securityContext:
              runAsUser: 1000
          volumes:
          - name: tmp
            emptyDir: {}
    ---

    root@master001:~/istio/istio-1.12.0# kubectl get serviceAccount --namespace=default
    NAME                   SECRETS   AGE
    bookinfo-details       1         4m28s
    bookinfo-productpage   1         4m27s
    bookinfo-ratings       1         4m28s
    bookinfo-reviews       1         4m28s
    default                1         59d

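A ServiceAccount is an account used by the processes running in a Pod. Despite the name, Service objects do not use it.

The concept exists so that processes in a Pod can access the Kubernetes cluster. Through its ServiceAccount, a Pod obtains an identity (a username) and a token, which it uses to call the Kubernetes API server. Istio also derives each workload's mTLS identity from its ServiceAccount, in the form spiffe://cluster.local/ns/NAMESPACE/sa/SERVICE_ACCOUNT.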
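To make the two uses of a ServiceAccount concrete, here is a minimal Python sketch. It assumes the standard in-Pod mount directory /var/run/secrets/kubernetes.io/serviceaccount and Istio's default trust domain cluster.local. The helper names `read_service_account` and `spiffe_id` are hypothetical and not part of any Kubernetes or Istio library.

```python
from pathlib import Path

# Where Kubernetes mounts the ServiceAccount credentials inside every Pod.
SA_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


def read_service_account(sa_dir: Path = SA_DIR) -> dict:
    """Return the namespace and bearer token mounted for the Pod's ServiceAccount."""
    return {
        "namespace": (sa_dir / "namespace").read_text().strip(),
        "token": (sa_dir / "token").read_text().strip(),
    }


def spiffe_id(namespace: str, service_account: str,
              trust_domain: str = "cluster.local") -> str:
    """Build the SPIFFE identity Istio assigns to a workload running as this ServiceAccount."""
    return f"spiffe://{trust_domain}/ns/{namespace}/sa/{service_account}"


if __name__ == "__main__":
    # Identity of the reviews workloads deployed above.
    print(spiffe_id("default", "bookinfo-reviews"))
```

Inside a Pod, the token returned by `read_service_account()` is what a client sends as an `Authorization: Bearer` header to the API server.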

3) Confirm that Bookinfo is running. From inside one of the Pods (the ratings Pod, for example), send a request to the application with curl:

    root@slave001:~# kubectl exec -it $(kubectl get pod -l app=ratings -o jsonpath='{.items[0].metadata.name}') -c ratings -- curl productpage:9080/productpage | grep -o "<title>.*</title>"
    <title>Simple Bookstore App</title>

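4) Determine the ingress IP and port

The Bookinfo services are now up and running. Next, make the application reachable from outside the Kubernetes cluster, for example from a browser. An Istio Gateway serves this purpose.

# 1. Define a gateway for the application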

    root@master001:~/istio/istio-1.12.0# cat samples/bookinfo/networking/bookinfo-gateway.yaml
    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: bookinfo-gateway
    spec:
      selector:
        istio: ingressgateway # use istio default controller
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: bookinfo
    spec:
      hosts:
      - "*"
      gateways:
      - bookinfo-gateway
      http:
      - match:
        - uri:
            exact: /productpage
        - uri:
            prefix: /static
        - uri:
            exact: /login
        - uri:
            exact: /logout
        - uri:
            prefix: /api/v1/products
        route:
        - destination:
            host: productpage
            port:
              number: 9080
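The `match` list in the bookinfo VirtualService mixes `exact` and `prefix` URI conditions. A request is routed to productpage if any one entry matches. The following Python sketch models only that logic. It is a simplification: it ignores headers, ports, and `regex` matches. The `route` helper is hypothetical. Returning None stands for "no route", which the gateway typically answers with a 404.

```python
# URI match clauses copied from bookinfo-gateway.yaml: (match type, value).
BOOKINFO_MATCHES = [
    ("exact", "/productpage"),
    ("prefix", "/static"),
    ("exact", "/login"),
    ("exact", "/logout"),
    ("prefix", "/api/v1/products"),
]


def uri_matches(path: str, kind: str, value: str) -> bool:
    """Evaluate one `uri:` clause of an HTTPMatchRequest against a request path."""
    if kind == "exact":
        return path == value
    if kind == "prefix":
        return path.startswith(value)
    raise ValueError(f"unsupported match type: {kind}")


def route(path: str):
    """Entries of a `match` list are ORed: any hit sends the request to productpage."""
    if any(uri_matches(path, kind, value) for kind, value in BOOKINFO_MATCHES):
        return "productpage:9080"
    return None  # no route matched


if __name__ == "__main__":
    for p in ["/productpage", "/static/css/app.css", "/productpage/x", "/"]:
        print(p, "->", route(p))
```

Note that `exact: /productpage` does not match `/productpage/x`. Only the `prefix` clauses accept longer paths.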

    root@master001:~/istio/istio-1.12.0# kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml
    gateway.networking.istio.io/bookinfo-gateway created
    virtualservice.networking.istio.io/bookinfo created
    root@master001:~/istio/istio-1.12.0# kubectl get gateway
    NAME               AGE
    bookinfo-gateway   12s
    root@master001:~/istio/istio-1.12.0# kubectl get virtualservice
    NAME       GATEWAYS               HOSTS   AGE
    bookinfo   ["bookinfo-gateway"]   ["*"]   20s

# 2. Determine the ingress IP and ports

    root@master001:~/istio/istio-1.12.0# kubectl get svc istio-ingressgateway -n istio-system
    NAME                   TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                                                                      AGE
    istio-ingressgateway   LoadBalancer   10.100.94.111   <pending>     15021:60211/TCP,80:57328/TCP,443:61049/TCP,31400:2464/TCP,15443:59853/TCP   22h

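# 3. Get the Istio gateway address

EXTERNAL-IP stays pending because the cluster has no external load balancer, so reach the gateway through its NodePorts instead: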

    root@master001:~/istio/istio-1.12.0# kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}'
    57328

    root@master001:~/istio/istio-1.12.0# export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')
    root@master001:~/istio/istio-1.12.0# echo $INGRESS_PORT
    57328

    root@master001:~/istio/istio-1.12.0# export SECURE_INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="https")].nodePort}')
    root@master001:~/istio/istio-1.12.0# echo $SECURE_INGRESS_PORT
    61049
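The jsonpath filter `{.spec.ports[?(@.name=="http2")].nodePort}` selects the port entry by name and reads its nodePort. Here is a Python sketch of the same lookup on the Service's JSON. It is an illustration, not a replacement for kubectl. `SVC_JSON` is an abbreviated, hand-written stand-in for `kubectl get service istio-ingressgateway -o json`. The port and nodePort numbers come from the output above. The names http2 and https come from the commands above, and the other three port names are assumptions.

```python
import json

# Abbreviated stand-in for the Service JSON; only spec.ports is kept.
SVC_JSON = """
{"spec": {"ports": [
  {"name": "status-port", "port": 15021, "nodePort": 60211},
  {"name": "http2",       "port": 80,    "nodePort": 57328},
  {"name": "https",       "port": 443,   "nodePort": 61049},
  {"name": "tcp",         "port": 31400, "nodePort": 2464},
  {"name": "tls",         "port": 15443, "nodePort": 59853}
]}}
"""


def node_port(service: dict, port_name: str) -> int:
    """Equivalent of {.spec.ports[?(@.name=="<port_name>")].nodePort}."""
    for port in service["spec"]["ports"]:
        if port.get("name") == port_name:
            return port["nodePort"]
    raise KeyError(f"no port named {port_name!r}")


def gateway_url(ingress_host: str, service: dict) -> str:
    """Build GATEWAY_URL = INGRESS_HOST:INGRESS_PORT from the http2 NodePort."""
    return f"{ingress_host}:{node_port(service, 'http2')}"


svc = json.loads(SVC_JSON)

if __name__ == "__main__":
    print(gateway_url("192.168.192.151", svc))  # 192.168.192.151:57328
```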

Set the gateway URL. INGRESS_HOST is the IP address of a cluster node (192.168.192.151 here):

    root@master001:~/istio/istio-1.12.0# INGRESS_HOST=192.168.192.151
    root@master001:~/istio/istio-1.12.0# export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT
    root@master001:~/istio/istio-1.12.0# echo $GATEWAY_URL
    192.168.192.151:57328

# 4. Use curl to confirm that the Bookinfo application is reachable from outside the cluster

    root@master001:~/istio/istio-1.12.0# curl -s http://${GATEWAY_URL}/productpage | grep -o "<title>.*</title>"
    <title>Simple Bookstore App</title>
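The same smoke test can be scripted, for example to run repeatedly after a configuration change. This is a minimal sketch using only the Python standard library. It assumes GATEWAY_URL is exported as above, and `extract_title` and `check_productpage` are hypothetical helper names. Like `grep -o`, the regex works on a single line and returns the greedy `<title>.*</title>` match.

```python
import os
import re
import urllib.request

TITLE_RE = re.compile(r"<title>.*</title>")


def extract_title(html: str):
    """Mimic `grep -o "<title>.*</title>"`: return the first match, or None."""
    m = TITLE_RE.search(html)
    return m.group(0) if m else None


def check_productpage(gateway_url: str, timeout: float = 5.0) -> bool:
    """Fetch /productpage through the ingress gateway and check the page title."""
    with urllib.request.urlopen(f"http://{gateway_url}/productpage", timeout=timeout) as resp:
        html = resp.read().decode("utf-8", errors="replace")
    return extract_title(html) == "<title>Simple Bookstore App</title>"


if __name__ == "__main__":
    url = os.environ.get("GATEWAY_URL")  # e.g. 192.168.192.151:57328
    if url:
        print("Bookinfo reachable:", check_productpage(url))
```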

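You can also open http://192.168.192.151:57328/productpage in a browser.

# 5. Optional: add an external IP (externalIPs)

Add an externalIPs entry to the spec of the istio-ingressgateway Service (for example with kubectl edit svc istio-ingressgateway -n istio-system):

    spec:
      clusterIP: 10.100.94.111
      clusterIPs:
      - 10.100.94.111
      externalIPs:
      - 192.168.192.151

# 6. Uninstall Bookinfo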

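    # 1. Delete the routing rules and terminate the application Pods
    root@master001:~/istio/istio-1.12.0# bash samples/bookinfo/platform/kube/cleanup.sh
    namespace ? [default] y
    NAMESPACE y not found.
    using NAMESPACE=default

The script prompts for a namespace. The answer y was taken as a namespace name, which does not exist, so the script fell back to default. Press Enter to accept [default] directly.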

    # 2. Confirm that the application has been shut down
    kubectl get virtualservices   #-- there should be no virtual services
    kubectl get destinationrules  #-- there should be no destination rules
    kubectl get gateway           #-- there should be no gateway
    kubectl get pods              #-- the Bookinfo pods should be deleted

III. Canary releases with Istio

Canary deployment: traffic moves gradually from the old version to the new one.

    root@master001:~/istio-canary# cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: appv1
      labels:
        app: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: v1
          apply: canary
      template:
        metadata:
          labels:
            app: v1
            apply: canary
        spec:
          containers:
          - name: nginx
            image: xianchao/canary:v1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: appv2
      labels:
        app: v2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: v2
          apply: canary
      template:
        metadata:
          labels:
            app: v2
            apply: canary
        spec:
          containers:
          - name: nginx
            image: xianchao/canary:v2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
    root@master001:~/istio-canary# cat service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: canary
      labels:
        apply: canary
    spec:
      selector:
        apply: canary
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
    root@master001:~/istio-canary# cat gateway.yaml
    apiVersion: networking.istio.io/v1beta1
    kind: Gateway
    metadata:
      name: canary-gateway
    spec:
      selector:
        istio: ingressgateway
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"
    root@master001:~/istio-canary# cat virtual.yaml
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: canary
    spec:
      hosts:
      - "*"
      gateways:
      - canary-gateway
      http:
      - route:
        - destination:
            host: canary.default.svc.cluster.local
            subset: v1
          weight: 90
        - destination:
            host: canary.default.svc.cluster.local
            subset: v2
          weight: 10
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: canary
    spec:
      host: canary.default.svc.cluster.local
      subsets:
      - name: v1
        labels:
          app: v1
      - name: v2
        labels:
          app: v2

    root@master001:~/istio-canary# kubectl get gateway
    NAME             AGE
    canary-gateway   7m55s
    root@master001:~/istio-canary# kubectl get virtualservices
    NAME     GATEWAYS             HOSTS   AGE
    canary   ["canary-gateway"]   ["*"]   5m53s

Verify the result:

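    # look up the http2 NodePort of the ingress gateway (57328 here)
    kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}'
    # send 100 requests through the gateway; about 90 should be served by v1 and about 10 by v2
    for i in $(seq 1 100); do curl 192.168.192.151:57328; done > 1.txt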
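Why "about" 90/10: each request picks a subset independently in proportion to its weight, so 100 requests rarely split exactly 90/10. The Python sketch below illustrates this. It uses `random.choices` as a stand-in for Envoy's own weighted-cluster selection; Envoy's real implementation differs, but the expected proportions are the same. The helpers `pick_subset` and `simulate` are hypothetical.

```python
import random
from collections import Counter

# Weights from virtual.yaml: subset v1 gets 90, subset v2 gets 10.
ROUTES = [("v1", 90), ("v2", 10)]


def pick_subset(rng: random.Random) -> str:
    """Choose a subset with probability proportional to its weight."""
    subsets, weights = zip(*ROUTES)
    return rng.choices(subsets, weights=weights, k=1)[0]


def simulate(requests: int = 100, seed: int = 1) -> Counter:
    """Count how many of `requests` calls land on each subset."""
    rng = random.Random(seed)
    return Counter(pick_subset(rng) for _ in range(requests))


if __name__ == "__main__":
    print(simulate(100))     # close to, but usually not exactly, 90/10
    print(simulate(10_000))  # the split converges on 90/10 as the sample grows
```

To move further through the canary rollout, change the two `weight` values (for example 50/50, then 0/100) and re-apply virtual.yaml.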

IV. Istio core resources

4.1 Gateway

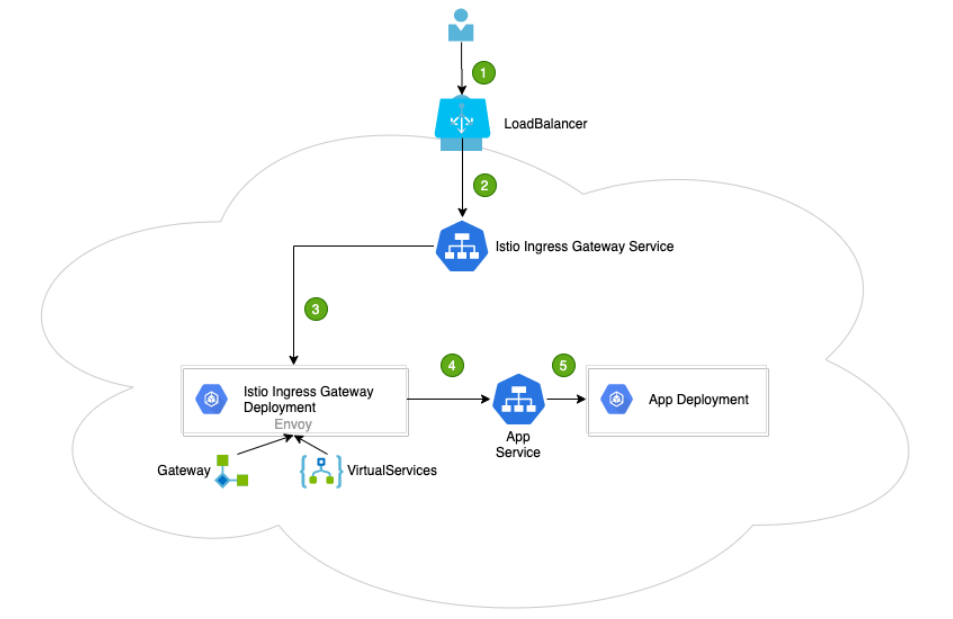

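In Kubernetes, an Ingress controller manages traffic entering the cluster. In an Istio service mesh, the Istio Ingress Gateway plays that role, using a new configuration model (Gateway and VirtualService) to manage traffic. The figure gives an overview of the flow:

1. The user sends a request to a port.

2. A load balancer listens on that port and forwards the request to a node in the cluster, where the Istio Ingress Gateway Service listens on the node port.

3. The Istio Ingress Gateway Service hands the request to an Istio Ingress Gateway Pod, which processes it according to the Gateway and VirtualService rules. The Gateway configures ports, protocols, and certificates; the VirtualService configures routing, that is, which App Service handles the request.

4. The Istio Ingress Gateway Pod forwards the request to the App Service.

5. Finally, the App Deployment behind the App Service handles the request.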

    root@master001:~/istio/istio-1.12.0# cat /root/istio-canary/gateway.yaml
    apiVersion: networking.istio.io/v1beta1
    kind: Gateway
    metadata:
      name: canary-gateway
    spec:
      selector:
        istio: ingressgateway
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"   # "*" is a wildcard: requests for any host name are accepted

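A gateway is a load balancer running at the edge of the mesh that receives incoming or outgoing HTTP/TCP connections. Its main job is to accept external requests and forward them to internal services. Ingress traffic at the mesh edge enters the cluster through the corresponding Istio IngressGateway controller.

The YAML above configures an ingress gateway that listens on port 80 and passes the HTTP traffic it receives there to the matching VirtualService in the cluster.

4.2 VirtualService

VirtualService is a core piece of Istio traffic management, and arguably the most important and most complex one. It represents a virtual service: traffic that satisfies its conditions is forwarded to the corresponding backend. That backend can be a service, or a subset of a service defined in a DestinationRule.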
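The `hosts: - "*"` entry above is a wildcard. This Python sketch shows a simplified version of how such host patterns are compared with the requested host name. It is an assumption-laden model, not Istio's code. It covers only "*" (any host), a leading "*." suffix wildcard, and exact names compared case-insensitively. It ignores ports and the optional "namespace/" prefix that Gateway hosts may carry. The helper `host_matches` is hypothetical.

```python
def host_matches(pattern: str, host: str) -> bool:
    """Simplified host matching for Gateway/VirtualService hosts.

    "*"             matches any host
    "*.example.com" matches any host ending in ".example.com"
    anything else   must match exactly (case-insensitive)
    """
    pattern, host = pattern.lower(), host.lower()
    if pattern == "*":
        return True
    if pattern.startswith("*."):
        return host.endswith(pattern[1:])  # keep the leading dot: ".example.com"
    return host == pattern


if __name__ == "__main__":
    for p, h in [("*", "bookinfo.com"), ("*.example.com", "shop.example.com"),
                 ("*.example.com", "example.com"), ("bookinfo.com", "BookInfo.com")]:
        print(f"{p!r:18} vs {h!r:20} -> {host_matches(p, h)}")
```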

    root@master001:~/istio-canary# cat virtual.yaml
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: canary
    spec:
      hosts:
      - "*"
      gateways:
      - canary-gateway
      http:
      - route:
        - destination:
            host: canary.default.svc.cluster.local
            subset: v1
          weight: 90
        - destination:
            host: canary.default.svc.cluster.local
            subset: v2
          weight: 10
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: canary
    spec:
      host: canary.default.svc.cluster.local
      subsets:
      - name: v1
        labels:
          app: v1
      - name: v2
        labels:
          app: v2

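This VirtualService receives all HTTP traffic that arrives on port 80 of the gateway defined above.

4.2.1 hosts

A VirtualService consists mainly of the following parts.

hosts: the virtual host names. In a Kubernetes cluster, a host can be a Service name. The hosts field lists the VirtualService's virtual hosts: one or more addresses that clients use when sending requests to the service. Requests sent to these addresses reach the VirtualService and, through it, the backend services. Inside the cluster (within the mesh), the host usually has the same name as the Kubernetes Service. For access from outside the cluster (outside the mesh), the host is the address requested through the gateway, so it must match the Gateway's hosts field.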

    hosts:
    - reviews

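A VirtualService host can be an IP address, a DNS name, or a short name (such as a Kubernetes Service short name). Depending on the platform Istio runs on, the name is resolved, implicitly or explicitly, to a fully qualified domain name (FQDN). A prefix wildcard ("*") creates one set of routing rules for all matching services. VirtualService hosts do not have to be part of the Istio service registry. They are simply virtual destinations, which lets you model traffic for virtual hosts that have no routable entry inside the mesh.

When a short host name is expanded to a full domain name, the namespace filled in is the VirtualService's namespace, not the Service's. For example, if the VirtualService above lives in the default namespace, its host reviews resolves to reviews.default.svc.cluster.local.

Configuring routing rules in a VirtualService

A routing rule sends traffic that satisfies http.match to http.route.destination. It can also apply redirect (HTTPRedirect), rewrite (HTTPRewrite), retry (HTTPRetry), fault injection (HTTPFaultInjection), and cross-origin (CorsPolicy) policies. An HTTPRoute therefore does more than match requests: it can also modify the request itself.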
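The rule that short names are completed with the VirtualService's own namespace is easy to get wrong when the VirtualService and the Service live in different namespaces. This Python sketch makes it explicit. It is a simplification that assumes any name containing a dot is already qualified and that the cluster domain is cluster.local. The helper `resolve_short_host` is hypothetical.

```python
def resolve_short_host(host: str, vs_namespace: str,
                       cluster_domain: str = "cluster.local") -> str:
    """Expand a short VirtualService host into an FQDN.

    The namespace filled in is that of the VirtualService itself,
    not the namespace of the Service the traffic ends up at.
    """
    if host == "*" or "." in host:  # wildcard or already qualified: leave as-is
        return host
    return f"{host}.{vs_namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    print(resolve_short_host("reviews", "default"))   # reviews.default.svc.cluster.local
    # A VirtualService in namespace "bookinfo" that says "reviews" does NOT
    # point at reviews in "default":
    print(resolve_short_host("reviews", "bookinfo"))  # reviews.bookinfo.svc.cluster.local
```

When the target Service lives in another namespace, write its full name (as virtual.yaml does with canary.default.svc.cluster.local) rather than relying on the short form.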

    root@master001:~/istio/istio-1.12.0/samples/bookinfo/networking# cat virtual-service-reviews-jason-v2-v3.yaml
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: reviews
    spec:
      hosts:
        - reviews
      http:
      - match:
        - headers:
            end-user:
              exact: jason
        route:
        - destination:
            host: reviews
            subset: v2
      - route:
        - destination:
            host: reviews
            subset: v3

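The http field holds the VirtualService's routing rules. They describe match conditions and routing actions for sending HTTP/1.1, HTTP2, gRPC, and similar traffic to the destinations of the hosts listed in the hosts field.

The first routing rule in the example has a condition, introduced by the match field. It takes all requests from the user "jason" (requests whose end-user header is exactly jason) and sends them to the v2 subset given in destination.

Routing rule precedence:

Rules are evaluated from top to bottom, and the first matching rule wins. In the example above, traffic that does not match the first rule goes to a default destination specified by the second rule. That is why the second rule has no match condition: it sends all remaining traffic straight to the v3 subset.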
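First-match-wins evaluation can be expressed in a few lines. This Python sketch encodes the two rules of virtual-service-reviews-jason-v2-v3.yaml as data and walks them in file order. It is an illustration only. It assumes header names arrive lowercased, and it supports only `exact` header matches. The `RULES` structure and `route_reviews` are hypothetical.

```python
# Rules from virtual-service-reviews-jason-v2-v3.yaml, in file order.
RULES = [
    {"match": {"end-user": "jason"}, "subset": "v2"},  # headers.end-user.exact: jason
    {"match": None, "subset": "v3"},                    # no match block: catch-all default
]


def route_reviews(headers: dict) -> str:
    """Return the reviews subset for a request; the first matching rule wins."""
    for rule in RULES:
        cond = rule["match"]
        if cond is None or all(headers.get(k) == v for k, v in cond.items()):
            return rule["subset"]
    raise LookupError("no route matched")


if __name__ == "__main__":
    print(route_reviews({"end-user": "jason"}))  # v2
    print(route_reviews({"end-user": "alice"}))  # v3
    print(route_reviews({}))                     # v3
```

If the catch-all rule were listed first, it would match every request and jason would never reach v2. This is why the default route belongs at the end.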

Multiple routing rules:

For the full list of match options, see: https://istio.io/latest/zh/docs/reference/config/networking/virtual-service/#HTTPMatchRequest

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: bookinfo
    spec:
      hosts:
        - bookinfo.com
      http:
      - match:
        - uri:
            prefix: /reviews
        route:
        - destination:
            host: reviews
      - match:
        - uri:
            prefix: /ratings
        route:
        - destination:
            host: ratings

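Routing rules send specific subsets of traffic to specific destinations. Match conditions can be set on the traffic port, header fields, the URI, and more. For example, the VirtualService above lets users send requests to two separate services, ratings and reviews, through http://bookinfo.com/ratings and http://bookinfo.com/reviews. The rules route each request to a destination based on its URI.

4.2.2 gateways

The gateways the traffic comes from. This field lists the Gateways whose traffic the VirtualService's rules apply to, for example canary-gateway above. If it is omitted, the rules apply to traffic from the sidecars inside the mesh (the reserved gateway name mesh).

4.2.3 Routes

The destination field of a route specifies the actual address for traffic that matches the conditions. Unlike the VirtualService's hosts, this host must be a real destination: either it exists in Istio's service registry (for example Kubernetes Services or Consul services) or it is declared by a ServiceEntry. Otherwise, Envoy does not know where to send the traffic. It can be a mesh service with a proxy, or a non-mesh service added with a ServiceEntry. On Kubernetes, host is the name of a Kubernetes Service: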

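    - destination:
        host: canary.default.svc.cluster.local
        subset: v1
      weight: 90

4.3 DestinationRule

DestinationRules are an important part of Istio's traffic routing. Think of a VirtualService as deciding how traffic is routed to a given destination. The DestinationRule then configures what happens to the traffic for that destination. DestinationRules take effect after the VirtualService routing rules: once match -> route -> destination has been evaluated, the traffic is headed to a real Service, and the DestinationRule applies to that real destination.

A DestinationRule can define named subsets of a service, for example by grouping a service's instances by version. The routing rules of a VirtualService then refer to these subsets to control how traffic is split between the instances.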

    root@master001:~/istio-canary# cat DestinationRule.yaml
    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: canary
    spec:
      host: canary.default.svc.cluster.local
      subsets:
      - name: v1
        labels:
          app: v1
      - name: v2
        labels:
          app: v2

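In the VirtualService, hosts sets the address the routes are bound to, and the http.route field sets where incoming HTTP traffic goes. In this example, the traffic is sent to the v1 and v2 subsets defined in the DestinationRule.

The v1 subset corresponds to Pods with the following labels: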

    root@master001:~/istio-canary# cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: appv1
      labels:
        app: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: v1
          apply: canary
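Put together, a subset's endpoints are the Pods that are selected by the Service and also carry every label the subset lists. This Python sketch models that intersection with the labels from deployment.yaml, service.yaml, and the DestinationRule. It is illustrative only. Pods are keyed by their Deployment names instead of real Pod names, and `subset_endpoints` is a hypothetical helper.

```python
# Pod template labels from deployment.yaml (keyed by Deployment name for readability).
PODS = {
    "appv1": {"app": "v1", "apply": "canary"},
    "appv2": {"app": "v2", "apply": "canary"},
}
SERVICE_SELECTOR = {"apply": "canary"}                # Service "canary"
SUBSETS = {"v1": {"app": "v1"}, "v2": {"app": "v2"}}  # DestinationRule "canary"


def selects(selector: dict, labels: dict) -> bool:
    """A label selector matches when every key/value pair is present on the Pod."""
    return all(labels.get(k) == v for k, v in selector.items())


def subset_endpoints(subset: str) -> list:
    """Pods behind the Service that also carry all labels of the subset."""
    return sorted(
        name for name, labels in PODS.items()
        if selects(SERVICE_SELECTOR, labels) and selects(SUBSETS[subset], labels)
    )


if __name__ == "__main__":
    for s in SUBSETS:
        print(s, "->", subset_endpoints(s))  # v1 -> ['appv1'], v2 -> ['appv2']
```

A subset label that no selected Pod carries yields an empty endpoint list. In Istio, that typically shows up as 503 errors for traffic routed to the subset.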

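V. Istio core features in action

5.1 Circuit breaking

Circuit breaking is an important pattern for building resilient microservice applications. It lets an application limit the impact of network failures, latency, and other undesirable network effects.

Official docs: https://istio.io/latest/zh/docs/tasks/traffic-management/circuit-breaking/

1) Create the backend service in the k8s cluster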

    root@master001:~/istio/istio-1.12.0# cat samples/httpbin/httpbin.yaml
    # Copyright Istio Authors
    #
    #   Licensed under the Apache License, Version 2.0 (the "License");
    #   you may not use this file except in compliance with the License.
    #   You may obtain a copy of the License at
    #
    #       http://www.apache.org/licenses/LICENSE-2.0
    #
    #   Unless required by applicable law or agreed to in writing, software
    #   distributed under the License is distributed on an "AS IS" BASIS,
    #   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    #   See the License for the specific language governing permissions and
    #   limitations under the License.
    ##################################################################################################
    # httpbin service
    ##################################################################################################
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: httpbin
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: httpbin
      labels:
        app: httpbin
        service: httpbin
    spec:
      ports:
      - name: http
        port: 8000
        targetPort: 80
      selector:
        app: httpbin
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: httpbin
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: httpbin
          version: v1
      template:
        metadata:
          labels:
            app: httpbin
            version: v1
        spec:
          serviceAccountName: httpbin
          containers:
          - image: docker.io/kennethreitz/httpbin
            imagePullPolicy: IfNotPresent
            name: httpbin
            ports:
            - containerPort: 80

root@master001:~/istio/istio-1.12.0# kubectl apply -f samples/httpbin/httpbin.yaml

root@master001:~/istio/istio-1.12.0# kubectl get pods | grep httpbin

httpbin-74fb669cc6-qflbt 2/2 Running 0 9m47s

2) Configure the circuit breaker

# Create a destination rule that applies circuit-breaking settings to calls to the httpbin service

root@master001:~/istio/istio-1.12.0# kubectl apply -f httpbin_destination.yaml

destinationrule.networking.istio.io/httpbin created

    root@master001:~/istio/istio-1.12.0# cat httpbin_destination.yaml
    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: httpbin
    spec:
      host: httpbin
      trafficPolicy:
        connectionPool:
          tcp:
            maxConnections: 1
          http:
            http1MaxPendingRequests: 1
            maxRequestsPerConnection: 1
        outlierDetection:
          consecutiveGatewayErrors: 1
          interval: 1s
          baseEjectionTime: 3m
          maxEjectionPercent: 100

Parameter notes:

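    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: httpbin
    spec:
      host: httpbin
      trafficPolicy:
        connectionPool:                  # connection pool (TCP | HTTP) settings, e.g. connection count, concurrent requests
          tcp:
            maxConnections: 1            # max number of HTTP1/TCP connections to the destination host;
                                         # requests beyond the connection and pending-request limits get a 503
          http:
            http1MaxPendingRequests: 1   # max number of requests queued while waiting for a ready connection
                                         # to the destination (the destination selected by the VirtualService route)
            maxRequestsPerConnection: 1  # each connection serves at most 1 request and is then closed;
                                         # new connections are created as needed
        outlierDetection:                # outlier detection, the classic "circuit breaker": watches hosts for errors
          consecutiveGatewayErrors: 1    # eject a host after 1 consecutive gateway error (HTTP 502, 503 or 504)
          interval: 1s                   # time between ejection analysis sweeps
          baseEjectionTime: 3m           # base ejection time; actual ejection time = baseEjectionTime x number of ejections
          maxEjectionPercent: 100        # up to 100% of the hosts in the load-balancing pool may be ejected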
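The two outlier-detection rules above are "eject after N consecutive gateway errors" and "ejection time = baseEjectionTime x number of ejections". This Python sketch restates both with the values from httpbin_destination.yaml. It is a simplified model under stated assumptions, not Envoy's algorithm. It looks only at the trailing run of 502/503/504 responses and ignores the sweep interval and maxEjectionPercent. `should_eject` and `ejection_duration` are hypothetical helpers.

```python
from datetime import timedelta

BASE_EJECTION_TIME = timedelta(minutes=3)  # baseEjectionTime: 3m
CONSECUTIVE_GATEWAY_ERRORS = 1             # consecutiveGatewayErrors: 1
GATEWAY_ERROR_CODES = {502, 503, 504}


def should_eject(recent_status_codes: list) -> bool:
    """True if the trailing run of 502/503/504 responses reaches the threshold."""
    run = 0
    for code in reversed(recent_status_codes):
        if code not in GATEWAY_ERROR_CODES:
            break
        run += 1
    return run >= CONSECUTIVE_GATEWAY_ERRORS


def ejection_duration(times_ejected: int) -> timedelta:
    """Each new ejection of the same host lasts longer: base time x ejection count."""
    return BASE_EJECTION_TIME * times_ejected


if __name__ == "__main__":
    print(should_eject([200, 200, 503]))  # True: one gateway error is enough here
    for n in (1, 2, 3):
        print(f"ejection #{n}: {ejection_duration(n)}")  # 0:03:00, 0:06:00, 0:09:00
```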

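3) Add a client that calls the httpbin service

Create a client that sends traffic to the httpbin service. The client is Fortio, a simple load-testing tool that can control the number of connections, the concurrency, and the delay of outgoing HTTP calls. You use it to "trip" the circuit-breaker policies set in the DestinationRule.

# Deploy the fortio client with the following command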

root@master001:~/istio/istio-1.12.0# kubectl apply -f samples/httpbin/sample-client/fortio-deploy.yaml

root@master001:~/istio/istio-1.12.0# kubectl get pods|grep for

fortio-deploy-687945c6dc-jf4tk 2/2 Running 0

    root@master001:~/istio/istio-1.12.0# kubectl exec fortio-deploy-687945c6dc-jf4tk -c fortio -- /usr/bin/fortio curl http://httpbin:8000/get
    HTTP/1.1 200 OK
    server: envoy
    date: Thu, 16 Dec 2021 14:36:09 GMT
    content-type: application/json
    content-length: 594
    access-control-allow-origin: *
    access-control-allow-credentials: true
    x-envoy-upstream-service-time: 19

    {
      "args": {},
      "headers": {
        "Host": "httpbin:8000",
        "User-Agent": "fortio.org/fortio-1.17.1",
        "X-B3-Parentspanid": "deca8f54c7772ca1",
        "X-B3-Sampled": "1",
        "X-B3-Spanid": "c515a0eeb0cc6f9e",
        "X-B3-Traceid": "99bb8f10f877af2ddeca8f54c7772ca1",
        "X-Envoy-Attempt-Count": "1",
        "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/default/sa/httpbin;Hash=f2ee10d932d71c46db56ea674d2139149fab3952b8e83bbc75f38c3e89b8ad1f;Subject=\"\";URI=spiffe://cluster.local/ns/default/sa/default"
      },
      "origin": "127.0.0.6",
      "url": "http://httpbin:8000/get"
    }

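4) Trip the circuit breaker

The DestinationRule sets maxConnections: 1 and http1MaxPendingRequests: 1. With these limits, sending more than one connection and request concurrently should produce some failures, because istio-proxy opens the circuit for further requests and connections. The results below show this.

# Call the service with two concurrent connections (-c 2) and 20 requests (-n 20)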

    root@master001:~/istio/istio-1.12.0# cat samples/httpbin/sample-client/fortio-deploy.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: fortio
      labels:
        app: fortio
        service: fortio
    spec:
      ports:
      - port: 8080
        name: http
      selector:
        app: fortio
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: fortio-deploy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: fortio
      template:
        metadata:
          annotations:
            # This annotation causes Envoy to serve cluster.outbound statistics via 15000/stats
            # in addition to the stats normally served by Istio. The Circuit Breaking example task
            # gives an example of inspecting Envoy stats via proxy config.
            proxy.istio.io/config: |-
              proxyStatsMatcher:
                inclusionPrefixes:
                - "cluster.outbound"
                - "cluster_manager"
                - "listener_manager"
                - "server"
                - "cluster.xds-grpc"
          labels:
            app: fortio
        spec:
          containers:
          - name: fortio
            image: fortio/fortio:latest_release
            imagePullPolicy: Always
            ports:
            - containerPort: 8080
              name: http-fortio
            - containerPort: 8079
              name: grpc-ping

    root@master001:~/istio/istio-1.12.0# kubectl exec -it fortio-deploy-687945c6dc-jf4tk -c fortio -- /usr/bin/fortio load -c 2 -qps 0 -n 20 -loglevel Warning http://httpbin:8000/get
    14:37:17 I logger.go:127> Log level is now 3 Warning (was 2 Info)
    Fortio 1.17.1 running at 0 queries per second, 2->2 procs, for 20 calls: http://httpbin:8000/get
    Starting at max qps with 2 thread(s) [gomax 2] for exactly 20 calls (10 per thread + 0)
    14:37:17 W http_client.go:806> [1] Non ok http code 503 (HTTP/1.1 503)
    14:37:17 W http_client.go:806> [0] Non ok http code 503 (HTTP/1.1 503)
    14:37:17 W http_client.go:806> [1] Non ok http code 503 (HTTP/1.1 503)
    14:37:17 W http_client.go:806> [0] Non ok http code 503 (HTTP/1.1 503)
    14:37:17 W http_client.go:806> [1] Non ok http code 503 (HTTP/1.1 503)
    Ended after 57.850746ms : 20 calls. qps=345.72
    Aggregated Function Time : count 20 avg 0.0056645873 +/- 0.00577 min 0.000439136 max 0.024627815 sum 0.113291747
    # range, mid point, percentile, count
    >= 0.000439136 <= 0.001 , 0.000719568 , 15.00, 3
    > 0.001 <= 0.002 , 0.0015 , 20.00, 1
    > 0.003 <= 0.004 , 0.0035 , 55.00, 7
    > 0.004 <= 0.005 , 0.0045 , 65.00, 2
    > 0.005 <= 0.006 , 0.0055 , 75.00, 2
    > 0.006 <= 0.007 , 0.0065 , 80.00, 1
    > 0.007 <= 0.008 , 0.0075 , 85.00, 1
    > 0.012 <= 0.014 , 0.013 , 90.00, 1
    > 0.016 <= 0.018 , 0.017 , 95.00, 1
    > 0.02 <= 0.0246278 , 0.0223139 , 100.00, 1
    # target 50% 0.00385714
    # target 75% 0.006
    # target 90% 0.014
    # target 99% 0.0237023
    # target 99.9% 0.0245353
    Sockets used: 6 (for perfect keepalive, would be 2)
    Jitter: false
    Code 200 : 15 (75.0 %)
    Code 503 : 5 (25.0 %)    # the circuit breaker tripped
    Response Header Sizes : count 20 avg 172.6 +/- 99.65 min 0 max 231 sum 3452
    Response Body/Total Sizes : count 20 avg 678.35 +/- 252.5 min 241 max 825 sum 13567
    All done 20 calls (plus 0 warmup) 5.665 ms avg, 345.7 qps

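5.2 Timeouts

In production, a caller that waits too long for a downstream response can pile up large numbers of blocked requests. This stalls the caller itself and can trigger a cascading failure. Timeouts prevent failures caused by waiting indefinitely and so improve availability, and Istio implements them cleanly through VirtualServices.

The following example simulates a client calling nginx, which forwards the request to tomcat. Calls to nginx-svc have a 2-second timeout: if no response arrives within that time, the caller stops waiting and gets a timeout error. tomcat-svc has a fault-injected 10-second response delay, applied to 50% of requests in the configuration below. The client reaches tomcat through nginx acting as a reverse proxy. A delayed tomcat response takes 10 seconds, but nginx-svc is allowed only 2 seconds, so the client receives a timeout error for those requests.

1) Create the Deployments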
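The timeout behavior can be reproduced in miniature with asyncio. In this Python sketch, `asyncio.wait_for` stands in for the sidecar-enforced route timeout, and `tomcat` and `call_with_timeout` are hypothetical stand-ins for the services. Times are scaled down 10x (1 s delay vs 0.2 s timeout) so the example runs quickly. The 504 mirrors the "504 Gateway Timeout" the client sees in the transcript further down.

```python
import asyncio


async def tomcat(delay: float) -> int:
    """Stand-in for tomcat-svc: answers 200 after `delay` seconds (the injected fault)."""
    await asyncio.sleep(delay)
    return 200


async def call_with_timeout(delay: float, timeout: float) -> int:
    """Like the route timeout on nginx-vs: stop waiting and return 504 if too slow."""
    try:
        return await asyncio.wait_for(tomcat(delay), timeout=timeout)
    except asyncio.TimeoutError:
        return 504


if __name__ == "__main__":
    # Scaled down 10x: the 10 s delay becomes 1 s, the 2 s timeout becomes 0.2 s.
    print(asyncio.run(call_with_timeout(delay=1.0, timeout=0.2)))  # 504 (delayed request)
    print(asyncio.run(call_with_timeout(delay=0.0, timeout=0.2)))  # 200 (request not delayed)
```

Raising the timeout above the delay (20 s vs 10 s in the real setup) turns the 504 into a slow 200, which is what the second test further below shows.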

    root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# cat nginx-tomcat-deployment.yaml
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-tomcat
      labels:
        server: nginx
        app: web
    spec:
      replicas: 1
      selector:
        matchLabels:
          server: nginx
          app: web
      template:
        metadata:
          name: nginx
          labels:
            server: nginx
            app: web
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            imagePullPolicy: IfNotPresent
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: tomcat
      labels:
        server: tomcat
        app: web
    spec:
      replicas: 1
      selector:
        matchLabels:
          server: tomcat
          app: web
      template:
        metadata:
          name: tomcat
          labels:
            server: tomcat
            app: web
        spec:
          containers:
          - name: tomcat
            image: docker.io/kubeguide/tomcat-app:v1
            imagePullPolicy: IfNotPresent

2) Create the Services

    root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# cat nginx-tomcat-svc.yaml
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc
    spec:
      selector:
        server: nginx
      ports:
      - name: http
        port: 80
        targetPort: 80
        protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: tomcat-svc
    spec:
      selector:
        server: tomcat
      ports:
      - name: http
        port: 8080
        targetPort: 8080
        protocol: TCP

3) Create the VirtualServices

    root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# cat virtual-tomcat-nginx.yaml
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: nginx-vs
    spec:
      hosts:
      - nginx-svc
      http:
      - route:
        - destination:
            host: nginx-svc
        timeout: 2s         # requests to the nginx-svc Kubernetes Service time out after 2s
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: tomcat-vs
    spec:
      hosts:
      - tomcat-svc
      http:
      - fault:
          delay:
            percentage:
              value: 50     # delay 50% of the requests to tomcat-svc ...
            fixedDelay: 10s # ... by 10 seconds
        route:
        - destination:
            host: tomcat-svc

    root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# kubectl get virtualservices
    NAME        GATEWAYS             HOSTS            AGE
    canary      ["canary-gateway"]   ["*"]            22d
    nginx-vs                         ["nginx-svc"]    36m
    tomcat-vs                        ["tomcat-svc"]   36m

4) Configure nginx as a reverse proxy for tomcat (the timeout itself is set in nginx-vs)

    root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# kubectl exec -it nginx-tomcat-7dd6f74846-qc25b -- /bin/sh
    # vim /etc/nginx/conf.d/default.conf
    # apt-get update
    # apt-get install vim -y
    # vim /etc/nginx/conf.d/default.conf
    # nginx -t
    # nginx -s reload

The first vim call fails because the nginx image does not ship vim, so it is installed with apt-get first.

    # cat /etc/nginx/conf.d/default.conf
    server {
        listen       80;
        listen  [::]:80;
        server_name  localhost;

        #access_log  /var/log/nginx/host.access.log  main;

        location / {
            #root   /usr/share/nginx/html;
            #index  index.html index.htm;
            proxy_pass http://tomcat-svc:8080;
            proxy_http_version 1.1;
        }

        #error_page  404              /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   /usr/share/nginx/html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass   http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root           html;
        #    fastcgi_pass   127.0.0.1:9000;
        #    fastcgi_index  index.php;
        #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
        #    include        fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny  all;
        #}
    }

5) Log in to a client Pod and verify the timeout

    / # time wget -q -O - http://nginx-svc
    wget: server returned error: HTTP/1.1 504 Gateway Timeout
    Command exited with non-zero status 1
    real    0m 2.01s
    user    0m 0.00s
    sys     0m 0.00s
    / # while true; do wget -q -O - http://nginx-svc; done
    wget: server returned error: HTTP/1.1 504 Gateway Timeout
    wget: server returned error: HTTP/1.1 504 Gateway Timeout
    wget: server returned error: HTTP/1.1 504 Gateway Timeout
    wget: server returned error: HTTP/1.1 504 Gateway Timeout

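Next, change the nginx-vs timeout to 20s and keep the 10s delay on 50% of tomcat requests. A delayed request now completes after about 10 seconds instead of timing out: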

    / # time wget -q -O - http://nginx-svc
    <!DOCTYPE html>
    ...
    </html>
    real    0m 10.01s
    user    0m 0.00s
    sys     0m 0.00s

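5.3 Fault injection and retries

An Istio retry setting is the maximum number of times the Envoy proxy tries to reach a service after an initial call fails. Istio already applies a default retry policy to HTTP requests (two retries). A retries block in a VirtualService overrides that default, as in the example that follows.

The following example simulates a client calling nginx, which forwards the request to tomcat. Fault injection makes tomcat abort every request, and failed calls to nginx are retried up to 3 times.

1) Create the Pods

# Delete the previous Pods and create them again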

root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# kubectl apply -f .

2) Create the VirtualServices

    root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# cat virtual-attempt.yaml
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: nginx-vs
    spec:
      hosts:
      - nginx-svc
      http:
      - route:
        - destination:
            host: nginx-svc
        retries:
          attempts: 3       # calls to the nginx-svc Kubernetes Service are retried up to 3 times
          perTryTimeout: 2s # after the initial failure; each attempt times out after 2 seconds
    ---
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: tomcat-vs
    spec:
      hosts:
      - tomcat-svc
      http:
      - fault:
          abort:
            percentage:
              value: 100
            httpStatus: 503   # every call (100%) to the tomcat-svc Service returns status code 503
        route:
        - destination:
            host: tomcat-svc
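`attempts: 3` counts retries, so one request can reach nginx up to 4 times in total. This Python sketch shows that counting. It assumes 503 is one of the conditions the route retries on, and it leaves out Envoy's backoff between attempts and the 2s perTryTimeout. `call_tomcat` and `call_with_retries` are hypothetical stand-ins.

```python
def call_tomcat() -> int:
    """Stand-in for tomcat-svc with fault.abort at 100%: always answers 503."""
    return 503


def call_with_retries(call, attempts: int = 3) -> tuple:
    """One initial call plus up to `attempts` retries while the answer is 503.

    Returns (final status code, total number of calls made).
    """
    calls = 0
    status = None
    for _ in range(1 + attempts):
        calls += 1
        status = call()
        if status != 503:
            break
    return status, calls


if __name__ == "__main__":
    print(call_with_retries(call_tomcat))     # (503, 4): every attempt fails
    print(call_with_retries(lambda: 200))     # (200, 1): no retry needed
```

With the abort at 100%, retries cannot help, so the client still ends with 503. Retries only pay off for intermittent failures, for example an abort percentage below 100.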

3) Verify the timeout and retries

    root@master001:~/istio/istio-1.12.0/yaml/nginx-tomcat# kubectl exec -it nginx-tomcat-7dd6f74846-hjswq -- /bin/sh
    # apt-get update && apt-get install vim -y
    # vim /etc/nginx/conf.d/default.conf
    # nginx -t
    # nginx -s reload

    location / {
        #root   /usr/share/nginx/html;
        #index  index.html index.htm;
        proxy_pass http://tomcat-svc:8080;
        proxy_http_version 1.1;
    }

    root@master001:~# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it -- sh
    If you don't see a command prompt, try pressing enter.
    / # wget -q -O - http://nginx-svc
    wget: server returned error: HTTP/1.1 503 Service Unavailable

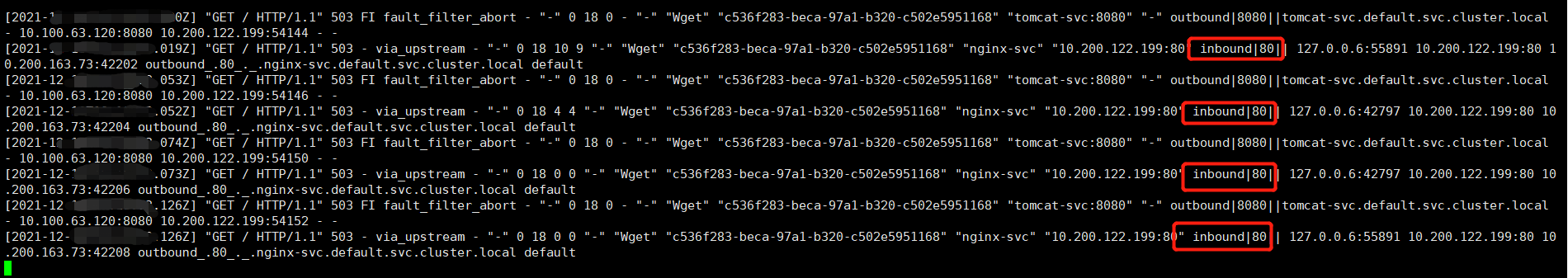

Check the logs: