Setting up Kubernetes with a network plugin on Rocky Linux

Environment preparation

| Role | Hostname | IP address | Spec (CPU/RAM/disk) |
|---|---|---|---|
| master | master01 | 192.168.173.100 | 2C4G60G |
| node01 | node01 | 192.168.173.101 | 2C2G60G |
| node02 | node02 | 192.168.173.102 | 2C2G60G |

Resource link: http://mc.iproute.cn:31010/#s/-_CBMW3A

Environment initialization

On all master and worker nodes (optional)

# Network interface configuration

# cat /etc/NetworkManager/system-connections/ens160.nmconnection

[ipv4]

method=manual

address1=192.168.117.200/24,192.168.117.2

dns=114.114.114.114;114.114.115.115

# cat /etc/NetworkManager/system-connections/ens160.nmconnection

[connection]

autoconnect=false

# Use nmcli to restart the device and reload the connection configuration

nmcli d d ens192

# nmcli d r ens160

nmcli c r ens160

nmcli d connect ens160

Change this field:

address1=192.168.173.100/24,192.168.173.2

For example, mine is:

address1=192.168.117.200/24,192.168.117.2

Then continue with this step:

vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.117.200 k8s-master

192.168.117.202 k8s-node1

192.168.117.204 k8s-node2

Remember to change the IPs to match your own environment.
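The /etc/hosts edit above can also be scripted so that it is safe to run more than once. This is only a minimal sketch, assuming the three hostnames and IPs shown above; `add_host_entry` and the `HOSTS_FILE` variable are hypothetical names introduced here, and the script writes to a scratch file by default so it can be tried before touching the real file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not mapped yet.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Look for NAME as a whole whitespace-delimited field on a non-comment line.
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Assumption: a scratch file by default; set HOSTS_FILE=/etc/hosts (as root) to apply for real.
HOSTS_FILE="${HOSTS_FILE:-./hosts.test}"
add_host_entry "$HOSTS_FILE" 192.168.117.200 k8s-master
add_host_entry "$HOSTS_FILE" 192.168.117.202 k8s-node1
add_host_entry "$HOSTS_FILE" 192.168.117.204 k8s-node2
```

Running it a second time leaves the file unchanged, which avoids the duplicate lines that repeated manual edits tend to produce.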

On all master and worker nodes

# Switch the Rocky Linux package repositories to the Aliyun mirror

sed -e 's|^mirrorlist=|#mirrorlist=|g' \
    -e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.aliyun.com/rockylinux|g' \
    -i.bak \
    /etc/yum.repos.d/[Rr]ocky*.repo

dnf makecache

# Firewall: replace firewalld with iptables

systemctl stop firewalld

systemctl disable firewalld

yum -y install iptables-services

systemctl start iptables

iptables -F

systemctl enable iptables

service iptables save

# Disable SELinux

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

grubby --update-kernel ALL --args selinux=0

# To check whether it is disabled: grubby --info DEFAULT

# To roll back the kernel-level disable: grubby --update-kernel ALL --remove-args selinux

# Set the time zone

timedatectl set-timezone Asia/Shanghai

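Run the matching command on the master and on each worker node separately

# Set the hostname

hostnamectl set-hostname k8s-master    # on the master

hostnamectl set-hostname k8s-node01    # on node01

hostnamectl set-hostname k8s-node02    # on node02

On master and worker nodes together

# Disable the swap partition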

swapoff -a

sed -i 's:/dev/mapper/rl-swap:#/dev/mapper/rl-swap:g' /etc/fstab
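The sed command above only matches a swap device named /dev/mapper/rl-swap. If the volume group has a different name, a more general variant can comment out any active swap entry. The following is a sketch under that assumption; `disable_swap_entries` is a hypothetical helper, and it relies on GNU sed (the default on Rocky Linux).

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
disable_swap_entries() {
  local file="$1"
  # Skip lines that are already comments; prefix '#' to lines whose third field is "swap".
  sed -E -i.bak \
    '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
    "$file"
}

# On a real node (as root): swapoff -a && disable_swap_entries /etc/fstab
```

A backup of the original file is kept next to it with a .bak suffix, so the change is easy to revert.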

# Install the ipvs tooling

yum install -y ipvsadm

# Enable IP forwarding

echo 'net.ipv4.ip_forward=1' >> /etc/sysctl.conf

sysctl -p

# Load the bridge netfilter module

yum install -y epel-release

yum install -y bridge-utils

modprobe br_netfilter

echo 'br_netfilter' >> /etc/modules-load.d/bridge.conf

echo 'net.bridge.bridge-nf-call-iptables=1' >> /etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >> /etc/sysctl.conf

sysctl -p
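Appending to /etc/sysctl.conf with `>>` adds duplicate lines each time the steps are repeated. One alternative is to keep the three settings in a single drop-in file that is overwritten on every run. This is a sketch only; `write_k8s_sysctl` and the file name k8s.conf are choices made here, not something the original steps use.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the three kernel settings used above into one drop-in file.
write_k8s_sysctl() {
  local target="$1"
  cat > "$target" <<'EOF'
net.ipv4.ip_forward=1
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
EOF
}

# On a real node (as root), after `modprobe br_netfilter`:
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf && sysctl --system
```

`sysctl --system` reloads every configuration directory, including /etc/sysctl.d, so the drop-in takes effect without editing /etc/sysctl.conf.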

On master and worker nodes together

# Add the docker-ce yum repository

# USTC (University of Science and Technology of China) mirror

sudo dnf config-manager --add-repo https://mirrors.ustc.edu.cn/docker-ce/linux/centos/docker-ce.repo

cd /etc/yum.repos.d

# Point the repo file at the USTC mirror

sed -i 's#download.docker.com#mirrors.ustc.edu.cn/docker-ce#g' docker-ce.repo

# Install docker-ce

yum -y install docker-ce

# Configure the Docker daemon

cat > /etc/docker/daemon.json <<EOF

{

"data-root": "/data/docker",

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m",

"max-file": "100"

},

"insecure-registries": ["iproute.cn:6443"],

"registry-mirrors": ["https://iproute.cn:6443"]

}

EOF

mkdir -p /etc/systemd/system/docker.service.d

# Restart the docker service and enable it at boot

systemctl daemon-reload && systemctl restart docker && systemctl enable docker

On master and worker nodes together (cri-dockerd-0.3.9.amd64.tgz can also be taken from the local resource bundle)

# Install cri-docker

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.9/cri-dockerd-0.3.9.amd64.tgz

tar -xf cri-dockerd-0.3.9.amd64.tgz

cp cri-dockerd/cri-dockerd /usr/bin/

chmod +x /usr/bin/cri-dockerd

# Configure the cri-docker service

cat <<"EOF" > /usr/lib/systemd/system/cri-docker.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

# Add the cri-docker socket unit

cat <<"EOF" > /usr/lib/systemd/system/cri-docker.socket

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

# Start the cri-docker services

systemctl daemon-reload

systemctl enable cri-docker

systemctl start cri-docker

systemctl is-active cri-docker

Part 1: on master and worker nodes together

# Add the kubeadm yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

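# Install kubeadm 1.29 (pick one patch version; the rest of these notes use 1.29.2)
# The repo file above sets exclude= for these packages, so --disableexcludes=kubernetes is needed.

yum install -y kubelet-1.29.0 kubectl-1.29.0 kubeadm-1.29.0 --disableexcludes=kubernetes

systemctl enable kubelet.service

yum install -y kubelet-1.29.2 kubectl-1.29.2 kubeadm-1.29.2 --disableexcludes=kubernetes

Pay attention to the version here.

Alternatively, cd into the directory that holds the packages:

[root@localhost kubernetes-1.29.2-150500.1.1]# pwd

/kubeadm-rpm/kubernetes-1.29.2-150500.1.1

sudo rpm -ivh ./kubelet-1.29.2-150500.1.1.x86_64.rpm
sudo rpm -ivh ./kubectl-1.29.2-150500.1.1.x86_64.rpm
sudo rpm -ivh ./kubeadm-1.29.2-150500.1.1.x86_64.rpm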

Updated procedure for Part 1 (using the local kubernetes-1.29.2-150500.1.1.tar.gz)

mkdir -p /kubeadm-rpm

cd /kubeadm-rpm/

# Copy kubernetes-1.29.2-150500.1.1.tar.gz from the resource bundle into this directory

tar -xzf kubernetes-1.29.2-150500.1.1.tar.gz

cd kubernetes-1.29.2-150500.1.1

sudo rpm -ivh ./kubelet-1.29.2-150500.1.1.x86_64.rpm

sudo rpm -ivh ./kubectl-1.29.2-150500.1.1.x86_64.rpm

sudo rpm -ivh ./kubeadm-1.29.2-150500.1.1.x86_64.rpm

# Install the dependencies of conntrack-tools

sudo dnf install libnetfilter_cthelper libnetfilter_cttimeout libnetfilter_queue

# Resolve kubelet's dependencies:

sudo rpm -ivh ./conntrack-tools-1.4.7-2.el9.x86_64.rpm

sudo rpm -ivh ./kubernetes-cni-1.3.0-150500.1.1.x86_64.rpm

sudo rpm -ivh ./socat-1.7.4.1-5.el9.x86_64.rpm

sudo rpm -ivh ./kubelet-1.29.2-150500.1.1.x86_64.rpm

# Resolve kubeadm's dependencies:

sudo rpm -ivh ./cri-tools-1.29.0-150500.1.1.x86_64.rpm

sudo rpm -ivh ./kubeadm-1.29.2-150500.1.1.x86_64.rpm

# Verify the installation

kubelet --version

kubectl version --client

kubeadm version

On the master

# Initialize the control-plane node
# Set --apiserver-advertise-address to the master's own IP (192.168.173.100 in the table above).

kubeadm init \
  --apiserver-advertise-address=192.168.173.100 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version 1.29.2 \
  --service-cidr=10.10.0.0/12 \
  --pod-network-cidr=10.244.0.0/16 \
  --ignore-preflight-errors=all \
  --cri-socket unix:///var/run/cri-dockerd.sock

In my environment I changed the address to:

--apiserver-advertise-address=192.168.117.200
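The service CIDR 10.10.0.0/12 is not aligned to a /12 boundary, so the range it actually denotes starts lower than it looks. The small calculation below shows which network each CIDR resolves to, which helps when checking that the service and pod ranges do not overlap with each other or with the host network. `cidr_network` is a hypothetical helper written for this illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the network address of an IPv4 CIDR, e.g. 10.10.0.0/12.
cidr_network() {
  local ip prefix addr mask net old_ifs
  ip="${1%/*}"
  prefix="${1#*/}"
  # Split the dotted quad into $1..$4.
  old_ifs="$IFS"; IFS=.
  set -- $ip
  IFS="$old_ifs"
  addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  # Build the netmask from the prefix length and keep it within 32 bits.
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  net=$(( addr & mask ))
  printf '%d.%d.%d.%d/%d\n' \
    $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
    $(( (net >> 8) & 255 )) $(( net & 255 )) "$prefix"
}

cidr_network 10.10.0.0/12    # prints 10.0.0.0/12
cidr_network 10.244.0.0/16   # prints 10.244.0.0/16
```

So the service range covers 10.0.0.0 through 10.15.255.255, which does not overlap the pod range 10.244.0.0/16 or the 192.168.x.x host addresses used in these notes.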

On the master

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

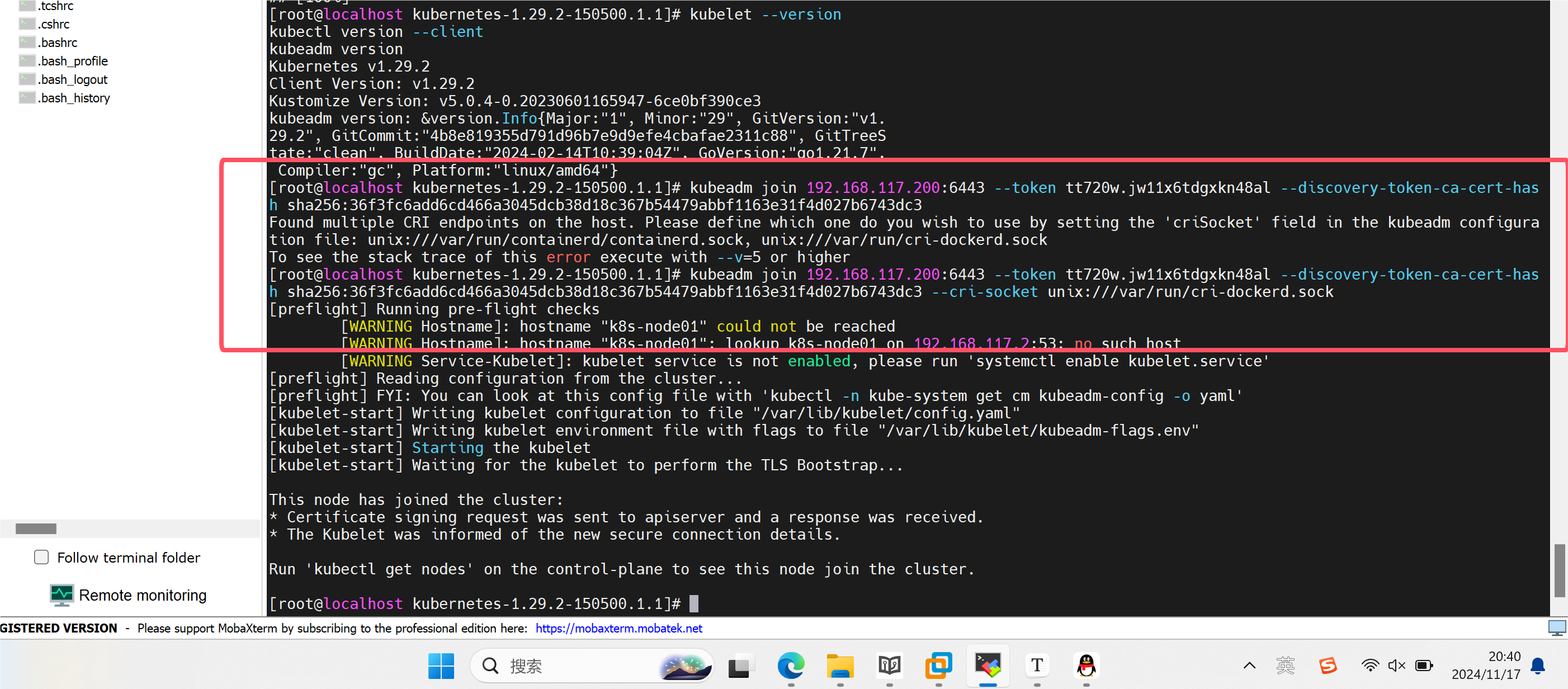

On node01 and node02

# Join the worker nodes to the cluster

kubeadm join 192.168.173.100:6443 --token jghzcm.mz6js92jom1flry0 \
  --discovery-token-ca-cert-hash sha256:63253f3d82fa07022af61bb2ae799177d2b0b6fe8398d5273098f4288ce67793 \
  --cri-socket unix:///var/run/cri-dockerd.sock

# If the worker join token has expired, generate a new one (run on the master)

kubeadm token create --print-join-command

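Do not copy the join command above verbatim; the token and hash belong to my cluster.

Also note the following: when a node exposes more than one container runtime interface (CRI) endpoint, kubeadm needs to be told explicitly which endpoint to use when joining the cluster. Append --cri-socket unix:///var/run/cri-dockerd.sock to the command printed by kubeadm token create --print-join-command.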
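Appending the flag by hand is easy to forget, so the step can be wrapped in a small function. This is a sketch; `with_cri_socket` is a hypothetical name, and the token and hash in the example are placeholders rather than real values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure a kubeadm join command names the cri-dockerd socket.
with_cri_socket() {
  local cmd="$1" sock="unix:///var/run/cri-dockerd.sock"
  case "$cmd" in
    *--cri-socket*) printf '%s\n' "$cmd" ;;                      # flag already present
    *)              printf '%s --cri-socket %s\n' "$cmd" "$sock" ;;
  esac
}

# On the master, against a live cluster:
#   with_cri_socket "$(kubeadm token create --print-join-command)"
with_cri_socket "kubeadm join 192.168.173.100:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>"
```

The printed line is what gets run on each worker node.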

Deploy the network plugin (Part 2; calico-typha.yaml is also available in the local resource bundle)

# Official documentation
# https://docs.tigera.io/calico/latest/getting-started/kubernetes/self-managed-onprem/onpremises#install-calico-with-kubernetes-api-datastore-more-than-50-nodes

curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.3/manifests/calico-typha.yaml -o calico-typha.yaml

Set CALICO_IPV4POOL_CIDR to the pod address range.

# Switch to BGP mode (turn off IPIP encapsulation)

# Enable IPIP
- name: CALICO_IPV4POOL_IPIP
  value: "Always" # change to "Off"

kubectl apply -f calico-typha.yaml

kubectl get pod -A

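On line 4814, change value: "Always" to value: "Off".

Uncomment lines 4842 and 4843.

Change the IP in value so that it matches your pod network (its first two octets, for example 10.244 for 10.244.0.0/16).

Then run:

kubectl apply -f calico-typha.yaml
kubectl get pod -A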
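The manifest edits described above can also be applied with sed instead of by line number, which keeps working if the line numbers shift between manifest versions. This is a sketch that assumes the v3.26.3 manifest contains the lines `- name: CALICO_IPV4POOL_IPIP` followed by `value: "Always"`, and the commented pair `# - name: CALICO_IPV4POOL_CIDR` / `#   value: "192.168.0.0/16"`; check with grep before relying on it. `patch_calico` is a hypothetical helper and it uses GNU sed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn IPIP off and set the IPv4 pool CIDR in a Calico manifest.
patch_calico() {
  local manifest="$1" pod_cidr="$2"
  sed -i.bak \
    -e '/- name: CALICO_IPV4POOL_IPIP/{n;s/"Always"/"Off"/;}' \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "/- name: CALICO_IPV4POOL_CIDR/{n;s|#   value: \".*\"|  value: \"${pod_cidr}\"|;}" \
    "$manifest"
}

# Example, matching --pod-network-cidr from kubeadm init:
#   patch_calico calico-typha.yaml 10.244.0.0/16
#   grep -n -A1 -E 'CALICO_IPV4POOL_(IPIP|CIDR)' calico-typha.yaml
```

The grep at the end is there to confirm by eye that both values changed and that the indentation of the uncommented lines lines up with the neighbouring env entries.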

Part 2 can also be done entirely from local files (recommended), using calico.zip from the resource bundle

cd

yum -y install unzip

unzip calico.zip

cd calico

tar xzvf calico-images.tar.gz

cp calico-typha.yaml calico.yaml

vim calico.yaml

# Edit it as described above

cd calico-images

ls

cd ..

mv calico-images ~/

#[root@localhost calico]# find / -name calico-node-v3.26.3.tar

#/root/calico-images/calico-node-v3.26.3.tar

cd

If only the master has the calico images, copy them to the other nodes (node01 and node02) with the commands below:

scp -r calico-images/ root@node01:/root
scp -r calico-images/ root@node02:/root

cd

cd /root/calico-images/

ls

docker load -i calico-cni-v3.26.3.tar

docker load -i calico-kube-controllers-v3.26.3.tar

docker load -i calico-node-v3.26.3.tar

docker load -i calico-typha-v3.26.3.tar
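The four docker load commands above can be collapsed into a loop over the directory, which also picks up any extra archives. This is a sketch; `load_images` and the `LOAD_CMD` variable are hypothetical names, and `LOAD_CMD` exists only so the loop can be dry-run with `echo`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: load every image archive (*.tar) found in a directory.
# LOAD_CMD defaults to "docker load -i"; override it (e.g. LOAD_CMD=echo) for a dry run.
load_images() {
  local dir="$1" f
  for f in "$dir"/*.tar; do
    [ -e "$f" ] || continue            # nothing matched the glob
    ${LOAD_CMD:-docker load -i} "$f"
  done
}

# On each node: load_images /root/calico-images
```

Running it on every node matters because each kubelet pulls from its own local Docker image store.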

cd

# Check status

kubectl get node

kubectl get node -o wide

kubectl get pod -n kube-system

On the master

# Check status

kubectl get node

kubectl get node -o wide

kubectl get pod -n kube-system

cd calico

ls

kubectl apply -f calico.yaml

kubectl get pod -n kube-system

# (optional; press Ctrl+C to stop) kubectl get pod -n kube-system -w

Pin the network interface (optional; not performed in these notes)

# Pick the interface that can reach a target IP or domain
- name: calico-node
  image: registry.geoway.com/calico/node:v3.19.1
  env:
    # Auto-detect the BGP IP address.
    - name: IP
      value: "autodetect"
    - name: IP_AUTODETECTION_METHOD
      value: "can-reach=www.google.com"

kubectl set env daemonset/calico-node -n kube-system IP_AUTODETECTION_METHOD=can-reach=www.google.com

# Match the target interface by name
- name: calico-node
  image: registry.geoway.com/calico/node:v3.19.1
  env:
    # Auto-detect the BGP IP address.
    - name: IP
      value: "autodetect"
    - name: IP_AUTODETECTION_METHOD
      value: "interface=eth.*"

# Exclude interfaces that match a pattern
- name: calico-node
  image: registry.geoway.com/calico/node:v3.19.1
  env:
    # Auto-detect the BGP IP address.
    - name: IP
      value: "autodetect"
    - name: IP_AUTODETECTION_METHOD
      value: "skip-interface=eth.*"

# CIDR
- name: calico-node
  image: registry.geoway.com/calico/node:v3.19.1
  env:
    # Auto-detect the BGP IP address.
    - name: IP
      value: "autodetect"
    - name: IP_AUTODETECTION_METHOD
      value: "cidr=192.168.200.0/24,172.15.0.0/24"

Switch kube-proxy to ipvs mode (optional; not performed in these notes)

# kubectl edit configmap kube-proxy -n kube-system

mode: ipvs

kubectl delete pod -n kube-system -l k8s-app=kube-proxy

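Troubleshooting

1. kubectl fails after a manual reboot (looks like an API server problem)

Check the API server by checking kubelet, which runs it as a static pod:

systemctl status kubelet

Result: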

[root@k8s-master ~]# systemctl status kubelet

○ kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead)

Docs: https://kubernetes.io/docs/

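Start the API server by restarting kubelet. The status above shows the unit as disabled, which is why it did not come up after the reboot; systemctl enable kubelet makes it start at boot.

[root@k8s-master ~]# systemctl restart kubelet

# Check the status (press Ctrl+C to exit)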

[root@k8s-master ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; disabled; preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Mon 2024-11-18 20:12:28 CST; 5s ago

Docs: https://kubernetes.io/docs/

Main PID: 7260 (kubelet)

Tasks: 12 (limit: 10892)

Memory: 99.5M

CPU: 859ms

CGroup: /system.slice/kubelet.service

└─7260 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf >

Nov 18 20:12:31 k8s-master kubelet[7260]: I1118 20:12:31.338831 7260 pod_container_deletor.go:80] "Container not found in pod's containers>

Nov 18 20:12:31 k8s-master kubelet[7260]: I1118 20:12:31.368284 7260 pod_container_deletor.go:80] "Container not found in pod's containers>

Nov 18 20:12:31 k8s-master kubelet[7260]: I1118 20:12:31.687345 7260 pod_container_deletor.go:80] "Container not found in pod's containers>

Nov 18 20:12:32 k8s-master kubelet[7260]: E1118 20:12:32.654027 7260 controller.go:145] "Failed to ensure lease exists, will retry" err="G>

Nov 18 20:12:32 k8s-master kubelet[7260]: I1118 20:12:32.765301 7260 kubelet_node_status.go:73] "Attempting to register node" node="k8s-ma>

Nov 18 20:12:32 k8s-master kubelet[7260]: E1118 20:12:32.770780 7260 kubelet_node_status.go:96] "Unable to register node with API server" >

Nov 18 20:12:32 k8s-master kubelet[7260]: W1118 20:12:32.778427 7260 reflector.go:539] vendor/k8s.io/client-go/informers/factory.go:159: f>

Nov 18 20:12:32 k8s-master kubelet[7260]: E1118 20:12:32.778678 7260 reflector.go:147] vendor/k8s.io/client-go/informers/factory.go:159: F>

Nov 18 20:12:32 k8s-master kubelet[7260]: W1118 20:12:32.791834 7260 reflector.go:539] vendor/k8s.io/client-go/informers/factory.go:159: f>

Nov 18 20:12:32 k8s-master kubelet[7260]: E1118 20:12:32.792068 7260 reflector.go:147] vendor/k8s.io/client-go/informers/factory.go:159: F>

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 23h v1.29.2

k8s-node01 NotReady <none> 23h v1.29.2

k8s-node02 NotReady <none> 23h v1.29.2

[root@k8s-master ~]#
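When there are more than a few nodes, it helps to filter the kubectl get nodes output down to the nodes that still need attention. The filter below is a small sketch; `not_ready_nodes` is a hypothetical name, and the sample input is the output shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of nodes whose STATUS column is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also listed.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Against a live cluster: kubectl get nodes | not_ready_nodes
not_ready_nodes <<'EOF'
NAME         STATUS     ROLES           AGE   VERSION
k8s-master   Ready      control-plane   23h   v1.29.2
k8s-node01   NotReady   <none>          23h   v1.29.2
k8s-node02   NotReady   <none>          23h   v1.29.2
EOF
```

With the sample input this prints k8s-node01 and k8s-node02, the two nodes that need kubelet restarted.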

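Fixing worker-node network problems caused by the reboot

# On master and worker nodes

systemctl restart kubelet

kubectl get nodes

# This usually fixes it. If it does not, continue with the steps below.

# The following amounts to a light reset of the cluster networking; I have tried it myself and it resolves many problems.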

docker rm $(docker ps -a -q -f status=exited)

cd /root/calico-images/

ls

docker load -i calico-cni-v3.26.3.tar

docker load -i calico-kube-controllers-v3.26.3.tar

docker load -i calico-node-v3.26.3.tar

docker load -i calico-typha-v3.26.3.tar

# On the master

kubectl delete -f calico.yaml

kubectl apply -f calico.yaml

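Extras

1. Set up SSH keys so you do not have to type a password every time you switch to a worker node

Check that the .ssh directory has mode 700 and that authorized_keys has mode 644.

# Generate a key pair on the deploy (control) node for key-based login

ssh-keygen -t rsa -f /root/.ssh/id_rsa

# Copy the public key to each host
# Note: the scp below overwrites any existing authorized_keys on the target; ssh-copy-id appends instead.

# ssh-copy-id root@<worker-node-IP>

scp /root/.ssh/id_rsa.pub root@192.168.117.202:/root/.ssh/authorized_keys

Remember to change the IP.

sudo service sshd restart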
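If the worker node already has other keys in authorized_keys, appending is safer than replacing the file. The function below is a sketch of that idea, meant to run on the worker after the public key has been copied there under some other name; `install_pubkey` and the example paths are hypothetical. It sets authorized_keys to mode 600, a stricter choice than the 644 mentioned above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a public key to authorized_keys without clobbering existing keys.
install_pubkey() {
  local pubkey_file="$1" ssh_dir="$2"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
  touch "$ssh_dir/authorized_keys"
  # -x matches whole lines and -F treats the key as a fixed string,
  # so the key is appended only when it is not already present.
  grep -qxF -f "$pubkey_file" "$ssh_dir/authorized_keys" \
    || cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
  chmod 600 "$ssh_dir/authorized_keys"
}

# Example on a worker node, assuming the key was copied to /tmp/id_rsa.pub:
#   install_pubkey /tmp/id_rsa.pub /root/.ssh
```

Running it twice with the same key leaves a single entry in the file.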