Implementing Deep Learning by Hand (10): Part 5: Methods for Addressing Overfitting

Part 5: Methods for Addressing Overfitting

Link: https://www.cnblogs.com/greentomlee/p/12314064.html

github: Leezhen2014: https://github.com/Leezhen2014/python_deep_learning

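7. Methods for Addressing Overfitting

7.1 Implementing Dropout

Dropout is one way to suppress overfitting: on every training iteration it randomly deactivates some of the neurons.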

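    import numpy as np


    class Dropout:
        """
        http://arxiv.org/abs/1207.0580
        """
        def __init__(self, dropout_ratio=0.5):
            self.dropout_ratio = dropout_ratio
            self.mask = None

        def forward(self, x, train_flg=True):
            """
            During the forward pass, draw a random mask. The masked units
            then behave as if they were deactivated in the backward pass too.
            """
            if train_flg:
                # True marks a unit that is kept, False a unit that is dropped
                self.mask = np.random.rand(*x.shape) > self.dropout_ratio
                return x * self.mask
            else:
                # At test time, scale by the keep probability instead of masking
                return x * (1.0 - self.dropout_ratio)

        def backward(self, dout):
            """
            Pass the gradient only through the units kept in the forward pass.
            """
            return dout * self.mask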

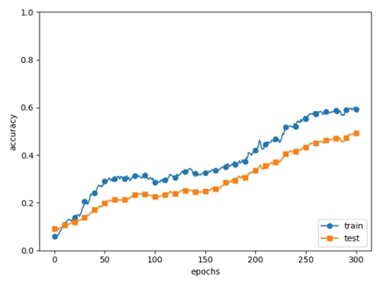

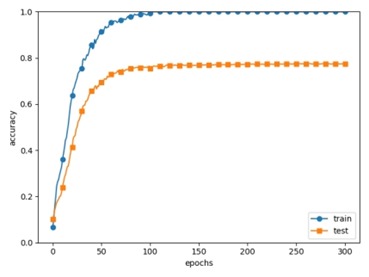

The left plot was produced with dropout and the right plot without it. The two differ because dropout deactivates a random subset of neurons during training.
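The test-time branch of `forward` multiplies by `1.0 - dropout_ratio`, which is meant to match the average scale of the masked training output. The sketch below checks that numerically. It is a minimal illustration, not part of the original repository: `dropout_forward` is a hypothetical function-style copy of the `Dropout.forward` logic, and the explicit `rng` argument is an assumption added only to make the run reproducible.

```python
import numpy as np


def dropout_forward(x, dropout_ratio=0.5, train_flg=True, rng=None):
    """Hypothetical function form of the Dropout.forward logic in this section."""
    if train_flg:
        rng = np.random.default_rng() if rng is None else rng
        mask = rng.random(x.shape) > dropout_ratio  # True = unit is kept
        return x * mask, mask
    # Test time: no mask, scale by the keep probability instead
    return x * (1.0 - dropout_ratio), None


rng = np.random.default_rng(0)
x = np.ones((1000, 100))

train_out, mask = dropout_forward(x, dropout_ratio=0.3, train_flg=True, rng=rng)
test_out, _ = dropout_forward(x, dropout_ratio=0.3, train_flg=False)

print("fraction of units kept:", mask.mean())
print("mean output (train):", train_out.mean())
print("mean output (test): ", test_out.mean())
```

With `dropout_ratio=0.3`, roughly 70% of the units should survive, so the two mean outputs should land close to each other.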

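7.2 Implementing Weight Decay

Weight decay is another way to suppress overfitting.

It works by penalizing large weights during training, which in turn suppresses overfitting.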

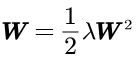

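In this example the weights are updated after the loss has been computed. An L2-norm penalty on the weights is added as part of that step, which keeps the weights from growing large. For each weight matrix W the penalty is 0.5 * lambda * sum(W ** 2), where lambda is the weight decay coefficient.

Implementation: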
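Written out as a formula, the penalized loss that the `loss` method in this section computes can be sketched as follows. The symbols are assumptions introduced here for illustration: $L_0$ is the unpenalized loss from the last layer, $W^{(l)}$ is the weight matrix of layer $l$, and $\lambda$ corresponds to `weight_decay_lambda`. The gradient line assumes the network's gradient code mirrors the loss, which this section does not show.

```latex
L = L_0 + \frac{\lambda}{2} \sum_{l} \sum_{i,j} \left( W^{(l)}_{ij} \right)^2
\qquad\Longrightarrow\qquad
\frac{\partial L}{\partial W^{(l)}} = \frac{\partial L_0}{\partial W^{(l)}} + \lambda W^{(l)}
```

The extra $\lambda W^{(l)}$ term pulls every weight toward zero on each update, which is where the name "weight decay" comes from.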

    def loss(self, x, t):
        """
        Loss function
        :param x: input data
        :param t: label data
        :return: loss value
        """

        y = self.predict(x)

        # Sum the L2 penalty over every weight matrix (hidden layers plus output layer)
        weight_decay = 0
        for idx in range(1, self.hidden_layer_num + 2):
            W = self.params['W' + str(idx)]
            weight_decay += 0.5 * self.weight_decay_lambda * np.sum(W ** 2)

        return self.last_layer.forward(y, t) + weight_decay

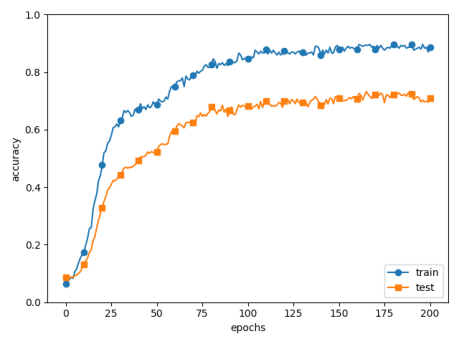
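For the penalty to influence training, its gradient has to reach the optimizer. The following sketch checks numerically that the gradient of `0.5 * lambda * sum(W ** 2)` with respect to `W` is `lambda * W`. The helpers `l2_penalty` and `l2_penalty_grads` are hypothetical names used only here; they are not functions from the original repository.

```python
import numpy as np


def l2_penalty(weights, weight_decay_lambda):
    """Same penalty as in loss(): 0.5 * lambda * sum(W ** 2) over all matrices."""
    return sum(0.5 * weight_decay_lambda * np.sum(W ** 2) for W in weights)


def l2_penalty_grads(weights, weight_decay_lambda):
    """Analytic gradient of the penalty with respect to each weight matrix."""
    return [weight_decay_lambda * W for W in weights]


rng = np.random.default_rng(0)
weights = [rng.standard_normal((4, 3)), rng.standard_normal((3, 2))]
lam = 0.1

# Central-difference check on a single entry of the first matrix
eps = 1e-6
W = weights[0]
original = W[1, 2]
W[1, 2] = original + eps
plus = l2_penalty(weights, lam)
W[1, 2] = original - eps
minus = l2_penalty(weights, lam)
W[1, 2] = original  # restore the entry

numeric = (plus - minus) / (2 * eps)
analytic = l2_penalty_grads(weights, lam)[0][1, 2]
print("numeric gradient: ", numeric)
print("analytic gradient:", analytic)
```

The two values should agree up to floating-point rounding, since the penalty is quadratic in each weight.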

Plotting and validation:

    # coding: utf-8

    import numpy as np
    import matplotlib.pyplot as plt
    from src.datasets.mnist import load_mnist
    from src.common.multi_layer_net import MultiLayerNet
    from src.common.optimizer import SGD

    (x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)

    # Use only 300 training samples so that the network overfits easily
    x_train = x_train[:300]
    t_train = t_train[:300]

    # Weight decay coefficient (set it to 0 to disable weight decay)
    # weight_decay_lambda = 0
    weight_decay_lambda = 0.1
    # ====================================================

    network = MultiLayerNet(input_size=784, hidden_size_list=[100, 100, 100, 100, 100, 100], output_size=10,
                            weight_decay_lambda=weight_decay_lambda)
    optimizer = SGD(lr=0.01)

    max_epochs = 201
    train_size = x_train.shape[0]
    batch_size = 100

    train_loss_list = []
    train_acc_list = []
    test_acc_list = []

    # Integer division keeps the epoch check below exact
    iter_per_epoch = max(train_size // batch_size, 1)
    epoch_cnt = 0

    for i in range(1000000000):
        # Sample a mini-batch (with replacement)
        batch_mask = np.random.choice(train_size, batch_size)
        x_batch = x_train[batch_mask]
        t_batch = t_train[batch_mask]

        grads = network.gradient(x_batch, t_batch)
        optimizer.update(network.params, grads)

        # Record accuracy once per epoch
        if i % iter_per_epoch == 0:
            train_acc = network.accuracy(x_train, t_train)
            test_acc = network.accuracy(x_test, t_test)
            train_acc_list.append(train_acc)
            test_acc_list.append(test_acc)

            print("epoch:" + str(epoch_cnt) + ", train acc:" + str(train_acc) + ", test acc:" + str(test_acc))

            epoch_cnt += 1
            if epoch_cnt >= max_epochs:
                break


    # 3. Plot train and test accuracy per epoch
    x = np.arange(max_epochs)
    plt.plot(x, train_acc_list, marker='o', label='train', markevery=10)
    plt.plot(x, test_acc_list, marker='s', label='test', markevery=10)
    plt.xlabel("epochs")
    plt.ylabel("accuracy")
    plt.ylim(0, 1.0)
    plt.legend(loc='lower right')
    plt.show()
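The script above depends on the repository's MNIST loader and network classes. As a rough, self-contained stand-in, the sketch below shows the same effect on a toy problem: an over-parameterized linear model trained by plain gradient descent, once with `weight_decay_lambda = 0` and once with `0.1`. Everything here (the `train_linear` helper, the random data, the squared-error loss) is an assumption made for illustration and is not taken from the original code.

```python
import numpy as np


def train_linear(X, y, weight_decay_lambda, lr=0.01, steps=2000):
    """Gradient descent on 0.5 * mean squared error + 0.5 * lambda * ||w||^2."""
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(steps):
        # Data gradient plus the weight decay term lambda * w
        grad = X.T @ (X @ w - y) / n + weight_decay_lambda * w
        w -= lr * grad
    return w


rng = np.random.default_rng(0)
X = rng.standard_normal((20, 50))  # fewer samples than parameters
y = rng.standard_normal(20)

w_plain = train_linear(X, y, weight_decay_lambda=0.0)
w_decay = train_linear(X, y, weight_decay_lambda=0.1)

norm_plain = np.linalg.norm(w_plain)
norm_decay = np.linalg.norm(w_decay)
print("weight norm without decay:", norm_plain)
print("weight norm with decay:   ", norm_decay)
```

With decay enabled the learned weights end up with a smaller norm, which is the behavior the penalty is designed to produce.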
