I. Prerequisites

3 - Understanding the Kafka authentication mechanism on a standalone node

II. Installing the k8s microservices (Kafka and ZooKeeper) with Helm

1) Install the microservices

helm repo add gs-all repoUrl (to remove the repo later: helm repo remove gs-all)

export NAMESPACE=eric-schema-registry-sr-install

export TLS=false

kubectl create ns $NAMESPACE

helm install eric-data-coordinator-zk gs-all/eric-data-coordinator-zk --namespace=$NAMESPACE --devel --wait --timeout 20000s --set global.security.tls.enabled=$TLS --set replicas=1 --set persistence.persistentVolumeClaim.enabled=false

helm install eric-data-message-bus-kf gs-all/eric-data-message-bus-kf --namespace=$NAMESPACE --devel --wait --timeout 20000s --set global.security.tls.enabled=$TLS --set replicaCount=3 --set persistence.persistentVolumeClaim.enabled=false

2) Verify the installation

## kubectl get pods -n eric-schema-registry-sr-install
NAME                         READY   STATUS    RESTARTS   AGE
eric-data-coordinator-zk-0   1/1     Running   57         98d
eric-data-message-bus-kf-0   1/1     Running   46         75d
eric-data-message-bus-kf-1   1/1     Running   43         75d
eric-data-message-bus-kf-2   1/1     Running   43         75d

## kubectl describe -n eric-schema-registry-sr-install
error: You must specify the type of resource to describe. Use "kubectl api-resources" for a complete list of supported resources.

ehunjng@CN-00005131:~$ kubectl describe namespace eric-schema-registry-sr-install
Name:         eric-schema-registry-sr-install
Labels:       <none>
Annotations:  <none>
Status:       Active

No resource quota.

No LimitRange resource.

## kubectl describe all
Name:              kubernetes
Namespace:         default
Labels:            component=apiserver
                   provider=kubernetes
Annotations:       <none>
Selector:          <none>
Type:              ClusterIP
IP Families:       <none>
IP:                10.96.0.1
IPs:               <none>
Port:              https  443/TCP
TargetPort:        6443/TCP
Endpoints:         192.168.65.4:6443
Session Affinity:  None
Events:            <none>

3) Enter the container and check the files

List all containers:

docker ps -a

Enter the k8s pod:

kubectl exec -it eric-data-message-bus-kf-0 -n eric-schema-registry-sr-install -- /bin/sh

Defaulted container "messagebuskf" out of: messagebuskf, checkzkready (init)

sh-4.4$

Enter the Docker container: docker exec -it <containerId or containerName> bash (or /bin/bash)

docker exec -it k8s_messagebuskf_eric-data-message-bus-kf-0_eric-schema-registry-sr-install_a71d1214-c7bb-4e19-ab36-9832e26896a5_46 bash

bash-4.4$ cd /etc/confluent/docker/

bash-4.4$ ls

configure entrypoint kafka.properties.template kafka_server_jaas.conf.properties log4j.properties.template monitorcertZK.sh renewcertZK.sh tools-log4j.properties

ensure initcontainer.sh kafkaPartitionReassignment.sh launch monitorcertKF.sh renewcertKF.sh run tools-log4j.properties.template


4) Copy files to and from the container (pod)

docker ps

docker cp k8s_messagebuskf_eric-data-message-bus-kf-0_eric-schema-registry-sr-install_b0c9eb0f-881b-4081-9931-b9fc0b314bb9_5:/etc/kafka /mnt/c/repo/k8skafka

docker cp messagebuskf:/etc/kafka/. /mnt/c/repo/k8skafka

kubectl -n eric-schema-registry-sr-install cp /mnt/c/repo/k8skafka/kafka/kafka_server_jaas.conf eric-data-message-bus-kf-1:/etc/kafka

docker cp k8s_messagebuskf_eric-data-message-bus-kf-0_eric-schema-registry-sr-install_b0c9eb0f-881b-4081-9931-b9fc0b314bb9_5:/usr/bin /mnt/c/repo/k8skafka

docker cp /mnt/c/repo/k8skafka/kafka_server_jaas.conf k8s_messagebuskf_eric-data-message-bus-kf-0_eric-schema-registry-sr-install_b0c9eb0f-881b-4081-9931-b9fc0b314bb9_5:/etc/kafka/

5) Check the node and the services in the k8s cluster

kubectl get node
NAME             STATUS   ROLES    AGE    VERSION
docker-desktop   Ready    master   161d   v1.19.7

kubectl get services -n eric-schema-registry-sr-install
NAME                                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                       AGE
eric-data-coordinator-zk                    ClusterIP   10.105.141.43    <none>        2181/TCP,8080/TCP,21007/TCP   98d
eric-data-coordinator-zk-ensemble-service   ClusterIP   None             <none>        2888/TCP,3888/TCP             98d
eric-data-message-bus-kf                    ClusterIP   None             <none>        9092/TCP                      75d
eric-data-message-bus-kf-0-nodeport         NodePort    10.111.66.146    <none>        9092:31090/TCP                75d
eric-data-message-bus-kf-1-nodeport         NodePort    10.108.230.113   <none>        9092:31091/TCP                75d
eric-data-message-bus-kf-2-nodeport         NodePort    10.98.161.13     <none>        9092:31092/TCP                75d
eric-data-message-bus-kf-client             ClusterIP   10.102.191.90    <none>        9092/TCP                      75d
zookeeper-nodeport                          NodePort    10.105.151.183   <none>        2181:32181/TCP                83d

III. Studying the files in the Kafka microservice's Helm chart

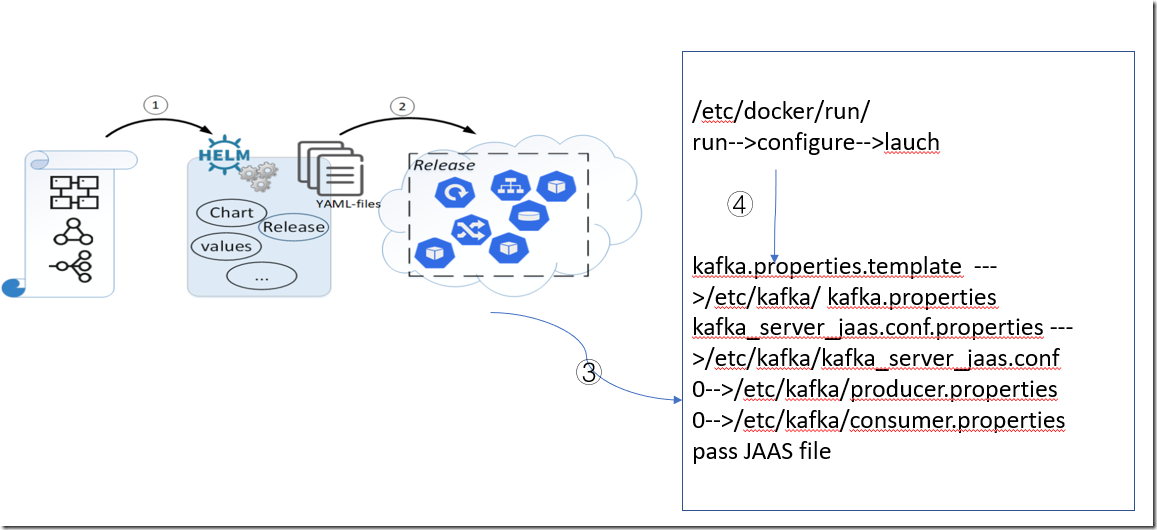

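Three things fit together here: the pod's Kafka-related environment variables, the way the Helm chart controls those environment variables through values and templates, and the startup chain inside the container, /etc/confluent/docker/run --> configure --> launch. The logic runs top-down, from the general to the specific. So, to enable or configure a Kafka feature inside the container, change the relevant values in the Helm chart (adding new entries to values where needed), then apply them with helm install or helm upgrade. The values in turn control the Kafka environment variables in the container and the generation of its configuration files.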

IV. Modifying the values and chart files to enable SASL/PLAIN support for Kafka through Helm

1) The Kafka microservice's Helm chart

Find the chart archive eric-data-message-bus-kf-1.17.0-28.tgz in the local Helm repo and extract it.

2) Modify the corresponding files in the chart

values.yaml ==> templates (kafka-ss.yaml) ==> pod env variables ==> /etc/confluent/docker/ (files) ==> /etc/kafka/*.properties

The pod env variables and /etc/kafka/*.properties control /usr/bin/kafka.

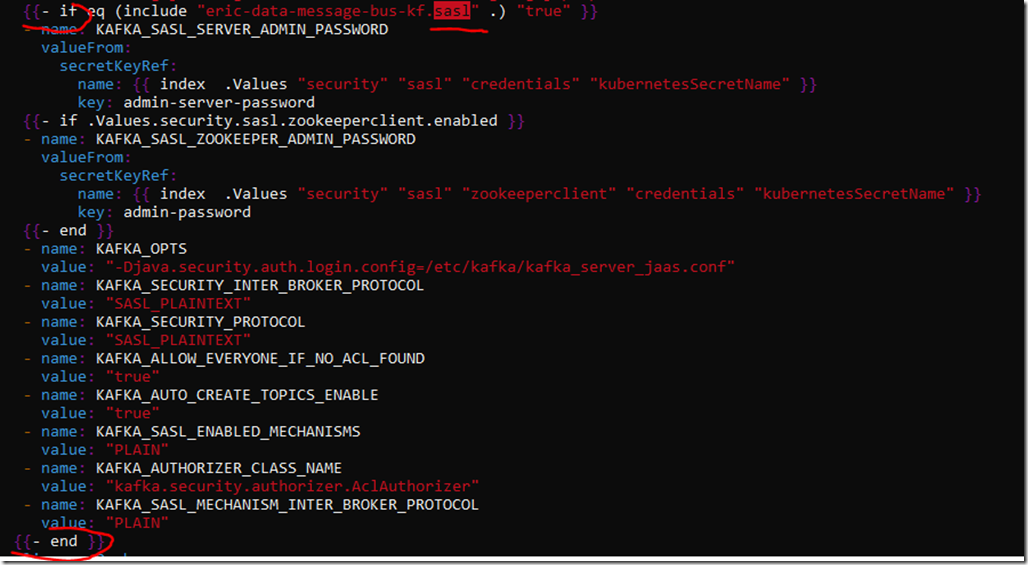

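3) Modify values.yaml, adding new values or changing existing ones. To work out which value to add or change:

Open templates/kafka-ss.yaml and search for sasl.

So, for access inside the k8s cluster, first set eric-data-message-bus-kf.sasl to true. Check templates/_helpers.tpl to see how eric-data-message-bus-kf.sasl is mapped to values.yaml.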

security:
  # policyBinding:
  #   create: false
  # policyReferenceMap:
  #   default-restricted-security-policy: "default-restricted-security-policy"
  # tls:
  #   enabled: true
  sasl:
    enabled: true

kafka-ss.yaml

from:

{{- else }}
port: {{ template "eric-data-message-bus-kf.plaintextPort" . }}
{{- end }}

to:

{{- else }}
port: {{ template "eric-data-message-bus-kf.saslPlaintextPort" . }}
{{- end }}

After the changes, use docker cp to copy the files into the container, then redeploy the k8s microservice with helm install or helm upgrade.

helm upgrade eric-data-message-bus-kf . --reuse-values --set global.security.sasl.enabled=true --set global.security.tls.enabled=false -n eric-schema-registry-sr-install

This command did not take effect, so values.yaml was edited directly and the chart was re-installed:

helm uninstall eric-data-message-bus-kf -n eric-schema-registry-sr-install

helm install eric-data-message-bus-kf /home/ehunjng/helm-study/eric-data-message-bus-kf/ --namespace=$NAMESPACE --devel --wait --timeout 20000s --set global.security.tls.enabled=$TLS --set replicaCount=3 --set persistence.persistentVolumeClaim.enabled=false

Check the status after redeployment:

kubectl get pods -n namespace

kubectl logs eric-data-message-bus-kf-0 -n namespace

kubectl describe pods eric-data-message-bus-kf-2 -n namespace

Verification:

Start a producer and a consumer in different containers and check whether they can communicate.

kubectl exec -it eric-data-message-bus-kf-0 -n eric-schema-registry-sr-install -- /bin/sh

/usr/bin/kafka-console-producer.sh --broker-list localhost:9091 --topic test0730 --producer.config /etc/kafka/producer.properties

kubectl exec -it eric-data-message-bus-kf-1 -n eric-schema-registry-sr-install -- /bin/sh

/usr/bin/kafka-console-consumer.sh --bootstrap-server localhost:9091 --topic test0730 --consumer.config /etc/kafka/consumer.properties

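V. Accessing Kafka from outside k8s with a username and password

1) First, the k8s cluster must expose the service externally. To expose it through a NodePort with SASL enabled, add the following to values.yaml: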

# options required for external access via nodeport
"advertised.listeners": EXTERNAL://127.0.0.1:$((31090 + ${KAFKA_BROKER_ID}))
"listener.security.protocol.map": SASL_PLAINTEXT:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT
nodeport:
  enabled: true
  servicePort: 9092
  firstListenerPort: 31090

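The advertised.listeners entry relies on shell arithmetic: each broker advertises port 31090 plus its broker ID, which lines up with the NodePorts 31090 to 31092 seen in the service listing. A minimal sketch of that arithmetic follows; the function name external_port is hypothetical, and the base port is simply the firstListenerPort value.

```shell
#!/bin/sh
# Base NodePort, taken from nodeport.firstListenerPort in values.yaml.
FIRST_LISTENER_PORT=31090

# external_port BROKER_ID: print the NodePort that broker advertises.
# (hypothetical helper, for illustration only)
external_port() {
  echo $((FIRST_LISTENER_PORT + $1))
}

# Show the advertised external listener for a three-broker cluster.
for id in 0 1 2; do
  echo "broker $id -> EXTERNAL://127.0.0.1:$(external_port "$id")"
done
```

Because the port is derived from the broker ID, every broker gets a distinct external address even though all of them advertise 127.0.0.1.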
2) Next, modify kafka-ss.yaml, using statefulset.yaml from confluentinc/cp-helm-charts (master branch) on GitHub as a reference.

command:
- sh
- -exc
- |
  export KAFKA_BROKER_ID=${HOSTNAME##*-} && \
  export KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://${POD_NAME}.{{ template "cp-kafka.fullname" . }}-headless.${POD_NAMESPACE}:9092{{ include "cp-kafka.configuration.advertised.listeners" . }} && \
  exec /etc/confluent/docker/run
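
The command block derives the broker ID from the pod hostname: StatefulSet pods are named <statefulset-name>-<ordinal>, and ${HOSTNAME##*-} strips everything up to and including the last hyphen. A small sketch of that expansion, using a sample hostname passed as an argument instead of the real pod environment (the helper name is hypothetical):

```shell
#!/bin/sh
# broker_id_from_hostname NAME: print the StatefulSet ordinal at the end of NAME.
# (hypothetical helper; inside the pod the expansion is applied to $HOSTNAME)
broker_id_from_hostname() {
  name=$1
  # "##*-" removes the longest prefix ending in "-", leaving only the ordinal.
  echo "${name##*-}"
}

broker_id_from_hostname eric-data-message-bus-kf-2   # prints 2
```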

3) Finally, as before, re-install with Helm after the changes, or run:

helm upgrade --install eric-data-message-bus-kf . --reuse-values --set global.security.sasl.enabled=true --set global.security.tls.enabled=false -n eric-schema-registry-sr-install

References used during configuration:

Explanation of Kafka parameters:

https://blog.csdn.net/lidelin10/article/details/105316252

kafka/KafkaConfig.scala at trunk · apache/kafka (github.com)

Quickly deploying Kafka in a K8S environment (accessible from outside K8S): https://www.cnblogs.com/bolingcavalry/p/13917562.html

4) Verification

① Install Kafka on the client and enable SASL support.
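
What "enabling SASL support" on the client usually amounts to is two client properties plus a pointer to a JAAS file. The sketch below writes those settings into a scratch directory so it can be run anywhere. The directory and the helper name are assumptions for illustration; on a real client the two property lines would go into config/producer.properties and config/consumer.properties, and the JAAS file would be the kafka_client_jaas.conf used in the following steps.

```shell
#!/bin/sh
# Scratch directory standing in for the client's Kafka config directory (assumption).
CLIENT_DIR=$(mktemp -d)

# write_sasl_client_props FILE: create FILE with the SASL/PLAIN client settings.
# (hypothetical helper, for illustration only)
write_sasl_client_props() {
  cat > "$1" <<'EOF'
security.protocol=SASL_PLAINTEXT
sasl.mechanism=PLAIN
EOF
}

write_sasl_client_props "$CLIENT_DIR/producer.properties"
write_sasl_client_props "$CLIENT_DIR/consumer.properties"

# The Kafka command-line tools pass KAFKA_OPTS to the JVM, which is how the
# client learns where its JAAS login configuration lives.
export KAFKA_OPTS="-Djava.security.auth.login.config=$CLIENT_DIR/kafka_client_jaas.conf"

cat "$CLIENT_DIR/producer.properties"
```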

② Enter the container and look up the username and password:

bash-4.4$ cat /etc/kafka/kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="h7801XHzaC"

user_admin="h7801XHzaC";

};

KafkaClient {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="h7801XHzaC";

};
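
In the KafkaServer section, PlainLoginModule treats each user_<name>="<password>" entry as an account the broker accepts, while username and password are the credentials the broker itself uses for inter-broker connections. As a rough sketch, the accepted user names can be listed with sed. The helper name is hypothetical and the sample file uses a placeholder password, not the real one.

```shell
#!/bin/sh
# list_jaas_users FILE: print the names defined by user_<name>="..." entries.
# (hypothetical helper, for illustration only)
list_jaas_users() {
  sed -n 's/^[[:space:]]*user_\([^=]*\)=.*/\1/p' "$1"
}

# Sample file with a placeholder password, mirroring the layout of the real one.
SAMPLE_JAAS=$(mktemp)
cat > "$SAMPLE_JAAS" <<'EOF'
KafkaServer {
  org.apache.kafka.common.security.plain.PlainLoginModule required
  username="admin"
  password="changeit"
  user_admin="changeit";
};
EOF

list_jaas_users "$SAMPLE_JAAS"   # prints admin
```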

③ When the client uses a wrong username or password

ehunjng@CN-00005131:~/kafka_2.12-2.4.0$ bin/kafka-console-producer.sh --broker-list 127.0.0.1:31090 -topic kafkatest0804 --producer.config config/producer.properties

>[2021-10-27 14:36:31,697] ERROR [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:31090) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)

[2021-10-27 14:36:32,106] ERROR [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:31090) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)

[2021-10-27 14:36:32,872] ERROR [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:31090) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)

[2021-10-27 14:36:34,145] ERROR [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:31090) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)

[2021-10-27 14:36:35,367] ERROR [Producer clientId=console-producer] Connection to node -1 (localhost/127.0.0.1:31090) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)

^Cehunjng@CN-00005131:~/kafka_2.12-2.4.0$ cat kafka_client_jaas.conf

KafkaClient {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="kafka"

password="kafkapasswd";

};

④ When the client uses the correct username and password

Check the NodePorts of the externally exposed services:

kubectl get services -n eric-schema-registry-sr-install
NAME                                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                       AGE
eric-data-coordinator-zk                    ClusterIP   10.105.141.43    <none>        2181/TCP,8080/TCP,21007/TCP   98d
eric-data-coordinator-zk-ensemble-service   ClusterIP   None             <none>        2888/TCP,3888/TCP             98d
eric-data-message-bus-kf                    ClusterIP   None             <none>        9092/TCP                      75d
eric-data-message-bus-kf-0-nodeport         NodePort    10.111.66.146    <none>        9092:31090/TCP                75d
eric-data-message-bus-kf-1-nodeport         NodePort    10.108.230.113   <none>        9092:31091/TCP                75d
eric-data-message-bus-kf-2-nodeport         NodePort    10.98.161.13     <none>        9092:31092/TCP                75d
eric-data-message-bus-kf-client             ClusterIP   10.102.191.90    <none>        9092/TCP                      75d
zookeeper-nodeport                          NodePort    10.105.151.183   <none>        2181:32181/TCP                84d

Set the correct username and password:

cat kafka_client_jaas.conf
KafkaClient {
org.apache.kafka.common.security.plain.PlainLoginModule required
username="admin"
password="h7801XHzaC";
};

Start a producer, using pod-0 as the broker that relays the messages:

bin/kafka-console-producer.sh --broker-list 127.0.0.1:31090 -topic kafkatestExternal1027 --producer.config config/producer.properties
>external1027-1
>external1027-2
>external1027-3
>external1027-4
>

Start a consumer, using pod-2 as the broker that relays the messages:

bin/kafka-console-consumer.sh --bootstrap-server 127.0.0.1:31092 --topic kafkatestExternal1027 --from-beginning --consumer.config config/consumer.properties
external1027-1
external1027-2
external1027-3
external1027-4

