First, set up the environment following the YOLOv8 deployment guide:

https://www.cnblogs.com/gooutlook/p/18511319

Tutorial:

https://docs.ultralytics.com/zh/guides/raspberry-pi/#convert-model-to-ncnn-and-run-inference

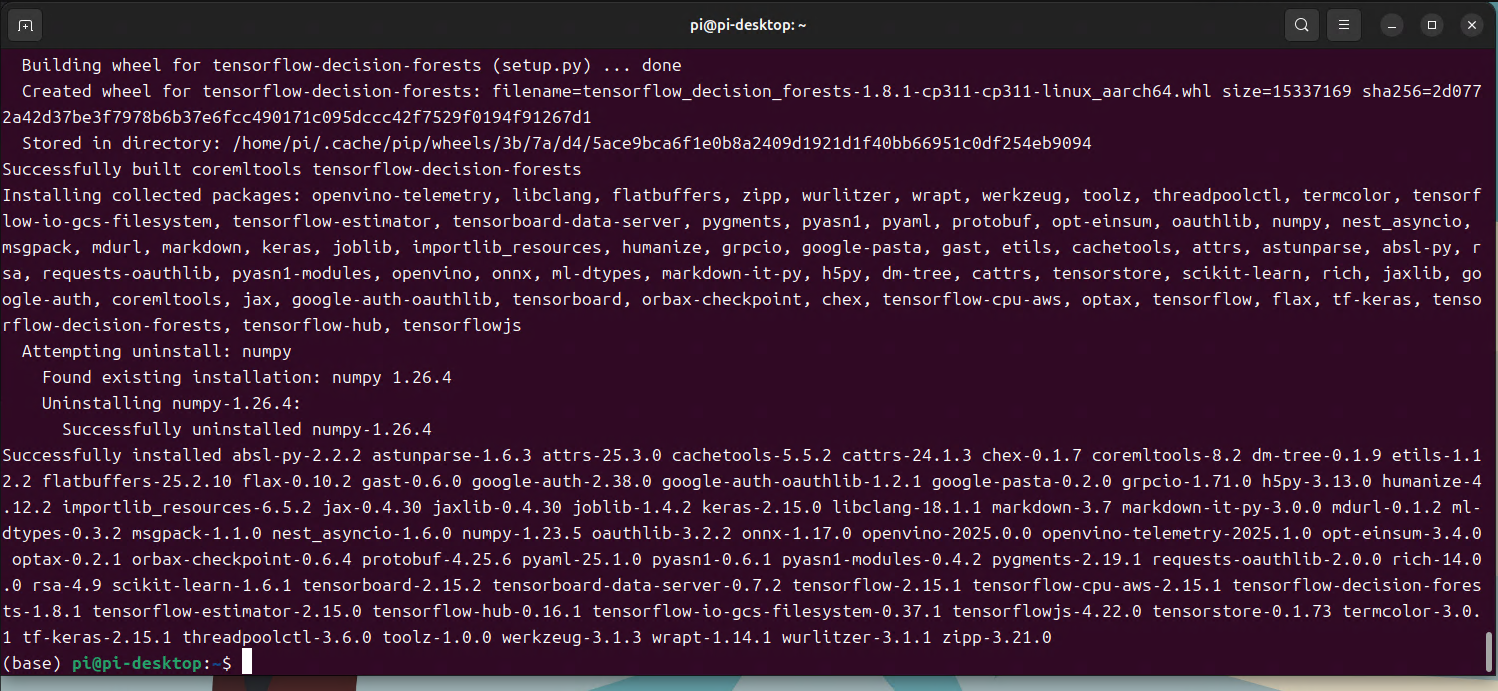

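1. Update the package list, install pip and upgrade it to the latest version: `sudo apt update`, `sudo apt install python3-pip -y`, `pip install -U pip`
2. Install the `ultralytics` pip package with its optional export dependencies: `pip install ultralytics[export]`
3. Reboot the device: `sudo reboot`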
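After rebooting, a quick check can confirm that the steps above took effect. The sketch below uses only the Python standard library; `check_environment` is a hypothetical helper name. It only reports the CPU architecture, the Python version and whether the listed packages are importable (`cv2` is included because the multithreaded test later uses OpenCV); it does not check package versions.

```python
import importlib.util
import platform
import sys


def check_environment(packages=("ultralytics", "cv2")):
    """Report the CPU architecture, Python version and which packages are importable."""
    machine = platform.machine().lower()
    return {
        "machine": machine,
        # 64-bit Raspberry Pi OS reports 'aarch64'; 32-bit reports e.g. 'armv7l'
        "is_arm": machine.startswith(("arm", "aarch64")),
        "python": ".".join(map(str, sys.version_info[:3])),
        # find_spec returns None when a top-level module cannot be found
        "packages": {name: importlib.util.find_spec(name) is not None for name in packages},
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

On a correctly prepared Raspberry Pi, `is_arm` should be `True` and both packages should be reported as importable.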

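Using NCNN on Raspberry Pi

Of all the model export formats supported by Ultralytics, NCNN delivers the best inference performance on Raspberry Pi devices, because NCNN is highly optimized for mobile/embedded platforms such as the ARM architecture.

Convert the model to NCNN and run inference

The YOLO11n model in PyTorch format is converted to NCNN, and the exported model is then used for inference.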

```python
from ultralytics import YOLO

# Load a YOLO11n PyTorch model
model = YOLO("yolo11n.pt")

# Export the model to NCNN format
model.export(format="ncnn")  # creates 'yolo11n_ncnn_model'

# Load the exported NCNN model
ncnn_model = YOLO("yolo11n_ncnn_model")

# Run inference
results = ncnn_model("https://ultralytics.com/images/bus.jpg")
```
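Once the NCNN directory exists, exporting again on every run is unnecessary. The helper below is a minimal sketch (`ncnn_dir_for` and `needs_export` are hypothetical names, not Ultralytics API). It assumes the export lands next to the `.pt` file under the `<stem>_ncnn_model` name shown in the comment above, and it only checks that the directory exists, not that its contents are valid.

```python
from pathlib import Path


def ncnn_dir_for(pt_path):
    """Return the directory an NCNN export of pt_path is expected to create.

    Assumption: '<stem>_ncnn_model' next to the .pt file, e.g.
    'yolo11n.pt' -> 'yolo11n_ncnn_model'.
    """
    pt = Path(pt_path)
    return pt.with_name(f"{pt.stem}_ncnn_model")


def needs_export(pt_path):
    """True if the expected NCNN export directory does not exist yet."""
    return not ncnn_dir_for(pt_path).is_dir()
```

For example, `if needs_export("yolo11n.pt"): model.export(format="ncnn")` skips the export when `yolo11n_ncnn_model/` is already present.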

Ultralytics benchmarks

```python
from ultralytics import YOLO

# Load a YOLO11n PyTorch model
model = YOLO("yolo11n.pt")

# Benchmark YOLO11n speed and accuracy on the COCO8 dataset for all export formats
results = model.benchmark(data="coco8.yaml", imgsz=640)
```
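`model.benchmark` compares export formats on a dataset. To time a single call inside your own script, for instance `ncnn_model(frame)` against `model(frame)` on the same image, a small standard-library timer is enough. This is a generic sketch, not part of Ultralytics; `time_callable` is a hypothetical name, and the warm-up calls assume that the first few inferences are slower than the steady state.

```python
import statistics
import time


def time_callable(fn, *args, runs=20, warmup=3, **kwargs):
    """Call fn(*args, **kwargs) repeatedly and return latency statistics in ms."""
    for _ in range(warmup):  # warm-up calls are excluded from the statistics
        fn(*args, **kwargs)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args, **kwargs)
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "runs": runs,
    }
```

For example, `time_callable(ncnn_model, "bus.jpg", verbose=False)` times NCNN inference on a local copy of the bus image (the local file name is an assumption). The median is less sensitive than the mean to occasional slow calls.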

Download the test model

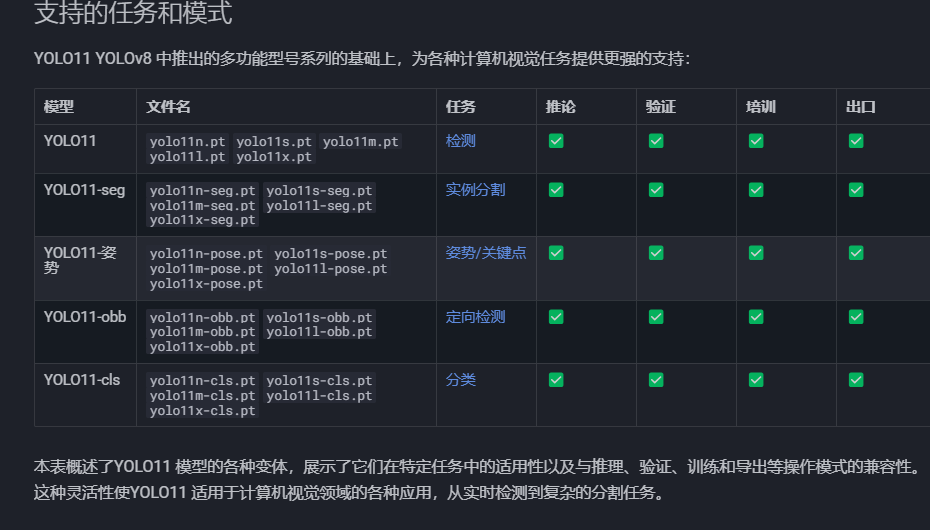

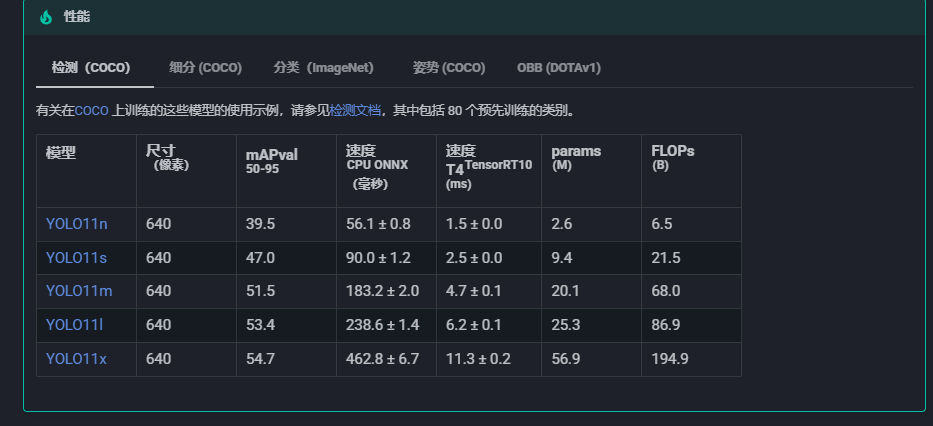

https://docs.ultralytics.com/zh/models/yolo11/#overview

https://huggingface.co/Ultralytics/YOLO11/blob/365ed86341e7a7456dbc4cafc09f138814ce9ff1/yolo11n.pt

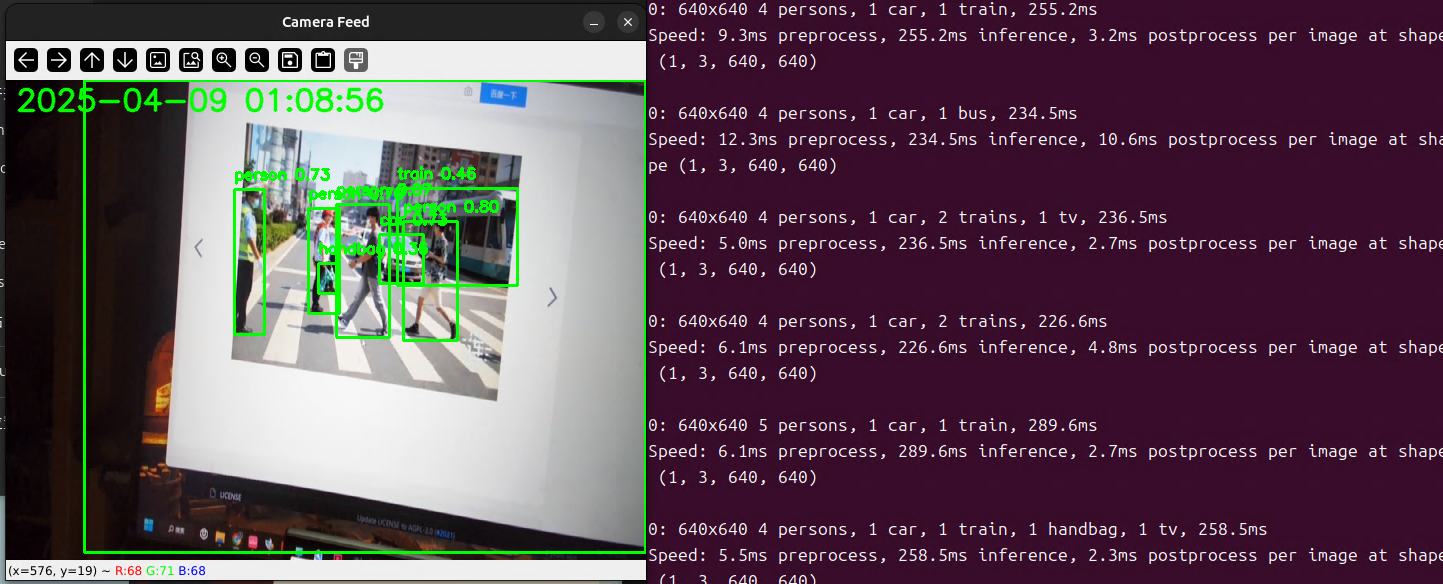

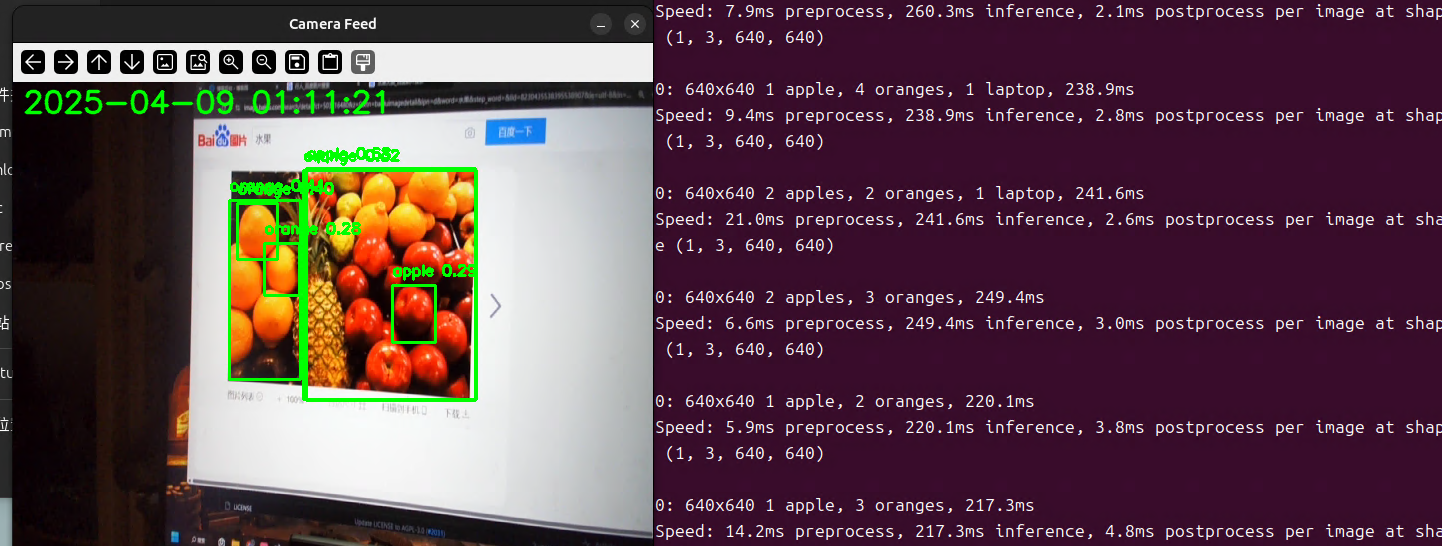

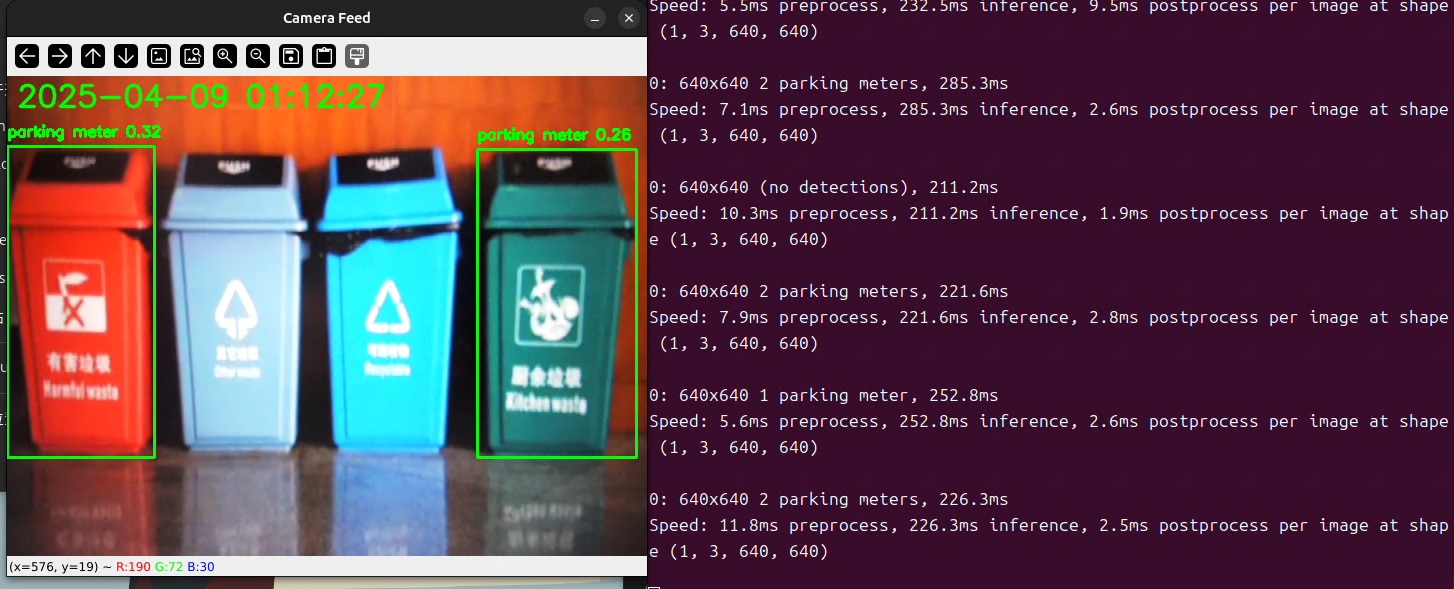
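If `yolo11n.pt` is not present, Ultralytics normally downloads it automatically the first time `YOLO("yolo11n.pt")` is called. For a manual download, note that the Hugging Face link above points to a `/blob/` web page; the raw file is served from the same path with `/resolve/` in place of `/blob/`. The sketch below assumes that URL convention; `hf_raw_url` and `download_if_missing` are hypothetical helper names.

```python
import os
import urllib.request


def hf_raw_url(blob_url):
    """Turn a Hugging Face '/blob/' page URL into its '/resolve/' raw-file URL."""
    return blob_url.replace("/blob/", "/resolve/", 1)


def download_if_missing(url, dest):
    """Download url to dest unless dest already exists; return dest."""
    if not os.path.exists(dest):
        tmp = dest + ".part"
        urllib.request.urlretrieve(url, tmp)  # write to a temporary name first
        os.replace(tmp, dest)  # move into place only after the download finished
    return dest
```

Usage: `download_if_missing(hf_raw_url("https://huggingface.co/Ultralytics/YOLO11/blob/365ed86341e7a7456dbc4cafc09f138814ce9ff1/yolo11n.pt"), "yolo11n.pt")`.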

Multithreaded test code

```python
import datetime
import os
import threading
import time

import cv2
from ultralytics import YOLO

use_ncnn = 1  # 1: run the exported NCNN model, 0: run the PyTorch model

# Load the YOLO11n PyTorch model
model = YOLO("./yolo11n.pt")

# Export the model to NCNN format (creates 'yolo11n_ncnn_model'); skip if it already exists
if not os.path.isdir("yolo11n_ncnn_model"):
    model.export(format="ncnn")

# Load the exported NCNN model
ncnn_model = YOLO("yolo11n_ncnn_model")


# Shared-memory class: the latest frame plus a run flag shared by both threads
class SharedMemory:
    def __init__(self):
        self.frame = None
        self.lock = threading.Lock()
        self.running = True  # run-state flag; False tells both threads to stop


# Thread that reads images from the USB camera
def capture_thread(shared_memory):
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        print("Unable to open the camera")
        shared_memory.running = False  # let the display thread exit as well
        return
    while shared_memory.running:
        ret, frame = cap.read()
        if not ret:
            print("Unable to read a frame")
            shared_memory.running = False
            break
        # Store the frame in shared memory (overwrites any frame not yet displayed)
        with shared_memory.lock:
            shared_memory.frame = frame.copy()
    cap.release()


# Thread that takes frames from shared memory, runs detection and displays them
def display_thread(shared_memory):
    while shared_memory.running:
        # Hold the lock only while taking the frame, not during inference,
        # so the capture thread is never blocked by the model
        with shared_memory.lock:
            frame = shared_memory.frame
            shared_memory.frame = None  # consume it so each frame is processed once
        if frame is None:
            time.sleep(0.005)  # no new frame yet; avoid busy-waiting
            continue

        # Run YOLO detection with the selected backend
        detector = ncnn_model if use_ncnn == 1 else model
        results = detector(frame, verbose=False)

        # Parse the results and draw the bounding boxes
        for result in results:
            for box in result.boxes:
                x1, y1, x2, y2 = map(int, box.xyxy[0])  # box coordinates
                confidence = float(box.conf[0])  # confidence score
                cls = int(box.cls[0])  # class index
                label = result.names[cls]  # class name from the model that ran
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
                cv2.putText(frame, f"{label} {confidence:.2f}", (x1, y1 - 10),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

        # Overlay the current time
        time_str = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        cv2.putText(frame, time_str, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1,
                    (0, 255, 0), 2, cv2.LINE_AA)
        cv2.imshow("Camera Feed", frame)

        # Press 'q' to quit
        if cv2.waitKey(1) & 0xFF == ord("q"):
            shared_memory.running = False  # also stops the capture thread
            break
    cv2.destroyAllWindows()


# Main function
if __name__ == "__main__":
    shared_memory = SharedMemory()

    # Start the capture thread (distinct variable names so the functions are not shadowed)
    capture_worker = threading.Thread(target=capture_thread, args=(shared_memory,))
    capture_worker.start()

    # Start the display thread
    display_worker = threading.Thread(target=display_thread, args=(shared_memory,))
    display_worker.start()

    capture_worker.join()
    display_worker.join()
```
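The two threads above share the newest frame through a `Lock`-protected attribute that the display thread polls in a loop. A `threading.Condition` lets the consumer sleep until a new frame arrives instead of polling. The sketch below is a swapped-in alternative, not part of OpenCV or Ultralytics; `LatestFrame` is a hypothetical class name. It keeps a single slot, so a slow consumer such as inference always gets the most recent frame and older unconsumed frames are dropped.

```python
import threading


class LatestFrame:
    """Single-slot buffer: the producer overwrites, the consumer takes the newest item."""

    def __init__(self):
        self._cond = threading.Condition()
        self._frame = None
        self._closed = False

    def put(self, frame):
        with self._cond:
            self._frame = frame  # overwrite any frame that was not consumed yet
            self._cond.notify()

    def close(self):
        """Wake any waiting consumer so it can shut down."""
        with self._cond:
            self._closed = True
            self._cond.notify_all()

    def get(self, timeout=None):
        """Block until a new frame arrives; return None on close or timeout."""
        with self._cond:
            self._cond.wait_for(lambda: self._frame is not None or self._closed, timeout)
            frame, self._frame = self._frame, None  # consume it so it is processed once
            return frame
```

In the capture loop, `buf.put(frame)` replaces the locked assignment; the display loop calls `frame = buf.get(timeout=1.0)`, skips the iteration if it gets `None`, and runs inference after `get` has returned, i.e. outside the lock. Calling `close()` on shutdown releases a display thread that is still waiting.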

浙公网安备 33010602011771号
